Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence

Planning as an Iterative Process†

David E. Smith

NASA Ames Research Center

Moffett Field, CA 94035–0001

david.smith@nasa.gov

Abstract

allow the user to edit a partial or completed plan by moving actions around, locking actions down, or removing actions. The MAPGEN planning system, which was used to do

daily planning for the two Mars Exploration Rovers (MER),

follows this model (Bresina et al. 2005). Human Tactical

Activity Planners (TAPs) used MAPGEN in an interactive

mode where they would select and place activities on timelines, and MAPGEN would instantiate details and enforce

constraints. The TAP could also remove and reorder activities, and MAPGEN would identify and flag any violated

constraints. This approach was quite successful, and has led

to similar follow-on systems being adopted for the Phoenix

Mars Lander and the recently launched Mars Science Laboratory. However, this approach addresses only a small part

of the planning problem, and does not take full advantage of

the power of automated planning, as many of us in AI would

like. There are a number of technical difficulties that stand

in the way of a more fully automated approach to planning

for such missions, and I discuss some of these in the next

section. However, there is a bigger issue with the planning

process – it is still being considered as a separate, isolated

component that is used after the scientific team has fully

specified their goals and preferences. It is this issue which

I would like to bring to the fore – integrating planning into

an iterative process where the goals, objectives, and preferences are only partially understood.

Activity planning for missions such as the Mars Exploration Rover mission presents many technical challenges, including oversubscription, consideration of

time, concurrency, resources, preferences, and uncertainty. These challenges have all been addressed by

the research community to varying degrees, but significant technical hurdles still remain. In addition, the integration of these capabilities into a single planning engine remains largely unaddressed. However, I argue that

there is a deeper set of issues that needs to be considered – namely the integration of planning into an iterative process that begins before the goals, objectives, and

preferences are fully defined. This introduces a number of technical challenges for planning, including the

ability to more naturally specify and utilize constraints

on the planning process, the ability to generate multiple

qualitatively different plans, and the ability to provide

deep explanation of plans.

Introduction

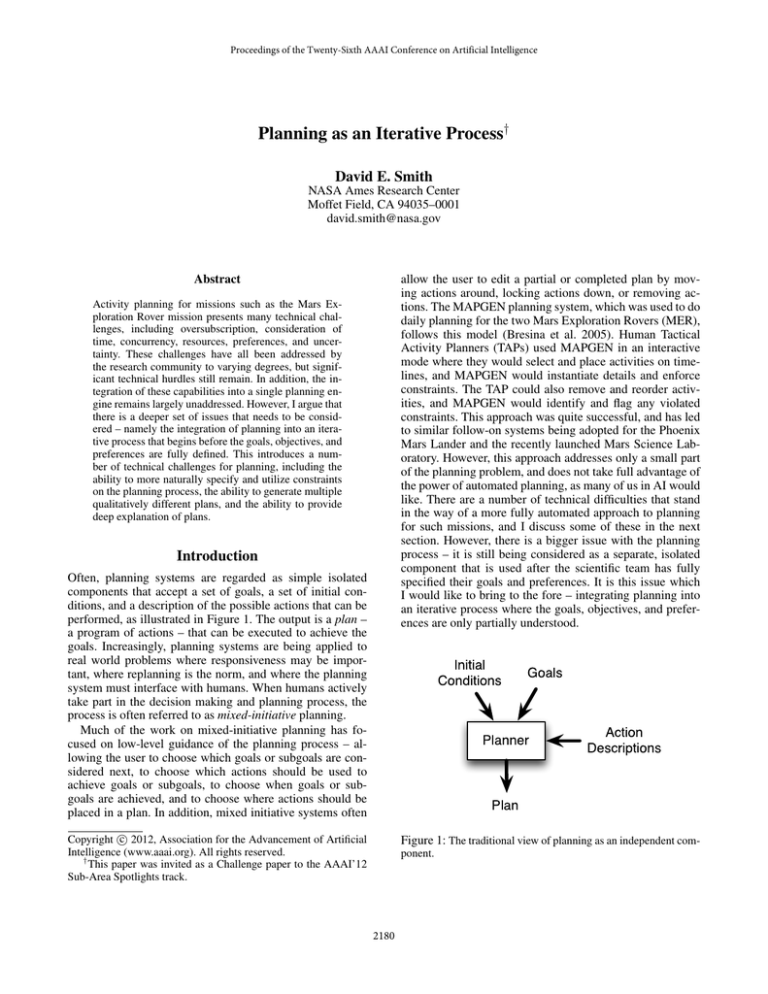

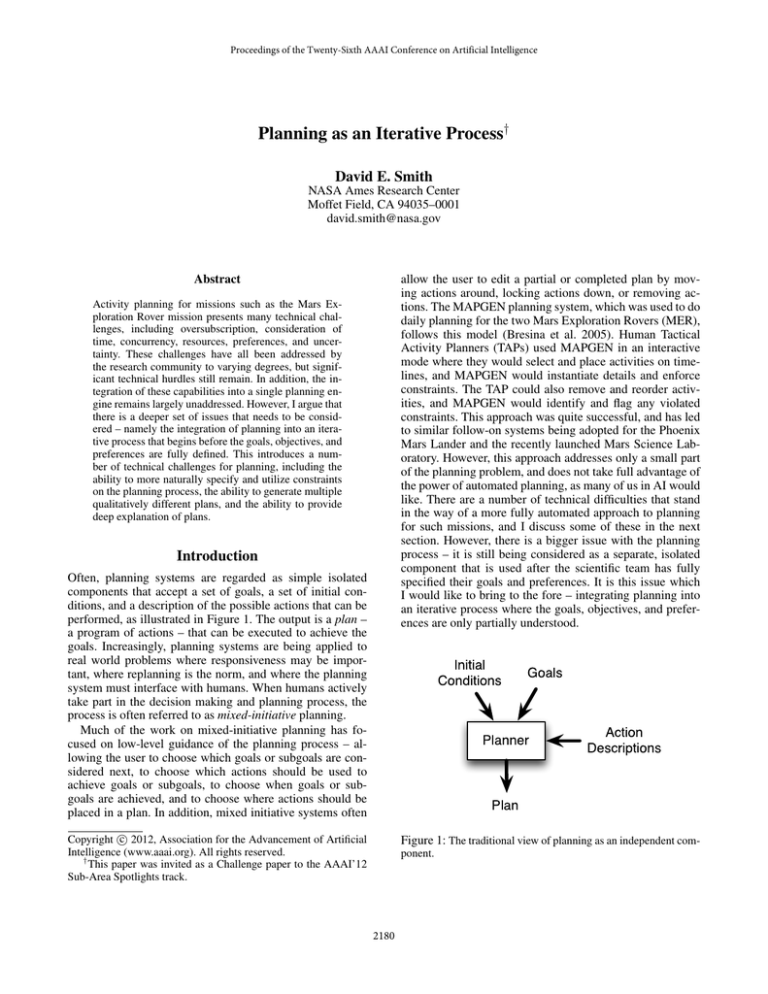

Often, planning systems are regarded as simple isolated

components that accept a set of goals, a set of initial conditions, and a description of the possible actions that can be

performed, as illustrated in Figure 1. The output is a plan –

a program of actions – that can be executed to achieve the

goals. Increasingly, planning systems are being applied to

real-world problems where responsiveness may be important, where replanning is the norm, and where the planning

system must interface with humans. When humans actively

take part in the decision making and planning process, the

process is often referred to as mixed-initiative planning.

Much of the work on mixed-initiative planning has focused on low-level guidance of the planning process – allowing the user to choose which goals or subgoals are considered next, to choose which actions should be used to

achieve goals or subgoals, to choose when goals or subgoals are achieved, and to choose where actions should be

placed in a plan. In addition, mixed-initiative systems often

Copyright © 2012, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

† This paper was invited as a Challenge paper to the AAAI’12 Sub-Area Spotlights track.

Figure 1: The traditional view of planning as an independent component.


MER Planning

achieve more than is possible, given the time, energy and

data storage available. As is typical in such problems there

are different values to different goals, and combinations of

goals, so that it is not an easy matter to identify an optimal

subset of goals to pursue, even if the goals were independent of each other.

Temporal Planning This is a temporal planning problem,

which means that different actions have different durations, and concurrent actions are necessary. There are also

many time constraints on various activities, due to illumination constraints, temperature constraints, solar power

availability, atmospheric conditions, and communication

windows.

Resources There are discrete and continuous resources,

such as state of battery charge and data storage, that are

temporarily used, consumed, or produced by different activities.

Preferences There are preferences involved – scientists

may have preferences for one objective over another, but

they may also have preferences for time windows, or for

the order in which experiments are done.

Uncertainty There is uncertainty about the initial state of

the rover (battery charge, pose, terrain map), about the

exogenous events (atmospheric conditions, dust devils,

communication bandwidth, solar radiation), and about the

outcomes of actions (pose, energy usage, time taken).

All of these issues have received attention over the last ten

years and considerable progress has been made. However,

there are still some significant shortcomings to this work.

For oversubscription planning, work has largely been limited to the special case of net-benefit planning, where actions are augmented with costs, goals are augmented with

rewards, and the optimal plan is defined as the one with the

greatest sum of rewards less action costs (Benton, Do, and

Kambhampati 2009). What is missing is work on the tougher

problem of oversubscription planning where actions consume resources and there are limits on the resources available (energy, data storage). For this class of problems, action

“costs” (resource usage) are not directly comparable to goal

rewards.
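The difference between the two formulations can be made concrete with a small sketch. Everything in it is an illustrative assumption rather than part of any existing planner: the goal names, rewards, costs, and energy figures are invented, each goal is assumed to be achieved by one independent activity, and exhaustive enumeration stands in for real search.

```python
from itertools import combinations

# Hypothetical goals: name -> (reward, action_cost, energy_used).
# All numbers are invented for illustration.
GOALS = {
    "image_rock": (10, 3, 40),
    "spectrometer": (8, 2, 50),
    "atmos_reading": (4, 1, 20),
    "drive_to_ridge": (12, 9, 70),
}

def subsets(names):
    """Yield every subset of the goal names as a sorted tuple."""
    names = sorted(names)
    for k in range(len(names) + 1):
        yield from combinations(names, k)

def net_benefit_best(goals):
    """Net-benefit model: maximize total reward minus total action cost."""
    return max(subsets(goals),
               key=lambda s: sum(goals[g][0] - goals[g][1] for g in s))

def resource_bounded_best(goals, energy_limit):
    """Resource-limited model: maximize reward over the subsets whose
    energy use fits the limit. Energy is a hard bound, not a cost that
    can be traded against reward."""
    feasible = [s for s in subsets(goals)
                if sum(goals[g][2] for g in s) <= energy_limit]
    return max(feasible, key=lambda s: sum(goals[g][0] for g in s))
```

With these made-up numbers, every goal has positive net benefit, so the net-benefit model selects all four, whereas an energy limit of 100 forces a choice among them.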

For temporal planning, relatively little attention has been

paid to problems with large numbers of exogenous events

and time constraints. For this kind of problem, it is not at all

clear that forward state-space search with any of the currently popular search heuristics will be very effective. For

preferences, there has been little work on dealing with time

preferences, namely preferences on the order in which certain activities are performed, or the time windows in which

activities are performed.¹

For planning under uncertainty, most work has been limited to consideration of instantaneous actions, and sequential

plans. Dealing with concurrent actions that have uncertain

duration, or have uncertain use of continuous resources such

as energy, is particularly problematic. In a state-space approach, one is forced to encode time in the state space, and

Figure 2 shows a meeting of the Science Operations Working Group (SOWG) for one of the MER rovers. During the

first year of MER operations, a meeting like this took place

each Martian night, in order to decide on the science goals

and activities for the next day. There are a number of different scientists in the room, including planetary geologists,

atmospheric scientists, and biologists. In addition, there are

many engineers in the room with specialized knowledge

of particular instruments, rover mobility and driving, arm

manipulation and placement, thermal characteristics of the

rover, the power system, communication systems, and various software systems. As with any group this large, you

would not expect there to be complete agreement about the

goals for the next day. Different scientists have different

places they want the rover to go and different measurements

they want it to take. These measurements are not entirely independent; a scientist may want multiple different measurements of a specific rock, or might want atmospheric measurements at regular intervals. There are time constraints and

preferences as well, due to lighting and temperature considerations. For example, when taking a visible image of a location, good illumination is important. However, when using

an infrared spectrometer, the instrument needs to be cold and

dark. There are many additional constraints on resources,

such as energy and power available throughout the day, data

storage available, and available communication windows.

Figure 2: Science Operations Working Group (SOWG) meeting

for one of the MER rovers.

What we’d like to think is that the scientists would produce a nice clean set of goals, which, together with the objective criteria and current rover state (initial conditions),

could be fed into a planning engine. We could then turn the

crank and get out a detailed plan (like that shown in Figure

3) that could then be uplinked to the rover. Unfortunately,

this is not a simple STRIPS-style planning problem, for a

number of reasons:

Oversubscription This is an oversubscription planning

problem, which means that not all the goals can be accomplished, given the resources available. With a diverse

group of scientists, it is no surprise that they want to

¹ It is generally rather awkward to express many of these preferences in PDDL 3.0 (Gerevini et al. 2009).


consider combinations of actions starting at different times.

If actions have uncertain durations, the branching factor is

large, and the search space quickly becomes intractable.

There have been some notable attempts at addressing these

problems (e.g. Younes and Simmons 2004, Aberdeen and

Buffet 2007, Mausam and Weld 2008, Meuleau et al. 2009),

but, so far, these techniques make many simplifying assumptions, and are far from achieving the kind of performance

typical of state-of-the-art classical planning systems.

Figure 4: Rover activity planning viewed as an iterative process of

plan revision under constraints.

while abandoning others. Thus, they might want to say

something like:

Keep activities A, B, and C, but make sure you do A

before 4pm, and B before C.

or

Don’t do both D and E unless there is extra energy

available after doing all the other primary goals.

Figure 3: A rover plan. Note the prevalence of concurrency and

the range of activity durations.

While it may be possible to enforce such constraints by

using PDDL 3.0 preferences (Gerevini et al. 2009) or

cleverly modifying operator descriptions, it is awkward

to do so, and it is unclear whether existing planners are

able to efficiently cope with such constraints.
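As a rough illustration of what a more direct encoding of such advice might look like, the sketch below represents each piece of advice as a named predicate over a candidate plan and reports which ones a plan violates. The names (`keep`, `ends_before`, `precedes`, `violated`) and the plan representation are hypothetical, and "do A before 4pm" is read here as "A finishes by 4pm", which is only one possible interpretation. It checks plans; it does not search for them.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A candidate plan: activity name -> (start, end), in minutes after midnight.
Plan = Dict[str, Tuple[int, int]]

@dataclass(frozen=True)
class Constraint:
    description: str
    holds: Callable[[Plan], bool]

def keep(*activities: str) -> Constraint:
    """All listed activities must remain in the plan."""
    return Constraint(f"keep {', '.join(activities)}",
                      lambda p: all(a in p for a in activities))

def ends_before(activity: str, deadline: int) -> Constraint:
    """Vacuously true if the activity is absent; pair with keep() to require it."""
    return Constraint(f"{activity} ends before minute {deadline}",
                      lambda p: activity not in p or p[activity][1] <= deadline)

def precedes(first: str, second: str) -> Constraint:
    """If both activities are present, the first must end before the second starts."""
    return Constraint(f"{first} before {second}",
                      lambda p: first not in p or second not in p
                      or p[first][1] <= p[second][0])

def violated(plan: Plan, constraints: List[Constraint]) -> List[str]:
    """Return the descriptions of all constraints the plan fails to satisfy."""
    return [c.description for c in constraints if not c.holds(plan)]

# "Keep activities A, B, and C, but make sure you do A before 4pm, and B before C."
ADVICE = [keep("A", "B", "C"), ends_before("A", 16 * 60), precedes("B", "C")]
```

A planner revising a plan could use such a list both as a filter on candidate revisions and as the basis for reporting which pieces of advice a proposed plan breaks.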

Apart from these individual issues, there has been little attempt to integrate all of the above capabilities together. Each

one of these problems is hard enough, and the techniques developed so far are not exactly plug-and-play.

Multiple Plans In the early stages of the process, the scientists have not yet completely settled on or elucidated their

preferences or their optimization criteria. As a result, it

is not clear what the best plan is. The planner needs to

be able to return multiple solutions that are “qualitatively

different” and somehow reflect the range of possible preferences or optimization criteria that might be considered

by the scientists.
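One way to picture this, under the assumption that two plans are qualitatively different when each is best under a different plausible valuation of the goals, is sketched below. The goal names, energy figures, and the three candidate valuations are invented, and brute-force enumeration of goal subsets stands in for an actual planner.

```python
from itertools import combinations

# Hypothetical data: energy needed per goal, and an energy budget.
ENERGY = {"image_rock": 40, "spectrometer": 50, "drive_to_ridge": 70}
ENERGY_LIMIT = 100

# Hypothetical alternative valuations the scientists might turn out to hold.
VALUATIONS = {
    "geology_first": {"image_rock": 10, "spectrometer": 8, "drive_to_ridge": 5},
    "exploration_first": {"image_rock": 4, "spectrometer": 3, "drive_to_ridge": 12},
    "balanced": {"image_rock": 7, "spectrometer": 6, "drive_to_ridge": 6},
}

def best_subset(rewards):
    """Best goal set under one valuation, subject to the energy limit."""
    names = sorted(ENERGY)
    candidates = [s for k in range(len(names) + 1)
                  for s in combinations(names, k)
                  if sum(ENERGY[g] for g in s) <= ENERGY_LIMIT]
    return max(candidates, key=lambda s: sum(rewards[g] for g in s))

def diverse_plans(valuations):
    """Map each distinct best goal set to the valuations that justify it."""
    plans = {}
    for label, rewards in valuations.items():
        plans.setdefault(best_subset(rewards), []).append(label)
    return plans
```

Here three valuations collapse to two distinct goal sets, and each returned option carries the assumptions under which it would be the preferred one, which is the kind of information a group still settling its priorities could react to.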

The Broader Problem

Although the above issues are a significant barrier to producing a system capable of tackling the MER planning problem,

resolving them would not, by itself, be enough. The broader problem is that initially

the scientists don’t have a clear idea of what is achievable,

of what their goals should be, of the relative value of different goals, or of their preferences. Through a process of

proposing and examining different options they eventually

arrive at a set of primary and secondary goals that they can

hand off to a TAP to produce a detailed plan like that shown

in Figure 3. If they could use a planner much earlier in the

process it would help them to develop their goals and preferences. In effect, it would allow them to perform trade studies to examine the space of possible goals, preferences, and

plans. This makes the planning part of an iterative process,

like that shown in Figure 4, which has several interesting

implications:

Plan Explanation These plans are complicated and the scientists need to be able to ask questions and get back useful

answers. Some questions, like:

Why is activity A in the plan?

would seem to be relatively easy to answer, but others such

as:

Why is action A done before action B?

What would happen if I delayed action A until 4pm?

Why wasn’t goal G chosen for inclusion in the plan?

Plan Constraints As the process goes on, the scientists increasingly place constraints on the nature of the plan. For

example, from an initial run, they might decide they like

particular choices or activities, and want to keep them,

Why didn’t you satisfy preference P?

are much tougher to answer because they require deeper

analysis of the relationship between actions in the plan,


are hypothetical in nature, or are negative questions asking why something wasn’t done differently.

(2003), Gough, Fox, and Long (2004), and Foss, Onder,

and Smith (2007).

Addressing the above three issues would allow us to embed a planning system into an iterative process like that envisioned in Figure 4, and use it to perform trade studies

that can help the scientists refine and elucidate their goals,

objectives, and preferences. One could regard this overall

process as being preference elicitation (Chen and Pu 2004;

Brafman and Domshlak 2009). We are, in fact, trying to help

the scientists converge on the right “product”, in this case a

plan to achieve their goals. As with many other instances of

preference elicitation, direct questioning of the scientists to

try to elicit their preferences would likely be annoying and

would converge very slowly. In addition, the scientists may

not even be aware of some of their preferences or their implications. The process we have described is more like that

of example-critiquing as described by Viappiani, Faltings,

and Pu (2006), in which examples (plans) are presented, and

criticisms lead to the expression of additional preferences. In

our case the preferences are often very complex. The scientists generally don’t select a specific plan or directly indicate

a preference for one plan over another – instead, they often

zero in on parts of plans that they want preserved or changed,

and provide additional constraints that would enforce these

preferences. In addition, these preferences tend to be heavily

dependent on the current state and resources available, as

well as the set of goals being considered. These preferences

also tend to evolve with the process of scientific discovery.

• Plan revision under constraints. We need simple ways of

expressing constraints on how a planner should be allowed to revise a plan (keep this subset of activities but

do A after 4pm, and make sure you do B before C), and

good search techniques for producing plans that satisfy

those constraints. Being able to place constraints on the

nature of a plan could be seen as a special case of a very

old idea – McCarthy’s Advice Taker (McCarthy 1990).

• Producing multiple, qualitatively different plans. While

there has been some preliminary work in this area (Tate,

Dalton, and Levine 1998; Myers and Lee 1999), we need

to take a deeper look at this problem. The root of the problem is that the planner doesn’t initially have a complete

model of the value of different goals or the costs (resource

usage) of different actions. A real solution to this problem

needs to explicitly consider uncertainty in the valuation of

goals and uncertainty in resource usage of actions. A critical part of what it means for two plans to be qualitatively

different is for those plans to make different assumptions

about goal rewards, and action durations or resource usage. One possible source of inspiration here is work on

presenting distinct solutions to users for purposes of preference elicitation (e.g. Viappiani, Faltings, and Pu 2006).

• Plan explanation. Literature on the problem of plan explanation appears to be surprisingly sparse. Questions like:

Why is activity A in the plan?

Carving off Pieces

can be answered relatively easily by elucidating the causal

structure of the plan, thereby identifying what conditions

the action achieves that are needed to support other actions and achieve desired goals. Questions like:

The overall problem of building an integrated planning system that addresses all of the issues I raised in the preceding

sections is quite daunting. Fortunately, there are some separable components and issues that I believe can be addressed

by the research community:

Why is this action done before that one?

require a deeper analysis of the relationship between two

activities, and the constraints that govern their relative

positions in the plan. Bresina and Morris (2006) have

done some work on explaining temporal inconsistencies

in plans. Hypothetical questions, such as:

• Oversubscription under resource constraints. It’s time to

move beyond the simple net-benefit model and deal with

the problem of generating plans for oversubscribed problems where actions use resources, and there are limits on

the resources available. Benton, Do, and Kambhampati

(2009) have developed effective heuristics for net-benefit

problems, but it is not obvious how to extend these heuristics to this more general class of problems.

What would happen if I delayed this action until

4pm?

would seem to require modifying the plan and simulating it to determine what parts of the plan still work, and

what parts will now violate time or resource constraints. A

reasonable answer to this type of question might be something like:

• Temporal planning with time windows, time constraints,

and temporal preferences. While this can be done now,

there is little indication that current heuristics will be effective on larger scale problems. What is needed here is

the development of more effective search strategies and

heuristics for these problems.

Delaying this action until 4pm would mean delaying

actions A4, A5, and A6, so the goal of photographing

Rock13 could no longer be completed while it is in

direct sunlight, violating preference P2.

• Planning under time uncertainty. Here, what is needed is

a practical, computationally tractable approach that worries less about constructing complete and optimal policies, and more about simple, partial policies that do things

like introducing slack in important places, and making

sure that the resulting plan will not result in a dead end

with poor reward. Some early forays in this direction

are Musliner, Durfee, and Shin (1993), Dearden et al.

Questions, such as:

Why wasn’t this resource used instead of that one?

might require reinvoking the planner with additional constraints, and comparing the resulting plan with the original

to determine what is achieved by the two different plans,


References

as well as how they differ structurally. Finally, purely negative questions, such as:

Aberdeen, D., and Buffet, O. 2007. Concurrent probabilistic

temporal planning with policy gradients. In Proc. of the Seventeenth Intl. Conf. on Automated Planning and Scheduling

(ICAPS-07).

Benton, J.; Do, M.; and Kambhampati, S. 2009. Anytime

heuristic search for partial satisfaction planning. Artificial

Intelligence 173:562–592.

Brafman, R. I., and Domshlak, C. 2009. Preference handling

– an introductory tutorial. AI Magazine 30(1):58–86.

Bresina, J. L., and Morris, P. H. 2006. Explanations and recommendations for temporal inconsistencies. In Proc. of the

Fifth Intl. Workshop on Planning and Scheduling for Space

(IWPSS-06).

Bresina, J.; Jonsson, A.; Morris, P.; and Rajan, K. 2005.

Activity planning for the Mars Exploration Rovers. In

Proc. of the Fifteenth Intl. Conf. on Automated Planning and

Scheduling (ICAPS-05), 40–49.

Chen, L., and Pu, P. 2004. Survey of preference elicitation

methods. Technical Report IC/2004/67, Ecole Polytechnique

Fédérale de Lausanne (EPFL).

Dearden, R.; Meuleau, N.; Ramakrishnan, S.; Smith, D.; and

Washington, R. 2003. Incremental contingency planning. In

Proc. of ICAPS 2003 Workshop on Planning under Uncertainty and Incomplete Information.

Foss, J.; Onder, N.; and Smith, D. 2007. Preventing unrecoverable failures through precautionary planning. In Proc. of

ICAPS 2007 Workshop on Moving Planning and Scheduling

Systems into the Real World.

Gerevini, A.; Haslum, P.; Long, D.; Saetti, A.; and Dimopoulos, Y. 2009. Deterministic planning in the Fifth International Planning Competition: PDDL3 and experimental

evaluation of the planners. Artificial Intelligence 173:619–

668.

Gough, J.; Fox, M.; and Long, D. 2004. Plan execution

under resource consumption uncertainty. In Proc. of ICAPS

2004 Workshop on Connecting Planning Theory with Practice, 24–29.

Mausam, and Weld, D. 2008. Planning with durative actions

in stochastic domains. Journal of Artificial Intelligence Research 31:33–82.

McCarthy, J. 1990. Programs with common sense. In Lifschitz, V., ed., Formalizing Common Sense: Papers by John

McCarthy. Ablex.

Meuleau, N.; Benazera, E.; Brafman, R.; Hansen, E.; and

Mausam. 2009. A heuristic search approach to planning

with continuous resources in stochastic domains. Journal of

Artificial Intelligence Research 34:27–59.

Musliner, D.; Durfee, E.; and Shin, K. 1993. CIRCA: A cooperative intelligent real-time control architecture. IEEE Transactions on Systems, Man and Cybernetics 23(6):1561–1574.

Myers, K., and Lee, T. 1999. Generating qualitatively different plans through metatheoretic biases. In Proc. of the Sixteenth National Conf. on Artificial Intelligence (AAAI-99),

570–576.

Why didn’t you satisfy this preference?

also seem to require replanning with additional constraints – in this case enforcing the preference. An answer

to this type of question would again seem to require comparison of the new plan with the previous plan. Considering this problem in the context of planning appears to give

us the ability to actually answer such tougher hypothetical

or counterfactual questions, which goes well beyond current work on inferential question answering in other areas

of AI.
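For the easiest of these questions, "Why is activity A in the plan?", the causal-structure idea can be sketched in a few lines. The sketch assumes a purely sequential plan with STRIPS-style preconditions and add effects, ignores delete effects, and treats any condition with no producer in the plan as true initially. The action names and the functions `causal_links` and `why_in_plan` are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple

@dataclass(frozen=True)
class Action:
    name: str
    pre: FrozenSet[str]   # conditions the action needs
    eff: FrozenSet[str]   # conditions the action adds

def causal_links(plan: List[Action],
                 goals: FrozenSet[str]) -> List[Tuple[str, str, str]]:
    """Return (producer, condition, consumer) triples for a sequential plan.
    Each needed condition is credited to the most recent earlier action
    that adds it; the goals are modeled as a final pseudo-action."""
    steps = plan + [Action("GOAL", goals, frozenset())]
    links = []
    for i, consumer in enumerate(steps):
        for cond in sorted(consumer.pre):
            for producer in reversed(steps[:i]):
                if cond in producer.eff:
                    links.append((producer.name, cond, consumer.name))
                    break
    return links

def why_in_plan(name: str, plan: List[Action],
                goals: FrozenSet[str]) -> List[str]:
    """Explain an action by listing what it provides to later steps or goals."""
    return [f"{name} achieves '{cond}', needed by {user}"
            for producer, cond, user in causal_links(plan, goals)
            if producer == name]

# Invented three-step rover plan.
PLAN = [
    Action("drive_to_rock", frozenset(), frozenset({"at_rock"})),
    Action("deploy_arm", frozenset({"at_rock"}), frozenset({"arm_placed"})),
    Action("take_spectrum", frozenset({"arm_placed"}), frozenset({"have_spectrum"})),
]
GOALS = frozenset({"have_spectrum"})
```

The ordering, hypothetical, and negative questions discussed above are not covered by this kind of lookup; as noted, they would seem to need plan modification, simulation, or replanning.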

Conclusions

Activity planning for the MER rovers presents many technical challenges, including consideration of time, concurrency,

resources, preferences, and uncertainty. These have all been

addressed by the research community to varying degrees,

but significant technical hurdles still remain. The integration

of these techniques into a single planning engine also remains largely unaddressed. In addition, I have argued that

there is a deeper set of issues that needs to be addressed –

namely the integration of planning into an iterative process

that begins before the goals, objectives, and preferences are

fully defined. This has a number of technical implications

for planning, including the need to more naturally specify

and utilize constraints on the planning process, the need to

generate multiple qualitatively different plans, and the need

to provide deep explanation of planning decisions. Although

I introduced these challenges in the context of planning for

Mars Rovers, the process and issues I’ve outlined are quite

typical of the planning that goes on in many complex science missions. In particular, similar processes take place in

planning for crew activities aboard the International Space

Station (ISS). For the ISS, there is the additional complication that the resulting plans will be executed by astronauts,

rather than robots. The plans must therefore be easily understandable by the astronauts, who may want to ask their

own questions. As smart executives, astronauts don’t readily

tolerate stupidity or obvious inefficiency in the plans. In addition, they may take the liberty of reordering actions, interleaving tasks, collaborating, or substituting resources. This

means that any run-time replanning must be able to model

and take these deviations into account as well.

Acknowledgments

This paper grew out of a panel presentation I originally gave

at ICAPS 2010. Thanks to John Bresina, Paul Morris, and

Tony Barrett for their insights and observations on rover

planning. Thanks to Jeremy Frank for insights on ISS crew

activity planning. Thanks to Jörg Hoffmann for comments on

the paper, and for pointing out the connection with preference elicitation. Thanks to Ronen Brafman for additional insights on work in preference elicitation. This work has been

supported by the NASA Exploration Systems Program and

the NASA Aviation Safety Program.


Tate, A.; Dalton, J.; and Levine, J. 1998. Generation of

multiple qualitatively different plan options. In Proc. of

the Fourth Intl. Conf. on Artificial Intelligence Planning and

Scheduling (AIPS-98).

Viappiani, P.; Faltings, B.; and Pu, P. 2006. Preference-based search using example-critiquing with suggestions.

Journal of Artificial Intelligence Research 27:465–503.

Younes, H., and Simmons, R. 2004. Solving generalized

semi-Markov decision processes using continuous phase-type distributions. In Proc. of the Nineteenth National Conf.

on Artificial Intelligence (AAAI-04), 742.
