Generalized frequent episodes in event sequences

advertisement

Generalized frequent episodes in event sequences

Srivatsan Laxman, K.P.Unnikrishnan∗and P.S.Sastry

Dept. Electrical Engineering

Indian Institute of Science

Bangalore 560012, INDIA

Abstract

Discovering patterns in temporal data is an important task

in Data Mining. A successful method for this was proposed

by Mannila et al. [1] in 1997. In their framework, mining

for temporal patterns in a database of sequences of events is

done by discovering the so called frequent episodes. These

episodes characterize interesting collections of events occurring relatively close to each other in some partial order.

However, in this framework (and in many others for finding patterns in event sequences), the ordering of events in

an event sequence is the only allowed temporal information.

But there are many applications where the events are not instantaneous; they have time durations. Interesting episodes

that we want to discover may need to contain information

regarding event durations etc. In this paper we extend Mannila et al.’s framework to tackle such issues. In our generalized formulation, episodes are defined so that much more

temporal information about events can be incorporated into

the structure of an episode. This significantly enhances the

expressive capability of the rules that can be discovered in

the frequent episode framework. We also present algorithms

for discovering such generalized frequent episodes.

1.

INTRODUCTION

Data sets with temporal dependencies frequently occur in

business, engineering and scientific scenarios. Development

of data mining techniques specially suited for such data sets

is rapidly gaining momentum today (see, e.g., [1] and references therein). These data sets may often be viewed as logs

(or records) of events occurring in one or more sequences.

The goal of data mining here would be to identify patterns in

the form of some partially ordered collection of events that

are interesting or can serve some useful predictive function.

An often cited example of temporal data is the customer

transaction log in a grocery store. Each purchase by a customer may be regarded as an event in the transaction logs.

One can either maintain different transaction sequences for

∗

General Motors R&D Center, Warren, MI

Permission to make digital or hard copies of all or part of this work for

personal or classroom use is granted without fee provided that copies are

not made or distributed for profit or commercial advantage and that copies

bear this notice and the full citation on the first page. To copy otherwise, to

republish, to post on servers or to redistribute to lists, requires prior specific

permission and/or a fee.

Copyright 200X ACM X-XXXXX-XX-X/XX/XX ...$5.00.

different customers, or a single sequence for all the customers

put together. Discovering frequent (or popular) buying patterns from these transaction logs can be of great use to the

store [2, 3]. Another example for a temporal database is

an alarm sequence in a telecommunication network [1]. The

goal of data mining here would be to find relationships between alarms in the sequence. This would help in predicting

impending alarms and faults in an on-line analysis of the incoming alarm stream.

It is important to note that in both the examples cited

above, the data is viewed as a time-ordered sequence of

events. This ordering of events is the only temporal information that is used for defining patterns or episodes that

we want to discover through mining techniques. In other

words, the events logged are viewed as instantaneous, and

the duration of existence of an event is is not considered relevant for the discovery process. This is true of most temporal

data mining techniques today. There have been some recent

attempts to allow time durations for events while analyzing

temporal data [4] though such information does not seem

to have been used for defining what constitute interesting

sequential patterns.

There are many applications where the event duration can

often be a very important attribute. Consider, for example,

the logs of an assembly line in a manufacturing plant. At

every change in state of the line, we can log (as an event)

the current state along with its start time. This would

yield a temporal database in which each event is associated with (among other attributes) its duration of existence.

The assembly line could either be running (with the current

throughput metric logged as an attribute) or it could have

stopped for a variety of reasons, such as an electrical system malfunction, a mechanical system malfunction, a lunch

break or an end of shift. The role of data mining here would

typically be to discover patterns of frequent breakdown and

to suggest potential process improvements for achieving better throughputs and lower down times. The significance of

event durations in such applications cannot be overemphasized. For example, extended lunch breaks certainly do not

help the plant’s throughput! Or frequent short stoppages

due to a series of electrical system malfunctions could foretell a severe power problem in the plant. Clearly, many of

the interesting rules that we will want to discover (in this

assembly line example) require constructs with some timing information built into them. This motivates our idea

of introducing time durations and time intervals into the

definition of an episode.

In this paper we extend the approach of discovering fre-

0

5

10

E1 E3

E1

15

20

E2

25

TIME

30

35

E1

E2

40

45

E3

50

55

60

E4

65

E1

different kinds of valid time overlaps that successive events

in the sequence may possess.

It is easy to see that the duration of the ith event (denoted

by ∆i ) in the event sequence (s, Ts , Te ), can be simply written as

70

E4

Figure 1: An event sequence

∆i = τi − ti ∀i.

quent episodes as discussed in [1] for incorporating time duration information into episodes. We propose the generalized

serial episode which incorporates explicit timing information

for the events that constitute it. In the sections to follow

we first define the generalized serial episode by associating

event durations with each node of the episode. Next we

present algorithms for discovering rules in this generalized

framework. We show that the algorithms described in [1]

for finding frequent serial episodes can be adapted to meet

our requirements. We then briefly discuss how we can easily extend our definition of the episode to incorporate time

intervals between nodes in an episode. We also discuss how

we can associate with each node, not just a single event, but

a collection of events. All these generalizations enhance the

expressive power of the rules that can be discovered in the

generalized serial episode framework.

2.

EPISODES AND SUBEPISODES

The problem we consider is the following. We are given a

time-ordered sequence of events with each event persisting

for some duration. We want to discover interesting patterns,

which will be called generalized serial episodes, that denote

some ordered collection of events that occur frequently in the

data sequence. The definition for episodes allows specification of both the events that constitute it and the duration

of such events. While the basic framework is similar to that

in [1], the main difference is in the incorporation of time

durations.

This section presents our definitions for the generalized

serial episode and its subepisode. Let E denote a finite set

of possible event types,

E = {E1 , E2 , . . . , EM }.

(1)

An event is defined as a triple (A, t, τ ), where A ∈ E, t is

the event start time (an integer) and τ is the event end time

(also an integer). We shall require of t and τ that τ > t in all

events. It is important to note that unlike in [1] the events

here are not instantaneous and hence require (for their full

specification) both start and end times.

An event sequence on E is simply an ordered collection of

events and is represented by the triple (s, Ts , Te ), with Ts

and Te fixing the lower and upper time extremities for the

sequence and

s = {(A1 , t1 , τ1 ), (A2 , t2 , τ2 ), . . . , (An , tn , τn )}

(2)

where Ai ∈ E & ti , τi ∈ [Ts , Te ], 1 ≤ i ≤ n, and ti ≥

ti−1 , 2 ≤ i ≤ n. Clearly, our definition of the event sequence allows for successive events in it to occur in either

one of simultaneous, disjoint or partially overlapping time

intervals. These notions do not exist when the events in the

sequence are viewed as instantaneous as in [1].

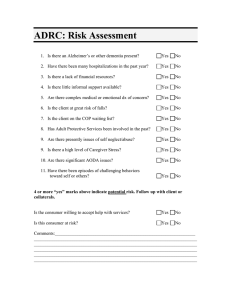

Fig. 1 illustrates a typical example for an event sequence.

The line segments represent the time intervals in which the

events occur. The number above each line segment corresponds to the event’s duration of occurrence. Notice the

(3)

In the manufacturing plant example that we considered in

Section 1, the event sequence basically describes the state

of the line as a function of time, with the state at any given

instant being fully specified by the conjunction of all event

types that occur simultaneously (at that instant). For example, there could well be a simultaneous breakdown of different subsystems, each being denoted by a separate event

in the sequence. An important special case of our event sequence would be one where we have ti+1 = τi , ∀i. This

would be the case for our manufacturing system example

if the event type definition is such that only one type of

event can exist at any given time. By incorporating event

start and end times in our event sequences, we expect to be

able to define and discover episodes with greater expressive

capability in them.

A window on the event sequence (s, Ts , Te ) is simply a

time slice from that sequence and is specified by another

triple (w, ts , te ). The window may be regarded as an event

sequence in its own right. ts and te are the window’s time extremities and w is an appropriate contiguous slice of events

from s.

It is important however to note that events in s that start

after ts but terminate only beyond te need to be dropped

out of w. For example, for the event sequence specified in

Fig. 1 the window (w, 0, 10) includes the event (E4 , 06, 08)

but excludes (E2 , 06, 12) and (E1 , 06, 15), since their end

times go beyond the upper extremity of the window.

2.1 The generalized serial episode

We are now ready to define the generalized serial episode

along the same lines as the serial episode definition of [1].

We can think of the episode1 as a finite ordered collection of

nodes. Each node is associated with an event type and a collection of time intervals (which describe the event duration

possibilities for that node).

Let B = {B1 , . . . , BK } denote a collection of time intervals. Recall that E is the set of possible event types.

The generalized serial episode is defined as a quadruple α =

(Vα , <α , gα , dα ). Vα is a finite collection of nodes and <α

specifies a total order over it. gα and dα are both 1-1 maps

where gα : Vα → E specifies the rule for associating an event

type with each node and dα : Vα → 2B maps each node to

the possible event durations that episode α allows. As a

notation, we denote the number of nodes in Vα by n.

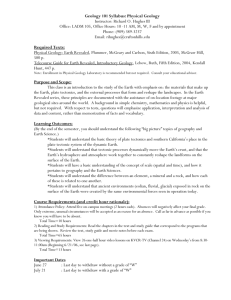

Fig. 2 pictorially depicts a few example episodes. The

event type associated with a node is written inside the circle that represents it. The set written directly below each

node represents the subset of 2B to which (the episode’s) dα

maps that node. In these examples, we have chosen the collection of time intervals as B = {[0, 3], [4, 7], [8, 11], [12, 15]}.

It is important to observe that α2 and α4 represent different episodes, purely on the basis of having different timing

information (i. e. different d(·) maps). Having proposed this

modified definition for the episode, the most natural ques1

From here on the term “episode” always refers to the generalized serial episode.

“α1 ”

“α2 ”

n

n

E2

E2

{[0, 3], [8, 11]}

{[0, 3], [4, 7], [12, 15]}

α (s, Ts , Te ) and β α =⇒ β (s, Ts , Te ).

B \ {[0, 3]}

E1

- En

4

B

B

n

E2

{[0, 3], [8, 11]}

- En

3

{[12, 15]}

hβ (v) = hα (fβα (v)) ∀v ∈ Vβ .

- En

1

B \ {[12, 15]}

B

- En

4

{[0, 3], [4, 7]}

3. SIMILAR AND PRINCIPAL EPISODES

Figure 2: Some example episodes

tion to ask next is when does such an episode occur in a

given event sequence?

Consider an episode α = (Vα , <α , gα , dα ). Let the number of nodes in α (i. e. |Vα |) be n. The episode α is

said to occur in the event sequence (s, Ts , Te ) if ∃ a 11 hα : Vα → {1, . . . , n} such that the following conditions

hold ∀v, w ∈ Vα :

Ahα (v) = gα (v),

∃b ∈ dα (v) such that ∆hα (v) ∈ b and

v <α w in Vα =⇒ thα (v) thα (w) in s.

(4)

(5)

(6)

The occurrence of episode α in the event sequence (s, Ts , Te )

is written as α (s, Ts , Te ). It can be immediately seen that

among the example episodes defined in Fig. 2 all except

α4 occur in the example event sequence of Fig. 1. Also,

since a window in (s, Ts , Te ) may be viewed as an event

sequence on its own, the same definitions are used to determine whether or not an episode occurred in a window of

(s, Ts , Te ) as well. Observe that α1 , α2 , α3 and α5 all occur

in the window (w, 0, 10) itself.

2.2 The subepisode

Consider an episode α = (Vα , <α , gα , dα ). An episode

β = (Vβ , <β , gβ , dβ ) is said to be a subepisode of α (written β α) if ∃ a 1-1 fβα : Vβ → Vα such that ∀v, w ∈ Vβ

we have:

gα (fβα (v)) = gβ (v),

(7)

dα (fβα (v)) ⊆ dβ (v), and

(8)

v <β w in Vβ =⇒ fβα (v) <α fβα (w) in Vα . (9)

Basically, according to our definition of the subepisode, it

does not suffice if just the relative ordering of events in the

candidate subepisode matches that of the episode (which is

the case in [1]). In addition, we also require that the timing

constraints on the events of the candidate subepisode must

be consistent with what those in the episode itself would

allow. Notice that while α1 6 α2 we have α3 α5 and

α2 α4 in Fig. 2.

Strict subepisodes, i. e. β α and β 6= α, are denoted by

β ≺ α. When β α we will sometimes prefer to say that

α is a superepisode of β (written α β)2 . Also, from the

2

Strict superepisodes are similarly denoted like α β.

(11)

This is a “suitable” map hβ (·) which demonstrates that β (s, Ts , Te ).

“α5 ”

n

E2

(10)

Since α (s, Ts , Te ) there is a “suitable” hα : Vα →

{1, 2, . . . , n} and since β α there is also a “suitable” fβα :

Vβ → Vα . Thus, we can simply define a new map hβ :

Vα → {1, 2, . . . , n} as

“α4 ”

“α3 ”

n

definitions thus far it can be easily see that

- En

3

With definitions for the episode, subepisode and occurrence of an episode now in place, we proceed to introduce

the important concepts of similar and principal episodes. It

is by virtue of these concepts that our framework for rule

discovery becomes both interesting and feasible. These ideas

are closely tied to the notion of frequency of an episode in

an event sequence.

Consider an event sequence (s, Ts , Te ). For the sake of

notational succinctness this event sequence triple is denoted

by S. Let λS (α) represent some measure of how often α

occurs in the event sequence S. This could be based either

on the number of occurrences of α, or on the number of

windows of S which contain α (both in the same sense as

in [1]) or on just any other consistent frequency measure.

Whatever the definition of λS (α) we shall require of it the

property that

β ≺ α =⇒ λS (β) ≥ λS (α)

(12)

i. e. in a given event sequence, the subepisodes are always at

least as frequent (if not more frequent) as their corresponding superepisodes. This is a very important property which

needs to be exploited well in order to facilitate efficient rule

discovery using frequent episodes.

We now motivate the idea of similar and principal episodes

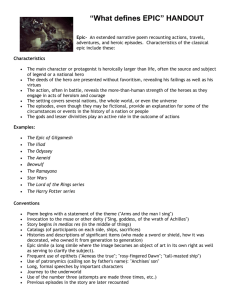

by examining the occurrence (in the event sequence example

of Fig. 1) of some simple episodes defined in Fig. 3. Notice

that all the episodes have identical event type assignments

i. e. they have the same gα (·) maps. However, since the

episodes describe events with different timing information

they differ in their dα (·) maps. Let us represent the event

sequence in Fig. 1 by S. Further, in this example, our choice

for the λS (·) function is simply the number of occurrences

(of the episode) in the event sequence S.

3.1 Similar episodes

First we consider the βi ’s in Fig. 3. Since β3 ≺ β2 ≺ β1 ≺

β0 and since β0 S it can be inferred (from Eq. 10) that

βi S 0 ≤ i ≤ 3. The βi ’s differ only in the durations that

they allow for the second node (with event type E1 ). As

more and more intervals are deemed allowed in the episode,

we naturally expect the episode frequency to increase in S.

This can be readily seen from Eq. (12). By counting the

number of occurrences in S (i. e. the event sequence in Fig. 1)

we find that, for the βi ’s in Fig. 3

λS (β3 ) = λS (β2 ) > λS (β1 ) > λS (β0 ).

(13)

Essentially, with respect to their frequencies of occurrence

in S, the episodes β2 and β3 are indistinguishable. Such

episodes are referred to as being similar in S. It can be

“β0 ”

n

E3

cipal episode is one in which each time interval in each event

duration set (of the episode) has earned its place by virtue

of a strictly positive contribution to the episode’s frequency

in the event sequence.

An episode α∗ is said to be principal in an event sequence

S if the following two conditions hold:

- En

1

{[4, 7]}

{[4, 7]}

“β1 ”

E3

- En

1

{[4, 7]}

n

{[4, 7], [8, 11]}

α∗ S and

∗

(17)

S

∗

@α α such that α ∼ α .

“β2 ”

n

E3

{[4, 7]}

- En

1

B \ {[12, 15]}

“β3 ”

E3

- En

1

{[4, 7]}

n

B

Figure 3: Similar and principal episodes

likewise verified that among the γi ’s in Fig. 3 the episodes

γ1 and γ2 are also similar in S. In fact, of the episodes

defined in Fig. 3 the pairs {β2 , β3 } and {γ1 , γ2 } are the only

sets of similar episodes with respect to the event sequence

S. It is important to note that unlike in the case of episodes

and subepisodes, similar episodes are always defined with

respect to a given event sequence only. Two episodes are

said to be similar if they are identically defined except for

the fact that one allows some more time intervals (for the

event durations) than the other, and if their frequencies of

occurrence are equal in the given event sequence.

Let us now formally define the concept of similarity between episodes in an event sequence. Two episodes α and

β are said to be similar in the event sequence S (written

S

α ∼ β) if all of the following hold:

α β or β α,

|Vα | = |Vβ | and

λS (α) = λS (β).

(14)

(15)

(16)

It is clear that every episode α is trivially similar to itself

(reflexive). From Eqs. (14)–(16) it is also evident that if

S

S

α ∼ β then β ∼ α (symmetric). Further, it is also easy

S

S

S

to show that if α ∼ β and β ∼ γ then α ∼ γ (transitive).

Similarity between episodes (in a given event sequence) is

therefore an equivalence relation over the set of all episodes.

3.2 The principal episodes

Consider once more the example episodes of Fig. 3. The

event duration sets in all those episodes, except for β3 and

γ2 , have the property that on removing any one time interval (or more) from any of them, the frequency of the corresponding episode (in the event sequence S defined in Fig. 1)

reduces by a strictly non-zero quantity. In other words, the

additional time intervals that β3 and γ2 permit (compared

to say those permitted by β2 and γ1 respectively) for the durations of their second nodes are clearly not interesting given

the event sequence S. We therefore wish to keep episodes

like β3 and γ2 out of our rule discovery process. This leads

us to the idea of principal episodes. Simply speaking, a prin-

(18)

It can be easily verified that all episodes in Fig. 3 excluding β3 and γ2 (and including δ0 ) are principal in the event

sequence of Fig. 1. Notice that as was the case with similar

episodes, the notion of principal episodes is also always tied

to a specific event sequence.

The similarity relation (being an equivalence relation) partitions the space of all episodes into equivalence classes. The

episodes within each class are similar to one another. There

will exist exactly one principal episode in each partition. All

the other episodes in the partition can in fact be generated

by appending time intervals (that do not contribute to the

eventual episode frequency) to the event duration sets of the

principal episode.

When we are concerned with finite length event sequences,

the space of all possible episodes that can occur in the sequence is definitely finite. Similarity with respect to the

event sequence naturally yields a finite partitioning of this

episode space. Each partition has exactly one principal

episode which may be regarded as the generator of that

equivalence class. Therefore it is guaranteed that the collection of all principal episodes in a given finite length event

sequence is always a well defined and finite set.

Subepisodes of principal episodes are in general not principal. Indeed, the non-principal episodes in each partition of

our similarity-based quotient space are after all subepisodes

of the partition’s generator (or principal episode). However,

all subepisodes generated by dropping nodes (rather than

by appending time intervals to the d(·) sets) of a principal episode are most certainly principal. Consider an l-node

principal episode α∗l . Let α∗l−1 be an (l −1)-node subepisode

of α∗l obtained by dropping one of its nodes (and keeping

everything else identical). Recall that every time interval

in the d(·) sets of α∗l contributes a strictly positive quantity to its frequency in the (given) event sequence (say S).

Therefore, dropping a time interval from the d(·) set of a

node in α∗l will reduce its frequency by a strictly positive

quantity. Removing the same time interval from the corresponding node in α∗l−1 will naturally cause a similar fall in

frequency since α∗l−1 S every time α∗l S. This shows that

there can be no “redundant” time intervals in the d(·) sets

of α∗l−1 (and therefore it is also principal in S). This is a

very important property which needs to be exploited during

the candidate generation step (in Algorithm 3) of our rule

discovery process.

4. DISCOVERING RULES

We are now ready to describe the rule discovery process

in our generalized framework. It is very much in the same

lines as in [1] except that we work with the collection of

frequent principal episodes FS∗ rather than just the set of

frequent episodes FS in the event sequence S. In this section

we describe the various algorithms that constitute our rule

discovery process. Each of these algorithms is an adaptation

of its counterpart in [1]. For completeness, we provide all

the algorithms here. However, to keep the discussion brief,

we only indicate the prominent differences with respect to

the algorithms in [1] in each case. Thus, this section assumes

that the reader is familiar with the algorithms in [1].

4.1 The rules

Let FS∗ denote the set of all frequent principal episodes

in S. We are looking for rules like β ∗ → α∗ , where β ∗ ≺

α∗ and α∗ , β ∗ ∈ FS∗ . To obtain such rules we need to

first compute all the frequent principal episodes in the event

sequence S. For each frequent episode-subepisode pair, we

declare that the subepisode implies the episode if the ratio of

their frequencies of occurrence in the event sequence exceeds

a threshold. This is what Algorithm 1 does. The important

difference with respect to the algorithm proposed in [1] is

that here the episodes and subepisodes considered for rule

discovery are all not just frequent but also principal in S.

Algorithm 1 To discover rules based on the frequent principal episodes of an event sequence

Input: Set E of event types, event sequence S, set E of allowed

episodes, window width bw , minimum frequency λmin , minimum confidence ρmin , set B of allowed time intervals

Output: Rules that hold in S

1: /∗ Find set FS∗ of all frequent principal episodes in S ∗/

2: Compute FS∗ using Algorithm 2

3: /∗ Generate rules ∗/

4: for all α∗ ∈ FS∗ do

5: for all β ∗ ∈ FS∗ such that β ∗ ≺ α∗ do

6:

if (λS (α∗ )/λS (β ∗ )) ≥ ρmin then

7:

Output the rule β ∗ → α∗

4.2 The frequent principal episodes

The process of computing the set of frequent principal

episodes is incremental and is done through Algorithm 2.

We start with the set C1 of all possible 1-node episodes. F1∗ is

the collection of frequent principal episodes in C1 . Once F1∗

is obtained, the collection C2 of candidate 2-node episodes

is generated from it. This incremental process of first obtaining Fl∗ from the candidates in Cl and then generating

Cl+1 from Fl∗ is repeated till the set of new candidates generated is empty. The crucial step here is to designate an

episode as a candidate only if all the subepisodes obtained

by dropping a node from it have already been declared as

being both frequent and principal.

4.3 Candidate generation

The candidate generation (i. e. Algorithm 3) is also along

the same lines as that in [1]. The l-node frequent principal episodes are arranged as a lexicographically sorted collection Fl∗ of l-length arrays (of event types). However,

the ith episode in the collection now needs two components

for its specification – the first is Fl∗ [i].g[j] which refers to

the event type association for j th node, and the second is

Fl∗ [i].d[j] which stores the collection of intervals associated

with the j th node, the kth element of which is denoted by

Fl∗ [i].d[j][k]. The episode collection is viewed as to be constituted in blocks (like in [1]) such that within each block in

Fl∗ the first (l − 1) nodes are identical i. e. both the event

type as well as the timing information of the first (l − 1)

nodes are the same for the episodes in a block. Potential

(l + 1)-node candidates are constructed by combining two

Algorithm 2 To compute the set of frequent principal

episodes in an event sequence

Input: Set E of event types, event sequence S, set E of allowed

episodes, window width bw , minimum frequency λmin , set B

of allowed time intervals

Output: Set FS∗ of all frequent principal episodes in S

1: Initialize FS∗ = φ

2: /∗ Construct set C1 of 1-node candidate episodes ∗/

3: Compute C1 = {α ∈ E such that |α.g| = 1}

4: Initialize l = 1

5: while Cl 6= φ do

6: /∗ Obtain set Fl∗ of all l-node frequent principal episodes

∗/

7: Compute Fl∗ = {α ∈ Cl such that λS (α) ≥ λmin &

α is principal in S} using Algorithm 4

8: /∗ Obtain set Cl+1 of all (l + 1)-node candidate episodes

∗/

9: Compute

Cl+1 = {α ∈ El+1 such that ∀β ≺ α with |β.g| < l

∗

we have β ∈ F|β.g|

}

using Algorithm 3

10: Update l = l + 1

11: for all l do

S

12: Update FS∗ = FS∗ Fl∗

∗

13: Output FS

episodes within a block. The newly constructed (l + 1)-node

episode is declared a candidate only if every subepisode generated by dropping one of its nodes is already known to be

principal and frequent (and hence must have been found in

Fl∗ ).

4.4 Counting frequencies of principal episodes

We now need an algorithm that can compute the frequency of the candidates that have been generated. Algorithm 4 implements a frequency computing scheme. The

episode frequency is defined based on the number of windows of the event sequence that contain the episode. This

is identical to the frequency definition used in [1]. Since our

episodes and their occurrence in an event sequence are essentially generalizations of the concepts defined in [1], the

basic idea of using finite state automata to recognize episode

occurrences continue to be very useful. However, the exact initialization and updating procedures for the automata

differ slightly to account for our generalizations. In Algorithm 4, waits(A, δ) (rather than the waits(A) of [1]) stores

(α, i) pairs, indicating that an automaton for episode α is

waiting in its ith state for the event type A to occur with a

duration in the δ time interval. This is the main difference

between the algorithm proposed in [1] and our algorithm

here. All the other structures have identical functions in

both the algorithms. The number of windows of S that contain α is stored in α.f req. (α, j) ∈ beginsat(t) indicates

that an automaton for α has reached its j th state at time

t. trans stores the various automata transitions in the current window shift. Each element in trans is a triple (α, j, t)

implying that an automaton for α that was initialized at

time t has transited to state j in the current window. An

important step in Algorithm 4 (that was not needed in its

counterpart in [1]) is the check for principal episodes. Once

all the frequencies have been computed, an l-node episode

is declared as principal only if all its l-node superepisodes

differ in frequency with respect to itself.

It can be seen that this principal episode check in Algorithm 4 is the only serious computational overhead that our

Algorithm 3 To generate (l + 1)-node candidates from lnode frequent principal episodes

Input: Set Fl∗ of appropriately sorted l-node frequent principal

episodes, set B of allowed time intervals

Output: Set Cl+1 of appropriately sorted (l + 1)-node candidate

episodes

1: Initialize Cl+1 = φ and k = 0

2: if l = 1 then

3: for h = 1 to |Fl∗ | do

4:

Initialize Fl∗ [i].BlkStart = 1

5: for i = 1 to |Fl∗ | do

6: Initialize CurrentBlkStart = k + 1

7: for (j = Fl∗ [i].BlkStart;

Fl∗ [j].BlkStart = Fl∗ [i].BlkStart; j = j + 1) do

8:

/∗ Fl∗ [i] and Fl∗ [j] have their first (l − 1) nodes common

∗/

9:

/∗ Build a potential candidate α from these two ∗/

10:

for x = 1 to l do

11:

Assign α.g[x] = Fl∗ [i].g[x] and α.d[x] = Fl∗ [i].d[x]

12:

Assign α.g[l + 1] = Fl∗ [j].g[l] and α.d[l + 1] = Fl∗ [j].d[l]

13:

/∗ Build and test subepisodes of α ∗/

14:

for y = 1 to (l − 1) do

15:

for x = 1 to (y − 1) do

16:

Assign β.g[x] = α.g[x] and β.d[x] = α.d[x]

17:

for x = y to l do

18:

Assign β.g[x] = α.g[x + 1] and β.d[x] = α.d[x + 1]

19:

if β 6∈ Fl∗ then

20:

Goto next j in line 8

21:

Update k = k + 1

22:

Assign Cl+1 [k] = α and

Cl+1 [k].BlkStart = CurrentBlkStart

23: Output Cl+1

rule discovery process has when compared to the algorithms

in [1]. This, and of course, the natural swelling of the set

of all episodes due to the inclusion of timing information

in them, are the only significant sources for the additional

computational cost that we need to bear.

5.

DISCUSSION

In this paper our objective is to show how the framework

for discovering frequent episodes, due to Mannila et al. [1],

can be extended to handle applications where time durations

of events is an important attribute. As briefly outlined in

Section 1, being able to include time durations of events

is useful, e.g., in analyzing failure modes of manufacturing

processes. The framework presented here is being developed

specifically to handle such an application.

At the level of formalism, the main change needed is the

incorporation of event durations explicitly in the definition

of episodes. Thus, in defining the generalized serial episode

(cf. Section 2.1), in addition to the node set, Vα , the total

order <α , and the map gα that assigns an event type to

each node, we also included dα that prescribes the allowable

time durations for events associated with different nodes.

Given that the data of event sequence contains time durations of events, it is now fairly straight forward to count the

frequency of such generalized events. However, incorporation of dα results in a structure whereby interesting frequent

episodes now become equivalence classes rather than single

episodes. This is the reason we defined the concept of principal episodes in Section 3 so that the search for frequent

episodes does not return too many redundant ones.

The main computational task here is to discover all frequent principal episodes. The algorithms presented follow

essentially the same strategy as in [1], namely, constructing

frequent episodes of progressively increasing length starting with episodes of length one. However, the inclusion

of dα and the need to ensure that only principal episodes

are considered as candidates, together imply that the algorithms presented in [1] need to be modified. As explained in

Section 4, the needed modifications are easily implemented

without too much additional computational burden.

It may be worth noting that the structure developed here

is a strict generalization of the serial episodes discussed in

[1]. If we always choose dα as the set of all possible time

durations, then we essentially get back the serial episodes of

[1].

There are some modifications to the structure of generalized serial episodes that are easily implemented and which

enhance the expressive capabilities of the formalism. We

briefly describe two such modifications below.

We can associate more than one event type with each node

in defining episodes. This is done by making the gα a function from Vα to 2E . That is, both gα and dα would become

set-valued functions now. This would make our generalized

serial episodes to include some parallel episodes as defined

in [1] as well, in addition to being a strict generalization of

serial episodes. However, this would not result in a structure

that is a strict generalization of parallel episodes also. Our

structure would not be able to include all parallel episodes of

[1] though it would contain episodes that are not expressible

either as serial episodes or as parallel episodes. For example,

consider a two node episode where the first node is associated with the set {A, C} and the second node is associated

with {B}. This would represent a pattern of either A or C

but not both occurring before B. Such an episode cannot

be represented in the formalism of [1]. On the other hand,

in our formalism, we cannot represent the episode that says

both A and C should occur, in any order, before B, as a

single episode.

Discovering such generalized episodes is also easily done.

We have explained in Section 3 that because dα is a setvalued function, one can construct subepisodes that are not

interesting by simply tagging more time intervals with each

node. That is why we defined the concept of principal

episodes. In a similar way, when gα is a set-valued function,

we can make uninteresting subepisodes by simply tagging

irrelevant event types with each node. Hence, we need to

modify our definition of similar and principal episodes to

take care of this redundancy due to the new set-valued gα

function also. This is done easily. Now the modifications

needed for the algorithms would be similar to what we have

done to take care of the requirements due to presence of dα

in our generalized serial episodes.

There is another direction in which we can extend the

formalism presented here. In the generalized serial episode

framework presented here, we have included only intra-event

time durations. That is, for an episode to occur in an

event sequence, the only additional constraints here are in

terms of duration of individual events. Another kind of constraint that may be relevant in applications is in terms of

the time interval between successive events in the episodes.

This is very easily done by changing the definition of ∆i to

∆i = ti+1 − ti in Eq. (3). Now the dα function prescribes

constraints on the duration between starting times of successive events. The only modification needed in the algorithms

now is in the part where we decide whether an episode has

occurred in an event sequence while counting the frequency.

As is easy to see, we can extend our structure to take care

of both intra-event and inter-event time constraints. In the

definition of an episode, instead of having only one function

dα , we can have two functions, say, d1α and d2α , one to take

care of intra-event time constraints and another to take care

of inter-event time constraints.

Thus, the structure proposed in this paper allows one to

discover episodes or patterns in which information regarding time durations of events can form an important part.

Most of the currently available data mining techniques do

not allow for this though such a capability may be useful in

analyzing data pertaining to many engineering processes. It

is in this sense that we feel our extension of the framework

of Mannila et al. [1] is significant in enriching the expressive

capabilities of the technique of discovering frequent episodes.

Acknowledgements

The work reported here is supported in part by project

funded by GM R&D Center, Warren, through SID, IISc,

Bangalore.

6.

REFERENCES

[1] H. Mannila, H. Toivonen, and A. I. Verkamo,

“Discovery of frequent episodes in event sequences,”

Data Mining and Knowledge Discovery, vol. 1, no. 3,

pp. 259–289, 1997.

[2] R. Agrawal and R. Srikant, “Mining sequential

patterns,” in 11th Int’l Conference on Data

Engineering, Taipei, Taiwan, Mar. 1995.

[3] R. Srikanth and R. Agrawal, “Mining generalized

association rules,” in The 21st VLDB Conference,

Zurich, Switzerland, Sept. 1995.

[4] R. Villafane, K. A. Hua, D. Tran, and B. Maulik,

“Mining interval time series,” in Data Warehousing and

Knowledge Discovery, 1999.

Algorithm 4 To generate the set of all l-node frequent principal episodes from the set of l-node candidates

Input: Set C of (candidate) episodes, event sequence S =

(s, Ts , Te ), window width bw , minimum frequency λmin

Output: Subset F (of C) of frequent episodes in the sequence S

1: for all α ∈ C do

2:

for i = 1 to |α.g| do

3:

Initialize α.init[i] = 0

4:

for all δ ∈ α.d[1] do

5:

Initialize waits(α.g[i], δ) = φ

6: for all α ∈ C do

7:

for all δ ∈ α.d[1] do

8:

Update

S

waits(α.g[1], δ) = waits(α.g[1], δ) {(α, 1)}

9:

Initialize α.f req = 0

10: for t = Ts − bw to Ts − 1 do

11:

Initialize beginsat(t) = φ

12: for start = Ts − bw + 1 to Te do

13:

/∗ Start of new window∗/

14:

Initialize beginsat(start + bw − 1) = φ and trans = φ

15:

for all (A, t, τ ) ∈ s such that τ = start + bw − 1 and

(τ − t) ≤ bw do

16:

Assign d = {b ∈ B such that (τ − t) ∈ b}

17:

for all (α, j) ∈ waits(A, d) do

18:

if j = |α.g| and α.init[j] = 0 then

19:

Assign α.inwindow = start

20:

if j = 1 then

S

21:

Update trans = trans {(α, j, t)}

22:

else

S

23:

Update trans = trans {(α, j, α.init[j − 1])}

24:

Update

beginsat(α.init[j − 1]) = beginsat(α.init[j −

1]) \ {(α, j − 1)}

25:

Reset α.init[j − 1] = 0

26:

for all δ ∈ α.d[j] do

27:

Update waits(A, δ) = waits(A, δ) \ {(α, j −

1)}

28:

for all (α, j, t) ∈ trans do

29:

Update α.init[j] = t

S

30:

Update beginsat(t) = beginsat(t) {(α, j)}

31:

if j < |α.g| then

32:

for all δ ∈ α.d[j] do

33:

Update

S waits(α.g[j + 1], δ) = waits(α.g[j +

1], δ) {(α, j + 1)}

34:

for all (α, j) ∈ beginsat(start − 1) do

35:

if j = |α.g| then

36:

Update α.f req = α.f req + start − α.inwindow

37:

else if α.init[j] = start − 1 then

38:

for all δ ∈ α.d[j + 1] do

39:

Update waits(α.g[j + 1], δ) = waits(α.g[j +

1], δ) \ {(α, j + 1)}

40:

Reset α.init[j] = 0

41: /∗ Output ∗/

42: Initialize F ∗ = φ

43: for all α ∈ C such that α.f req/(Te −Ts +bw −1) ≥ λmin

do

44:

for all β α do

45:

if β.f req = α.f req then

46:

Skip to next S

α in line 43

47:

Update F ∗ = F ∗ {α}

48: Output F ∗