From: AAAI Technical Report FS-01-01. Compilation copyright © 2001, AAAI (www.aaai.org). All rights reserved.

Grounding of Robots Behaviors

Louis Hugues and Alexis Drogoul

Université Pierre et Marie Curie, LIP6 - Paris - France

louis.hugues@lip6.fr, alexis.drogoul@lip6.fr

Abstract

This paper addresses the problem of learning robot behaviors in real environments. Robot behaviors have to be grounded not only in the physical world but also in the human space where they are supposed to take place. The paper briefly presents a learning model relying on teaching by demonstration, enabling users to transmit their intentions during real experiments. Important properties are outlined and the probabilistic learning process is described. Finally, we indicate how grounded behaviors could be interfaced to a symbolic level.

Teaching by Showing

Works related to this view include Programming by Demonstration (Cypher 93), Imitative Learning (Demiris and Hayes 96), and supervised learning methods such as the ALVINN system (Pomerleau 93).

Introduction

It has been shown in numerous works that the behavior paradigm is a good way to specify the actions of robots in real environments. Once clearly identified and named, behaviors can be incorporated into complex action/selection schemes and can be referred to, symbolically, by deliberative processes at upper levels. Behaviors thus provide a convenient interface between the human designer and the robot. However, behaviors are still difficult to model and formulate in the real world. In realistic applications, robot behaviors have to be adapted to the Physical space and the Human space. In the Physical space, behaviors have to deal with sensor uncertainty and imprecision, the incompleteness of real-world models, and actions too peculiar to be easily defined and specified. In the Human space, they have to be adapted to our intentions and requirements, our habits, the way we organize our environment (signs, stairways...) and the importance we give to various contexts.

Figure 1: Three examples which may be used to shape the behavior "Exit by the door".

We propose a model for behavior learning by demonstration; more details can be found in (Hugues and Drogoul 2001). The approach insists on the following points:

• Shaping a behavior with a few examples: The user must be able to incrementally shape the behavior by showing a few examples. To teach a behavior such as "Exit by the door" in Figure 1, we would like to demonstrate it by showing only a few significant facets of the behavior.

• Finding a perceptual support for the behavior: A behavior needs a support in the perceptual field, some features to rely on for choosing the right actions. The features to be detected cannot be modeled a priori; they must be inferred from the demonstrations. In a way inspired by Gibson's theory of affordances (Gibson 86), the environment is viewed as directly providing a support for possible behaviors. This leads us to learn actions by relating them to the elements of the perceptual field at the lowest level.

• Context of the behavior: The behavior has to be independent of irrelevant features of the contexts in which it is realized, and it must be triggered only in valid contexts. The identification of contexts has to be tightly associated with the behavior description.

Behaviors have to be grounded in both the Physical and the Human space, and neither explicit programming of behaviors nor optimization processes (genetic algorithms, reinforcement learning...) can alone provide a satisfying adaptation to both spaces. Teaching behaviors by showing them to the robot makes it possible to address this problem. By showing them directly (i.e., using a remote control device), robot behaviors are anchored from the beginning in the only space that matters: the space of the final user's intentions in his or her real physical environment. The learning process can benefit from real experiences containing pertinent associations of perception and action.


Behavior Synthesis

A synthetic behavior is represented by a large population of elementary cells. Each cell observes, across the examples, a particular point in the perceptual field and statistically records the values of the effectors. Cells are activated by the detection of specific properties (mainly visual) of the perceptual field, such as the color, density, or gradient of small pixel regions. A cell records the arithmetic mean of the robot's effector values taken over its periods of activation; the importance of a particular cell depends on the number of its activations. During the reproduction of the behavior, the current values of the effectors are obtained by a weighted combination of the active cells.
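As a minimal sketch of the cell mechanism described above, the following Python fragment keeps a running arithmetic mean per cell and combines active cells weighted by their activation counts. The names `Cell`, `record` and `combine` are hypothetical, a single scalar effector is assumed for brevity, and returning 0.0 when nothing has been learned is an assumption rather than something the model specifies.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    """One elementary cell watching a single point of the perceptual field."""
    mean: float = 0.0      # running arithmetic mean of the effector value
    activations: int = 0   # number of activations, used as the cell's importance

    def record(self, effector_value: float) -> None:
        """Update the running mean with the effector value seen while active."""
        self.activations += 1
        self.mean += (effector_value - self.mean) / self.activations


def combine(active_cells: list[Cell]) -> float:
    """Weighted mean of the stored values, weighted by activation counts."""
    total = sum(c.activations for c in active_cells)
    if total == 0:
        return 0.0  # assumption: neutral command when nothing has been learned
    return sum(c.mean * c.activations for c in active_cells) / total


a, b = Cell(), Cell()
for v in (1.0, 1.0, 1.0):
    a.record(v)    # a ends with mean 1.0 and importance 3
b.record(-1.0)     # b ends with mean -1.0 and importance 1
command = combine([a, b])  # (3 * 1.0 + 1 * (-1.0)) / 4
```

The incremental update avoids storing the whole history of effector values, which matters when the population of cells is large.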

The synthetic behavior also contains a context description formed by several histograms recording the distribution of perceptual properties (again color, density, etc.) over all the examples. The behavior synthesis is divided into four main steps: (1) individual synthesis of each example into a population of cells and a context structure; (2) fusion of the separate synthetic behaviors into a unique population; (3) real-time use of the cell structure; (4) possibly, online correction by the tutor.
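The paper states that context histograms from several examples are merged by multiplication. A rough illustration, assuming normalized histograms over one scalar property in [0, 1) and a renormalization after the product (the binning, the function names and the renormalization are assumptions, not details given in the text):

```python
def context_histogram(values, bins=4, lo=0.0, hi=1.0):
    """Normalized histogram of one perceptual property (e.g. hue in [lo, hi))."""
    counts = [0] * bins
    for v in values:
        index = min(int((v - lo) / (hi - lo) * bins), bins - 1)
        counts[index] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else counts


def fuse(h1, h2):
    """Merge two histograms by bin-wise multiplication, then renormalize."""
    product = [x * y for x, y in zip(h1, h2)]
    total = sum(product)
    return [p / total for p in product] if total else product


h_a = context_histogram([0.1, 0.1, 0.6, 0.9])  # property values seen in example A
h_b = context_histogram([0.1, 0.2, 0.3, 0.6])  # property values seen in example B
merged = fuse(h_a, h_b)
```

With a product, bins that are populated in only one example drop to zero while bins shared by both examples dominate, which is one way to read the remark that important features are highlighted.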

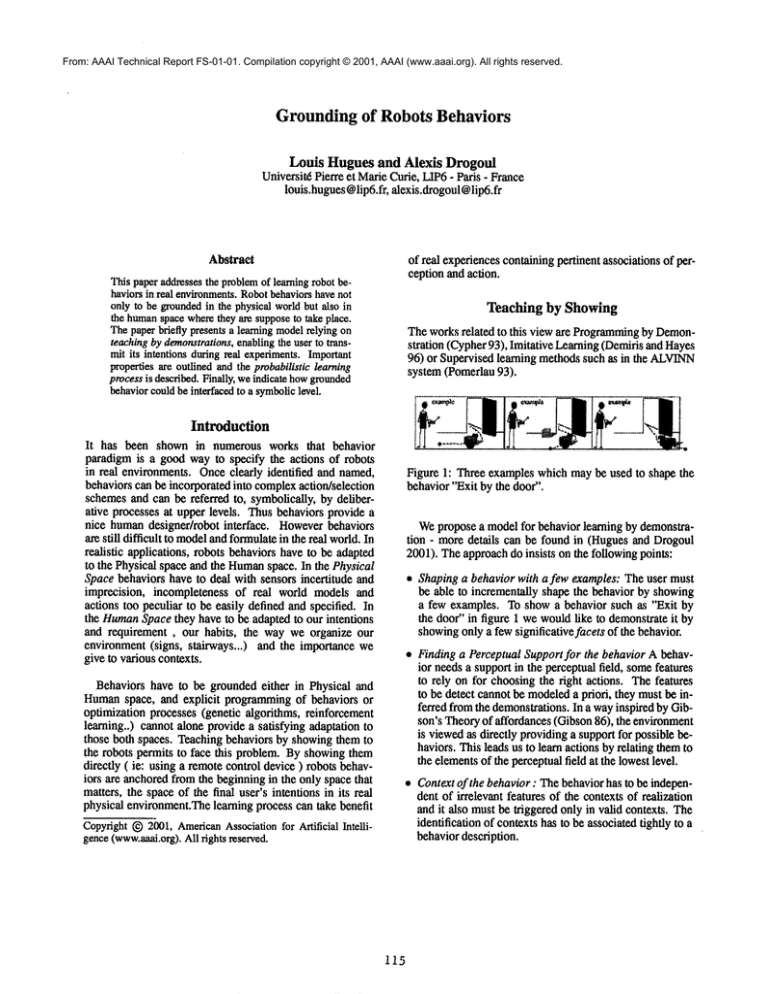

Step 3: Real-time use. At replay time, the behavior can be activated if the context histogram matches the current video images. The robot is controlled in real time by deducing effector values from those stored in the active cells (using a majority principle). The set of cells reacts like a population with a dominant opinion (the possible opinions being the possible values of the effectors). Video images need only partially overlap the recorded data to produce correct control values.
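The majority principle can be pictured as a vote among the active cells. The sketch below assumes discretized effector values and weights each vote by the cell's importance; both choices, as well as the name `majority_command`, are illustrative assumptions and not the authors' implementation.

```python
from collections import defaultdict


def majority_command(active_cells):
    """Pick the effector value backed by the largest total importance.

    active_cells: iterable of (proposed_value, importance) pairs, where the
    proposed value is assumed to be discretized (e.g. "forward", "left").
    """
    votes = defaultdict(float)
    for value, importance in active_cells:
        votes[value] += importance
    if not votes:
        return None  # assumption: no active cell means no command
    return max(votes, key=votes.get)


# Hypothetical situations for the "Exit by the door" behavior.
near_door = [("left", 5), ("left", 3), ("forward", 4), ("right", 1)]
far_from_door = [("forward", 6), ("left", 2)]
```

Because the decision only needs a dominant opinion, a partial overlap between the current image and the recorded data can still yield the right command, as long as the cells that do fire mostly agree.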

Step 4: Online correction. The final behavior can be corrected online by the tutor. The tutor corrects the robot's movements by using the joystick in the opposite direction. Active cells are corrected in proportion to their importance.
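One possible reading of "corrected in proportion to their importance" is sketched below: each active cell's stored value is shifted by the tutor's joystick offset, scaled by the cell's share of the total activation count. The update rule, the `rate` gain and the dictionary layout are all assumptions made for illustration; the paper does not give the exact formula.

```python
def apply_correction(cells, correction, rate=0.5):
    """Shift the stored value of each active cell by the tutor's correction.

    cells: list of dicts with keys "mean" and "activations" (active cells only).
    correction: signed joystick offset given by the tutor.
    rate: hypothetical gain controlling how strongly one correction acts.
    """
    total = sum(c["activations"] for c in cells)
    if total == 0:
        return
    for c in cells:
        share = c["activations"] / total  # relative importance of the cell
        c["mean"] += rate * correction * share


cells = [{"mean": 1.0, "activations": 3}, {"mean": 0.0, "activations": 1}]
apply_correction(cells, correction=-0.8)
```

In this toy case the more important cell absorbs three quarters of the correction, so frequently activated cells are the ones whose stored values move the most.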

Behavior interface with symbolic level

Modules operating at the symbolic level (mainly action/selection) can interface with a grounded behavior by referring to its logical definition (what it is supposed to do in logical/symbolic terms) and its associated context stimulus. The user can reshape the real definition of the behavior independently of the symbolic modules. The value of the stimulus corresponds to the quality of the match with the context histogram and is used to trigger the behavior. The model thus provides support for programming at the high level and grounding in real data at the behavior level.
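To make the interface concrete, here is a small sketch in which a symbolic module sees each grounded behavior only through a name and a stimulus function. Histogram intersection is used as the match-quality measure and 0.6 as the trigger threshold; both are assumptions, since the paper does not specify the metric, and `GroundedBehavior` and `select` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class GroundedBehavior:
    name: str                          # logical definition seen by the symbolic level
    stimulus: Callable[[list], float]  # context-match quality, assumed in [0, 1]


def histogram_intersection(h1, h2):
    """Match quality of two normalized histograms (1.0 means identical)."""
    return sum(min(x, y) for x, y in zip(h1, h2))


def select(behaviors, current_histogram, threshold=0.6) -> Optional[str]:
    """Return the name of the best-matching behavior above the threshold."""
    scored = [(b.stimulus(current_histogram), b.name) for b in behaviors]
    if not scored:
        return None
    best_score, best_name = max(scored)
    return best_name if best_score >= threshold else None


door_context = [0.8, 0.0, 0.2, 0.0]    # hypothetical learned context histograms
object_context = [0.1, 0.1, 0.4, 0.4]
behaviors = [
    GroundedBehavior("exit_by_door", lambda h: histogram_intersection(h, door_context)),
    GroundedBehavior("approach_object", lambda h: histogram_intersection(h, object_context)),
]
chosen = select(behaviors, [0.7, 0.1, 0.2, 0.0])
```

The symbolic side never touches the cells or the histograms directly, so the tutor can reshape a behavior from new demonstrations without changing the selection code.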

Conclusion

This model is implemented on Pioneer 2DX robots equipped with a color camera. In a preliminary phase we have obtained promising results for simple behaviors such as object approach and door exit. Despite its simplicity, the population of cells encodes a large number of situations and is robust to noise. Few examples (< 10) are needed to synthesize this kind of behavior. We are currently extending the work in three directions: validation of context detection, validation on more complex behaviors (integrating time and perceptual aliasing), and integration of behaviors into an action/selection scheme.

Step 1: Examples capture. Each example is shown using a joystick; the robot's vision and movements are recorded. Cells are activated by the video frames of the example. The mean of the effector values is stored in each cell over the periods of activation of the cell. A context histogram is deduced from the perceptual properties observed along the example.

Step 2: Fusing examples. The final behavior is obtained by fusing the separate cell populations coming from several examples into a unique cell population. Context histograms are merged by multiplication, and important features are highlighted.

Figure 2: Collective reaction of the cells in two different situations of the "Exit by the door" behavior. (Top) Far from the door, the cells propose to go forward. (Bottom) Near the door, many cells vote to turn left.

References

L. Hugues and A. Drogoul (2001) Robot Behavior Learning by Vision-Based Demonstrations. 4th European Workshop on Advanced Mobile Robots (EUROBOT 01).

A. Cypher (1993) Watch What I Do: Programming by Demonstration. MIT Press, Cambridge, MA.

J. Demiris and G. M. Hayes (1996) Imitative Learning Mechanisms in Robots and Humans. In Proc. of the 5th European Workshop on Learning Robots, V. Klingspor (ed.).

J. J. Gibson (1986) The Ecological Approach to Visual Perception. LEA Publishers, London.

D. A. Pomerleau (1993) Knowledge-based training of artificial neural networks for autonomous robot driving. In J. Connell and S. Mahadevan (Eds.), Robot Learning (pp. 19-43).
