From: AAAI Technical Report FS-01-05. Compilation copyright © 2001, AAAI (www.aaai.org). All rights reserved.

Enhancing Crew Coordination with Intent Inference

Benjamin Bell and Daniel McFarlane

Lockheed Martin Advanced Technology Laboratories

1 Federal Street, Camden, NJ 08102

{bbell,dmcfarla}@atl.lmco.com

Abstract

Users of complex automation sometimes make mistakes.

Vendors and supervisors often place accountability for

these mistakes on the users themselves and their limited

understanding of how these systems are “supposed” to be

used (a perspective that influences how operators are

trained and fosters efforts to design systems that explain

their actions). Seeking to give users a better

understanding of their systems, however, sidesteps the

critical issue of how we ought to get computers to

shoulder the responsibility of understanding humans. The

machine is the piece of this equation that is "supposed" to

conform. Our approach has the potential to make what

people do naturally be the right thing. This work derives

somewhat from related research in Intent Inference,

which has focused on representing the tasks and actions

of a user so that a system can maintain a representation of

the user’s intent and can tailor its decision support

accordingly. There is a growing need to extend the notion

of intent inference to multi-operator venues, as systems

are targeted increasingly at collaborative environments

and operators are called upon to perform in multiple roles.

In this paper we describe an approach to Crew Intent

Inference that relies on models representing the goals and

actions of individual operators and the overall team. We

focus on the potential for intent inference to enhance

coordination and present example scenarios highlighting

the utility of intent inference in a multi-operator domain.

Introduction

Crews are often simultaneously responsible for several

critical interdependent task activities. They also usually

have a suite of complex automated support systems that

provide some assistance but must themselves be

managed or supervised by the crew. Failure to coordinate

these activity dependencies or errors in supervising the

automation that coordinates these dependencies can have

serious consequences.

We follow Malone & Crowston’s definition of

coordination as “managing dependencies between

activities” (1994). This definition guides an approach to

solving coordination problems based on identifying the

dependencies in a crew work context and devising ways

to manage the coordination processes required to

accommodate them.

In this paper we describe an approach that promotes

more effective crew coordination by applying our ongoing work in team intent inference.

Importance of Coordination

People intuitively employ strategies during coordinated

activity, whether in routine every-day tasks or highly

complex technical procedures. Coordination among

operators in high-risk engineering environments is an

area of intensive study, because of the enormous

potential consequences of lapses in coordination (e.g.,

for airline flightcrews, power plant crews). In this paper

we focus on a mission aircrew, such as would be found

on aircraft that perform command and control (C2),

airborne early warning (AEW), or intelligence,

surveillance and reconnaissance (ISR) missions.

Coordination of military teams has received

considerable attention from government research

sponsors, notably in the area of air operations (see Salas,

Bowers & Cannon-Bowers, 1995, for a summary of ten

years of DoD-sponsored research in aircrew

coordination). Safety is of great concern in aviation

because crew-related error is believed to be a

contributing factor in at least 69% of civil aviation

accidents (International Civil Aviation Organization,

1989) and perhaps as many as 80% of civil aviation

accidents (Sarter & Alexander, 2000). Military

operations show similar patterns: during FY 1994–1995,

human error was a factor in 71% of Air Force, 76% of

Army, and 74% of Navy/USMC aircraft mishaps

(General Accounting Office, 1996). Besides safety, there

is a critical and obvious link between crew coordination

and effective military operations (Barker, et al., 1997;

LaJoie & Sterling, 1999; US Army, 1995).

Structure and Breakdown of Coordination

Research into coordination has revealed patterns of

coordination and some of the conditions under which

coordination breakdowns are more likely. Activity can

be coordinated by a centralized supervisor, by a team of

cooperating peers, or by a distributed supervisory team

(Palmer, et al., 1995; Wilkins, et al., 1998). Studies have

identified patterns that subjects exhibit while

coordinating activity (Decker, 1998; Malone &

Crowston, 1990, 1994; Patterson, et al., 1998; Schmidt,

1994) and have observed subjects attenuating their

coordination activity in response to the demands of the

current situation (Schutte & Trujillo, 1996). Findings

from these studies can help identify specific coordination

activities that automation systems should detect and

support. For instance, Patterson et al. (1998) identified

patterns that give rise to what they term “coordination

surprises” that include agents working autonomously,

gaps in agents’ understanding of how their partners

work, and weak feedback about current activities and

planned behavior.

Factors that contribute to coordination failures

frequently involve an increased workload or need for

accelerated activity and more rapid decision-making.

Often, a chain of events escalates coordination

difficulties. A typical instance is when a process being

monitored exhibits some anomalous behavior and the

automation system begins generating alerts. The

malfunction will often induce off-nominal states

throughout other, related subsystems, and the operator

monitoring these processes will be confronted by a

growing queue of alerts. Demands on the operator’s

cognitive activity increase as the problem cascades, and

paradoxically, reporting and resolving these alerts

introduces a heightened demand for coordination with

his team members. These system-generated alerts are

intended to deliver critical "as-it-happens" information

about process status that the user needs to make good

decisions about how to react to the problem. However,

from the user's perspective each alert is also an

interruption, and people have cognitive limitations that

restrict their capacity to handle interruptions (McFarlane,

1997, 1999; Latorella, 1996, 1998).

The need for more coordination, therefore, presents

itself precisely when the operator is least able to sacrifice

any additional attention. This process has been

formalized as the Escalation Principle (Woods &

Patterson, 2001); the authors further observe that both

the cascading anomalies and the escalation of cognitive

demands are dynamic processes, making it difficult for

operators and supervisors to stay ahead of the situation.

Impact of automation on crew coordination

Patterns of coordination lapses such as the Escalation

Principle are likely to become more ubiquitous in

aircrew coordination through the effects of five related

trends. First, improved sensor packages are bringing

more information onboard, and better communications

links are pulling in data collected from other sources. As

a result, operators are facing increased volumes of

information. Second, aircraft roles are becoming more

multi-mission and operator duties are as a result

becoming more diverse and interdependent. Third,

military and supporting agencies are emphasizing joint

and coalition operations, which incur more demanding

requirements for coordination among personnel from

different services and nations. Fourth, military planners

are focused on “network-centric warfare”, which will

generate both a more distributed team structure and an

accelerated operational tempo. Fifth, computer

automation in systems continues to grow in both ubiquity

and complexity. This automation requires intermittent

human supervision and its presence results in a much

higher rate of computer-initiated interruptions of

operators.

New systems, developed to enhance mission

effectiveness (e.g., more powerful sensors, improved

communications links), often carry implications for crew

coordination but are seldom designed to support

coordinated activity (Qureshi, 2000). The increased use

of automation has imposed new dynamics on how

crewmembers work together. Automation has changed

the nature of crew communication in subtle ways

(Bowers, et al., 1995). For instance, automated systems

that capture the attention of an operator may compromise

established coordination processes (Sheehan, 1995). The

use of automation may adversely affect crew

coordination, possibly leading to unsafe conditions

(Diehl, 1991; Eldredge, Mangold & Dodd, 1992; Funk,

Lyall & Niemczyk, 1997; Wiener, 1989; Wiener, 1993;

Wise, et al., 1994).

Designing systems that support coordination

A tactical picture can be radically altered in minutes or

even seconds. The level of coordination that an operator

must sustain under an accelerated tempo is likely to

increase with the amount of information he must manage

(leaving less time and attention to devote to

coordination). Automated systems are not responsive to

such changes in workload, and in fact frequently worsen

the operator’s situation by generating a rapid stream of

alerts which may fail to communicate their relevance,

priority, or reliability (FAA, 1996; Palmer, et al., 1995;

Rehmann, et al., 1995). Operators and crew rely instead

on maintaining coordination directly, by talking to each

other (NTSB, 1990; Patterson, Watts-Perotti, & Woods,

1999). When conversation interrupts the operator’s task,

he later must resume the interrupted task by mentally

recalling his previous state or by referring to his written

log. Recasting these issues as design principles, we

would like systems that:

• monitor the mission and anticipate situations where coordination may be degraded;

• adapt to coordination-threatening conditions by more conspicuously intervening to keep crew members in sync;

• respond to a lapse in coordination by rapidly and smoothly bridging crewmembers back into coordinated activity;

• help operators resume interrupted tasks by assisting with context restoration and updating logs to reflect recent events;

• empower users with efficient and effective direct supervisory power over automation and the mixed-initiative interaction that it requires.

Enhancing Coordination with User-Centered Approaches to Decision Support

User-centered approaches explore how humans interact

with computers and how to create computers that better

support users. When a user experiences a problem, there

is a general tendency to either blame the user, or posit an

anomaly in the system. A user-centered perspective

would characterize the problem as a failure of the system

to capture and execute what the user wants to make

happen. Addressing this limitation requires that systems

maintain a representation of these user goals.

Efforts to develop systems that enhance coordination

have generally focused on helping operators manage

information overload. The basic strategy has been to

solve the problem of too much information by displaying

less of it, and to reduce workload through automation.

Automation systems, though, tend to be least helpful when

workload is highest (Bainbridge, 1983). Another

problem with these approaches is that systems do not

adequately recognize what information is most relevant

in the current situation. What automation systems

typically lack is an understanding of context (Woods, et

al., 1999), a key determiner of the relevance of

information. Context includes the current environment or

tactical picture as well as the team’s and operators’

current tasks. A capability to recognize an operator’s

task state could be used to monitor and report any

deviations from planned procedures to keep supervisors,

peers, and subordinates informed (Patterson, et al.,

1998). An additional aspect to understanding context is

appreciating the dependencies that obtain among

operators (Malone & Crowston, 1994). Promoting

effective coordination therefore hinges on systems that

(1) understand what the operators are doing and what the

intent of the overall mission team is; and (2) recognize

the dynamics and dependencies of the situation and

anticipate effects on operators.

Modeling Users and their Tasks

Attempts at understanding user activity have

emphasized user modeling and task modeling. User

modeling seeks to develop a declarative representation of

the user’s beliefs, skills, limitations, current goals, and

previous activity with the system (Linton, Joy, &

Schaefer, 1999; Miller, 1988; Self, 1974). Task modeling

focuses on representing the goals a user is likely to bring

to the interaction, the starting points into that interaction,

and the paths a user might follow in getting from a start

state to a goal state (Deutsch, 1998; Mitchell, 1987;

Rich, 1998; Rouse, 1984). Task modeling relies on an

explicit representation of the steps (perhaps order-independent) that a user might take, of the transitions

among these steps, and of the language that a user

employs in specifying those steps and transitions. Steps

in the task need not be physical; approaches such as

Cognitive Work Analysis represent mental tasks as well

(Sanderson, Naikar, Lintern & Goss, 1999; Vicente,

1999). Modeling user activity is not the goal of our work

but is a necessary element in developing systems with

intent inference capability as a step toward promoting

better crew coordination.

Intent Inference

Intent-inference is an approach to developing

intelligent systems in which the intentions of the user are

inferred and tracked. Intent inference involves the

analysis of an operator’s actions to identify the possible

goals being pursued by that operator (Geddes, 1986).

Systems that are intent inference-capable can anticipate

an operator's future actions, automatically launch the

corresponding tools, and collect and filter the associated

data, so that much of the information required by the

operator can be at hand when needed. This approach

relies on a task model, or state space (Callantine,

Mitchell, & Palmer, 1999) to follow human progress

through work tasks, monitoring human-system and

human-human interaction within the task environment

(e.g., a flight deck).

To leverage task models in creating intent-aware

systems, mechanisms are needed to capture user actions

through observation mechanisms that supply information

about operator activity in real time (from control inputs,

keystrokes, the content of information queries, or spoken

dialogue). These observations are coordinated with task

models to help the system generate inferences about the

operator’s current tasks and goals, as well as to anticipate

the operator’s next actions. Operator actions can also be

inferred in cases where direct observation is not feasible

(Geib & Goldman, 2001). These inferences and

predictions are central to the proactive property of intent-aware systems, allowing them to execute proactive

information queries, focus operator attention on

upcoming tasks, and diagnose and report operator errors.
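
A minimal sketch of how observations might be matched against task models follows. It ranks candidate goals by the fraction of their expected steps already observed and proposes the first step not yet seen as the anticipated next action. The task names, step names, and scoring rule are hypothetical illustrations, not taken from any of the systems cited here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskModel:
    """A hypothetical task: a goal name plus its expected steps (non-empty)."""
    goal: str
    steps: tuple


def infer_intent(observed, models):
    """Rank goals by the fraction of their steps found in the observations.

    Returns (goal, score, next_step) tuples sorted by descending score.
    next_step is the first expected step not yet observed, or None.
    """
    seen = set(observed)
    ranked = []
    for model in models:
        remaining = [s for s in model.steps if s not in seen]
        score = (len(model.steps) - len(remaining)) / len(model.steps)
        next_step = remaining[0] if remaining else None
        ranked.append((model.goal, score, next_step))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


# Illustrative task models only; real models would be domain-specific.
MODELS = [
    TaskModel("localize_contact", ("select_buoy", "tune_frequency", "mark_bearing")),
    TaskModel("classify_contact", ("select_buoy", "open_waveform", "compare_signature")),
]

hypotheses = infer_intent(["select_buoy", "tune_frequency"], MODELS)
for goal, score, next_step in hypotheses:
    print(f"{goal}: score={score:.2f}, anticipated next step={next_step}")
```

A fuller treatment would weight steps, respect ordering constraints, and carry uncertainty forward; the point of the sketch is only that the same model serves both goal recognition and action prediction.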

An early example of a complex intent-inference

application was The Pilots’ Associate, a DARPA

program that provided foundational progress in intent-inference (Banks & Lizza, 1991) and the use of task

models to infer goals from actions (Brown, Santos, &

Banks, 1998).

Crew-Intent Inference

While operator intent inference is a vital factor in

helping users smoothly negotiate increasingly complex

interactions with computers, perhaps more important is

extending intent inference to cases involving teams,

where one operator’s activity influences the work of

others (e.g., Alterman, Landsman, Feinman & Introne,

1998). The “members” of the team may not understand

themselves to be such, since the team approach can be

applied to the study of dynamic systems of discrete

participants, such as in intent inference for Free Flight

(Geddes, Brown, Beamon, & Wise, 1996). But for this

discussion “team” will imply a shared goal among

cooperative players who may represent a team of

operators (co-located or distributed), such as an aircrew

or command staff. Observations of individual operators’

actions can be used to infer the goals of the entire team.

Conversely, knowledge of the entire team’s goals can be

used to predict the actions and information needs of

individuals in the team. A system that tracks the team’s

goals can facilitate stronger coordination among crew

members and can support improved workload balancing.

In addition, systems that are team intent-aware can

remediate detected differences between individual and

team goals.
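
The two directions of inference described above can be sketched as follows, assuming each team goal is modeled simply as a mapping from station to the individual goal expected at that station. The team goals, station goals, and best-match rule are hypothetical; the sketch selects the team goal that agrees with the most stations and reports the stations whose inferred goals differ from it.

```python
def infer_team_goal(operator_goals, team_models):
    """Pick the team goal whose expected per-station goals best match.

    operator_goals: dict of station -> inferred individual goal
    team_models:    dict of team goal -> dict of station -> expected goal
    Returns (team_goal, mismatched_stations).
    """
    def agreement(expected):
        return sum(1 for station, goal in expected.items()
                   if operator_goals.get(station) == goal)

    best = max(team_models, key=lambda name: agreement(team_models[name]))
    mismatched = sorted(station for station, goal in team_models[best].items()
                        if operator_goals.get(station) != goal)
    return best, mismatched


# Illustrative team models only.
TEAM_MODELS = {
    "asw_prosecution": {
        "TACCO": "plan_buoy_pattern",
        "SS/1": "analyze_acoustics",
        "SS/3": "radar_search",
    },
    "surface_surveillance": {
        "TACCO": "build_surface_picture",
        "SS/1": "operate_camera",
        "SS/3": "image_contact",
    },
}

inferred = {
    "TACCO": "plan_buoy_pattern",
    "SS/1": "operate_camera",
    "SS/3": "radar_search",
}

team_goal, out_of_step = infer_team_goal(inferred, TEAM_MODELS)
print(team_goal, out_of_step)
```

In this example two of three stations agree with the hypothetical anti-submarine goal, so SS/1 is the station flagged as diverging from the team.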

Intent Inference for Crew Coordination

In previous work (Bell, Franke & Mendenhall, 2000),

we have advanced team-level Intent Inference as a

method for providing autonomous information gathering

and filtering in support of a shipboard theater missile

defense cell. Our approach, called Automated

Understanding of Task / Operator State (AUTOS), calls

for individual and team task models, observables to track

operator activity, and intelligent agents to gather task-specific information in advance of the operators’

requests for such information. The task models

characterize the discrete activities that are expected to

occur while performing a given task. By progressing

through the model as activity is detected, AUTOS can

optimize information flow to best serve the operator’s

intent. Direct observations are gathered dynamically by

automatically examining the actions the operator is

taking: button clicks, mouse movements, and keystrokes.

Indirect observations arise from an examination of the

semantic content resulting from the operator’s actions,

including analyses of information that the operator

displays and analyses of the verbal communication

among operators within the command center.

The AUTOS design solution emphasizes user control

for coordinating interruptions. Operators control when

they will pause what they are doing to review the

system-initiated results of the proactive automation.

When the automation has something new, the user

interface creates an iconified representation of it and

presents it at the bottom of the user's work window. The

user can quickly see the availability and general meaning of

the new information. They then control the decision of

when and how to interrupt their current activity to get the

new information. This solution focuses on user control

and is a type of negotiation support for coordinating

human interruption. A negotiation-based solution was

shown by McFarlane (1999) to support better overall

task performance than alternative solutions except for

cases where small differences in the timeliness of

handling interruptions are critical.
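
The negotiation-based pattern described above can be sketched as a queue that the automation fills and the operator drains at a time of his own choosing. The class and method names below are hypothetical and do not describe the AUTOS implementation; the sketch only shows the separation between announcing that a result exists (the icon) and delivering it (the review).

```python
from collections import deque


class NegotiatedInbox:
    """Holds system-initiated results until the operator chooses to review them."""

    def __init__(self):
        self._pending = deque()

    def post(self, summary, payload):
        """Called by the automation: queue a result without interrupting."""
        self._pending.append((summary, payload))

    def icons(self):
        """Short labels shown at the bottom of the work window."""
        return [summary for summary, _ in self._pending]

    def review_next(self):
        """Called by the operator when ready; returns the oldest payload or None."""
        if not self._pending:
            return None
        return self._pending.popleft()[1]


inbox = NegotiatedInbox()
inbox.post("New track report", {"track": 12, "bearing": 45})
inbox.post("Weather update", {"ceiling_ft": 3000})

visible = inbox.icons()        # operator sees both labels, keeps working
first = inbox.review_next()    # operator decides to look at the oldest item
print(visible, first, inbox.icons())
```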

Limitations of Intent Inference for Coordination

Lessons learned from this work suggest the challenges

posed by approaches that rely on task models, among

them, the intrinsic complexities of the processes being

modeled, the uncertainties in recognizing user intent, and

the unresolved issues surrounding scalability.

Limitations on task modeling constrain intent inference,

since the latter relies on matching observed actions

against the former to generate hypotheses about what the

user is trying to do. Coordination is an especially non-linear, non-deterministic activity that task models of the

type explored in our previous work would be hard-pressed to accommodate.

The daunting and unsolved problem of how to cost-effectively develop plausible task models can be partially

overcome by reducing the need for some of the task

modeling. In particular, tasks associated with

coordination can be tracked using generic models of

coordination, and imminent coordination lapses can be

detected and corrected using these same general-purpose

models.

Proposed Approach

Our approach integrates three capabilities: (1) predict

states under which heightened coordination will be

needed; (2) detect and report the onset of coordination

lapses; (3) recognize and facilitate operator attempts to

re-establish coordination.

Predicting the states under which heightened

coordination will be needed requires that operator

workload and the tactical environment be monitored.

Coordination lapses generate symptoms that help people

recognize and correct them. Unusual delays, duplication

of effort, and interference are routine indicators.

Monitoring might also reveal an operator performing the

wrong tasks or executing tasks out of sequence. Subtle

cues can serve as early indicators, and include an

operator performing a task that is “correct” but was not

assigned, an operator executing a task other than what he

has reported, or (subtler yet) an operator performing a

task other than the one he believes he is performing.

Workload levels can be estimated directly by

examining system outputs (number of alerts being

displayed, number of targets being tracked) and system

inputs (submode changes, keystrokes, trackball

movements). The tactical picture can be assessed (with

respect to workload) by considering the complexity of

the mission plan, current mission activity, volume of

incoming message traffic, presence of hostile forces, etc.
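
As a rough illustration of estimating workload from the system outputs and inputs listed above, the sketch below normalizes each indicator against a saturation level and averages the results into a single index between 0 and 1. The saturation values, the equal weighting, and the one-minute observation window are assumptions made for the example; they are not calibrated figures.

```python
from dataclasses import dataclass


@dataclass
class StationSnapshot:
    """Counts observed at one station over an assumed one-minute window."""
    alerts_displayed: int
    targets_tracked: int
    submode_changes: int
    keystrokes: int
    trackball_moves: int


# Hypothetical level at which each indicator is treated as fully saturated.
SATURATION = {
    "alerts_displayed": 10,
    "targets_tracked": 20,
    "submode_changes": 6,
    "keystrokes": 120,
    "trackball_moves": 60,
}


def workload_index(snapshot):
    """Return the mean of the indicators, each capped at 1.0 after scaling."""
    ratios = [min(getattr(snapshot, name) / limit, 1.0)
              for name, limit in SATURATION.items()]
    return sum(ratios) / len(ratios)


moderate = StationSnapshot(alerts_displayed=5, targets_tracked=10,
                           submode_changes=3, keystrokes=60, trackball_moves=30)
overloaded = StationSnapshot(alerts_displayed=25, targets_tracked=40,
                             submode_changes=9, keystrokes=300, trackball_moves=90)

print(workload_index(moderate), workload_index(overloaded))
```

Tactical-picture factors such as message traffic or the presence of hostile forces could enter the same way, as additional normalized terms.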

Domain-tailored models of crew-level tasks would

allow even more explicit specification of the conditions

under which coordination becomes critical. Obvious

cases where rapid and closely coordinated activity is

called for are threats to the aircraft, such as when a

missile launch is detected, though more routine situations

will also indicate a heightened need for coordination. For

instance, a team-level model might recognize that a piece

of equipment is inoperative and recommend to the crew

a re-distribution of tasks to minimize the effects of the

equipment failure on the mission.

Detecting the onset of coordination lapses could be

enabled by monitoring frequency of exchanges over the

ICS, recording whenever an operator sets the display to

mirror another station, and detecting when control of a

sensor is tasked to another operator. Sufficiently well-defined operator and team models could detect when two

operators are out of sync, performing duplicate tasks, or

interfering with each other. Once detected, the

coordination problem must be corrected, which

introduces a potential paradox of having to generate an

alert for operators who are already faced with too many

interruptions (McFarlane, 1999).
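
A minimal sketch of such detection follows, assuming the system already holds three mappings per station: the task assigned, the task the operator last reported, and the task inferred from observation. The labels and task names are hypothetical. The check flags duplicated effort, work on a task that was not assigned, and activity that differs from what was reported.

```python
from collections import defaultdict


def detect_lapses(assigned, reported, observed):
    """Return a list of (kind, task, stations) findings.

    kind is "duplicate" when several stations work the same task,
    "unassigned" when a station works a task it was not assigned, and
    "misreported" when observed activity differs from the reported task.
    """
    findings = []

    by_task = defaultdict(list)
    for station, task in observed.items():
        by_task[task].append(station)
    for task, stations in sorted(by_task.items()):
        if len(stations) > 1:
            findings.append(("duplicate", task, tuple(sorted(stations))))

    for station in sorted(observed):
        task = observed[station]
        if assigned.get(station) != task:
            findings.append(("unassigned", task, (station,)))
        if reported.get(station) != task:
            findings.append(("misreported", task, (station,)))
    return findings


assigned = {"SS/1": "operate_camera", "SS/3": "operate_isar"}
reported = {"SS/1": "operate_camera", "SS/3": "operate_isar"}
observed = {"SS/1": "operate_camera", "SS/3": "operate_camera"}

lapses = detect_lapses(assigned, reported, observed)
for finding in lapses:
    print(finding)
```

How and when to surface such findings is a separate question, given the interruption paradox noted above.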

Recognizing and facilitating operator attempts to re-establish coordination is perhaps the most subtle of the

three proposed capabilities. Coordination can be

regained through requests for clarification, self-announced reports of current activity, reference to a

mission log or other archival material, direct observation

of on-going activity, or by reasoning from indirect

observations. A system could recognize such activities

by monitoring for specific commands (such as displaying

a mission log, searching an archive, or requesting a

playback). Explicit representations of coordination

activity (Jones & Jasek, 1997) could also be applied to

recognizing attempts to restore coordination.

Example

A P-3 Orion, the U.S. Navy’s maritime anti-submarine

warfare (ASW) platform, has mission stations for the

tactical coordinator (TACCO), who may also be the

mission commander (MC); the navigator/communicator

(NAV/COMM); the acoustic sensor operators (SS/1-2); and

the non-acoustic sensor operator (SS/3). The most intuitive,

effective, and widely-used means for maintaining crew

coordination is verbal interaction over the internal

communications system (ICS). The configuration of the

aircraft prevents operators from seeing each other

(Figure 1) and the displays restrict what each operator

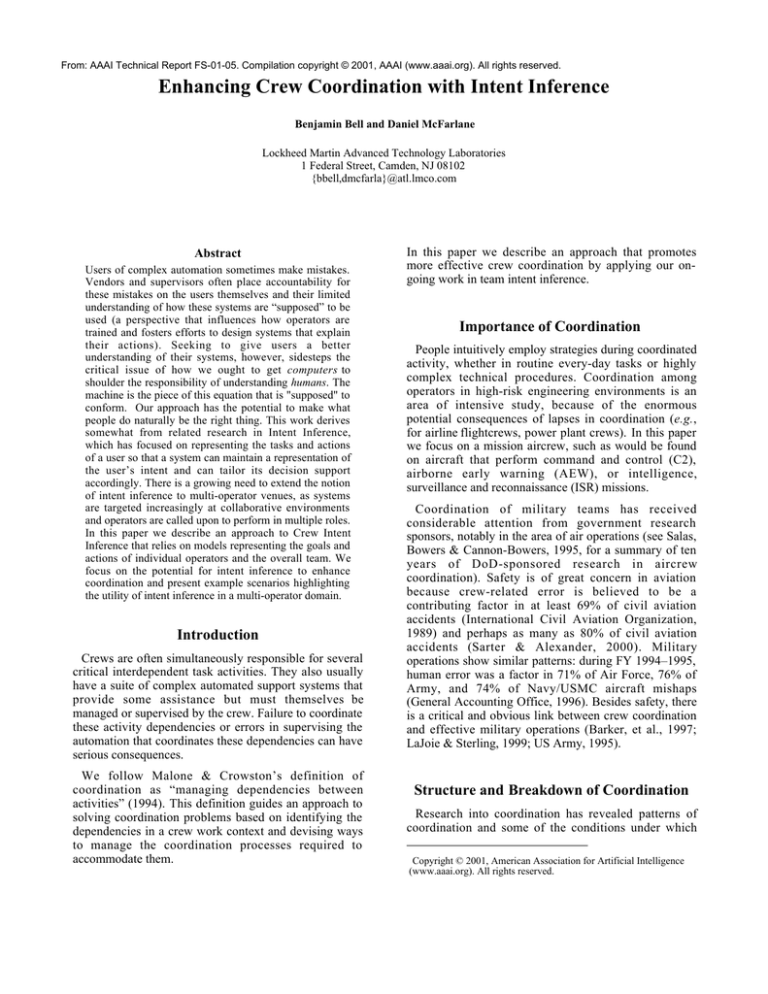

can view.

Figure 1. Operator stations SS/1-2 (left) looking forward toward flight deck. SS/3 is immediately forward on right; TACCO is just aft of

flight deck, on left; NAVCOMM is aft of flight deck, on right

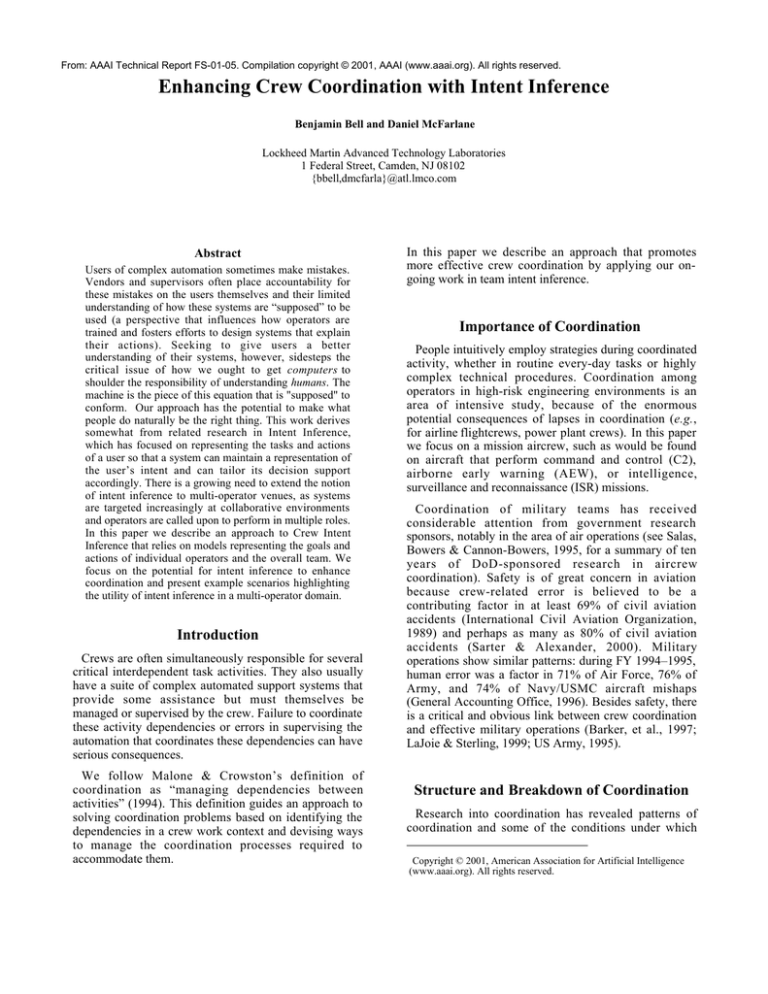

For instance, the SS/1-2 stations cannot show the

tactical picture that is displayed to the TACCO (Figure

2). A seasoned TACCO will maintain close coordination

via the ICS (which every operator listens to), reminding

stations of tasks-in-progress and calling out updates to

the tactical situation. There are no features designed into

the automation specifically for supporting coordination.

On P-3s upgraded under the anti-surface warfare

improvement program (AIP), operators have the ability

to view what other stations are seeing and a workload

sharing feature enables some sensors to be controlled

from any station. But assuming multimission roles (an

objective of AIP) introduces additional responsibilities

(such as rapid precision targeting and detection of

transporter-erector-launcher sites) and with those, a

greater need for coordination that simple display

mirroring cannot compensate for. Below are three brief

vignettes illustrating potential threats to coordination and

how an intent inference-enabled automation system

could recognize and help overcome (or avoid)

coordination breakdowns.

Equipment failure: During a mission there always exists

some likelihood that an operator could become

overtasked, a potential that becomes more acute when

equipment failures occur. For instance, failures of the on-top position indicator (OTPI) are not uncommon, and in

the absence of the OTPI the TACCO calculates buoy

positions manually, which induces an increased

workload. Coordination becomes especially important at

such times, and an intent-aware system would be able to

draw relationships between specific failure modes and

the overall effects on the mission, further identifying

how to retask all mission crewmembers to overcome the

failure in a coordinated, synchronized fashion.

Figure 2. SS/1-2 stations (left) show a different tactical picture using different data, displays, and format than TACCO (right).

Workload imbalance: Additional on-board sensors and

workload sharing introduce more multi-tasking

responsibilities. For instance, a TACCO may task SS/1

with controlling the Advanced Imaging Multi Spectral

(AIMS) camera and SS/3 with operating the inverse

synthetic aperture radar (ISAR). These operators’ other

obligations may at the same time begin to grow (for

instance, SS/1 must also listen to acoustic data transmitted

from deployed buoys while analyzing the acoustic

waveforms displayed on his console, while supervising and

delegating to SS/2). Intent-inference capabilities would

help the automation systems recognize what tasks

crewmembers were engaged in, what level of tasking they

were confronting, and what suitable workload sharing

solutions to recommend to TACCO. Even simple graphics

depicting the system’s assessment of each station’s loading

would provide the MC with a quick, visual summary and

offer early warnings of imminent coordination lapses.

Context Switching: With P-3 crews routinely engaging in

multi-tasking, a typical situation might have the crew

suspend the ASW mission, fly to a release point to launch

an air-to-surface weapon, and return to the prior location to

resume the ASW task thirty or forty minutes later.

Transitioning back into the ASW task requires recalling the

Last Known Position for each track, re-entering the

frequencies being tracked, looking at the historically generated tracks, and checking any relevant data links for

updated information. When an operator's task is interrupted

by a more urgent task, his previous context is restored in

part by reference to his written mission log, though he must

manually restore equipment configurations if any were

changed in the interim (see Malin et al. (1991) for another

example of the use of logs for recovery after interruption).

Intent-inference would enable systems that detect changes

in the mission, verify with each operator that his equipment

is properly reconfigured, and automatically save the current

state (settings, mission log, tactical picture). The

interrupted task in this case need not lie dormant –

intelligent sentinel agents would instead monitor incoming

data sources such as Link-11/Link-16, record imagery, and

monitor comms channels. Resuming the interrupted task is

analogous in some respects to a shift change (Patterson &

Woods, 1997), so restoration of context could be handled

in part by offering each operator a “playback” tailored to

that operator’s responsibilities. The system should also be

able to restore all configurations to their prior states, and

integrate historical information with updates filtered from

the information streams that were autonomously monitored

while the task was in suspense.
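
The save, monitor, and restore cycle described in this vignette can be sketched as follows. The class, its methods, and the setting names are hypothetical; the sketch assumes a single shared log and tailors the playback only by filtering the monitored updates to the requesting station.

```python
import copy


class SuspendedTask:
    """Snapshot of an interrupted task plus updates gathered while suspended."""

    def __init__(self, settings, log):
        # Deep-copy so later equipment changes cannot alter the snapshot.
        self._settings = copy.deepcopy(settings)
        self._log = list(log)
        self._updates = []

    def record(self, source, station, message):
        """Called by a monitoring agent while the task is suspended."""
        self._updates.append((source, station, message))

    def restore_settings(self):
        """Return the equipment configuration as it was at suspension."""
        return copy.deepcopy(self._settings)

    def playback(self, station):
        """Prior log entries followed by the updates relevant to one station."""
        relevant = [f"{source}: {message}"
                    for source, target, message in self._updates
                    if target == station]
        return self._log + relevant


live_settings = {"sonar_freq_hz": 50}
task = SuspendedTask(live_settings, ["1402 contact held on buoy 7"])

live_settings["sonar_freq_hz"] = 120           # reconfigured for the other mission
task.record("Link-16", "SS/1", "track 12 updated")
task.record("Link-16", "SS/3", "new surface contact")

restored = task.restore_settings()
ss1_playback = task.playback("SS/1")
print(restored, ss1_playback)
```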

Conclusion

Mission operations invariably demand close coordination

among aircrew members serving different roles and

requiring information support from a disparate array of

assets. New responsibilities for operators must be

accompanied by systems that possess an understanding of

crew roles and coordination. The simple vignettes in this

paper offer a brief glimpse of how intent inference-enabled

systems could promote more effective aircrew

coordination. The brief examples portrayed situations that

are relatively routine and non-critical. Tactical

environments with real-time mission profiles and hostile

threats have an even more pronounced impact on the need

for crew coordination (as alluded to by the Escalation

Principle). The need for intent inference approaches to

promoting aircrew coordination is rendered all the more

urgent when these real-world conditions are considered.

References

Alterman, R., Landsman, S., Feinman, A., and Introne, J. (1998).

Groupware for Planning. Technical Report CS-98-200,

Computer Science Department, Brandeis University, 1998.

Bainbridge, L. (1983). Ironies of Automation, Automatica, 19, pp.

775-779.

Banks, S.B., and Lizza, C.S. (1991). Pilot's associate: A

cooperative, knowledge based system application. IEEE

Expert, 18–29, June 1991.

Barker, J., McKinny, E., Woody, J., and Garvin, J.D. (1997).

Recent Experience and Tanker Crew Performance: The

Impact of Familiarity on Crew Coordination and

Performance. In Proceedings of the 9th International

Symposium on Aviation Psychology, Ohio State University,

Columbus, OH.

Bell, B.L., Franke, J., and H. Mendenhall (2000). Leveraging

Task Models for Team Intent Inference. In Proceedings of

the 2000 International Conference on Artificial Intelligence,

Las Vegas, NV.

Brown, S.M., Santos, E., and Banks, S.B. (1998). Utility Theory-Based User Models for Intelligent Interface Agents. In Proc.

of the 12th Canadian Conf on Artificial Intelligence (AI '98),

Vancouver, British Columbia, Canada, 18-20 June 1998.

Callantine, T.J., Mitchell, C.M., and Palmer, E.A. (1999). GT-CATS: Tracking Operator Activities in Complex Systems.

Technical Memo NASA/TM-1999-208788. NASA Ames

Research Center, Moffett Field, CA.

Decker, K. (1998). Coordinating Human and Computer Agents.

In W. Conen, and G. Neumann, (Eds.), Coordination

technology for Collaborative Applications - Organizations,

Processes, and Agents. LNCS #1364, pages 77-98, 1998.

Springer-Verlag.

Diehl, A.E. (1991). The Effectiveness of Training Programs for

Preventing Aircrew "Error". In Proceedings of the Sixth

International Symposium of Aviation Psychology, Ohio St.

Univ., Columbus, OH.

Deutsch, S. (1998). Multi-disciplinary Foundations for Multiple-task Human Performance Modeling in OMAR. In

Proceedings of the 20th Annual Meeting of the Cognitive

Science Society, Madison, WI, 1998.

Eldredge, D., Mangold, S., and Dodd, R.S. (1992). A Review and

Discussion of Flight Management System Incidents

Reported to the Aviation Safety Reporting System. Final

Report - DOT/FAA/RD-92/2, Washington, DC: Federal

Aviation Administration.

FAA (1996). The Interfaces Between Flight Crews and Modern

Flight Deck Systems. Report of the FAA Human Factors

Team. Washington, D.C.: FAA.

Funk, K.H., Lyall, E.A., and Niemczyk, M.C. (1997). Flightdeck

Automation Problems: Perceptions and Reality. In M.

Mouloua and J.M. Koonce (Eds.) Human-automation

interaction: Research and practice. Mahwah, New Jersey:

Lawrence Erlbaum Associates.

Geddes, N.D. (1986). The use of individual differences in

inferring human operator intentions. In Proceedings of the

Second Annual Aerospace Applications of Artificial

Intelligence Conference, 1986. Dayton, OH.

Geddes, N.D., Brown, J.L., Beamon, W.S., & Wise, J.A. (1996).

A shared model of intentions for free flight. In Proc. of the

14th IEEE/AIAA Digital Avionics Systems Conference.

Atlanta, GA. 27-31 October.

Geib, C., and Goldman, R.P. (2001). Issues for Collaborative

Task Tracking. In Proc. of the AAAI Fall Symposium on

Intent Inference for Collaborative Tasks, AAAI Press,

forthcoming.

General Accounting Office (1996). Military Aircraft Safety:

Significant Improvements Since 1975 (Briefing Report,

02/01/96, GAO/NSIAD-96-69BR).

International Civil Aviation Organization (1989). Human Factors

Digest No. 1, ICAO Circular 216-AN/131, December

1989.

Jones, P. M. and Jasek, C. A. (1997). Intelligent support for

activity management (ISAM): An architecture to support

distributed supervisory control. IEEE Transactions on

Systems, Man, and Cybernetics, 27, No. 5, 274-288.

LaJoie, A.S. & Sterling, B.S. (1999). Team and Small Group

Processes: An Annotated Bibliography, Army Research

Institute Research Report, 2000-01.

Latorella, K. A. (1996). Investigating interruptions: An example

from the flightdeck. In Proceedings of the Human Factors

and Ergonomics Society 40th Annual Meeting.

Latorella, K. A. (1998). Effects of modality on interrupted

flightdeck performance: Implications for Data Link.

Proceedings of the Human Factors and Ergonomics Society

42nd Annual Meeting.

Linton, F., Joy, D., Schaefer, H.P. (1999). Building user and

expert models by long-term observation of application usage.

In Proceedings of the 7th International Conference on User

Modeling, 20-24 June, Banff, Canada.

Malin, J. T., Schreckenghost, D. L., Woods, D. D., Potter, S. S.,

Johannesen, L., Holloway, M., & Forbus, K. D. (1991).

Making Intelligent Systems Team Players: Case Studies and

Design Issues, Vol. 1, Human-Computer Interaction Design

(NASA Technical Memorandum 104738): National

Aeronautics & Space Administration (NASA), Washington,

DC.

Malone, T. and Crowston, K. (1990). What is coordination theory

and how can it help design cooperative work systems? In

Proceedings of the 1990 ACM Conference on Computer-Supported Cooperative Work, 357-370.

Malone, T. and Crowston, K. (1994). The Interdisciplinary Study

of Coordination, ACM Computing Surveys, Vol. 26, No. 1,

pages 87-119.

McFarlane, D. C. (1997). Interruption of People in Human-Computer Interaction: A General Unifying Definition of

Human Interruption and Taxonomy (NRL Formal Report

NRL/FR/5510--97-9870): Naval Research Laboratory,

Washington, DC.

McFarlane, D. C. (1999). Coordinating the Interruption of People

in Human-Computer Interaction, Human-Computer

Interaction - INTERACT'99, Sasse, M. A. & Johnson, C.

(Editors), Published by IOS Press, Inc., The Netherlands,

IFIP TC.13, 295-303.

Miller, J.R. (1988). The role of human-computer interaction in

intelligent tutoring systems. In M.C. Polson and J.J.

Richardson (Eds.), Foundations of Intelligent Tutoring

Systems, 143–190. Hillsdale, NJ: Lawrence Erlbaum.

Mitchell, C.M. (1987). GT-MSOCC: a research domain for

modeling human-computer interaction and aiding decision

making in supervisory control systems. IEEE Transactions

on Systems, Man, and Cybernetics, 17(4), 553-570.

NTSB (1990). Aircraft Accident Report: United Airlines Flight

232, McDonnell Douglas DC-10-10 N1819U, Sioux

Gateway Airport, Sioux City, Iowa, July 19, 1989.

Washington: NTSB, Report No. NTSB-AAR-90-06.

Qureshi, Z.H. (2000). Modeling Decision-Making In Tactical

Airborne Environments Using Cognitive Work Analysis-Based Techniques. In Proc. of the 19th Digital Avionics

System Conference, Philadelphia, PA, October, 2000.

Palmer, N.T., Rogers, W.H., Press, H.N., Latorella, K.A., and

Abbott, T.S. (1995). A crew-centered flight deck design

philosophy for high-speed civil transport (HSCT) aircraft.

NASA Technical Memorandum 109171, Langley Research

Center, January, 1995.

Patterson, E.S., and Woods, D.D. (1997). Shift Changes, Updates,

and the On-Call Model In Space Shuttle Mission Control. In

Proceedings of the 41st Annual Meeting of the Human

Factors and Ergonomics Society, September, 1997.

Patterson, E.S., Watts-Perotti, J., and Woods, D.D. (1999). Voice

Loops as Coordination Aids in Space Shuttle Mission

Control. Computer Supported Cooperative Work, 8(4): 353-371.

Patterson, E.S., Woods, D.D., Sarter, N.B., & Watts-Perotti, J.

(1998). Patterns in cooperative cognition. In Proc. of

COOP '98, Third International Conference on the Design of

Cooperative Systems. Cannes, France, 26-29 May.

Rehmann, A. J., Mitman, R. D., Neumeier, M. E., and Reynolds,

M. C. (1995). Flightdeck Crew Alerting Issues: An Aviation

Safety Reporting System Analysis. (DOT/FAA/CT-TN95/18). Washington, DC: U.S. Department of

Transportation, Federal Aviation Administration.

Rich, E. (1998). User modeling via stereotypes. In M. Maybury

and W. Wahlster (Eds.), Readings in Intelligent User

Interfaces, 329-342. San Francisco: Morgan Kaufmann.

Rouse, W.B. (1984). Design and evaluation of computer-based

support systems. In G. Salvendy (Ed.), Advances in Human

Factors/Ergonomics, Volume 1: Human-Computer

Interaction. New York: Elsevier.

Salas, E., Bowers, C. A., & Cannon-Bowers J. A. (1995). Military

team research: Ten years of progress. Military Psychology,

7, 55-75.

Sanderson, P., Naikar, N., Lintern, G., and Goss, S. (1999). Use of

Cognitive Work Analysis Across the System Life Cycle:

Requirements to Decommissioning. In Proc. of 43rd Annual

Mtg of the Human Factors & Ergonomics Society, Houston,

TX, 27-Sep – 01-Oct.

Sarter, N.B. and Alexander, H.M. (2000). Error Types and

Related Error Detection Mechanisms in the Aviation

Domain: An Analysis of ASRS Incident Reports.

International Journal of Aviation Psychology, 10(2), 189-206.

Schmidt, K. (1994). Modes and mechanisms for cooperative

work. Risø Technical Report, Risø National Laboratory,

Roskilde, Denmark.

Schutte, P.C., and Trujillo, A.C. (1996). Flight crew task

management in non-normal situations. In Proceedings of the

Human Factors and Ergonomics Society 40th Annual

Meeting, 1996. pp. 244-248.

Self, J.A. (1974). Student models in computer-aided instruction.

International Journal of Man-Machine Studies, 6, 261-276.

Sheehan, J. (1995). Professional Aviation Briefing, December

1995.

US Army (1995). Aircrew Training Program Commander's Guide

to Individual and Crew Standardization, Training Circular 1-210, October, 1995.

Vicente, K. (1999). Cognitive Work Analysis. Lawrence Erlbaum

Associates, Mahwah, NJ.

Wiener, E.L. (1989). Human factors of advanced technology

("glass cockpit") transport aircraft. (NASA Contractor

Report No. 177528). Moffett Field, CA: NASA Ames

Research Center.

Wiener, E. L. (1993). Intervention strategies for the management

of human error. NASA Contractor Report Number

NAS1264547. Moffett Field, CA: NASA Ames Research

Center.

Wilkins, D.C., Mengshoel, O.J., Chernyshenko, O., Jones, P.M.,

Bargar, R., and Hayes, C.C. (1998). Collaborative decision

making and intelligent reasoning in judge advisor systems.

In Proceedings of the 32nd Annual Hawaii International

Conference on System Sciences.

Wise, J.A., Abbott, D.W., Tilden, D., Dyck, J.L., Guide, P.C., &

Ryan, L. (1994). Automation in the Corporate Aviation

Environment. In N. McDonald, N. Johnston, & R. Fuller

(Eds.), Applications of Psychology to the Aviation System,

Cambridge: Avebury Aviation. pp 287-292.

Woods, D.D., Patterson, E.S., Roth, E.M., and Christoffersen, K.

(1999). Can we ever escape from data overload? In Proc. of

the Human Factors and Ergonomics Society 43rd annual

meeting, Sept-Oct, 1999, Houston, TX.