From: AAAI Technical Report FS-94-01. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Knowledge Acquisition and Reactive Planning for the Deep Space Network

Randall W. Hill, Jr., Kristina Fayyad, Patricia Santos, Kathryn Sturdevant

Jet Propulsion Laboratory
California Institute of Technology
4800 Oak Grove Drive, M/S 525-3660
Pasadena, CA 91109-8099

{hill, kristina, trish, kathys}@negev.jpl.nasa.gov

Abstract

We are building a reactive planning agent (REACT-P) to automate the operation of a communications link in NASA's Deep Space Network (DSN). We desire an agent capable of executing plans and reactively replanning when necessary. Since the reactive planning agent must be built to interact with legacy (i.e., unmodifiable) systems, there are a number of significant challenges that must be addressed: (1) To what extent and how should the legacy systems be reverse-engineered and modeled in order to support the development of a reactive planning agent? (2) Since the legacy systems were built to support human interactive control, most of the information needed for reactive planning is located in the human-computer interface. How do we identify and acquire the knowledge elements needed for reactive planning? To address the first issue we are using a scenario-based knowledge acquisition methodology and subsequently building models of the legacy systems using a simulation authoring toolkit named RIDES (Munro, 1993). The reactive planner is being built in Soar (Laird et al., 1987), and it will be integrated, tested, and refined with the RIDES-based simulator.

1. Introduction

One of the significant challenges to developing and fielding a real-world planner is in the acquisition of the knowledge needed for it to function autonomously in a real-world problem domain. This paper describes how we are addressing the knowledge acquisition problem in the development of an agent, named REACT-P, for a real-world domain, the communications link monitor and control system of NASA's Deep Space Network (DSN). The underlying motivation for developing REACT-P is the goal of significantly improving the productivity of the DSN while maintaining at least the current level of reliability. To accomplish this goal REACT-P must be capable of performing many of the same operations that are currently done manually by DSN station Operators, and thereby significantly reduce the amount of human effort needed to perform monitor and control tasks in the domain.

We have elsewhere identified a number of factors that make it difficult to implement a planner for a domain like the DSN (see Chien, Hill, and Fayyad, 1994). In this paper we address the knowledge acquisition problem by first giving a brief overview of the DSN task domain and the nature of problem solving there. Next, we present an approach to knowledge acquisition that builds on our experiences in developing a DSN performance-support system called the Link Monitor and Control Operator Assistant (LMCOA) and an intelligent tutoring system for DSN Operators called REACT. Finally, we describe a set of knowledge acquisition methods and tools we are developing to support the approach.

This paper describes work performed by the Jet Propulsion Laboratory, California Institute of Technology, under contract with the National Aeronautics and Space Administration. Other members of the team working on this project include W. Lewis Johnson and Lorna Zorman at University of Southern California (USC) Information Sciences Institute, and Allen Munro at the USC Behavioral Technology Laboratory.

2. Task Domain: Deep Space Network

The Deep Space Network (DSN) is a worldwide network of deep space tracking and communications complexes located in Madrid, Spain; Canberra, Australia; and Goldstone, California. Each of these complexes is capable of performing multiple missions simultaneously, each of which involves operating a communications link. A DSN communications link is a collection of devices used to track and communicate with an unpiloted spacecraft. Currently, most of the tasks requiring the control of a DSN communications link are performed by human Operators on a system called the LMC (Link Monitor and Control) system. The Operators are given tasks that involve configuring and calibrating the communications equipment, and then they monitor and control these devices while tracking a spacecraft or celestial body. The Operators follow written procedures to perform their mission tasks. A procedure specifies a sequence of actions to execute, where the actions are usually commands that must be entered via the link's monitor-and-control system keyboard.

Once issued, a command is forwarded to another subsystem, which may accept or reject it, depending on the state of the subsystem at the time that the command is received. The Operator receives a message back from the subsystem indicating whether the command was accepted or rejected, and in cases where there is no response, a message saying that the command "timed out" is sent. These messages do not indicate whether the action was successful or what the results of the action were. Rather, the Operator has to monitor subsystem displays for indications that the action completed successfully and that it had its intended effects. It is common for commands to be rejected or for commands to fail due to a number of real-world contingencies that arise in the execution of a plan or procedure.
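The distinction between a command being accepted and a command having its intended effect can be illustrated with a short Python sketch. The names `CommandResponse` and `command_succeeded`, and the use of a dictionary to stand in for the monitored subsystem displays, are hypothetical and chosen only for illustration; they are not part of the LMC.

```python
from enum import Enum


class CommandResponse(Enum):
    """The three responses an Operator can receive for an issued command."""
    ACCEPTED = "accepted"
    REJECTED = "rejected"
    TIMED_OUT = "timed out"


def command_succeeded(response, display, expected):
    """Return True only if the command was accepted AND the monitored
    display shows every expected attribute value.

    An ACCEPTED response alone says nothing about the outcome, so the
    display values are checked separately.
    """
    if response is not CommandResponse.ACCEPTED:
        return False
    return all(display.get(name) == value for name, value in expected.items())
```

In this sketch an accepted command whose display values never reach the expected state is treated the same as a rejected one, which reflects the monitoring burden described above.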

3. Automating the DSN

A prototype called the Link Monitor and Control Operator Assistant (LMCOA) was developed to improve Operator productivity by automating some of the functions of the LMC. The LMCOA performs tasks by: (1) selecting a set of plans to execute, (2) checking whether a plan's preconditions have been satisfied, (3) issuing the commands, and (4) subsequently verifying that the commands had their intended effects. The Operator interacts with the LMCOA by watching the plans as they are being executed and pausing or skipping portions of the plan that need to be modified for some reason. When a plan fails, the LMCOA lacks the ability to recover on its own. Instead, the Operator is left to figure out how to recover from the failure. In observing Operators perform their duties, we find that they have an amazing ability to adapt the written procedures to the contingencies that arise in the course of their execution. A real-world planner has to have the same flexibility and knowledge as a human Operator in order to succeed in performing DSN missions.

REACT-P is a new version of the LMCOA that will reactively generate a plan in response to a plan failure or change in goals initiated by the Operator. It will need to have the same ability to recover from plan failure as is demonstrated by human Operators. In (Chien, Hill, and Fayyad, 1994) we describe a number of reasons why it is difficult to deploy a planner for the DSN domain. One of the reasons given is that it is difficult to acquire and maintain the knowledge that is needed to do the planning.

Since the REACT-P agent must interact with legacy (i.e., unmodifiable) systems, there are a number of significant knowledge acquisition challenges that must be addressed: (1) To what extent and how should the legacy systems be reverse-engineered and modeled in order to support the development of a reactive planning agent? (2) Since the legacy systems were built to support human interactive control, much of the information needed for reactive planning is located in the human-computer interface. How do we identify and acquire the knowledge elements needed for reactive planning? (3) Finally, much of the knowledge needed for planning is not explicitly documented: it is learned on the job by the Operators. How do we acquire knowledge that is currently a learned skill? These are the knowledge acquisition challenges that drive the approach we describe below.

4. General Approach to Knowledge Acquisition

We treat the knowledge acquisition problem of developing a DSN reactive planning agent as being analogous to the challenge of training novice DSN Operators. To understand the analogy, consider the following. Achieving an expert level of skill is difficult: it takes human Operators many months of training and on-the-job experience to become experts. Much of the difficulty in human skill acquisition is related to the situated nature of the domain knowledge (Hill & Johnson, 1993a; Hill, 1993; Suchman, 1987). Most of the procedure manuals only describe how to do the job in a cookbook style, where a plan is expressed as a sequence of actions. In many instances, the dependencies, goals, and assumptions behind each action, which happen to be the kinds of knowledge that humans acquire through experience, are not documented. The human Operator learns what she can from the procedure manuals; the gaps in her knowledge are often filled by trial and error experimentation, which can be a slow process in the absence of a tutor.

The REACT intelligent tutoring system implements an approach called impasse-driven tutoring (Hill & Johnson, 1993b; Hill, 1993; Hill & Johnson, 1994). Students acquire knowledge using REACT by interacting with simulated DSN communications devices; they receive training when it is clear that they have reached an impasse in executing a procedure. It is assumed that the human agent knows or has access to the basic plans or procedures for operating the equipment; she incrementally adds to her knowledge and skill by performing tasks on a realistic simulator. When the student reaches an impasse (i.e., she is no longer able to make progress toward the goal), the tutor interacts with her and provides the knowledge elements required to resolve the impasse. Problem solving impasses are therefore viewed as learning opportunities for the student. The impasse is a result of a knowledge gap or error, and the tutor's role is to identify that the impasse has occurred and then determine what knowledge is needed to resolve the impasse.

The knowledge acquisition process for the REACT-P reactive planning agent can be viewed as similar to tutoring human Operators. Knowledge acquisition begins by acquiring a declarative description of as much of the domain knowledge as possible. The knowledge is then refined and gaps are filled by applying the REACT-P agent to a task. Gaps and errors are indicated by impasses, where an impasse is an inability of the REACT-P agent to make progress toward the achievement of a goal. These impasses represent opportunities to acquire new knowledge or correct existing knowledge.

The kind of knowledge that expert Operators appear to need for skilled behavior is the same as what is needed for the reactive planner. At a minimum, this knowledge includes the following: (1) plans and their actions, (2) preconditions for each action, (3) the effects or postconditions for each action, (4) the goals of each plan, (5) a partial order among plans, (6) dependencies among plans, (7) temporal constraints on plans and actions, where the preconditions, postconditions and goals are expressed in terms of object attribute-values (Hill, 1993). The knowledge acquisition problem, of course, is in gathering this information, since, as we've already said, it is normally only found in an expert's skill base and not recorded in a declarative form. Fragments of this information exist in the procedure manuals and operating guides for the various devices, but much of it is undocumented and can only be deduced from experiences interacting with the devices.
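The inventory of knowledge listed above can be pictured as a small data structure. The following Python sketch is illustrative only: the class names `Action` and `Plan`, their field names, and the helpers `holds` and `missing_knowledge` are hypothetical and do not describe the actual REACT-P representation. Conditions and goals are written as attribute-to-value mappings, following the object attribute-value form mentioned in the text.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    command: str
    preconditions: dict = field(default_factory=dict)   # attribute -> required value
    postconditions: dict = field(default_factory=dict)  # attribute -> expected value


@dataclass
class Plan:
    name: str
    actions: list                                     # ordered Action objects
    goals: dict = field(default_factory=dict)         # attribute -> desired value
    depends_on: list = field(default_factory=list)    # names of plans that must finish first
    temporal: dict = field(default_factory=dict)      # free-form temporal constraints


def holds(conditions, state):
    """True if every attribute in `conditions` has the required value in `state`."""
    return all(state.get(attr) == val for attr, val in conditions.items())


def missing_knowledge(plan):
    """List entries that are still blank for a plan.

    A blank entry is only a candidate gap: it may also mean that nothing
    is required, and this sketch cannot tell the two cases apart.
    """
    gaps = []
    if not plan.goals:
        gaps.append("goals")
    for action in plan.actions:
        if not action.preconditions:
            gaps.append(f"preconditions of {action.command}")
        if not action.postconditions:
            gaps.append(f"postconditions of {action.command}")
    return gaps
```

A structure of this kind makes the undocumented portions visible: whatever `missing_knowledge` reports for a plan taken from a procedure manual is a candidate for the knowledge that must be elicited from an expert or discovered on a simulator.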

to performan operation. Precedencerelations are specified

by the nodes and arcs of the network. The behavioral

knowledge

identifies system-statedependenciesin the form

of pre- and postconditions. Temporalknowledgeconsists

of both absolute (e.g. Acquire the spacecraft at time

02:30:45)and relative (e.g. Performstep Y5 minutesafter

step X) temporalconstraints. Conditionalbranchesin the

network are performed only under certain conditions.

Optional paths are those whichare not essential to the

operation, but may,for example,providea higher level of

confidencein the data resulting froma plan if performed.

Eachnodein the TDN

is called a plan andcontains actions

to be performed. A plan also has goals, pre- and

5. Knowledge Acquisition Tools and Methods

postcondition constraints and temporal constraints

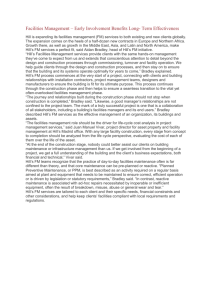

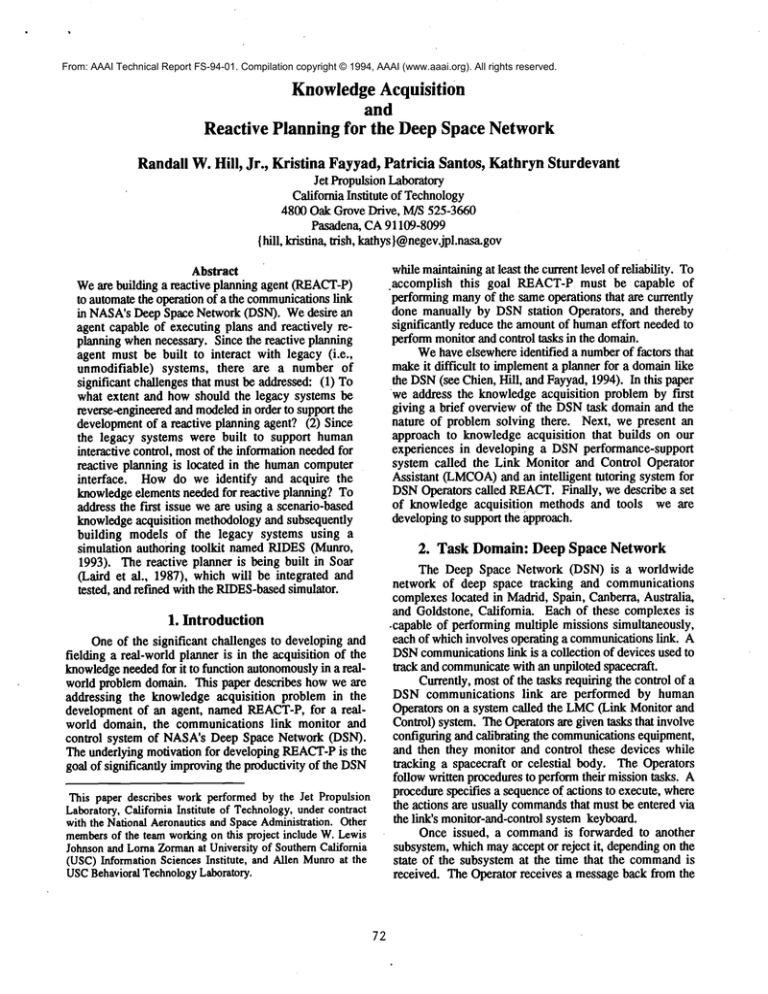

Ourapproachto acquiring knowledge

for the reactive

"associatedwith it. Moredetails about TDNs

are providedin

planner employs the tools shownin Figure 1. REBUS (Fayyad& Cooper1992).

(RequirementsEnvisaging ByUsing Scenarios) (Zorman,

In our experience of building TDNs,the knowledge

1994) and the TDN(Temporal Dependency Network)

bases associatedwith evena single TDN

are quite large and

Authoring Tool are used for acquiring declarative

difficult to build andmaintain. In addition, they rely on

descriptionsof the domain(i.e., devicemodels,procedures, informationfrommanydifferent sources: documentationin

etc.) and the plans used by REACT-P

to performtasks in

paper form, interviews with experts, and text logs of

the domain. RIDESis a simulation authoring toolkit

operationsproceduresfromactual missions, just to namea

(Munroet al., 1993) that is used for implementing

few. As we build more TDNs,muchof this information

executable modelof the devices whosedescriptions were

can be usedagain. Asa result, weare currently developing

acquired with REBUS.

Thesimulation serves as a testing

a TDN

authoringtool to assist Operatorsand developersin

groundfor the REACT-P

agent, and it also helps in the

building, editing, and maintainingTDNs.

validation of the domainmodel. Finally, REACT-P

is an

Withthe TDNauthoring tool, users can graphically

agent implementedin Soar (Laird et al., 1987) that uses

build a TDN

and specify all the informationrequired in a

knowledge from REBUS

and the TDNauthoring tool to

TDN. For example, they graphically create boxes

performtasks andreactively plan, as necessary.In the rest

representingplans, and drawthe links betweenthe plans to

of this section wedescribeeach of the tools just mentioned represent dependenciesbetweenplans. A small segmentof

and howthey are used for acquiring knowledgefor the

a TDNis shownin Figure 2 and contains three plans in

REACT-P

agent.

sequence.This maybe all that the users sees if she initially

creates just a high level TDN

includingskeletal plans anda

partial order of plans. Whena plan is created, the user

It ~citiclioa

gives it a nameandspecifies whattype of plan it is; there

REBUS ]

&rid

description

are different types of plansdepending

on the type of actions

to be takenin a plan, e.g. a manualactionto be takenby the

.Operator, or a list of commands

to be sent to a subsystem.

goals ]

plans

In our example, each of these plans is a command

plan

actionsI

associated

with

the

34

meter

Antenna

Subsystem.

The

Pm/lx~on’~ims

I

putial ¢rd~ ofplam]

sequence of actions for the plan namedClear Boresight

Offsets is shownin Table1. Thefirst two actions set the

simulltioa

ElevationandCross Elevationcorrection offsets to 0. The

execate TDN

tztims

last action sends the structure containing these two

REA

CT-P]

RIDES

reau to a¢iom

variablesto the subsystem.

¯ .

mdLMCOA

.[°N

1

[

1"

¯ ...~L~d

Botesight

"~r

Ct’ar

Borm~ght

Figure 1. REACT-P

and Knowledge

Acquisition Tools.

Figure 2. Portion of a TDN

Temporal Dependency Network (TDN)

Weuse a representation called a TemporalDependency

Network(TDN)to express the basic plan structure and

interrelationships amongplans. ATDN

is a directed graph

that incorporates temporal and behavioral knowledgeand

also provides optional and conditional paths through the

network.Thedirected graph represents the steps required

74
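To make the graph structure of a TDN concrete, the following Python sketch represents a three-plan segment as a mapping from each plan to the plans that must precede it, and derives an execution order from it. This is an illustration under stated assumptions, not the format used by the TDN authoring tool: the linear ordering of the three plans is assumed from the text, the function names are hypothetical, and a relative temporal constraint is interpreted here as a minimum delay in seconds between the start times of two plans. It uses `graphlib` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each plan maps to the set of plans that must precede it (assumed ordering).
tdn = {
    "Load Boresight Parameters": set(),
    "Boresight": {"Load Boresight Parameters"},
    "Clear Boresight Offsets": {"Boresight"},
}


def execution_order(network):
    """Return one ordering of plans that respects every precedence arc."""
    return list(TopologicalSorter(network).static_order())


def violated(relative, start_times):
    """Return the (earlier, later) pairs whose minimum delay is not met.

    `relative` maps (earlier, later) to a delay in seconds; `start_times`
    maps each plan or step name to its start time in seconds.
    """
    return [
        (a, b)
        for (a, b), delay in relative.items()
        if start_times[b] - start_times[a] < delay
    ]
```

For a TDN with optional or conditional paths the precedence mapping alone would not be enough; those branches would need additional annotations that this sketch leaves out.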

For each plan, the user can then specify the following information about it: the sequence of actions within the plan, the pre- and postconditions of each action, goals of plans, and temporal constraints on plans. Depending on the knowledge that the user has about the actions, she may or may not be able to specify all of this information. The postconditions of the last action in Table 1 fall out nicely from the action itself, though this isn't always the case. Since this last action sends data to the subsystem, it is necessary to make sure that the subsystem did indeed receive the new data values. In this case, we are only concerned with the two variables in the structure which correspond to the first two actions in the plan. The postconditions for the last command in Clear Boresight Offsets are shown in Table 2.

    Plan:     Clear Boresight Offsets
    Actions:  setvar A34CorrEl 0
              setvar A34CorrXel 0
              sendvar ActualCorrData

Table 1. Plan actions.

    Plan:           Clear Boresight Offsets
    Action:         sendvar ActualCorrData
    Postcondition:  ActualCorrData.el = 0
                    ActualCorrData.xel = 0

Table 2. Postcondition for Clear Boresight Offsets.
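The relationship between the actions of Table 1 and the postconditions of Table 2 can be illustrated in Python. This is a sketch only: the subsystem is stood in for by a plain dictionary, the function names are hypothetical, and the mapping of the two `setvar` variables onto the `el` and `xel` fields of `ActualCorrData` is an assumption made for the example.

```python
def run_clear_boresight_offsets(subsystem):
    """Apply the three actions of Table 1 to a dict-based stand-in subsystem."""
    pending = {}
    pending["el"] = 0     # setvar A34CorrEl 0   (assumed to map to ActualCorrData.el)
    pending["xel"] = 0    # setvar A34CorrXel 0  (assumed to map to ActualCorrData.xel)
    subsystem["ActualCorrData"] = dict(pending)  # sendvar ActualCorrData


def postconditions_met(subsystem):
    """Check the Table 2 postconditions against the subsystem's reported values."""
    data = subsystem.get("ActualCorrData", {})
    return data.get("el") == 0 and data.get("xel") == 0
```

The check reads the values back from the subsystem rather than trusting that the send occurred, which mirrors the need to confirm that the subsystem did receive the new data values.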

In our current prototype, most of the information about a plan is specified by filling in text forms. As the tool develops, knowledge bases of actions with their associated pre- and postconditions and knowledge bases of plans will be available so that new TDNs can be built from pieces of existing ones.

The outputs of the tool are a text file that completely defines the TDN and a file depicting the graphical representation of the TDN. These two files will be loaded by the reactive planner. The graphical representation is the basis for a dynamic display of the TDN in the reactive planner which shows the progress of the TDN as it is executing. The text file is loaded by the reactive planner which uses it to create an executable TDN.

REBUS: Scenario-based Interview Methodology

One of the ways that we acquire knowledge is through a scenario-based interview methodology. This approach is being implemented in a tool called REBUS (Requirements Envisaging By Using Scenarios) at the University of Southern California Information Sciences Institute by Lorna Zorman and W. Lewis Johnson (Zorman, 1994; Benner et al., 1993). REBUS is a graphical editing tool designed to enable domain experts and knowledge engineers to define the behavior requirements for a system by using scenarios. Scenarios provide a context for acquiring information both about devices and procedures by having the expert walk through a scenario with the interviewer. This is a rigorous approach to capturing much of the information that is used under special circumstances, and it reveals failure modes that are often not documented.

In our example, REBUS helped to define the device models that were needed to build a simulation of the antenna subsystem with RIDES. REBUS has a rich ontology for describing objects, types, and attributes in the domain. This fits well with the object, attribute, value information needed for authoring a simulation with RIDES. For example, in the plan named Load Boresight Parameters shown in Figure 2, one of the parameters set is the number of boresight points, num_bs_points. The representation of this object using REBUS is shown in Table 3. This object of course has many other attributes corresponding to other boresight parameters. Analysis with REBUS further defined the attribute num_bs_points as having one of two values: 3 or 5. In addition, REBUS represented the default value of this object as being 3. Defining the range of values for an attribute is necessary for our simulation in RIDES as well as for pre- and postconditions in the TDN. Hence, in the user interface of our simulation built with RIDES, two objects were defined: one for each of the possible values of num_bs_points. This forces the user to select between one of the two valid settings of this attribute. A portion of the object definition for one of these settings in RIDES is shown in Table 3.

    REBUS
        object:     ant_boresight
        attribute:  num_bs_points
        range:      one of 3, 5
        default:    3

    RIDES
        object:     boresight_w
        name:       3-button
        textvalue:  "3"
        location:   [40r1055 ]

Table 3. REBUS and RIDES object representations.

Analysis using REBUS also helped to define pre- and postconditions in the TDN. Again, referring to the example in Figure 2, we needed to find the preconditions for the plan Clear Boresight Offsets. The precondition was that the boresighting procedure had stopped. However, instead of representing this as a precondition, we only needed to put the Clear Boresight Offsets plan after the Boresight plan. The reason for this is that the Boresight plan executed the boresighting procedure. Hence, when the boresighting procedure finished, the Boresight plan finished. In this case, REBUS defined a dependency between the Boresight and Clear Boresight Offsets plans.

Given that REBUS, the TDN authoring tool, and RIDES overlap in the knowledge that they help to acquire about a domain, we envision using these tools as a coordinated suite of knowledge acquisition tools.

RIDES Simulation Authoring Toolkit

The TDN authoring tool and REBUS provide the first steps in the knowledge acquisition process: they assist in the acquisition of declarative descriptions of the domain, including descriptions of how the devices work. This knowledge may include a lot of the operator pre- and postconditions used by REACT-P in both planning and plan execution. To refine the model of the domain that is acquired with these two tools, we use the RIDES simulation authoring toolkit (Munro et al., 1993) to develop working models of the devices. We use the simulation to communicate with the subsystem engineers about how their system works so as to refine the knowledge about system states, state transitions, and control. By observing a simulation, the engineer can validate the system's behavior in a more familiar way than is achieved by looking at a declarative description of the behavior. We would eventually like to put RIDES into the hands of these same DSN subsystem engineers for use as a design tool and means of creating a living and interactive functional specification of their system, something which is greatly needed if we hope to understand and automatically control it.

Hence, one of the ways that we refine the REACT-P knowledge base is to show a behavioral model of what is known about a subsystem to an engineer. The simulation is refined by modifying the device objects and their attribute-values. In many cases a change in the device model may also cause changes to the REACT-P knowledge base (e.g., a precondition may need to be added or modified to reflect a change to a device attribute). Since RIDES uses an attribute-centered approach to representing devices and their behavior, this fits nicely with the kind of knowledge that we need for representing preconditions, postconditions, goal states, and so on.
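The link between an attribute-centered device model and the planner's conditions can be sketched in Python as follows. The `Attribute` class and the function `precondition_is_satisfiable` are hypothetical names introduced for illustration and are not RIDES or REBUS constructs; the attribute name, its two permitted values, and its default are taken from Table 3.

```python
from dataclasses import dataclass


@dataclass
class Attribute:
    name: str
    allowed: tuple      # the complete set of valid values
    default: object

    def validate(self, value):
        """Return the value unchanged, or raise if it is outside the range."""
        if value not in self.allowed:
            raise ValueError(
                f"{self.name} must be one of {self.allowed}, got {value!r}"
            )
        return value


num_bs_points = Attribute("num_bs_points", allowed=(3, 5), default=3)


def precondition_is_satisfiable(attribute, required_value):
    """A precondition demanding a value outside the attribute's range can
    never hold, which signals that the knowledge base needs updating."""
    return required_value in attribute.allowed
```

If an engineer's review changed the permitted values of a device attribute, a check of this kind would flag any precondition or postcondition that still refers to a value the device can no longer take.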

A second way that the RIDES-based simulation is used for refining the knowledge base is through interactions with the REACT-P agent. The simulation provides a realistic setting for testing REACT-P and iteratively refining both the simulator and the planning agent's knowledge. The role of the simulator is further enhanced by the fact that the DSN operational environment is relatively inaccessible for routine testing of experimental prototypes. When access to the DSN is granted, the time spent there is quite costly and there are extreme safety measures that must be observed, so there are some pragmatic reasons for using a simulator.

REACT-P: A Soar Agent for Monitor and Control

The REACT-P agent is being developed in the Soar (Laird et al., 1987) problem solving architecture. As mentioned above, we have developed an interface between Soar and RIDES so that REACT-P can be tested against the RIDES-based simulations of the DSN devices. The initial REACT-P agent design is based on the expert cognitive model used for the REACT intelligent tutoring system (Hill, 1993). The REACT tutor is capable of reactively planning small portions of a task based on the need to help a student overcome an impasse encountered while performing the task. REACT-P will extend this capability to deal with the need to recover from device anomalies or failures.

REACT-P will be run against different scenarios on the RIDES-based simulator. In this way we will emulate the skill acquisition process we see in human Operators who learn through experience, particularly when they reach impasse points where they are forced to acquire new knowledge in order to complete a task. In this case, we, the experimenters, will likely have to do much of the knowledge acquisition using the tools described in this paper.
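The idea of running an agent against a scenario until it reaches an impasse can be illustrated with a short Python sketch. This is not the Soar implementation of REACT-P: the function name, the tuple layout for actions, and the dictionary used as simulator state are all hypothetical, and an impasse is simplified here to mean that the goal is unmet and no remaining action has its preconditions satisfied.

```python
def run_until_impasse(actions, state, goal):
    """Apply actions whose preconditions hold until the goal is reached.

    `actions` is a list of (name, preconditions, effects) tuples, where
    preconditions and effects are attribute -> value dicts.
    Returns ("done", trace) or ("impasse", trace).
    """
    state = dict(state)          # work on a copy of the simulator state
    trace = []
    remaining = list(actions)
    while not all(state.get(k) == v for k, v in goal.items()):
        ready = [
            a for a in remaining
            if all(state.get(k) == v for k, v in a[1].items())
        ]
        if not ready:
            # No progress is possible: a knowledge gap or error to investigate.
            return "impasse", trace
        name, _, effects = ready[0]
        state.update(effects)
        trace.append(name)
        remaining.remove(ready[0])
    return "done", trace
```

Each impasse returned by a loop of this kind would mark a point at which the experimenters examine the trace and the state, and then add or correct a plan, precondition, or postcondition before running the scenario again.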

6. Conclusions

In conclusion, we are building an agent to perform monitor and control tasks on complex communications devices. The agent must be capable of expert human levels of performance, and we postulate that the way to acquire the knowledge to accomplish this goal is similar to how humans learn and acquire skill. We outline some of the difficulties of acquiring knowledge in a real-world domain, and we postulate that the knowledge acquisition problem for developing a real-world planner should be treated analogously to the way that human Operators are trained to acquire a skill, that is, through experience and knowledge refinement. Finally, we describe our approach to capturing knowledge about the problem domain with a combination of tools and representations.


7. References

(Benner et al., 1993) K. Benner, M.S. Feather, W.L. Johnson, L. Zorman. "The Role of Scenarios in the Software Development Process." In N. Prakash, C. Rolland, and B. Pernici, editors, Information System Development Process, IFIP Transactions A-30, Elsevier Science Publishers, September, 1993.

(Chien, Hill, Fayyad, 1994) S. Chien, R. Hill, and K. Fayyad. "Why Real-world Planning is Difficult." Working Notes of the 1994 Fall Symposium on Planning and Learning: On to Real Applications, New Orleans, LA, November 1994, AAAI Press.

(Fayyad et al., 1993) K. Fayyad, R. Hill, and E.J. Wyatt. "Knowledge Engineering for Temporal Dependency Networks as Operations Procedures." Proceedings of AIAA Computing in Aerospace 9 Conference, October 19-21, 1993, San Diego, CA.

(Hill & Johnson, 1993a) R. Hill and W.L. Johnson. "Designing an intelligent tutoring system based on a reactive model of skill acquisition." Proceedings of the World Conference on Artificial Intelligence in Education (AI-ED 93), Edinburgh, Scotland, 1993a.

(Hill & Johnson, 1993b) R. Hill and W.L. Johnson. "Impasse-driven tutoring for reactive skill acquisition." Proceedings of the 1993 Conference on Intelligent Computer-Aided Training and Virtual Environment Technology (ICAT-VET-93), NASA/Johnson Space Center, Houston, Texas, May 5-7, 1993b.

(Hill, 1993) R. Hill. "Impasse-driven tutoring for reactive skill acquisition." Ph.D. diss., University of Southern California, 1993.

(Hill et al., 1993) R. Hill, K. Sturdevant, and W.L. Johnson. "Toward an embedded training tool for Deep Space Network Operations." Proceedings of AIAA Computing in Aerospace 9 Conference, October 19-21, 1993, San Diego, CA.

(Hill & Johnson, 1994) R. Hill and W.L. Johnson. "Situated Plan Attribution for Intelligent Tutoring." To appear in the Proceedings of the Twelfth National Conference on Artificial Intelligence, July 31-August 4, 1994, Seattle, WA.

(Laird et al., 1987) J. Laird, A. Newell and P. Rosenbloom. Soar: An architecture for general intelligence. Artificial Intelligence, 33, 1987: 1-64.

(Munro et al., 1993) A. Munro, M.C. Johnson, D.S. Surmon, and J.L. Wogulis. "Attribute-Centered Simulation Authoring for Instruction." Proceedings of AI-ED 93, World Conference on Artificial Intelligence in Education, Edinburgh, Scotland; 23-27 August 1993.

(Suchman, 1987) Suchman, L.A. Plans and Situated Actions: The Problem of Human-Machine Communication. Cambridge University Press, New York, 1987.

(Zorman, 1994) Zorman, Lorna. "Requirements Envisaging by Utilizing Scenarios." Ph.D. Diss., in preparation, University of Southern California, 1994.