From: AAAI Technical Report SS-02-06. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

Abductive Processes for Answer Justification

Sanda M. Harabagiu
Language Computer Corporation
Dallas TX 75206 USA
sanda@languagecomputer.com

Steven J. Maiorano
University of Sheffield
Sheffield S1 4DP UK
stevemai@home.com

Abstract

An answer mined from text collections is deemed correct if an explanation or justification is provided. Due to multiple language ambiguities, abduction, or inference to the best explanation, is a possible vehicle for such justifications. To interpret an answer abductively, world knowledge, lexico-semantic knowledge, and pragmatic knowledge need to be integrated. This paper presents various forms of abductive processes that take place when answers are mined from texts and knowledge bases.

Introduction

In (Harabagiu and Maiorano 1999) we claimed that finding answers from large collections of texts is a matter of implementing paragraph indexing and applying abductive inference. Subsequent experiments, reported in (Harabagiu et al. 2000), have shown that answer justification provided by lightweight abduction improves the accuracy of a Question Answering (QA) system by over 20%. These results were later confirmed in the TREC-9* evaluations (Voorhees 2001), where LCC-SMU's system was the only one that implemented abductive processes for answer justification.

To evaluate the results of a QA system, TREC considered five possible answers per question, of either 50 bytes (short answers) or 250 bytes (long answers) in length. The scoring, detailed in (Voorhees 2001), uses a Mean Reciprocal Rank (MRR) that relies on only six values, (1, .5, .33, .25, .2, 0), representing the score each set of five answers obtains. If the first answer is correct, the set obtains a score of 1; if the second one is correct, .5; if the third, .33; if the fourth, .25; and if the fifth, .2. Otherwise, it is scored with 0. No additional credit is given if multiple answers are correct. As shown


*The Text REtrieval Conference (TREC) is the main research forum for evaluating the performance of Information Retrieval (IR) and Question Answering (QA) systems. TREC is sponsored by the National Institute of Standards and Technology (NIST) and the Defense Advanced Research Projects Agency (DARPA) to annually conduct IR and QA evaluations.


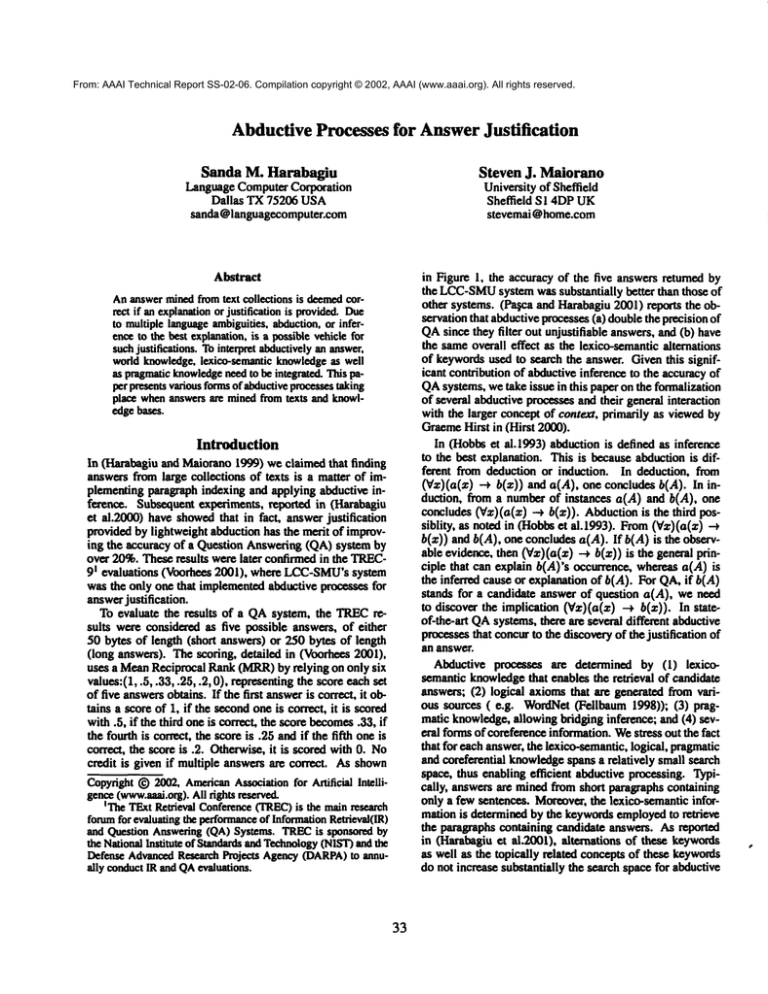

in Figure 1, the accuracy of the five answers returned by the LCC-SMU system was substantially better than that of the other systems. (Paşca and Harabagiu 2001) reports the observation that abductive processes (a) double the precision of QA, since they filter out unjustifiable answers, and (b) have the same overall effect as the lexico-semantic alternations of keywords used to search for the answer. Given this significant contribution of abductive inference to the accuracy of QA systems, we address in this paper the formalization of several abductive processes and their general interaction with the larger concept of context, primarily as viewed by Graeme Hirst in (Hirst 2000).
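The reciprocal-rank scoring described above for the TREC evaluations can be sketched as follows. This is only an illustration of the scoring rule as stated in the text; the function names and the representation of judgments as lists of booleans are our own assumptions, not part of the TREC tooling.

```python
from typing import Sequence


def reciprocal_rank(judgments: Sequence[bool], max_answers: int = 5) -> float:
    """Score one question: 1/rank of the first correct answer, else 0.

    `judgments[i]` is True when the answer at rank i+1 was judged correct.
    Only the first `max_answers` answers are considered, and no extra
    credit is given when several answers are correct.
    """
    for rank, is_correct in enumerate(judgments[:max_answers], start=1):
        if is_correct:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(all_judgments: Sequence[Sequence[bool]]) -> float:
    """Average the per-question reciprocal ranks over a set of questions."""
    if not all_judgments:
        return 0.0
    return sum(reciprocal_rank(j) for j in all_judgments) / len(all_judgments)
```

A question whose second and third answers are both correct therefore scores .5, exactly as if only the second answer had been correct.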

In (Hobbs et al. 1993) abduction is defined as inference to the best explanation. Abduction differs from both deduction and induction. In deduction, from $(\forall x)(a(x) \supset b(x))$ and $a(A)$ one concludes $b(A)$. In induction, from a number of instances of $a(A)$ and $b(A)$, one concludes $(\forall x)(a(x) \supset b(x))$. Abduction is the third possibility, as noted in (Hobbs et al. 1993): from $(\forall x)(a(x) \supset b(x))$ and $b(A)$, one concludes $a(A)$. If $b(A)$ is the observable evidence, then $(\forall x)(a(x) \supset b(x))$ is the general principle that can explain the occurrence of $b(A)$, whereas $a(A)$ is the inferred cause or explanation of $b(A)$. For QA, if $b(A)$ stands for a candidate answer of question $a(A)$, we need to discover the implication $(\forall x)(a(x) \supset b(x))$. In state-of-the-art QA systems, several different abductive processes concur in the discovery of the justification of an answer.
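The contrast between deduction and abduction drawn above can be made concrete with a minimal sketch over propositional rules. The rule table and the names `deduce`, `abduce` and `TOY_RULES` are hypothetical and serve only to illustrate the direction of inference; they do not reproduce any component of the system described in this paper.

```python
from typing import Dict, List, Set

# Each entry reads "antecedent implies every listed consequent".
Rules = Dict[str, List[str]]

# Toy rule base (an assumption for illustration): a -> b and c -> b.
TOY_RULES: Rules = {"a": ["b"], "c": ["b"]}


def deduce(rules: Rules, fact: str) -> Set[str]:
    """Deduction: from the rule a -> b and the fact a, conclude b."""
    return set(rules.get(fact, []))


def abduce(rules: Rules, observation: str) -> Set[str]:
    """Abduction: from the rule a -> b and the observation b, hypothesize a.

    Every antecedent that could explain the observation is returned, which
    shows why a further criterion is needed to pick the best explanation.
    """
    return {
        antecedent
        for antecedent, consequents in rules.items()
        if observation in consequents
    }
```

With the toy rules, `abduce(TOY_RULES, "b")` yields both `a` and `c`: abduction proposes explanations rather than proving them.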

Abductive processes are determined by (1) lexico-semantic knowledge that enables the retrieval of candidate answers; (2) logical axioms that are generated from various sources (e.g. WordNet (Fellbaum 1998)); (3) pragmatic knowledge, allowing bridging inference; and (4) several forms of coreference information. We stress the fact that for each answer, the lexico-semantic, logical, pragmatic and coreferential knowledge spans a relatively small search space, thus enabling efficient abductive processing. Typically, answers are mined from short paragraphs containing only a few sentences. Moreover, the lexico-semantic information is determined by the keywords employed to retrieve the paragraphs containing candidate answers. As reported in (Harabagiu et al. 2001), alternations of these keywords as well as the topically related concepts of these keywords do not substantially increase the search space for abductive

Figure 1: Results of the TREC-9 evaluations (panels: TREC-9 50 bytes; TREC-9 250 bytes).

processing; on the contrary, they guide the abductive pro-

Rouge, the famous Parisian nightclub. If appositions are not recognized and the mining of answers is based solely on keywords, many false positives may be encountered. For example, when searching GOOGLE for "Moulin Rouge", the vast majority of the URLs contain information about the 2001 movie entitled "Moulin Rouge", starring Nicole Kidman and Ewan McGregor. Due to this, the page-ranking algorithm used by GOOGLE categorizes the named entity as the title of a movie. Linguistically, we are dealing in this case with a metonymy, since movies get their titles from the main place or themes they depict, in this case the Parisian cabaret. However, to be able to answer the question, a long chain of inference is needed. One of the reviews of the movie says: "Resurrects a moment in the history of cabaret when spectacle ravished the senses in an atmosphere of bohemian abandon." The elliptic subject of the review refers to the movie. Because of this reference, the first clause introduces the theme of the movie: "a moment in the history of cabaret". This enables the coercion of the name of the movie into the type "cabaret", leading to the same answer that was mined through an apposition from the TREC collection. As opposed to a simple recognition of an apposition, this example shows the special inference chains imposed by on-line documents.

Copulative expressions

Copulative expressions are used for defining features of a wide range of entities. This explains why, for so-called definition questions, recognized by one of the patterns:

cesses.

This paper is organized as follows. Section 2 describes the answer mining techniques that operate on large on-line text collections. Section 3 presents the techniques used for mining answers from lexical knowledge bases. Section 4 details several abductive processes and their attributes. Section 5 discusses the advantages and differences of using abductive justification as opposed to quantitative ad-hoc information provided by redundancy. Section 6 presents results of our evaluations, whereas Section 7 summarizes the conclusions.

Answer Mining from On-line Text Collections

There are questions whose answer can be mined from large text collections because the information they request is explicit in some text snippet. Moreover, for some questions and some text collections, it is easier to mine the answer from a vast set of texts than from a knowledge base. In this section we describe several abductive processes that account for the mining of answers from text collections:

Appositions

Appositive constructions are widely used in Information Extraction tasks or coreference resolution. Similarly, they provide a simple and reliable way for the abduction of answers from texts. For example, the question "What is Moulin Rouge?" evaluated in TREC-10 is answered by the apposition following the named entity: Moulin


(Q-P1): What {is|are} <phrase_to_define>?
(Q-P2): What is the definition of <phrase_to_define>?
(Q-P3): Who {is|was|are|were} <person_name(s)>?

the mining of the answer is done by matching some of the following patterns, as reported in (Paşca and Harabagiu 2001):

(A-P1): [<phrase_to_define> {is|are|became}]
(A-P2): [<phrase_to_define>, {a|the|an}]
(A-P3): [<phrase_to_define>
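The question patterns Q-P1 to Q-P3 and the answer patterns A-P1 and A-P2 listed above can be approximated with regular expressions, as in the sketch below. The regular expressions, the function names and the choice to stop a definition at the next punctuation mark are our own simplifying assumptions; A-P3 is left out because its right-hand side is not legible in the list above.

```python
import re
from typing import List, Optional

# Q-P2 is tried before Q-P1, since Q-P1 would otherwise also match it.
_QUESTION_PATTERNS = [
    re.compile(r"^What\s+is\s+the\s+definition\s+of\s+(?P<phrase>.+?)\s*\?$", re.IGNORECASE),
    re.compile(r"^What\s+(?:is|are)\s+(?P<phrase>.+?)\s*\?$", re.IGNORECASE),
    re.compile(r"^Who\s+(?:is|was|are|were)\s+(?P<phrase>.+?)\s*\?$", re.IGNORECASE),
]


def phrase_to_define(question: str) -> Optional[str]:
    """Return the phrase a definition question asks about, or None."""
    for pattern in _QUESTION_PATTERNS:
        match = pattern.match(question.strip())
        if match:
            return match.group("phrase")
    return None


def candidate_definitions(phrase: str, text: str) -> List[str]:
    """Collect text spans that follow the phrase in an A-P1 or A-P2 context."""
    target = re.escape(phrase)
    answer_patterns = [
        # A-P1: <phrase> {is|are|became} ...
        rf"{target}\s+(?:is|are|became)\s+(?P<definition>[^.;]+)",
        # A-P2: <phrase>, {a|the|an} ...
        rf"{target},\s+(?P<definition>(?:a|an|the)\s+[^.,;]+)",
    ]
    found: List[str] = []
    for pattern in answer_patterns:
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            found.append(match.group("definition").strip())
    return found
```

Pattern matching of this kind only proposes candidates; deciding between "the famous Parisian nightclub" and "a Paris landmark" still requires the pragmatic knowledge discussed in this section.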

A special observation needs to be made with respect to the abduction of answers for definition questions. Another apposition, from the same TREC collection of 3 GBytes of text data, corresponding to the question asking about Moulin Rouge is "Moulin Rouge, a Paris landmark". At this point, additional pragmatic knowledge needs to be used in order to discard this second candidate answer due to its level of generality. One needs to know that the Notre Dame and the Eiffel Tower are also Paris landmarks, but Moulin Rouge is a cabaret, the Notre Dame is a cathedral, and the Eiffel Tower is a monument. Therefore the definition of the Moulin Rouge as a Parisian landmark is too general, since abductions classifying it as a cathedral or monument would be legal, but untruthful.

Unfortunately, pragmatic knowledge is not always readily available, and thus we often need to rely on classification information available either from categorizations provided by popular search engines like GOOGLE, or from ad-hoc categorizations determined by hyperlinks on various Web pages. For example, when searching GOOGLE for "landmark + Paris", the resulting pointers comprise Web pages discussing the Arc de Triomphe and the Eiffel Tower, but also the Hotel Intercontinental, boasted as a Paris landmark, the Maille Way mustard shop, or even the products of Iguana, a company manufacturing heirloom glass ornaments representing Parisian landmarks such as the Eiffel Tower, the Notre Dame or the Arc de Triomphe. Furthermore, lexical knowledge bases such as WordNet do not classify a cabaret as a landmark, but rather as a place of entertainment.

Possessives

Possessive constructions are useful textual cues for answer mining. For example, when searching for the answer to the TREC-10 question "Who lived in the Neuschwanstein castle?", the possessive "Mad King Ludwig's Neuschwanstein Castle" indicates a possible abduction, due to the multiple interpretations of the semantics of possessive constructs. In the case of a house, even one as luxurious as a castle, the "possessor" is either the architect, the owner or the person that lives in it. Since the question specifically asked for the person who lived in the castle, the other two possibilities of interpretation are filtered out unless there is evidence that King Ludwig built the castle but did not live in it.

Possessive constructions are sometimes combined with appositions, as in the case of the answer to the TREC-10 question "What currency does Argentina use?", which is "The austral, Argentina's currency". However, mining the answer to a very similar TREC-10 question, "What currency does Luxemburg use?", relies far more on knowledge of other currencies than on pure syntactic information. In this case, the answer is provided by the complex nominal "Luxemburg franc", which is recognized correctly because the noun "franc" is categorized as CURRENCY by a Named Entity tagger similar to those developed for the Message Understanding Conference (MUC) evaluations. If such a resource is not available and the answer mining relies only on semantic information from WordNet, the abduction cannot be performed, since in WordNet 1.7 the noun "franc" is classified as a "monetary unit", a concept that has no relation to the "currency" concept.

Meronyms

Sometimes questions contain expressions like "made of" or "belongs to" which are indicative of meronymic relations to one of the entities expressed in the question. For example, in the case of the TREC-10 question "What is the statue of liberty made of?", even if Statue of Liberty is not capitalized properly, it is recognized as the New York landmark due to its entry in WordNet. The same entry is sub-categorized as a statue, and the name of its location, "New York", can be retrieved from its defining gloss. However, WordNet does not encode any meronymic relations of the type IS-MADE-OF. Instead, the meronymic relation is implied by the prepositional attachment "the 152-foot copper statue in New York", where the nominal expression refers to the Statue of Liberty in the same TREC document. Since "copper" is classified as a substance in WordNet, it allows the abduction of the meronymy between copper and the Statue of Liberty, thus justifying the answer.

A second form of meronymy is illustrated by the TREC-10 question "What county is Modesto, California in?". The processing of this question presupposes the recognition of Modesto as a city name and of California as a state name, which is a function easily performed by most named entity recognizers. Usually the names of US cities are immediately followed by the name or the acronym of the state they belong to, to avoid ambiguity between different cities with the same name but located in different states, e.g. Arlington, VA and Arlington, TX. However, the question asks for the name of a county, not for the name of the state. If gazetteers of all US cities, their counties and states are not available, an IS-PART relation is inferred from the interpretation of prepositional attachments determined by the preposition "in". For example, the correct answer to the above question is indicated by the following attachment: "10 miles west of Modesto in Stanislaus County".

A third form of meronymy

results from recipes or cooking directions. It is commonsense

knowledgethat any food

is madeof a series of ingredients, and the general format

of any recipe will list the ingredients along with their measurements. This insight allows the mining of the answerto

the TREC-10question "What fruit is Melba sauce made

from?". The expected answer is a kind of fruit. Both

peaches and raspberries are fruits. However,we cannot abductively prove that "peach" is the correct answer, because

the text snippet "/t is used as saucefor PeachMelba", indi-

ORGANIZATION

~’~

/---"’~

LANGUAGE

LO~T,ON LXA A

I

!,~\

UN,VERSrTY

/~/~

DEGREE

VALUE

DEFINITION

~TE PERCENTAGE

AMOUNT SPEED

"’"

A A

A A A A

"",":"~NS,ON

DURA~DN

COUNTT~ERATURE

AA

AA

A ~ ~

N~.NT

//

G~LAXY COU Y

,,

"....

"--....

"

IAA

AA

,~’

""IVbNEY

CITY PROVINCE ODIER_LOC :;’

’

-AAA

AA AA_. .....

...

I

I=t,~

oU~,,

I

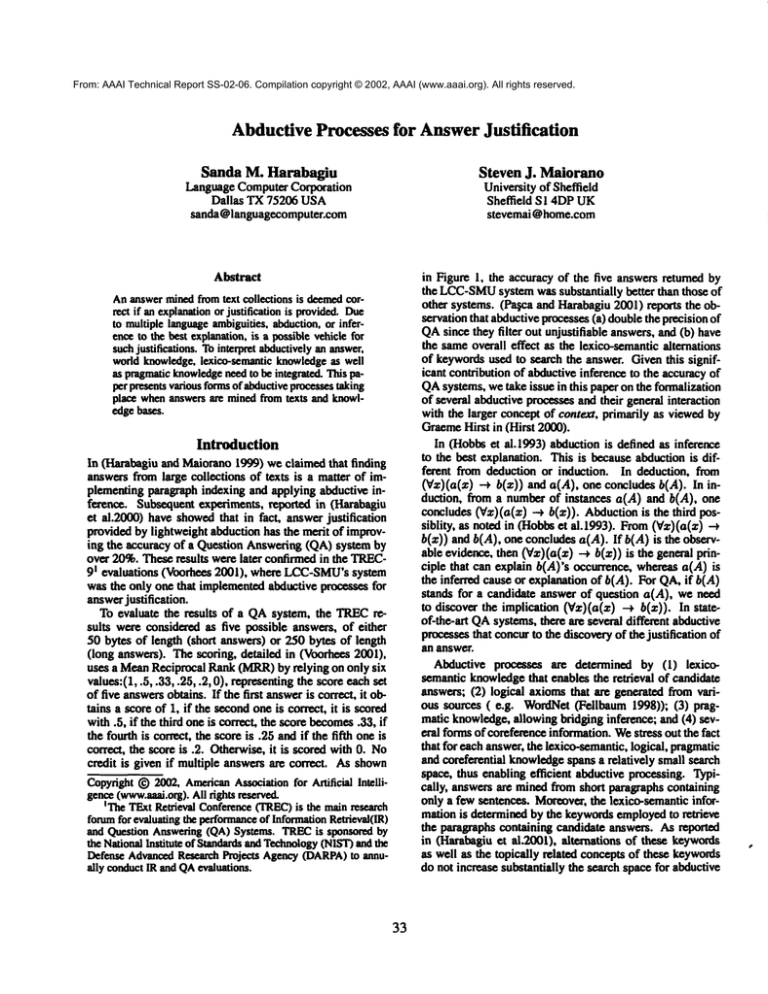

Figure 2: AnswerType Taxonomy

cates that the sauce, whichcorefers with Melhasauce in the

sametext, isjust in ingredient of the PeachMeiha.However,

the text snippet "2 cups of pureed raspberries" extracted

from the recipe of Melbasauce provides evidence it is the

COITeCt answer.

Answer Mining from Lexical Knowledge Bases

Lexical knowledge bases organized hierarchically represent an important source for mining answers to natural language questions. A special case is represented by the WordNet database, which encodes a vast majority of the concepts from the English language, lexicalized as nouns, verbs, adjectives and adverbs. What is special about WordNet is the fact that each concept is represented by a set of synonym words, also called a synset. In this way, words that have multiple meanings belong to multiple synsets. However, word sense disambiguation does not hinder the process of mining answers from lexico-semantic knowledge bases because question processing enables a fairly accurate categorization of the expected answer type.

When open-domain natural language questions inquire only about entities or events or some of their attributes or roles, as was the case with the test questions in TREC-8, TREC-9 and the main task in TREC-10, an off-line taxonomy of answer types can be built by relying on the synsets encoded in WordNet (Fellbaum 1998). (Paşca and Harabagiu 2001) describes the semi-automatic procedure for acquiring a taxonomy of answer types, similar to the one illustrated in Figure 2.

An answer type hierarchy enables the mining of the answer to a wide range of questions. For example, when searching for the answer to the question "Who defeated Napoleon at Waterloo?" it is important to know that the expected answer represents some national armies, led by some individuals. In WordNet 1.7, the glossed definition of the synset {Waterloo, battle of Waterloo} is (the battle on 18 June 1815 in which Napoleon met his final defeat; Prussian and British forces under Blucher and the Duke of Wellington routed the French forces under Napoleon). The exact answer, extracted from this gloss, is "Prussian and British forces under Blucher and the Duke of Wellington", representing a noun phrase whose head, Prussian and British forces, is coerced from the names of the two nationalities, which are encoded as concepts in the answer type hierarchy.

However, since the expected answer type is PERSON, several entities from the gloss are candidates for the answer: "Napoleon", "Prussian and British forces", "Blucher", "the Duke of Wellington" and "the French forces". Although "Napoleon", "Prussian", "British" and "French" are encoded in WordNet, "Blucher" and "the Duke of Wellington" are not. Nevertheless, they are recognized as names of persons due to the usage of a Named Entity recognizer.

Since the verb "defeat" is not reflexive, both "Napoleon" and "the French forces" are ruled out, the second due to the prepositional attachment "the French forces under Napoleon". Because the only remaining candidates are syntactically dependent through the coordinated prepositional attachment "Prussian and British forces under Blucher and the Duke of Wellington", they represent the exact answer. If a shorter exact answer is wanted, a philosophical debate over who is more important, the general or the army, needs to be resolved. From the WordNet gloss, "Prussian and British forces" is recognized as the genus of the definition of Waterloo, and thus stands for an answer mined from WordNet. Mining this answer from WordNet is important, as it

Answer 1. Score: 280.00
The family gained prominence by financing such massive projects as European railroads and the British military campaign that led to the final defeat of Napoleon Bonaparte at Waterloo in June 1815

Answer 2. Score: 244.69
In 1815, Napoleon Bonaparte met his Waterloo as British and Prussian troops defeated the French forces in Belgium

Answer 3. Score: 244.69
In 1815, Napoleon Bonaparte met his Waterloo as British and Prussian troops defeated the French forces in Belgium

Answer 4. Score: 146.14
In 1815, Napoleon Bonaparte arrived at the island of Saint Helena off the west African coast where he was exiled after his loss to the British at Waterloo

Answer 5. Score: 132.69
Subtitled "World Society 1815 - 1830," the book concentrates on the period that opens with Napoleon's defeat at Waterloo and closes with the election of Andrew Jackson as president of the U.S. Science and technology are as important to his story as the rise and fall of empires

Table 1: Answers returned by LCC's QAS for the question "Who defeated Napoleon at Waterloo?"

Answer 1. Score: 888.00
Not surprisingly, Leningrad's Communist Party leadership is in the forefront of those opposed to changing it back again

Answer 2. Score: 856.00
The vehicle plant named after Lenin's Young Communist League and known as AZKL, shut its car production line yesterday after supplies of engines from

Answer 3. Score: 824.00
"I'm against changing the name of the city," Yuri P. Belov, a secretary of the Leningrad regional Communist Party committee, said in an interview

Answer 4. Score: 820.69
Expressing the interests of the working class and all working people and relying on the great legacy of Marx, Engels and Lenin, the Soviet Communist Party is creatively developing socialist

Answer 5. Score: 820.69
In both Moscow and Leningrad, the local Communist Party has taken control of the newspapers it originally shared with

Table 2: Answers returned by LCC's QAS for the question "Who was Lenin?"

can determine a better scoring of QA systems that mine answers only from text collections. For example, if this answer were known, it would have improved the precision of LCC's Question Answering Server (QAS™), which produced the answers listed in Table 1. In this case, answers 4 and 5 would have been filtered out and answers 2 and 3 would have taken precedence over answer 1.

Definition questions

Mining answers from knowledge bases has even more importance when processing definition questions. The percentage of questions that ask for definitions of concepts, e.g. "What are capers?" or "What is an antigen?", represented 25% of the questions from the main task of TREC-10, an increase from a mere 9% in TREC-9 and 1% in TREC-8. Definition questions normally require an increase in the sophistication of the QA system, because questions asking about the definition of an entity use few other words that could guide the semantics of the answer mining. Moreover, for a definition, there is no expected answer type. A simple question such as "Who was Lenin?" can be answered by consulting the WordNet knowledge base rather than a large set of texts like the TREC collection. Lenin is encoded in WordNet, in a synset that lists all his name aliases: {Lenin, Vladimir Lenin, Nikolai Lenin, Vladimir Ilyich Ulyanov}. Moreover, the gloss of this synset lists the definition: (Russian founder of the Bolsheviks and leader of the Russian Revolution and first head of the USSR (1870-1924)). This is a correct answer, and furthermore, a shorter answer can be obtained by simply accessing the hypernym of the Lenin entry, revealing that he was a communist. When mining the answer for the same question from large collections of texts, as illustrated in Table 2, no correct complete answers can be found, as the information required by the answer is not explicit in any text, and thus only related information is found.

Q912: What is epilepsy?
Q1273: What is an annuity?
Q1022: What is Wimbledon?
Q1152: What is Mardi Gras?
Q1160: What is dianetics?
Q1280: What is Muscular Distrophy?

Table 3: Examples of definition questions.

Several additional definition questions that were evaluated in TREC-10 are listed in Table 3. For some of those questions the concept that needs to be defined is not encoded in WordNet. For example, neither dianetics from Q1160 nor Muscular Distrophy from Q1280 is encoded in WordNet. But whenever the concept is encoded in WordNet, both its gloss and its hypernym help determine the answer. Table 4 illustrates several examples of definition questions that are resolved by the substitution of the concept with its hypernym. The usage of WordNet hypernyms builds on the conjecture that any concept encoded in a dictionary like WordNet is defined by a genus and a differentia. Thus when asking about the definition of a concept, retrieving the genus is sufficient evidence of the explanation of the definition, especially when the genus is identical with the hypernym.

| Concept that needs to be defined | Hypernym from WordNet | Candidate answer |
|---|---|---|
| What is a shaman? | {priest, non-Christian priest} | Mathews is the priest or shaman |
| What is a nematode? | {worm} | nematodes, tiny worms in soil. |
| What is anise? | {herb, herbaceous plant} | aloe, anise, rhubarb and other herbs |

Table 4: WordNet information employed for mining answers of definition questions.

In WordNet only one third of the glosses use a hypernym of the concept being defined as the genus of the gloss. Therefore, the genus, processed as the head of the first Noun Phrase from the gloss, can also be used to mine answers. For example, the processing of question Q1273 from Table 3 relies on the substitution of annuity with income, the genus of its WordNet gloss, rather than with its hypernym, the concept regular payment. The availability of the genus or hypernym also helps the processing of definition questions in which the concept is a named entity, as in the case of questions Q1022 and Q1152 from Table 3. In this way Wimbledon is replaced with a suburb of London and Mardi Gras with holiday.
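The substitution of a concept with its genus or hypernym can be sketched with a small hand-built lexicon. The lexicon below is a toy stand-in for WordNet, populated only with the examples mentioned in the text; the class and function names are hypothetical, and the substring test is a deliberate simplification of real answer matching.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class LexiconEntry:
    genus: Optional[str]     # head of the first noun phrase of the gloss, if known
    hypernym: Optional[str]  # hypernym of the concept, if known


# Toy lexicon (an assumption): only pairs stated in the text are filled in.
TOY_LEXICON: Dict[str, LexiconEntry] = {
    "shaman": LexiconEntry(genus=None, hypernym="priest"),
    "nematode": LexiconEntry(genus=None, hypernym="worm"),
    "anise": LexiconEntry(genus=None, hypernym="herb"),
    "annuity": LexiconEntry(genus="income", hypernym="regular payment"),
}


def definition_substitutes(concept: str) -> List[str]:
    """Return the genus and hypernym (in that order) that may stand in for a concept."""
    entry = TOY_LEXICON.get(concept.lower())
    if entry is None:
        return []
    return [term for term in (entry.genus, entry.hypernym) if term]


def supports_definition(concept: str, snippet: str) -> bool:
    """True when a snippet mentions the concept together with one of its substitutes."""
    text = snippet.lower()
    if concept.lower() not in text:
        return False
    return any(term in text for term in definition_substitutes(concept))
```

A concept that is missing from the lexicon, such as dianetics, yields no substitutes, so no snippet can be justified this way and the answer has to be mined by other means.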

Pertainyms

When a concept A is related by some unspecified semantics to another concept B, it is said that A pertains to B. WordNet encodes such relations, which prove to be very useful for mining answers for natural language questions. For example, in TREC-10, when asking "What is the only artery that carries blood from the heart to the lungs?", the correct answer is mined from WordNet because the adjective "pulmonary" pertains to lungs, therefore the Pulmonary Artery is the expected answer. Knowing the answer makes the retrieval of a text snippet containing it much easier. The textual answer from the TREC collection is: "a connection from the heart to the lungs through the pulmonary artery".

Bridging Inference

Questions like "What is the esophagus used for?" or "Why is the sun yellow?" are difficult to process because the answer relies on expert knowledge, from medicine in the former example and from physics in the latter. Nevertheless, if a lexical dictionary that explains the definitions of concepts is available, some supporting knowledge can be mined. For example, by inspecting WordNet (Fellbaum 1998), in the case of esophagus we can find that it is "the passage between the pharynx and the stomach". Moreover, WordNet encodes several relations, like meronymy, showing that the esophagus is part of the digestive tube or gastrointestinal tract. The glossed definition of the digestive tube shows that one of its functions is digestion.


The information mined from WordNet guides several processes of bridging inference between the question and the expected answer. First, the definition of the concept defined by the WordNet synonym set {esophagus, gorge, gullet} indicates its usage as a passage between two other body parts: the pharynx and the stomach. Thus the query "esophagus AND pharynx AND stomach" retrieves all paragraphs containing relevant connections between the three concepts, including other possible functions of the esophagus. When the query does not retrieve relevant paragraphs, new queries combining esophagus and its holonyms (i.e. gastrointestinal tract) or functions of the holonyms (i.e. digestion) retrieve the paragraphs that may contain the answer. To extract the correct answer, the question and the answer need to be semantically unified.
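The fallback from the gloss-based query to queries built from holonyms and their functions can be sketched as below. The function names and the `retrieve` callback are hypothetical placeholders for a paragraph retrieval engine; the order in which the fallback queries are tried is our assumption, since the text does not specify it.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def bridging_queries(
    concept: str,
    gloss_terms: Sequence[str],
    holonyms: Sequence[str],
    holonym_functions: Sequence[str],
) -> List[str]:
    """Build Boolean queries: gloss terms first, then holonyms, then their functions."""
    queries = [" AND ".join([concept, *gloss_terms])]
    queries.extend(f"{concept} AND {holonym}" for holonym in holonyms)
    queries.extend(f"{concept} AND {function}" for function in holonym_functions)
    return queries


def first_productive_query(
    queries: Sequence[str],
    retrieve: Callable[[str], List[str]],
) -> Tuple[Optional[str], List[str]]:
    """Try each query in order and stop at the first one that returns paragraphs."""
    for query in queries:
        paragraphs = retrieve(query)
        if paragraphs:
            return query, paragraphs
    return None, []
```

For the esophagus example, the first query is "esophagus AND pharynx AND stomach", followed by "esophagus AND gastrointestinal tract" and "esophagus AND digestion".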

The difficulty lies in resolving the level of precision required by the unification. Currently, LCC's QAS™ considers a unification acceptable when (a) a textual relation can be established between the elements of the query matched in the answer (e.g. esophagus and gastrointestinal tract); and (b) the textual relation is either a syntactic dependency generated by a parser, a reference relation, or it is induced by matching against a predefined pattern. For example, the pattern "X, particularly Y" accounts for such a relation, granting the validity of the answer "the upper gastrointestinal tract, particularly the esophagus". However, we are aware that such patterns generate multiple false positive results, degrading the performance of the question-answering system.
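A predefined pattern such as "X, particularly Y" can be checked with a regular expression, as in the following sketch. The regular expression and the function name are our own assumptions; the substring test on both sides of the pattern is intentionally permissive, which also illustrates why such patterns are prone to false positives.

```python
import re

# "X, particularly Y": X and Y are taken to be the punctuation-free spans
# on either side of the cue word (a simplifying assumption).
_PARTICULARLY = re.compile(
    r"(?P<x>[^,.;]+),\s+particularly\s+(?P<y>[^,.;]+)", re.IGNORECASE
)


def linked_by_particularly(text: str, first: str, second: str) -> bool:
    """True when the two terms occur on opposite sides of an "X, particularly Y" pattern."""
    a, b = first.lower(), second.lower()
    for match in _PARTICULARLY.finditer(text):
        x = match.group("x").lower()
        y = match.group("y").lower()
        if (a in x and b in y) or (b in x and a in y):
            return True
    return False
```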

Abductive Processes

In (Hobbs et al. 1993) abductive inference is used as a vehicle for solving local pragmatics problems. To this end, a first-order logic implementing the Davidsonian reification of eventualities (Davidson 1967) is used. The ontological assumptions of the Davidsonian notation were reported in (Hobbs et al. 1993). This notation has two advantages: (1) it is as close as possible to English; and (2) it localizes syntactic ambiguities, as was previously shown in (Bear and Hobbs 1988). Moreover, the same notation is used in a larger project first outlined in (Harabagiu et al. 1999) that extends the WordNet lexical database (Fellbaum 1998). The extension involves the disambiguation of the defining gloss of each concept as well as the transformation of each gloss into a logical form. The Logic Form Transformation (LFT) codification is based on syntax-based relations such as: (a) syntactic subjects; (b) syntactic objects; (c) prepositional attachments; and (d) adjectival/adverbial adjuncts.

The Davidsonian treatment of actions considers that all predicates lexicalized by verbs or nominalizations have three arguments, action/state/event-predicate(e, x, y), where:

• e represents the eventuality of the action, state or event having taken place;
• x represents the agent of the action, represented by the syntactic subject of the action, event or state;
• y represents the syntactic direct object of the action, event or state.

In addition, we express only the eventuality of the actions, events or states, considering that the subjects, objects or other themes expressed by prepositional attachments exist

Candidate answer 1: the task of solving the paradox of why Athens, the cradle of Western democracy, condemned Socrates to death
WordNet troponyms: how(e) & die(e, ?) -> suffocate(e, ?); how(e) & die(e, ?) -> drown(e, ?)
Candidate answer 2: Socrates Butsikares, a former editor of The New York Times' international edition in Paris, died Thursday of a heart attack at a Staten Island hospital
Evidence: how(e) & die(e, ?) -> die(e, ?) & cause(e, ?, e1)

Figure 3: Abductive coercion of candidate answers.

by virtue of the actions they participate in. For example, the LFT of the gloss (a person who inspires fear) defining the WordNet synset² {terror, scourge, threat} is: [person(x) & inspire(e, x, y) & fear(y)]. We note that verbal predicates can sometimes have more than three arguments, e.g. in the case of bitransitive verbs. For example, the gloss of the synset {impart, leave, give, pass on} is (give people knowledge), with the corresponding LFT: [give(e, x, y, z) & knowledge(y) & people(z)], having the subject unspecified.
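The two logical forms above can be represented as plain sequences of predicates, as in the sketch below. The `Predicate` type and the `lft_to_string` helper are hypothetical names introduced only for illustration; they show the notation, not the transformation procedure that produces LFTs from parsed glosses.

```python
from typing import List, NamedTuple, Tuple


class Predicate(NamedTuple):
    name: str
    args: Tuple[str, ...]

    def __str__(self) -> str:
        return f"{self.name}({', '.join(self.args)})"


def lft_to_string(predicates: List[Predicate]) -> str:
    """Render a conjunction of predicates in the bracketed notation used in the text."""
    return "[" + " & ".join(str(p) for p in predicates) + "]"


# LFT of the gloss (a person who inspires fear).
TERROR_LFT = [
    Predicate("person", ("x",)),
    Predicate("inspire", ("e", "x", "y")),  # e: eventuality, x: subject, y: object
    Predicate("fear", ("y",)),
]

# LFT of the gloss (give people knowledge); the subject x is left unspecified.
GIVE_LFT = [
    Predicate("give", ("e", "x", "y", "z")),
    Predicate("knowledge", ("y",)),
    Predicate("people", ("z",)),
]
```

Sharing a variable between predicates, such as `y` in `inspire(e, x, y)` and `fear(y)`, is what ties the object of the verb to the noun that fills it.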

Since most thematic roles are represented as prepositional attachments, predicates lexicalized by prepositions are used to account for such relations. The preposition predicates always have two arguments: the first argument corresponds to the head of the prepositional attachment, whereas the second argument stands for the object that is being attached. The predicative treatment of prepositional attachments was first reported in (Bear and Hobbs 1988). In the work of Bear and Hobbs, the prepositional predicates are used as means for localizing the ambiguities generated by possible attachments. In contrast, when LFTs are generated for WordNet glosses, all syntactic ambiguities are resolved by a high-performance probabilistic parser trained on the Penn Treebank (Marcus et al. 1993). However, the usage of preposition predicates in the LFT is very important, as it suggests the logic relations determined by the combination of syntax and semantics. Paraphrase matching can thus be employed to unify relations bearing the same meaning, thus enabling justifications. The paraphrases are also correctly matched because of the lexical and semantic disambiguation that is produced concurrently with the parse. The probabilistic parser also correctly identifies base NPs, allowing complex nominals to be recognized and transformed into a sequence

² A set of synonyms is called a synset.


of predicates. The predicate nn indicates a complex nominal, whereas its arguments correspond to the components of the complex nominal. For example, the complex nominal baseball team is transformed into [nn(a, b) & baseball(a) & team(b)].

The rules that generate LFTs were reported in (Moldovan and Rus 2001). The transformation of WordNet glosses into LFTs is important because the LFTs enable two of the most important criteria needed to select the best abduction. These criteria were reported in (Hobbs et al. 1993) and are inspired by Thagard's principles of simplicity and consilience (cf. (Thagard 1978)). Roughly, simplicity requires that the chain of explanations be as small as possible, whereas consilience requires that the evidence triggering the explanation be as large as possible. In other words, for the abductive processes needed in QA, we expect to have as much evidence as possible to infer from a candidate answer the validity of the question. We also expect to make as few inferences as possible. All the glosses from WordNet represent the search space for evidence supporting an explanation. If at least two different justifications are found, the consilience criterion requires selecting the one having stronger evidence. However, the quantification of evidence is not a trivial matter.
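As a rough illustration of how the two criteria could interact, the sketch below ranks competing justifications by the amount of evidence (consilience) and breaks ties by the number of inference steps (simplicity). Counting evidence items is a deliberately naive assumption, since quantifying evidence is not trivial, and the Justification type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Justification:
    """A candidate explanation for an answer (hypothetical structure)."""
    answer: str
    evidence: List[str]      # axioms or facts supporting the explanation
    inference_steps: int     # length of the backward-chaining path


def best_justification(candidates: List[Justification]) -> Optional[Justification]:
    """Prefer more evidence (consilience), then fewer inferences (simplicity)."""
    if not candidates:
        return None
    return max(candidates, key=lambda j: (len(j.evidence), -j.inference_steps))


# Illustrative data only; not results from the paper.
a = Justification("first", ["axiom-1"], inference_steps=2)
b = Justification("second", ["axiom-1", "axiom-2"], inference_steps=3)
c = Justification("third", ["axiom-3", "axiom-4"], inference_steps=1)
print(best_justification([a, b, c]).answer)  # prints: third
```

A weighted measure of evidence strength could replace the simple count without changing the shape of the selection step.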

For the example illustrated in Figure 3, the evidence used to justify the first answer of the TREC-8 question comprises axioms about the definition of the condemn concept, the definition of the how question class and the relation between the nominalization death and its verbal root die. The LFT of the first answer, when unified with these axioms, produces a coercion that cannot be unified with the LFT of the question because the causality relation is reversed: the answer explains why Socrates died and not how he died. The second candidate answer, however, contains the right direction of the causality imposed by the semantic class of the question, and it is considered a correct, although surprising, answer. This is due to the ambiguity of the question: it is not clear whether we are asked about the more famous Socrates, i.e. the philosopher, or about somebody whose first name is Socrates. The coercion of the first candidate answer could not use any of the WordNet evidence provided by the hyponyms of the verb die.

[Figure 4: Abductive justification of candidate answers. Panels: candidate answer 3 (LFT, evidence, abductive pattern, coercion) and candidate answer 4 (LFT, evidence).]

The simplicity criterion is satisfied because the coercion of the answer always uses the minimal path of backward inference. Hobbs considers that in abduction, the balance between simplicity and consilience is guaranteed by the high degree of redundancy typical in natural language processing. However, we note that redundancy is not always enough. An example is illustrated in Figure 4 by the third candidate answer to the same TREC question. Without an abductive pattern recognizing a relation between concept X and concept Y in the expression "association with X makes Y", it is practically impossible to justify this answer, which is correct. The fourth candidate answer is also correct, and furthermore it is a more specific answer. However, using only the axiomatic knowledge derived from WordNet through the LFTs, it is impossible to show that the third answer is more general than the fourth one. It is therefore hard to quantify the simplicity criterion, because of the generative power of natural language, which also has to be taken into consideration when implementing abductive processes.
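To make the idea of an abductive pattern concrete, the sketch below recognizes the surface expression "association with X makes Y" and returns the pair (X, Y) that a relation(X, Y) predicate would connect. Matching on raw text with a regular expression is an assumption made for brevity; the patterns discussed here presumably operate on LFTs, and the function name and example sentence are hypothetical.

```python
import re
from typing import Optional, Tuple

# Surface form of the pattern "association with X makes Y" -> relation(X, Y).
ASSOCIATION_PATTERN = re.compile(
    r"association with (?P<x>[\w\s]+?) makes? (?P<y>[\w\s]+)",
    re.IGNORECASE,
)


def match_association(text: str) -> Optional[Tuple[str, str]]:
    """Return (X, Y) if the text contains the pattern, otherwise None."""
    m = ASSOCIATION_PATTERN.search(text)
    if m is None:
        return None
    return m.group("x").strip(), m.group("y").strip()


# Made-up sentence used only to exercise the pattern.
print(match_association("Association with bad company makes a poor reputation"))
# prints: ('bad company', 'a poor reputation')
```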

When implementing abductive processes, we considered the similarity between the simplicity criterion and the notion of context simpliciter discussed in (Hirst 2000). Hirst notes that context in natural language interpretation cannot be oversimplified and considered only as a source of information, which is its main functionality when employed in knowledge representation. With some very interesting examples, Hirst shows that in natural language, context constrains the interpretation of texts. At a local level, it is known that context is a psychological construct that generates the meaning of words. Miller and Charles argued in (Miller and Charles 1991) that word sense disambiguation (WSD) could be inferred easily if we could know all the contexts in which words occur. In a very simplified way, local context can be imagined as a window of words surrounding the targeted concept, but work in WSD shows that modeling context in this way imposes sophisticated ways of deriving the features of these contexts. To us it is clear that the problem of answer justification is by far more complex and difficult than the problem of WSD, and therefore we believe that simplistic representations of context are not beneficial for QA. Hirst notes in (Hirst 2000) that "having discretion in constructing context does not mean having complete freedom". Arguing that context is a spurious concept that may come dangerously close to being used in incoherent manners, Hirst notes that "knowledge used in interpreting natural language is broader while reasoning is shallow". In other words, the inference path is usually short while the evidential knowledge is rich. We used this observation when building the abductive processes.

Question: Why are so many [...] sent to jail?
Answer: Jobs and education diminish.
Context 1: (Reverend Jesse Jackson in an article on Martin Luther King's "I have a dream" speech) The "giant sucking sound" is not merely American jobs going to NAFTA and GATT cheap [...]. The giant sucking sound is [...] as jobs and education diminish, our youth are being sucked into the jail industrial complex.
Context 2: (Ross Perot, first presidential campaign) If the United States approves NAFTA, the giant sucking sound that we hear will be the sound of thousands of jobs and factories disappearing to Mexico.

Figure 5: The role of context in abductive processes.

To comply with the simplicity and consilience criteria we need (1) to have sufficient textual evidence for justification, which we accomplish by implementing an information retrieval loop that brings forward all paragraphs containing the keywords of the question as well as at least one concept of the expected answer type³; (2) to consider that questions can be unified with answers, and thus provide immediate explanations; (3) to justify the answer through abductions that use axioms generated from WordNet along with information derived by reference resolution or by world knowledge; and (4) to make available the recognition of paraphrases and to generate some normalized dependencies between concepts. However, we find that we also need to take into account the contextual information. To support this belief, we present an example inspired by the discussion of context from (Hirst 2000). The example is illustrated in Figure 5.

³ More details of the feedback loops implemented for QA are reported in (Harabagiu et al. 2001).
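Requirement (1) above can be sketched as a simple paragraph filter: a paragraph is kept only if it contains every question keyword and at least one concept of the expected answer type. This is a single-pass simplification of the retrieval loop, which is assumed to relax or expand keywords over several iterations; the function names, the whitespace tokenizer and the sample paragraphs are all hypothetical.

```python
from typing import Iterable, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercased set of words with surrounding punctuation stripped."""
    return {w.strip(".,;:!?\"'()").lower() for w in text.split()} - {""}


def candidate_paragraphs(
    paragraphs: Iterable[str],
    question_keywords: Set[str],
    answer_type_concepts: Set[str],
) -> List[str]:
    """Keep paragraphs with every question keyword and at least one answer-type concept.

    Keywords and concepts are expected in lowercase.
    """
    kept = []
    for paragraph in paragraphs:
        words = tokenize(paragraph)
        if question_keywords <= words and words & answer_type_concepts:
            kept.append(paragraph)
    return kept


# Made-up paragraphs used only to exercise the filter.
paragraphs = [
    "Socrates died by drinking hemlock.",
    "Socrates was a philosopher.",
]
print(candidate_paragraphs(paragraphs, {"socrates", "died"}, {"hemlock", "poison"}))
# prints: ['Socrates died by drinking hemlock.']
```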

When the question from Figure 5 is posed, the answer is extracted from a paragraph contained in an article by Reverend Jesse Jackson on Martin Luther King's "I have a dream" speech. This paragraph also contains an anaphoric expression, "the giant sucking sound", which is used twice. The anaphor is resolved to the metaphoric explanation that this sound is caused by youngsters being jailed due to diminishing jobs and education. In the process of interpreting the anaphor, the expression "being sucked into the jail industrial complex" is recognized as a paraphrase of "sent to jail", even though it is morphologically related to the "sucking sound". So what is this "sucking sound"? As Hirst first points out in (Hirst 2000)⁴, at the time of writing the article containing the answer, Reverend Jesse Jackson alludes to a widely reported remark of Ross Perot. This remark brings forward the context of Perot's speech in his first presidential campaign, when he predicted that NAFTA would enable the disappearance of jobs and factories to Mexico. This example shows that both anaphora resolution, which sometimes requires access to embedded context, and the recognition of causation relations are at the crux of answer justification. The recognition of the causation underlying the text snippet "as jobs and education diminish, our youth are being sucked into the jail industrial complex" is based on cause-effect patterns triggered by cue phrases such as as. This example also leads to the conclusion that the abductive processes that proved successful in the past TREC evaluations need to be extended to deal with more forms of pragmatic knowledge as well as non-literal expressions (e.g. metaphors).

Redundancy vs. Abduction

Definition questions impose a special kind of QA because often the question contains only one concept: the one that needs to be defined. Moreover, in a text collection, the definition may not be matched by one of the predefined patterns, and thus requires additional information to be recognized. Such information may be obtained by using the very large index of a search engine, e.g. google.com. When retrieving documents for the concept to be defined, the most frequent words encountered in the summaries produced by Google for each document could be considered. Due to the redundancy of these words, we may assume that they carry important information. However, this is not always true. For the question "What is an atom?" we found that none of the redundant words were used in the definition of atom in the WordNet database. Nevertheless, this technique is useful for concepts that are not defined in WordNet. When trying to answer the question "Who is Sanda Harabagiu?", Google returns documents showing that she is a faculty member and an author of papers on QA. Therefore, for such questions, redundancy may be considered a reliable source of justifications.
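The redundancy heuristic described above amounts to counting content words across the summaries returned for a concept. The sketch below assumes the summaries have already been retrieved as plain strings; it does not call any search engine, and the stopword list, function name and sample summaries are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple

# Small illustrative stopword list; a real list would be much larger.
STOPWORDS: Set[str] = {"a", "an", "and", "in", "is", "of", "on", "she", "the", "to"}


def redundant_words(
    summaries: Iterable[str], concept: str, top_n: int = 5
) -> List[Tuple[str, int]]:
    """Return the top_n most frequent content words across the summaries."""
    # Exclude stopwords and the words of the concept being defined.
    excluded = STOPWORDS | set(re.findall(r"[a-z]+", concept.lower()))
    counts: Counter = Counter()
    for summary in summaries:
        words = re.findall(r"[a-z]+", summary.lower())
        counts.update(w for w in words if w not in excluded)
    return counts.most_common(top_n)


# Made-up summaries used only to exercise the counting.
summaries = [
    "Atom feed reader for news",
    "Atom is a feed format",
    "news feed in Atom format",
]
print(redundant_words(summaries, "atom", top_n=1))  # prints: [('feed', 3)]
```

The made-up example also mirrors the caveat in the text: the most redundant word for "atom" here has nothing to do with its WordNet definition.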

Conclusions

In this paper we have presented several forms of abductive processes that are implemented in a QA system. We also draw attention to more complex abductive explanations that require more than lexico-semantic information or axiomatic knowledge that can be scanned in a left-to-right back-chaining of the LFT of a candidate answer, as was reported in (Harabagiu et al. 2000). We claim in this paper that the role of abduction in QA will break ground in the interpretation of the intentions of questioners. We discussed the difference between redundancy and abduction in QA and concluded that some forms of information redundancy may be safely considered as abductive processes.

Acknowledgements: This research was partly funded by ARDA's AQUAINT program.

⁴ This example was taken from (Hirst 2000).

References

S. Abney, M. Collins, and A. Singhal. Answer extraction. In Proceedings of the 6th Applied Natural Language Processing Conference (ANLP-2000), pages 296-301, Seattle, Washington, 2000.

J. Bear and J. Hobbs. Localizing expressions of ambiguity. In Proceedings of the 2nd Applied Natural Language Processing Conference (ANLP-1988), pages 235-242, 1988.

P. Cohen, R. Schrag, E. Jones, A. Pease, A. Lin, B. Starr, D. Easter, D. Gunning, and M. Burke. The DARPA High Performance Knowledge Bases Project. Artificial Intelligence Magazine, 19(4):25-49, 1998.

M. Collins. A new statistical parser based on bigram lexical dependencies. In Proceedings of the 34th Annual Meeting of the Association of Computational Linguistics (ACL-96), pages 184-191, 1996.

D. Davidson. The logical form of action sentences. In N. Rescher, ed., The Logic of Decision and Action, University of Pittsburgh, PA, pages 81-95, 1967.

Christiane Fellbaum (Editor). WordNet - An Electronic Lexical Database. MIT Press, 1998.

A. Graesser and S. Gordon. Question-Answering and the Organization of World Knowledge, chapter Question-Answering and the Organization of World Knowledge. Lawrence Erlbaum, 1991.

S. Harabagiu, G.A. Miller and D. Moldovan. WordNet 2 - A Morphologically and Semantically Enhanced Resource. In Proceedings of SIGLEX-99, June 1999, Univ. of Maryland, pages 1-8.

S. Harabagiu and S. Maiorano. Finding answers in large collections of texts: Paragraph indexing + abductive inference. In AAAI Fall Symposium on Question Answering Systems, pages 63-71, November 1999.

S. Harabagiu, M. Paşca and S. Maiorano. Experiments with Open-Domain Textual Question Answering. In Proceedings of the 18th International Conference on Computational Linguistics (COLING-2000), August 2000, Saarbrücken, Germany, pages 292-298.

S. Harabagiu, D. Moldovan, M. Paşca, R. Mihalcea, M. Surdeanu, R. Bunescu, R. Gîrju, V. Rus and P. Morărescu. The role of lexico-semantic feedback in open-domain textual question-answering. In Proceedings of the 39th Annual Meeting of the Association for Computational Linguistics (ACL-2001), Toulouse, France, 2001, pages 274-281.

G. Hirst. Context as a spurious concept. In Proceedings, Conference on Intelligent Text Processing and Computational Linguistics, Mexico City, February 2000, pages 273-287.

E. Hovy, L. Gerber, U. Hermjakob, C-Y. Lin, D. Ravichandran. Toward semantic-based answer pinpointing. In Proceedings of the Human Language Technology Conference (HLT-2001), San Diego, California, 2001.

J. Hobbs, M. Stickel, D. Appelt, and P. Martin. Interpretation as abduction. Artificial Intelligence, 63, pages 69-142, 1993.

B. Katz. From sentence processing to information access on the world wide web. In AAAI Spring Symposium on Natural Language Processing for the World Wide Web, Stanford, California, 1997.

W. Lehnert. The Process of Question Answering: a computer simulation of cognition. Lawrence Erlbaum, 1978.

Mitch Marcus, Beatrice Santorini and Mark Marcinkiewicz. Building a large annotated corpus of English: The Penn Treebank. Computational Linguistics, 19(2):313-330, 1993.

G.A. Miller. WordNet: a lexical database. Communications of the ACM, 38(11):39-41, 1995.

G.A. Miller and W.G. Charles. Contextual correlates of semantic similarity. Language and Cognitive Processes, 6(1):1-28, 1991.

D. Moldovan and V. Rus. Transformation of WordNet Glosses into Logic Forms. In Proceedings of the 39th Annual Meeting of the Association for Computational Linguistics (ACL-2001), Toulouse, France, 2001, pages 394-401.

M. Paşca and S. Harabagiu. High Performance Question/Answering. In Proceedings of the 24th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR-2001), September 2001, New Orleans LA, pages 366-374.

G. Salton, editor. The SMART Retrieval System. Prentice-Hall, New Jersey, 1969.

P.R. Thagard. The best explanation: criteria for theory choice. Journal of Philosophy, pages 79-92, 1978.

E. Voorhees. Overview of the TREC-9 Question Answering Track. In Proceedings of the 9th Text REtrieval Conference (TREC-9), 2001, pages 71-79.