From: AAAI Technical Report SS-02-02. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

Co-evolution Learning in Organizational-Learning Classifier System

Takao Terano, Yasushi Ishikawa

Graduate School of Systems Management, University of Tsukuba, Tokyo

3-29-1 Otsuka, Bunkyo-ku, Tokyo 112-0012, Japan

terano@gssm.otsuka.tsukuba.ac.jp

Abstract

This paper proposes an agent-based system with an Organizational-Learning Oriented Classifier System (OCS), which is an extension of the Learning Classifier System (LCS) into multiple agent environments. In OCS, each agent is equipped with a corresponding Michigan-type LCS and acquires problem-solving knowledge based on the concepts of organizational learning in management and organizational sciences. In the proposed system, we further extend OCS to have the following characteristics: (1) each agent solves multi-objective problems, in which there are tradeoffs among the given objectives; (2) the agents are divided into multiple classes, and the agents in each class pursue different goals, which might cause conflicts among the classes of agents; and (3) the agents learn both individually and organizationally. We have applied the system to the Ecological Marketing domain in order to explain competitive and cooperative agent behaviors in developing and purchasing both economical and ecological products.

Introduction

Recent distributed information systems over the Internet often reveal very complex phenomena in practical operation, although they are constructed from very simple software components, because the behaviors of their users are not predictable. To understand these phenomena, we must develop explainable and executable models to analyze the activities of organizations, which consist of both humans and artifacts. Computational (and Mathematical) Organization Theory (COT; CMOT) [Carley 1999] utilizes agent-based modeling techniques [Axelrod 1997], [Epstein 1996], [Axtel 2000]. By agent modeling, we mean that we develop models with groups of simple and small software components, or software agents, that behave in a given environment and solve some global problems.

The approaches we address in this paper also employ the principles of agent-based modeling techniques. To develop the model, we introduce the concepts of Organizational Learning in management and organizational sciences [Argyris 78]. This paper proposes an agent-based social simulation system with an Organizational-Learning Oriented Classifier System (OCS) [Takadama 99a, 99b, 01a, 01b], which


is an extension of the Learning Classifier System in Genetics-Based Machine Learning [Goldberg 89], [Lanzi et al., 01] into multiple agent environments. The proposed system is characterized by agents that (1) individually solve multi-objective problems, (2) pursue different, conflicting goals because they belong to different classes, and (3) learn both individually and organizationally. Compared with earlier multiagent learning systems (e.g., [AAAI 96]), the proposed system is complex enough that the agents are able to learn from random initial knowledge, and powerful enough to be used to simulate practical social activities. To show its effectiveness, we have applied the system to the Ecological Marketing domain in order to explain both competitive and cooperative agent behaviors in developing and purchasing both economical and ecological products.

This paper is organized as follows: in section 2, we describe the background and objectives of the research; in section 3, we propose a new OCS-based architecture; then, using the proposed model, in section 4, we carry out experiments on Ecological Marketing simulation. Based on the experiments, sections 5 and 6 respectively discuss the issues of organizational learning and co-evolution learning. Finally, concluding remarks follow in section 7.

Background and Objectives

The literature in COT and/or CMOT frequently reports that small autonomous agents can generate interesting global structures and behaviors. In more detail, it reports that (1) some interesting organizational phenomena usually occur when observing the systems' behaviors, (2) very subtle changes of control mechanisms or system parameters can dramatically change the characteristics of the systems, and (3) there are common similarities among the behaviors of complex systems, for example, computer systems, social systems, and economic systems.

However, these studies only discuss the emergent properties that can be identified from the observers' standpoints, and tend to ignore the design problems of such multiagent systems. They have not yet succeeded in describing the flexibility and practicability of practical information systems. By flexibility and practicability, we respectively mean that ambiguous knowledge sharing and ongoing adaptation occur in the context of activities.

To solve these difficulties, studies on extending the architecture of learning classifier systems into multiagent environments have become popular in recent years. In such environments, agents should cooperatively and/or competitively learn from each other and solve a given problem. As stated above, among these approaches, we have conducted our research based on the concepts of organizational learning in management and organizational sciences.

Though there are various definitions and discussions of organizational learning in the literature [Argyris 78], [Duncan 79], [Kim 93], by organizational learning we mean the process by which an organization learns to solve a given problem that cannot be solved by any individual agent in it because of the agent's insufficient capability. Approaches to learning multiagent systems fall into the following two categories. In the first, all agents in the system pursue the same goal; in the second, multiple classes of agents pursue different, conflicting goals and affect each other in the problem-solving processes. In both approaches, agents learn individually; however, from our organizational learning viewpoint, the former aims at acquiring the ability to get better solutions to the problem by exchanging or transferring adaptation behaviors among the agents. On the other hand, the latter aims at acquiring the ability to decide how to act from the feedback of environmental changes caused by the activities of the classes of agents.

From the technical viewpoint, there have been a number of studies on the co-evolution of populations in the GA and multiagent learning literature. For example, [Rosin 97] proposes a method of "competitive coevolution," in which fitness is based on direct competition among individuals selected from two independently evolving populations of "hosts" and "parasites." [Bull 96] proposes a multiplied learning approach on the grounds that the agents do not influence each other in their learning processes. [Grefenstette 96] describes experiments with co-evolutionary approaches that are similar to ecological environments, where species adapt and evolve in interaction with each other. [Haynes 97] analyzes crossover operators and fitness functions that allow a team of agents with good task performance to evolve rapidly. [Sikora 94] integrates a similarity-based learning program and GA operations. Compared with such studies, in this paper we emphasize the concepts of organizational learning and the application of learning classifier systems in Genetics-Based Machine Learning [Goldberg 89] to multiagent learning.

We have developed the architecture of the Organizational-learning oriented Classifier System (OCS). In OCS, we have focused upon the former type of organizational learning through the rule (classifier) exchange mechanism [Takadama 99a, 99b, 01a, 01b]. However, the techniques we have implemented in OCS so far are only applicable to agents with the same internal models and the same goals. Therefore, in this paper, we address the development of the latter type of organizational learning in the OCS architecture, in which multiple classes of agents may have different internal models.

When we develop a learning multiagent system, we often meet the following difficulties:

• Agents may solve multi-objective problems:


When acting as individuals, the agents may need to achieve more than one objective at the same time. This means that agents must solve multi-objective problems. For example, in the Ecological Marketing domain described later, the agents face tradeoffs between economical and ecological goods.

• Agents may pursue different, conflicting goals:

While agents act for their objectives, they may have different weights on their multiple objectives. Furthermore, in the environment where agents act, there may be multiple classes of agents. A group of agents with the same objectives forms an agent class. The agents in the same class may cooperatively imitate, learn from, and adapt to the activities of the other agents in that class. However, agents that belong to different classes may pursue different, conflicting goals. In the Ecological Marketing domain, one agent group corresponds to the supplier class and the other corresponds to the consumer class. Agents of both classes would like to contribute to solving environmental problems; however, from the economical viewpoint, their objectives conflict.

• Agents may live in multiple environments:

If there are multiple environments, each environment requires some specific activities from the agents living in it. The agents must adopt these requirements as some of their objectives.

The purpose of this research is to extend the architecture of the original OCS in order to develop more general social simulation models for analyzing distributed information systems with multiple classes composed of such agents with multiple objectives. In the following sections, we describe the concepts and validate their effectiveness.

Organizational-learning Oriented Classifier System and its Extension

In this section, we propose a new architecture that extends OCS to simulate agents with the characteristics explained so far.

Brief Description of Organizational-learning Oriented Classifier System

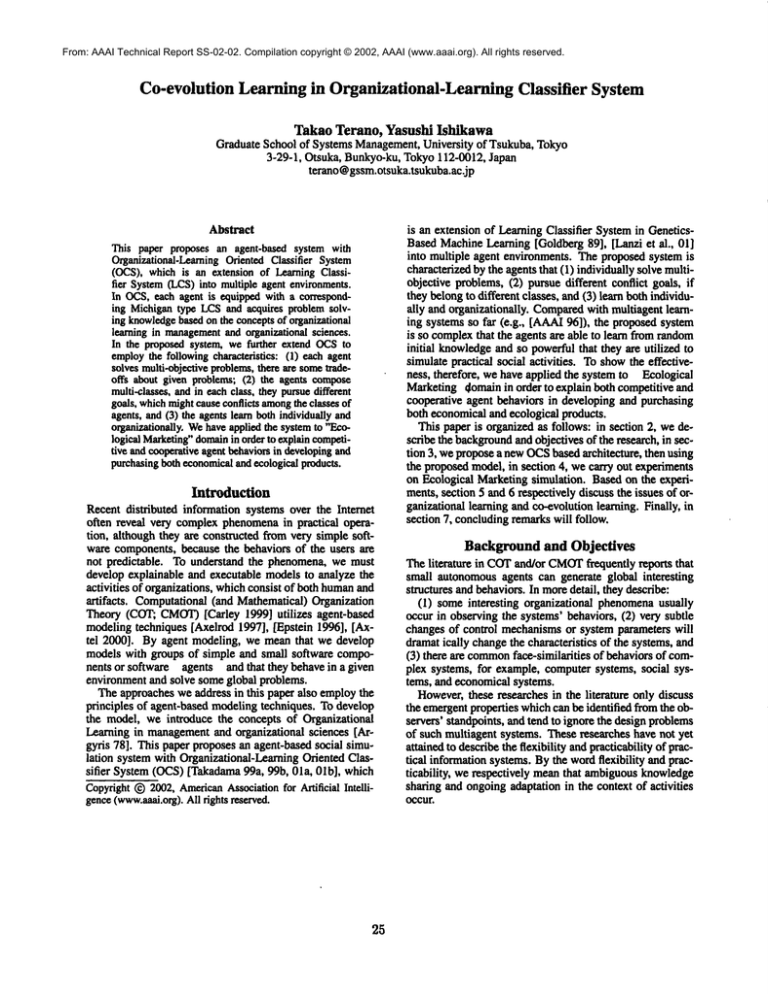

Figure 1 shows the OCS architecture, which extends the conventional Learning Classifier System (LCS) [Goldberg 89] with organizational learning methods. The system solves a given problem with multiagent organizational learning, where the problem cannot be solved by the sum of the individual learning of each agent. In OCS, we introduce the four organizational learning mechanisms proposed in [Kim 93]: (a) individual single-loop learning, (b) individual double-loop learning, (c) organizational single-loop learning, and (d) organizational double-loop learning. These mechanisms respectively correspond to (a) reinforcement learning (or modification of weights) of classifiers in each agent, (b) new classifier or rule generation via genetic operations in each agent, (c) the exchange mechanism of good classifiers among various agents, and (d) collective knowledge reuse for new problems.

Agents divide given problems by acquiring their own appropriate functions through interaction among agents. We assume that a given problem cannot be solved at an individual level.

The agent in OCS contains the following components:

• Problem Solver:

- Detector and Effecter: They translate some parts (sub-environments) of the total environment state into an internal state (working memory) of an agent, apply classifiers and/or collective knowledge, and derive actions based on the information in the working memory.

• Memory:

- Collective knowledge memory: It stores a set comprising each agent's rule set as collective knowledge. In OCS, all agents share this knowledge.

- Individual knowledge memory: It stores a set of classifiers (CFs). In OCS, agents individually store different CFs that are composed of if-then rules with a strength weight factor. At first, a given number of rules are generated in each agent at random, and the initial weights are set to the same value.

- Working memory: It stores the recognition results of both the sub-environmental states and the internal states resulting from the actions of fired rules.

• Learning in OCS:

- Reinforcement Learning: In OCS, the RL mechanism enables agents to acquire the actions required to achieve their own goals by tuning the rule weights. We employ a profit sharing method for this purpose.

- Rule Generation: The mechanism generates a new rule when no rule in the individual knowledge can be applied to the working memory conditions, and the rule with the least weight is deleted.

- Rule Exchange: In OCS, agents exchange rules with other agents at a particular time interval in order to distribute more effective rules that cannot be acquired by individual-level learning. When rules are exchanged, rules with lower weights are replaced.

- Collective Knowledge Reuse: When the agents have solved some set of problems, the individual knowledge is chunked and commonly distributed to all the agents. The knowledge is stored so that it can be applied in future problem solving.
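The individual learning components listed above can be illustrated with a minimal Python sketch. It assumes a ternary-string rule encoding, a geometric credit decay of 0.5 for profit sharing, a 0.3 probability of turning a state bit into `#` during rule generation, and that exchanged rules keep their strengths; none of these values or function names comes from OCS itself, and Collective Knowledge Reuse is not shown.

```python
import random
from dataclasses import dataclass


@dataclass
class Classifier:
    condition: str        # ternary string over {'0', '1', '#'}
    action: str
    strength: float = 1.0  # same initial weight for every rule


def matches(condition: str, state: str) -> bool:
    """A '#' in the condition matches either bit of the detector state."""
    return len(condition) == len(state) and all(
        c == '#' or c == s for c, s in zip(condition, state))


def profit_sharing(fired, reward, decay=0.5):
    """Distribute an episode's reward backwards over the rules fired in it:
    the last rule gets `reward`, the one before it reward*decay, and so on."""
    credit = reward
    for rule in reversed(fired):
        rule.strength += credit
        credit *= decay


def generate_rule(rules, state, actions, rng, p_wildcard=0.3):
    """Rule generation: if no rule matches `state`, replace the weakest rule
    with a new one built from the state (some bits turned into '#')."""
    if any(matches(r.condition, state) for r in rules):
        return None
    weakest = min(range(len(rules)), key=lambda i: rules[i].strength)
    condition = ''.join('#' if rng.random() < p_wildcard else s for s in state)
    rules[weakest] = Classifier(condition, rng.choice(actions))
    return rules[weakest]


def exchange_rules(giver, receiver, k):
    """Rule exchange: copy the k strongest rules of `giver` over the k weakest
    rules of `receiver` (the receiver list is reordered weakest-first)."""
    best = sorted(giver, key=lambda r: r.strength, reverse=True)[:k]
    receiver.sort(key=lambda r: r.strength)
    for i, rule in enumerate(best):
        receiver[i] = Classifier(rule.condition, rule.action, rule.strength)
```

Profit sharing is used here because the text names it; a real OCS agent would call `generate_rule` from its detector loop and `exchange_rules` at the chosen exchange interval.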

The basic idea of the proposed system comes from OCS; however, the proposed system extends the original OCS so that it can deal with agents having the characteristics described above. We omit detailed descriptions of the parts of the system shared with the original OCS; a detailed discussion of the mechanisms of the original OCS can be found in [Takadama 01a, 01b].

Coping with Multi-Objective Problems

Figure 1: Architecture of Organizational-learning Oriented Classifier System

First, we have changed the form of the classifiers stored within the Individual Knowledge module in order to cope with the multi-objective problems the agents deal with.

Referring to VEGA [Schaffer 1985], which implements a way to deal with multi-objective problems with Genetic Algorithms (GAs), we have applied the principle of VEGA to the Learning Classifier System. Each classifier individually holds multiple strengths, one for each objective. Each strength value is the adaptation score towards the corresponding objective; the score is calculated by a method described later. Individual knowledge is iteratively improved by reinforcement learning. If two or more agents have the same objectives for their actions, they form the same class. An agent decides its action by the following procedure:

(1) Probabilistically select one of the n objectives for its actions.

(2) Select the classifiers that match the states of the Detector of the learning classifier system.

(3) Select one classifier from them according to their strengths by "Roulette Selection" to decide its action.

(4) Apply the classifier through the Effecter to produce a real action.

Each agent individually has n weight values corresponding to the n objectives. In each action, one of the objectives is probabilistically selected according to the weight values, and then the corresponding action is taken. Thus, through the differences in the weights for each objective, we represent the agents' characteristics so that agents pursue different goals.
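The four-step decision procedure and the per-agent objective weights can be sketched as follows. This is an illustration under assumptions: the text does not say which strength the roulette in step (3) uses, so the sketch uses the strength for the objective drawn in step (1), and strengths are assumed to be non-negative; the rule encoding and action names are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class MOClassifier:
    condition: str          # ternary string over {'0', '1', '#'}
    action: str
    strengths: List[float]  # one strength per objective (VEGA-style)


def matches(condition: str, state: str) -> bool:
    return len(condition) == len(state) and all(
        c == '#' or c == s for c, s in zip(condition, state))


def roulette(items, weights, rng):
    """Roulette-wheel selection; falls back to a uniform pick if all weights are 0."""
    if sum(weights) <= 0:
        return rng.choice(items)
    return rng.choices(items, weights=weights, k=1)[0]


def decide_action(rules, objective_weights, state,
                  rng) -> Tuple[int, Optional[MOClassifier]]:
    # (1) pick objective k with probability proportional to the agent's weight
    k = roulette(list(range(len(objective_weights))), objective_weights, rng)
    # (2) match set: classifiers whose condition fits the detector state
    match_set = [r for r in rules if matches(r.condition, state)]
    if not match_set:
        return k, None  # rule generation would be triggered here
    # (3) roulette over the strengths for objective k (assumption)
    chosen = roulette(match_set, [r.strengths[k] for r in match_set], rng)
    # (4) the caller hands chosen.action to the Effecter
    return k, chosen
```

Because the objective is drawn before matching, an agent with weights (0.7, 0.3) acts on behalf of its first objective about 70% of the time, which is how the weights encode its character.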

Classifiers are gradually trained via conventional LCS mechanisms. The learning procedure is as follows:

(1) Select the elite classifiers. Select some fixed number of excellent classifiers for each of the n objectives. A fixed ratio of the selected superior classifiers is retained for each objective according to the agent's weight for that objective.

(2) Select classifiers for the crossover operation. First, select two classifiers at random from all classifiers. Next, select one of the two by tournament selection: choose one objective, compare the corresponding strengths of the two classifiers, and select the better one as the candidate for the crossover operation.

(3) Apply genetic operations. Following the general procedures of GAs, apply the crossover, selection, and mutation operators to produce the next generation.
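A hedged sketch of one generation of this procedure follows. It reads step (1) as splitting the elite slots across objectives in proportion to the agent's weights; the uniform choice of the tournament objective, one-point crossover, and giving offspring the mean of their parents' strengths are our assumptions. Crossover is always applied, matching the crossover ratio of 1.0 reported for the simulator.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class MOClassifier:
    bits: str               # condition + action encoded as one bit string
    strengths: List[float]  # one strength per objective


def select_elites(pop, weights, n_elite):
    """Step (1): split n_elite slots across objectives in proportion to the
    agent's weights; fill each quota with the classifiers strongest on that
    objective that have not been kept already."""
    total = sum(weights)
    kept, kept_ids = [], set()
    for k, w in enumerate(weights):
        quota = round(n_elite * w / total)
        for c in sorted(pop, key=lambda c: c.strengths[k], reverse=True):
            if quota == 0:
                break
            if id(c) not in kept_ids:
                kept.append(c)
                kept_ids.add(id(c))
                quota -= 1
    return kept


def tournament_pick(pop, n_objectives, rng):
    """Step (2): draw two classifiers, choose an objective (uniformly, an
    assumption), and keep the one with the higher strength on it."""
    a, b = rng.sample(pop, 2)
    k = rng.randrange(n_objectives)
    return a if a.strengths[k] >= b.strengths[k] else b


def crossover(p1, p2, rng):
    cut = rng.randrange(1, len(p1))  # one-point crossover (assumption)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def mutate(bits, rate, rng):
    return ''.join(('1' if b == '0' else '0') if rng.random() < rate else b
                   for b in bits)


def next_generation(pop, weights, n_elite, mutation_rate, rng):
    """Step (3): elites survive unchanged; the remaining slots are filled
    with mutated offspring of tournament-selected parents."""
    new = select_elites(pop, weights, n_elite)
    while len(new) < len(pop):
        p1 = tournament_pick(pop, len(weights), rng)
        p2 = tournament_pick(pop, len(weights), rng)
        for child in crossover(p1.bits, p2.bits, rng):
            if len(new) < len(pop):
                s = [(x + y) / 2 for x, y in zip(p1.strengths, p2.strengths)]
                new.append(MOClassifier(mutate(child, mutation_rate, rng), s))
    return new
```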

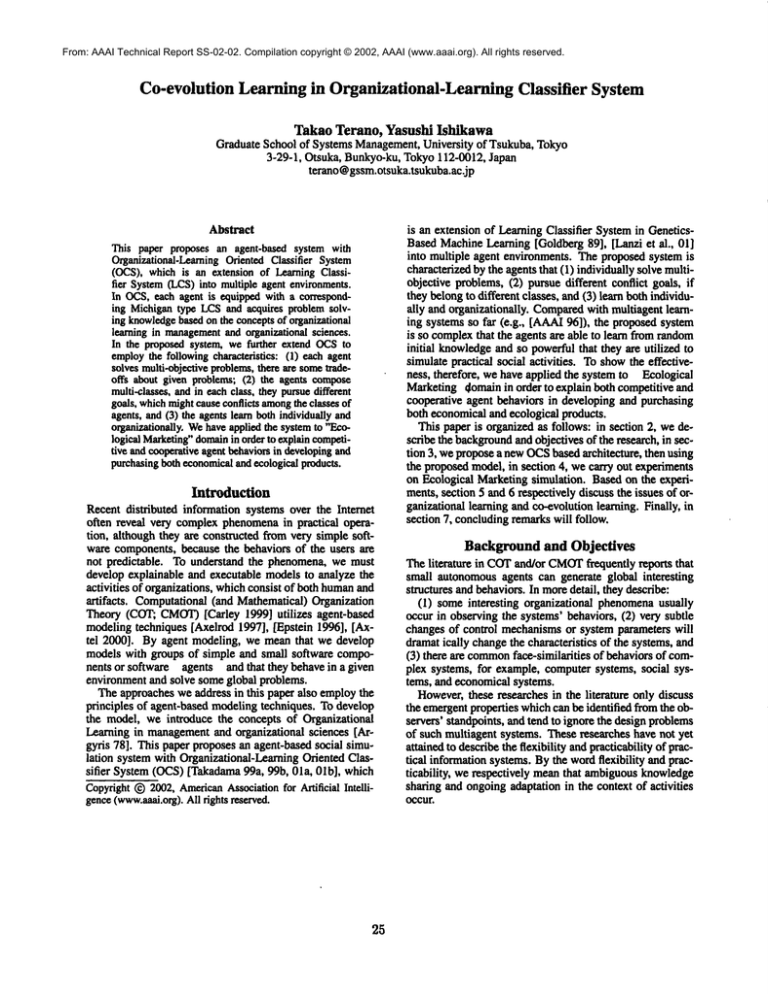

Extension for Multi-Class Agents

We have changed OCS to handle multi-class agents. As shown in Figure 2, multiple classes of agents share the same environment. However, each agent class has its own viewpoint for interpreting the status of the environment, its own objectives to act on, or its own actions. To attain this, we design the Effecter and Detector for each individual class. In this way, we extend OCS to a multi-class agent system without changing the internal architecture of the OCS agent.
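The idea of keeping one agent core and swapping only the class-specific Detector and Effecter can be sketched as below. The environment keys (`avg_price`, `avg_eco`), the one-bit states, and the thresholds are hypothetical placeholders, and the identity policy stands in for a real classifier system.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Env = Dict[str, float]  # shared environment state (hypothetical keys)


@dataclass
class ClassInterface:
    """Class-specific Detector and Effecter; the agent core stays the same."""
    detect: Callable[[Env], str]        # environment -> internal bit state
    effect: Callable[[str, Env], None]  # action bits -> environment change


class OCSAgent:
    """Class-independent agent core: maps a detector state to action bits.
    A real OCS agent would run its classifier system here."""

    def __init__(self, policy: Callable[[str], str]):
        self.policy = policy

    def act(self, state: str) -> str:
        return self.policy(state)


def step(agent: OCSAgent, iface: ClassInterface, env: Env) -> str:
    state = iface.detect(env)
    action = agent.act(state)
    iface.effect(action, env)
    return action


def supplier_effect(action: str, env: Env) -> None:
    if action == "1":
        env["avg_price"] -= 1.0


def consumer_effect(action: str, env: Env) -> None:
    if action == "1":
        env["avg_eco"] += 0.1


# Two hypothetical class interfaces over the same shared environment.
supplier_iface = ClassInterface(
    detect=lambda env: "1" if env["avg_price"] > 100 else "0",
    effect=supplier_effect)
consumer_iface = ClassInterface(
    detect=lambda env: "1" if env["avg_eco"] < 0.5 else "0",
    effect=consumer_effect)
```

The same `OCSAgent` class serves both classes; only the interface object passed to `step` differs, which is the property the text relies on.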

Evaluating Agents’ Actions

We describe a way to evaluate agents' actions under the requirements of multiple environments, with the aim of fitting their actions to multiple objectives. Agents in the same class have the same objectives for their actions. These objectives are implicit requirements from the environments in which they live. As described earlier, an agent probabilistically selects one objective out of n, selects the classifiers that match the condition of the detector, and selects one classifier to decide its action. To reflect the requirements of multiple environments, its action for one objective is reflected in all the environments; that is, the action changes the status of every environment. Each agent evaluates the effect of its action on the environment associated with the objective it has selected, where the effects of all agents' actions are averaged. That is, the effects of all agents in the same class on that environment are averaged. However, in this scheme, the other agents do not always act for the same objective. Thus, the agents evaluate their actions based on the resulting environment, which may also reflect the effects of other agents with different objectives.

This method may seem invalid; however, we believe this evaluation is adequate, because agents see the other agents' actions subjectively, through their own objectives, and judge their surrounding environments by their internal models. Although we evaluate the results of actions per agent, this relative evaluation adapts to the dynamic changes of the environment in which the agent lives. If an agent evaluated its actions absolutely, it would keep judging an action as suitable even after the environment had changed to a state in which that action was no longer suitable. Relative evaluation is therefore a reasonable way to adapt to the dynamic changes of environments in which various agents act with different objectives.

The proposed system has no explicit global objective function; each agent individually has its own. The environments the agents live in can be changed to states they never want, because the environments are shared and affected by other classes of agents that may act in completely different ways. A realistic tactic for agents in such environments is not to aim at one target state as an ideal. An agent should compromise with the environment it lives in, and it may follow a better result that other agents have attained. From this point of view, the relative evaluation method is flexible.


Figure 2: Extension of OCS to Multi-Class Agents (Environment A and Environment B)

The score calculated by this evaluation is reflected in the strength for the corresponding objective in the classifier; it is not reflected in the other n − 1 strengths.
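A minimal sketch of this relative evaluation, under stated assumptions: the environment tied to objective k is a single number, the class's agents change it by their averaged effect while other classes may shift it further (an additive model we assume), and the score is added directly to the k-th strength, whereas OCS would distribute it through profit sharing. Function names are hypothetical.

```python
from statistics import mean
from typing import List, Sequence


def environment_after_step(status: float, class_effects: Sequence[float],
                           other_classes_delta: float = 0.0) -> float:
    """Status of the environment tied to objective k after one step: the
    averaged effect of this class's agents plus whatever other classes did
    to the same environment (hypothetical additive model)."""
    return status + mean(class_effects) + other_classes_delta


def credit_selected_objective(strengths: List[float], k: int,
                              status_before: float, status_after: float) -> float:
    """Relative evaluation: the score is the change of environment k, not its
    distance to a fixed target. Only strengths[k] is updated; the other
    n - 1 strengths are left untouched."""
    score = status_after - status_before
    strengths[k] += score
    return score
```

Scoring the change rather than the absolute status is what lets an action that used to help be penalized once the shared environment has moved on.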

Methods for Organizational Learning

We prepare two organizational learning methods for agents in the same class.

Copying Action Parts of a Classifier. When an agent's actions result in a bad status, the action parts of its classifiers are replaced by other actions that have produced good results so far. With this method, an agent avoids repeating a mistake in the same situation. The adopted actions may not have been produced under the same objective, so we call this method "Copy of Actions (without normative objective)".

Copying Classifiers or Rules. The purpose of copying rules is to share success stories among all agents. When an agent has succeeded in its actions, it offers the classifier sets that produced the success to the collective knowledge, which serves as a shared place or blackboard. When applying GA operations, all agents adopt those classifiers as elite ones. By this method, agents acquire, as individual knowledge, rules that will be able to succeed in the same situation. We call this method "Copy of Rules". Classifier sets to share are prepared for each objective of the corresponding agent class. The ratio of the classifiers that an agent adopts for each objective follows its weights for the objectives.

Ecological Marketing Simulator

Ecological Marketing is a general marketing method in which a supplier appeals to consumers by showing that it, or its products, address ecological problems. We have modeled Ecological Marketing very simply and simulated it in order to validate the effectiveness of the architecture discussed above. The objective of the Eco-marketing Simulator is to find stable conditions under which both the supplier and consumer classes of agents survive, through co-evolving processes in which they acquire knowledge from each other.

Our agent model of Ecological Marketing is summarized as follows.

• Agent classes: "Consumers" and "Suppliers"

• Environments that agents live in: "Economy-oriented" and "Ecology-oriented"

• Objectives of the Supplier class: "earn much money" and "correspond to ecology" in selling their products

• Objectives of the Consumer class: "buy cheaper products" and "correspond to ecology" in buying the products

Between the two objectives of supplier agents, there is a constraint: the more a supplier corresponds to ecology, the higher the cost of its products. Each supplier can set the price of its product as it likes. Suppliers and consumers hold a "cash position" and an "ecological score" as internal variables. Their actions change these variables according to the following rules.

(1) Case of Suppliers

• Cash position: Increases or decreases with sales. A supplier must produce at least 2 products, so if it sells fewer than 2, the supplier loses the cost of the remaining products.

• Ecological score: A supplier gets a score equal to the "ecology correspondence ratio of a product" multiplied by the "number of sold products". However, if a supplier sells fewer than 2, it receives a penalty score.

(2) Case of Consumers

• Cash position: A consumer pays the price of the product it bought, and receives the mean price of all products.

• Ecological score: A consumer gets the ecology correspondence ratio of the product it bought.
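These update rules can be written as two small functions. The paper does not give the cost function or the size of the penalty, so `unit_cost` (linear in the ecology correspondence ratio) and `penalty=1.0` are hypothetical; only the minimum production of 2 and the structure of each rule come from the text.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

MIN_PRODUCTION = 2  # from the text: a supplier must produce at least 2 products


@dataclass(frozen=True)
class Product:
    price: float
    eco_ratio: float  # "ecology correspondence ratio", assumed to lie in [0, 1]


def unit_cost(eco_ratio: float, base_cost: float = 1.0) -> float:
    """Hypothetical cost model: the more ecological, the more expensive."""
    return base_cost * (1.0 + eco_ratio)


def supplier_update(cash: float, eco_score: float, product: Product, sold: int,
                    penalty: float = 1.0,
                    base_cost: float = 1.0) -> Tuple[float, float]:
    produced = max(sold, MIN_PRODUCTION)  # unsold units are a sunk cost
    cash += product.price * sold - unit_cost(product.eco_ratio, base_cost) * produced
    eco_score += product.eco_ratio * sold
    if sold < MIN_PRODUCTION:
        eco_score -= penalty  # penalty size is not given; assumed
    return cash, eco_score


def consumer_update(cash: float, eco_score: float, bought: Product,
                    all_products: Sequence[Product]) -> Tuple[float, float]:
    mean_price = sum(p.price for p in all_products) / len(all_products)
    return cash - bought.price + mean_price, eco_score + bought.eco_ratio
```

For example, a supplier selling one unit at price 3.0 with ratio 0.5 still pays for two units (2 × 1.5), so its cash is unchanged and its ecological score ends at 0.5 − 1.0 = −0.5.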

To implement the agents, the condition parts of the classifiers contain the following information: cash position, ecological score, results of previous sales activities (for suppliers only), results of previous purchase activities (for consumers only), the average money of all agents, the average ecological score of all agents, and so on. The action parts of the classifiers contain the ecological score and the margin of the products (for suppliers), and the price of products and the ecological score (for consumers). The length of one classifier is 50 bits for suppliers and 40 bits for consumers.

Each agent has 5,000 classifiers in its internal model. The parameters of the GA operations are as follows: GA operations are applied every 500 iterations, the ratio for elite classifier selection is 0.2, the crossover ratio is 1.0, and the mutation ratio is 0.001.
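For reference, the reported parameters can be collected in one configuration object. The field names are hypothetical, and reading the mutation ratio as a per-bit flip probability is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EcoMarketingConfig:
    """Simulation parameters as reported in the text; field names are ours."""
    supplier_rule_bits: int = 50
    consumer_rule_bits: int = 40
    classifiers_per_agent: int = 5000
    ga_interval: int = 500        # GA operations every 500 iterations
    elite_ratio: float = 0.2
    crossover_rate: float = 1.0
    mutation_rate: float = 0.001  # assumed to be a per-bit flip probability

    def n_elites(self) -> int:
        return round(self.classifiers_per_agent * self.elite_ratio)

    def is_ga_step(self, iteration: int) -> bool:
        return iteration > 0 and iteration % self.ga_interval == 0
```

Under that reading, a 50-bit supplier classifier receives on average 50 × 0.001 = 0.05 mutated bits per GA application, and 0.2 × 5,000 = 1,000 classifiers per agent are kept as elites.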

Preliminary Experiments: Only Supplier Agents Learn

For the first experiment, we have implemented the consumer class with only the following two heuristic rules; that is, we have not applied GA operations to consumer agents. On the other hand, supplier agents are implemented by OCS; thus, their actions evolve.


Figure 3: Number of Successful Agents in Pre-Experiments (Average of 5 experiments)

• Rule 1: First, a consumer selects a product randomly; then, if there is a product that fits ecology better and whose price is not higher than 110% of the first one, it selects that product.

• Rule 2: First, a consumer selects a product randomly; then, if there is a product whose price is less than 90% of the first one, it selects that product.

The former heuristic serves the consumer's objective to correspond to ecology, and the latter serves the consumer's objective to buy a cheaper product. In this experiment, the weights for both objectives are set to 0.5. We have removed the variety among agents to measure the pure effect of the organizational learning methods.
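The two consumer heuristics can be sketched as follows. When several products qualify, the text does not say which one is chosen, so the sketch assumes the most ecological (Rule 1) or the cheapest (Rule 2); using the ecology weight of 0.5 to decide which rule to apply mirrors the objective selection described earlier but is also an assumption.

```python
import random
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Product:
    name: str
    price: float
    eco_ratio: float


def rule1_ecology(products: Sequence[Product], first: Product) -> Product:
    """Rule 1: switch to a more ecological product costing at most 110% of `first`."""
    better = [p for p in products
              if p.eco_ratio > first.eco_ratio and p.price <= 1.10 * first.price]
    return max(better, key=lambda p: p.eco_ratio) if better else first


def rule2_cheaper(products: Sequence[Product], first: Product) -> Product:
    """Rule 2: switch to a product costing less than 90% of `first`."""
    cheaper = [p for p in products if p.price < 0.90 * first.price]
    return min(cheaper, key=lambda p: p.price) if cheaper else first


def consumer_choice(products: Sequence[Product], rng: random.Random,
                    w_ecology: float = 0.5) -> Product:
    first = rng.choice(products)  # both rules start from a random product
    rule = rule1_ecology if rng.random() < w_ecology else rule2_cheaper
    return rule(products, first)
```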

Before this experiment, we carried out the simulation without organizational learning mechanisms; in that case, all suppliers went bankrupt. That is, the prices of products fell to the lowest level, equal to their production cost. Accordingly, we must apply organizational learning methods to the suppliers' objective of earning much money.

To overcome these results, we designed the following two organizational learning methods. When a supplier goes bankrupt, the "Copy of Actions" method is executed: the last 5 best actions are copied to the supplier's corresponding bad classifiers. When a supplier succeeds, that is, when its money position exceeds the upper limit, the supplier provides the last 5 classifiers it applied, which caused its last 5 actions, to a shared place. These successful rules can be accepted as elites by all suppliers in the GA operations, but a supplier does not accept the rules it has provided itself. The number of these success rules is limited to 40 × 5.

We show the effects of the organizational learning methods in Figures 3 and 4. 'Success' in these figures means that the number of times the money position exceeds the upper limit is more than 9 times the number of times it falls below the lower limit. 'Lose' means that the money position falls below the lower limit more often than it exceeds the upper limit. We measured both every 2,000 iterations of the simulation.

Figure 4: Number of Lost Agents in Pre-Experiments (Average of 5 experiments)

Figure 5: Results of Ecological Actions Taken by Suppliers in Pre-Experiments (Average of 5 experiments)

Figure 6: Results of Economical Actions Taken by Suppliers in Pre-Experiments (Average of 5 experiments)

This result shows that "Copy of Actions" is effective for improving the initial activities of agents, and that "Copy of Rules" is also effective for improving their final states.

We observe the effects of these methods by examining the states of the environments as fed back by the agents' actions. Figures 5 and 6 show how the environments change under the suppliers' "ecological actions" and "economical actions". These results show that our methods work well from this point of view.

Automated Modification of Actions in Ecological Marketing Simulation

From the feedback of the suppliers' actions to the environments in Figures 5 and 6, we have found that "ecological actions" increase satisfactorily, but "economical actions" rather decrease. This is because the suppliers correspond too much to ecological issues, at the sacrifice of earning much money. That is, the suppliers' weights for the two objectives are not suitable for their multi-objective problems. Therefore, to improve the situation, we carry out a second series of experiments that modifies the weights of their objectives dynamically, using the results of the feedback of the suppliers' actions to the environment.

Figure 7: Results of Agents' Actions with "Modification of Actions"

Here, we examine a method that (1) compares the results of the agents' ecological and economical activities, and then (2) feeds the results back into the next simulation steps. When the results increase for one objective and decrease for the other, we decrease the weight for the objective that increased. We call this method "Modification of Actions". With this method, we have improved the suppliers' multi-objective solutions, as Figure 7 shows. Here, we set the unit step for the weight change at 0.05.
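With two objectives, one step of "Modification of Actions" can be sketched as below. The 0.05 step is from the text; treating the two weights as summing to 1 and clipping them to [0, 1] are our assumptions, and the function name is hypothetical.

```python
STEP = 0.05  # unit step for the weight change, as stated in the text


def modify_weights(w_ecology: float, d_ecological: float, d_economical: float,
                   step: float = STEP) -> tuple:
    """'Modification of Actions' for a two-objective agent. If one objective's
    result rose while the other fell, lower the weight of the one that rose.
    Weights are assumed to sum to 1 and are clipped to [0, 1]."""
    if d_ecological > 0 and d_economical < 0:
        w_ecology -= step
    elif d_economical > 0 and d_ecological < 0:
        w_ecology += step
    w_ecology = min(1.0, max(0.0, w_ecology))
    return w_ecology, 1.0 - w_ecology
```

When both results move in the same direction, the weights are left alone, so the adjustment only reacts to the tradeoff the text describes.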

We observe that both feedback results increase, even though the results of ecological actions are somewhat worse than in the previous experiment. That is, this method provides a good organizational learning mechanism for the multi-objective problem: the supplier agents have learned the best weights for the objectives in an on-the-fly manner.

Co-Evolving Multiple Agents in Ecological Marketing Simulation

Co-evolution is the evolution of different kinds of living things through their mutual interactions [Mitchell 96]. In the framework of our research, co-evolution means the evolutionary acquisition of better knowledge between the supplier agent class and the consumer agent class. Within the same class, agents learn cooperatively; across classes, they learn competitively.

Figure 8: Results of Agents' Actions with "Interventions for Actions"

The agents would like to benefit each other by cooperating toward their common objective corresponding to "ecology", and by competitive learning, that is, competing over the conflict of interests between suppliers, who want to earn "money", and consumers, who want to buy cheaper "products". This co-evolutionary learning is attained by adapting to the changes of the environments caused by the feedback of the agents' actions. We simulate Ecological Marketing with supplier agents and consumer agents, both of which are implemented by the classifier system mechanisms described earlier.

In the preliminary experiments, suppliers go bankrupt even if they use the organizational learning methods "Copy of Actions" and "Copy of Rules"; consumers win a runaway victory in their competition. In the real world, this result would lead to an insufficient supply of products, which in turn would harm consumers. So consumers should make concessions that let suppliers earn enough money not to go bankrupt.

To put such concessions into practice in our simulation, we extend the "Modification of Actions" method to co-evolutionary learning between agent classes. When suppliers cannot improve the results of their economical actions by themselves, they intervene with consumers to lower the consumers' weight for the economical objective. We call this method "Intervention of Actions". We simulate the co-evolution of multi-class agents in Ecological Marketing with the following parameters: the weight for each objective is 0.5, and the variety of rules (the margin of the weights for objectives) is 0.2. As a result, products settle to highly ecological, low-priced ones, and suppliers earn enough money not to go bankrupt, as Figure 8 shows.
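A minimal sketch of "Intervention of Actions" under stated assumptions: the paper does not give the size of the intervention, so the sketch reuses the 0.05 step of "Modification of Actions", and it reads the 0.2 variety margin as drawing each agent's weight uniformly from 0.5 ± 0.2. Both readings, and all names, are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Consumer:
    w_economy: float  # weight on "buy cheaper products"; ecology gets 1 - w_economy


def init_weights(n: int, base: float = 0.5, margin: float = 0.2,
                 rng=None) -> List[float]:
    """One hypothetical reading of the stated parameters: each agent's weight
    is drawn uniformly from [base - margin, base + margin]."""
    rng = rng or random.Random()
    return [rng.uniform(base - margin, base + margin) for _ in range(n)]


def intervene(suppliers_improved: bool, consumers: List[Consumer],
              step: float = 0.05) -> None:
    """'Intervention of Actions': if suppliers could not improve their
    economical results by themselves, every consumer's weight on the
    economical objective is lowered by `step` (clipped at 0)."""
    if suppliers_improved:
        return
    for c in consumers:
        c.w_economy = max(0.0, c.w_economy - step)
```

The design mirrors "Modification of Actions", except that the weight being lowered belongs to the other class, which is what turns a single-class adjustment into a co-evolutionary one.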


Concluding Remarks

This paper has proposed a new architecture to simulate complex agent behaviors, similar to those in our real world, that may involve multiple objectives, varieties in characteristics, multiple classes, and/or multiple environments. Such situations are quite common in recent distributed information systems. We have developed such an agent model with Learning Classifier Systems from the Genetics-Based Machine Learning literature and have validated the effectiveness of the organizational learning methods employed in the model. Our proposed methods, "Copy of Actions", "Copy of Rules", and "Modification of Actions", have shown their effectiveness in the multiagent organizational learning of actions suited to multiple objectives. We have also shown the capability of the "Intervention of Actions" method to co-evolve multi-class agents.

Future research includes (1) exploring further applications of the proposed agent modeling techniques to other information system problems, (2) extending the architecture to examine the effects of the variety of agents on the results of the simulation, and (3) developing more novel organizational learning methods for agent-based modeling.

References

AAAI Spring Symposium Series 1996: Adaptation, Coevolution and Learning in Multiagent Systems. March 25-27, 1996.

Argyris, C., Schön, D. A. Organizational Learning, Addison-Wesley, 1978.

Axelrod, R. The Complexity of Cooperation: Agent-Based Models of Competition and Collaboration, Princeton University Press, 1997.

Axtel, R. L. Why Agents? On the Varied Motivations for Agent Computing in the Social Sciences, Brookings Institution Working Paper No. 17, 2000.

Bull, L., Fogarty, T. C. Evolutionary Computing in Cooperative Multi-Agent Systems, in [AAAI 96], pp. 22-27, 1996.

Carley, K. M., Gasser, L. Computational Organization Theory, in Weiss, G. (ed.), Multiagent Systems: A Modern Approach to Distributed Artificial Intelligence, MIT Press, 1999, pp. 299-330.

Duncan, R., Weiss, A. Organizational Learning: Implications for Organizational Design, in Staw, B. M. (ed.), Research in Organizational Behavior, Vol. 1, JAI Press, 1979, pp. 75-123.

Epstein, J. M., Axtell, R. Growing Artificial Societies: Social Science from the Bottom Up, MIT Press, 1996.

Goldberg, D. E. Genetic Algorithms in Search, Optimization, and Machine Learning, Addison-Wesley, 1989.

Grefenstette, J., Daley, R. Methods for Competitive and Cooperative Co-evolution, in [AAAI 96], pp. 45-50, 1996.

Haynes, T., Sen, S. Learning Cases to Resolve Conflicts and Improve Group Behavior, International Journal of Human-Computer Studies, Vol. 48, No. 1, pp. 31-49, 1997.

Kim, D. The Link between Individual and Organizational Learning, Sloan Management Review, Fall 1993, pp. 37-50.

Lanzi, P. L., Stolzmann, W., Wilson, S. W. (eds.) Advances in Learning Classifier Systems: Third International Workshop, IWLCS 2000, Springer LNAI 1996, 2001.

Mitchell, M. An Introduction to Genetic Algorithms, The MIT Press, 1996.

Rosin, C. D., Belew, R. K. New Methods for Competitive Coevolution, Evolutionary Computation, Vol. 5, No. 1, pp. 1-29, 1997.

Schaffer, J. D. Multiple Objective Optimization with Vector Evaluated Genetic Algorithms, Proceedings of the First International Conference on Genetic Algorithms and Their Applications, Lawrence Erlbaum Associates, 1985, pp. 93-100.

Sikora, R., Shaw, M. J. A Double-Layered Learning Approach to Acquiring Rules for Classification: Integrating Genetic Algorithm with Similarity-Based Learning, ORSA Journal on Computing, Vol. 6, No. 2, pp. 174-187, 1994.

Takadama, K., Terano, T., Shimohara, K. Agent-Based Model Toward Organizational Computing: From Organizational Learning to Genetics-Based Machine Learning, Proceedings of IEEE SMC'99, Vol. II, 1999-a, pp. 604-609.

Takadama, K., Terano, T., Shimohara, K., Hori, K., Nakasuka, N. Making Organizational Learning Operational: Implications from Learning Classifier System, Computational and Mathematical Organization Theory, Vol. 5, No. 3, 1999-b, pp. 229-252.

Takadama, K., Terano, T., Shimohara, K. Learning Classifiers Meet Multiagent Environments, in Lanzi, P. L., Stolzmann, W., Wilson, S. W. (eds.), Advances in Learning Classifier Systems: Third International Workshop, IWLCS 2000, Springer LNAI 1996, pp. 192-210, 2001.

Takadama, K., Terano, T., Shimohara, K. Nongovernance Rather Than Governance in a Multiagent Economic Society, IEEE Transactions on Evolutionary Computation, Vol. 5, No. 5, pp. 535-545, 2001.
