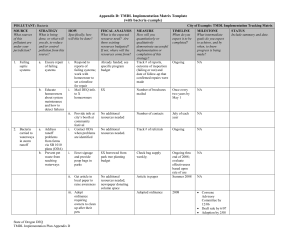

Measuring Water Quality Improvements:
