From: AAAI Technical Report SS-94-01. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Towards Combined Attention Focusing and Anomaly Detection in Medical Monitoring

Richard J. Doyle

Artificial Intelligence Group

Jet Propulsion Laboratory

California Institute of Technology

Pasadena, CA 91109-8099

rdoyle@aig.jpl.nasa.gov

Abstract

Any attempt to introduce automation into the monitoring of complex physical systems must start from a robust anomaly detection capability. This task is far from straightforward, for a single definition of what constitutes an anomaly is difficult to come by. In addition, to make the monitoring process efficient, and to avoid the potential for information overload on human operators, attention focusing must also be addressed. When an anomaly occurs, more often than not several sensors are affected, and the partially redundant information they provide can be confusing, particularly in a crisis situation where a response is needed quickly.

The focus of this paper is a new technique for attention focusing. The technique involves reasoning about the distance between two frequency distributions, and is used to detect both anomalous system parameters and "broken" causal dependencies. These two forms of information together isolate the locus of anomalous behavior in the system being monitored.

1 Introduction

Mission Operations personnel at NASA have the task of determining, from moment to moment, whether a space platform is exhibiting behavior which is in any way anomalous, which could disrupt the operation of the platform, and in the worst case, could represent a loss of ability to achieve mission goals. A traditional technique for assisting mission operators in space platform health analysis is the establishment of alarm thresholds for sensors, typically indexed by operating mode, which summarize which ranges of sensor values imply the existence of anomalies. Another established technique for anomaly detection is the comparison of predicted values from a simulation to actual values received in telemetry. However, experienced mission operators reason about more than alarm threshold crossings and discrepancies between predicted and actual values to detect anomalies: they may ask whether a sensor is behaving differently than it has in the past, or whether a current behavior may lead to a rapidly developing alarm sequence, the particular bane of operators.

Our approach to introducing automation into real-time systems monitoring is based on two observations: 1) mission operators employ multiple methods for recognizing anomalies, and 2) mission operators do not and should not interpret all sensor data all of the time. We seek an approach for determining from moment to moment which of the available sensor data is most informative about the presence of anomalies occurring within a system. The work reported here extends the anomaly detection capability in Doyle's SELMON monitoring system [5, 6] by adding an attention focusing capability.

Other model-based monitoring systems include Dvorak's MIMIC, which performs robust discrepancy detection for continuous dynamic systems [7], and DeCoste's DATMI, which infers system states from incomplete sensor data [3]. This work also complements other work within NASA on empirical and model-based methods for fault diagnosis of aerospace platforms [1, 8, 9, 12].

2 Background: The SELMON Approach

How does a human operator or a machine observing a complex physical system decide when something is going wrong? Abnormal behavior is always defined as some kind of departure from normal behavior. Unfortunately, there appears to be no single, crisp definition of "normal" behavior. In the traditional monitoring technique of limit sensing, normal behavior is predefined by nominal value ranges for sensors. A fundamental limitation of this approach is the lack of sensitivity to context. In the other traditional monitoring technique of discrepancy detection, normal behavior is obtained by simulating a model of the system being monitored. This approach, while avoiding the insensitivity to context of the limit sensing approach, has its own limitations. The approach is only as good as the system model. In addition, normal system behavior typically changes with time, and the model must continue to evolve. Given these limitations, it can be difficult to distinguish genuine anomalies from errors in the model.

Noting the limitations of the existing monitoring techniques, we have developed an approach to monitoring which is designed to make the anomaly detection process more robust, that is, to reduce the number of undetected anomalies (false negatives). Towards this end, we introduce multiple anomaly models, each employing a different notion of "normal" behavior.

2.1 Empirical Anomaly Detection Methods

In this section, we briefly describe the empirical methods that we use to determine, from a local viewpoint, when a sensor is reporting anomalous behavior. These measures use knowledge about each individual sensor, without knowledge of any relations among sensors.

Surprise

An appealing way to assess whether current behavior is anomalous or not is via comparison to past behavior. This is the essence of the surprise measure. It is designed to highlight a sensor which behaves other than it has historically. Specifically, surprise uses the historical frequency distribution for the sensor in two ways: to determine the likelihood of the given current value of the sensor (unusualness), and to examine the relative likelihoods of different values of the sensor (informativeness). It is those sensors which display unlikely values when other values of the sensor are more likely which get a high surprise score. Surprise is not high if the only reason a sensor's value is unlikely is that there are many possible values for the sensor, all equally unlikely.
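The text does not give a formula for surprise, so the following is only a minimal sketch under an assumed scoring rule: unusualness is taken as one minus the historical probability of the current bin, and informativeness as one minus the normalized entropy of the historical distribution. The name `surprise_score` and the product form are illustrative assumptions, not SELMON's actual definition.

```python
import math

def surprise_score(hist_counts, current_bin):
    """Hypothetical surprise score in [0, 1] (assumed form, not SELMON's).

    hist_counts: historical counts per value bin for one sensor.
    current_bin: index of the bin holding the sensor's current value.
    """
    total = sum(hist_counts)
    n = len(hist_counts)
    if total == 0 or n < 2:
        return 0.0
    probs = [c / total for c in hist_counts]
    # Unusualness: the current value was rarely seen historically.
    unusualness = 1.0 - probs[current_bin]
    # Informativeness: low when all values are about equally likely.
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    informativeness = max(0.0, 1.0 - entropy / math.log(n))
    return unusualness * informativeness
```

Under this assumed rule, a rare value in a sharply peaked history scores high, while any value in a flat history scores near zero, which matches the qualitative behavior described above.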

Alarm

Alarm thresholds for sensors, indexed by operating mode, typically are established through an off-line analysis of system design. The notion of alarm in SELMON extends the usual one bit of information (the sensor is in alarm or it is not), and also reports how much of the alarm range has been traversed. Thus a sensor which has gone deep into alarm gets a higher score than one which has just crossed over the alarm threshold.
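A graded alarm score of this kind might be sketched as follows. The parameters `limit_lo` and `limit_hi` (the outer ends of the alarm ranges) and the linear scaling are assumptions made for illustration; the text only states that the score reflects how much of the alarm range has been traversed.

```python
def alarm_score(value, alarm_lo, alarm_hi, limit_lo, limit_hi):
    """Hypothetical graded alarm score in [0, 1].

    Assumes limit_lo < alarm_lo < alarm_hi < limit_hi. Returns 0 inside the
    nominal range [alarm_lo, alarm_hi]; otherwise the fraction of the alarm
    range traversed so far, clipped to 1.
    """
    if value > alarm_hi:
        return min((value - alarm_hi) / (limit_hi - alarm_hi), 1.0)
    if value < alarm_lo:
        return min((alarm_lo - value) / (alarm_lo - limit_lo), 1.0)
    return 0.0
```

With a nominal range of 20 to 80 and outer limits of 0 and 100, a reading of 90 is halfway through the high alarm range and a reading of 15 is a quarter of the way through the low one.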

Alarm Anticipation

The alarm anticipation measure in SELMON performs a simple form of trend analysis to decide whether or not a sensor is expected to be in alarm in the future. A straightforward curve fit is used to project when the sensor will next cross an alarm threshold, in either direction. A high score means the sensor will soon enter alarm or will remain there. A low score means the sensor will remain in the nominal range or emerge from alarm soon.
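As an illustration of this trend analysis, the sketch below assumes the "straightforward curve fit" is an ordinary least-squares line over recent samples. The `horizon` parameter and the way the projected crossing time is turned into a score are assumptions for illustration, not details taken from SELMON.

```python
def fit_line(times, values):
    """Least-squares line through (time, value) samples; needs 2+ distinct times."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t

def alarm_anticipation(times, values, alarm_lo, alarm_hi, horizon):
    """Hypothetical score in [0, 1]: high if alarm is imminent or persists."""
    slope, intercept = fit_line(times, values)
    now = times[-1]
    current = slope * now + intercept  # fitted value at the latest sample time
    in_alarm = current < alarm_lo or current > alarm_hi
    # Delays until the fitted line reaches each threshold, future crossings only.
    delays = []
    if slope != 0:
        for threshold in (alarm_lo, alarm_hi):
            t_cross = (threshold - intercept) / slope
            if t_cross > now:
                delays.append(t_cross - now)
    if not delays:
        # No projected crossing: the sensor stays where it is.
        return 1.0 if in_alarm else 0.0
    urgency = max(0.0, 1.0 - min(delays) / horizon)
    # In alarm, an imminent crossing means leaving alarm soon (low score).
    return 1.0 - urgency if in_alarm else urgency
```

A sensor rising steadily toward its upper threshold scores progressively higher as the projected crossing approaches, while a flat nominal sensor scores zero.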

Value Change

A change in the value of a sensor may be indicative of an anomaly. In order to better assess such an event, the value change measure in SELMON compares a given value change to historical value changes seen on that sensor. The score reported is based on the proportion of previous value changes which were less than the given value change. It is maximum when the given value change is the greatest value change seen to date on that sensor. It is minimum when no value change has occurred in that sensor.

2.2 Model-Based Anomaly Detection Methods

Although many anomalies can be detected by applying anomaly models to the behavior reported at individual sensors, robust monitoring also requires reasoning about interactions occurring in a system and detecting anomalies in behavior reported by several sensors.

Deviation

The deviation measure is our extension of the traditional method of discrepancy detection. As in discrepancy detection, comparisons are made between predicted and actual sensor values, and differences are interpreted to be indications of anomalies. This raw discrepancy is entered into a normalization process identical to that used for the value change score, and it is this representation of relative discrepancy which is reported. The deviation score for a sensor is minimum if there is no discrepancy and maximum if the discrepancy between predicted and actual is the greatest seen to date on that sensor.

Deviation only requires that a simulation be available in any form for generating sensor value predictions. However, the remaining sensitivity and cascading alarms measures require the ability to simulate and reason with a causal model of the system being monitored.

Sensitivity and Cascading Alarms

Sensitivity measures the potential for a large global perturbation to develop from current state. Cascading alarms measures the potential for an alarm sequence to develop from current state. Both of these anomaly measures use an event-driven causal simulator [2, 11] to generate predictions about future states of the system, given current state. Current state is taken to be defined by both the current values of system parameters (not all of which may be sensed) and the pending events already resident on the simulator agenda. The measures assign scores to individual sensors according to how the system parameter corresponding to a sensor participates in, or influences, the predicted global behavior. A sensor will have its highest sensitivity score when behavior originating at that sensor causes all sensors causally downstream to exhibit their maximum value change to date. A sensor will have its highest cascading alarms score when behavior originating at that sensor causes all sensors causally downstream to go into an alarm state.

2.3 Previous Results

In order to assess whether SELMON increased the robustness of the anomaly detection process, we performed the following experiment: we compared SELMON performance to the performance of the traditional limit sensing technique in selecting critical sensor subsets specified by a Space Station Environmental Control and Life Support System (ECLSS) domain expert, that is, sensors seen by that expert as useful in understanding episodes of anomalous behavior in actual historical data from ECLSS testbed operations.

The experiment asked the following specific question: How often did SELMON place a "critical" sensor in the top half of its sensor ordering based on the anomaly detection measures?

The performance of a random sensor selection algorithm would be expected to be about 50%; any particular sensor would appear in the top half of the sensor ordering about half the time. Limit sensing detected the anomalies 76.3% of the time. SELMON detected the anomalies 95.1% of the time.

These results show SELMON performing considerably better than the traditional practice of limit sensing. They lend credibility to our premise that the most effective monitoring system is one which incorporates several models of anomalous behavior. Our aim is to offer a more complete, robust set of techniques for anomaly detection to make human operators more effective, or to provide the basis for an automated monitoring capability.

The following is a specific example of the value added by SELMON. During an episode in which the ECLSS pre-heater failed, system pressure (which normally oscillates within a known range) became stable. This "abnormally normal" behavior is not detected by traditional monitoring methods because the system pressure remains firmly in the nominal range, where limit sensing fails to trigger. Furthermore, the fluctuating behavior of the sensor is not modeled; the predicted value is an averaged stable value which fails to trigger discrepancy detection. See [5, 6] for more details on these previous results in evaluating the SELMON approach.
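The "top half" criterion used in the experiment reported in this section can be sketched as a simple hit-rate computation. The function name `top_half_hit_rate`, the data layout, and the example scores in the usage note are hypothetical; they are not the ECLSS data or SELMON's evaluation code.

```python
def top_half_hit_rate(episodes):
    """Fraction of expert-designated critical sensors ranked in the top half.

    episodes: list of (scores, critical) pairs, where scores maps a sensor
    name to its anomaly score and critical is the set of sensor names the
    expert marked as critical for that episode.
    """
    hits = 0
    total = 0
    for scores, critical in episodes:
        ranked = sorted(scores, key=scores.get, reverse=True)
        top_half = set(ranked[: len(ranked) // 2])
        hits += len(critical & top_half)
        total += len(critical)
    return hits / total if total else 0.0
```

For a made-up episode with scores `{"a": 0.9, "b": 0.1, "c": 0.5, "d": 0.2}` and critical sensors `{"a", "d"}`, only `a` lands in the top half, giving a hit rate of 0.5, the level expected from random selection.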

3 Attention Focusing

A robust anomaly detection capability provides the core for monitoring, but only when this capability is combined with attention focusing does monitoring become both robust and efficient. Otherwise, the potential problems of information overload and too many false positives may defeat the utility of the monitoring system.

The attention focusing technique developed here uses two sources of information: historical data describing nominal system behavior, and causal information describing which pairs of sensors are constrained to be correlated, due to the presence of a dependency. The intuition is that the origin and extent of an anomaly can be determined if the misbehaving system parameters and the misbehaving causal dependencies can be determined.

3.1 Two Additional Measures

While SELMON runs, it computes incremental frequency distributions for all sensors being monitored. These frequency distributions can be saved as a method for capturing behavior from any episode of interest. Of particular interest are historical distributions which correspond to nominal system behavior.

To identify an anomalous sensor, we apply a distance measure, defined below, to the frequency distribution which represents recent behavior and the historical frequency distribution representing nominal behavior. We call the measure simply distance. To identify a "broken" causal dependency, we first apply the same distance measure to the historical frequency distributions for the cause sensor and the effect sensor. This reference distance is a weak representation of the correlation that exists between the values of the two sensors due to the causal dependency. This reference distance is then compared to the distance between the frequency distributions based on recent data of the same cause sensor and effect sensor. The difference between the reference distance and the recent distance is the measure of the "brokenness" of the causal dependency. We call this measure causal distance.
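The causal distance computation just described can be sketched independently of the particular distance function. In the sketch below, `dist` is any function of two distributions; taking the absolute value of the difference is an assumption, and `placeholder_dist` (a total-variation distance on equal-length distributions) is a stand-in used only to make the example runnable, not the measure defined in this paper.

```python
def causal_distance(dist, ref_cause, ref_effect, recent_cause, recent_effect):
    """Brokenness of one causal dependency (arc) under a given distance function.

    The reference distance comes from nominal historical distributions of the
    cause and effect sensors; the recent distance from their recent data.
    """
    reference = dist(ref_cause, ref_effect)
    recent = dist(recent_cause, recent_effect)
    return abs(reference - recent)  # absolute difference is an assumption

def placeholder_dist(d1, d2):
    """Stand-in distance for illustration only (total variation)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(d1, d2))
```

If the cause and effect sensors historically share the same distribution but the effect sensor has recently collapsed onto a single bin, the reference distance is zero, the recent distance is positive, and the arc is flagged in proportion to the gap.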

3.2 Desired Properties of the Distance Measure

Definea distribution D as the vector di such that

Vi, O <_di <_1

and

If our aim was only to comparedifferent frequency distributions of the same sensor, we could use a distance measure

which required the numberof partitions, or bins in the two

distributions to be equal, and the range of values covered by

the distributions to be the same. However,since our aim is

to be able to comparethe frequencydistributions of different

sensors, these conditions must be relaxed.

Wedefine two special types of frequency distribution. Let

F be the random,or flat distribution where Vi, di = l~. Let

Si be the set of "spike" distributions wheredi = 1 and Vj

i, dj=O.

3.3 The Distance Measure

The distance measure is computedby projecting the two distributions into the two-dimensional

space [f, s] in polar coordinates and taking the euclidian distance betweenthe projections.

Define the "flatness" componentf(D) of a distribution as

follows:

-d,

i=O

This is simply the sumof the bin-by-bin differences between the given distribution and F. Note that 0 < f(D) <_

Also, f(Si) --+ 1 as n -4 0o.

Define the "spikeness" components(D) of a distribution

as:

n-I

i

d

i=0

This is simplythe centroid value calculation for the distribution. The weightingfactor ¢ will be explained in a moment.

Once again, 0 < s(D) < 1.

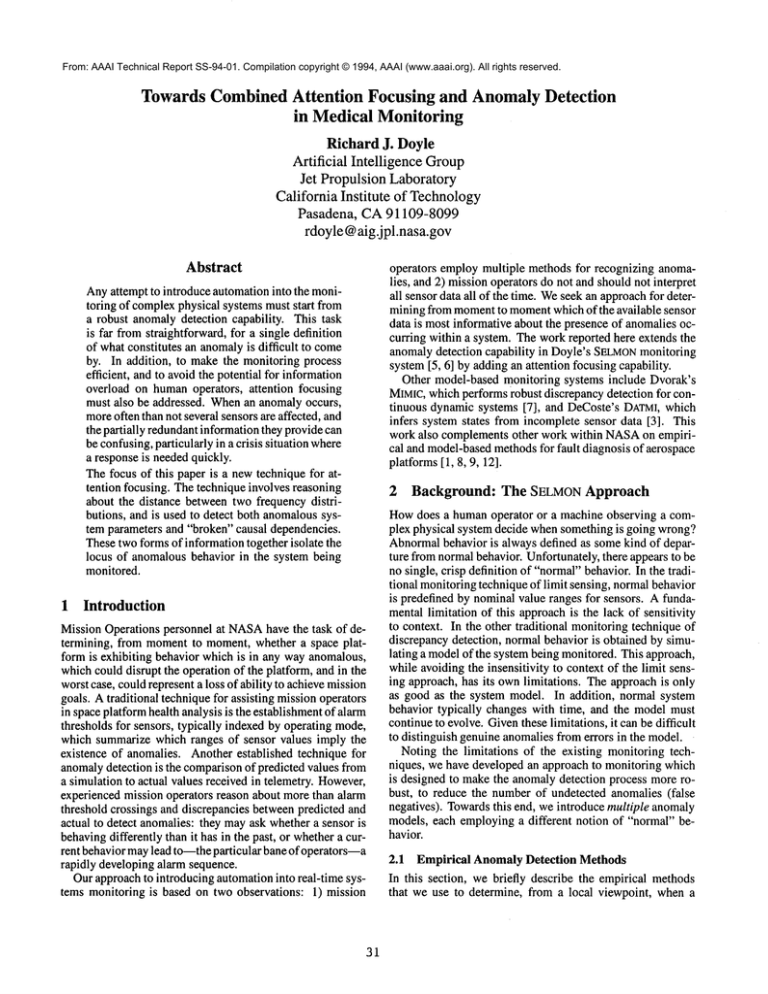

Nowtake [f, s] to be polar coordinates [r, 0]. This maps

F to the origin and the Si to points along an arc on the unit

circle. See Figure 1.

Note that we take ¢ =-y. ’~ This choice of ¢ guarantees

that A(So, S,~-1) = A(F, S0) A(F, Sn -I) = 1 and al l

other distances in the region whichis the range of A are by

inspection < 1.

Insensitivity to the numberof bins in the two distributions

and the range of values encodedin the distributions is provided

by the [f, s] projection function, which abstracts awayfrom

these properties of the distributions.

Additionaldetails on desired properties of the distance measure and howthey are satisfied by the function A maybe found

in [4].

3.4 Results

n-I

Edi

=1

i=0

For a sensor S, we assumethat the range of values for the

sensor has been partitioned into n contiguoussubrangeswhich

exhaust this range. Weconstruct a frequencydistribution as a

vector Ds of length n, wherethe value of di is the frequency

with whichS has displayed a value in the ith subrange.

33

In this section, we report on the results of applying the distribution distance measure to the task of focusing attention in monitoring. The distribution distance measure is used to identify misbehaving nodes (distance) and arcs (causal distance) in the causal graph of the system being monitored, or equivalently, to detect and isolate the extent of anomalies in the system being monitored.
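The distribution distance measure applied in these results can be sketched as below. This is an illustrative reading of Section 3.3, not the SELMON implementation: it assumes flatness is half the summed absolute bin-by-bin difference from the flat distribution (the normalization that makes a spike's flatness approach 1 as the number of bins grows), spikeness is the centroid scaled by a weighting factor of π/3, and the pair is read as polar coordinates. The function names are hypothetical.

```python
import math

PHI = math.pi / 3  # assumed weighting factor; makes spike-to-spike distance at most 1

def flatness(d):
    """Half the summed absolute difference from the flat distribution."""
    n = len(d)
    return 0.5 * sum(abs(1.0 / n - di) for di in d)

def spikeness(d):
    """Centroid of the distribution, scaled into the angle range [0, PHI]."""
    n = len(d)
    if n == 1:
        return 0.0
    return PHI * sum(i / (n - 1) * di for i, di in enumerate(d))

def project(d):
    """Map a distribution to Cartesian coordinates via polar [r, theta] = [f, s]."""
    r, theta = flatness(d), spikeness(d)
    return r * math.cos(theta), r * math.sin(theta)

def distance(d1, d2):
    """Euclidean distance between the projections of two distributions."""
    (x1, y1), (x2, y2) = project(d1), project(d2)
    return math.hypot(x1 - x2, y1 - y2)
```

Because only the projection is compared, the two distributions may have different numbers of bins: flat distributions of 4 and 8 bins both map to the origin and are at distance 0, while the first and last spike distributions over 100 bins are at distance 0.99, approaching 1 as the bin count grows.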

Figure 1: The function $\Delta(D_1, D_2)$.

Figure 2: Causal graph for the Forward Reactive Control System (FRCS) of the Space Shuttle.

Figure 3: A leak fault.

3.4.1 A Space Shuttle Propulsion Subsystem

Figure 2 shows a causal graph for a portion of the Forward Reactive Control System (FRCS) of the Space Shuttle. A full causal graph for the Reactive Control System, comprising the Forward, Left and Right RCS, was developed with the domain expert.

3.4.2 Attention Focusing Examples

SELMON was run on seven episodes describing nominal behavior of the FRCS. The frequency distributions collected during these runs were merged. Reference distances were computed for sensors participating in causal dependencies.

SELMON was then run on 13 different fault episodes, representing faults such as leaks, sensor failures and regulator failures. Two of these episodes will be examined here; results were similar for all episodes. In each fault episode, and for each sensor, the distribution distance measure was applied to the incremental frequency distribution collected during the episode and the historical frequency distribution from the merged nominal episodes. These distances were a measure of the "brokenness" of nodes in the causal graph; i.e., instantiations of the distance measure.

New distances were computed between the distributions corresponding to sensors participating in causal dependencies. The differences between the new distances and the reference distances for the dependencies were a measure of the "brokenness" of arcs in the causal graph; i.e., instantiations of the causal distance measure.

The first episode involves a leak affecting the first and second manifolds (jets) on the oxidizer side of the FRCS. The pressures at these two manifolds drop to vapor pressure. The dependency between these pressures and the pressure in the propellant tank is severed because the valve between the propellant tank and the manifolds is closed. Thus there are two anomalous system parameters (the manifold pressures) and two anomalous mechanisms (the agreement between the propellant and manifold pressures when the valve is open).

The distance and causal distance measures computed for nodes and arcs in the FRCS causal graph reflect this faulty behavior. See Figure 3. (To visualize how the distribution distance measure circumscribes the extent of anomalies, the coloring of nodes and the width of arcs in the figure are correlated with the magnitudes of the associated distance and causal distance scores.) An explanation for the apparent helium tank temperature anomaly is not available. However, we note that this behavior was present in the data for all six leak episodes.

The second episode involves an overpressurization of the propellant tank due to a regulator failure. Onboard software automatically attempts to close the valves which isolate the helium tank from the propellant tank. One of the valves sticks and remains open.

The distance and causal distance measures isolate both the misbehaving system parameters (propellant pressure and valve status indicators) and the altered relationships between the helium and propellant tank pressures and between the propellant tank pressure and the valve status indicators. Overpressurization of the propellant tank also alters the usual relation between propellant tank pressure and manifold pressures. See Figure 4.

Figure 4: A pressure regulator fault.

4 Discussion

The distance and causal distance measures based on the distribution distance measure combine two concepts: 1) empirical data alone can be an effective model of behavior, and 2) the existence of a causal dependency between two parameters implies that their values are somehow correlated. The causal distance measure constructs a model of the correlation between two causally related parameters, capturing the general notion of constraint in an admittedly abstract manner. Nonetheless, these models of constraint arising from causality provide surprising discriminatory power for determining which causal dependencies (and corresponding system mechanisms) are misbehaving. (In the distance measure for detecting misbehaving system parameters, we are simply using the degenerate constraint of expected equality between historical and recent behavior.)

Several issues need to be examined to continue the evaluation of the attention focusing technique based on the distribution distance measure and its utility in monitoring.

We need to understand the sensitivity of the technique to how sensor value ranges are partitioned. Clearly the discriminatory power of the distribution distance measure is related to the resolution provided by the number of bins and the bin boundaries. The results reported here are encouraging, for the number of bins for FRCS sensors was in many cases as low as three and in no case more than eight.

We need to understand the suitability of the technique for systems which have many modes or configurations. We would expect that the discriminatory power of the technique would be compromised if the distributions describing behaviors from different modes were merged. Thus the technique requires that historical data representing nominal behavior be separable for each mode. If there are many modes, at the very least there is a data management task. A capability for tracking mode transitions is also required. An unsupervised learning system which can enumerate system modes from historical data and enable automated classification would solve this problem nicely.

Not all distinct distributions are mapped to distinct points in the projection space $[f, s]$ by the distribution distance measure. We need to understand this limitation; in particular we need to understand whether or not distributions we wish to distinguish are in fact being distinguished. The judicious introduction of additional components (e.g., the number of local maxima in a frequency distribution) to the distribution projection space $[f, s]$ may be required to enhance discriminability.

It should be possible to describe the temporal (along with the causal/spatial) extent of anomalies by incrementally comparing recent sensor frequency distributions calculated from a "moving window" of constant length with static reference frequency distributions.
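One way such a moving window might be maintained incrementally is sketched below. The class name `WindowedDistribution`, the use of explicit bin edges, and the clamping of out-of-range values into the end bins are all assumptions for illustration; the paper proposes the idea but does not describe an implementation.

```python
import bisect
from collections import deque

class WindowedDistribution:
    """Frequency distribution over the most recent `window` sensor samples."""

    def __init__(self, edges, window):
        self.edges = list(edges)          # ascending bin boundaries, n_bins + 1 values
        self.size = window                # constant window length
        self.bins = deque()               # bin index of each sample still in the window
        self.counts = [0] * (len(self.edges) - 1)

    def _bin_index(self, value):
        # Locate the bin; out-of-range values are clamped into the end bins.
        idx = bisect.bisect_right(self.edges, value) - 1
        return min(max(idx, 0), len(self.counts) - 1)

    def add(self, value):
        idx = self._bin_index(value)
        self.bins.append(idx)
        self.counts[idx] += 1
        if len(self.bins) > self.size:
            # Drop the oldest sample so the window length stays constant.
            self.counts[self.bins.popleft()] -= 1

    def distribution(self):
        total = len(self.bins)
        if total == 0:
            return [0.0] * len(self.counts)
        return [c / total for c in self.counts]
```

At each step the current `distribution()` would be compared against the static reference distribution; the times at which the resulting distance rises and falls would then mark the temporal extent of the anomaly.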

5 Towards Applications in Medical Monitoring

The approach described in this paper has usability advantages over other forms of model-based reasoning. The overhead involved in constructing the causal and behavioral model of the system is minimal. The behavioral model is derived directly from actual data; no offline modeling is required. The causal model is of the simplest form, describing only the existence of dependencies. For the Shuttle RCS, a 198-node causal graph was constructed in a single one and one half hour session between the author and the domain expert.

This low modeling overhead holds out the promise that the SELMON approach may be suitable for applications outside the NASA mission operations arena for which it was designed, including perhaps medical monitoring.

The questions which need to be answered are: Do ample amounts of empirical data exist to describe "nominal" behavior for the measurable parameters used in medical monitoring? Can dependency-only causal graphs be easily built to describe where correlations should exist in such data? Pearl's work [10] may provide a way to construct such causal graphs if they are not easily generated by hand.

Our approach is most easily applied when the number of modes, configurations or states which need to be understood is small, for it is necessary to collect data representing nominal behavior for each mode. While recognizing my lack of expertise in this area, it would seem that the number of "nominal" modes found in medical monitoring contexts is in general less than that associated with designed physical artifacts.

Of particular concern in medical monitoring is the false alarm rate. The attention focusing approach described in this paper is meant to address this problem directly by identifying anomaly signatures which span causally related parameters, rather than stressing the possibly redundant information of anomalous behavior occurring at several individual sensors. This work, however, does not provide any silver bullet for determining how to set thresholds to define significant departures from nominal behavior, whatever model of nominal behavior is being employed.

6 Summary

We have described the properties and performance of a distance measure used to identify misbehavior at sensor locations and across mechanisms in a system being monitored. The technique enables the locus of an anomaly to be determined. This attention focusing capability is combined with a previously reported anomaly detection capability in a robust, efficient and informative monitoring system, which is being applied in mission operations at NASA.

7 Acknowledgements

The members of the SELMON team are Len Charest, Harry Porta, Nicolas Rouquette and Jay Wyatt. Other recent members of the SELMON project include Dan Clancy, Steve Chien and Usama Fayyad. Harry Porta provided valuable mathematical and counterexample insights during the development of the distance measure. Matt Barry, Dennis DeCoste and Charles Robertson also provided valuable discussion. Matt Barry served invaluably as domain expert for the Shuttle FRCS.

The research described in this paper was carried out by the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration.

References

[1] K. H. Abbott, "Robust Operative Diagnosis as Problem Solving in a Hypothesis Space," 7th National Conference on Artificial Intelligence, St. Paul, Minnesota, August 1988.

[2] L. Charest, Jr., N. Rouquette, R. Doyle, C. Robertson, and J. Wyatt, "MESA: An Interactive Modeling and Simulation Environment for Intelligent Systems Automation," Hawaii International Conference on System Sciences, Maui, Hawaii, January 1994.

[3] D. DeCoste, "Dynamic Across-Time Measurement Interpretation," 8th National Conference on Artificial Intelligence, Boston, Massachusetts, August 1990.

[4] R. J. Doyle, "A Distance Measure for Attention Focusing and Anomaly Detection in Systems Monitoring," submitted to 12th National Conference on Artificial Intelligence, Seattle, Washington, July 1994.

[5] R. J. Doyle, L. Charest, Jr., N. Rouquette, J. Wyatt, and C. Robertson, "Causal Modeling and Event-driven Simulation for Monitoring of Continuous Systems," Computers in Aerospace 9, American Institute of Aeronautics and Astronautics, San Diego, California, October 1993.

[6] R. J. Doyle, S. A. Chien, U. M. Fayyad, and E. J. Wyatt, "Focused Real-time Systems Monitoring Based on Multiple Anomaly Models," 7th International Workshop on Qualitative Reasoning, Eastsound, Washington, May 1993.

[7] D. L. Dvorak and B. J. Kuipers, "Model-Based Monitoring of Dynamic Systems," 11th International Conference on Artificial Intelligence, Detroit, Michigan, August 1989.

[8] T. Hill, W. Morris, and C. Robertson, "Implementing a Real-time Reasoning System for Robust Diagnosis," 6th Annual Workshop on Space Operations Applications and Research, Houston, Texas, August 1992.

[9] J. Muratore, T. Heindel, T. Murphy, A. Rasmussen, and R. McFarland, "Space Shuttle Telemetry Monitoring by Expert Systems in Mission Control," in Innovative Applications of Artificial Intelligence, H. Schorr and A. Rappaport (eds.), AAAI Press, 1989.

[10] J. Pearl, Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference, Morgan-Kaufmann, San Mateo, 1988.

[11] N. F. Rouquette, S. A. Chien, and L. K. Charest, Jr., "Event-driven Simulation in SELMON: An Overview of EDSE," JPL Publication 92-23, August 1992.

[12] E. A. Scarl, J. R. Jamieson, and E. New, "Deriving Fault Location and Control from a Functional Model," 3rd IEEE Symposium on Intelligent Control, Arlington, Virginia, 1988.