From: AAAI Technical Report SS-93-04. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved.

Massive Parallelism in Logic

Franz J. Kurfeß*

Department of Neural Information Processing, Faculty of Computer Science, University of Ulm, Ulm, GERMANY

The capability of drawing new conclusions from available information is still a major challenge for computer systems. Whereas in more formal areas like automated theorem proving the ever-increasing computing power of conventional systems also leads to an extension of the range of problems which can be treated, many real-world reasoning problems involve uncertain, incomplete, and inconsistent information, possibly from various sources like rule and data bases, interaction with the user, or raw data from the real world. For this kind of application, inference mechanisms based on or combined with massive parallelism, in particular connectionist techniques, show some promise. This contribution concentrates on three aspects of massive parallelism and inference: first, the potential of parallelism in logic is investigated, then a massively parallel inference system based on connectionist techniques is presented¹, and finally the combination of neural-network based components with symbolic ones as pursued in the project WINA is described.

1

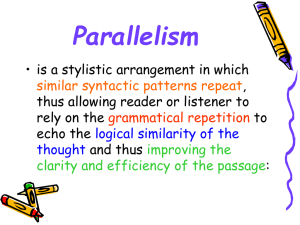

Parallelism

The exploitation

in Logic

of parallelism

in inference systems mostly concentrates on AND/OR-parallelism with Prolog

as input language, resolution as underlying calculus and either implicit parallelism or a small set of built-in

constructs for explicit parallelism. Whereasthe potential degree of parallelism in these approaches seems quite

high, its practical exploitation

is rather limited, mainly due to the complicated managementof the execution

environment. The potential of parallelism, and in particular massive parallelism, in logic is discussed here with

respect to different parts of a logical formula, namelythe whole formula, its clauses, the literals

of the clauses,

the terms, and the symbols (Kurfef~, 1991b).

Formula Level On the formula level, parallelism occurs mainly in two varieties: one is to work on different relatively large and independent pieces of the formula simultaneously, the pieces being either separate formulae, or something corresponding to modules or objects. The other variety is to apply different evaluation mechanisms to one and the same formula; these evaluation mechanisms can be different calculi suitable for different classes of formulae, or different degrees of accuracy ranging from a restriction to the propositional structure of the formula (thus neglecting the information contained in the terms), over incomplete unification (weak unification, unification without occur check) to a full treatment of the predicate logic formula. This variety also comprises some reduction techniques, aiming at a smaller search space while maintaining the essential properties of the formula. Another instance of parallelism on the formula level are spanning matings (Bibel, 1987b). A spanning mating is a set of connections of the formula which - if it is unifiable - represents a solution; thus spanning matings are related to OR-parallelism, but take into account the overall structure of the formula, which to some degree can already be done at compile time.

* This work is partially supported by the German Ministry for Research and Technology under project number 413-4001-01 IN 103 E/9 (WINA).

¹ Joint work with Steffen Hölldobler, TH Darmstadt.

Clause Level OR-parallelism (Conery, 1983; Ratcliffe and Robert, 1986; Warren, 1987; Haridi and Brand, 1988; Lusk et al., 1988; Szeredi, 1989; Kalé, 1989) occurs on the clause level: alternative definitions of clauses are evaluated at the same time. In the reduction-based evaluation mechanisms, OR-parallelism is exploited at runtime. This creates a considerable overhead for the management of the variable bindings associated with the alternative solution attempts. In addition, other reduction techniques may be applied on the clause level.
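The binding overhead of OR-parallelism can be illustrated with a minimal Python sketch. It is not taken from any of the cited systems: the `parent` facts, the `make_clause` helper, and the use of a thread pool are assumptions chosen for illustration only. Each alternative clause works on its own private copy of the bindings, which is exactly the management cost mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical knowledge base: three alternative clauses for parent(X, Y).
FACTS = [("tom", "bob"), ("tom", "liz"), ("bob", "ann")]

def make_clause(fact):
    """Build one alternative clause that tries to extend a set of bindings."""
    def clause(bindings):
        env = dict(bindings)              # private copy per alternative
        for var, value in zip(("X", "Y"), fact):
            if env.setdefault(var, value) != value:
                return None               # this alternative fails
        return env
    return clause

CLAUSES = [make_clause(f) for f in FACTS]

def solve_or_parallel(bindings):
    """Try all alternative clauses at once and keep the successful ones."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda c: c(bindings), CLAUSES))
    return [env for env in results if env is not None]

solutions = solve_or_parallel({"X": "tom"})
```

Because every alternative copies the incoming bindings, no backtracking is needed, at the price of one environment per alternative.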

Literal Level The literal level contains AND-parallelism and routes. AND-parallelism refers to the simultaneous evaluation of multiple subgoals in a clause. The problem here is that there might be variables appearing in more than one subgoal. All instances of one variable in a clause, however, must have the same value. Thus, if subgoals sharing variables are evaluated in parallel it must be guaranteed that the instantiations of these variables are consistent. On one hand this causes some complications during the execution, since either communication has to take place between processing elements working on subgoals with shared variables, or the bindings of different instances of a shared variable must be checked for consistency after the evaluation of a subgoal. On the other hand, shared variables can be used nicely for synchronization purposes, which is used in a family of AND-parallel logic programming languages with PARLOG (Clark, 1988; Foster and Taylor, 1987; Gregory, 1987), Concurrent PROLOG (Shapiro, 1988) and GHC and its derivatives (Ueda, 1985) as exponents. Routes are based on a static grouping of connected literals from different clauses (Ibañez, 1988; Kurfeß, 1991b). In contrast to AND-parallelism, where subprocesses are spawned off dynamically as soon as multiple subgoals are encountered in a clause, routes are identified as far as possible during compile time, and only expanded at run-time if necessary.
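The second option for AND-parallelism described above, checking the bindings of shared variables for consistency after the subgoals have been evaluated independently, can be sketched in Python as follows. The function name `join_consistent` and the example clause body are hypothetical and serve only as an illustration.

```python
from itertools import product

def join_consistent(solutions_a, solutions_b):
    """Combine independently computed subgoal solutions, keeping only
    the pairs that agree on every shared variable."""
    combined = []
    for a, b in product(solutions_a, solutions_b):
        shared = a.keys() & b.keys()
        if all(a[v] == b[v] for v in shared):
            combined.append({**a, **b})
    return combined

# Hypothetical clause body: parent(X, Y), parent(Y, Z); the subgoals share Y.
parent_xy = [{"X": "tom", "Y": "bob"}, {"X": "tom", "Y": "liz"}]
parent_yz = [{"Y": "bob", "Z": "ann"}]
grandparents = join_consistent(parent_xy, parent_yz)
```

The check is simple, but it runs over all pairs of partial solutions, which indicates why shared variables complicate AND-parallel execution.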

Term Level Of high interest with respect to massive parallelism is the term level; this kind of parallelism is often referred to as unification or term parallelism. Again we can differentiate between two varieties: to treat different terms or pieces of terms simultaneously, or to apply different operations on the same terms. In the first case, pairs of terms can be treated as they emerge during the proof process, or large term structures can be separated into relatively independent substructures (this includes streams, for example). In the second case, unification can be split up into separate phases (singularity, decomposition, occur check). Due to the stepwise evaluation scheme for resolution, the size of terms is rather small, and term parallelism does not look very promising. This is different for approaches like the connection method or similar ones (Wang et al., 1991), where the terms of substantial parts of the formula are combined into large term structures, which then can be treated in parallel.

Symbol Level The application of operations to the components of data structures is the underlying concept for data parallelism (Hillis and Steele, 1986; Waltz and Stanfill, 1988; Fagin, 1990): one and the same operation is executed in a SIMD way on each component of a data structure. This only is useful if there are regular data structures composed of a large number of elements of the same (or a closely related) type. The usual representation of data in logic programming languages like PROLOG does not lead to a natural exploitation of data parallelism; there are approaches, however, to integrate data-parallel concepts into PROLOG (Fagin, 1990).

Subsymbolic Level Applying an alternative paradigm to the representation of symbols, namely distributed representation (Hinton, 1984), leads to another level even below the symbol level. Here the representation of a symbol cannot be localized to one particular place in memory (e.g. one memory cell), but is distributed over an ensemble of cells; one cell, on the other hand, is involved in the representation of more than one symbol. A few inference mechanisms based on connectionist systems have been proposed; some of them aim at an implementation of a logic programming language (or, equivalently, expert system rules) with connectionist methods (Pinkas, 1990; Hölldobler and Kurfeß, 1991; Pinkas, 1991c; Pinkas, 1991b; Pinkas, 1991a; Lacher et al., 1991a; Kuncicky et al., 1991; Lacher et al., 1991b; Kuncicky et al., 1992) whereas others are directed towards inference models not necessarily founded on a formal logic system (Lee et al., 1986; Barnden, 1988; Shastri, 1988; Barnden and Srinivas, 1990; Sun, 1991; Lange and Wharton, 1992; Shastri and Ajjanagadde, 1992; Sun, 1992; Sun, 1993).

2 Massive Parallelism

A lot of attention has been paid recently to the potential and the use of "massively parallel systems". This term is used in computer architecture for describing computer systems consisting of a large number of relatively simple processing elements, with the Connection Machine, specifically the CM-2 series, as prototypical instance. In a more general context, the term is also used as a theory for describing the behavior of physical systems composed of a large number of relatively simple interacting units, often in connection with neural networks to describe their mode of operation. In this section we will try to point out important aspects of massively parallel systems, the emphasis being on their use in the computer architecture field.


Characteristics for Massively Parallel Computer Systems There seems to be a general understanding about the meaning of "massive parallelism", or "massively parallel systems" in the computing community, although, to our knowledge, a concise definition has not yet been proposed (Hillyer and Shaw, 1984; Jackoway, 1984; Hillis, 1985; Potter, 1985; Uhr, 1985; Denning, 1988; Frenkel, 1986; Hillis and Steele, 1986; Thinking Machines, 1987; Aarts and Korst, 1989; Nilsson and Tanaka, 1988; Stanfill and Waltz, 1988; Tucker and Robertson, 1988; Waltz and Stanfill, 1988; Almasi and Gottlieb, 1989; Bell, 1989; MacLennan, 1989; Stolfo, 1987; Douglas and Miranker, 1990; Hennessy and Patterson, 1990; Waltz, 1990; Kalb and Moxley, 1992; Potter, 1992; Wattenberg, 1992; Journal of Parallel and Distributed Computing, 1992). At its core lies a computer system which consists of thousands of relatively simple processing elements, with a relatively dense interconnection network, operating in synchrony (SIMD mode). The term "massive parallelism" often is used as a synonym for "data parallelism" as introduced in (Hillis and Steele, 1986; Rose and Steele, 1987).

In the following we try to elucidate the characteristics of a massively parallel system with respect to the aspects of computation, communication, synchronization, and the underlying memory model. It is described mainly from a user's point of view, ideally providing a system with an unlimited number of processing elements, each connected with all others. In addition, the user should not have to worry about synchronization or access to memory. Obviously this is an idealized picture, possibly in contrast to feasibility, performance, efficiency, and other aspects.

Computation The computation aspects of a massively parallel system concentrate mainly on a large number of processing elements, virtually infinite for the user, and relatively simple processing elements. With current technology, tens of thousands of processing elements are feasible, each processing element operating on a single bit. The illusion of an infinite number of processing elements can be approached by providing "virtual" processors, implemented through time-sharing of the real units. It is unclear if the simplicity of the processing elements should be considered a fundamental requirement, or if it is a question of trade-off between the number of elements and their complexity.
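The idea of virtual processors realized through time-sharing can be illustrated with a small Python sketch. It is a sequential simulation under assumed names (`simd_step`, `physical_processors`), not a description of any particular machine: every data element is treated as one virtual processor, and the real units work through the elements in rounds.

```python
def simd_step(operation, data, physical_processors):
    """Apply one operation to every element, one element per virtual
    processor. Each round serves at most `physical_processors` elements."""
    result = list(data)
    rounds = 0
    for start in range(0, len(data), physical_processors):
        stop = min(start + physical_processors, len(data))
        for i in range(start, stop):   # conceptually simultaneous on the real units
            result[i] = operation(data[i])
        rounds += 1
    return result, rounds

# Ten virtual processors mapped onto four (assumed) physical units.
values, rounds = simd_step(lambda x: x + 1, list(range(10)), physical_processors=4)
```

The number of rounds grows with the ratio of virtual to physical processors, which is the price for the illusion of an unlimited number of processing elements.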

Communication From a user's point of view, desirable communication properties of a massively parallel system are a dense interconnection scheme, virtually fully connected, with uniform communication delays. Current computers being implemented as electronic devices, the only realistic interconnection method for massively parallel systems seems to be a regular point-to-point serial connection to tens of neighbors. A fully interconnected network is not feasible with this technology, but the available density seems to be sufficient for large classes of problems. Automatic routing methods are usually available on the operating system level so that the user may assume full interconnectivity. Communication delays are normally not uniform, but do not seem to impose critical constraints for most applications. Optical technology might offer a substantial increase in interconnection density, but does not seem to be ripe yet for wide application.


Synchronization The coordination of activities within a massively parallel system should be supported by flexible synchronization mechanisms, transparent to the user, and problem-specific synchronization methods should be easily available. The currently prevalent synchronization method for massively parallel systems is a strictly synchronous operation of the processing elements, i.e. in one cycle each processing element executes the same operation as all others, or does nothing. Such a tight synchronization scheme does not seem to be a desirable property, but rather a consequence of available techniques and technologies. This scheme also implies the presence of a single controller, which may lead to problems with future, larger systems. Completely asynchronous operation as in MIMD systems, on the other hand, shifts the burden to the compiler or even the user, which for massive parallelism does not seem to be a viable alternative. The usage of the term massive parallelism in the other domain, the description of physical systems, often is connected with a certain amount of self-organization, where the operation of the system is loosely synchronized through the activities of the units themselves, without the need for a central controller. Whereas such a behavior certainly would be desirable for a computer as well, it is not clear at present how this could be achieved.

Memory Model The memory available in a massively parallel system as seen by the user should provide a single flat memory space, no visible memory hierarchy (cache, local memory, global memory), and uniform memory access times. These requirements clearly reflect the currently almost exclusively used way of accessing data through addresses and pointers. Accessing data according to what they represent, i.e. in content-addressable or associative memories, does have a number of advantages, especially with operations based on similarities between items in large bodies of information. In particular in connection with neural networks, associative memory access, distributed representation, and sparse encodings are often associated with massive parallelism. The Parallel Associative Network PAN (Palm and Palm, 1991) has been designed with these concepts in mind.

3 The Potential of Massive Parallelism for Logic

The goal of this section is to investigate the potential of massive parallelism for logic. The overview table in Figure 1 shows for each level of a logical formula the requirements on computation, communication, synchronization, and memory. The requirements and their corresponding entries in the table are roughly characterized as follows: Computation is low if there are a few operations to be performed in parallel; it is medium for hundreds of simultaneous operations, and high for thousands. Communication is low if at a given time, a few messages are exchanged in the system simultaneously; medium, if it is hundreds, and high for thousands. Synchronization is low if a few synchronizing actions are effective at the same moment, medium for hundreds, and high for thousands. For the memory category several characterizations can be chosen: the number of simultaneously active data elements (i.e. working memory), the number of memory accesses at one point, or the overall number of data elements in main memory at a given moment. There is an obvious tradeoff in this characterization: similar results can be achieved by a few, very complex operations, or many very simple ones; similar tradeoffs hold for communication, synchronization, and memory requirements. At this point we do not want to detail these tradeoffs further, but just assume some intermediate complexity, e.g. as given by the operations and data structures of a microprocessor or an abstract machine as the WAM (Warren, 1983). It must be noted that the approach here is not a quantitative one: the entries of the table are only estimates, and not based on a detailed analysis. Such a detailed analysis would be quite difficult, since the amount of parallelism can depend on the programming style, calculus, internal representation, and many other factors.

| Level | Parallelism | Computation | Communication | Synchronization | Memory |
|---|---|---|---|---|---|
| Formula | multitasking, objects, calc. | low | low | low | low |
| Clause | OR-par., spanning sets | medium | low | medium | |
| Literal | AND-par., routes | medium | medium | medium | |
| Term | unification par., streams | high | high | high | high |
| Atom | data par. | high | medium | low | medium |
| Sub-symbolic | connectionist | high | high | low | medium |

Figure 1: The potential of massive parallelism for logic

On the formula level, the requirements in all categories are rather low. This is due to the assumption that in most applications only a small number of independent formulae (or tasks, objects, modules, etc.) will be treated simultaneously. It is possible to imagine applications where the number of formulae is much higher, however; this is largely dependent on the organizational style of a large knowledge base, for example. On the clause level, the amount of computation can be considerably higher than on the formula level, because many clauses may exist expressing alternative rules. The communication requirements on this level still are rather low: alternative clauses represent independent subtrees to be traversed. There is a need for some synchronization, however, which is mainly caused by the necessity to perform backtracking if a branch leads to a dead end. Backtracking can be avoided if all possible alternatives are investigated simultaneously. Although there is some overhead in memory consumption for the storage of binding information in the case that backtracking is necessary, it is not very high.

The amount of computation to be performed on the literal level certainly is higher than at the clause level, but for most applications still ranges in the hundreds. It requires a fair amount of communication since the bindings of identical variables from different literals of the same clause must be the same. The same concept of shared variables also causes the necessity of synchronization. There is no exceptional requirement in terms of memory consumption here. The potential for massive parallelism really becomes evident on the term level. Depending on the size and structure of the program of course, thousands of operations can possibly be applied to components of terms, although in the extreme case unification - which is the essential operation on the term level - can be sequential (Yasuura, 1983; Delcher and Kasif, 1988; Delcher and Kasif, 1989). On this level, the computation of variable bindings and checking of term structures necessitate a high amount of communication and synchronization. Due to the possibly large amount of data involved, memory requirements can be also quite high. On the symbol level the potential lies in the exploitation of data parallelism (Hillis and Steele, 1986; Succi and Marino, 1991; Fagin, 1990). The basic idea is to apply one and the same operation to all components of a data structure like an array. The amount of parallelism thus depends on the size of these data structures. Communication is necessary according to the dependencies of the program, whereas synchronization is easy according to the SIMD operation of data-parallel machines. Memory access typically is done locally for each processing element. The potential for parallelism is also quite high on the sub-symbolic level, which is applied in some connectionist models. The amount of computation is quite high due to the large number of processing elements, although the typical operations of these elements are very simple. Communication is also very high, although the data exchanged are again very simple. Synchronization basically is not necessary, since the design of these systems is based on self-organization. The memory requirements can be about a third higher than for conventional systems, which is due to the necessity of sparse encoding to avoid crosstalk between stored concepts (Palm, 1980).

4 CHCL: A Connectionist Inference System

This section describes the design and implementation of CHCL (Hölldobler, 1990c; Hölldobler, 1990a), a connectionist inference system. The operational behavior of CHCL is defined by the connection method (Bibel, 1987b), a calculus which has some advantages over resolution, for example, with respect to parallelism. The basic idea here is to identify spanning matings, which represent candidates for alternative solutions to the formula; in order to represent an actual solution, a spanning mating also has to be unifiable. This evaluation mechanism has been used to derive CHCL as a functionally decomposable system, in contrast to symbolic AI systems where symbols as basic tokens are combined via concatenation.

The architecture of CHCL is based on networks of simple neuron-like units, interconnected by weighted links. The overall system consists of several sub-networks performing the determination of spanning matings, reductions of the search space, and unification. Through its composition from a large number of simple units with weighted interconnections, CHCL exhibits a large degree of parallelism restricted mainly by the inherent properties of the formula under investigation. At the core of the inference system is the unification mechanism (Hölldobler, 1990b; Kurfeß, 1991c), which represents the terms to be unified as a boolean matrix of symbols and their occurrences; initially all those entries of the matrix are true where a symbol occurs at a certain position in one of the terms to be unified. In the course of the computation, more elements become true according to two properties of unification: Singularity, which guarantees that symbols with the same identifier have the same value, and Decomposition, which propagates the unification task to subterms through functions and their arguments. In the example ⟨f(x, x, x) = f(g(a), y, g(z))⟩, singularity guarantees that x, y, g(a) and g(z) all have the same value, whereas decomposition propagates the unification task to the subterms g(a) and g(z), thus achieving identical values for a and z. Both singularity and decomposition are implemented through links and auxiliary units between units of the unification network. Singularity links connect different symbols (and subterms) occurring at the same position in different terms, and instances of a symbol occurring at different positions. Decomposition links are only required if subterms must be unified because of a shared variable in their ancestors, and they go from the variables at a "parent" position to the symbols of the respective "child" position(s).
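The effect of singularity and decomposition on the example above can be traced with a short Python sketch. Note that this is a conventional, sequential union-find unification, swapped in for illustration; it is not the connectionist network of CHCL. The term encoding (variables as strings, compound terms and constants as tuples), the function name `unify_classes`, and the omission of the occur check are assumptions of this sketch.

```python
def unify_classes(s, t, variables):
    """Unify two terms and return (success, find), where find maps a term
    to the representative of its equivalence class. No occur check."""
    parent = {}

    def find(term):
        parent.setdefault(term, term)
        while parent[term] != term:
            term = parent[term]
        return term

    def unify(a, b):
        a, b = find(a), find(b)
        if a == b:
            return True
        if a in variables:            # singularity: all occurrences of a
            parent[a] = b             # variable share one value
            return True
        if b in variables:
            parent[b] = a
            return True
        if a[0] != b[0] or len(a) != len(b):
            return False              # clash of function symbols or arity
        parent[a] = b
        # decomposition: pass the task on to the arguments
        return all(unify(x, y) for x, y in zip(a[1:], b[1:]))

    return unify(s, t), find

A = ("a",)                            # constant a
G_A, G_Z = ("g", A), ("g", "z")       # g(a) and g(z)
ok, find = unify_classes(("f", "x", "x", "x"), ("f", G_A, "y", G_Z),
                         variables={"x", "y", "z"})
```

After the call, x, y, g(a) and g(z) belong to one equivalence class, and a and z to another, which mirrors the description of the example in the text.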

The operational principle of CHCL is quite simple: it relies on simple boolean units with a threshold, weighted links and the propagation of activation through these links; there is no learning involved. It is also guaranteed that in the worst case the time complexity of the algorithm is linear with the size of the unification problem; the special cases of matching (where only one of the two terms may be changed) and the word problem (are the two terms identical?) are solved in two steps (Hölldobler, 1990a). The spatial complexity is not favorable: the network requires a quadratic number of auxiliary units and a cubic number of links.

An implementation of CHCL's core part on ICSIM, a connectionist simulator (Schmidt, 1990) showed the validity of the approach (Kurfeß, 1991c; Kurfeß, 1991a); its performance obviously is no match for conventional inference mechanisms. Currently efforts are under way to implement CHCL on a WAVETRACER (Wavetracer, Inc., 1992) massively parallel system. With a better match of the evaluation principle and the underlying machine model, and without the additional overhead of a simulator, a large boost in the performance is to be expected.

In another line of research CHCL is applied to a spatial reasoning task, where a simple visual scene and a description of the scene are to be checked for conformance (Beringer et al., 1992). The interesting point with respect to massive parallelism here is the interface between the visual input - basically a pixel array - and the symbolic part. A relatively simple extension of CHCL allows for the extraction of facts about the visual input in an abductive way, i.e. only when a particular fact is requested by the inference mechanism.

5 WINA: Knowledge Processing with Symbolic and Sub-Symbolic Mechanisms

An important aspect of knowledge processing is the use of methods to bring together information from various sources, possibly in different formats, and draw coherent conclusions based on all the available evidence. One of the goals of the WINA project is to devise and implement a framework with the necessary components for drawing inferences with multiple sources of information, different representations, uncertain knowledge, and possibly conflicting evidence.

Two core components of the system are a neural classifier, which extracts structural information from raw data so that it can be used by a symbolic component, and a neural associative memory, which is mainly used for storing information from the neural classifier in such a way that it can be accessed easily by the inference component. The system as a whole is designed in a modular way so that different instances of the components can be exchanged easily. In this respect, a major aspect of the current work is the integration of a previously developed hardware implementation of a parallel associative neural network computer PAN (Palm and Palm, 1991) into the overall system.

As with many parallel systems, the limiting factor for the exploitation of parallelism here is communication bandwidth. The critical operation in this case is a retrieval step, where a pattern X is presented to the associative memory, and checked against the patterns already stored; the result is the pattern with the closest match to the input. The current version of the PAN system, PAN-IV, is based on binary neurons and employs a sparse coding scheme (Palm, 1980). This design leads to the use of one processor per neuron, and data buses of width log n, n being the dimension of the input and output vector. The scalability of the system is determined by the effort to add processing elements (linear with respect to n) and to expand the buses (logarithmic with respect to n). The response time depends on the number of 1's in the input and output vector, which due to the sparse coding is rather small (again roughly logarithmic).

For the binary case the operations required are very simple, and as an effect of the sparse coding can be reduced to conditional decrements. Thus a special chip has been designed which basically consists of one counter for each neuron, plus some addressing and priority encoding logic. In its current version, the PAN-IV contains chips with 32 neurons each; eight chips with 256 KBytes of memory each are placed on one board, and one VME-bus cabinet houses up to 17 boards. Based on this rather conservative design, more than 4K neurons can be integrated within one system.
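A retrieval step based on counters and conditional decrements might look like the following Python sketch. It assumes a binary associative memory with clipped storage, in which a connection is set to 1 whenever an input and an output unit are active together, and a retrieval threshold equal to the number of 1's in the input; these details, the function names, and the tiny example patterns are assumptions for illustration and are not taken from the PAN-IV design. The last lines check the neuron count stated above.

```python
def store(matrix, x, y):
    """Set the binary connection for every active input/output pair."""
    for i, xi in enumerate(x):
        if xi:
            for j, yj in enumerate(y):
                if yj:
                    matrix[i][j] = 1

def retrieve(matrix, x):
    """One counter per output neuron, preset to the number of 1's in x;
    a neuron fires when its counter has been decremented to zero."""
    active = [i for i, xi in enumerate(x) if xi]
    counters = [len(active)] * len(matrix[0])
    for i in active:                      # only the 1's of the input are processed
        for j, w in enumerate(matrix[i]):
            if w:
                counters[j] -= 1          # conditional decrement
    return [1 if c == 0 else 0 for c in counters]

n = 8
memory = [[0] * n for _ in range(n)]
x1, y1 = [1, 0, 0, 1, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0, 1, 0]
x2, y2 = [0, 0, 1, 0, 0, 1, 0, 0], [1, 0, 0, 0, 1, 0, 0, 0]
store(memory, x1, y1)
store(memory, x2, y2)
recalled = retrieve(memory, x1)

# Capacity of one cabinet: 32 neurons per chip, 8 chips per board, 17 boards.
neurons_per_system = 32 * 8 * 17          # 4352, i.e. more than 4K = 4096
```

Since only the active input lines are visited, the work per retrieval grows with the number of 1's in the input, which is consistent with the response time argument above.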

The integration of such a neural associative memory with an inference system offers two main advantages: extremely fast, content and similarity based access to facts on one hand, and integration of raw, subsymbolic data into a symbol processing system on the other hand.

References

Aarts, E. H. and Korst, J. H. (1988/1989). Computations in massively parallel networks based on the Boltzmann machine: A review. Parallel Computing, 9:129-145.

Almasi, G. S. and Gottlieb, A. (1989). Highly Parallel Computing. Benjamin / Cummings, Redwood City, CA.

Ballard, D. (1988). Parallel Logical Inference and Energy Minimization. Technical Report TR 142, Computer Science Department, University of Rochester.

Barnden, J. (1988). Simulations of Conposit, a Supra-Connectionist Architecture for Commonsense Reasoning. In 2nd Symposium on the Frontiers of Massively Parallel Computation, Fairfax, VA., Las Cruces.

Barnden, J. A. and Srinivas, K. (1990). Overcoming rule-based rigidity and connectionist limitations through massively parallel case-based reasoning. Memoranda in Computer and Cognitive Science MCCS-90-187, Computing Research Laboratory, New Mexico State University, Las Cruces, NM.


Bell, G. C. (1989). The future of high performance computers in science and engineering.

32(9):1091-1101.

Beringer, A., H611dobler, S., and Kurfefl, F. (1992). Spatial reasoning and connectionist

Universit~t Ulm, Abteilung Neuroinformatik, W-7900 Ulm. submitted to IJCAI 93.

Bibel, W. (1987a). Automated Theorem Proving. Artificial

Bibel,

Intelligence.

Communications of the ACM,

inference.

Technical report,

Vieweg, Braunschweig, Wiesbaden, 2nd edition.

W. (1987b). Automated Theorem Proving. Vieweg, Braunschweig, Wiesbaden, second edition.

Clark, K. L. (1988). Parlog and Its Applications.

IEEE Transactions on Software Engineering, 14:1792-1804.

Conery, J. S. (1983). The AND/ORProce88 Model for Parallel Ezecution of Logic Programs. PhD thesis,

California, Irvine. Technical report 204, Department of Information and Computer Science.

University of

Delcher, A. and Kasif, S. (1989). Someresults in the complexity of exploiting data dependency in parallel

LogicProgramming, 6:229-241.

logic programs.

Delcher, A. L. and Kasif, S. (1988). Efficient Parallel

Johns Hopkins University, Baltimore, MD21218.

Denning, P. (1986). Parallel

Term Matching. Technical report,

Computing and Its Evolution.

Communications of the ACM,29:1163-1169.

Douglas, C. C. and Miranker, W. L. (1990). Beyondmassive parallelism:

Parallel Computing, 16(1):1-25.

Fagin, B. (1990). Data-parallel

Computer Science Department,

numerical computation using associative

tables.

logic programming. In North American Conference on Logic Programming.

Foster, I. and Taylor, S. (1987). Flat Parlog: A Basis for Comparison. Parallel Programming, 16:87-125.

Frenkel,

K. A. (1986). Evaluating Two Massively Parallel

Machines. Communications of the ACM, 29:752-758.

Goto, A., Tanaka, H., and Moto-Oka, T. (1984). Highly Parallel

Machine Architecture.

New Generation Computing.

Inference

Engine: PIE. Goal Rewriting Model and

Goto, A. and Uchida, S. (1987). Towards a High Performance Parallel Inference Machine - The Intermediate Stage Plan

for PIM. In Treleaven, P. and Vanneschi, M., editors, Future Parallel Computers, Berlin. Springer.

Gregory, S. (1987). Parallel

Logic Programming with PARLOG:The Language and its Implementation.

Addison Wesley.

Haridi, S. and Brand, P. (1988). ANDORRA

Prolog - An Integration

of Prolog and Commited Choice languages.

Future Generation Computer Systems, pages 745-754, Tokyo. Institute

for New Generation Computer Technology

(ICOT).

Hennessy, J. L. and Patterson,

Marco, CA.

D. A. (1990). Computer Architecture:

Hillis,

D. W. (1985). The Connection Machine. MIT Press,

Hillis,

W. and Steele,

G. (1986). Data Parallel

A Quantitative

Approach. Morgan Kaufmann, San

Cambridge, MA.

Algorithms. Communications of the ACM,29:1170-1183.

Hillyer, B. and Shaw, D. (1984). Execution of Production Systems on a Massively Parallel Machine. Journal of Parallel and Distributed Computing. submitted.

Hinton, G. E. (1984). Distributed Representations. Technical report, Computer Science Department, Carnegie-Mellon University, Pittsburgh, PA 15213.

Hölldobler, S. (1990a). CHCL - a connectionist inference system for Horn logic based on the connection method. Technical Report TR-90-042, International Computer Science Institute, Berkeley, CA 94704.

Hölldobler, S. (1990b). A structured connectionist unification algorithm. In AAAI ’90, pages 587-593. A long version appeared as Technical Report TR-90-012, International Computer Science Institute, Berkeley, CA.

Hölldobler, S. (1990c). Towards a connectionist inference system. In Proceedings of the International Symposium on Computational Intelligence.

Hölldobler, S. and Kurfeß, F. (1991). CHCL - A Connectionist Inference System. In Fronhöfer, B. and Wrightson, G., editors, Parallelization in Inference Systems, Lecture Notes in Computer Science. Springer.

Ibañez, M. B. (1988). Parallel inferencing in first-order logic based on the connection method. In Artificial Intelligence: Methodology, Systems, Applications ’88. Varna, North-Holland.

Jackoway, G. (1984). Associative Networks on a Massively Parallel Computer. Technical report, Department of Computer Science, Duke University.

Journal of Parallel and Distributed Computing (1992). Special issue: Neural computing on massively parallel processors. Journal of Parallel and Distributed Computing, (3).

Kalb, G. and Moxley, R. (1992). Massively Parallel, Optical, and Neurocomputing in the United States. IOS Press, Amsterdam.

Kalé, L. (1988). A Tree Representation for Parallel Problem Solving. In AAAI ’88, pages 677-681.

Kalé, L. V. (1989). The REDUCE-OR Process Model for Parallel Execution of Logic Programs. Journal of Logic Programming.

Kohonen, T., Mäkisara, K., Simula, O., and Kangas, J., editors (1991). International Conference on Artificial Neural Networks (ICANN-91), Espoo, Finland. North-Holland.

Kuncicky, D. C., Hruska, S., and Lacher, R. C. (1992). Hybrid systems: The equivalence of expert system and neural network inference. International Journal of Expert Systems.

Kuncicky, D. C., Hruska, S. I., and Lacher, R. C. (1991). Hybrid systems: The equivalence of rule-based expert system and artificial neural network inference. International Journal of Expert Systems.

Kung, C.-H. (1985). High Parallelism and a Proof Procedure. Decision Support Systems, 1:323-331.

Kurfeß, F. (1991a). CHCL: An Inference Mechanism implemented on ICSIM. Technical Report ICSI-TR 91-052, International Computer Science Institute.

Kurfeß, F. (1991b). Parallelism in Logic -- Its Potential for Performance and Program Development. Artificial Intelligence. Vieweg Verlag, Wiesbaden.

Kurfeß, F. (1991c). Unification on a connectionist simulator. In International Conference on Artificial Neural Networks - ICANN-91, Helsinki, Finland.

Lacher, R. C., Hruska, S. I., and Kuncicky, D. C. (1991a). Expert systems: A neural network connection to symbolic reasoning systems. In Fishman, M. B., editor, FLAIRS 91, pages 12-16, St. Petersburg, FL. Florida AI Research Society.

Lacher, R. C., Hruska, S. I., and Kuncicky, D. C. (1991b). Backpropagation learning in expert networks. IEEE Transactions on Neural Networks.

Lange, T. E. and Wharton, C. M. (1992). REMIND: Retrieval from episodic memory by inferencing and disambiguation. In Barnden, J. and Holyoak, K., editors, Advances in Connectionist and Neural Computation Theory, volume II: Analogical Connections. Ablex, Norwood, NJ.

Lee, Y., Doolen, G., Chen, H., Sun, G., Maxwell, T., Lee, H., and Giles, C. (1988). Machine learning using a higher order correlational network. Physica D, 22(1-3):276.

Lusk, E., Butler, R., Disz, T., Olson, R., Overbeek, R., Stevens, R., Warren, D. H. D., Calderwood, A., Szeredi, P., Haridi, S., Brand, P., Carlsson, M., Ciepielewski, A., and Haussmann, B. (1988). The AURORA OR-Parallel PROLOG System. In International Conference on Fifth Generation Computer Systems, pages 819-830.

MacLennan, B. J. (1989). Outline of a theory of massively parallel analog computation. Technical report, University of Tennessee, Computer Science Department, Knoxville.

Nilsson, M. and Tanaka, H. (1988). Massively Parallel Implementation of Flat GHC on the Connection Machine. In International Conference on Fifth Generation Computer Systems, pages 1031-1040.

Palm, G. (1980). On Associative Memory. Biological Cybernetics, 38:19-31.

Palm, G. and Palm, M. (1991). Parallel associative networks: The PAN-System and the BACCHUS-Chip. In Ramacher, U., Rückert, U., and Nossek, J. A., editors, Microelectronics for Neural Networks, pages 411-416, Munich, Germany. Kyrill & Method Verlag.

Pinkas, G. (1990). Connectionist energy minimization and logic satisfiability. Technical report, Center for Intelligent Computing Systems, Department of Computer Science, Washington University.

Pinkas, G. (1991a). Constructing proofs in symmetric networks. In NIPS ’91.

Pinkas, G. (1991b). Expressing first order logic in symmetric connectionist networks. In IJCAI 91 Workshop on Parallel Processing in AI, Sydney, Australia.

Pinkas, G. (1991c). Symmetric neural networks and propositional logic satisfiability. Neural Computation, 3(2):282-291.

Potter, J. (1985). The Massively Parallel Processor. MIT Press.

Potter, J. L. (1992). Associative Computing - A Programming Paradigm for Massively Parallel Computers. Plenum Publishing Corporation, New York, NY.

Ratcliffe, M. and Robert, P. (1988). PEPSys: A Prolog for Parallel Processing. Technical Report CA-17, European Computer Research Center (ECRC), München.

Rose, J. and Steele, G. (1987). C*: An Extended C Language for Data Parallel Programming. Technical report, Thinking Machines Corporation.

Schmidt, H. W. (1990). ICSIM: Initial design of an object-oriented net simulator. Technical Report TR-90-55, International Computer Science Institute, Berkeley, CA 94704.

Shapiro, E. (1988). Concurrent Prolog. MIT Press.

Shastri, L. (1988). A connectionist approach to knowledge representation and limited inference. Cognitive Science, 12:331-392.

Shastri, L. and Ajjanagadde, V. (1992). From simple associations to systematic reasoning: A connectionist representation of rules, variables, and dynamic bindings using temporal synchrony. Behavioral and Brain Sciences.

Stanfill, C. and Waltz, D. (1986). Toward Memory-Based Reasoning. Communications of the ACM, 29:1213-1228.

Stanfill, C. and Waltz, D. (1988). Artificial intelligence on the connection machine: A snapshot. Technical Report G88-1, Thinking Machines Corporation, Cambridge, MA.

Stolfo, S. (1987). On the Limitations of Massively Parallel (SIMD) Architectures for Logic Programming. US-Japan AI Symposium.

Succi, G. and Marino, G. (1991). Data Parallelism in Logic Programming. In ICLP 91 Workshop on Parallel Execution of Logic Programs, Paris, France.

Sun, R. (1991). Connectionist models of rule-based reasoning. Technical report, Brandeis University, Computer Science Department, Waltham, MA 02254.

Sun, R. (1992). A connectionist model for commonsense reasoning incorporating rules and similarities. Technical report, Honeywell SSDC, Minneapolis, MN 55413.

Sun, R. (1993). An efficient feature-based connectionist inheritance scheme. IEEE Transactions on Systems, Man, and Cybernetics, 23(1).

Swain, M. and Cooper, P. (1988). Parallel Hardware for Constraint Satisfaction. In AAAI ’88, pages 682-688.

Szeredi, P. (1989). Performance Analysis of the Aurora OR-parallel Prolog System. In North American Conference on Logic Programming.

Thinking Machines (1987). Connection Machine Model CM-2 Technical Summary. Technical Report HA87-4, Thinking Machines Corporation.

Tucker, L. W. and Robertson, G. G. (1988). Architecture and Applications of the Connection Machine. Computer, (8):26-38.

Ueda, K. (1985). Guarded Horn Clauses. Technical Report TR-103, Institute for New Generation Computer Technology (ICOT).

Uhr, L. (1985). Massively Parallel Multi-Computer Hardware --= Software Structures for Learning. Technical report, New Mexico State University, Las Cruces, NM.

Waltz, D. (1987a). Applications of the Connection Machine. Computer, 20:85-100.

Waltz, D. (1987b). The Prospects for Building Truly Intelligent Machines. Technical report, Thinking Machines Corporation.

Waltz, D. L. (1990). Massively Parallel AI. In Ninth National Conference on Artificial Intelligence, Boston, MA. American Association for Artificial Intelligence.

Waltz, D. L. and Stanfill, C. (1988). Artificial Intelligence Related Research on the Connection Machine. In International Conference on Fifth Generation Computer Systems, pages 1010-1024, Tokyo. Institute for New Generation Computer Technology (ICOT).

Wang, J., Marsh, A., and Lavington, S. (1991). Non-WAM models of logic programming and their support by novel parallel hardware. In Parallelization in Inference Systems, pages 253-269. Springer Verlag.

Warren, D. (1987). The SRI model for OR-parallel execution of Prolog. In Symposium on Logic Programming ’87, pages 92-102.

Warren, D. H. (1983). An Abstract Prolog Instruction Set. Technical Report 309, SRI International, Artificial Intelligence Center, Menlo Park, California.

Wattenberg, U. (1992). Massively Parallel, Optical, and Neurocomputing in Japan. IOS Press, Amsterdam.

Wavetracer, Inc. (1992). Zephyr Installation and Operation. Wavetracer, Inc.

Yasuura, H. (1983). On the Parallel Computational Complexity of Unification. Technical Report 27, Institute for New Generation Computer Technology (ICOT), Tokyo.