Pattern-Constrained Test Case Generation

Martin Atzmueller and Joachim Baumeister and Frank Puppe

University of Würzburg

Department of Computer Science VI

Am Hubland, 97074 Würzburg, Germany

{atzmueller, baumeister, puppe}@informatik.uni-wuerzburg.de

Abstract

In this paper we present a novel approach for pattern-constrained test case generation. The generation of test cases with known characteristics is usually a non-trivial task. In contrast, the proposed method allows for a transparent and intuitive modeling of the relations contained in the test data. For the presented approach, we utilize a general-purpose data generator: It relies on easy-to-understand subgroup patterns, which are mapped to a Bayesian network representing the data generation model. The data generation phase is embedded into an incremental process for quality control and adaptation of the generated test cases. We provide a case study in the biological domain exemplifying the presented approach.

Introduction

The use of test cases for empirical testing is probably the most natural method for the validation of intelligent systems (Preece 1998). In this black-box testing method, the intelligent system derives new solutions for previously solved test cases, and the derived solutions are compared with the solutions stored in the cases. Appropriate measures such as precision and recall can then be used to evaluate the validity of the system.

Among others, empirical testing is useful for the following reasons:

• Removing communication errors: Defining test cases before the implementation of the knowledge base can be very helpful for removing communication errors between the developer and the domain specialist.

• Detecting side effects: When extending the knowledge base, the definition of (new) test cases is helpful for identifying unwanted interference between old and new problem-solving knowledge.

• Validating knowledge: The actual validation of the knowledge uses a suite of test cases covering the broad spectrum of system use.

However, the manual creation of test cases is a complex task and is therefore often neglected or accomplished with decreased interest, due to the primary complexity of building the knowledge base.

Therefore, a number of methods have been proposed to decrease the acquisition costs of the test knowledge by automatically generating test cases, e.g., (Knauf, Gonzalez, & Abel 2002; Gupta & Biegel 1990): These approaches rely on an existing knowledge base, and they generate test cases based on the available set of derivation knowledge.

In contrast, this paper introduces a novel method for the generation of test cases that is independent of the knowledge representation actually used. Here, test cases are generated in a separate phase, ignoring any derivation knowledge that may already exist. Therefore, the method is especially useful within test-first methodologies, e.g., the agile methodology for developing knowledge systems (Baumeister, Seipel, & Puppe 2004). These process models intend to define the test knowledge before the problem-solving knowledge; among other things, this process is motivated by the evolutionary way in which the system is developed. In the context of this paper, we focus on the domain of diagnostic/classification systems, i.e., for a given input of findings, the system derives a (set of) solution(s) explaining the given findings.

The rest of the paper is organized as follows: We first introduce subgroup patterns and Bayesian networks, and describe the issues and challenges of generating test cases for empirical testing. After that, we introduce the test data generation process in detail. Next, we provide a case study where we generate test cases for a knowledge base from the biological domain. Finally, we conclude the paper with a discussion of the presented approach, and we show promising directions for future work.

Background

In this section we first introduce the knowledge representation used and describe the basics of subgroup patterns. After that, we give an informal introduction to Bayesian networks. Finally, we introduce the principles of empirical testing and its challenges.

General Definitions

Let ΩA be the set of all attributes. For each attribute a ∈ ΩA, a range dom(a) of (nominal) values is defined. Furthermore, we assume VA to be the (universal) set of attribute values of the form (a = v), where a ∈ ΩA is an attribute and v ∈ dom(a) is an assignable value. A case c = {a1 = v1, . . . , ak = vk}, (ai = vi) ∈ VA, is a set of individual attribute values. These attribute values consist of two parts: First, we distinguish the problem description of the case, i.e., the input to the knowledge system containing the findings of the case. Second, the attribute values additionally contain at least one solution for the case, denoting the output of the intelligent system. Let CB be the case base containing all available cases.

Copyright © 2007, American Association for Artificial Intelligence (www.aaai.org). All rights reserved.

Empirical Testing During the development of the system, the test suite is used to check the validity of the created/modified knowledge. Here, the test cases are successively given to the system as input, and the derived solution is compared with the solution already stored in the test case. Measures like precision, recall, and the E-measure are commonly used for the comparison of the solutions.
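As an illustration of the comparison step, the following Python sketch computes precision, recall, and an E-measure for one replayed test case, treating the stored and the derived solutions as sets. The function name `compare_solutions` and the parameter `beta` are hypothetical. We assume van Rijsbergen's form E = 1 − F_β with F_β = (1 + β²)·P·R / (β²·P + R); the paper does not state which variant it uses, and some authors report F_β itself under the name E-measure.

```python
from typing import Set, Tuple


def compare_solutions(stored: Set[str], derived: Set[str],
                      beta: float = 1.0) -> Tuple[float, float, float]:
    """Return (precision, recall, E) for one replayed test case.

    Assumption: E = 1 - F_beta (van Rijsbergen); other definitions exist.
    """
    hits = len(stored & derived)
    # Assumed convention: an empty set judged against an empty set is perfect.
    precision = hits / len(derived) if derived else (1.0 if not stored else 0.0)
    recall = hits / len(stored) if stored else (1.0 if not derived else 0.0)
    b2 = beta * beta
    denom = b2 * precision + recall
    if denom == 0:
        return precision, recall, 1.0  # no overlap at all: worst score
    f_beta = (1 + b2) * precision * recall / denom
    return precision, recall, 1.0 - f_beta
```

For example, if the case stores {"Camomile"} and the system derives {"Camomile", "Dandelion"}, precision is 0.5, recall is 1.0, and E is 1/3.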

Subgroup Patterns Subgroup patterns (Klösgen 2002; Atzmueller, Puppe, & Buscher 2005), often provided by conjunctive rules, describe 'interesting' subgroups of cases, e.g., "the subgroup of 16-25-year-old men who own a sports car is more likely to pay high insurance rates than the people in the reference population."

Subgroups are then described by relations between independent (explaining) variables (Sex=male, Age=16-25, Car

type=Sportscar) and a dependent (target) variable (Insurance rate). The independent variables are modeled by selection expressions on sets of attribute values. In the context

of this paper, the target variables usually correspond to the

solutions of a case, and the independent variables refer to

specific findings.

A subgroup pattern relies on the following properties: the

subgroup description language, the subgroup size, the target

share of the subgroup, and the target variable. In the context

of this work we focus on binary target variables.

Coverage of Generated Cases An important property of

the test suite is its coverage with respect to the tested knowledge. For rule-based knowledge representations appropriate

methods have been investigated thoroughly in the past, cf.

(Barr 1999; Knauf, Gonzalez, & Abel 2002).

Since we propose a generation method that is independent of the inspected knowledge base, we cannot guarantee a suitable coverage of the knowledge to be tested. For this reason, we propose a post-processing step to be applied after the test case generation: We run the test suite and compute simple statistics on the knowledge elements used, e.g., for rule-based systems we count the rules that were actually used. If the coverage of the test suite is found to be unsatisfactory, then we propose a collection of input values that should also be included in the test cases (they are extracted from the unused knowledge elements). These new input values can serve as input for a second (parametrized) generation phase.
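The coverage post-processing step can be sketched as follows for a rule-based knowledge base. Purely for illustration, we assume a hypothetical `Rule` type whose condition is a conjunction of (attribute, value) findings, cases stored as dictionaries from attribute to value, and that a rule counts as "used" when a case satisfies its condition. The sketch counts rule usage over the suite and collects the condition values of unused rules as candidate inputs for a second generation phase.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple

Finding = Tuple[str, str]  # (attribute, value)


@dataclass(frozen=True)
class Rule:  # hypothetical rule representation, not a real d3web type
    name: str
    condition: FrozenSet[Finding]  # conjunction of findings
    solution: str


def coverage_report(rules: List[Rule], cases: List[Dict[str, str]]):
    """Count how often each rule fires; propose inputs taken from unused rules."""
    usage: Counter = Counter()
    for case in cases:
        findings = set(case.items())
        for rule in rules:
            if rule.condition <= findings:  # all condition findings present
                usage[rule.name] += 1
    unused = [r for r in rules if usage[r.name] == 0]
    proposed: Set[Finding] = set()
    for rule in unused:
        proposed |= rule.condition  # candidate inputs for the next phase
    coverage = (len(rules) - len(unused)) / len(rules) if rules else 1.0
    return usage, coverage, proposed
```

The proposed findings could then be handed to the second, parametrized generation phase, e.g., as additional association patterns.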

Definition 1 (Subgroup Description) A subgroup description sd = {e1 , . . . , en } consists of a set of selection expressions (selectors) that are selections on domains of attributes,

i.e., ei = (ai , Vi ), where ai ∈ ΩA , Vi ⊆ dom(ai ). A subgroup description is defined by the conjunction of its contained selection expressions.

The Test Case Generation Process

In the following we define the incremental process model for

generating test case data, which is discussed in more detail

in the following subsections. We apply a generation model

given by a Bayesian network as the underlying knowledge

representation: Using the network, we are able to define

the dependency relations between the individual attributes

and the solutions capturing the specific data characteristics.

These data characteristics correspond to the goal characteristics of the test cases. Using the generation model, we can generate the output data quite easily, e.g., by applying sampling algorithms for Bayesian networks (Jensen 1996).

The difficult part is the construction of the Bayesian network itself, which is usually non-trivial: The nodes of the network with their attached conditional probability tables are only easy to model at the local (node) level. In contrast, entering all the conditional probabilities is often difficult, e.g., if relations between nodes that are not directly connected need to be considered.

Therefore, we aim to help the user in an incremental process, where the data characteristics can be described from

abstract to more specific ones. Parts of the generation model

can be described using subgroup patterns which are structurally mapped to the defined Bayesian network in turn. Alternatively, the Bayesian network can be defined or modified

directly with an interactive adaptation step using the given

(subgroup pattern) constraints as test knowledge.

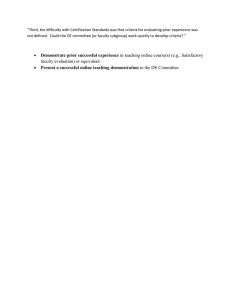

The process consists of the following steps that are also depicted in Figure 1 (read from left to right):

1. Define Domain Ontology: We first define the domain

ontology, i.e., the set of attributes (inputs and solutions)

and attribute values used for the test case generation.

The description language specifies the individuals belonging to a subgroup $s = \{c \mid c \in CB \land sd(c) = \mathit{true}\}$, i.e., the set of cases c that fulfill the subgroup description sd and are thus contained in the respective subgroup s. The parameters subgroup size n and target share p are used to measure the interestingness of a subgroup: n is the number of cases contained in a subgroup s, i.e., $n = |\{c \mid c \in s\}|$; the target share $p = \frac{1}{n} \cdot |\{c \mid c \in s \land t \in c\}|$ specifies the share of subgroup cases that contain the (dependent) target variable $t \in V_A$.
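The definitions of the subgroup s, its size n, and its target share p can be sketched in Python as follows. We assume, for illustration only, that each case is a dictionary from attribute to a single value, that a selector is a pair (attribute, set of admissible values), and that the target t is an (attribute, value) pair; the names `matches` and `subgroup_parameters` are hypothetical.

```python
from typing import Dict, FrozenSet, List, Tuple

Case = Dict[str, str]                  # attribute -> value (assumed single-valued)
Selector = Tuple[str, FrozenSet[str]]  # e_i = (a_i, V_i)


def matches(sd: List[Selector], case: Case) -> bool:
    """sd(c): true iff the case fulfills the conjunction of all selectors."""
    return all(case.get(attr) in values for attr, values in sd)


def subgroup_parameters(sd: List[Selector], target: Tuple[str, str],
                        case_base: List[Case]) -> Tuple[int, float]:
    """Return subgroup size n and target share p of the subgroup s."""
    s = [c for c in case_base if matches(sd, c)]
    n = len(s)
    t_attr, t_val = target
    hits = sum(1 for c in s if c.get(t_attr) == t_val)
    p = hits / n if n else 0.0  # assumed convention for an empty subgroup
    return n, p
```

Applied to the insurance example, the selectors Sex=male, Age=16-25, and Car type=Sportscar define the subgroup, and the share of its cases with a high insurance rate is p.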

Bayesian Networks A Bayesian network consists of a set

of attributes and a set of directed edges connecting the attributes, e.g., (Jensen 1996). For each attribute a the range

dom(a) has to contain a finite set of distinct values. A directed acyclic graph is defined by the attributes and the set

of edges inducing dependency relations between pairs of attributes. For each attribute a and its parents pa(a), induced

by the edges, a conditional probability table (CPT) is attached. For an attribute with no parents an unconditioned

prior probability is used.
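To make this structure concrete, the sketch below stores a Bayesian network as parent lists plus CPTs keyed by tuples of parent values; this dictionary representation is an assumption for illustration, and while the node names come from the case study, all probabilities are invented. It checks that the graph is acyclic, that every CPT row is a probability distribution, and computes the joint probability of a full assignment with the chain rule p(x1, ..., xm) = ∏ p(xi | pa(xi)).

```python
from itertools import product
from typing import Dict, List

# Hypothetical two-node network: Camomile -> BlossomColor.
DOMAINS: Dict[str, List[str]] = {
    "Camomile": ["yes", "no"],
    "BlossomColor": ["white", "yellow"],
}
PARENTS: Dict[str, List[str]] = {"Camomile": [], "BlossomColor": ["Camomile"]}
# CPT[node][parent values] = {value: probability}; () is the unconditioned prior.
CPT = {
    "Camomile": {(): {"yes": 0.2, "no": 0.8}},
    "BlossomColor": {("yes",): {"white": 0.9, "yellow": 0.1},
                     ("no",): {"white": 0.3, "yellow": 0.7}},
}


def topological_order(parents: Dict[str, List[str]]) -> List[str]:
    """Order nodes so that parents come first; raise if the graph is cyclic."""
    order: List[str] = []
    placed = set()
    while len(order) < len(parents):
        ready = [n for n in parents if n not in placed
                 and all(p in placed for p in parents[n])]
        if not ready:
            raise ValueError("graph contains a cycle")
        for n in sorted(ready):
            order.append(n)
            placed.add(n)
    return order


def validate(domains, parents, cpt, tol: float = 1e-9) -> None:
    """Every parent configuration needs a CPT row over dom(a) that sums to 1."""
    for node, pa in parents.items():
        for config in product(*(domains[p] for p in pa)):
            row = cpt[node][config]
            if set(row) != set(domains[node]) or abs(sum(row.values()) - 1) > tol:
                raise ValueError(f"bad CPT row for {node} given {config}")


def joint_probability(assignment: Dict[str, str], parents, cpt) -> float:
    """Chain rule over all nodes of a full assignment."""
    prob = 1.0
    for node, pa in parents.items():
        prob *= cpt[node][tuple(assignment[p] for p in pa)][assignment[node]]
    return prob
```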

Empirical Testing and Case Coverage

The building block of empirical testing is a suite of test

cases, i.e., a collection of representative cases with correct

solutions covering the (expected) application of the intelligent system.

Figure 1: Process model for the case generation. (Diagram boxes: Define Domain Ontology; Define Association Test-Patterns; Define Exclusion Test-Patterns; Check/Optimize Model; Data Model; Generate Data; Case Replay: Correct? (Yes/No); Adapt Specification; Test Case Selection; Case Base.)

2. Specify Data Generation Model: The Bayesian network or fragments of the network can either be specified

manually, or they can be generated automatically by using

subgroup patterns.

More specifically, for test case generation we need to model sets of attribute values that occur together with a specific solution (target variable), i.e., association test-patterns, and attribute values that never occur with a certain solution (target variable), i.e., exclusion test-patterns.

Both association and exclusion test-patterns are modeled

with respect to a specific solution (target variable) in relation to a set of attribute values (independent variables).

The defined patterns and network fragments are then

structurally merged into the generation model for the output generation. The relations between the variables in the

network are represented by connections of the individual

nodes. Using the subgroup parameters, constraints are derived in order to check these relations. Such constraints

can also be supplied by the user directly.

3. Optimize Model: Initially, the conditional probability

tables of the nodes contained in the Bayesian network,

i.e., the generation model, are initialized with default values. Since the strengths of the modeled relations depend

on these, the parameters of the relations are usually not

correct initially. Therefore, the model is tested given the

available set of constraints, and an optimization step is

applied in order to adjust the conditional probability tables contained in the network. If the model fits the constraints, then the process is finished, and the data generation model is ready for use. As an alternative to the

optimization step, the user can also either adapt the patterns/constraints, modify the network structure, or try to

edit the conditional probability tables by hand.

4. Generate Data: After the generation model has been fitted to the specification of the user, the final generation step is performed using a sampling algorithm. Given the network, we apply the prior-sample algorithm (Jensen 1996): In a top-down pass, a value is sampled for every node according to the values of its parent nodes.

5. Case Replay and Test: After the prototypical test cases

have been generated they are used in an initial empirical

testing phase.

6. Adapt Specification: If the previous steps reveal errors with respect to the generation model or the knowledge base, the modeled patterns usually need to be adapted: We apply certain adaptation operators in order to handle incorrectly solved cases. Sometimes the knowledge base needs to be adapted, or a generated case needs to be removed completely. We will discuss the applicable set of operators in detail below.

7. Test Case Selection: After the final set of test cases has been obtained, we apply a selection technique in order to arrive at a more diverse set of cases, i.e., cases with a diverse coverage of the problem space. Then, the resulting set of cases is inserted into the test suite.

In the following sections we first give an overview of the approximation of the generation model, and we then describe

the particular phases of the process model in more detail.

Overview: Approximating the Generation Model

A collection of subgroup patterns describes dependencies between a target solution and a set of findings. Using such subgroup patterns, a two-layered network can be constructed automatically: The target variable can be designated either as the parent of the explaining variables or as their child.

For each target variable, a constraint is generated using the specified total target share considering the entire population, i.e., the prior probability p(t) of the target variable. Constraints for the parameters of the subgroup patterns are based on the contained target variable t and the set of selectors in the subgroup description sd = {e1, e2, . . . , en}. Two constraints are generated for each pattern: the (relative) subgroup size, equivalent to the joint probability p(e1, e2, . . . , en), and the target share of the subgroup, equivalent to the conditional probability p(t | e1, e2, . . . , en).
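These constraints can be checked on any small network by brute-force enumeration: p(e1, ..., en) is obtained by summing the joint probability over all unobserved nodes, and p(t | e1, ..., en) is the ratio of two such sums. The Python sketch below assumes a hypothetical dictionary representation (parent lists and CPTs keyed by tuples of parent values); `marginal` and `pattern_constraints` are illustrative names, and enumeration is only practical for small test models.

```python
from itertools import product
from typing import Dict, List, Tuple


def joint(assign: Dict[str, str], parents, cpt) -> float:
    """Chain rule: product of p(x_i | pa(x_i)) over all nodes."""
    prob = 1.0
    for node, pa in parents.items():
        prob *= cpt[node][tuple(assign[p] for p in pa)][assign[node]]
    return prob


def marginal(evidence: Dict[str, str], domains, parents, cpt) -> float:
    """p(evidence) by summing the joint over all unobserved nodes."""
    free: List[str] = [n for n in domains if n not in evidence]
    total = 0.0
    for vals in product(*(domains[n] for n in free)):
        total += joint({**evidence, **dict(zip(free, vals))}, parents, cpt)
    return total


def pattern_constraints(pattern, target: Tuple[str, str], domains, parents, cpt):
    """Deviation (model minus specification) for both constraints of a pattern."""
    sd = pattern["selectors"]                        # {attribute: value}
    size = marginal(sd, domains, parents, cpt)       # p(e_1, ..., e_n)
    both = marginal({**sd, target[0]: target[1]}, domains, parents, cpt)
    share = both / size if size > 0 else 0.0         # p(t | e_1, ..., e_n)
    return {"size": size - pattern["size"], "share": share - pattern["share"]}
```

The prior constraint for the target follows the same route, e.g., `marginal({"T": "y"}, ...)` compared with the specified p(t).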

Using the subgroup patterns defined for a target variable, the user can select from two basic network structures that are generated automatically if the respective nodes contained in the subgroup description are not already contained in the network: Either the target variable is the parent of the subgroup selectors, cf. Figure 2(a), or the target variable is the child of the subgroup selectors, cf. Figure 2(b). Figure 2(a) depicts the relation 'IF target TV THEN selectors Si'; Figure 2(b) models the inverse relation 'IF selectors Si THEN target TV'.

Both structures have certain advantages and drawbacks concerning the optimization step: Option (a) includes a simple definition of the prior probability of the target variable; using the CPTs of the child selectors, it is very easy to adapt the subgroup size parameter. On the other hand, option (b) allows for an easier adaptation of the subgroup target shares (contained as values in the CPT of the target variable). Furthermore, option (b) typically results in larger CPTs, which offer more adaptation possibilities for the optimization algorithm. However, the size of the CPT of the target is exponential in the number of parent selectors.

Figure 2: Possible network structures for modeling the dependency relations between the target (dependent) variable and the selectors (independent variables): (a) If target TV, then selectors Si (TV is the parent of S1, S2, ..., SN); (b) If selectors Si, then target TV (S1, S2, ..., SN are the parents of TV).

Constraint-based Model Optimization (Phase 3)

For the details of the constraint-based optimization method, we refer to (Atzmueller et al. 2006). In summary, we need to compute arbitrary joint and conditional probabilities in the network, check the available set of constraints, and apply a hill-climbing constraint satisfaction problem (CSP) solver that tries to fit the model to the constraints by minimizing a global error function. After the CSP solver has been applied, the resulting state of the model can be inspected by the user interactively: The deviations between the defined patterns and the patterns included (implicitly) in the network are compared. Then, the model is tuned if necessary: Either the automatic optimization process is restarted, or the user intervenes manually by adapting the conditional probability tables of the network or by modifying the structure of the Bayesian network.
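The actual solver is described by Atzmueller et al. (2006); the Python sketch below is only a simplified stand-in for the constraint-based optimization of Phase 3, under stated assumptions: structure (a) with binary selectors (each selector ei means Si = yes), a global error defined as the sum of squared deviations from the specified prior, subgroup sizes, and target shares, and a coordinate-wise hill climber that starts from default CPT entries of 0.5 and halves its step size when no single move helps. All names are hypothetical.

```python
from typing import Dict, List, Tuple


def pattern_stats(prior: float, cpts: Dict[str, Tuple[float, float]],
                  selectors: List[str]) -> Tuple[float, float]:
    """p(e_1..e_n) and p(t | e_1..e_n) for structure (a), binary selectors."""
    joint_t, joint_not_t = prior, 1.0 - prior
    for name in selectors:
        p_if_t, p_if_not_t = cpts[name]  # p(S=yes | t), p(S=yes | not t)
        joint_t *= p_if_t
        joint_not_t *= p_if_not_t
    size = joint_t + joint_not_t
    return size, (joint_t / size if size > 0 else 0.0)


def global_error(vec: List[float], names: List[str], constraints: dict) -> float:
    """Sum of squared deviations between specified and modeled parameters."""
    prior = vec[0]
    cpts = {n: (vec[1 + 2 * i], vec[2 + 2 * i]) for i, n in enumerate(names)}
    err = (prior - constraints["prior"]) ** 2
    for pat in constraints["patterns"]:
        size, share = pattern_stats(prior, cpts, pat["selectors"])
        err += (size - pat["size"]) ** 2 + (share - pat["share"]) ** 2
    return err


def hill_climb(names: List[str], constraints: dict, step: float = 0.1,
               min_step: float = 1e-5, eps: float = 1e-3) -> Tuple[List[float], float]:
    """Coordinate-wise hill climbing starting from default CPT entries of 0.5."""
    vec = [0.5] * (1 + 2 * len(names))
    best = global_error(vec, names, constraints)
    while step >= min_step:
        improved = False
        for i in range(len(vec)):
            for delta in (step, -step):
                cand = list(vec)
                # keep probabilities strictly inside (0, 1)
                cand[i] = min(1.0 - eps, max(eps, cand[i] + delta))
                err = global_error(cand, names, constraints)
                if err < best:
                    vec, best, improved = cand, err, True
        if not improved:
            step /= 2  # no single move helps: search a finer neighbourhood
    return vec, best
```

A hill climber only finds a local optimum; this is one reason why the resulting model is inspected by the user and the optimization may be restarted or complemented by manual edits.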

Test Case Generation (Phase 4)

In the case generation phase, we use the prior-sample algorithm (Jensen 1996), also known as forward sampling: In a top-down pass, a value is sampled for every node from its conditional probability table, given the values already sampled for its parent nodes. In this way, we generate a set of test cases of arbitrary size corresponding to the data characteristics that are modeled in the Bayesian network.
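Forward sampling can be sketched in a few lines of Python. The sketch assumes a hypothetical representation (an explicit node order with parents before children, parent lists, and CPTs keyed by tuples of parent values) and uses the standard library's `random.Random.choices` for weighted drawing; the seed is fixed so that a generated test suite can be reproduced.

```python
import random
from typing import Dict, List, Tuple

CPTs = Dict[str, Dict[Tuple[str, ...], Dict[str, float]]]


def forward_sample(order: List[str], parents: Dict[str, List[str]],
                   cpt: CPTs, n: int, seed: int = 0) -> List[Dict[str, str]]:
    """Prior (forward) sampling: draw each node given its already drawn parents."""
    rng = random.Random(seed)  # seeded for reproducible test suites
    cases = []
    for _ in range(n):
        case: Dict[str, str] = {}
        for node in order:  # `order` must list parents before children
            row = cpt[node][tuple(case[p] for p in parents[node])]
            values = list(row)
            case[node] = rng.choices(values, weights=[row[v] for v in values])[0]
        cases.append(case)
    return cases
```

Because every case is drawn independently from the same distribution, the relative frequencies in a large generated set approach the probabilities encoded in the network.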

Modeling Test Patterns (Phase 2a)

As outlined above, a test pattern, i.e., either an association or an exclusion pattern, is modeled with respect to a specific solution (target variable), relating a set of attribute values (independent variables) to it. For example, if a specific solution should be derived categorically with respect to a set of input findings, then a target share p = 1 is assigned to the corresponding subgroup pattern, i.e., the solution (target concept) is always established. Otherwise, for a probabilistic relation, the target share is given by p ∈ [0; 1]. The second important parameter of a subgroup pattern is the (relative) subgroup size, which controls the generality of the pattern (rule): It corresponds to the frequency (support) with which the pattern will be observed in the generated test data set.
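A test pattern therefore reduces to a solution, a set of findings, and the two parameters discussed above. The Python sketch below is a hypothetical container for such patterns; the class `PatternSpec` and the helpers `association` and `exclusion` are illustrative names. It assumes that an exclusion pattern ("the findings never occur together with the solution") is encoded as target share p = 0, and that a categorical association pattern uses p = 1.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PatternSpec:
    """Hypothetical container for one association or exclusion test pattern."""
    solution: str             # target variable t
    findings: Dict[str, str]  # selectors e_1..e_n as attribute -> value
    size: float               # relative subgroup size (support) in (0, 1]
    share: float = 1.0        # target share p; 1.0 means categorical derivation

    def __post_init__(self) -> None:
        if not 0.0 < self.size <= 1.0:
            raise ValueError("relative subgroup size must lie in (0, 1]")
        if not 0.0 <= self.share <= 1.0:
            raise ValueError("target share must lie in [0, 1]")

    def as_constraints(self) -> Dict[str, float]:
        """The two constraints derived from the pattern."""
        return {"size": self.size, "share": self.share}


def association(solution: str, findings: Dict[str, str], size: float,
                share: float = 1.0) -> PatternSpec:
    return PatternSpec(solution, dict(findings), size, share)


def exclusion(solution: str, findings: Dict[str, str], size: float) -> PatternSpec:
    # Assumption: "never occurs together with the solution" becomes share 0.
    return PatternSpec(solution, dict(findings), size, 0.0)
```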

If the solutions are independent of each other, then we can build a model for each solution following a generative programming approach. We then need to merge the resulting sets of test cases into the final suite of test cases. Such a divide-and-conquer approach can significantly reduce the complexity of the data generation models, and thus it often increases their practical applicability and tractability.

Case Replay and Test (Phase 5)

The empirical testing phase was implemented with an automated test tool (Baumeister, Seipel, & Puppe 2004). Essentially, we provide all generated cases to the tool, and the tests are then processed automatically. Visual feedback is given by a colored status bar, which turns green if all tests have passed successfully. An error is reported by a red status bar, i.e., if for some of the tests a different set of solutions was derived than the one stored in the respective generated case. This metaphor of a colored status bar was adopted from automated testing techniques known in software engineering. Furthermore, a more detailed report of each test result is presented. Figure 3 shows an example of the automated test tool with an incorrectly processed test suite; we will give more details in the case study.
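The replay logic behind the status bar can be sketched as follows. This is not the d3web.KnowME tool; it is a minimal Python illustration assuming that each generated case holds its findings and its stored solutions, and that the knowledge base is available as a hypothetical function `derive` mapping findings to a set of solutions. The toy rule used in the usage example is invented.

```python
from typing import Callable, Dict, List, Set, Tuple

Findings = Dict[str, str]


def replay(cases: List[dict],
           derive: Callable[[Findings], Set[str]]) -> Tuple[str, List[int]]:
    """Replay all cases; 'green' if every derived solution set matches."""
    failed = [i for i, case in enumerate(cases)
              if set(derive(case["findings"])) != set(case["solutions"])]
    return ("green" if not failed else "red"), failed


def toy_kb(findings: Findings) -> Set[str]:
    """Hypothetical one-rule knowledge base used only for illustration."""
    if (findings.get("Blossom Type") == "Daisy family"
            and findings.get("Blossom Color") == "White"):
        return {"Camomile"}
    return set()
```

The returned indices of failed cases are the starting point for the adaptation operators of Phase 6.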

Adaptation and Refinement (Phase 6)

In general, it is likely that some of the generated test cases fail during the empirical testing phase. An automatically generated test case can fail for the following reasons:

1. Deficient test generation knowledge: The problem description of a case is incorrect due to incorrectly/incompletely defined test generation knowledge, i.e., the subgroup patterns and/or parts of the network are incorrect/incomplete. The developer can choose from a set of different operators:

• Extend pattern base: Add missing relations to the pattern base. This action is often applicable if there are at least two factors with a meaningful missing dependency relation, and if this relation is necessary for the model.

• Modify patterns: Alternatively, it may be sufficient to modify existing patterns, i.e., adapt the subgroup parameters.

Modeling of a Bayesian Network (Phase 2b)

In addition to the specification of a set of subgroup patterns the user can also define the Bayesian network directly

by connecting the nodes/attributes. Additionally, the conditional probability tables of the nodes in the network can be

adapted. If additional nodes are entered manually, then the

entries of the conditional probability tables need to be specified. However, this step is usually the most difficult one.

In an advanced step, the network structure can be enriched using hidden nodes that enable further possibilities for data generation: Hidden nodes are used to add constraining relations between the active nodes that are used for case generation. The hidden nodes take part in the case generation step, but they are not visible in the generated test cases.
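As an illustration of how a hidden node can constrain active nodes, consider an exclusion relation between two solutions S1 and S2: a hidden node H with the values s1, s2, and none decides which solution, if any, may occur, so the two solutions can never be present together. The Python sketch below uses invented probabilities and hypothetical names; it marginalizes H out by enumeration to show the distribution that the visible case attributes follow.

```python
from itertools import product
from typing import Dict, Tuple

P_H = {"s1": 0.3, "s2": 0.3, "none": 0.4}  # hypothetical prior of the hidden node


def p_solution(present: bool, h: str, own: str, strength: float = 0.9) -> float:
    """p(S = present | H = h): the solution can only occur when H selects it."""
    p_true = strength if h == own else 0.0
    return p_true if present else 1.0 - p_true


def visible_joint() -> Dict[Tuple[bool, bool], float]:
    """Marginalize the hidden node out: p(S1, S2) = sum_h p(h) p(S1|h) p(S2|h)."""
    dist = {}
    for s1, s2 in product([True, False], repeat=2):
        dist[(s1, s2)] = sum(P_H[h] * p_solution(s1, h, "s1") * p_solution(s2, h, "s2")
                             for h in P_H)
    return dist
```

In generated cases, H would be sampled like any other node and then dropped from the output, so only S1 and S2 remain visible.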


For assessing the similarity of two cases c and c′ (e.g., a query case and a retrieved case), we use the well-known matching-features similarity function

$$\mathrm{sim}(c, c') = \frac{|\{a \in \Omega_A : \pi_a(c) = \pi_a(c')\}|}{|\Omega_A|}, \tag{1}$$

where π_a(c) returns the value of attribute a in case c. The diversity of a set of test cases TC = {c_i}, i = 1, ..., k, of size k is then measured according to diversity(TC):

$$\mathrm{diversity}(TC) = \frac{\sum_{i=1}^{k-1} \sum_{j=i+1}^{k} \bigl(1 - \mathrm{sim}(c_i, c_j)\bigr)}{k \cdot (k-1)/2}. \tag{2}$$

We select the k most diverse test cases from the generated set of n test cases, with k ≤ n.
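Equations (1) and (2) translate directly into code. Selecting the exact k-subset with maximal diversity is a combinatorial problem, so the Python sketch below uses a greedy heuristic in the spirit of Smyth & McClave (2001): starting from the first case (an arbitrary choice), it repeatedly adds the remaining case with the largest summed dissimilarity to the cases already chosen. This is an assumption about how the selection could be done, not necessarily the procedure used here; cases are assumed to be dictionaries from attribute to value, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Sequence

Case = Dict[str, str]


def sim(c1: Case, c2: Case, attributes: Sequence[str]) -> float:
    """Matching-features similarity, equation (1)."""
    return sum(c1.get(a) == c2.get(a) for a in attributes) / len(attributes)


def diversity(cases: List[Case], attributes: Sequence[str]) -> float:
    """Average pairwise dissimilarity, equation (2)."""
    k = len(cases)
    if k < 2:
        return 0.0
    total = sum(1 - sim(ci, cj, attributes) for ci, cj in combinations(cases, 2))
    return total / (k * (k - 1) / 2)


def select_diverse(cases: List[Case], k: int, attributes: Sequence[str]) -> List[Case]:
    """Greedy selection: repeatedly add the case farthest from those chosen."""
    if not cases or k <= 0:
        return []
    chosen = [cases[0]]
    rest = list(cases[1:])
    while len(chosen) < min(k, len(cases)):
        best = max(rest, key=lambda c: sum(1 - sim(c, s, attributes) for s in chosen))
        chosen.append(best)
        rest.remove(best)
    return chosen
```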

Case Study

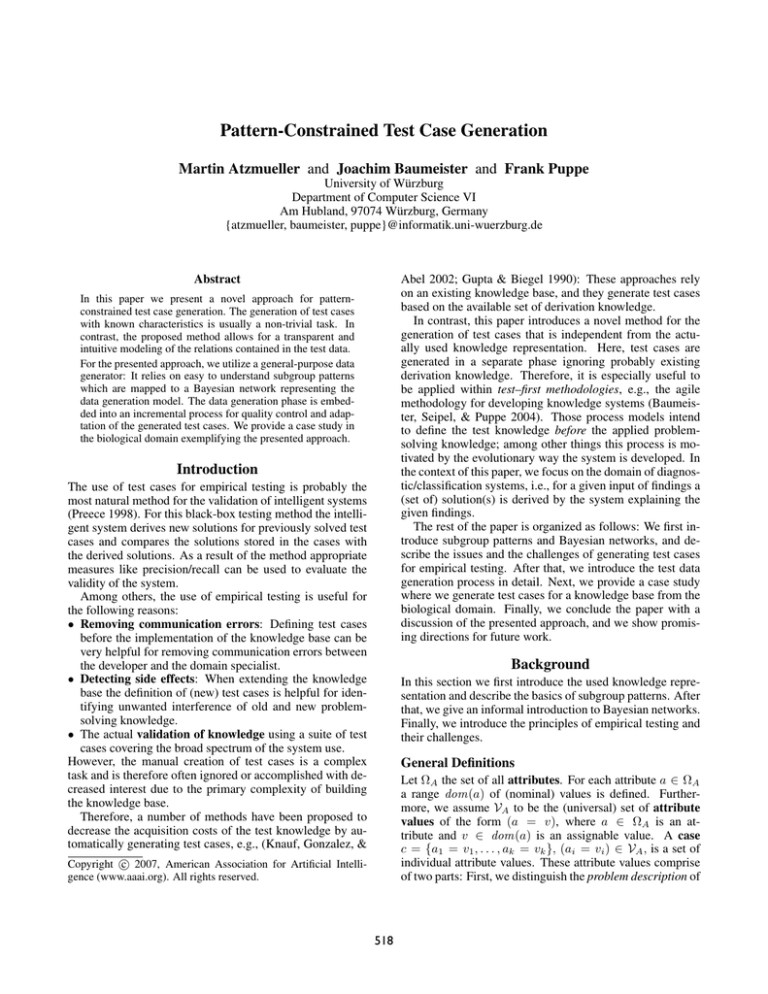

Figure 3: The d3web.KnowME testing tool.

The applicability of the presented approach was evaluated using a rule base from the biological domain: The plant system (Puppe 1998) is a consultation system for the identification of the most common flowering plants growing in Franconia, Germany. For the classification of a given plant, the user enters findings concerning the flowers, leaves, and trunks of the particular plant. The plant knowledge base defines 39 solutions and 40 inputs; each input has a discrete range of possible finding values. We generated test data for several selected solutions. The case study was implemented using a special data generator plugin of the VIKAMINE system (Atzmueller & Puppe 2005).

In the following, we illustrate the modeling issues with respect to one solution of the plant knowledge base, i.e., the plant Camomile (Matricaria Inodora). Figure 4 shows the structure of the (generated) Bayesian network for the plant Camomile.

• Modify data generation model: The developer can modify the Bayesian network directly if some dependencies can be handled more easily by modifying the structure of the network. Commonly, a hidden node is then introduced in order to add an exclusion relation between two solutions. In general, edges between nodes can be added or removed, or hidden nodes can be introduced. Additionally, (simple) changes of the conditional probability tables can often be performed manually.

• Remove case: Sometimes the modification of the test knowledge as described above is too costly for the convenient creation of test cases. As a trade-off between the (potentially) increased complexity of the generation model and the generation of successful test cases, we also propose to simply delete cases that do not meet the expectations of the developer.

2. Deficient knowledge base: The failed test case uncovered an incorrect/unexpected behavior of the knowledge base. In contrast to classical test case generation methods, this method is able to uncover missing relations in the knowledge base (whereas the classical methods can only detect incorrect knowledge).

• Refinement: Extend or modify the knowledge base with respect to the missing or incorrect relations. Sometimes, it might also be useful to adapt the test knowledge patterns in order to take the uncovered relations into account.

Figure 4: Generation model for the solution Camomile (Matricaria Inodora); labels in German.

Test Case Selection (Phase 7)

In order to select a representative but diverse set of test cases, we can apply a post-selection step to the total set of generated test cases. By applying this step, we can ensure that the problem descriptions of a selected subset of the generated test cases are not too similar to each other. We use a technique adapted from case-based reasoning (CBR) for measuring the diversity of a set of cases, cf. (Smyth & McClave 2001).

In the case study, we can assume independence between the particular solutions, i.e., plants. This significantly simplifies the modeling effort for each solution, and it also increases the understandability and maintainability of the constructed model. We only consider single findings that count positively toward deriving the solution (association patterns), and exclusion patterns made up of findings that count negatively toward inferring the solution. Therefore, we opted for the dependency structure in which the target is the parent of the subgroup selectors, i.e., the findings, since the combinations of these findings do not need to be modeled explicitly in the network.

In Tables 1 and 2, we show examples of the positive and negative factors for deriving the solution Camomile (Matricaria Inodora), respectively. We denote the strength of the relations by the symbols 'P1', 'P2', 'P3', which denote positive categories in ascending order, and the equivalent 'N1', 'N2', 'N3' for the negative categories:

| Input Finding | Strength |
|---|---|
| Blossom Type: Daisy family | P3 |
| Blossom Color: White | P3 |
| Blossom leaf edge: Not serrated | P2 |
| Coarse Blossom Type: Star-shaped | P2 |
| Chalice Color: Yellow | P1 |

Table 1: Single factors that are positive for deriving the solution Camomile.

| Input Finding | Strength |
|---|---|
| Leaf Cirrus: Exist | N3 |
| Stipe Spine: Exist | N3 |
| Leaf edge: With spines | N2 |
| Leaf edge: Not kibbled | N2 |
| Blossom Type: Not dandelion | N1 |

Table 2: Single factors that are negative for deriving the solution Camomile.

For this solution, we first generated 100 test cases including only the positive factors. This resulted in 54 incorrectly solved cases, since the inhibiting factors were missing. After that, exclusion relations were included, resulting in 27 incorrectly solved cases. By further optimizing the pattern constraints, we obtained 100 test cases with only 9 incorrect cases, which were removed after a manual inspection. After that, we did not optimize the model further by including additional patterns, in order to preserve its concise and clear semantics. These properties are usually important for further extensions and maintenance. Figure 5 shows a screenshot of generated cases.

Figure 5: Three generated cases for the solution Camomile.

Conclusion

In this paper, we presented a novel process model for test case generation based on modeling simple subgroup patterns. The approach makes no use of the underlying knowledge representation, and it is especially suited for integration into test-first development methodologies. We successfully demonstrated the method in a case study implemented in the biological domain.

In the future, we are planning to enhance the post-processing of generated cases by automatically proposing appropriate adaptation operators for handling cases that were incorrectly solved in the initial empirical testing phase.

References

Atzmueller, M., and Puppe, F. 2005. Semi-Automatic Visual Subgroup Mining using VIKAMINE. Journal of Universal Computer Science (JUCS), Special Issue on Visual Data Mining 11(11):1752–1765.

Atzmueller, M.; Baumeister, J.; Goller, M.; and Puppe, F. 2006. A Datagenerator for Evaluating Machine Learning Methods. Journal Künstliche Intelligenz 03/06:57–63.

Atzmueller, M.; Puppe, F.; and Buscher, H.-P. 2005. Exploiting Background Knowledge for Knowledge-Intensive Subgroup Discovery. In Proc. 19th Intl. Joint Conference on Artificial Intelligence (IJCAI-05), 647–652.

Barr, V. 1999. Applications of Rule-Base Coverage Measures to Expert System Evaluation. Knowledge-Based Systems 12:27–35.

Baumeister, J.; Seipel, D.; and Puppe, F. 2004. Using Automated Tests and Restructuring Methods for an Agile Development of Diagnostic Knowledge Systems. In Proc. of 17th FLAIRS, 319–324. AAAI Press.

Gupta, U. G., and Biegel, J. 1990. A Rule-Based Intelligent Test Case Generator. In Proc. AAAI-90 Workshop on Knowledge-Based System Verification, Validation and Testing. AAAI Press.

Jensen, F. V. 1996. An Introduction to Bayesian Networks. London, England: UCL Press.

Klösgen, W. 2002. Subgroup Discovery. In Handbook of Data Mining and Knowledge Discovery, chapter 16.3. New York: Oxford University Press.

Knauf, R.; Gonzalez, A. J.; and Abel, T. 2002. A Framework for Validation of Rule-Based Systems. IEEE Transactions on Systems, Man and Cybernetics, Part B: Cybernetics 32(3):281–295.

Preece, A. 1998. Building the Right System Right. In Proc. KAW'98, 11th Workshop on Knowledge Acquisition, Modeling and Management.

Puppe, F. 1998. Knowledge Reuse among Diagnostic Problem-Solving Methods in the Shell-Kit D3. Intl. Journal of Human-Computer Studies 49:627–649.

Smyth, B., and McClave, P. 2001. Similarity vs. Diversity. In Proc. 4th Intl. Conference on Case-Based Reasoning (ICCBR 01), 347–361. Berlin: Springer Verlag.
