Intelligent Sensor Integration and Management with Cooperative, Heterogeneous Mobile Robots

From: AAAI Technical Report WS-99-15. Compilation copyright © 1999, AAAI (www.aaai.org). All rights reserved.

Robin Murphy, Aaron Gage, Jeff Hyams, Mark Eichenbaum, Mark Micire, Brian Minten, Min Shin, Brian Sjoberg

Computer Science and Engineering Department, University of South Florida, 4202 E. Fowler Avenue, Tampa FL 33620

Abstract

The two-robot entry from the University of South Florida received a Technical Innovation Award for Conceptual and Artistic Design in the Hors d’Oeuvres Anyone? event of the 1999 AAAI Mobile Robot Competition. The entry was fielded by a team of seven students from the Computer Science and Engineering and Art departments. Three of the team members were undergraduates. The robots were unique in their approach to maximizing the time the robot was engaged in serving: one robot acted as the server, who would call the second robot to bring refills as needed. This allowed the entry to creatively exploit the differences in sensors on the two robots. The entry was also novel in its application of multi-sensor integration within a hybrid deliberative/reactive architecture. Five sensor modalities were used (vision, thermal, range, sonar, and tactile). Behavioral sensor fusion was used for five reactive behaviors in eight states, significantly improving performance.

The two robots can be thought of as actors, literally following abstract behaviors called scripts (Murphy 1998). The Borg Shark was the "star" of the show. She was given an aggressive, smarmy personality. She is programmed to navigate an a priori route using dead reckoning and sonars, pausing at waypoints to look for humans with her camera. If she sees humans (and confirms with the thermal sensor), she attempts to make eye contact with them and scan the group in a natural way while looking for VIPs (special colored ribbons). She uses her laser to count the number of treats removed from her jaws, and then calls Puffer Fish to bring refills. If someone grabs treats but isn’t visible, Borg Shark will stop and look for the person and other humans to serve. She talks via DECtalk but cannot perform voice recognition.

1 Introduction

The USF entry was motivated by our ongoing research in intelligent sensing management. The AAAI event provided an interesting domain to make progress toward three technical objectives:

1. Push the envelope on hybrid deliberative/reactive architectures by expanding them to include intelligent sensor integration and management at all levels. A specific goal was to demonstrate a new algorithm, MCH, for dynamic allocation of sensor and effector resources (Gage and Murphy 1999). The implementation of MCH required the integration of deliberative scheduling with reactive behavioral execution. The competition was a good test bed for dynamic sensor allocation because the USF entry involved five sensing modalities (vision, thermal, laser, sonar, contact) and five effectors (drive, steer, pan, tilt, fan).

2. Demonstrate advances in cooperation between multiple, heterogeneous robots to achieve a task. The USF entry treated each robot as an independent agent with its own capabilities, communicating with each other at a symbolic, human-like level.

3. Gain more experience with interacting with humans. Previous efforts by the USF robots have concentrated on interacting with humans to accomplish the task. This entry considered the additional challenges of how to attract attention to the robots and entertain the audience. Costumes and interactive media were developed by an undergraduate student from the USF Art Department.


The Puffer Fish was assigned a supporting role of aiding the Borg Shark. She was given a personality and body language associated with being grumpy and resentful of working for the Shark. Puffer Fish sleeps until "bothered" by humans (she whines and turns her "head" of cameras away much as a sleeping child would do), or until awakened by a request from Shark. Then she navigates to and from the Borg Shark’s estimated coordinates and home base using sonars and vision. If her path is blocked or she is annoyed (such as when she is near the Borg Shark), Puffer Fish lives up to her name and puffs up.

2 Robots

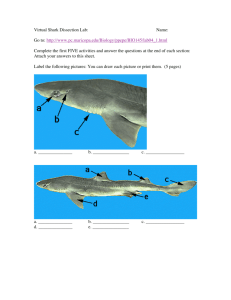

The entry consisted of two Nomad 200 robots, Butler (aka Borg Shark) and Leguin (aka Puffer Fish), costumed with interactive media by the USF Art department. The robots were heterogeneous in sensing capabilities and functionality. The robots are shown in Figure 1.


The software built on previous work from many sources. First, both robots were programmed under the biomimetic SFX architecture. This provided an object-oriented framework for coordinating reactive behaviors and deliberative software agents. The entry also used a form of the vector field histogram (VFH) obstacle avoidance algorithm provided by the Naval Research Laboratory. Instead of using an HSV or RGB color space, all color vision used the Spherical Coordinate Transform (SCT) taken from medical imaging (Hyams, Powell, and Murphy 1999). Finally, the reactive layer made use of a large existing library of behaviors developed for the 1997 AAAI competition and Urban Search and Rescue work.

Figure 1 Robots in costumes: a) Borg Shark. Thermal sensor is the "third eye" and Sick laser is inside mouth; b) Puffer Fish with striped skirt inflated.

Both robots have 2 CPUs (166 MHz Pentiums on Borg Shark, and 233 MHz Pentiums on Puffer Fish), two cameras mounted on a pan/tilt head, and 2 Matrox Meteor framegrabbers. Each was programmed in C++ (reactive layer) and LISP (deliberative layer) under the Sensor Fusion Effects (SFX) architecture (Murphy 1998). Both execute autonomously with all onboard computation, but communication with a local workstation is maintained via radio ethernet in order to monitor progress. The robots are also permitted to communicate with each other via radio ethernet. In addition to the common aspects, Borg Shark has redundant sensor rings (upper and lower), an E2T thermal sensor, a Sick planar laser ranger, and redundant sonar rings. Puffer Fish has the common components plus an actuator for inflating her skirt.

Borg Shark suffered one hardware failure and one software driver failure on site, and had one significant design flaw. Her power board had a circuit failure and was repaired on site by the team. This resulted in about seven hours of down time. A bug was discovered in the Nomadic software driver, robotd, for controlling the redundant sonar rings. When the rings, either polled singly or together, received many close "hits", the robotd driver would crash and send the robot into a slow circle. This bug had been seen intermittently before, but since there were no patches from the manufacturer and the driver is closed source, the decision was made to field the entry anyway. At the competition, Borg Shark crashed over 10 times in two hours. In many cases, the audience thought the frequent circling behavior was programmed.

3 Previous Work


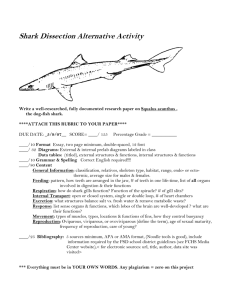

Figure 2 Layout of SFX architecture.

The SFX architecture has been used since 1990 for mobile robot applications emphasizing the role of sensing and sensor fusion in both behaviors and in more cognitive functions. Figure 2 shows the organization of the SFX architecture. Dashed boxes indicate areas of the architecture where sensing functionality corresponds to the organization of sensing in the mammalian brain. The main point of the architecture is that it is divided into two layers: reactive and deliberative. The reactive layer consists of reactive behaviors which use sensor data directly. All sensor processing, representation, and fusion is local to the behavior. The deliberative layer consists of a set of software agents which manage different activities (e.g., the Sensing Manager and Effector Manager), or create and operate over global data structures (e.g., the Cartographer). One of the many advantages of using managerial software agents is that they can serve as the glue for basic modules. For example, under SFX sensors and effectors are modular and do not need to know of the presence of others. The Sensor Manager establishes the needed correspondences, providing a "plug and play" framework.

The sensor allocation process should provide each behavior with sensing resources and maximize the quality (or preference) of sensing for all behaviors. Second, the allocation mechanism must operate in real time (on the order of 33 msec) to permit a smooth transition between behavioral regimes as behaviors are unpredictably instantiated and de-instantiated.

Figure 3 Layout of intelligent sensing within the SFX architecture.

5 Advances in Intelligent Sensing

Previous work (Gage and Murphy 1999) demonstrated in simulation that a variant of the MIN-CONFLICT scheduling algorithm is well-suited for this problem. The variant is called Min-Conflict with Happiness, or MCH, because it makes all behaviors "happy" by optimizing their preferences. For the AAAI competition, MCH was implemented in the Sensing and Effector Manager agents within the deliberative layer of SFX, and was used on both robots. It functioned correctly, handling up to five behaviors and five sensors through eight behavioral control regimes.

The resulting dynamic sensor and effector allocation process is as follows. When a behavior is instantiated, it makes a request to the Sensor Manager for one or more logical sensors and to the Effector Manager for an effector. The request to the Sensor Manager is in the form of an a priori list of logical sensors in a preference ordering. The requests, along with the current allocation, can be treated as a plan or schedule. If multiple behaviors request the same logical sensor, then the conflict over physical resources can be considered a failed plan. Min-Conflict uses plan repair, so it finds a set of alternative bindings. The Happiness modification executes Min-Conflict recursively to achieve a global optimization.
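The allocation process can be illustrated with a small sketch. This is not the MCH implementation of (Gage and Murphy 1999): it is a generic min-conflicts repair loop over preference-ordered request lists, followed by a simple greedy improvement pass that stands in for the Happiness step. The function names, the behaviors, and the logical-to-physical sensor table are all hypothetical.

```python
import random

# Hypothetical table: logical sensor -> physical sensor it occupies.
LOGICAL_SENSORS = {
    "range_from_laser": "laser",
    "range_from_sonar": "sonar",
    "face_from_color": "camera",
    "face_from_thermal": "thermal",
}

def conflicts(binding, behavior, choice):
    """Count other behaviors bound to the same physical sensor as `choice`."""
    phys = LOGICAL_SENSORS[choice]
    return sum(
        1 for other, ls in binding.items()
        if other != behavior and LOGICAL_SENSORS[ls] == phys
    )

def allocate(requests, max_steps=100, seed=0):
    """requests maps behavior -> logical sensors, most preferred first."""
    rng = random.Random(seed)
    # Start from every first preference; this may be a conflicting ("failed") plan.
    binding = {b: prefs[0] for b, prefs in requests.items()}
    for _ in range(max_steps):
        conflicted = [b for b in binding if conflicts(binding, b, binding[b])]
        if not conflicted:
            break
        b = rng.choice(conflicted)
        # Repair: fewest conflicts first, ties broken by preference order.
        binding[b] = min(
            requests[b],
            key=lambda ls: (conflicts(binding, b, ls), requests[b].index(ls)),
        )
    if any(conflicts(binding, b, binding[b]) for b in binding):
        return None  # no conflict-free binding found
    # Greedy stand-in for "Happiness": move up the preference list when free.
    improved = True
    while improved:
        improved = False
        for b, prefs in requests.items():
            for ls in prefs[:prefs.index(binding[b])]:
                if conflicts(binding, b, ls) == 0:
                    binding[b] = ls
                    improved = True
                    break
    return binding

requests = {
    "avoid": ["range_from_laser", "range_from_sonar"],
    "count_treats": ["range_from_laser"],
    "find_face": ["face_from_color", "face_from_thermal"],
}
print(allocate(requests))
```

In this example `avoid` falls back to sonar because `count_treats` has no alternative to the laser, while `find_face` keeps its first preference.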

The research focus of this entry was on intelligent sensing. It makes contributions to the management of sensing and multi-sensor integration at the behavioral level.

5.1 Intelligent Sensor Management

Sensor management is needed for robots with a large set of concurrent behaviors operating with a small set of general purpose sensors and effectors. This is a many-to-one relationship and suggests that contention for resources will be a problem. However, the small set of general purpose sensors is tailored for specific behaviors by processing algorithms. Due to the breadth of sensor algorithms, a robot may have several redundant (or logically equivalent) means of extracting the same percept. An example is extracting range: a robot might have vision-based algorithms using depth from motion, depth from stereo, and depth from texture, as well as laser and sonar. In SFX, equivalent (physical sensor, perceptual processing algorithm) modules are called logical sensors after (Henderson and Shilcrat 1984). The correspondence between behaviors and logical sensors can be one to many. This means that contention for physical sensing can often be resolved by finding equivalent logical sensors that use alternative physical sensors. The same issues apply to effectors.
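As a rough illustration of the logical sensor idea, the sketch below models a logical sensor as a (physical sensor, processing algorithm) pair tagged with the percept it produces, and looks up equivalents that avoid physical sensors already in use. The class, the catalog entries, and the algorithm labels are assumptions made for this example; they are not taken from the SFX code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LogicalSensor:
    percept: str    # what is extracted, e.g. "range"
    physical: str   # physical sensor the module needs
    algorithm: str  # processing applied to the raw data (hypothetical labels)

# Hypothetical catalog built from the range example in the text.
CATALOG = [
    LogicalSensor("range", "camera", "depth_from_motion"),
    LogicalSensor("range", "camera", "depth_from_stereo"),
    LogicalSensor("range", "camera", "depth_from_texture"),
    LogicalSensor("range", "laser", "raw_range"),
    LogicalSensor("range", "sonar", "raw_range"),
]

def equivalents(percept, busy=frozenset()):
    """Logical sensors yielding `percept` that avoid busy physical sensors."""
    return [
        ls for ls in CATALOG
        if ls.percept == percept and ls.physical not in busy
    ]

# If another behavior holds the camera, range can still come from laser or sonar.
for ls in equivalents("range", busy={"camera"}):
    print(ls.physical, ls.algorithm)
```

Keeping the percept separate from the physical sensor is what lets a manager swap one logical sensor for another without the requesting behavior noticing.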

5.2 Multi-sensor Integration

The USF entry also demonstrated multi-sensor integration, cueing, and focus-of-attention. Multi-sensor fusion was added at the behavioral level to overcome noise and unreliable sensing algorithms without adding computational complexity. Borg Shark used color and motion visual face detection and tracking algorithms. However, due to the complex environment of the competition, these algorithms would return a false positive on the order of 25% of the time. By adding the thermal sensor to the behavior (a face must have both a visual indicator and a thermal indicator), false positives were almost totally eliminated. Borg Shark also used sonars to reduce false positives from the laser in counting treat removal. The laser would intermittently give false returns, particularly in wide open spaces. This would result in a false treat removal count and a premature call for a refill. To combat this, sonar data from the front of Borg Shark was fused to suppress false returns.
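The face-detection rule described above amounts to a logical AND of two indicators. The sketch below shows that rule and, under the added assumption that the two sensors err independently, why the fused false-positive rate drops. The function names are hypothetical, and the thermal false-positive rate in the usage line is a made-up figure for illustration; only the 25% visual rate comes from the text.

```python
def is_face(visual_hit: bool, thermal_hit: bool) -> bool:
    """Report a face only when the visual and thermal indicators agree."""
    return visual_hit and thermal_hit

def fused_false_positive_rate(p_visual: float, p_thermal: float) -> float:
    """False-positive rate of the AND rule, assuming independent errors."""
    return p_visual * p_thermal

# 0.25 is the visual rate reported in the text; 0.05 is purely illustrative.
print(fused_false_positive_rate(0.25, 0.05))
```

The cost of the AND rule is that a true face is missed whenever either indicator fails, so it suits cases like this one where false alarms are the dominant problem.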

The mechanism for allocating sensing resources is complicated by two factors. First, there are qualitative differences in logical sensors. A set of equivalent logical sensors may produce the same percept but they are likely to have unique characteristics (update rates, environmental sensitivity). As a result, there is a preference ordering on the logical sensors for a particular behavior.


The entry also used cueing and focus of attention mechanisms to improve performance. Borg Shark had a module that used the location of the human face in the image to determine the likely region of the badge in the image. This was tested but not integrated into the final code due to time limitations. Puffer Fish also used cueing. For finding both Borg Shark and the refill station, she used dead reckoning to navigate near the goal and then triggered a vision-based recognition process.
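Puffer Fish’s cueing strategy can be sketched as a simple mode switch: navigate by dead reckoning while far from the reported goal, then hand off to visual recognition once close. The function name, the mode labels, and the coordinate convention are assumptions for illustration; the 1.0 ft default mirrors the handoff distance given in Section 6.1 for approaching Borg Shark.

```python
import math

HANDOFF_DISTANCE_FT = 1.0  # distance at which vision takes over (from Section 6.1)

def select_mode(own_xy, goal_xy, handoff=HANDOFF_DISTANCE_FT):
    """Pick the active behavior from the dead-reckoned distance to the goal."""
    distance = math.dist(own_xy, goal_xy)
    return "visual_search" if distance <= handoff else "move_to_goal"

print(select_mode((0.0, 0.0), (3.0, 4.0)))  # far from the goal
print(select_mode((0.0, 0.0), (0.5, 0.0)))  # close to the goal
```

Because dead reckoning drifts, the threshold only needs to bring the target into the camera’s view; the vision process then decides when the robot is actually close enough to stop.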

The robots interacted with humans in two ways. One was to maximize points for recognizing the presence of humans and what they were doing. Borg Shark needed to be able to detect faces and interpret the meaning of badges. An important lesson learned was that the sensing needed to accomplish this was simple. Also, Borg Shark needed to determine when humans had removed almost all the treats in order to call for refills.

A second form of interaction was how the robots called attention to themselves while protecting themselves from negative human involvement. The USF entry used costumes and interactive media to attract human interest. Negative human involvement was a concern for several reasons. Previous observations of audience interactions at the AAAI Mobile Robot Competition (Murphy 1997) have yielded numerous tales of humans poking at robots, attempting to herd them into dangerous situations, and even attempting to pick up robots and carry them off. Also, people tend to crowd around the robots, preventing navigation. The basis for the cooperative robot approach was that the Puffer Fish could bring a refill faster than Borg Shark could do it herself. If Borg Shark was blocked by people, that was acceptable since she was the primary interface to the audience, but if Puffer Fish was blocked, she had to have a mechanism to encourage the crowd to make room for her. Finally, Puffer Fish was intended to spend a large amount of time at the refill station in a "wait state" or sleeping mode. People were likely to a) ignore the robot, which would not be an interesting interaction, or b) come up to the robot and attempt to touch sensors and generally interact in negative ways.

6 Advances in Agent Interactions

The USF entry also explored interactions between agents: robots cooperating with other robots, and robots interacting with humans.

6.1 Cooperation

Both robots act as peers, communicating only at a symbolic, human-like level ("I need a refill"). A robot has to wait for the other to complete a particular task before it can continue with its mission. For example, Puffer Fish must sleep until called by Borg Shark. Borg Shark must stay in the same place until Puffer Fish arrives, otherwise Puffer Fish may not be able to find Borg Shark in a crowd. The waiting intervals are unpredictable.

The details of the cooperative interactions follow. When Borg Shark runs out of food, she calls a subscript. The subscript uses radio ethernet to call Puffer Fish for a refill at the deliberative (symbolic) level. Borg Shark transmits her approximate X,Y coordinates to Puffer Fish. The two robots use the same size occupancy grid with dimensions in absolute coordinates, but not an actively localized map. When Puffer Fish receives the radio message, she appears to wake up. She then heads for Shark using a move-to-goal behavior informed by dead reckoning. When Puffer Fish is within 1.0 feet, she shifts to visually looking for Shark’s distinctive blue body to determine when she is "close enough" (size, position in image) to stop.

To both present interesting personalities and attract attention, the robots were conceptualized as a team of colorful fish. Borg Shark’s personality has been described previously. Puffer Fish was given the role of the sullen assistant. Since she is in a sleep mode at the refill station, she was given literal "sleeping" behaviors to run at that time. She puffs up the inflatable skirt regularly to simulate breathing. Her sonars remain active, allowing her to turn her camera head away to protect it from people while she is sleeping and to complain that she wants to be left alone. Our observations indicated that the costume and breathing did attract people, and the "complaining" behavior kept them interested but also prevented them from touching the robot. While navigating, she puffs up when her path is blocked and she has to turn more than 180 degrees; this "amusing aggression" was intended to be an alternative to nudging people. Due to the numerous software problems, this effect was not observed at the competition enough to make any claims as to its effectiveness. As part of her personality and to indicate that she had recognized the Borg Shark, Puffer Fish also puffed up in the presence of the Borg Shark.

At that point, both robots are in a wait state. The human handler swaps trays, then communicates to Puffer Fish that the refill process is done by kicking her bumper. (Borg Shark’s bumper sensor was malfunctioning.) Puffer Fish uses radio ethernet to transmit to Borg Shark that the refill process is complete. Borg Shark resumes where she left off in her serving script. Puffer Fish returns to the refill area, again using dead reckoning to get in the vicinity and then color segmentation to recognize the USF banner.
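The refill handshake can be summarized as two small state machines exchanging symbolic messages. The sketch below is only a reading of the sequence described in this section; the class names, state labels, and message names are hypothetical, and the radio ethernet link is replaced by direct method calls.

```python
from enum import Enum, auto

class Msg(Enum):
    NEED_REFILL = auto()  # Shark -> Puffer, sent with Shark's approximate (x, y)
    REFILL_DONE = auto()  # Puffer -> Shark, sent after the handler taps the bumper

class Shark:
    def __init__(self):
        self.state = "serving"

    def out_of_food(self, xy):
        """Stop serving, hold position, and ask for a refill."""
        self.state = "waiting"
        return Msg.NEED_REFILL, xy

    def receive(self, msg):
        if msg is Msg.REFILL_DONE and self.state == "waiting":
            self.state = "serving"  # resume the serving script

class Puffer:
    def __init__(self):
        self.state = "sleeping"
        self.goal = None

    def receive(self, msg, xy=None):
        if msg is Msg.NEED_REFILL and self.state == "sleeping":
            self.state, self.goal = "going_to_shark", xy

    def arrived(self):
        if self.state == "going_to_shark":
            self.state = "waiting"

    def bumper_pressed(self):
        """The handler signals that the trays were swapped."""
        if self.state == "waiting":
            self.state = "returning_home"
            return Msg.REFILL_DONE
        return None  # bumper contact outside the wait state is ignored here

shark, puffer = Shark(), Puffer()
puffer.receive(*shark.out_of_food((12.0, 4.5)))
puffer.arrived()
shark.receive(puffer.bumper_pressed())
print(shark.state, puffer.state)
```

Each robot only advances on an explicit event, which matches the observation that the waiting intervals are unpredictable.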

6.2 Interactions with Humans


7 Results and Lessons Learned

8 Summary

The USF entry can be summarized in terms of the criteria for judging and the research contributions it made. The Borg Shark (Butler) robot was used for finding and approaching people. It fused visual motion detection with a thermal reading for reliability. The face detection algorithm did not execute while the robot was moving, since it used a simple image subtraction technique. Borg Shark was programmed to stop at multiple waypoints and to stop if the laser detected a treat removal event. Once Borg Shark had stopped and identified a face, it continued to track the region for five seconds, making eye contact. It would then scan the area for nearby humans.

The integrated performance of the robot team was poor. Due to time spent on the hardware failure and unsuccessfully trying to create a workaround for the robotd bug, the badge recognition software was tested separately but not integrated with the system. The blackboard system introduced several timing issues, requiring re-tuning after each addition of new functionality. Altogether, Borg Shark was only able to venture 10 feet before attracting a crowd, thereby causing the robotd driver to crash. Over a two hour period, Borg Shark only twice called for refills. The first time, Puffer Fish crashed due to an apparent timing error. The second time, Puffer Fish did navigate to Borg Shark and the entire refill process was carried out. Unfortunately, this occurred when (and because) there was no one around to see it.

Both Borg Shark and Puffer Fish used sound and body language to communicate their personalities and information about what they were doing. While Borg Shark was moving and serving, it used the onboard DECtalk voice synthesizer to attract attention to itself with bad puns. Puffer Fish exhibited a sullen personality, and used her inflatable skirt to evince sleeping (in and out breathing) and annoyance (puffing when blocked or when serving the fish).

Despite the poor integrated performance, the components of the software worked well and showed advances in robotics technology. The entry provided data showing that multi-sensor fusion significantly improves perception. The MCH dynamic resource allocation algorithm and Sensing and Effector Manager agents worked very well. The modularity and ability of the managerial agents to provide "plug and play" functionality was very clear as two components (shark-finding code and the radio ethernet call for a refill) were integrated into the architecture in less than 20 minutes. The impact of these changes on the behaviors and other aspects of the architecture was automatically propagated through the system. Finally, the cooperation between the robots at the symbolic level appears promising.

Some painful lessons were learned. Most significantly, there is a major flaw in the SFX implementation. The blackboard/whiteboard global data structure is a good idea, but our implementation executed too slowly and required synchronization between processes. We are now moving the architecture implementation to a CORBA system. Also, color-based face detection is problematic. Our use of the SCT color space solved color dependency on lighting, but not the problem of how to segment a face from a similarly colored wall. The logical sensor was eventually changed on site to one using motion detection, but it was discovered that about half of the people viewing the robot stood very still, confounding the results.

Borg Shark handled the task of getting refills in two ways. First, she used sensor fusion of the laser range reading and sonar to determine when a treat was removed (or at least the likelihood that a hand had passed into the mouth). Second, she used the Puffer Fish as a cooperative, heterogeneous agent who had sensing sufficient for navigation but not for interactions with humans and counting treat removal. The entry did not attempt to manipulate trays or food, but instead relied on a human handler to swap trays.

Software was written and tested independently for recognizing VIPs by their ribbons. This method searched an area in the image for blobs of ribbon colors represented in SCT space. The area of the image was identified using a focus-of-attention based on the location of the face in the image; the ribbons were assumed to be on the left of the face. The SCT appeared quite robust in test trials, but problems with the lower level software prevented this from being integrated into the competition version.

The research contributions of the USF entry were: an extension to the SFX architecture exhibiting intelligent sensor management, including dynamic sensor and effector allocation using the Min-Conflict with Happiness algorithm and multi-sensor integration, and an application of cooperative heterogeneous robots. As such, it furthered progress towards meeting the team’s ambitious technical objectives.

The introduction of personality and emotional state appears to be a very interesting research area. At first, personality was treated as a hack for points on clever human interaction functionality. However, the implementation and general approach are converging on a belief, desire, intention (BDI) framework. The major issue is how to integrate personality across the deliberative and reactive layers.


Acknowledgments

Aspects of this entry were made possible in part through NSF grant CDA-9617309 and NRL contract N00173-99P-1543. The team would like to thank Alan Schultz and Bill Adams from the Naval Research Laboratory for code and hardware loans, Dr. Bruce Marsh at USF for his assistance, and especially the organizers, sponsors, and judges of the AAAI competition.

References

Gage, A.; and Murphy, R. 1999. Allocating Sensor Resources to Multiple Behaviors. In Proceedings IROS 99. Forthcoming.

Hyams, J.; Powell, M.; and Murphy, R. 1999. Cooperative Navigation of Micro-Rovers using Color Segmentation. In Proceedings CIRA 99. Forthcoming.

Murphy, R. 1998. Coordination and Control of Sensing for Mobility using Action-Oriented Perception. In Artificial Intelligence for Mobile Robots, MIT Press.

Murphy, R. 1997. 1997 AAAI Mobile Robot Competition, guest editorial, Robot Competition Corner. In Robotics and Autonomous Systems, Nov., vol. 22, no. 1. Elsevier Press.

Henderson, T.; and Shilcrat, E. 1984. Logical Sensor Systems. In Journal of Robotic Systems, vol. 1, no. 2, pp. 169-193.
