A QUASI-NEWTON ADAPTIVE ALGORITHM FOR ESTIMATING GENERALIZED EIGENVECTORS


G. Mathew¹

V.U. Reddy¹

A. Paulraj²

¹Dept. of Electrical Communication Engineering

Indian Institute of Science, Bangalore 560012, India

e-mail: vur@ece.iisc.ernet.in

²Information Systems Laboratory, Dept. of Electrical Engineering

Stanford University, Stanford, CA 94305

Tel: 415-725-8307 Fax: 415-723-8473

ABSTRACT

We first introduce a constrained minimization formulation for the generalized symmetric eigenvalue problem. We then recast it as an unconstrained minimization problem by constructing an appropriate cost function. The minimizer of this cost function corresponds to the eigenvector associated with the minimum eigenvalue of the given symmetric matrix pencil, and all minimizers are global minimizers. We also present an inflation technique for obtaining multiple generalized eigenvectors of this pencil. Based on this asymptotic formulation, we derive a quasi-Newton adaptive algorithm for estimating these eigenvectors in the data case. The algorithm is highly modular and parallel, with a computational complexity of $O(N^2)$ multiplications, where $N$ is the problem size. Simulation results show fast convergence and good quality of the estimated eigenvectors.

1. INTRODUCTION

Consider the matrix pencil $(R_y, R_w)$, where $R_y$ and $R_w$ are $N \times N$ symmetric positive definite matrices. The task of computing an $N \times 1$ vector $\mathbf{x}$ and a scalar $\lambda$ such that

$$R_y \mathbf{x} = \lambda R_w \mathbf{x} \qquad (1)$$

is called the generalized symmetric eigenvalue problem. The solution vector $\mathbf{x}$ and the scalar $\lambda$ are called the generalized eigenvector and eigenvalue, respectively, of the pencil $(R_y, R_w)$. This pencil has $N$ positive eigenvalues, viz., $\lambda_1 \le \lambda_2 \le \cdots \le \lambda_N$, and corresponding real $R_w$-orthonormal eigenvectors $\mathbf{q}_i$, $i = 1, \ldots, N$ [9,1]:

$$R_y \mathbf{q}_i = \lambda_i R_w \mathbf{q}_i \quad \text{with} \quad \mathbf{q}_i^T R_w \mathbf{q}_j = \delta_{ij}, \qquad i, j \in \{1, \ldots, N\}$$

where $\delta_{ij}$ is the Kronecker delta function.

The generalized symmetric eigenvalue problem has extensive applications in harmonic retrieval and direction-of-arrival estimation. These applications arise when the observed data $y(n)$ is the sum of a desired signal $z(n)$ and a coloured noise of the form $\sigma w(n)$, where $\sigma$ is a scalar. For example, let $z(n)$ be the sum of $P$ real sinusoids and assume $w(n)$ to be uncorrelated with $z(n)$. Let $R_y$ and $R_w$ be the asymptotic covariance matrices (of size $N \times N$ with $N \ge 2P+1$) of $y(n)$ and $w(n)$, respectively, with $R_w$ assumed to be non-singular. Then the sinusoidal frequencies can be obtained from the eigenvector corresponding to the minimum eigenvalue of $(R_y, R_w)$.

Many researchers have addressed the problem (1) for given $R_y$ and $R_w$ and proposed methods for solving it. Moler and Stewart [2] proposed a QZ-algorithm and Kaufman [3] proposed an LZ-algorithm for iteratively solving (1). However, their methods do not exploit the structure in $R_y$ and $R_w$. On the other hand, the solution of (1) in the special case of symmetric $R_y$ and symmetric positive definite $R_w$ has been a subject of special interest, and several efficient approaches have been reported. Martin and Wilkinson [4] showed that, by using the Cholesky factorization of $R_w$, this problem can be reduced to the standard eigenvalue problem. Bunse-Gerstner [5] proposed an approach using congruence transformations for the simultaneous diagonalization of $R_y$ and $R_w$. Shougen and Shuqin [6] reported an algorithm that uses the Cholesky, QR and singular value decompositions when $R_y$ is also positive definite. This algorithm is stable, faster than the QZ-algorithm, and much superior to that of [4]. Auchmuty [1] proposed and analyzed certain cost functions that are minimized at the eigenvectors corresponding to some specific eigenvalues.

In adaptive signal processing applications, however, $R_y$ and $R_w$ correspond to asymptotic covariance matrices and must be estimated. In this paper, the data covariance matrix of $y(n)$, i.e., $R_y(n)$, is taken as the estimate of $R_y$. An estimate of $R_w$, say $\hat{R}_w$, is assumed to be available from some a priori measurements. The sample covariance matrix at the $n$th data instant is calculated as

$$R_y(n) = \frac{1}{n} \sum_{i=1}^{n} \mathbf{y}(i)\, \mathbf{y}^T(i) \qquad (2)$$

where $\mathbf{y}(n)$ is the data vector containing the most recent $N$ samples of $y(n)$. The problem we address in this paper is therefore as follows. Given the time series $y(n)$, develop an adaptive algorithm for estimating the first $D$ ($D \le N$) generalized $R_w$-orthogonal eigenvectors of the pencil $(R_y, R_w)$, using $(R_y(n), \hat{R}_w)$ as an estimate of $(R_y, R_w)$.

The paper is organized as follows. Section 2 translates the generalized eigenvalue problem into an unconstrained minimization problem. Section 3 derives a quasi-Newton adaptive algorithm. Section 4 presents computer simulations, and Section 5 concludes the paper.

1058-6393/95 \$4.00 © 1995 IEEE

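2. A MINIMIZATION FORMULATION FOR GENERALIZED SYMMETRIC EIGENVALUE PROBLEM

In this section, we recast the generalized symmetric eigenvalue problem into an unconstrained minimization framework. We first treat the eigenvector corresponding to the minimum eigenvalue (henceforth called the minimum eigenvector). We then extend the result to more than one eigenvector using an inflation approach. The principle used here generalizes the approach followed in [8].

2.1. Single Eigenvector Case

We first introduce a constrained minimization formulation for seeking the minimum eigenvector of $(R_y, R_w)$. Consider the following problem:

$$\min_{\mathbf{a}} \ \mathbf{a}^T R_y \mathbf{a} \quad \text{subject to} \quad \mathbf{a}^T R_w \mathbf{a} = 1. \qquad (3)$$

Let $R_w = L_w L_w^T$ be the Cholesky factorization of $R_w$. Then (3) can be rewritten as

$$\min_{\mathbf{b}} \ \mathbf{b}^T \tilde{R}_y \mathbf{b} \quad \text{subject to} \quad \mathbf{b}^T \mathbf{b} = 1 \qquad (4)$$

where $\tilde{R}_y = L_w^{-1} R_y L_w^{-T}$ and $\mathbf{b} = L_w^T \mathbf{a}$. The eigenvalues of $(R_y, R_w)$ and $\tilde{R}_y$ are identical. Moreover, the eigenvectors of $(R_y, R_w)$ are the eigenvectors of $\tilde{R}_y$ premultiplied by $L_w^{-T}$. It therefore follows from (4) that the solution of (3) is the minimum eigenvector of $(R_y, R_w)$.

Using the penalty function method [7], the constrained problem (3) can be translated into the unconstrained minimization of the cost function

$$J(\mathbf{a}, \mu) = \frac{\mathbf{a}^T R_y \mathbf{a}}{2} + \frac{\mu \left( \mathbf{a}^T R_w \mathbf{a} - 1 \right)^2}{4} \qquad (5)$$

where $\mu$ is a positive scalar. The results below establish the correspondence between the minimizer of $J(\mathbf{a}, \mu)$ and a minimum eigenvector of $(R_y, R_w)$.

The gradient vector $\mathbf{g}$ and Hessian matrix $H$ of $J(\mathbf{a}, \mu)$ with respect to $\mathbf{a}$ are

$$\mathbf{g}(\mathbf{a}, \mu) = R_y \mathbf{a} + \mu \left( \mathbf{a}^T R_w \mathbf{a} - 1 \right) R_w \mathbf{a} \qquad (6)$$

$$H(\mathbf{a}, \mu) = R_y + 2\mu (R_w \mathbf{a})(R_w \mathbf{a})^T + \mu \left( \mathbf{a}^T R_w \mathbf{a} - 1 \right) R_w. \qquad (7)$$

Using (6) and (7), we obtain the following results.

Theorem 1. A nonzero $\mathbf{a}^*$ is a stationary point of $J(\mathbf{a}, \mu)$ if and only if $\mathbf{a}^*$ is an eigenvector of $(R_y, R_w)$ corresponding to the eigenvalue $\lambda = \mu(1 - \mathbf{a}^{*T} R_w \mathbf{a}^*)$.

This result follows immediately from (6). Setting $\mathbf{g}(\mathbf{a}^*, \mu) = \mathbf{0}$ gives $R_y \mathbf{a}^* = \mu(1 - \mathbf{a}^{*T} R_w \mathbf{a}^*) R_w \mathbf{a}^*$.

Theorem 2. $\mathbf{a}^*$ is a global minimizer of $J(\mathbf{a}, \mu)$ if and only if $\mathbf{a}^*$ is a minimum eigenvector of $(R_y, R_w)$ with eigenvalue $\lambda_{\min} = \mu(1 - \mathbf{a}^{*T} R_w \mathbf{a}^*)$, where $\lambda_{\min} = \lambda_1$. Further, all minimizers are global minimizers.

Proof. If part: Since $\mathbf{a}^*$ is a minimum eigenvector with $\lambda_{\min} = \mu(1 - \mathbf{a}^{*T} R_w \mathbf{a}^*)$, Theorem 1 shows that $\mathbf{a}^*$ is a stationary point of $J(\mathbf{a}, \mu)$. Let $\mathbf{y} = \mathbf{a}^* + \mathbf{p}$, $\mathbf{p} \in \mathbb{R}^N$. Then it can be shown that

$$J(\mathbf{y}, \mu) - J(\mathbf{a}^*, \mu) = \frac{1}{2} \mathbf{p}^T (R_y - \lambda_{\min} R_w) \mathbf{p} + \frac{\mu}{4} \left[ 2\mathbf{p}^T R_w \mathbf{a}^* + \mathbf{p}^T R_w \mathbf{p} \right]^2 \ge 0 \qquad (8)$$

for all $\mathbf{p} \in \mathbb{R}^N$, since $R_y - \lambda_{\min} R_w$ is positive semi-definite. Thus, $\mathbf{a}^*$ is a global minimizer of $J(\mathbf{a}, \mu)$.

Only if part: Since $\mathbf{a}^*$ is a global minimizer, i) $\mathbf{a}^*$ is a stationary point of $J(\mathbf{a}, \mu)$ and ii) $H(\mathbf{a}^*, \mu)$ is positive semi-definite. Hence, from Theorem 1, we get $R_y \mathbf{a}^* = \lambda_m R_w \mathbf{a}^*$ with $\lambda_m = \mu(1 - \mathbf{a}^{*T} R_w \mathbf{a}^*)$, $\mathbf{a}^* = \beta \mathbf{q}_m$ and $\beta = \sqrt{1 - \lambda_m/\mu}$, for some $m \in \{1, \ldots, N\}$. Then, with $\mathbf{p} = \sum_{i=1}^{N} \alpha_i \mathbf{q}_i$, it can be shown that

$$\mathbf{p}^T H(\mathbf{a}^*, \mu)\, \mathbf{p} = \sum_{i=1}^{N} \left( \lambda_i - \lambda_m + 2\mu\beta^2 \delta_{mi} \right) \alpha_i^2. \qquad (9)$$

Since $H(\mathbf{a}^*, \mu)$ is positive semi-definite, (9) implies that $\lambda_i \ge \lambda_m$ for all $i = 1, \ldots, N$. That is, $\mathbf{a}^*$ is the minimum eigenvector of $(R_y, R_w)$. Moreover, $H(\mathbf{a}^*, \mu)$ is positive semi-definite even if $\mathbf{a}^*$ is only a local minimizer. It follows that all minimizers are global minimizers.

Corollary 1. The value of $\mu$ should be greater than $\lambda_{\min}$, since $\beta^2 = 1 - \lambda_{\min}/\mu$ must be positive.

Corollary 2. The eigenvectors of $(R_y, R_w)$ associated with the non-minimum eigenvalues correspond to saddle points of $J(\mathbf{a}, \mu)$. By (9), such a point has positive curvature along $\mathbf{q}_m$ and negative curvature along any $\mathbf{q}_i$ with $\lambda_i < \lambda_m$.

Clearly, the minimizer of $J(\mathbf{a}, \mu)$ is unique (except for the sign) only if the minimum eigenvalue of $(R_y, R_w)$ is simple. The main result of the above analysis is that all minimizers of $J(\mathbf{a}, \mu)$ are aligned with the minimum eigenvectors of $(R_y, R_w)$. Since all minimizers are global minimizers, any simple search technique will suffice to reach the correct solution. Further, since

$$\frac{\mathbf{y}^T R_y \mathbf{y}}{\mathbf{y}^T R_w \mathbf{y}} \ge \lambda_{\min} \quad \forall\, \mathbf{y} \in \mathbb{R}^N \setminus \{\mathbf{0}\}, \qquad (10)$$

the bound for $\mu$ in Corollary 1 can be satisfied by choosing $\mu$ using (10).

2.2. Inflation Technique for Seeking Multiple Eigenvectors

We now present an inflation technique. Combined with the result of the previous section, it is used to seek the first $D$ ($D \le N$) $R_w$-orthogonal eigenvectors of $(R_y, R_w)$. The basic approach is to divide the problem into $D$ subproblems, where the $k$th subproblem solves for the $k$th eigenvector of $(R_y, R_w)$.

Let $\mathbf{a}_i^*$, $i = 1, \ldots, k-1$ (with $2 \le k \le D$), be the $R_w$-orthogonal eigenvectors of $(R_y, R_w)$ corresponding to the eigenvalues $\lambda_i = \mu(1 - \mathbf{a}_i^{*T} R_w \mathbf{a}_i^*)$ for $i = 1, \ldots, k-1$.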
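Each subproblem relies on the single-eigenvector results of Section 2.1, and these can be checked numerically. The following is a minimal sketch assuming NumPy. It computes reference eigenpairs through the Cholesky reduction (4), minimizes $J(\mathbf{a}, \mu)$ of (5) with plain gradient steps based on (6), and compares the minimizer with Theorem 2. The following are illustrative assumptions, not choices from the paper:

- the test pencil, with $R_w$ a Kac-Murdock-Szegő matrix and $R_y = L_w\,\mathrm{diag}(1,2,3,4)\,L_w^T$, so that the generalized eigenvalues are known to be exactly 1, 2, 3, 4;
- the value $\mu = 10$;
- the step size and the iteration count;
- the helper names `kms`, `generalized_eigs_cholesky`, `grad_J` and `minimize_J`, which are hypothetical.

```python
import numpy as np

def kms(n, rho):
    """Kac-Murdock-Szego matrix rho**|i-j| (symmetric positive definite for |rho| < 1)."""
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :])

def generalized_eigs_cholesky(Ry, Rw):
    """Reference eigenpairs of (Ry, Rw) via the Cholesky reduction (4)."""
    Lw = np.linalg.cholesky(Rw)                 # Rw = Lw Lw^T
    M = np.linalg.solve(Lw, Ry)                 # Lw^{-1} Ry
    Ry_tilde = np.linalg.solve(Lw, M.T).T       # Lw^{-1} Ry Lw^{-T}
    lam, B = np.linalg.eigh(Ry_tilde)           # ascending eigenvalues
    Q = np.linalg.solve(Lw.T, B)                # q_i = Lw^{-T} b_i, so Q^T Rw Q = I
    return lam, Q

def grad_J(a, Ry, Rw, mu):
    """Gradient (6): g = Ry a + mu (a^T Rw a - 1) Rw a."""
    Rwa = Rw @ a
    return Ry @ a + mu * (a @ Rwa - 1.0) * Rwa

def minimize_J(Ry, Rw, mu, iters=40000):
    """Plain gradient descent on J(a, mu) of (5); the step size is a conservative guess."""
    eta = 1.0 / (np.linalg.norm(Ry, 2) + 6.0 * mu * np.linalg.norm(Rw, 2))
    a = np.ones(Ry.shape[0])
    a /= np.sqrt(a @ Rw @ a)                    # start on the constraint surface a^T Rw a = 1
    for _ in range(iters):
        a = a - eta * grad_J(a, Ry, Rw, mu)
    return a

# Hypothetical test pencil whose generalized eigenvalues are exactly 1, 2, 3, 4.
N, mu = 4, 10.0                                 # mu > lambda_min, as required by Corollary 1
Rw = kms(N, 0.5)
Lw = np.linalg.cholesky(Rw)
Ry = Lw @ np.diag([1.0, 2.0, 3.0, 4.0]) @ Lw.T

lam, Q = generalized_eigs_cholesky(Ry, Rw)
a_star = minimize_J(Ry, Rw, mu)
lam_min_est = mu * (1.0 - a_star @ Rw @ a_star)                  # Theorem 2
q1 = Q[:, 0]
cos_rw = abs(a_star @ Rw @ q1) / np.sqrt(a_star @ Rw @ a_star)   # q1^T Rw q1 = 1
print(lam[0], lam_min_est, cos_rw)
```

Plain gradient descent is used here only because Section 2.1 notes that any simple search technique suffices. It needs many more iterations than a Newton-type update would.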


-

replacing R,, Rw and a?, i = 1,.. .,k 1, with Ru(n),R,

and aj(n), i = 1,. ,k 1, respectively. Thus, we obtain

obtain the next &,-orthogonal eigenvector a;, consider the

coot function

.. -

k-1

-

irl

with h I ( n ) = R,(n).

Further, we approximate the Hessian by dropping the

last term in (16) so that the approximant is positive definite and a recursion can be obtained directly in terms of

its inverse. Sutwtituting this approximated Hessian along

with (15) in (14), we obtain the quasi-Newton adaptive dgorithm for estimating the eigenvectors of (R,, R,) (after

some manipulations) as

with RY1= R,. Eqn.(l2) represents the inflation step and

its implication is discussed below.

Let a: = pip,, p, > 0, i = 1,.. . , k 1. Post-multiplying

(11) with qJ,we get

-

+

ak(n) = ik(n - l)R;;(n)Ck(n

. -

a/3;)Rwe j = 1,. . ,k I

= XjRwQ

j = k, ...,N .

R u b e = (A,

ik(n-1)

That is, (RY,Rw)

and (RYh,RW)

have the same set of

eigenvectors but k 1 different eigenvalues. Now, choose Q

such that

=

p

1

+

-

Xk

<

+3/.:

j = 1 , ...,k-1.

R;m

- 1)

k=1, ...,D

(18)

1 ar(n 1)ck(n 1)

2p(Cr(n l)R,':(n)Ck(n 1))

k = l , ...,D

(19)

+

-

-

-

-

= R;;&)

- RFi-l (nlck-1 (n)cT-i (fl)RLi-l(n)

+ cT-l(n)RFi-l (nlck-1 (n)

(13)

1

Then, clearly, the minimum eigenvalue of (RYh,Rw)

with qk as the corresponding eigenvector. Hence, if a; is

a minimizer of Jk(ak,p, a), then it follows from Theorem 2

that a; is a minimum eigenvector of (RYr,Rw)

with eigenT

value x k = p(1-a; Rwat). By construction, a; is nothing

but the k t h R,srthogonal eigenvector of (R,, R,).

A practical lower bound for Q io p. Thus, by constructing

D cost functions Jk(ak,p , a),k = 1,.. . , D , as h (10) and

finding their minimizers, we get the first D Rw-orthogonal

eigenvectors of (R,, R,).

3.

R;i(n)

n22

I

(21)

C k ( n ) = Ei,ak(n).

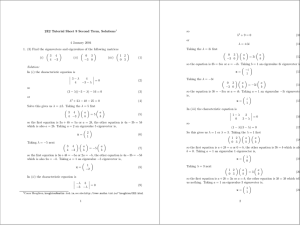

This algorithm can be implemented using a pipeline architecture as illustrated in Fig. 1. Here, krh eigenvector

is estimated by the kth unit and it goes through the following steps during n'" sample interval (time indices are

suppressed in the figure):

unit,

i) pass on q - i ( n - A) and ak(n - k) to (k +

ii) accept RLi-l(n-k+l) and ak-l(n-k+l) from (k-l)'h

unit and

iii) update q - i ( n - k ) and ak(n-t) to R ; : ( n - k + I ) and

ak(n k + 1).

Observe from (18)-(21) that the computations required

for updating the eigenvector estimates are identical for all

the units, thus making the algorithm both modular and

parallel. Consequently, the effective computational requirement is equal to that required for updating only one eigenvector estimate and it is about 5.5" multiplications per

iteration.

In this section, we combine the inflation technique of the

previous section with a quasi-Newton method and derive an adaptive algorithm for estimating the first D Rworthogonal eigenvectors of (R,,R,) in the data case.

Let ak(n),k = 1,.. . , D, be the estimates of these vectors

at nth adaptation instant. Newton algorithm for updating

ak(n 1) to ak(n) is of the form

-

-

- 1) - H;'(n - l)gk(n - 1)

(20)

where

A QUASI-NEWTON-CUM-INFLATION

BASED ADAPTIVE ALGORITHM

ak(n) = ak(n

k22

[R;'(n - 1)

n-1

- R ~ ' ( R- I)y(n)yT(n)Ry'(n- 1)

n - 1 + yT(n)R;'(n - l)y(n)

= R;'(n) =

n

(14)

where &(n-1) and gk(n-1) are the Hessian and gradient,

respectively, of Jk(ak,p,a ) evaluated at ?ik = ak(n 1).

Since R,, R, and a?, i = 1,. . . ,k - 1, are not available,

estimates of gk(n - 1) and Hk(n 1) can be obtained by

-

-
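The eigenvalue shift predicted by (13) for the inflation step (12), and the rank-one inverse recursion of the form (20), can both be checked on a pencil with known eigenstructure. This minimal sketch assumes NumPy and uses the following hypothetical test pencil and parameters:

- $R_w$ is a Kac-Murdock-Szegő matrix built by the hypothetical helper `kms`;
- $R_y = L_w\,\mathrm{diag}(1,2,3,4)\,L_w^T$, so the generalized eigenvalues are 1, 2, 3, 4;
- $\mu = 10$ and $\alpha = \mu$.

The sketch inflates the pencil for $k = 3$ using the exact vectors $\mathbf{a}_i^* = \beta_i \mathbf{q}_i$ with $\beta_i = \sqrt{1 - \lambda_i/\mu}$. Since $\lambda_j + \alpha\beta_j^2 = \mu$ when $\alpha = \mu$, the eigenvalues of $(R_{y_3}, R_w)$ should be 3, 4, 10, 10. The minimum is therefore $\lambda_3$.

```python
import numpy as np

def kms(n, rho):
    """Kac-Murdock-Szego matrix rho**|i-j| (symmetric positive definite for |rho| < 1)."""
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :])

N, k, mu = 4, 3, 10.0
alpha = mu                                   # practical lower bound for alpha (Section 2.2)
lam = np.array([1.0, 2.0, 3.0, 4.0])         # known generalized eigenvalues of the test pencil
Rw = kms(N, 0.5)
Lw = np.linalg.cholesky(Rw)
Ry = Lw @ np.diag(lam) @ Lw.T
Q = np.linalg.solve(Lw.T, np.eye(N))         # columns q_i = Lw^{-T} e_i, so Q^T Rw Q = I

# Exact minimizers a_i* = beta_i q_i of the first k-1 subproblems (Theorem 2).
beta = np.sqrt(1.0 - lam / mu)
C = Rw @ (Q[:, :k - 1] * beta[:k - 1])       # columns c_i = Rw a_i*

# Inflation step (12): Ry_k = Ry + alpha * sum_i (Rw a_i*)(Rw a_i*)^T
Ry_k = Ry + alpha * (C @ C.T)

# Eigenvalues of (Ry_k, Rw) through the Cholesky reduction (4).
M = np.linalg.solve(Lw, Ry_k)
lam_k = np.linalg.eigvalsh(np.linalg.solve(Lw, M.T).T)

# Ry_k^{-1} built from Ry^{-1} by rank-one updates of the form (20).
P = np.linalg.inv(Ry)
for i in range(k - 1):
    c = C[:, i]
    Pc = P @ c
    P = P - np.outer(Pc, Pc) / (1.0 / alpha + c @ Pc)

print(lam_k)   # should be close to [3, 4, 10, 10]
```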


We make the following remarks on the convergence of this algorithm. Following the steps of the convergence analysis of a similar algorithm in [8], we can show two properties:

i) the algorithm is locally convergent (asymptotically), and

ii) the undesired stationary points (i.e., the undesired eigenvectors) are unstable.

However, when $R_y(n) = R_y$ for all $n$, the algorithm can get stuck at an undesired eigenvector. Thus, in the data case, the proposed algorithm is globally convergent with probability one.
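A compact, non-pipelined simulation of (18)-(21) is sketched below, assuming NumPy. All units are updated sequentially within each sample interval, so unit $k$ uses $\mathbf{a}_{k-1}(n)$ from the same interval. These are the same equations as the pipelined form of Fig. 1, without its delays. The following are illustrative assumptions:

- The data are a hypothetical zero-mean Gaussian vector sequence with covariance $R_y = L_w\,\mathrm{diag}(1,2,3,4)\,L_w^T$, not the sinusoidal time series of Section 4.
- $\hat{R}_w = R_w$ is taken as exact.
- The parameters are $\mu = 10$, $\alpha = \mu$ and $D = 3$, with initialization $\mathbf{a}_k(0) = \mathbf{i}_k$ and $R_y^{-1}(1) = 100I$.
- The names `qn_inflation_eigs` and `kms` are hypothetical.

```python
import numpy as np

def kms(n, rho):
    """Kac-Murdock-Szego matrix rho**|i-j| (symmetric positive definite for |rho| < 1)."""
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :])

def qn_inflation_eigs(Y, Rw_hat, D, mu, alpha, r0=100.0):
    """Run (18)-(21) over data vectors Y[0] = y(1), Y[1] = y(2), ...

    Units are updated one after another in each interval (no pipeline delays).
    Returns the D estimates a_k(n) as columns, and R_y^{-1}(n).
    """
    N = Y.shape[1]
    A = np.eye(N)[:, :D].copy()                  # a_k(0) = k-th column of I
    P = r0 * np.eye(N)                           # R_y^{-1}(1) = 100 I
    for n in range(2, Y.shape[0] + 1):
        y = Y[n - 1]
        Py = P @ y
        P = (n / (n - 1)) * (P - np.outer(Py, Py) / (n - 1 + y @ Py))   # (21)
        Pk = P                                   # R_{y_1}^{-1}(n) = R_y^{-1}(n)
        for k in range(D):
            if k > 0:                            # (20) with c_{k-1}(n) = Rw_hat a_{k-1}(n)
                c_prev = Rw_hat @ A[:, k - 1]
                Pc = Pk @ c_prev
                Pk = Pk - np.outer(Pc, Pc) / (1.0 / alpha + c_prev @ Pc)
            c = Rw_hat @ A[:, k]                 # c_k(n-1)
            Pc = Pk @ c
            gamma = mu * (1.0 + A[:, k] @ c) / (1.0 + 2.0 * mu * (c @ Pc))   # (19)
            A[:, k] = gamma * Pc                 # (18)
    return A, P

# Hypothetical test data: i.i.d. Gaussian vectors with covariance Ry = Lw diag(lam) Lw^T.
rng = np.random.default_rng(1)
N, D, mu, n_samples = 4, 3, 10.0, 10000
lam = np.array([1.0, 2.0, 3.0, 4.0])
Rw = kms(N, 0.5)
Lw = np.linalg.cholesky(Rw)
Y = (Lw @ (np.sqrt(lam)[:, None] * rng.standard_normal((N, n_samples)))).T

A, P = qn_inflation_eigs(Y, Rw, D, mu, alpha=mu)
Q = np.linalg.solve(Lw.T, np.eye(N))                 # true eigenvectors, Q^T Rw Q = I
norms = np.sqrt(np.einsum("ik,ij,jk->k", A, Rw, A))  # R_w-norms of the estimates
cos_rw = np.abs(np.einsum("ik,ij,jk->k", A, Rw, Q[:, :D])) / norms
lam_est = mu * (1.0 - norms**2)                      # lambda_k = mu (1 - a_k^T Rw a_k)
print(cos_rw, lam_est)
```

Recursion (21) only needs some sequence of data vectors. I.i.d. vectors, which ignore the shift structure of $\mathbf{y}(n)$, are therefore enough to exercise the updates.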

4. SIMULATION RESULTS

In this section, we present computer simulation results that demonstrate the performance of the proposed adaptive algorithm.

We evaluate the quality of the estimated eigenvectors with the following performance measures. To see how close the estimated eigenvectors are to the true subspace, we use the projection error measure $E(n)$, defined as

$$E(n) = \left\| \left[ I - S (S^T S)^{-1} S^T \right] X(n) \right\|^2 \qquad (22)$$

where $X(n) = [\bar{\mathbf{x}}_1(n), \ldots, \bar{\mathbf{x}}_D(n)]$ is formed from the $D$ estimated eigenvectors and $S = [\mathbf{q}_1, \mathbf{q}_2, \ldots, \mathbf{q}_D]$. To measure the extent of $R_w$-orthogonality among the estimated eigenvectors, we define an orthogonality measure, $\mathrm{Orth}_{\max}(n)$, in (23). The smaller the values of $E(n)$ and $\mathrm{Orth}_{\max}(n)$, the better the quality of the estimated eigenvectors, and vice versa.

In the simulations, the signal $y(n)$ was generated as $y(n) = z(n) + \sigma w(n)$, where

$$z(n) = \rho \sin(2\pi(0.2)n + \theta_1) + \rho \sin(2\pi(0.24)n + \theta_2)$$

$$w(n) = \sin(2\pi(0.21)n + \theta_3) + \sin(2\pi(0.23)n + \theta_4) + \sin(2\pi(0.25)n + \theta_5) + u(n).$$

Here, $u(n)$ is a zero-mean, unit-variance white noise, and the $\theta_i$'s are the initial phases (assumed to be uniform in $[-\pi, \pi)$). $N$ and $D$ were fixed at 10 and 6, respectively, and $\sigma$ at 0.6325. The algorithm was initialized as $\mathbf{a}_k(0) = \mathbf{i}_k$, $k = 1, \ldots, D$, and $R_y^{-1}(1) = 100I$, where $\mathbf{i}_k$ is the $k$th column of $I$. The matrix $\hat{R}_w$ was taken to be the asymptotic covariance matrix of $w(n)$. 100 Monte Carlo simulations were performed.

Fig. 2 plots the values of $E(n)$, averaged over 100 trials, for $\rho = 5.00$ (high signal-to-noise ratio (SNR)) and $\rho = 1.58$ (low SNR). Observe that the algorithm converges quite fast, especially in the high-SNR case. Values of $\mathrm{Orth}_{\max}(n)$ (not shown here) are of the order of $10^{-\ldots}$ and $\ldots$ for $\rho = 5.00$ and $\rho = 1.58$, respectively. This implies that the implicit orthogonalization built into the algorithm through the inflation technique is very effective.

To see how this subspace quality is reflected in frequency estimation, we used the spectral estimator

$$S(f) = \ldots, \qquad 0 \le f \le 0.5 \qquad (24)$$

where $\ldots$ is the data length, $\mathbf{s} = [1, \exp(j2\pi f), \exp(j4\pi f), \ldots, \exp(j(N-1)2\pi f)]^T$, and the superscript $H$ denotes Hermitian transpose. The peaks of $S(f)$ are taken as the estimates of the sinusoidal frequencies in the desired signal $z(n)$. Fig. 3 shows the averaged results. The desired frequencies are well estimated even though the undesired signal frequencies are closely interlaced with them.

5. CONCLUSIONS

We have translated the problem of seeking the generalized eigenvector corresponding to the minimum eigenvalue of a symmetric positive definite matrix pencil $(R_y, R_w)$ into an unconstrained minimization problem. We then extended this formulation to more than one eigenvector using an inflation technique. Based on this asymptotic formulation, we derived a quasi-Newton adaptive algorithm for estimating these eigenvectors in the data case. Note that the algorithm requires knowledge of the noise covariance matrix only to within a scalar multiple.

REFERENCES

[1] G. Auchmuty, "Globally and Rapidly Convergent Algorithms for Symmetric Eigenproblems," SIAM J. Matrix Analysis and Applications, vol. 12, no. 4, pp. 690-706, Oct. 1991.

[2] C.B. Moler and G.W. Stewart, "An Algorithm for Generalized Matrix Eigenvalue Problems," SIAM J. Numerical Analysis, vol. 10, pp. 241-256, 1973.

[3] L. Kaufman, "The LZ-Algorithm to Solve the Generalized Eigenvalue Problem," SIAM J. Numerical Analysis, vol. 11, pp. 997-1024, 1974.

[4] R.S. Martin and J.H. Wilkinson, "Reduction of the Symmetric Eigenproblem Ax = λBx and Related Problems to Standard Form," Numerische Mathematik, vol. 11, pp. 99-110, 1968.

[5] A. Bunse-Gerstner, "An Algorithm for the Symmetric Generalized Eigenvalue Problem," Linear Algebra and Applications, vol. 58, pp. 43-68, 1984.

[6] W. Shougen and Z. Shuqin, "An Algorithm for Ax = λBx with Symmetric and Positive-Definite A and B," SIAM J. Matrix Analysis and Applications, vol. 12, no. 4, pp. 654-660, Oct. 1991.

[7] D.G. Luenberger, Linear and Non-linear Programming, pp. 366-369, Addison-Wesley, 1978.

[8] G. Mathew, V.U. Reddy and S. Dasgupta, "Adaptive Estimation of Eigensubspace," to appear in IEEE Trans. Signal Processing, Feb. 1995.

[9] B.N. Parlett, The Symmetric Eigenvalue Problem, Prentice-Hall, Englewood Cliffs, NJ, 1980.

Figure 1. Modular implementation of the proposed method (the units operate on data up to the $n$th, $(n-k+1)$th and $(n-D+1)$th instants).

Figure 2. Convergence performance of the proposed method (horizontal axis: sample no.).

Figure 3. Spectrum estimate using the estimated eigenvectors.