Quantifying the uncertainty of activation periods

Introduction

Background

Methodology

Application and Results

Conclusions & References

Quantifying the uncertainty of activation periods in fMRI data via changepoint analysis

Christopher F. H. Nam

Department of Statistics, University of Warwick

Joint work with John A. D. Aston and Adam M. Johansen

CRiSM Changepoint Workshop,

Tuesday 27th March 2012

A Biological Application

fMRI data from an Anxiety Induced Experiment

Figure: Design of Anxiety Induced Experiment

215 images/time points

24 subjects

Image from Lindquist et al. [2007]

A Biological Application

fMRI Data

Image from Lindquist et al. [2007]

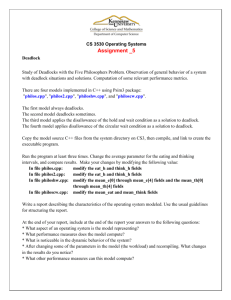

A Biological Application

Brain Regions of interest

Figure: (a) RMPFC time series (Cluster 6 data against time); (b) VC time series (Cluster 20 data against time).

A Biological Application

Questions of interest

The exact design of the experiment.

When might activations have occurred?

How many activations may have occurred?

How long are these activation periods?

Proposal

Aim

Propose a methodology to fully capture the uncertainty of changepoint characteristics given a sequence of data $y = y_{1:n} = (y_1, \dots, y_n)$.

Changepoint Probability (CPP): the probability of a changepoint to a regime occurring at time $t$.

Probability of $m$ changepoints occurring in the data.

We focus our attention on these quantities, although others are easily accessible.

Hidden Markov Models

Assume $y$ can be generated by a finite number of states.

Hidden Markov Models (HMMs) are used to model the time series $y$, with $\{X_t\}$ as our underlying Markov chain on a finite state space $\Omega_X$.

General Finite State Hidden Markov Model

Emission:

$$y_t \mid y_{1:t-1}, x_{1:t}, \theta \sim f(y_t \mid x_{t-r:t}, y_{1:t-1}, \theta)$$

Transition:

$$p(x_t \mid y_{1:t-1}, x_{-r+1:t-1}, \theta) = p(x_t \mid x_{t-1}, \theta)$$

Changepoints in this HMM framework.
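To make the emission and transition structure concrete, here is a minimal simulation sketch of the simplest special case: a two-state chain with Gaussian emissions that depend only on the current state ($r = 0$). The function name `simulate_hmm`, the uniform initial state and all parameter values are assumptions for illustration, not taken from the talk.

```python
import numpy as np

def simulate_hmm(P, means, sds, n, seed=0):
    """Simulate a finite-state HMM with Gaussian emissions (r = 0 case)."""
    rng = np.random.default_rng(seed)
    P = np.asarray(P, dtype=float)
    n_states = P.shape[0]
    x = np.empty(n, dtype=int)
    x[0] = rng.integers(n_states)          # assumption: uniform initial state
    for t in range(1, n):
        # Transition: x_t depends only on x_{t-1}
        x[t] = rng.choice(n_states, p=P[x[t - 1]])
    # Emission: y_t depends only on the current state x_t
    y = rng.normal(np.asarray(means)[x], np.asarray(sds)[x])
    return x, y

# Hypothetical parameter values, chosen only for illustration
P = [[0.95, 0.05], [0.10, 0.90]]
x, y = simulate_hmm(P, means=[0.0, 2.0], sds=[1.0, 1.0], n=215)
```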

Changepoints

Changepoint Definition

A changepoint to a regime is said to have occurred at time $t$ when a state persists for at least $k$ time periods in $\{X_t\}$:

$$x_{t-1} \neq x_t = x_{t+1} = \dots = x_{t+j}, \qquad \text{where } j \geq k - 1.$$

Define $\tau^{(k)}$ and $M^{(k)}$ to be the time and number of changepoints.
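The definition can be checked on a known state sequence. The sketch below scans a sequence and reports each time at which the state changes and the new state then persists for at least $k$ steps. The function name `changepoints` and the 0-based time index are assumptions for illustration.

```python
def changepoints(states, k):
    """Return 0-based times t where states[t-1] != states[t] and the
    new state persists for at least k consecutive time points."""
    n = len(states)
    cps = []
    t = 1
    while t < n:
        if states[t] != states[t - 1]:
            # Find the end of the run that starts at t
            run_end = t
            while run_end + 1 < n and states[run_end + 1] == states[t]:
                run_end += 1
            if run_end - t + 1 >= k:
                cps.append(t)
            t = run_end + 1
        else:
            t += 1
    return cps

x = [0, 0, 1, 0, 0, 1, 1, 1, 0, 0]
cps_k2 = changepoints(x, k=2)   # [3, 5, 8]
cps_k3 = changepoints(x, k=3)   # [5]
```

Here `len(cps_k2)` plays the role of $M^{(k)}$ for this particular sequence. The single-step visit to state 1 at index 2 is not counted because it does not persist for $k$ steps.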

Model Parameters

$\theta$ denotes our model parameter vector, which needs to be estimated.

Its components will vary, depending on the particular type of HMM used:

$P$, the $|\Omega_X| \times |\Omega_X|$ transition probability matrix for $\{X_t\}$.

Parameters for the emission distribution, which can depend on the underlying state: means $\mu_{X_t}$, variances $\sigma^2_{X_t}$, Poisson intensity rates $\lambda_{X_t}$, ...

Proposed Methodology

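Suppose we are interested in the changepoint probability $P(\tau^{(k)} = t \mid y)$.

$$P(\tau^{(k)} = t \mid y) = \int_\theta P(\tau^{(k)} = t, \theta \mid y)\, d\theta = \int_\theta P(\tau^{(k)} = t \mid \theta, y)\, p(\theta \mid y)\, d\theta$$

$$\approx \widehat{P}_N(\tau^{(k)} = t \mid y) = \sum_{i=1}^{N} W^i\, P(\tau^{(k)} = t \mid \theta^i, y)$$

by standard Monte Carlo results, where $\{W^i, \theta^i\}_{i=1}^N$ approximates $p(\theta \mid y)$ and $P(\tau^{(k)} = t \mid \theta^i, y)$ is the changepoint probability conditional on $\theta^i$.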

Proposed Methodology

1. Approximate $p(\theta \mid y)$ via SMC samplers to obtain a normalised weighted cloud of particles $\{W^i, \theta^i\}_{i=1}^N$.

2. For $i = 1, \dots, N$, compute the associated exact changepoint distribution conditioned on $\theta^i$, $P(\tau^{(k)} = t \mid \theta^i, y)$, via FMCI in an HMM context.

3. Take the weighted average of these exact changepoint distributions to obtain the changepoint distribution in light of parameter uncertainty, $P(\tau^{(k)} = t \mid y)$.

This combines recent work of Del Moral et al. [2006] and Aston et al. [2009].

Specific details can be found in Nam et al. [in press].
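The final averaging step is a weighted sum over particles. The sketch below assumes the per-particle changepoint probabilities have already been computed and stored row by row. The name `average_cpp` and the numbers are hypothetical and only illustrate the arithmetic.

```python
import numpy as np

def average_cpp(weights, cpp_given_theta):
    """Weighted average over particles of P(tau = t | theta_i, y).

    weights:          length-N particle weights W^i
    cpp_given_theta:  N x n array, row i holds P(tau = t | theta_i, y) over t
    """
    W = np.asarray(weights, dtype=float)
    W = W / W.sum()                          # make sure weights are normalised
    cpp = np.asarray(cpp_given_theta, dtype=float)
    return W @ cpp                           # sum_i W^i P(tau = t | theta_i, y)

# Hypothetical values for N = 3 particles and n = 3 time points
weights = [0.5, 0.3, 0.2]
cpp_given_theta = [[0.0, 0.8, 0.1],
                   [0.0, 0.6, 0.3],
                   [0.1, 0.1, 0.7]]
cpp = average_cpp(weights, cpp_given_theta)  # approximately [0.02, 0.60, 0.28]
```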

Approximating p(θ|y), the model parameter posterior

Sequential Monte Carlo (SMC) Samplers

$$\pi_b \propto p(\theta)\, l(y \mid \theta)^{\gamma_b}$$

where

$p(\theta)$ = prior of the model parameters

$l(y \mid \theta)$ = likelihood

$0 = \gamma_1 \le \gamma_2 \le \dots \le \gamma_B = 1$, a tempering schedule
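As a rough illustration of the tempered targets, the sketch below evaluates $\log \pi_b$ up to a constant for a toy model. The toy prior and likelihood (a normal mean with unit variance), the evenly spaced schedule and all function names are assumptions made for this example only.

```python
import numpy as np

def log_tempered_target(theta, gamma, log_prior, log_lik):
    """Unnormalised log pi_b(theta) = log p(theta) + gamma_b * log l(y | theta)."""
    return log_prior(theta) + gamma * log_lik(theta)

# Toy model (assumption): y_t ~ N(theta, 1), with prior theta ~ N(0, 1)
y = np.array([0.8, 1.1, 1.4])

def log_prior(theta):
    return -0.5 * theta ** 2 - 0.5 * np.log(2 * np.pi)

def log_lik(theta):
    return np.sum(-0.5 * (y - theta) ** 2 - 0.5 * np.log(2 * np.pi))

B = 5
gammas = np.linspace(0.0, 1.0, B)   # 0 = gamma_1 <= ... <= gamma_B = 1
values = [log_tempered_target(1.0, g, log_prior, log_lik) for g in gammas]
```

At $\gamma_1 = 0$ the target is the prior, and at $\gamma_B = 1$ it is the unnormalised posterior, so the sequence moves the particles gradually from one to the other.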

Exact distributions conditional on model parameters, $P(\tau^{(k)} \mid \theta^i, y)$

Exact distributions

Exact computation of $P(\tau^{(k)} = t \mid \theta^i, y)$.

Let $\tau_u^{(k)}$ denote the $u$th changepoint under our definition.

$$P(\tau^{(k)} = t \mid \theta^i, y) = \sum_{u = 1, 2, \dots} P(\tau_u^{(k)} = t \mid \theta^i, y).$$

$P(\tau_u^{(k)} = t \mid \theta^i, y) \equiv P(W(k, u) = t + k - 1 \mid \theta^i, y)$: re-express as a waiting time for runs.

The waiting time distribution for runs can be computed exactly via Finite Markov Chain Imbedding (FMCI).

Finite Markov Chain Imbedding (FMCI)

Waiting Time Distributions via FMCI

$P(W(k, u) \le t + k - 1 \mid \theta^i) = P(Z^{(u)}_{t+k-1} \in A \mid \theta^i)$, where $A$ denotes the set of absorption states in $\Omega_Z$.

Computed by standard Markov chain results.
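The imbedding idea can be illustrated in a much simpler setting than the one in the talk: the waiting time until the first run of $k$ consecutive 1s in a homogeneous two-state Markov chain. This is a simplified sketch under that assumption, not the authors' construction. The auxiliary chain tracks the current run length and is absorbed once the run reaches $k$, and the function name `run_waiting_time_cdf` is hypothetical.

```python
import numpy as np

def run_waiting_time_cdf(P, init, k, n):
    """P(W <= t), t = 1..n, where W is the first time a run of k
    consecutive 1s is completed in a two-state Markov chain (k >= 1).

    Auxiliary states: 0 (chain in state 0), j = 1..k-1 (chain in state 1
    with current run length j), k (absorbing: run of length k completed).
    """
    P = np.asarray(P, dtype=float)
    init = np.asarray(init, dtype=float)
    M = np.zeros((k + 1, k + 1))
    M[0, 0] = P[0, 0]
    M[0, 1] = P[0, 1]
    for j in range(1, k):
        M[j, 0] = P[1, 0]          # run broken
        M[j, j + 1] = P[1, 1]      # run extended by one
    M[k, k] = 1.0                  # absorbing state
    z = np.zeros(k + 1)
    z[0] = init[0]
    z[1] = init[1]
    cdf = np.empty(n)
    for t in range(n):
        if t > 0:
            z = z @ M              # standard Markov chain propagation
        cdf[t] = z[k]              # probability of having been absorbed
    return cdf

P = np.array([[0.9, 0.1], [0.2, 0.8]])
cdf = run_waiting_time_cdf(P, init=[1.0, 0.0], k=2, n=3)
```

With the chain started in state 0, a run of two 1s can first be completed at time 3, with probability $0.1 \times 0.8 = 0.08$, which is the last entry of `cdf`.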

FMCI in an HMM context

Inference on the underlying state sequence.

Compute posterior transition probabilities $P(X_t \mid X_{t-1}, y)$ to consider all possible state sequences.

This gives a sequence of time-dependent posterior transition probability matrices $\{\tilde{P}_1, \tilde{P}_2, \dots, \tilde{P}_n\}$.

This in turn results in a sequence of time-dependent posterior transition probability matrices $\{\tilde{M}_1, \tilde{M}_2, \dots, \tilde{M}_n\}$ for the auxiliary Markov chains $\{Z_t\}$.
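For a standard HMM in which the emission depends only on the current state, the posterior transition probabilities can be obtained from a backward recursion: $P(X_t = j \mid X_{t-1} = i, y) \propto P_{ij}\, f(y_t \mid j)\, \beta_t(j)$, with $\beta_t(j) = p(y_{t+1:n} \mid X_t = j)$. The sketch below assumes this simpler setting, strictly positive likelihood values, and a precomputed likelihood table `lik`. It returns the matrices for $t = 2, \dots, n$ only, and the function name is hypothetical.

```python
import numpy as np

def posterior_transition_matrices(P, lik):
    """Time-dependent posterior transition matrices for a standard HMM.

    P:    S x S transition matrix of the hidden chain
    lik:  n x S array with lik[t, j] = f(y_t | X_t = j) (0-based t)
    Returns an (n-1) x S x S array; entry [t-1, i, j] is
    P(X_t = j | X_{t-1} = i, y_{1:n}) for 0-based t = 1..n-1.
    """
    P = np.asarray(P, dtype=float)
    lik = np.asarray(lik, dtype=float)
    n, S = lik.shape
    beta = np.ones(S)                        # backward variable at the final time
    out = np.empty((n - 1, S, S))
    for t in range(n - 1, 0, -1):
        unnorm = P * (lik[t] * beta)         # (i, j): P_ij f(y_t | j) beta_t(j)
        row_sums = unnorm.sum(axis=1)        # proportional to beta_{t-1}(i)
        out[t - 1] = unnorm / row_sums[:, None]
        beta = row_sums / row_sums.sum()     # rescale to avoid underflow
    return out

P = np.array([[0.9, 0.1], [0.3, 0.7]])
lik = np.array([[0.5, 0.2], [0.1, 0.6], [0.4, 0.3]])   # hypothetical values
P_tilde = posterior_transition_matrices(P, lik)
```

Rescaling `beta` at each step does not change the result, because each row of the output is normalised to sum to one.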

Exact Distributions for Changepoint characteristics

Probability of the $u$th changepoint at a specific time:

$$P(\tau_u^{(k)} = t \mid \theta^i, y) = P(W(k, u) = t + k - 1 \mid \theta^i, y)$$

$$= P(W(k, u) \le t + k - 1 \mid \theta^i, y) - P(W(k, u) \le t + k - 2 \mid \theta^i, y)$$

Distribution of the number of changepoints:

$$P(M^{(k)} = u \mid \theta^i, y) = P(W(k, u) \le n \mid \theta^i, y) - P(W(k, u + 1) \le n \mid \theta^i, y)$$
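Both quantities are differences of waiting time CDFs, which the sketch below makes explicit. It assumes the CDFs $P(W(k, u) \le s)$ are already available in an array, treats $P(W(k, 0) \le n)$ as 1 and $P(W(k, U + 1) \le n)$ as 0 at the boundaries, and uses made-up numbers. The function name is hypothetical.

```python
import numpy as np

def changepoint_pmfs(cdf, k):
    """cdf[u-1, s-1] = P(W(k, u) <= s) for u = 1..U and s = 1..n.

    Returns
      pmf_tau: U x (n-k+1) array, column t-1 holds P(tau_u = t)
      pmf_m:   length U+1 array, entry u holds P(M = u), u = 0..U
    """
    cdf = np.asarray(cdf, dtype=float)
    # P(W(k, u) = s) by differencing the CDF in s
    pmf_w = np.diff(cdf, axis=1, prepend=0.0)
    # tau_u = t corresponds to W(k, u) = t + k - 1, so drop the first k-1 columns
    pmf_tau = pmf_w[:, k - 1:]
    # P(M = u) = P(W(k, u) <= n) - P(W(k, u+1) <= n), with boundary conventions
    at_n = np.concatenate(([1.0], cdf[:, -1], [0.0]))
    pmf_m = at_n[:-1] - at_n[1:]
    return pmf_tau, pmf_m

# Hypothetical CDFs for n = 4, k = 2 and at most U = 2 changepoints
cdf = [[0.0, 0.2, 0.5, 0.6],
       [0.0, 0.0, 0.0, 0.1]]
pmf_tau, pmf_m = changepoint_pmfs(cdf, k=2)
cpp = pmf_tau.sum(axis=0)   # P(tau = t) summed over u
```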

fMRI application

fMRI data

Figure: (c) RMPFC time series (Cluster 6 data against time); (d) VC time series (Cluster 20 data against time).

fMRI application

Preliminary I

An AR error process leads to an HMS-AR model.

Detrending within the model.

HMS-AR($r$) with detrending:

$$y_t - \mu_{x_t} - m_t' \beta = a_t$$

$$a_t = \phi_1 a_{t-1} + \dots + \phi_r a_{t-r} + \epsilon_t, \qquad \epsilon_t \sim N(0, \sigma^2)$$

Detrending parameters within the model → estimated within the SMC samplers.
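To see how the pieces of the HMS-AR($r$) equation fit together, the sketch below generates data from it for a given state sequence. The function name, the zero pre-sample values for the AR process, and the cubic trend coefficients are assumptions for illustration.

```python
import numpy as np

def simulate_hms_ar(x, mu, phi, sigma, trend=None, seed=0):
    """Simulate y_t = mu[x_t] + trend_t + a_t with AR(r) errors a_t."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x)
    n, r = len(x), len(phi)
    a = np.zeros(n + r)                    # assumption: r zero pre-sample values
    eps = rng.normal(0.0, sigma, size=n)
    for t in range(n):
        past = a[t:t + r][::-1]            # a_{t-1}, ..., a_{t-r}
        a[t + r] = np.dot(phi, past) + eps[t]
    trend = np.zeros(n) if trend is None else np.asarray(trend, dtype=float)
    return np.asarray(mu, dtype=float)[x] + trend + a[r:]

# Hypothetical example: polynomial trend of order 3, m_t' beta
n = 215
time = np.linspace(0.0, 1.0, n)
design = np.column_stack([time, time ** 2, time ** 3])   # rows are m_t'
beta = np.array([0.5, -1.0, 0.8])                         # made-up coefficients
x = np.r_[np.zeros(100, dtype=int), np.ones(60, dtype=int), np.zeros(55, dtype=int)]
y = simulate_hms_ar(x, mu=[0.0, 2.0], phi=[0.5], sigma=1.0, trend=design @ beta)
```

Setting `phi=[]` gives the order 0 model, in which the errors are independent.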

fMRI application

Preliminary II

Detrending types: no detrending, polynomial of order 3, Discrete Cosine Basis (DCB).

HMS-AR of order 0 and 1.

$\Omega_X = \{\text{“resting”}, \text{“active”}\}$

5 consecutive active states for an activated region ($k = 5$).

SMC samplers: $N = 500$ particles, $B = 100$ iterations.

Cluster 6, CPP HMS-AR(0)

Figure: (e) RMPFC time series (Cluster 6 data against time). CPP into and out of the activation regime, P(Into) and P(Out of), against time: (f) AR(0), no detrending; (g) AR(0), Poly detrending; (h) AR(0), DCB detrending.

Cluster 6, CPP, HMS-AR(1)

Figure: (i) RMPFC time series (Cluster 6 data against time). CPP into and out of the activation regime, P(Into) and P(Out of), against time: (j) AR(1), no detrending; (k) AR(1), Poly detrending; (l) AR(1), DCB detrending.

fMRI application

Cluster 6, Distribution of Number Activation Regimes

Figure: Distribution of the number of activation regimes (probability against number of activation regimes) under no detrending, Poly detrending and DCB detrending: (m) AR(0); (n) AR(1).

Cluster 20, CPP HMS-AR(0)

Figure: (o) VC time series (Cluster 20 data against time). CPP into and out of the activation regime, P(Into) and P(Out of), against time: (p) AR(0), no detrending; (q) AR(0), Poly detrending; (r) AR(0), DCB detrending.

Cluster 20, CPP, HMS-AR(1)

Figure: (s) VC time series (Cluster 20 data against time). CPP into and out of the activation regime, P(Into) and P(Out of), against time: (t) AR(1), no detrending; (u) AR(1), Poly detrending; (v) AR(1), DCB detrending.

fMRI application

Cluster 20, Distribution of Number Activation Regimes

Figure: Distribution of the number of activation regimes (probability against number of activation regimes) under no detrending, Poly detrending and DCB detrending: (w) AR(0); (x) AR(1).

Conclusions

Proposed a methodology for quantifying the uncertainty of changepoint characteristics in light of model parameter uncertainty.

Combines recent work on exact changepoint distributions via FMCI in HMMs.

Accounts for parameter uncertainty via SMC samplers.

Application to fMRI data.

Effects of error process assumptions and types of detrending.

References

John A. D. Aston, J. Y. Peng, and Donald E. K. Martin. Implied distributions in multiple change point problems. CRiSM Research Report 08-26, University of Warwick, 2009.

Pierre Del Moral, Arnaud Doucet, and Ajay Jasra. Sequential Monte Carlo samplers. Journal of the Royal Statistical Society Series B, 68(3):411–436, 2006.

Martin A. Lindquist, Christian Waugh, and Tor D. Wager. Modeling state-related fMRI activity using change-point theory. NeuroImage, 35(3):1125–1141, 2007. doi: 10.1016/j.neuroimage.2007.01.004.

C. F. H. Nam, J. A. D. Aston, and A. M. Johansen. Quantifying the uncertainty in change points. Journal of Time Series Analysis, (in press).

Additional Information

Implementation of SMC samplers I

Consider the model parameters for a 2-state Hamilton’s MS-AR($r$) model: $\theta = (P, \mu_1, \mu_2, \sigma^2, \phi_1, \dots, \phi_r)$.

Simple linear tempering schedule:

$$\gamma_b = \frac{b - 1}{B - 1}, \qquad b = 1, \dots, B.$$

Reparameterisation of variances to precisions, $\lambda = 1/\sigma^2$, and of AR parameters to Partial Autocorrelation Coefficients (PAC).
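One standard way to map partial autocorrelations back to AR coefficients is the Durbin-Levinson recursion, sketched below. Whether the talk's implementation uses exactly this mapping is an assumption, and the function name `pac_to_ar` is hypothetical. A common motivation for the reparameterisation is that any PAC values in $(-1, 1)$ correspond to a stationary AR process.

```python
import numpy as np

def pac_to_ar(pac):
    """Map partial autocorrelations (r_1, ..., r_p) to AR coefficients
    (phi_1, ..., phi_p) via the Durbin-Levinson recursion:
    phi_{k,k} = r_k and phi_{k,j} = phi_{k-1,j} - r_k * phi_{k-1,k-j}."""
    phi = np.array([])
    for r in np.asarray(pac, dtype=float):
        phi = np.concatenate((phi - r * phi[::-1], [r]))
    return phi

phi = pac_to_ar([0.5, 0.2])   # approximately [0.4, 0.2]

# Stationarity check: roots of 1 - phi_1 z - phi_2 z^2 lie outside the unit circle
roots = np.roots([-phi[1], -phi[0], 1.0])
```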

Additional Information

Implementation of SMC samplers II

Initialisation: assume independence between the components of $\theta$, and sample from Bayesian priors.

Mutation: Random Walk Metropolis Hastings (RWMH).

Selection: resample if $\mathrm{ESS} = \left\{ \sum_{i=1}^{N} (W_b^{(i)})^2 \right\}^{-1} < N/2$. Re-weight resampled particles to $1/N$.

Intermediary output: the weighted cloud of $N$ particles, $\{W_b^{(i)}, \theta_b^{(i)}\}_{i=1}^N$, approximates the distribution $\pi_b$.

Approximation of posterior:

$$p(\theta \mid y) \approx \{W_B^{(i)}, \theta_B^{(i)}\}_{i=1}^N = \{W^i, \theta^i\}_{i=1}^N$$
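The selection step can be sketched as follows. Multinomial resampling is used here as one simple choice, and the talk does not state which resampling scheme was used. The function names are hypothetical.

```python
import numpy as np

def effective_sample_size(W):
    """ESS = 1 / sum_i (W_i)^2 for normalised weights."""
    W = np.asarray(W, dtype=float)
    W = W / W.sum()
    return 1.0 / np.sum(W ** 2)

def resample_if_needed(W, theta, rng):
    """Resample particles when ESS < N/2 and reset their weights to 1/N."""
    W = np.asarray(W, dtype=float)
    W = W / W.sum()
    theta = np.asarray(theta)
    N = len(W)
    if effective_sample_size(W) < N / 2:
        idx = rng.choice(N, size=N, p=W)       # multinomial resampling (assumption)
        return np.full(N, 1.0 / N), theta[idx]
    return W, theta

rng = np.random.default_rng(0)
W_new, theta_new = resample_if_needed([0.7, 0.1, 0.1, 0.1],
                                      [1.0, 2.0, 3.0, 4.0], rng)
```

In this example the ESS is about 1.92, which is below $N/2 = 2$, so the particles are resampled and every weight becomes $1/4$.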

Additional Information

Waiting Time Distributions for Runs in HMM context

For $t = 1, \dots, n$:

$$\Psi_t = \Psi_{t-1} \tilde{M}_t$$

$$\psi_t^{(u)} \leftarrow \psi_t^{(u)} + \psi_{t-1}^{(u-1)} (\tilde{M}_t - I)\Upsilon, \qquad u = 2, \dots, \lfloor n/k \rfloor$$

$$P(W(k, u) \le t \mid \theta^i, y) = P(Z_t^{(u)} \in A) = \psi_t^{(u)} U(A)$$

where:

$\psi_t^{(u)}$ = $1 \times |\Omega_Z|$ vector storing the probabilities of the $u$th chain being in the corresponding state.

$\Psi_t$ = $\lfloor n/k \rfloor \times |\Omega_Z|$ matrix with $(\psi_t^{(1)}, \psi_t^{(2)}, \dots)$ as row vectors.

$I$ = $|\Omega_Z| \times |\Omega_Z|$ identity matrix.

$\Upsilon$ = $|\Omega_Z| \times |\Omega_Z|$ matrix connecting each absorption state to the corresponding continuation state.

$U(A)$ = $|\Omega_Z| \times 1$ vector with 1s in the locations of the absorption states and 0s elsewhere.