Detection of Structural Breaks and Outliers in Time Series Richard A. Davis :

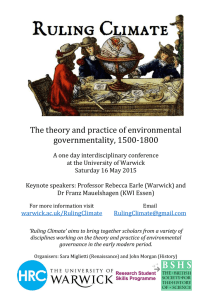

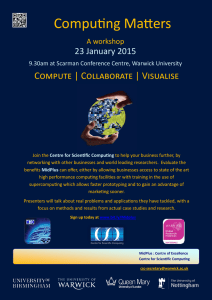

advertisement

Detection of Structural Breaks and Outliers

in Time Series

Richard A. Davis

Columbia University

Collaborators:

Implementation of AutoPARM/AutoPARMO:

Thomas Lee, UC Davis

Gabriel Rodriguez-Yam, Universida Autónoma Chapingo

Theory behind AutoPARM:

Stacey Hancock, UC Irvine (to be).

Yi-Ching Yao, Academia Sinica

General theory:

Chun Yip Yau, Chinese University of Hong Kong

Warwick Mar 2012

1

Illustrative Example

10

Can you spot any unusual observations?

-5

0

Y

5

IO

0

100

200

300

400

500

600

time

Warwick Mar 2012

2

Illustrative Example

10

Auto-PARMO=Auto-Piecewise AutoRegressive Modeling Outlier

Misclassified as

AO

-5

0

Y

5

5 AOs

0 IOs

0

100

200

300

400

500

600

time

1 = 51

Warwick Mar 2012

2 = 151

3 = 252

4

Illustrative Example

-5

0

Y

5

10

The series w/o any outlier effects—structural breaks easier to identify

0

100

200

300

400

500

600

time

Warwick Mar 2012

5

A Second Example

Weekly data: January 2004--December 2011.

0

5

Y

10

15

Any breaks? Outliers?

2004

2006

2008

time

Warwick Mar 2012

2010

2012

6

Game Plan

Introduction

Piecewise Models (AR, GARCH, SV, State-space)

Model selection using Minimum Description Length (MDL)

General principles

Application to AR models with breaks

Optimization using a Genetic Algorithm

Basics

Simulation Examples

Applications

Comments about theory for AutoPARM

Consistency (FLIL)

AutoPARM with Outliers

Warwick Mar 2012

7

Piecewise Models—examples

1. Piecewise AR model:

Yt j j1Yt 1 jp jYt p j j t , if j-1 t j ,

where 0 1 m m+1 n, and {t} is IID(0,1).

Goal: Estimate

m = number of change-points

j = location of jth break point

j = level in jth segment

pj = order of AR process in jth segment

( j1,, jp j ) = AR coefficients in jth segment

j = scale in jth segment

Warwick Mar 2012

8

Examples (cont)

2. Segmented GARCH model:

Yt t t ,

t2 j j1Yt 21 jp jYt 2 p j j1t21 jq j t2q j ,

if j-1 t j ,

where 0 1 m m+1 n + 1, and {t} is IID(0,1).

3. Segmented stochastic volatility model:

Yt t t ,

logt2 j j1 logt21 jp j logt2 p j j t ,

if j-1 t j .

4. Segmented state-space model (SVM a special case):

p( yt | t ,...,1, yt 1,..., y1 ) p( yt | t ) is specified

t j j1t 1 jp j t p j j t ,

Warwick Mar 2012

if j-1 t j .

9

Model Selection Using Minimum Description Length

Choose the model which maximizes the compression of the data or,

equivalently, select the model that minimizes the code length of the

data (i.e., amount of memory required to encode the data).

M = class of operating models for y = (y1, . . . , yn)

LF (y) = code length of y relative to F M

Typically, this term can be decomposed into two pieces (two-part code),

LF ( y) L(Fˆ|y) L(eˆ | Fˆ),

where

L(F̂|y) = code length of the fitted model for F

L(eˆ|F̂) = code length of the residuals based on the fitted model

Warwick Mar 2012

10

Model Selection Using Minimum Description Length (cont)

Code length

Using results from information theory and

likelihood

for τ1,…, τm

approximations, the minimum description length for the

Code length

, pm length

Penalty

Code

segmented AR model

with parameters (m, 1 , , m , p1 ,

1)

for m

for p1,…, pm+1

is Code length for Term

AR parameters

m 1

ψ1,…, ψ

mm+1

MDL(

, 1 , , m , p1 , , pm 1 ) log m m log n log p j

j 1

m 1

pj 2

j 1

2

m 1

nj

j 1

2

log n j

log(2ˆ 2j ),

-log(like)

th

Code

length

where nj is the number of observations in the j segment, and

residuals

ˆ 2 is the Yule-Walker estimate of the process for

variance

in the jth

j

segment.

Warwick Mar 2012

11

Minimizing the MDL

Select the best-fitting model for the data as the model that minimizes

the minimum description length with respect to the number of

change-points m, the change-point locations, 1, m, and the AR

orders p1,…, pm+1.

The dependence of the minimum description length on the

autoregressive coefficient parameter estimates is only through the

white noise estimates ˆ 2j , j = 1,…, m + 1.

Numerical minimization carried out using a genetic algorithm, which

mimics natural evolution (see Davis et al., 2006).

Warwick Mar 2012

12

Optimization Using Genetic Algorithm

Genetic Algorithm: Chromosome consists of n genes, each taking

the value of 1 (no break) or p (order of AR process). Use natural

selection to find a near optimal solution.

Map the break points with a chromosome c via

(m, (1, p1 ), (m , pm ))

c (1,, n ),

where

if no

no break

break point

point at

at tt,,

11,, if

tt

if break

break point

point at

at timett jj11 and

and AR

AR order is ppjj..

ppjj,, if

For example,

c = (2, -1, -1, -1, -1, 0, -1, -1, -1, -1, 0, -1, -1, -1, 3, -1, -1, -1, -1,-1)

t: 1

6

11

15

would correspond to a process as follows:

AR(2), t=1:5; AR(0), t=6:10; AR(0), t=11:14; AR(3), t=15:20

Warwick Mar 2012

15

Implementation of Genetic Algorithm—(cont)

Generation 0: Start with L (200) randomly generated chromosomes,

c1, . . . ,cL with associated MDL values, MDL(c1), . . . , MDL(cL).

Generation 1: A new child in the next generation is formed from the

chromosomes c1, . . . , cL of the previous generation as follows:

with probability c, crossover occurs.

two parent chromosomes ci and cj are selected at random with

probabilities proportional to the ranks of MDL(ci).

kth gene of child is k = ik w.p. ½ and jk w.p. ½

with probability c, mutation occurs.

a parent chromosome ci is selected

kth gene of child is k = ik w.p. 1 ; 1 w.p. 2;and p w.p. 12.

Warwick Mar 2012

16

Implementation of Genetic Algorithm—(cont)

Execution of GA: Run GA until convergence or until a maximum

number of generations has been reached. .

Various Strategies:

include the top ten chromosomes from last generation in next

generation.

use multiple islands, in which populations run independently,

and then allow migration after a fixed number of generations.

This implementation is amenable to parallel computing.

Warwick Mar 2012

17

Simulation Examples (cont)

2. Slowly varying AR(2) model:

Yt atYt 1 .81Yt 2 t if 1 t 1024

1024

a_t

0.4

-6

-4

0.6

-2

0.8

0

2

1.0

4

1.2

where at .8[1 0.5 cos(t / 1024)], and {t} ~ IID N(0,1).

1

200

Warwick Mar 2012

400

600

Time

800

1000

0

200

400

600

time

800

1000

21

2. Slowly varying AR(2) (cont)

GA results: 3 pieces, breaks at 1=293, 2=615. Total run time 27.45 secs

1- 292: .365 -0.753 1.149

293- 614: .821 -0.790 1.176

615-1024: 1.084 -0.760 0.960

Fitted model:

Fitted Model

0.0

0.0

0.1

0.1

0.2

0.2

0.3

0.3

0.4

0.4

0.5

0.5

True Model

0.0

0.2

0.4

0.6

Time

Warwick Mar 2012

0.8

1.0

0.0

0.2

0.4

0.6

Time

0.8

1.0

22

2. Slowly varying AR(2) (cont)

In the graph below right, we average the spectogram over the GA

fitted models generated from each of the 200 simulated realizations.

Average Model

0.3

0.2

0.0

0.0

0.1

0.1

0.2

Frequency

0.3

0.4

0.4

0.5

0.5

True Model

0.0

0.2

0.4

0.6

Time

Warwick Mar 2012

0.8

1.0

0.0

0.2

0.4

0.6

0.8

1.0

Time

23

Simulation Examples (cont)

3. Piecewise ARMA:

.9Ytt11 tt .7tt11, if 1 t 513,

Ytt .9Ytt11 tt,

if 513 t 769,

.7 ,

if 769 t 1024.

tt

tt11

-4

-2

0

2

4

where {t} ~ IID N(0,1).

1

Warwick Mar 2012

200

400

600

Time

800

1000

24

3. Piecewise ARMA (cont)

GA results: 3 pieces, breaks at 1=513, 2=769. Total run time 1.53 secs

Fitted model: AR orders 4, 1, 2

0.4

0.3

0.2

0.1

0.0

0.0

0.1

0.2

0.3

0.4

0.5

Fitted Model

0.5

True Model

0.0

0.2

0.4

0.6

Time

Warwick Mar 2012

0.8

1.0

0.0

0.2

0.4

0.6

0.8

1.0

Time

25

Example--Monthly Deaths & Serious Injuries, UK

1600

1200

1400

Counts

1800

2000

2200

Data: yt = number of monthly deaths and serious injuries in UK, Jan

`75 – Dec `84, (t = 1,…, 120)

Remark: Seat belt legislation introduced in Feb `83 (t = 99).

1976

1978

1980

1982

1984

Year

Warwick Mar 2012

26

Example -- Monthly Deaths & Serious Injuries, UK (cont)

Data: xt = number of monthly deaths and serious injuries in UK,

differenced at lag 12; Jan `75 – Dec `84, (t = 13,…, 120)

Remark: Seat belt legislation introduced in Feb `83 (t = 99).

-200

0

bf ((tt)) W

Wt,,

YYtt aa bf

t

0, if

if 11 tt 98

98,,

0,

f(t)

f(t) 1, if 98 t 120.

1, if 98 t 120.

XXtt YYtt YYtt1212

bg((tt)) N

Ntt

bg

-600

-400

Differenced Counts

200

Traditional regression

analysis:

1976

1978

1980

1982

1984

99 tt 110

110,,

11,, ifif 99

g(t)

g(t)

0, otherwise

otherwise..

0,

Year

Model: b373.4, {Nt}~AR(13).

Warwick Mar 2012

27

Example: Monthly Deaths & Serious Injuries, UK

0

-200

-600

-400

Differenced Counts

200

Data: Yt = number of monthly deaths and serious injuries in UK, Jan

`75 – Dec `84, (t = 1,…, 120)

Remark: Seat belt legislation introduced in Feb `83 (t = 99).

1976

1978

1980

1982

1984

Year

Results from GA: 3 pieces; time = 4.4secs

Piece 1: t=1,…, 98IID; Piece 2: (t=99,…108) IID; Piece 3: t=109,…,120 AR(1)

Warwick Mar 2012

28

Application to GARCH

Garch(1,1) model: Yt t t ,

t

t t

{tt} ~ IID(0,1)

t2t2 jj jjYtt2211 jjt2t211,

if j-j-11 t jj.

.4 .1Yt21 .5t21, if 1 t 501,

2

2

.4 .1Yt1 .6t1, if 501 t 1000.

-4

-2

0

2

2

t

1

200

400

600

800

1000

# of CPs

GA

%

AG

%

0

80.4

72.0

1

19.2

24.0

2

0.4

0.4

Time

CP estimate = 506

AG = Andreou and Ghysels (2002)

Warwick Mar 2012

33

Application to GARCH (cont)

More simulation results for Garch(1,1) : Yt t t ,

{t } ~ IID(0,1)

.05 .4Yt 21 .3t21, if 1 t 1,

2

2

1

.

00

.

3

Y

.

2

if 1 t 1000.

t 1

t 1,

2

t

Mean

SE

Med

Freq

50

GA

Berkes

52.62

71.40

11.70

12.40

50

71

.98

250

GA

Berkes

251.18

272.30

4.50

18.10

250

271

.99

500

GA

Berkes

501.22

516.40

4.76

54.70

502

538

.98

Berkes = Berkes, Gombay, Horvath, and Kokoszka (2004).

Warwick Mar 2012

35

Application to Parameter-Driven SS Models

State Space Model Setup:

Observation equation:

p(yt | t) exp{t yt b(t) + c(yt)}.

State equation: {t} follows the piecewise AR(1) model given by

t = k kt-1 kt , if k-1 t k ,

where 0 1 m n, and {t } ~ IID N(0,).

Parameters:

m = number of break points

k = location of break points

k = level in kth epoch

k = AR coefficients kth epoch

k = scale in kth epoch

Warwick Mar 2012

38

Application to Structural Breaks—(cont)

Estimation: For (m, 1, . . . , m fixed, calculate the approximate

likelihood evaluated at the “MLE”, i.e.,

| Gn |1/ 2

*

*

*

T *

T

T

ˆ ; yn )

exp{

y

1

{

b

(

)

c

(

y

)}

(

)

G

(

) / 2},

La (

n

n

n

1/ 2

K Gn

where

ˆ ( ˆ1,, ˆ m , ˆ 1,, ˆ m , ˆ 12 ,, ˆ 2m ) is the MLE.

Remark: The exact likelihood is given by the following formula

where

L(; y n ) La (; y n ) Era (),

Era () exp{R( n ; *)} pa ( n | y n ; ) dn .

It turns out that log( Era ( )) is nearly linear and can be approximated

by a linear function via importance sampling,

e( ) ~ e(ˆ AL ) e(ˆ AL )( ˆ AL )

Warwick Mar 2012

39

SV Process Example

1305

1295

-2

-1

1300

0

y

MDL

1

1310

2

3

1315

Model: Yt | t N(0,exp{t}), t = t-1+ t , {t}~IID N(0, )

1

500

1000

1500

2000

2500

time

3000

1

500

1000

1500

2000

2500

3000

Breaking Point

True model:

Yt | t ~ N(0, exp{t}), t = -.05 + .975t-1+ t , {t}~IID N(0, .05), t 750

Yt | t ~ N(0, exp{t }), t = -.25 +.900t-1+ t , {t}~IID N(0, .25), t > 750.

GA estimate 754, time 1053 secs

Warwick Mar 2012

41

SV Process Example

-530

-0.5

-525

-520

-515

MDL

0.0

y

-510

0.5

-505

-500

Model: Yt | t N(0,exp{t}), t = t-1+ t , {t}~IID N(0, )

1

100

200

300

time

400

500

1

100

200

300

400

500

Breaking Point

True model:

Yt | t ~ N(0, exp{t}), t = -.175 + .977t-1+ t , {t}~IID N(0, .1810), t 250

Yt | t ~ N(0, exp{t }), t = -.010 +.996t-1+ t , {t}~IID N(0, .0089), t > 250.

GA estimate 251, time 269s

Warwick Mar 2012

42

SV Process Example-(cont)

True model:

Yt | t ~ N(0, exp{at}), t = -.175 + .977t-1+ et , {t}~IID N(0, .1810), t 250

Yt | t N(0, exp{t }), t = -.010 t-1+ t , {t}~IID N(0, t > 250.

Fitted model based on no structural break:

1.0

Yt | t N(0, exp{t}), t = -.0645 + t-1+ t , {t}~IID N(0,

0.5

simulated series

0.0

y

0.0

-0.5

-0.5

y

0.5

original series

1

100

200

300

time

Warwick Mar 2012

400

500

1

100

200

300

400

500

time

43

SV Process Example-(cont)

Fitted model based on no structural break:

-468

-466

simulated series

-472

-478

-0.5

-476

-474

0.0

y

MDL

-470

0.5

1.0

Yt | t N(0, exp{t}), t = -.0645 + t-1+ t , {t}~IID N(0,

1

100

200

300

time

Warwick Mar 2012

400

500

1

100

200

300

400

500

Breaking Point

44

Comments on the theory for AutoPARM

Consistency of

:

Suppose the true number of change points is m and let

1=1/n, . . . , m=m/n

be the relative (true) change-points. Then AutoPARM estimates

of

and

satisfy:

→

,

→

.

Remarks: The proof is a “cool” application of Strassen’s function

law of the iterated logarithm.

Warwick Mar 2012

45

Comments on the theory for AutoPARM (cont)

Extension: If the process in the jth segment is stationary with ACVF

j(h) and with j(h) j-1(h) for some lag h{0,1,…,p*}. Then the

estimator which minimizes MDL by fitting AR(p*) models in each

segment produces weakly consistent estimators of m = true number of

change-points.

Remark: This result can be applied to detecting change-points in

piecewise GARCH models. Here one can take p*=0 so that we are

only talking about changes in variance.

Further extensions: This result has been extended to include a more

general framework by Davis and Yau (2012). Base models include

ARMA and GARCH—results address some identifiability issues

(Andrews and Cheng (2011)).

Warwick Mar 2012

46

Comments on the theory for AutoPARM (cont)

Theorem: Suppose

is the AR(1) process

,

1, and the

where

is IID(0,1) with finite 5th moment and pdf

satisfying

0 for all

•

•

•

liminf

→

e

0, for some 0.

Then, MDL is NOT consistent using Yule-Walker estimation. That

is, there is a positive probability that a change-point model will be

selected as

Warwick Mar 2012

→ ∞.

47

Modeling Framework for Outlier Detection

,…,

Data:

Model parameters

Segments:

m = number of change-points

j = start of jth segment (0 1 m m+1 n)

,

Intra-segment parameters (jth segment

,

Integer valued:

•

•

,

∗

,

,…,

∗

∗

,

,

,

,…,

,

locationofadditiveoutliers

,…,

∗

∗

,

locationofinnovationaloutliers

,

Warwick Mar 2012

,

order of AR process

∗

,

•

∗

,…,

,

55

Modeling Framework (cont)

,…,

Data:

,

Intra-segment parameters (jth segment

,

Real valued:

,

•

∗

•

,

≫

,…,

,

,

,…,

,

,

,

∗

,

AR model parameters

,

is AO variance

≫ 1is proportional increase in innovation variance.

•

Intra-segment AR process with IO:

,

where

,

,

⋯

,

,

,

is an independent sequence with

Var

Warwick Mar 2012

,

,

,if ∈ ,

1, if ∉ .

56

Modeling Framework (cont)

Data:

,…,

Intra-segment AR process with IO:

,

where

,

,

⋯

,

,

,

,

is an independent sequence with

Var

,

,if ∈ ,

1, if ∉ .

Intra-segment AR process with IO and AO:

,

,

,

where the

Warwick Mar 2012

,

,

,if ∈

ift ∉

are independent and N(0,

∗

,

,

distributed.

57

Modeling Framework (cont)

Remarks:

•

If the

,

and the

,

are Gaussian, then model (for fixed

integer parameter ) can be expressed as a Gaussian linear

state-space model.

•

Take advantage of state-space model and Kalman recursions

for likelihood calculation.

Warwick Mar 2012

58

Model Selection Using Minimum Description Length

Choose the model which maximizes the compression of the data or,

equivalently, select the model that minimizes the code length of the

data (i.e., amount of memory required to encode the data).

M = class of operating models for y = (y1, . . . , yn)

LF (y) = code length of y relative to F M

Typically, this term can be decomposed into two pieces (two-part code of

Rissanen),

LF ( y) L(Fˆ|y) L(eˆ | Fˆ),

where

L(F̂|y) = code length of the fitted model for F

L(eˆ|F̂) = code length of the residuals based on the fitted model

Warwick Mar 2012

59

Model Selection Using Minimum Description Length

Take

M = class of operating models for y = (y1, . . . , yn) that

includes piecewise AR models with AO’s and IO’s.

Let

,…,

and

,…,

valued parameter vectors, where

, ∗ , , ∗, , … ,

,

Then

L

∑

Warwick Mar 2012

log

log

,

,…,

,

be the integer- and real∗

,

∗

,

,

,

∗

,

,…,

,

.

,

1 log

log

∗

log

∗

log

1/2

3 log

60

Model Selection Using Minimum Description Length

Moreover, using results from information theory, Rissanen argues

L ̂|

where

∑

~

;

log

;

) is the likelihood evaluated at the MLE of the jth

segment; so that

, , ,

,

∗

, ,

1 log

log

∗

log

log

∗

log

m

log

1/2

3 log

log 2 (2

ˆ; 2j ) n j )

(log

j 1

Warwick Mar 2012

61

Model Selection Using Minimum Description Length

Computing the likelihood

in a segment given

;

: Start by computing likelihood

and locations of additive and innovational

outliers.

;

,

; ,

,

,

,

,

|

Warwick Mar 2012

62

Model Selection Using Minimum Description Length

;

Computing the likelihood

;

•

~

:

,

;

|

,

multivariate normal

•

,…,

; ,

2

⋯

exp

∉

2

exp

⋯

∈

Remark: Use Kalman recursions to compute likelihood.

Warwick Mar 2012

63

Optimization Using Genetic Algorithm

Genetic Algorithm: Chromosome consists of n genes, each taking

the value of -3 (AO), -2 (AI), 1 (no break), p (order of AR process).

Use natural selection to find a near optimal solution.

Map the break points with a chromosome c via

, , , ,

↔

,…,

where

3, ifAOoccursat ,

2, ifIOoccursat ,

1, ifnobreakoccursat ,

, ifbreakoccursattime andARmodelis .

For example,

c = (2, -1, -3, -1, -1, 0, -1, -1, -1, -1, 0, -1, -1, -1, 3, -1, -1, -2, -1,-1)

t: 1

6

11

15

would correspond to a process with AO at t=3, IO at t=18.

AR(2), t=1:5; AR(0), t=6:10; AR(0), t=11:14; AR(3), t=15:20

Warwick Mar 2012

64

Robust AutoPARM-IO contaminated AR model

Single AR model with

single IO at ?

MDL’s w/ top 5

residuals indicated

Warwick Mar 2012

66

Robust AutoPARM-AO contaminated AR model

Single AR model with

single AO at 101

MDL’s w/ top 5

residuals indicated

Warwick Mar 2012

67

Accelerating Robust AutoPARM

Remark: Observe that large reductions in MDL due to outliers seem

to occur at locations of largest residuals. Use this info to facilitate the

search optimizer.

Search modification: for simplicity, assume one AR(p) signal

,…,

1. Select locations

2. Let

and set

(resp

be an AR(p) model with AO(IO) at time t

,

3. Define

4. Set

argmin

, ∈

;

∈

, ,

argmin

5. Continue to obtain

arg min

Warwick Mar 2012

of k largest absolute residuals.

,

∈

,…,

location of outlier

, ∈

;

location of outlier

and define

0

68

Accelerating Robust AutoPARM

6. Only allow time locations , … , for outliers in the current

generation of the genetic algorithm.

7. After producing the next generation, select largest residuals again

and repeat.

Remark: The outlier selection step has elements that are similar in

spirit to one proposed by Bianco et al. (2001) and Sánchez and Peña

(2003). Our method is not as likely to stop prematurely.

Warwick Mar 2012

69

Accelerating Robust AutoPARM

Example: AR(1) model

.8

, with AO’s at {16,32,48,80} and no IOs.

~

0,1

Residuals selected by

minimizing MDL

Correctly

One

wrong

identified

classification

locations

Minimum

MDL

10 largest

residuals

Warwick Mar 2012

70

Robust AutoPARM-sample results

DGP1: one-piece AR model; no outliers

DGP2: one-piece AR model; 5 innovation outliers

DGP3: one-piece AR model; 4 additive outliers

Warwick Mar 2012

71

Warwick Mar 2012

72

Robust AutoPARM-sample results

Three-piece AR model:

.8

1.69

1.32

3 , if1

.81

, if128

.81

3 , if192

128,

t 192,

t 256.

DGP4: three-piece AR model; no outliers

DGP5: three-piece AR model; 3 innovation outliers at 16, 32, 48.

DGP6: three-piece AR model; 3 additive outliers at 16, 32, 48.

Warwick Mar 2012

73

Robust AutoPARM-sample results

-5

0

5

Y

10

15

20

DGP5: three-piece AR model; 3 innovation outliers at 16, 32, 48.

0

50

Results from AutoPARMO:

100

150

200

250

time

Breaks: 124 and 194; 3 IA outliers: 16, 32, 48

Results from AutoPARM(no outliers): Breaks at 52, 126, 294

arima.rob: AO at 16, 17,32, 48, 49

Warwick Mar 2012

74

Robust AutoPARM-sample results

DGP4: three-piece AR model; no outliers

DGP5: three-piece AR model; 3 innovation outliers

DGP6: three-piece AR model; 3 additive outliers

Warwick Mar 2012

75

Robust AutoPARM-sample results

DGP5: two-piece AR model; 3 innovation outliers

DGP6: two-piece AR model; 3 additive outliers

Warwick Mar 2012

76

Application to Google Trends Example: Tea Party

Weekly data: January 2004--December 2011.

15

Taxes due

9-12 taxpayer

march on

Washington DC

Y

10

3 weeks after

Obama takes

office

5

Midterm

elections

0

Texas Tea

Party straw

poll

2004

2006

Results from AutoPARMO:

2008

2010

2012

time

Ron Paul

raised

$4.4M on

internet in

1 day

Break: Feb 15, 2009 (3 weeks after Obama took office)

AO outliers: 11-5-06(election week); 9-2-07; 12-16-07;

4-12-09 (taxes due); 4-11-10 (taxes); 9-12-10; 10-31-10 (election)

Warwick Mar 2012

79

Application to Google Trends Example: Tea Party

Refinement: January 2004--December 2011.

15

Taxes due

9-12 taxpayer

march on

Washington DC

10

3 weeks after

Obama takes

office

Midterm

elections

0

Texas Tea

Party straw

poll

5

Y

Tea Party

protests

2004

2006

2008

2010

time

Results from AutoPARMO on the two segments:

2012

Ron Paul

raised

$4.4M on

internet in

1 day

AO outliers (7): 149, 192, 207, 276, 287, 328, 357

IO outliers (2): 325, 350

Warwick Mar 2012

80

Summary Remarks

1. MDL appears to be a good criterion for detecting structural breaks.

2. Optimization using a genetic algorithm is well suited to find a near

optimal value of MDL.

3. While estimating structural breaks for nonlinear time series models is

more challenging, this paradigm of using MDL together with GA holds

promise for break detection in parameter-driven models and other

nonlinear models.

4. Extensions to outlier (both innovation and additive) detection are

currently under study. Results look promising—new implementation is

almost ready.

Warwick Mar 2012

81

Running the Program AutoPARM

• Download the zip file AutoPARM (only runs on PC) from

www.stat.columbia.edu/~rdavis/AutoPARM/AutoPARM.zip

• Extract the zip file (folder called AutoPARM) will be created.

• Open a command prompt window

(In run, type the command cmd)

• Navigate to the AutoPARM folder

• AutoPARM needs an input file (first several lines of example file

eqn5.in are:

• DATA:

eqn5.dat

[name of file containing input data]

• OUTPUT:

eqn5.out

[name of file to export results]

• Execute program by typing on command line

AutoPARM eqn5.in

• Results are written to file named eqn5.out

• More details about running the program can be found in AutoPARM.pdf

Warwick Mar 2012

82

82