From: Proceedings of the Eleventh International FLAIRS Conference. Copyright © 1998, AAAI (www.aaai.org). All rights reserved.

Prototype Selection from Homogeneous Subsets by a Monte Carlo Sampling

Marc Sebban

Équipe RAPID

Reconnaissance, Apprentissage et Perception Intelligente à partir de Données

UFR Sciences, Département de Mathématiques et Informatique,

Université des Antilles et de la Guyane, 97159 Pointe-à-Pitre (France)

marc.sebban@univ-ag.fr

Abstract

In order to reduce the computational and storage costs of learning methods, we present a prototype selection algorithm. This approach uses information contained in a connected neighborhood graph. It determines the number of homogeneous subsets in the $\mathbb{R}^p$ space, and uses it to fix the number of prototypes in advance. Once this number is determined, we identify prototypes by applying a stratified Monte Carlo sampling algorithm. We present an application of our algorithm on a simulated example, comparing results with those obtained with other methods.

Introduction

Selection of relevant prototype subsets has interested numerous researchers in pattern recognition for a long time. For example, non-parametric classification methods such as k-nearest-neighbors (Hart 1968), Parzen's windows (Parzen 1962), or more generally methods based on geometrical models (Sebban 1996), (Preparata and Shamos 1985), have the reputation of having high computational and storage costs (Jain 1997), (Watson 1981), (Devijver 1982). Indeed, determining the class of a new instance often requires distance calculations with all points stored in memory. Nevertheless, the simplicity of these approaches encourages researchers in pattern recognition to build strategies that reduce the size of the learning sample while keeping classification accuracy (Hart 1968), (Gates 1972), (Ichino and Sklansky 1985) and (Skalak 1994).

Intuitively, we think that a small number of prototypes can achieve performance comparable to (and perhaps higher than) that obtained with the whole sample. We justify this idea with two reasons:

1. Some noise or repetitions in the data could be deleted.

2. Each prototype can be viewed as a supplementary degree of freedom. If we reduce the number of prototypes, we can sometimes avoid overfitting situations.


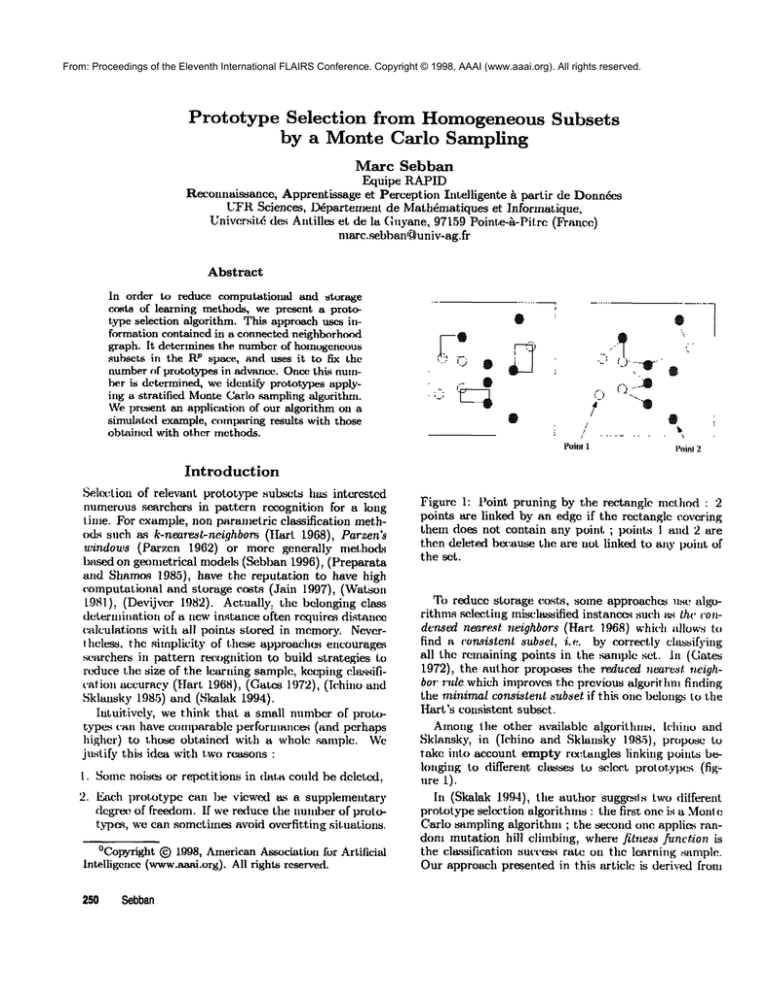

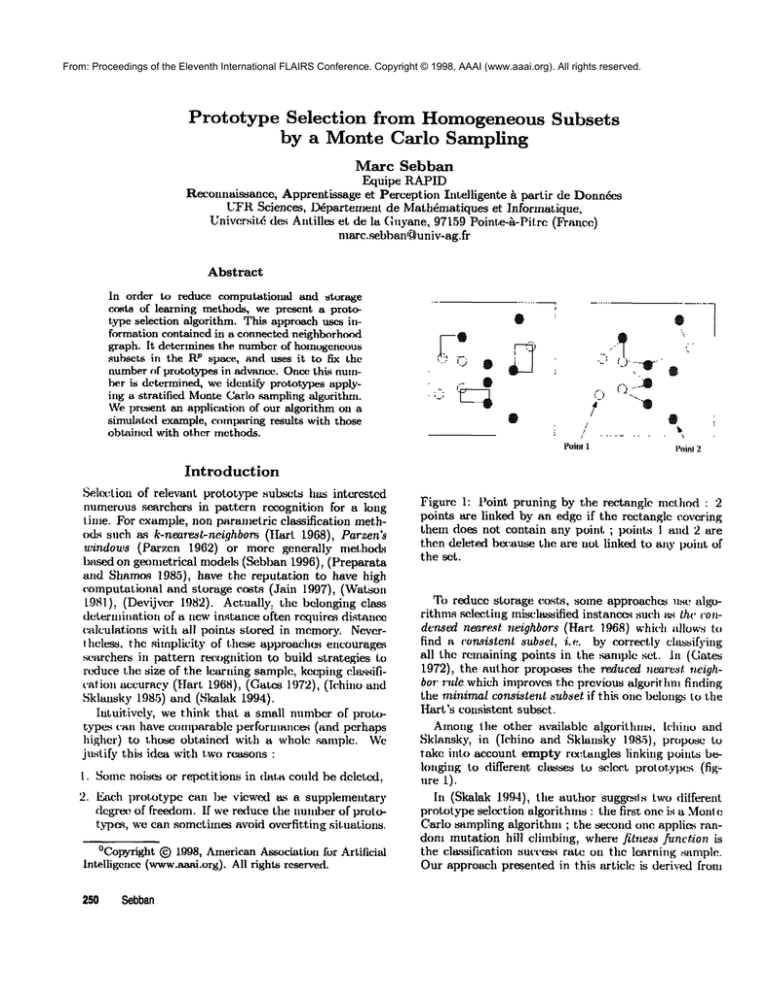

Figure 1: Point pruning by the rectangle method: two points are linked by an edge if the rectangle covering them does not contain any other point; points 1 and 2 are then deleted because they are not linked to any point of the set.

To reduce storage costs, some approaches use algorithms selecting misclassified instances, such as the condensed nearest neighbors (Hart 1968), which allows one to find a consistent subset, i.e. a subset that correctly classifies all the remaining points in the sample set. In (Gates 1972), the author proposes the reduced nearest neighbor rule, which improves the previous algorithm by finding the minimal consistent subset if it belongs to Hart's consistent subset.

Among the other available algorithms, Ichino and Sklansky, in (Ichino and Sklansky 1985), propose to take into account empty rectangles linking points belonging to different classes to select prototypes (figure 1).

In (Skalak 1994), the author suggests two different prototype selection algorithms: the first one is a Monte Carlo sampling algorithm; the second one applies random mutation hill climbing, where the fitness function is the classification success rate on the learning sample. The approach presented in this article is derived from the first one. This is the reason why we briefly review in the next section the useful aspects of Skalak's selection algorithm. Since this method is limited to simple problems where classes of patterns are easily separable, we propose in section 3 an extension of the principle which determines in advance the number of prototypes from a connected neighborhood graph. In order to show the interest of our approach, we apply the algorithm to an example in section 4.

The Monte Carlo Sampling Algorithm

The principle of the Monte Carlo method is based on repeated stochastic trials (n). This way of proceeding allows the solution of a given problem to be approximated. In (Skalak 1994), the author suggests the following algorithm to determine prototypes. The number of prototypes (m) is fixed in advance as being the number of classes to learn.

1. Select n random samples, each sample with replacement, of m instances from the training set.

2. For each sample, compute its classification accuracy on the training set, using a 1-nearest neighbor algorithm (Cover and Hart 1967).

3. Select the sample with the highest classification accuracy on the training set.
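The sampling steps listed above can be sketched in a few lines of Python. This is only an illustrative sketch, not Skalak's original code: the function names `sq_dist`, `one_nn_accuracy` and `monte_carlo_prototypes`, the squared Euclidean distance, and the `seed` parameter are assumptions introduced here.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def one_nn_accuracy(prototypes, points, labels):
    """Fraction of points whose label matches that of their nearest prototype.

    prototypes is a list of (point, label) pairs.
    """
    correct = 0
    for p, y in zip(points, labels):
        _, nearest_label = min(prototypes, key=lambda pl: sq_dist(pl[0], p))
        if nearest_label == y:
            correct += 1
    return correct / len(points)


def monte_carlo_prototypes(points, labels, m, n_trials, seed=0):
    """Draw n_trials samples of m instances and keep the most accurate one."""
    rng = random.Random(seed)  # hypothetical seed, only for reproducibility
    best, best_acc = None, -1.0
    for _ in range(n_trials):
        # Step 1: sample m instances with replacement from the training set.
        idx = [rng.randrange(len(points)) for _ in range(m)]
        sample = [(points[i], labels[i]) for i in idx]
        # Step 2: 1-nearest-neighbor accuracy of this sample on the training set.
        acc = one_nn_accuracy(sample, points, labels)
        # Step 3: remember the sample with the highest accuracy so far.
        if acc > best_acc:
            best, best_acc = sample, acc
    return best, best_acc
```

The returned sample would then serve as the prototype set when classifying unseen instances.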

Homogeneous Subsets Extraction and Prototypes Selection

We think that the performance of a learning algorithm, whatever the algorithm may be, necessarily depends on the geometrical structures of the classes to learn (figure 2). Thus, we propose to characterize these structures in $\mathbb{R}^p$, called homogeneous subsets, from the construction of a connected neighborhood graph.

Definition 1: A graph G is composed of a set of vertices, noted $\Sigma$, linked by a set of edges, noted $A$; it is thus the couple $(\Sigma, A)$.

Definition 2: A graph is considered connected if, for any couple of points $\{a, b\} \in \Sigma^2$, there exists a series of edges joining a to b.

We automatically determine the number of prototypes by applying the following algorithm:

1. Construction of the minimum spanning tree. This neighborhood graph is connected and contains the nearest neighbor of each point (figure 3).

4. Classify the test set using as prototypes the sample with the highest classification accuracy on the training set.

This approach is simple to apply, but fixing m as the number of classes is restrictive. Indeed, it is limited to simple problems where classes of patterns are easy to separate. We can imagine some problems where the m classes are mixed, and where m prototypes are not sufficient. In the next section, we propose an improvement of this algorithm, searching for the number of prototypes in advance, and applying afterwards a stratified Monte Carlo sampling.

Figure 3: Minimum Spanning Tree (MST): in this graph the sum of the edge lengths is minimum. From n points, the MST always has n-1 edges.

2. Construction of homogeneous subsets, deleting edges connecting points which belong to different classes (white and black). Thus, a homogeneous subset is a connected sub-graph of the Minimum Spanning Tree (figure 4).
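The extraction of homogeneous subsets (build the minimum spanning tree, then cut the edges joining different classes) can be sketched as follows. The choice of Prim's algorithm on the complete Euclidean graph, the union-find bookkeeping, and the names `minimum_spanning_tree` and `homogeneous_subsets` are assumptions made for this illustration; the paper does not prescribe a particular construction.

```python
import math


def minimum_spanning_tree(points):
    """Prim's algorithm on the complete Euclidean graph.

    Returns the n-1 tree edges as (i, j) index pairs.
    """
    n = len(points)
    if n == 0:
        return []
    in_tree = [False] * n
    best_dist = [math.inf] * n  # cheapest known link from each point to the tree
    parent = [-1] * n
    best_dist[0] = 0.0
    edges = []
    for _ in range(n):
        # Add the point outside the tree that is closest to it.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best_dist[i])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((parent[u], u))
        # Update the cheapest links of the remaining points.
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best_dist[v]:
                    best_dist[v] = d
                    parent[v] = u
    return edges


def homogeneous_subsets(points, labels):
    """Cut MST edges joining different classes; return the connected components.

    Each component is a list of point indices sharing the same label.
    """
    n = len(points)
    root = list(range(n))

    def find(i):
        while root[i] != i:
            root[i] = root[root[i]]  # path halving
            i = root[i]
        return i

    for i, j in minimum_spanning_tree(points):
        if labels[i] == labels[j]:  # keep only edges inside a single class
            root[find(i)] = find(j)
    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())
```

The number of prototypes m is then simply `len(homogeneous_subsets(points, labels))`.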

Figure 2: Examples of geometrical structures: on the one hand, the first example (a) shows a simple problem dealing with 2 classes (black and white). These classes are represented by two main structures of points belonging to the same class; on the other hand, the second example (b) shows mixed classes, with numerous geometrical structures.

Figure 4: Homogeneous subsets

The number of homogeneous subsets seems to characterize the complexity of learning classes of patterns. Thus, the higher this number, the higher the number of prototypes must be. Indeed, when the problem is simple to learn (figure 2.a), a small number of prototypes is sufficient to characterize the whole learning set. On the contrary, when the problem is complex (figure 2.b), the number of prototypes converges on the number of learning points. Thus, we decide to fix the number of prototypes in advance as being the number of homogeneous subsets. Afterwards, we search for the best instance of each homogeneous subset, to identify its prototype. To do that, we apply a stratified Monte Carlo sampling.
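One possible reading of this stratified sampling is sketched below, with each homogeneous subset treated as a stratum. The paper does not spell out the procedure, so the details are assumptions: each trial draws one instance uniformly from every subset, the m drawn instances are scored jointly by 1-nearest-neighbor accuracy on the training set, and the best trial is kept. The names `one_nn_accuracy` and `stratified_monte_carlo` and the `seed` parameter are hypothetical.

```python
import random


def one_nn_accuracy(proto_idx, points, labels):
    """1-NN accuracy on the training set, using the points at proto_idx as prototypes."""
    correct = 0
    for p, y in zip(points, labels):
        nearest = min(
            proto_idx,
            key=lambda i: sum((a - b) ** 2 for a, b in zip(points[i], p)),
        )
        if labels[nearest] == y:
            correct += 1
    return correct / len(points)


def stratified_monte_carlo(points, labels, subsets, n_trials, seed=0):
    """Pick one prototype per homogeneous subset by repeated random trials.

    subsets is a list of index lists, one per homogeneous subset (stratum).
    Returns the best list of prototype indices and its training accuracy.
    """
    rng = random.Random(seed)  # hypothetical seed, only for reproducibility
    best_idx, best_acc = None, -1.0
    for _ in range(n_trials):
        # Stratified draw: exactly one instance from each subset.
        idx = [rng.choice(subset) for subset in subsets]
        acc = one_nn_accuracy(idx, points, labels)
        if acc > best_acc:
            best_idx, best_acc = idx, acc
    return best_idx, best_acc
```

Because every trial contains one instance per subset, the number of prototypes always equals the number of homogeneous subsets, whatever the outcome of the random draws.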


| | m = 32 | m = 2 | whole set |
|---|---|---|---|
| Success rate | 71% | 55% | 73% |

Table 1: Results obtained on a validation set: the first column corresponds to the success rate obtained with our procedure; the second corresponds to Skalak's method, and the third one to the success rate obtained without reduction of the learning set.

Results

In order to show the interest of our approach, we want to verify that the reduction of the learning set does not significantly reduce the recognition performance of the model built on this new learning sample. We must verify that the success rate obtained with the subset is not significantly weaker than the one obtained with the whole sample.

Applying our algorithm of homogeneous subset extraction, we obtain m = 32 structures (figure 6). Results are presented in table 1, with n = 100 trials.

This experiment shows that our algorithm allows a large reduction in storage, and that the results are close to those obtained with the whole learning set. The application of a frequency comparison test does not show a significant difference between the two rates (71% vs 73%). On the contrary, this example brings to the fore the limits of Skalak's algorithm, where the number of prototypes is fixed in advance as the number of classes.
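The paper does not say which frequency comparison test was used. As an illustration only, the sketch below assumes a pooled two-proportion z-test applied to the 100 validation cases; this is an assumption, and since both rates are measured on the same validation set a paired test could be more appropriate. The function name `two_proportion_z_test` is hypothetical.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled success rate under the null hypothesis of equal proportions.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# 71 and 73 correct classifications out of 100 validation cases (table 1).
z, p_value = two_proportion_z_test(71, 100, 73, 100)
```

Under these assumptions |z| is roughly 0.3, well below the usual 1.96 threshold for a 5% significance level, which is consistent with the absence of a significant difference reported above.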

Figure 5: Example with two classes and 100 points

Example

Presentation

We apply in this section our prototype selection algorithm. Our problem is a simulation, with two classes $C_1$ and $C_2$ (figure 5), where:

1. $C_1$ contains 50 black points: $\forall X_i \in C_1,\ X_i \sim N(\mu, \sigma_1)$.

2. $C_2$ contains 50 white points: $\forall X_i \in C_2,\ X_i \sim N(\mu, \sigma_2)$, where $\sigma_1 > \sigma_2$.

With these parameters we wanted to avoid two extreme situations:

1. The 2 classes are linearly separable. In this case (too simple) 2 prototypes are sufficient.

2. The 2 classes are totally mixed. In this case (too complex) the number of prototypes (m) converges on the number of points (n).

The validation set is composed of 100 new cases, equally chosen among the two classes $C_1$ and $C_2$.
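A data set of this kind could be simulated as sketched below. The paper gives neither the values of the mean and standard deviations nor the dimension, so everything numeric here is an assumption: points are taken in the plane, each coordinate is drawn independently, and `mu`, `sigma1`, `sigma2` and the seeds are hypothetical values chosen only so that `sigma1 > sigma2`.

```python
import random


def simulate_two_classes(n_per_class=50, mu=(0.0, 0.0), sigma1=2.0, sigma2=1.0, seed=0):
    """Draw two Gaussian classes sharing the same mean but with different spreads.

    Returns (points, labels), where label 1 stands for C1 (black points)
    and label 2 for C2 (white points).
    """
    rng = random.Random(seed)
    points, labels = [], []
    for label, sigma in ((1, sigma1), (2, sigma2)):
        for _ in range(n_per_class):
            # Each coordinate is drawn independently from N(mu_k, sigma).
            points.append(tuple(rng.gauss(m, sigma) for m in mu))
            labels.append(label)
    return points, labels


# Training sample and a separate validation set of 100 new cases,
# 50 from each class; the seeds are arbitrary.
train_points, train_labels = simulate_two_classes(seed=0)
valid_points, valid_labels = simulate_two_classes(seed=1)
```

Using a different seed for the validation set keeps it independent of the training sample while preserving the equal split between the two classes.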


Figure 6: Minimum Spanning Tree built on the training sample; edges linking points which belong to different classes are deleted; we then obtain 32 homogeneous subsets.

Conclusion

We think that the reduction of storage costs of a learning set is closely related to the mixing of classes. In this article we have proposed to characterize this mixing by searching for geometrical structures (called homogeneous subsets) that link points of the same class. The smaller the number of structures, the greater the possible reduction of storage costs. The main interest of our approach is to establish a priori the reduction rate of the learning set. Once the number m of prototypes is fixed a priori, numerous methods to select them are then available: stochastic approaches, genetic algorithms, etc. We have used a Monte Carlo sampling algorithm to compare our approach with other works. Nevertheless, we think that the 1-nearest neighbor rule is not always adapted to classes with different standard deviations. We are therefore working on new labeling rules which take into account other neighborhood structures: Gabriel structure, Delaunay polyhedrons, Lunes, etc. (Preparata and Shamos 1985). These decision rules may allow better prototypes to be extracted from homogeneous subsets, using not only the 1-nearest neighbor to label a new unknown point but also the neighbors of neighbors.

References

COVER, T.M. AND HART, P.E. 1967. Nearest neighbor pattern classification. IEEE Trans. Inform. Theory 21-27.

DEVIJVER, P. 1982. Selection of prototypes for nearest neighbor classification. In Proceedings of International Conference on Advances in Information Sciences and Technology.

GATES, G.W. 1972. The reduced nearest neighbor rule. IEEE Trans. Inform. Theory 431-433.

HART, P.E. 1968. The condensed nearest neighbor rule. IEEE Trans. Inform. Theory 515-516.

ICHINO, M. AND SKLANSKY, J. 1985. The relative neighborhood graph for mixed feature variables. Pattern Recognition, ISSN 0031-3203, USA, DA (18):161-167.

JAIN, A. 1987. Advances in statistical pattern recognition. Springer-Verlag.

PARZEN, E. 1962. On estimation of a probability density function and mode. Ann. Math. Stat. 33:1065-1076.

PREPARATA, F.P. AND SHAMOS, M.I. 1985. Pattern recognition and scene analysis. Springer-Verlag.

SEBBAN, M. 1996. Modèles théoriques en Reconnaissance de Formes et Architecture hybride pour Machine Perceptive. Ph.D. Thesis, Lyon 1 University.

SKALAK, D.B. 1994. Prototype and feature selection by sampling and random mutation hill climbing algorithms. In Proceedings of 11th International Conference on Machine Learning, Morgan Kaufmann, 293-301.

WATSON, D. 1981. Computing the n-dimensional Delaunay tessellation with application to Voronoi polytopes. The Computer Journal (24):167-172.