From: AAAI Technical Report WS-94-02. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Designing a Family of Coordination Algorithms*

Keith S. Decker and Victor R. Lesser

Department of Computer Science

University of Massachusetts, Amherst, MA 01003

Email: DECKER@CS.UMASS.EDU

May 27, 1994

Abstract

Many researchers have shown that there is no single best organization or coordination mechanism for all environments. This paper discusses the design and implementation of an extendable family of coordination mechanisms, called Generalized Partial Global Planning (GPGP), that form a basic set of coordination mechanisms for teams of cooperative computational agents. The important features of this approach include a set of modular coordination mechanisms (any subset or all of which can be used in response to a particular task environment); a general specification of these mechanisms involving the detection and response to certain abstract coordination relationships in the incoming task structure that are not tied to a particular domain; and a separation of the coordination mechanisms from an agent's local scheduler that allows each to better do the job for which it was designed. We will also discuss the interactions between these mechanisms and how to decide when each mechanism should be used, drawing data from simulation experiments of multiple agent teams working in abstract task environments.

1 Introduction

This paper presents a formal description of the Generalized Partial Global Planning (GPGP) coordination approach [6] and extends it to create a family of coordination algorithms that can be adapted to different environments. The GPGP family of algorithms is based on recognizing and reacting to the characteristics of certain coordination relationships, an approach shared with von Martial's work on the favor relationship [23]. The approach described here is based on modular components (called 'mechanisms') that work in conjunction with, but do not replace, a fully functional agent with a local scheduler. Each component or mechanism can be added as required in reaction to the environment in which the agents find themselves.

*This work was supported by DARPA contract N00014-92-J-1698, Office of Naval Research contract N00014-92-J-1450, and NSF contract CDA 8922572. The content of the information does not necessarily reflect the position or the policy of the Government and no official endorsement should be inferred.


An individual algorithm in the family is defined by a particular set of active mechanisms and their associated parameters.

This paper will also summarize experimental results that involve a complete implementation of GPGP and a separately developed real-time local scheduler [16, 15]. We will show how to decide when a particular mechanism is useful, how some family members perform relative to a centralized algorithm, and what the space of possible coordination algorithms looks like for the five mechanisms currently defined. We analyze the performance of this family of algorithms through simulation in conjunction with the heuristic real-time local scheduler and randomly generated abstract task environments. In these environments, the agents attempt to maximize the system-wide total utility (a quantity called 'quality', described later) by executing sequences of interrelated 'methods'. The agents do not initially have a complete view of the problem solving situation, and the execution of a method at one agent can either positively or negatively affect the execution of other methods at other agents.

The GPGP approach specifies three basic areas of the agent's coordination behavior: how and when to communicate and construct non-local views of the current problem solving situation; how and when to exchange the partial results of problem solving; how and when to make and break commitments to other agents about what results will be available and when. The use of commitments in the GPGP family of algorithms is based on the ideas of many other researchers [2, 21, 1, 18]. Each agent also has a heuristic local scheduler that decides what actions the agent should take and when, based on its current view of the problem solving situation (including the commitments it has made), and a utility function. The coordination mechanisms supply non-local views of problem solving to the local scheduler, including what non-local results will be available locally, and when they will be available. The local scheduler creates (and monitors the execution of) schedules that attempt to maximize group quality through both local action and the use of non-local actions (committed to by other agents).

One way to think about this work is that the GPGP approach views coordination as modulating local control, not supplanting it--a two-level process that makes a clear distinction between coordination behavior and local scheduling. This process occurs via a set of coordination mechanisms that post constraints to the local scheduler about the importance of certain tasks and appropriate times for their initiation and completion. By concentrating on the creation of local scheduling constraints, we avoid the sequentiality of scheduling in the original PGP algorithm [12] that occurs when there are multiple plans. By having separate modules for coordination and local scheduling, we can also take advantage of advances in real-time scheduling algorithms to produce cooperative distributed problem solving systems that respond to real-time deadlines. We can also take advantage of local schedulers that have a great deal of domain scheduling knowledge already encoded within them. Finally, our approach allows consideration of termination issues that were glossed over in the PGP work (where termination was handled by an external oracle). This paper will also discuss how to decide when each mechanism should be used and how the mechanisms interact, drawing on simple models of generic task structures and data from simulation experiments where multiple agent teams work in abstract task environments.

The way that we specify the family of algorithms in a general, domain-independent way (as responses to certain environmental situations and interactions with a local scheduler) is very important. It leads to the eventual construction of libraries of reusable coordination components that can be chosen (customized) with respect to certain attributes of the target application.

1.1 The Task Environment

The observation that no single organization or coordination algorithm is 'the best' across environments, problem-solving instances, or even particular situations is a common one in the study of both human organizational theory (especially contingency theory) [19, 14, 22] and cooperative distributed problem solving [13, 11, 10, 8]. Key features of task environments demonstrated in both these threads of work that lead to different coordination mechanisms include those related to the structure of the environment (the particular kinds and patterns of interrelationships or dependencies that occur between tasks) and environmental uncertainty (both in the a priori structure of any problem-solving episode and in the outcomes of an agent's actions; for example, the presence of both uncertainty and concomitant high variance in a task structure). This makes it important for a general approach to coordination (i.e., one that will be used in many domains) to be appropriately parameterized so that the overhead activities associated with the algorithm, in terms of both communication and computation, can be varied depending upon the characteristics of the application environment.

So far we have made two important statements: that there is ample evidence that no single coordination algorithm is 'the best' across different environments, and that we are going to describe a family of coordination algorithms. That we need a family of algorithms follows from the first statement; we will now briefly discuss how that family is organized. At the most abstract level, each of the five mechanisms we are about to describe is parameterized independently (the first two have three possible settings and the last three can be in or out) for a total of 72 combinations. Many of these combinations do not show significantly different performance in randomly generated episodes, as will be discussed in the experiments (Section 3), although they may allow for fine-tuning in specific applications. More mechanisms can be (and have been) added to expand the family, but the family can also be enlarged by making each mechanism more situation-specific. For example, mechanisms can have their parameters set by a mapping from dynamic meta-level measurements such as an agent's load or the amount of real-time pressure. Mechanisms can be 'in' or 'out' for individual classes of task groups, or tasks, or even specific coordination relationships, that re-occur in particular environments. The cross product of these dynamic environmental cues provides a large but easily enumerated space of potential coordination responses that are amenable to the adaptation of the coordination mechanisms over time by standard machine learning techniques. In the experimental section of this paper we will only consider the coarsest parameterization of the mechanisms.

The reader should keep in mind when reading about the various coordination techniques that we have explicitly stated that they will not be useful in every situation. Instead, our purpose is to carefully describe the mechanisms and their interactions so that a decision about their inclusion in an application can be made analytically or experimentally (we will present an example in Section 3).
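The coarsest parameterization described above can be enumerated directly. The following is a minimal sketch, not part of the GPGP implementation: the dictionary keys are illustrative labels chosen here, while the policy names ('none'/'some'/'all', minimal/'TG'/'all', in/out) follow the descriptions of the individual mechanisms in Section 2.

```python
from itertools import product

# Keys are hypothetical labels for the five mechanisms; values are the
# coarse settings named in the paper (three policies each for the first
# two mechanisms, in/out for the remaining three).
MECHANISM_SETTINGS = {
    "non_local_views": ("none", "some", "all"),     # Mechanism 1
    "result_communication": ("min", "TG", "all"),   # Mechanism 2
    "simple_redundancy": (False, True),             # Mechanism 3 (out / in)
    "hard_relationships": (False, True),            # Mechanism 4 (out / in)
    "soft_relationships": (False, True),            # Mechanism 5 (out / in)
}


def enumerate_family(settings=MECHANISM_SETTINGS):
    """Return one dict per family member (one setting per mechanism)."""
    names = list(settings)
    return [dict(zip(names, combo)) for combo in product(*settings.values())]


family = enumerate_family()
print(len(family))  # 3 * 3 * 2 * 2 * 2 = 72
```

Making a mechanism more situation-specific, as discussed above, would correspond to adding further keys or settings to this table, which multiplies the size of the space.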

1.1.1 Uncertainty

Less uncertainty in the environment means less uncertainty in the existence and extent of the task interdependencies, and less uncertainty in local scheduling--therefore the agents need less complex coordination behaviors (communication, negotiation, partial plans, etc.) [20]. For example, one can have cooperation without communication [17] if certain facts are known about the task structure by all agents. In this paper agents will not have a priori information about the structure of an episode, but they will be able to get information about a subset of the structure after the start of problem solving--no single agent working alone will be able to construct a view of the entire problem (task structure) facing the group. This lack of information (another form of environmental uncertainty) can cause the local scheduler to make sub-optimal decisions. Some of this uncertainty will arise from disparities between the objective (real) task structure and the subjective (agent-believed) task structure. For example, an agent may not know the true duration of a method and if the execution variance is high may not even have a good estimate. In this paper we will be looking at environments that do not have this characteristic (of large variance between objective characteristics and subjective estimates of those characteristics). Instead we will focus on a second source of structural uncertainty--each agent has only a partial subjective view of the current episode.

1.1.2 Task interrelationships

Task interrelationships include the relationships of tasks to the performance criteria by which we will evaluate a system, to the control decision structures of the agents which make up a system, and to the performance of other tasks. We will represent, analyze, and simulate the effects of task interrelationships using the TÆMS (Task Analysis, Environment Modeling, and Simulation) framework [9]. The important features of TÆMS include its layered description of environments (objective reality, subjective mapping to agent beliefs, generative description of the other levels across single problem-solving instances); its acceptance of any performance criteria (based on temporal location and quality of task executions); and its non-agent-centered point of view that can be used by researchers working in either formal systems of mental-state-induced behavior or experimental methodologies. The TÆMS objective subtask relationship indicates the relationship of tasks to system performance criteria; the subjective mapping indicates the initial beliefs available to an agent's control decision structures; various non-local effects such as enables and facilitates indicate how the execution of one task affects the duration or quality of another task. When a relationship extends between parts of a task structure that are subjectively believed by different agents, we call it a coordination relationship. The basic idea behind Generalized Partial Global Planning (GPGP) is to detect and respond appropriately to these coordination relationships.

1.1.3

Representing task environments

A problem instance (called an episode $E$) is defined as a set of task groups, each with a deadline $D(\mathcal{T})$, such as $E = \langle \mathcal{T}_1, \mathcal{T}_2, \ldots, \mathcal{T}_n \rangle$. The task groups (or subtasks within them) may arrive at different times ($\mathrm{Ar}(x)$ is the arrival time of task $x$), but in this paper they will all arrive at the start of problem solving (like the classic DVMT). While task groups are computationally independent of one another,¹ the tasks within a single task group are in general not independent.

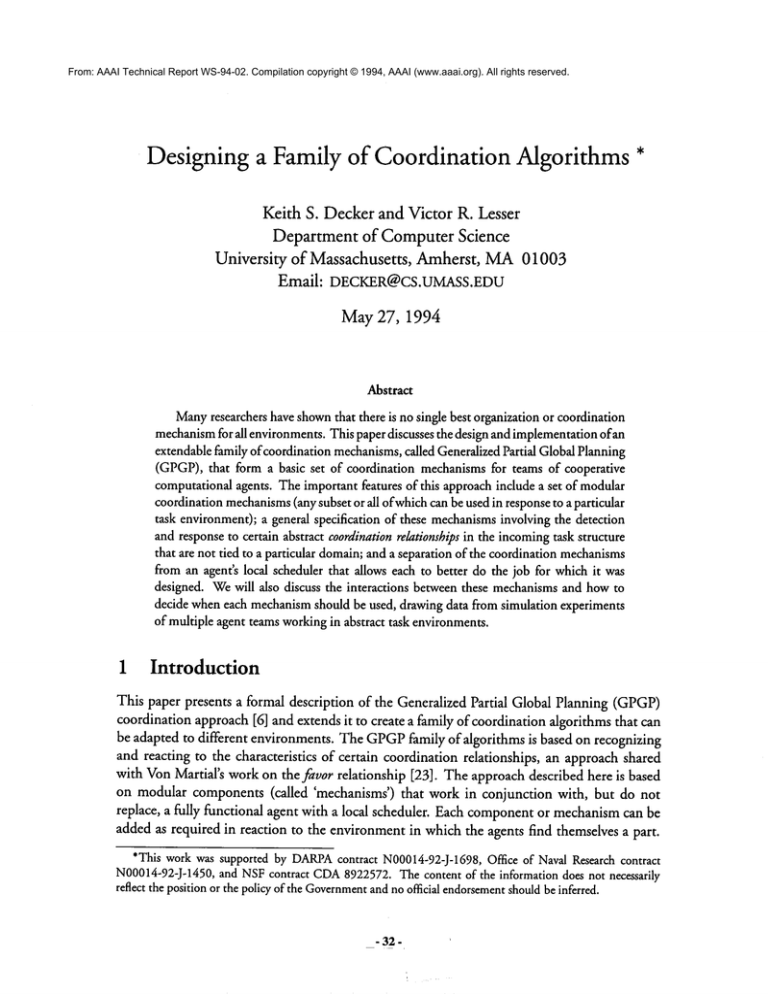

Figure 1 shows an objective task group and agent A's subjective view of that task group. A task group consists of a set of tasks related to one another by a subtask relationship that forms an acyclic graph (here, a tree). The circles higher up in the tree represent various subtasks involved

¹ Except for the use of the processor(s) or other physical resources.


in the task group, and indicate precisely how quality will accrue depending on what leaf tasks are executed and when. Tasks at the leaves of the tree (without subtasks) represent methods, which are the actual computations or actions the agent will execute (in the figure, these are shown as boxes). The arrows between tasks and/or methods indicate other task interrelationships where the execution of some method will have a positive or negative effect on the quality or duration of another method. The presence of these interrelationships makes this an NP-hard scheduling problem; further complicating factors for the local scheduler include the fact that multiple agents are executing related methods, that some methods are redundant (executable at more than one agent), and that the subjective task structure may differ from the real objective structure. This notation and associated semantics are formally defined in [9].
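To make the tree structure concrete, here is a minimal sketch of a task group in which quality accrues upward through `min` or `max` accrual functions. It is a simplification under stated assumptions: the class and function names are hypothetical, a method is assumed to deliver its full quality once it has finished, and the non-local effects (enables, facilitates) defined formally in [9] are omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Union


@dataclass
class Method:
    """Leaf of the task tree: an executable action (hypothetical model)."""
    name: str
    quality: float    # quality obtained once the method has finished
    duration: float


@dataclass
class Task:
    """Interior node whose quality is accrued from its subtasks."""
    name: str
    accrual: Callable[[List[float]], float]   # e.g. the builtins min or max
    subtasks: List[Union["Task", Method]] = field(default_factory=list)


def quality(node, finish_times: Dict[str, float], t: float) -> float:
    """Quality of `node` at time t, given when each executed method finished."""
    if isinstance(node, Method):
        done = node.name in finish_times and finish_times[node.name] <= t
        return node.quality if done else 0.0
    return node.accrual([quality(child, finish_times, t) for child in node.subtasks])


# A small task group: T1 = min(T2, M3), with T2 = max(M1, M2).
m1, m2, m3 = Method("M1", 4.0, 3.0), Method("M2", 7.0, 6.0), Method("M3", 6.0, 2.0)
root = Task("T1", min, [Task("T2", max, [m1, m2]), m3])
finish_times = {"M1": 3.0, "M3": 5.0}   # M2 is never executed

print(quality(root, finish_times, 4.0))  # M3 not finished yet, so min(...) is 0.0
print(quality(root, finish_times, 5.0))  # min(max(4.0, 0.0), 6.0) = 4.0
```

The `max` node illustrates why redundant alternatives need not all be executed, while the `min` node shows how a single missing subtask keeps the whole group at zero quality.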

Figure 1: Agent A and B's subjective views (bottom) of a typical objective task group (top). Panels: Agent A initial subjective view; Agent B initial subjective view. Legend: method (executable task); task with quality accrual function min; subtask relationship; enables relationship; facilitates relationship.

1.2 The Agent Architecture

The TÆMS framework makes very few assumptions about the architecture of agents--agents are loci of belief and action. Agents have some control mechanism that decides on actions given the agent's current beliefs. There are three classes of actions: method execution, communication, and information gathering. Method execution actions cause quality to accrue in a task group (as indicated by the task structure). Communication actions are used to send the results of method executions (which in turn may trigger the effects of various task interrelationships) or meta-level information. Information gathering actions add newly arriving task structures, or new communications, to an agent's set of beliefs.

Formally, we write $B_A^t(x)$ to mean agent $A$ subjectively believes $x$ at time $t$ (from Shoham [21]). We will shorten this to $B(x)$ when we don't have a particular agent or time in mind. An agent's subjective beliefs about the current episode $B(E)$ include the agent's beliefs about various task groups (e.g., $B(\mathcal{T}_1 \in E)$), and an agent's beliefs about each task group include beliefs about the tasks in that task group (e.g., $B(T_a, M_b \in \mathcal{T}_1)$) and the relationships between these tasks (e.g., $B(\mathrm{enables}(T_a, M_b))$).


The GPGP family of coordination algorithms makes stronger assumptions about the agent architecture. Most importantly, it assumes the presence of a local scheduling mechanism (to be described in the next section) that can decide what method execution actions should take place and when. It assumes that agents do not intentionally lie and that they believe what they are told (i.e., if agent $A_1$ tells agent $A_2$ at time $t_1$ with communication delay $\delta$ that $B_{A_1}(\mathrm{enables}(T_a, M_b))$, then $B_{A_2}^{t_2}(\mathrm{enables}(T_a, M_b))$, where $t_2 > t_1 + \delta$ is the earliest time after the communication arrives that agent $A_2$ performs a new communication information gathering action to read the message from $A_1$). However, because agents can believe and communicate only subjective information, they may unwittingly transmit information that is inconsistent with an objective view (this can cause, among other things, the phenomenon of distraction). Finally, the GPGP family approach requires domain-dependent code to detect or predict the presence of coordination relationships in the local task structure [5]. In this paper we will refer to that domain-dependent code as the information gathering action detect-coordination-relationships; we will describe this action more in Section 2.2.

1.3 The Local Scheduler

Each GPGP agent contains a local scheduler that takes as input the current, subjectively believed task structure and produces a schedule of what methods to execute and when. Using the information in the subjective structure about the potential duration, potential quality, and relationships of the methods, it chooses and orders executable methods in an attempt to maximize a pre-defined utility measure for each task group $\mathcal{T}$. In this paper the utility function is the sum of the task group qualities. The local scheduler attempts to maximize this utility function $U(E) = \sum_{\mathcal{T} \in E} Q(\mathcal{T}, D(\mathcal{T}))$, where $Q(T, t)$ denotes the quality of $T$ at time $t$ as defined in [9].²

Besides the subjective task structure, the local scheduler should accept a set of commitments $\mathbf{C}$ from the coordination component. These commitments are extra constraints on the schedules that are produced by the local scheduler. For example, if method 1 is executable by agent A and method 2 is executable by agent B, and the methods are redundant, then agent A's coordination mechanism may commit agent A to do method 1 both locally and socially (commitments are directed to particular agents in the sense of the work of Shoham and Castelfranchi [1, 21]) by communicating this commitment to B (so that agent B's coordination mechanism records agent A's commitment; see the description of non-local commitments NLC below). This paper will use two types of commitments: $C(\mathrm{Do}(T, q))$ is a commitment to 'do' (achieve quality for) $T$ and is satisfied at the time $t$ when $Q(T, t) \ge q$; the second type $C(\mathrm{DL}(T, q, t_{dl}))$ is a 'deadline' commitment to do $T$ by time $t_{dl}$ and is satisfied at the time $t$ when $[Q(T, t) \ge q] \wedge [t \le t_{dl}]$.³
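The two satisfaction conditions can be written down almost verbatim. The sketch below is illustrative only: the `Commitment` class, the `satisfied_at` function, and the toy quality function `step_quality` are hypothetical names, and the quality function $Q(T, t)$ is simply passed in as a callable rather than computed from a task structure.

```python
from dataclasses import dataclass
from typing import Callable, Optional

QualityFn = Callable[[str, float], float]   # stands in for Q(T, t)


@dataclass(frozen=True)
class Commitment:
    """A 'Do' commitment when deadline is None, otherwise a 'DL' commitment."""
    task: str
    min_quality: float
    deadline: Optional[float] = None


def satisfied_at(c: Commitment, Q: QualityFn, t: float) -> bool:
    """Do: Q(T, t) >= q.  DL: Q(T, t) >= q and t <= t_dl."""
    if Q(c.task, t) < c.min_quality:
        return False
    return c.deadline is None or t <= c.deadline


def step_quality(task: str, t: float) -> float:
    """Toy quality profile: every task reaches quality 5 at time 10."""
    return 5.0 if t >= 10 else 0.0


do_c = Commitment("T", 4.0)
late_dl = Commitment("T", 4.0, deadline=8.0)
ok_dl = Commitment("T", 4.0, deadline=12.0)

print(satisfied_at(do_c, step_quality, 10))     # True: quality threshold reached
print(satisfied_at(late_dl, step_quality, 10))  # False: threshold reached after the deadline
print(satisfied_at(ok_dl, step_quality, 10))    # True: threshold reached before the deadline
```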

A schedule $S$ produced by a local scheduler will consist of a set of methods and start times: $S = \{(M_1, t_1), (M_2, t_2), \ldots, (M_n, t_n)\}$. The schedule may include idle time, and the local scheduler may produce more than one schedule upon each invocation in the situation where not all commitments can be met. The different schedules represent different ways of partially satisfying the set of commitments (see [15] and the next section). The function $\mathrm{Violated}(S)$ returns the set of commitments that are believed to be violated by the schedule. For violated deadline commitments $C(\mathrm{DL}(T, q, t_{dl})) \in \mathrm{Violated}(S)$ the function $\mathrm{Alt}(C, S)$ returns an

² Note that quality does not accrue after a task group's deadline.

³ Other commitments, such as to the earliest start time of a task, may also prove useful.


alternative commitment $C(\mathrm{DL}(T, q, t^*_{dl}))$ where $t^*_{dl} = \min t$ such that $Q(T, t) \ge q$ if such a $t$ exists, or NIL otherwise. For a violated Do commitment an alternative may contain a lower minimum quality, or no alternative may be possible.
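For deadline commitments, the alternative is the earliest time at which the required quality is predicted to be reached. A minimal sketch follows, under two assumptions that are not in the paper: the predicted quality under the chosen schedule is available as a callable (`predicted_quality`), and time is searched over a finite list of candidate points (`candidate_times`). `None` plays the role of NIL.

```python
from typing import Callable, Iterable, Optional, Tuple


def alt_deadline(
    task: str,
    q: float,
    predicted_quality: Callable[[str, float], float],
    candidate_times: Iterable[float],
) -> Optional[Tuple[str, float, float]]:
    """Return (task, q, t*) with t* the earliest candidate time at which the
    predicted quality reaches q, or None (NIL) if no such time exists."""
    for t in sorted(candidate_times):
        if predicted_quality(task, t) >= q:
            return (task, q, t)
    return None


def predicted(task: str, t: float) -> float:
    """Toy prediction: the task reaches quality 5 at time 10 under the schedule."""
    return 5.0 if t >= 10 else 0.0


print(alt_deadline("T", 4.0, predicted, range(0, 21)))  # ('T', 4.0, 10)
print(alt_deadline("T", 6.0, predicted, range(0, 21)))  # None: quality 6 is never reached
```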

The final piece of information that is used by the local scheduler is the set of non-local commitments made by other agents, NLC. This information can be used by the local scheduler to coordinate actions between agents. For example, the local scheduler could have the property that, if method $M_1$ is executable by agent A and is the only method that enables method $M_2$ at agent B (and agent B knows this: $B_B(\mathrm{enables}(M_1, M_2))$), and $B_A(C(\mathrm{DL}(M_1, q, t_1))) \in B_B(NLC)$, then for every schedule $S$ produced by agent B, $(M_2, t) \in S \Rightarrow t \ge t_1$.
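The property in this example is easy to check mechanically for a given schedule. The sketch below uses hypothetical names (`respects_enabling_commitment`, the method labels) and represents a schedule as a list of (method, start time) pairs, as in the definition of $S$.

```python
from typing import Iterable, Tuple

Schedule = Iterable[Tuple[str, float]]   # (method name, start time) pairs


def respects_enabling_commitment(
    schedule: Schedule, enabled_method: str, promised_time: float
) -> bool:
    """True if every occurrence of `enabled_method` starts at or after the
    time by which the enabling result has been promised (t >= t_1)."""
    return all(
        start >= promised_time
        for method, start in schedule
        if method == enabled_method
    )


ok_schedule = [("M3", 0.0), ("M2", 12.0)]
early_schedule = [("M2", 5.0), ("M3", 9.0)]

print(respects_enabling_commitment(ok_schedule, "M2", 10.0))     # True
print(respects_enabling_commitment(early_schedule, "M2", 10.0))  # False
```

A schedule that does not contain the enabled method at all satisfies the property vacuously, which matches the implication as stated.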

The function $U_{est}(E, S, NLC)$ returns the estimated utility at the end of the episode if the agent follows schedule $S$ and all non-local commitments in NLC are kept. Thus we may define the local scheduler as a function $LS(E, \mathbf{C}, NLC)$ returning a set of schedules $\mathbf{S} = \{S_1, S_2, \ldots, S_m\}$. More detailed information about this kind of interface between the local scheduler and the coordination component may be found in [15].

This is an extremely general definition of the local scheduler, and is the minimal one necessary for the GPGP coordination module. Stronger definitions than this will be needed for more predictable performance, as we will discuss later. Ideally, the optimal local scheduler would find both the schedule with maximum utility $S_U$ and the schedule with maximum utility that violates no commitments $S_C$. In practice, however, a heuristic local scheduler will produce a set of schedules where the schedule of highest utility $\hat{S}_U$ is not necessarily optimal: $U(E, \hat{S}_U, NLC) \le U(E, S_U, NLC)$.

2 The Coordination Mechanisms

The role of the coordination mechanisms is to provide constraints to the local scheduler (by modifying portions of the subjective task structure of the episode $E$ or by making commitments in $\mathbf{C}$) that allow the local scheduler to construct objectively better schedules. The modules fulfill this role by (variously) communicating private portions of their task structures, communicating results to fulfill non-local commitments, and making commitments to respond to coordination relationships between portions of the task structure controllable by different agents or within portions controllable by multiple agents.⁴

The five mechanisms we will describe in this paper form a basic set that provides similar functionality to the original partial global planning algorithm as explained in [6]. Mechanism 1 exchanges useful private views of task structures; Mechanism 2 communicates results; Mechanism 3 handles redundant methods; Mechanisms 4 and 5 handle hard and soft coordination relationships. More mechanisms can be added, such as one to update utilities across agents as discussed in the next section, or to balance the load better between agents. The mechanisms are independent in the sense that they can be used in any combination. If inconsistent constraints are introduced, the local scheduler would return at least one violated constraint in all its schedules, which would be dealt with as discussed in the next section. Since the local scheduler is boundedly rational and satisfices instead of optimizing, it may do this even if constraints are not inconsistent (i.e., it does not search exhaustively).

⁴ We say a subtree of a task structure is controllable by an agent if that agent has at least one executable method in that subtree.


2.1 The Substrate Mechanisms

All the specific coordination mechanisms rest on a common substrate that handles information gathering actions (new task group arrivals and receiving communications), invoking the local scheduler and choosing a schedule to execute (including dealing with violated commitments), and deciding when to terminate. Information gathering is done at the start of problem solving, whenever the agent is otherwise idle (but not ready to terminate), and when communications are expected from other agents. Communications are expected in response to certain events (such as after the arrival of a new task group) or as indicated in the set of non-local commitments NLC. This is the minimal general information gathering policy.⁵ Termination occurs for an agent when the agent is idle, has no expected communications, and no outstanding commitments.

Choosing a schedule is more complicated. From the set of schedules $\mathbf{S}$ returned by the local scheduler, two particular schedules are identified: the schedule with the highest utility $S_U$ and the best committed schedule $S_C$. If they are the same, then that schedule is chosen. Otherwise, we examine the sum of the changes in utility for each commitment. Each commitment, when created, is assigned the estimated utility $U_{est}$ for the task group of which it is a part. This utility may be updated over time (when other agents depend on the commitment, for example). We then choose the schedule with the largest positive change in utility. This allows us to abandon commitments if doing so will result in higher overall utility. The coordination substrate does not use the local scheduler's utility estimate $U_{est}$ directly on the entire schedule because it is based only on a local view, and the coordination mechanism may have received non-local information that places a higher utility on a commitment than it has locally.

For example, at time $t$ agent A may make a commitment $C_1$ on task $T \in \mathcal{T}_1 \in E$ that results in a schedule $S_1$. $C_1$ initially acquires the estimated utility of the task group of which it is a part, $U(C_1) \leftarrow U_{est}(\{\mathcal{T}_1\}, S_1, B_A(NLC))$. Let $U(C_1) = 50$. After communicating this commitment to agent B (making it part of $B_B(NLC)$), agent B uses the commitment to improve $U_{est}(\{\mathcal{T}_1\}, S_U, B_B(NLC))$ to 100. A coordination mechanism can detect this discrepancy and communicate the utility increase back to agent A, so that when agent A considers discarding the commitment, the coordination substrate recognizes that the non-local utility of the commitment is greater than the local utility.⁶

If both schedules have the same impact, the one that is more negotiable is chosen. Every commitment has a negotiability index (high, medium, or low) that indicates (heuristically) the difficulty in rescheduling if the commitment is broken. This index is set by the individual coordination mechanisms. For example, hard coordination relationships like enables that cannot be ignored will trigger commitments with low negotiability.

If the schedules are still equivalent, the shorter one is chosen, and if they are the same length, one is chosen at random. After a schedule $S$ is chosen, if $\mathrm{Violated}(S)$ is not empty, then each commitment $C \in \mathrm{Violated}(S)$ is replaced with its alternative: $\mathbf{C} \leftarrow (\mathbf{C} \setminus C) \cup \mathrm{Alt}(C, S)$, where $\mathbf{C}$ is the agent's set of commitments. If the commitment was made to other agents, the other agents are also informed of the change in the

⁵ The minimal policy would examine each element of NLC at the appointed time and if the local schedule had changed so that the reception of the information would no longer have any effect, the associated information gathering action could be skipped.

⁶ While it is clear that without this policy the system of agents will perform non-optimally, it is not clear how often the situation occurs or what the performance hit is. Future work will have to examine the costs and benefits of this policy; for this reason we do not include this mechanism among the five examined in this paper.


commitment. While this could potentially cause cascading changes in the schedules of multiple agents, it generally does not for three reasons: first, as we mentioned in the previous paragraph, less important commitments are broken first; second, the resiliency of the local schedulers to solve problems in multiple ways tends to damp out these fluctuations; and third, agents are time-cognizant resource-bounded reasoners that interleave execution and scheduling (i.e., the agents cannot spend all day arguing over scheduling details and still meet their deadlines). We have observed this useful phenomenon before [7] and plan to analyze it in future work.

2.2 Mechanism 1: Updating Non-Local Viewpoints

Remember that each agent has only a partial, subjective view of the current episode. Agents can, therefore, communicate the private portions of their subjective task structures to develop better, non-local, views of the current episode. They could even communicate all of their private structural information, in an attempt to develop a global subjective view. The GPGP mechanism described here can communicate no private information ('none' policy, no non-local view), or all of it ('all' policy, global view), or take an intermediate approach ('some' policy, partial view): an agent communicates to other agents only the private structures that are related by some coordination relationship to a structure known by the other agents. The process of detecting coordination relationships between private and shared parts of a task structure is in general very domain specific, so in the experiments presented later in the paper we model this process by a new information gathering action, detect-coordination-relationships, that takes some fixed amount of the agent's time. This action is chosen when a new task group arrives (which adds new information to the agent's private task structures).

The set $P$ of privately believed tasks or methods at an agent $A$ (tasks believed at arrival time by $A$ only) is then $P = \{x \mid \mathrm{task}(x) \wedge \forall a \in \mathbf{A} \setminus A,\ \neg B_A(B_a^{\mathrm{Ar}(x)}(x))\}$, where $\mathbf{A}$ is the set of agents and $\mathrm{Ar}(x)$ is the arrival time of $x$. Given this definition, the action detect-coordination-relationships returns the set of private coordination relationships $PCR = \{r \mid T_1 \in P \wedge T_2 \notin P \wedge [r(T_1, T_2) \vee r(T_2, T_1)]\}$ between private and mutually believed tasks. The action does not return what the task $T_2$ is, just that a relationship exists between $T_1$ and some otherwise unknown task $T_2$. For example, in the DVMT, we have used the physical organization of agents to detect that Agent A's task $T_1$ in an overlapping sensor area is in fact related to some unknown task $T_2$ at agent B (i.e., $B_A(B_B(T_2))$) [6, 5]. The non-local view coordination mechanism then communicates these coordination relationships, the private tasks, and their context: if $r(T_1, T_2) \in PCR$ and $T_1 \in P$ then $r$ and $T_1$ will be communicated by agent A to the set of agents $\{a \mid B_A(B_a(T_2))\}$.
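The set definitions above translate directly into set comprehensions. The following is a minimal sketch of the 'some' policy under a simplifying assumption: the agent's beliefs about who knows each task are given as a table `known_by` (task name to set of agent names), which stands in for the domain-specific detect-coordination-relationships action. All names (`Relationship`, `private_tasks`, `messages`, the task labels) are hypothetical.

```python
from typing import Dict, List, NamedTuple, Set, Tuple


class Relationship(NamedTuple):
    kind: str      # e.g. "enables" or "facilitates"
    source: str
    target: str


def private_tasks(me: str, known_by: Dict[str, Set[str]]) -> Set[str]:
    """P: tasks that `me` believes no other agent believes."""
    return {task for task, agents in known_by.items() if agents <= {me}}


def private_coordination_relationships(
    relationships: List[Relationship], private: Set[str]
) -> List[Relationship]:
    """PCR: relationships linking exactly one private task to a non-private one."""
    return [r for r in relationships if (r.source in private) != (r.target in private)]


def messages(
    me: str, known_by: Dict[str, Set[str]], relationships: List[Relationship]
) -> Dict[str, List[Tuple[Relationship, str]]]:
    """For each other agent, the (relationship, private task) pairs to send."""
    private = private_tasks(me, known_by)
    out: Dict[str, List[Tuple[Relationship, str]]] = {}
    for r in private_coordination_relationships(relationships, private):
        mine, other = (r.source, r.target) if r.source in private else (r.target, r.source)
        for agent in sorted(known_by[other] - {me}):
            out.setdefault(agent, []).append((r, mine))
    return out


# Agent B's beliefs: one shared task, two private ones.
known_by = {"T_shared": {"A", "B"}, "T_p1": {"B"}, "T_p2": {"B"}}
relationships = [
    Relationship("facilitates", "T_p2", "T_shared"),  # private -> shared: in PCR
    Relationship("enables", "T_p2", "T_p1"),          # entirely local: not in PCR
]
print(messages("B", known_by, relationships))
```

Under these assumptions agent B sends agent A only the facilitates relationship and the private task it involves; the enables relationship stays local.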

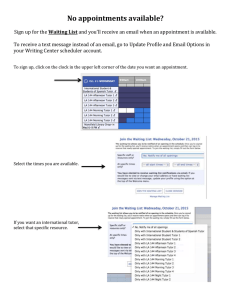

For example, Figure 2 shows the local subjective beliefs of agents A and B after the communication from one another due to this mechanism. The agents' initial local view was shown previously in Figure 1. In this example, Ts and T4 are two elements in Agent B's private set of tasks $P$, facilitates(T4, T~, $\phi_d$, $\phi_q$) $\in PCR$ (the facilitation relates a private task to a mutually believed task), and enables(T4, Ts) is completely local to Agent B (it relates two private tasks). At the start of this section we mentioned that coordination relationships exist between portions of the task structure controllable by different agents (i.e., in $PCR$) and within portions controllable by multiple agents. We'll denote the complete set of coordination relationships as $CR$; this includes all the elements of $PCR$ and all the relationships between non-private tasks. Some relationships are entirely local--between private tasks--and are only of


Figure 2: Agents A and B's local views after receiving non-local viewpoint communications via mechanism 1. Figure 1 shows the agents' initial states. Panels: Agent A's view after communication from B; Agent B's view after communication from A. Legend: method (executable task); task with quality accrual function min; subtask relationship; enables relationship; facilitates relationship.

concern to the local scheduler. The purpose of this coordination mechanism is the exchange of information that expands the set of coordination relationships $CR$. Without this mechanism in place, $CR$ will consist of only non-private relationships, and none that are in $PCR$. Since the primary focus of the coordination mechanisms is the creation of social commitments in response to coordination relationships (elements of $CR$), this mechanism can have significant indirect benefits. In environments where $|PCR|$ tends to be small, very expensive to compute, or not useful for making commitments (see the later sections), this mechanism can be omitted.

2.3 Mechanism 2: Communicating Results

The result communication coordination mechanism has three possible policies: communicate only the results necessary to satisfy commitments to other agents (the 'minimal' policy); communicate this information plus the final results associated with a task group (the 'TG' policy); and communicate all results (the 'all' policy). Extra result communications are broadcast to all agents; the minimal commitment-satisfying communications are sent only to those agents to whom the commitment was made (i.e., communicate the result of T to the set of agents $\{A \in \mathbf{A} \mid B(B_A(C(T)))\}$).

2.4

Mechanism 3: Handling Simple Redundancy

Potential redundancy in the efforts of multiple agents can occur in several places in a task structure. Any task that uses a 'max' quality accumulation function (one possible semantics for an 'OR' node) indicates that, in the absence of other relationships, only one subtask needs to be done. When such subtasks are complex and involve many agents, the coordination of these agents to avoid redundant processing can also be complex; we will not address the general redundancy avoidance problem in this paper (see instead [20]). In the original PGP algorithm and domain (distributed sensor interpretation), the primary form of potential redundancy was simple method redundancy: the same result could be derived from the data from any of a number of sensors. The coordination mechanism described here is meant to address this simpler form of potential redundancy.

The idea behind the simple redundancy coordination mechanism is that when more than one agent wants to execute a redundant method, one agent is randomly chosen to execute it and send the results to the other interested agents. This is a generalization of the 'static' organization algorithm discussed by Decker and Lesser [8]: it does not try to load balance, and uses one communication action (because in the general case the agents do not know beforehand, without communication, that certain methods are redundant⁷). The mechanism considers the set of potential redundancies $RCR = \{r \in CR \mid [r = \mathit{subtask}(T, \mathbf{M}, \min)] \wedge [\forall M \in \mathbf{M}, \mathit{method}(M)]\}$. Then for all methods in the current schedule S at time t, if the method is potentially redundant then commit to it and send the commitment to $\mathit{Others}(\mathbf{M})$ (non-local agents who also have a method in $\mathbf{M}$):

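$$
[\langle M, t_M \rangle \in S] \wedge [\mathit{subtask}(T, \mathbf{M}, \min) \in RCR] \wedge [M \in \mathbf{M}] \Rightarrow [C(\mathit{Do}(M, Q_{est}(M, D(M), S))) \in \mathbf{C}] \wedge [\mathit{Comm}(M, \mathit{Others}(\mathbf{M}), t) \in \ldots]
$$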

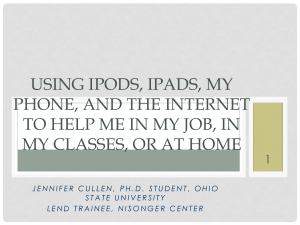

See, for example, the top of Figure 3: both agents commit to Do their methods for T1.

After the commitment is made, the agent must refrain from executing the method in question if possible until any non-local commitments that were made simultaneously can arrive (the communication delay time δ). This mechanism then watches for multiple commitments in the redundant set ($\mathit{subtask}(T, \mathbf{M}, \min) \in RCR$, $M_1 \in \mathbf{M}$, $M_2 \in \mathbf{M}$, $C(\mathit{Do}(M_1, q)) \in \mathbf{C}$, and $B_B(C(\mathit{Do}(M_2, q))) \in \mathbf{NLC}$)⁸ and if they appear, a unique agent is chosen randomly (but identically by all agents) from those with the best commitments to keep its commitment. All the other agents can retract their commitments. For example, the bottom of Figure 3 shows the situation after Agent B has retracted its commitment to Do B1. If all agents follow the same algorithm, and communication channels are assumed to be reliable, then no second message (retraction) actually needs to be sent (because they all choose the same agent to do the redundant method). In the implementation described later, identical random choices are made by giving each method a unique random identifier, and then all agents choose the method with the 'smallest' identifier for execution.

Initially, all Do commitments initiated by the redundant coordination mechanism are marked highly negotiable. When a redundant commitment is discovered, the negotiability of the remaining commitment is lowered to medium to indicate the commitment is somewhat more important.
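The resolution step can be sketched as follows. This is an illustration under assumptions rather than the paper's code: the `DoCommitment` record and `resolve_redundancy` are hypothetical names, every commitment in the list is assumed to be equally good (so the "best commitments" filter is skipped), and the shared random identifier is modeled as an integer that every agent sees identically.

```python
from dataclasses import dataclass


@dataclass
class DoCommitment:
    agent: str
    method: str
    method_id: int               # assumed: unique random identifier of the method
    negotiability: str = "high"  # Do commitments start out highly negotiable
    active: bool = True


def resolve_redundancy(commitments):
    """Keep the commitment with the smallest method identifier; retract the rest.

    Every agent runs this on the same set of commitments, so all agents
    pick the same winner and no retraction message has to be sent.
    """
    if not commitments:
        return None
    if len(commitments) == 1:
        return commitments[0]    # nothing redundant was discovered
    winner = min(commitments, key=lambda c: c.method_id)
    for c in commitments:
        if c is winner:
            c.negotiability = "medium"  # the survivor becomes more important
        else:
            c.active = False            # local retraction
    return winner
```

Because the choice depends only on the identifiers, the result does not depend on the order in which an agent happened to receive the commitments.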

2.5

Mechanism 4: Handling Hard Coordination Relationships

Hard coordination relationships include relationships like enables(M1, M2) that indicate that M1 must be executed before M2 in order to obtain quality for M2. Like redundant methods, hard coordination relationships can be culled from the set CR.

⁷ The detection of redundant methods is domain-dependent, as discussed earlier. Since we are talking here about simple, direct redundancy (i.e., doing the exact same method at more than one agent), this detection is very straightforward.

⁸ Read "M1 and M2 are redundant, and I am doing M1 and I know that B has committed to me to do M2".

[Figure 3 panels, top: Agent A's view after communication from B; Agent B's view after communication from A. Bottom: Agent A's view after receiving B's commitments; Agent B's view after receiving A's commitments.]

Figure 3: A continuation of Figures 1 and 2. At top: agents A and B propose certain commitments to one another via mechanisms 3 and 5. At bottom: after receiving the initial commitments, mechanism 3 removes agent B's redundant commitment.

The hard coordination mechanism further distinguishes the direction of the relationship: the current implementation only creates commitments on the predecessors of the enables relationship. We'll let $HPCR \subset CR$ indicate the set of potential hard predecessor coordination relationships. The hard coordination mechanism then looks for situations where the current schedule S at time t will produce quality for a predecessor in HPCR, and commits to its execution by a certain deadline both locally and socially:

$$
[Q_{est}(T, D(T), S) > 0] \wedge [\mathit{enables}(T, M) \in HPCR] \Rightarrow [C(DL(T, Q_{est}(T, D(T), S), t_{early})) \in \mathbf{C}] \wedge [\mathit{Comm}(C, \mathit{Others}(M), t) \in \ldots]
$$

The next question is, by what time ($t_{early}$ above) do we commit to providing the answer? One solution, usable with any local scheduler that fits our general description in Section 1.3, is to use the min t such that $Q_{est}(T, D(T), S) > 0$. In our implementation, the local scheduler provides a query facility that allows us to propose a commitment to satisfy as 'early' as possible (thus allowing the agent on the other end of the relationship more slack). We take advantage of this ability in the hard coordination mechanism by adding the new commitment $C(DL(T, Q_{est}(T, D(T), S), \text{"early"}))$ to the local commitments $\mathbf{C}$, and invoking the local scheduler $LS(\mathbf{E}, \mathbf{C}, \mathbf{NLC})$ to produce a new set of schedules $\mathbf{S}$. If the preferred, highest utility schedule $S_U \in \mathbf{S}$ has no violations (highly likely since the local scheduler can simply return the same schedule if no better one can be found), we replace the current schedule with it and use the new schedule, with a potentially earlier finish time for T, to provide a value for $t_{early}$. The new completed commitment is entered locally (with low negotiability) and sent to the subset of interested other agents.

If redundant commitments are made to the same task, the earliest commitment made by any agent is kept; ties are broken in favor of the agent committing to the highest quality, and any remaining ties are broken by the same method as before.
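This three-level tie-break amounts to a lexicographic comparison. The sketch below is an assumption-laden illustration: `DeadlineCommitment` and `keep_one` are hypothetical names, "earliest" is read as the earliest committed deadline, and `tie_id` stands in for the shared random identifier used by the redundancy mechanism.

```python
from typing import NamedTuple


class DeadlineCommitment(NamedTuple):
    agent: str
    task: str
    deadline: float  # assumed: the committed completion time (t_early)
    quality: float   # committed quality
    tie_id: int      # assumed: shared random identifier, final tie-breaker


def keep_one(commitments):
    """Return the single commitment that every agent would keep.

    Order of preference: earliest deadline, then highest quality,
    then smallest shared identifier.
    """
    return min(commitments, key=lambda c: (c.deadline, -c.quality, c.tie_id))
```

As with the redundancy mechanism, the choice is a pure function of the commitments themselves, so agents that see the same commitments agree without further messages.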

Currently, the hard coordination mechanism is a proactive mechanism, providing information that might be useful to other agents, while not putting the individual agent to any extra effort. Other future coordination mechanisms might be added to the family that are reactive and request from other agents that certain tasks be done by certain times; this is quite different behavior that would need to be analyzed separately.

2.6

Mechanism 5: Handling Soft Coordination Relationships

Soft coordination relationships are handled analogously to hard coordination relationships except that they start out with high negotiability. In the current implementation the predecessor of a facilitates relationship is the only one that triggers commitments across agents, although hinders relationships are present. The positive relationship $\mathit{facilitates}(M_1, M_2, \phi_d, \phi_q)$ indicates that executing M1 before M2 decreases the duration of M2 by a 'power' factor related to $\phi_d$ and increases the maximum quality possible by a 'power' factor related to $\phi_q$ (see [9] for the details). A more situation-specific version of this coordination mechanism might ignore relationships with very low 'power'. The relationship $\mathit{hinders}(M_1, M_2, \phi_d, \phi_q)$ is negative and indicates an increase in the duration of M2 and a decrease in maximum possible quality. A coordination mechanism could be designed for hinders (and similar negative relationships) and added to the family. To be proactive like the existing mechanisms, a hinders mechanism would work from the successors of the relationship, try to schedule them late, and commit to an earliest start time on the successor. Figure 3 shows Agent B making a D commitment to do method B4, which in turn allows Agent A to take advantage of the facilitates(T4, T1, 0.5, 0.5) relationship, causing method A1 to take only half the time and produce 1.5 times the quality.
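The Figure 3 numbers can be reproduced with a small worked example. The exact functional form of the 'power' factors is given in [9] and is not restated here; the sketch below assumes the simplest linear reading that matches the example (duration scaled by 1 − φ_d, maximum quality scaled by 1 + φ_q, with the facilitating task fully completed first). The function name `apply_facilitates` and the sample duration and quality values are hypothetical.

```python
def apply_facilitates(duration, max_quality, phi_d, phi_q):
    """Effect of a fully satisfied facilitates relationship on the facilitated method.

    Assumed linear model (not taken from the paper):
      new duration    = duration    * (1 - phi_d)
      new max quality = max_quality * (1 + phi_q)
    """
    if not (0.0 <= phi_d <= 1.0 and 0.0 <= phi_q <= 1.0):
        raise ValueError("phi_d and phi_q are assumed to lie in [0, 1]")
    return duration * (1.0 - phi_d), max_quality * (1.0 + phi_q)


# facilitates(T4, T1, 0.5, 0.5): an illustrative method with duration 10 and quality 4
new_duration, new_quality = apply_facilitates(10.0, 4.0, 0.5, 0.5)
```

With φ_d = φ_q = 0.5 the method takes half its original time and yields 1.5 times its original quality, which is the behavior described for method A1.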

3

Experiments in Generalized Partial Global Planning

In this final section we will discuss experiments we have conducted with our implementation of these ideas, organized around three questions:

• Methodologically, how should we decide when the addition of a particular mechanism is warranted?

• What is the performance of a system using all the mechanisms compared to a system that only broadcasts results? Compared to a system with a centralized scheduler?

• What is the performance space of the GPGP family, as delineated by the five existing mechanisms?

As we have stated several times in this paper, we do not believe that any of the mechanisms that collectively form the GPGP family of coordination algorithms are indispensable. What we can do is evaluate the mechanisms in terms of their costs and benefits to cooperative problem solving, both analytically and experimentally. This analysis and experimentation takes place with respect to a very general task environment that does not correspond to a particular domain. Doing this produces general results, but weaker ones than would be possible to derive in a single fixed domain, because the performance variance between problem episodes will be far greater than the performance variance of the different algorithms within a single episode. Still, this allows us to determine broad characteristics of the algorithm family that can be used to reduce the search for a particular set of mechanism parameters for a particular domain (with or without machine learning techniques). We will also discuss statistical techniques to deal with the large between-episode variances that occur when using randomly generated problems.

Our model of an abstract task environment has ten parameters; Table 1 lists them and the values used in the experiments described in the next two sections.

| Parameter | Values (facilitation exps.) | Values (clustering exps.) |
|---|---|---|
| Mean Branching factor (Poisson) | 1 | 1 |
| Mean Depth (Poisson) | 3 | 3 |
| Mean Duration (exponential) | 10 | (1 10 100) |
| Redundant Method QAF | Max | Max |
| Number of task groups | 2 | (1 5 10) |
| Task QAF distribution | (20%/80% min/max) | (50%/50% min/max), (100%/0% min/max) |
| Hard CR distribution | (10%/90% enables/none) | (0%/100% enables/none), (50%/50% enables/none) |
| Soft CR distribution | (80%/10%/10% facilitates/hinders/none) | (0%/10%/90% facilitates/hinders/none), (50%/10%/40% facilitates/hinders/none) |
| Chance of overlaps (binomial) | 10% | (0% 50% 100%) |
| Facilitation Strength | .1 .5 .9 | .5 |

Table 1: Environmental parameters used to generate the random episodes

The primary sources of overhead associated with the coordination mechanisms include action executions (communication and information gathering), calls to the local scheduler, and any algorithmic overhead associated with the mechanism itself. Table 2 summarizes the total amount of overhead from each source for each coordination mechanism setting and the coordination substrate. L represents the length of processing (time before termination), and d is a general density measure of coordination relationships. We believe that all of these amounts can be derived from the environmental parameters in Table 1; they can also be measured experimentally. Interactions between the presence of coordination mechanisms and these quantities include: the number of methods or tasks in E, which depends on the non-local view mechanism; the number of coordination relationships |CR| or the subsets RCR, HPCR, SPCR, which depends on the number of tasks and methods as well; and the number of commitments |C|, which depends on each of the three mechanisms that makes commitments. The relationships between these quantities can be modeled and verified using methods similar to those in [8].


| Mechanism | Setting | Communications | Information Gathering | Scheduler | Other Overhead |
|---|---|---|---|---|---|
| substrate | | 0 | E + idle | L | O(LC) |
| nlv | none | 0 | 0 | 0 | 0 |
| nlv | some | O(dP) | E detect-CRs | 0 | O(T ∈ E) |
| nlv | all | O(P) | E detect-CRs | 0 | O(T ∈ E) |
| comm | min | O(C) | 0 | 0 | O(C) |
| comm | TG | O(C + E) | 0 | 0 | O(C + E) |
| comm | all | O(M ∈ E) | 0 | 0 | O(M ∈ E) |
| redundant | on | O(RCR) | 0 | 0 | O(RCR * S + CR) |
| hard | on | O(HPCR) | 0 | O(HPCR) | O(HPCR * S + CR) |
| soft | on | O(SPCR) | 0 | O(SPCR) | O(SPCR * S + CR) |

Table 2: Overhead associated with individual mechanisms at each parameter setting

Deciding to add a mechanism. A practical question to ask is simply whether the addition of a particular mechanism will benefit performance for the system of agents. Here we give an example with respect to the soft coordination mechanism, which will make commitments to facilitation relationships. We ran 234 randomly generated episodes (generated with the environmental parameters shown in Table 1) with four agents both with and without the soft coordination mechanism. Because the variance between these randomly generated episodes is so great, we took advantage of the paired response nature of the data to run a non-parametric Wilcoxon matched-pairs signed-ranks test [3]. This test is easy to compute and makes very few assumptions, primarily that the variables are interval-valued and comparable within each block of paired responses. For each of the 234 blocks we calculated the difference in the total final quality achieved by each group of agents and excluded the blocks where there was no difference, leaving 102 blocks. We then replaced the differences with the ranks of their absolute values, and restored the signs on the ranks. Finally we summed the positive and negative ranks separately. A standardized Z score is then calculated. A small value of Z means that there was not much consistent variation, while a large value is unlikely to occur unless one treatment consistently outperformed the other. In our experiment, the null hypothesis is that the system with the soft coordination mechanism did the same as the one without it, and our alternative is that the system with the soft coordination mechanism did better (in terms of total final quality). The result here was Z = -6.9, which is highly significant, and allows us to reject the null hypothesis that the mechanism did not have an effect.

Performance Issues. Another question is how the performance of a fully configured system compares with optimal performance. While we have no optimal parallel scheduler with which to compare ourselves, we do have a single-agent optimal scheduler and a centralized, heuristic parallel scheduler that takes the single-agent optimal schedule as its starting point. In 300 paired response experiments based on the same environmental parameters as the facilitation experiments (Table 1), the centralized parallel scheduler outperformed our distributed GPGP agents 57% of the time (36% no difference, 7% distributed was better) using the total final quality as the only criterion. The GPGP agents produced 85% of the quality that the centralized parallel scheduler did, on average. These results need to be understood in the proper context: the centralized scheduler takes much more processing time than the distributed scheduler and cannot be scaled up to larger numbers of methods or task groups. The centralized scheduler also starts with a global view of the entire episode. We also looked at performance without any of the mechanisms; on the same 300 episodes the GPGP agents produced on average 1.14 times the final quality of the uncoordinated agents. These experiments had very low numbers of enables relationships, which tend to hurt the uncoordinated agents, and also remember that we are only looking at one performance measure: total final quality. Coordinated agents execute far fewer methods because of their ability to avoid redundancy, but this does not show up in final quality because there is no real-time pressure in these 300 episodes (we kept the time pressure constantly low). The redundant execution of methods proves a much more hindering element to the uncoordinated agents when acting under severe time pressure [4].

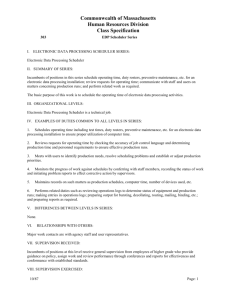

Examining the family's performance space. Next we looked at the multidimensional performance space for the family of coordination algorithms over four different performance measures. Earlier we mentioned that although our simple initial parameterization results in 72 possible coordination algorithms, many of these are not statistically different from one another in randomly generated environments. We applied two standard statistical clustering techniques to develop a much smaller set of significantly different algorithms. The resulting five 'prototypical' combined behaviors are a useful starting point in evaluating the family in a new environment.

[Figure 4 plot: panels of standardized performance, each comparing the Balanced, Mute, Myopic, Simple, and Tough coordination styles.]

Figure 4: Standardized performance by the 5 named coordination styles.

The analysis proceeded as follows: for 63 randomly chosen environments, we generated one random episode and ran each of the 72 "agent types" on the episode (4536 cases). We collected four performance measures: total quality, number of methods executed, number of communication actions, and termination time. We then took this data and standardized each performance measure within an environment, so that each measure is represented as the number of standard deviations from the mean value in that environment. We then took summary statistics for each measure grouped by agent type; this boils the 4536 cases (standardized within each environment) into 72 summary cases (summarized across environments). Each of the 72 summaries corresponds to the average standardized performance of one agent type for the four performance measures. We then used both a hierarchical clustering algorithm (SYSTAT JOIN with complete linkage) and a linear clustering algorithm (SYSTAT KMEANS) to produce the following general prototypical agent classes (we chose one representative algorithm in each class):

Simple: No commitments or non-local view, just broadcasts results.

Myopic: All commitment mechanisms on, but no non-local view.

Balanced: All mechanisms on.

Tough-guy: Balanced agent that makes no soft commitments.

Mute⁹: No communication whatsoever.

KMEANS also produces the mean value of each performance measure for each group, so you can in fact see that the non-communicating agents have a high negative mean "number-of-communications" (-1.16; remember these were averaged from standardized scores) but execute more methods on average and produce less final quality. They also terminate slightly quicker than average. Our "Balanced" group, in comparison, communicates a little more than average, executes many fewer methods (-1.29, way out on the edge of this statistic), returns better-than-average quality and about average termination time. This is reasonable, as 'avoiding redundant work' and other work-reducing ideas are a key feature of the GPGP algorithm.
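The standardize-then-summarize step that feeds the clustering can be sketched as below for a single performance measure. The function name and the `(environment, agent_type, value)` tuple layout are hypothetical, and the use of the population standard deviation is an assumption; the clustering itself (SYSTAT JOIN and KMEANS in the paper) is not reproduced.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardize_and_summarize(cases):
    """cases: iterable of (environment, agent_type, value) for one measure.

    Returns {agent_type: mean standardized score across environments}.
    """
    cases = list(cases)

    # Collect the raw values observed in each environment.
    by_env = defaultdict(list)
    for env, _agent_type, value in cases:
        by_env[env].append(value)
    stats = {env: (mean(vals), pstdev(vals)) for env, vals in by_env.items()}

    # Express each case as standard deviations from its environment's mean.
    by_type = defaultdict(list)
    for env, agent_type, value in cases:
        mu, sigma = stats[env]
        z = 0.0 if sigma == 0 else (value - mu) / sigma
        by_type[agent_type].append(z)

    # One summary case per agent type, averaged across environments.
    return {agent_type: mean(zs) for agent_type, zs in by_type.items()}
```

Standardizing within an environment first is what lets scores from very different episodes be averaged: in the usage below, the same relative ordering in a small-scale and a large-scale environment yields the same standardized scores.

```python
summary = standardize_and_summarize([
    ("env1", "mute", 10), ("env1", "balanced", 20),
    ("env2", "mute", 100), ("env2", "balanced", 300),
])
```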

Figure 4 shows the values of several typical performance measures for only the five named types. Performance measures were standardized within each episode (i.e., across all 72 types). Shown for each are the means and the 10, 25, 50, 75, and 90 percent quantiles. All agents' performances are significantly different by Tukey-Kramer HSD except for: Method Execution (Simple vs. Mute), Total final quality (Balanced vs. Tough), and Deadlines missed (Simple vs. Mute) and (Balanced vs. Tough).

We are also analyzing the effect of environmental characteristics on agent performance. Figure 5 shows an example of the effect of the a priori amount of overlap on the number of method execution actions for the five named agent types. Note again that the balanced and tough agents do significantly less work when there is a lot of overlap (as would be expected). The performance of the tough and balanced agents is similar because (from Table 1) only half the experiments had any facilitation, and when it was present it was only at 50%.

[Figure 5 plot: standardized method execution versus Overlap, one smoothed curve for each named coordination style.]

Figure 5: The effect of overlaps in the task environment on the standardized method execution performance by the 5 named coordination styles (smoothed splines fit to the means).

⁹ This algorithm makes no commitments (mechanisms 3, 4, and 5 off) and communicates (mechanism 2) only 'satisfied commitments'; therefore it sends no communications ever!

4

Conclusions and Future Work

This paper discusses the design of an extendable family of coordination mechanisms, called Generalized Partial Global Planning (GPGP), that form a basic set of coordination mechanisms for teams of cooperative computational agents. An important feature of this approach is an extendable set of modular coordination mechanisms, any subset or all of which can be used in response to a particular task environment. This subset may be parameterized, and the parameterization does not have to be chosen statically, but can instead be chosen on a task-group-by-task-group basis or even in response to a particular problem-solving situation. For example, Mechanism 5 (Handle Soft Predecessor CRs) might be 'on' for certain classes of tasks and 'off' for other classes (that usually have few or very weak soft CRs). The general specification of the GPGP mechanisms involves the detection of and response to certain abstract coordination relationships in the incoming task structure that are not tied to a particular domain. A careful separation of the coordination mechanisms from an agent's local scheduler allows each to better do the job for which it was designed. A complete discussion of the scheduler/decision-maker interface can be found in our companion paper [15]. We believe this separation is useful not only for applying our coordination mechanisms to existing problems with existing, customized local schedulers, but also for problems involving humans (where the coordination mechanism can act as an interface to the person, suggesting possible commitments for the person's consideration and reporting non-local commitments made by others).

Application designers will find this general specification of partial global planning coordination behaviors easier to adapt to their domains than the original distributed vehicle monitoring testbed implementation. What is required to use GPGP or similarly designed algorithms is a local scheduler that can handle local and non-local commitments and can communicate via task structures (the interface is fully described in [15]). GPGP also requires domain-dependent code to detect potential coordination relationships to other agents and verify their presence after non-local information has been received. This paper has outlined the overhead involved with each mechanism, and discussed experimental techniques for demonstrating improved performance in an application. Future work will involve elaborating and validating models of these coordination mechanisms that link environmental parameters to both overhead and performance. We also discussed how to limit the initial search for a suitable application-specific coordination algorithm by using prototypical algorithm family members.

Work not reported here includes the development of a load-balancing coordination mechanism that works by providing better information to the agents with which to resolve redundant commitments. More mechanisms, and more complex mechanisms, are possible; for example, mechanisms that work from the successors of hard and soft relationships instead of the predecessors, or negotiation mechanisms. Mechanisms for behavior such as contracting are also possible. As always, the important question will be "in what environments will the extra overhead of these mechanisms be worthwhile?" Future work will also examine expanding the parameterization of the mechanisms and using machine learning techniques to choose the appropriate parameter values (i.e., learning the best family member for an environment).

References

[1] C. Castelfranchi. Commitments: from individual intentions to groups and organizations. In Michael Prietula, editor, AI and Theories of Groups & Organizations: Conceptual and Empirical Research. AAAI Workshop, 1993. Working Notes.

[2] Philip R. Cohen and Hector J. Levesque. Intention is choice with commitment. Artificial Intelligence, 42(3), 1990.

[3] W. W. Daniel. Applied Nonparametric Statistics. Houghton-Mifflin, Boston, 1978.

[4] Keith S. Decker. Environment Centered Analysis and Design of Coordination Mechanisms. PhD thesis, University of Massachusetts, 1994.

[5] Keith S. Decker, Alan J. Garvey, Marty A. Humphrey, and Victor R. Lesser. Effects of parallelism on blackboard system scheduling. In Proceedings of the Twelfth IJCAI, pages 15-21, Sydney, Australia, August 1991. Extended version in International Journal of Pattern Recognition and Artificial Intelligence 7(2) 1993.

[6] Keith S. Decker and Victor R. Lesser. Generalizing the partial global planning algorithm. International Journal of Intelligent and Cooperative Information Systems, 1(2):319-346, June 1992.

[7] Keith S. Decker and Victor R. Lesser. Analyzing a quantitative coordination relationship. Group Decision and Negotiation, 2(3):195-217, 1993.

[8] Keith S. Decker and Victor R. Lesser. An approach to analyzing the need for meta-level communication. In Proceedings of the Thirteenth IJCAI, Chambéry, August 1993.

[9] Keith S. Decker and Victor R. Lesser. Quantitative modeling of complex computational task environments. In Proceedings of the Eleventh National Conference on Artificial Intelligence, pages 217-224, Washington, July 1993.

[10] E. H. Durfee and T. A. Montgomery. Coordination as distributed search in a hierarchical behavior space. IEEE Transactions on Systems, Man, and Cybernetics, 21(6):1363-1378, November 1991.

[11] Edmund H. Durfee, Victor R. Lesser, and Daniel D. Corkill. Coherent cooperation among communicating problem solvers. IEEE Transactions on Computers, 36(11):1275-1291, November 1987.

[12] E. H. Durfee and V. R. Lesser. Partial global planning: A coordination framework for distributed hypothesis formation. IEEE Transactions on Systems, Man, and Cybernetics, 21(5):1167-1183, September 1991.

[13] Mark S. Fox. An organizational view of distributed systems. IEEE Transactions on Systems, Man, and Cybernetics, 11(1):70-80, January 1981.

[14] J. Galbraith. Organizational Design. Addison-Wesley, Reading, MA, 1977.

[15] Alan Garvey, Keith Decker, and Victor Lesser. A negotiation-based interface between a real-time scheduler and a decision-maker. CS Technical Report 94-08, University of Massachusetts, 1994.

[16] Alan Garvey and Victor Lesser. Design-to-time real-time scheduling. IEEE Transactions on Systems, Man, and Cybernetics, 23(6), 1993. Special Issue on Scheduling, Planning, and Control.

[17] M. R. Genesereth, M. L. Ginsberg, and J. S. Rosenschein. Cooperation without communication. In Proceedings of the Fifth National Conference on Artificial Intelligence, pages 51-57, Philadelphia, PA, August 1986.

[18] N. R. Jennings. Commitments and conventions: The foundation of coordination in multi-agent systems. The Knowledge Engineering Review, 8(3):223-250, 1993.

[19] Paul Lawrence and Jay Lorsch. Organization and Environment. Harvard University Press, Cambridge, MA, 1967.

[20] V. R. Lesser. A retrospective view of FA/C distributed problem solving. IEEE Transactions on Systems, Man, and Cybernetics, 21(6):1347-1363, November 1991.

[21] Yoav Shoham. AGENT0: A simple agent language and its interpreter. In Proceedings of the Ninth National Conference on Artificial Intelligence, pages 704-709, Anaheim, July 1991.

[22] Arthur L. Stinchcombe. Information and Organizations. University of California Press, Berkeley, CA, 1990.

[23] Frank v. Martial. Coordinating Plans of Autonomous Agents. Springer-Verlag, Berlin, 1992. Lecture Notes in Artificial Intelligence no. 610.
