From: KDD-98 Proceedings. Copyright © 1998, AAAI (www.aaai.org). All rights reserved.

Joins that Generalize: Text Classification Using WHIRL

William W. Cohen
AT&T Labs-Research
180 Park Avenue
Florham Park NJ 07932
wcohen@research.att.com

Haym Hirsh
Department of Computer Science
Rutgers University
New Brunswick, NJ 08903
hirsh@cs.rutgers.edu

Abstract

WHIRL is an extension of relational databases that can perform “soft joins” based on the similarity of textual identifiers; these soft joins extend the traditional operation of joining tables based on the equivalence of atomic values. This paper evaluates WHIRL on a number of inductive classification tasks using data from the World Wide Web. We show that although WHIRL is designed for more general similarity-based reasoning tasks, it is competitive with mature inductive classification systems on these classification tasks. In particular, WHIRL generally achieves lower generalization error than C4.5, RIPPER, and several nearest-neighbor methods. WHIRL is also fast: up to 500 times faster than C4.5 on some benchmark problems. We also show that WHIRL can be efficiently used to select from a large pool of unlabeled items those that can be classified correctly with high confidence.

Introduction

Consider the problem of exploratory analysis of data obtained from the Internet. Assuming that one has already narrowed the set of available information sources to a manageable size, and developed some sort of automatic procedure for extracting data from the sites of interest, what remains is still a difficult problem. There are several operations that one would like to support on the resulting data, including standard relational database management system (DBMS) operations, since some information is in the form of data; text retrieval, since some information is in the form of text; and text categorization. Since data can be collected from many sources, one must also support the (non-standard) DBMS operation of data integration (Monge and Elkan 1997; Hernandez and Stolfo 1995).

As an example of data integration, suppose you have two relations, place(univ,state), which contains university names and the states in which they are located, and job(univ,dept), which contains university departments that are hiring. Suppose further that you are interested in job openings located in a particular state. Normally a user could join the two relations to answer this query. However, what if the relations were extracted from two different and unrelated Web sites? The same university may be referred to as “Rutgers University” in one relation, and “Rutgers, the State University of New Jersey” in another. To solve this problem, traditional databases would require some form of key normalization or data cleaning on the relations before they could be joined.

An alternative approach is taken by the database management system (DBMS) WHIRL (Cohen 1998a; 1998b). WHIRL is a conventional DBMS that has been extended to use statistical similarity metrics developed in the information retrieval community to compare and reason about the similarity of pieces of text. These metrics can be used to implement “similarity joins”, an extension of regular joins in which tuples are constructed based on the similarity of values, rather than on equality. Constructed tuples are then presented to the user in an ordered list, with tuples containing the most similar pairs of fields coming first.

As an example, the relations described above could be integrated using the WHIRL query

SELECT place.univ as u1, place.state, job.univ as u2, job.dept
FROM place, job WHERE place.univ~job.univ

where place.univ~job.univ indicates that these items must be similar. The result of this query is a table with columns u1, state, u2, and dept, and rows sorted by the similarity of u1 and u2. Thus rows in which u1 = u2 (i.e., the rows that would be in a standard equijoin of the two relations) will appear first in this table, followed by rows for which u1 and u2 are similar, but not identical (such as the pair “Rutgers University” and “Rutgers, the State University of New Jersey”).

Although WHIRL was designed to query heterogeneous sources of information, WHIRL can also be used in a straight-forward manner for traditional text retrieval and relational data operations. Less obviously, WHIRL can be used for inductive classification of text. To see this, note that a simple form of rote learning can be implemented with a conventional DBMS. Assume we are given training data in the form of a relation train(inst,label) associating instances inst with labels label from some fixed set. (For example, instances might be news headlines, and labels might be subject categories from the set {business, sports, other}.) To classify an unlabeled object X one can store X as the only element of a relation test(inst) and use the SQL query:

SELECT test.inst, train.label
FROM train, test WHERE test.inst=train.inst


In a conventional DBMS this retrieves the correct label for any X that has been explicitly stored in the training set train. However, if one replaces the equality condition test.inst=train.inst with the similarity condition test.inst~train.inst, the resulting WHIRL query will find training instances that are similar to X, and associate their labels with X. More precisely, the answer to the corresponding similarity join will be a table in which every tuple associates a label $L_i$ with X. Each $L_i$ is in the table because there were some instances $Y_{i1}, \ldots, Y_{in}$ in the training data that had label $L_i$ and were similar to X. As we will describe below, every tuple in the table also has a “score”, which depends on the number of these $Y_{ij}$’s and their similarities to X. This method can thus be viewed as a sort of nearest-neighbor classification algorithm.
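The rote-learning baseline described above can be tried with any SQL engine. The following is a minimal sketch using Python's built-in sqlite3 module; the function name `rote_classify` and the sample headlines are illustrative assumptions, not part of the paper. It shows that the equality join labels only instances that appear verbatim in train, which is the limitation the similarity join removes.

```python
import sqlite3

def rote_classify(train_rows, instance):
    """Return the labels of training instances that exactly equal `instance`."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE train (inst TEXT, label TEXT)")
    con.execute("CREATE TABLE test (inst TEXT)")
    con.executemany("INSERT INTO train VALUES (?, ?)", train_rows)
    # test holds the single unlabeled object X
    con.execute("INSERT INTO test VALUES (?)", (instance,))
    rows = con.execute(
        "SELECT test.inst, train.label FROM train, test "
        "WHERE test.inst = train.inst"
    ).fetchall()
    con.close()
    return [label for _, label in rows]

# Hypothetical training data (headline, subject category)
TRAIN = [
    ("Stocks rally on rate cut", "business"),
    ("Home team wins final", "sports"),
]

print(rote_classify(TRAIN, "Home team wins final"))      # exact match
print(rote_classify(TRAIN, "Home team wins the final"))  # near match, no rows
```

A headline that differs by a single word from a stored one receives no label at all under the equality condition.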

Elsewhere we have evaluated WHIRL on data integration tasks (Cohen 1998a). In this paper we show that WHIRL is also well-suited to inductive text classification, a task we would also like a general data manipulation system such as WHIRL to support.

An Overview of WHIRL

We assume the reader is familiar with relational databases and the SQL query language. WHIRL extends SQL with a new TEXT data type and a similarity operator X~Y that applies to any two items X and Y of type TEXT.¹ Associated with this similarity operator is a similarity function SIM(X, Y), to be described shortly, whose range is [0, 1], with larger values representing greater similarity.

This paper focuses on queries of the form

SELECT test.inst, train.label
FROM test, train WHERE test.inst~train.inst

and we therefore tailor our explanation to this class of queries. Given a query of this form and a user-specified parameter K, WHIRL will first find the set of K tuples $(X_i, Y_j, L_j)$ from the Cartesian product of test and train such that $SIM(X_i, Y_j)$ is largest (where $L_j$ is $Y_j$’s label in train). Thus, for example, if test contains a single element X, then the resulting set of tuples corresponds directly to the K nearest neighbors to X, plus their associated labels. Each of these tuples has a numeric score; in this case the score of $(X_i, Y_j, L_j)$ is simply $SIM(X_i, Y_j)$.

The next step for WHIRL is to SELECT test.inst, train.label, i.e., to select the first and third columns of the table of $(X_i, Y_j, L_j)$ tuples. In this projection step, tuples with equal $X_i$ and $L_j$ but different $Y_j$ will be combined.

¹Other published descriptions used a Prolog-like notation for the same language (Cohen 1998a; 1998b). We refer the reader to these earlier papers for descriptions of the full scope of WHIRL and efficient methods for evaluating general WHIRL queries.


The score for each new tuple (X, L) is

$$1 - \prod_{j=1}^{n} (1 - p_j) \qquad (1)$$

where $p_1, \ldots, p_n$ are the scores of the n tuples $(X, Y_1, L), \ldots, (X, Y_n, L)$ that contain both X and L.
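As a small illustration of Equation (1), the sketch below combines the scores of tuples that share the same X and L. The function name `combine_scores` is an assumption made for this example; only the formula itself comes from the text.

```python
from math import prod

def combine_scores(scores):
    """Equation (1): 1 - prod_j (1 - p_j) over the tuples sharing X and L."""
    return 1.0 - prod(1.0 - p for p in scores)

# Two supporting neighbors with similarity 0.5 each give 1 - 0.5 * 0.5
print(combine_scores([0.5, 0.5]))
# A single supporting neighbor keeps its own score
print(combine_scores([0.8]))
```

Under this rule the combined score never falls below the largest individual score, and each additional supporting tuple can only raise it, so two neighbors at 0.6 (combined 0.84) outrank a single neighbor at 0.8.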

The similarity of each X and Y is assessed via the widely used “vector space” model of text (Salton 1989). Assume a vocabulary T of atomic terms that appear in each document.² A TEXT object is represented as a vector of real numbers $v \in \mathbb{R}^{|T|}$, where each component corresponds to a term. We denote with $v_t$ the component of v that corresponds to $t \in T$.

The general idea behind the vector-space representation is that the magnitude of a component $v_t$ should be related to the “importance” of t in the document represented by v, with higher weights assigned to terms that are frequent in the document and infrequent in the collection as a whole; the latter often correspond to proper names and other particularly informative terms. We use the TF-IDF weighting scheme (Salton 1989) in which $v_t = \log(TF_{v,t} + 1) \cdot \log(IDF_t)$. Here $TF_{v,t}$ is the number of times that t occurs in the document represented by v, and $IDF_t = N / n_t$, where N is the total number of records, and $n_t$ is the total number of records that contain the term t in the given field. The similarity of two document vectors v and w is then given by the formula

$$SIM(v, w) = \sum_{t \in T} \frac{v_t \cdot w_t}{\|v\| \cdot \|w\|}$$

This measure is large if the two vectors share many “important” terms, and small otherwise. Notice that due to the normalizing term, $SIM(v, w)$ is always between zero and one.
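The weighting scheme and similarity function above can be sketched in a few lines. This is an illustrative approximation, not WHIRL's implementation: documents are assumed to be pre-tokenized lists of lowercase words (no Porter stemming is applied), and the names `tfidf_vectors` and `sim` are hypothetical.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """docs: list of token lists. Returns one sparse {term: weight} dict per doc,
    with weight v_t = log(TF + 1) * log(N / n_t)."""
    n_docs = len(docs)
    # n_t: number of documents that contain term t
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({
            t: math.log(tf[t] + 1) * math.log(n_docs / doc_freq[t])
            for t in tf
        })
    return vectors

def sim(v, w):
    """Cosine similarity of two sparse vectors; 0.0 if either has zero norm."""
    dot = sum(weight * w[t] for t, weight in v.items() if t in w)
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    norm_w = math.sqrt(sum(x * x for x in w.values()))
    if norm_v == 0.0 or norm_w == 0.0:
        return 0.0
    return dot / (norm_v * norm_w)

docs = [
    ["rutgers", "university"],
    ["rutgers", "state", "university", "new", "jersey"],
    ["stanford", "university"],
]
vecs = tfidf_vectors(docs)
print(sim(vecs[0], vecs[1]))  # positive: both mention the rarer term "rutgers"
print(sim(vecs[0], vecs[2]))  # zero: they share only "university"
```

Because “university” occurs in every record of this toy collection, its IDF is 1 and its weight is log(1) = 0, so it contributes nothing to the similarity; the rarer term “rutgers” carries the match.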

By combining graph search methods with indexing and pruning methods from information retrieval, WHIRL can be implemented fairly efficiently. Details of the implementation and a full description of the semantics of WHIRL can be found elsewhere (Cohen 1998a).

Experimental Results

To evaluate WHIRL as a tool for Web-based text classification tasks, we created nine benchmark problems, listed in Table 1, from pages found on the World Wide Web. Although they vary in domain and size, all were generated in a straight-forward fashion from the relevant pages, and all associate text (typically short, name-like strings) with labels from a relatively small set.³ The table gives a summary description of each domain, the number of classes and number of terms found in each problem, and the number of training and testing examples used in our text classification experiments. On smaller problems we used 10-fold cross-validation (“10cv”), and on larger problems a single holdout set of the specified size was used. In the case of the cdroms and netvet domains, duplicate instances with different labels were removed.

²Currently, T contains all “word stems” (morphologically inspired prefixes) produced by the Porter (1980) stemming algorithm on texts appearing in the database.

³Although much work in this area has focused on longer documents, we focus on short text items that more typically appear in such data integration tasks.


Using WHIRL for Inductive Classification

To label each new test example, the example was placed in a table by itself, and a similarity join was performed with it and the training-set table. This results in a table where the test example is the first item of each tuple, and every label that occurred for any of the top K most similar text items in the training-set table appears as the second item of some tuple. We used K = 30, based on exploratory analysis of a small number of datasets, plus the experience of Yang and Chute (1994) with a related classifier (see below). The highest-scoring label was used as the test item’s classification. If the table was empty, the most frequent class was used.
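The labeling procedure just described can be sketched as follows. This is a brute-force illustration under stated assumptions: `token_overlap` is a simple stand-in similarity (Jaccard overlap of tokens) rather than the paper's TF-IDF SIM, the table is treated as empty when no training item has nonzero similarity, and the names `classify` and `token_overlap` are hypothetical.

```python
import heapq
from collections import Counter, defaultdict

def token_overlap(a, b):
    """Stand-in similarity in [0, 1]; NOT the TF-IDF SIM used by WHIRL."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0

def classify(x, train, k=30, sim=token_overlap):
    """Label x from the K most similar training items, combining the scores
    of same-label neighbors with Equation (1)."""
    scored = [(sim(x, inst), label) for inst, label in train]
    nearest = heapq.nlargest(
        k, (s for s in scored if s[0] > 0.0), key=lambda s: s[0]
    )
    if not nearest:
        # Empty result table: fall back to the most frequent training class
        return Counter(label for _, label in train).most_common(1)[0][0]
    miss = defaultdict(lambda: 1.0)  # running product of (1 - p_j) per label
    for p, label in nearest:
        miss[label] *= 1.0 - p
    return max(miss, key=lambda label: 1.0 - miss[label])

# Hypothetical training data (company name, industry)
train = [
    ("Watson Pharmaceuticals Inc", "drugs"),
    ("Acme Pharmaceuticals", "drugs"),
    ("Generic Drugs Corp", "drugs"),
    ("Coors Brewing Company", "beverages"),
    ("Adolph Coors Company", "beverages"),
]
print(classify("Zeta Pharmaceuticals", train))
print(classify("Coors Company", train))
print(classify("Unknown Name", train))  # no similar item: default class
```

Unlike this sketch, which scores every training item, WHIRL relies on indexing and search to find the top K tuples.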

We compared WHIRL to several baseline learning algorithms. C4.5 (Quinlan 1994) is a feature-vector based decision-tree learner. We used as binary-valued features the same set of terms used in WHIRL’s vectors; however, for tractability reasons, only terms that appeared at least 3 times in the training set were used as features. RIPPER (Cohen 1995) is a rule learning system that has been used for text categorization (Cohen and Singer 1996). We used RIPPER’s set-valued features (Cohen 1996) to represent the instances; this is functionally equivalent to using all terms as binary features, but more efficient. No feature selection was performed with RIPPER. 1-NN finds the nearest item in the training-set table (using the vector-space similarity measure SIM) and gives the test item that item’s label. We also used Yang and Chute’s (1994) distance-weighted k-NN method, hereafter called K-NN_S. This is closely related to WHIRL, but combines the scores of the K nearest neighbors differently, selecting the label L that maximizes $\sum_j p_j$. Finally, K-NN_M is like Yang and Chute’s method, but picks the label L that is most frequent among the K nearest neighbors.
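The three nearest-neighbor combination rules differ only in how the scores of same-label neighbors are aggregated. The sketch below contrasts them on one hand-made neighbor list; the function names and the rule identifiers "sum", "majority", and "whirl" are assumptions for illustration.

```python
from collections import defaultdict

def score_labels(neighbors, rule):
    """neighbors: list of (similarity, label) pairs for the K nearest items."""
    totals = defaultdict(float)
    miss = defaultdict(lambda: 1.0)
    for p, label in neighbors:
        if rule == "sum":          # K-NN_S: sum of similarities
            totals[label] += p
        elif rule == "majority":   # K-NN_M: number of neighbors
            totals[label] += 1.0
        elif rule == "whirl":      # Equation (1): 1 - prod(1 - p_j)
            miss[label] *= 1.0 - p
            totals[label] = 1.0 - miss[label]
        else:
            raise ValueError(f"unknown rule: {rule}")
    return dict(totals)

def pick(neighbors, rule):
    scores = score_labels(neighbors, rule)
    return max(scores, key=scores.get)

# One very close neighbor labeled "a", two moderately close labeled "b"
neighbors = [(0.95, "a"), (0.5, "b"), (0.5, "b")]
print(pick(neighbors, "sum"))       # b: 0.5 + 0.5 = 1.0 beats 0.95
print(pick(neighbors, "majority"))  # b: two votes beat one
print(pick(neighbors, "whirl"))     # a: 0.95 beats 1 - 0.5 * 0.5 = 0.75
```

In this constructed case the rule of Equation (1) favors the single near-exact match, while the sum and majority rules favor the label with more supporting neighbors.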

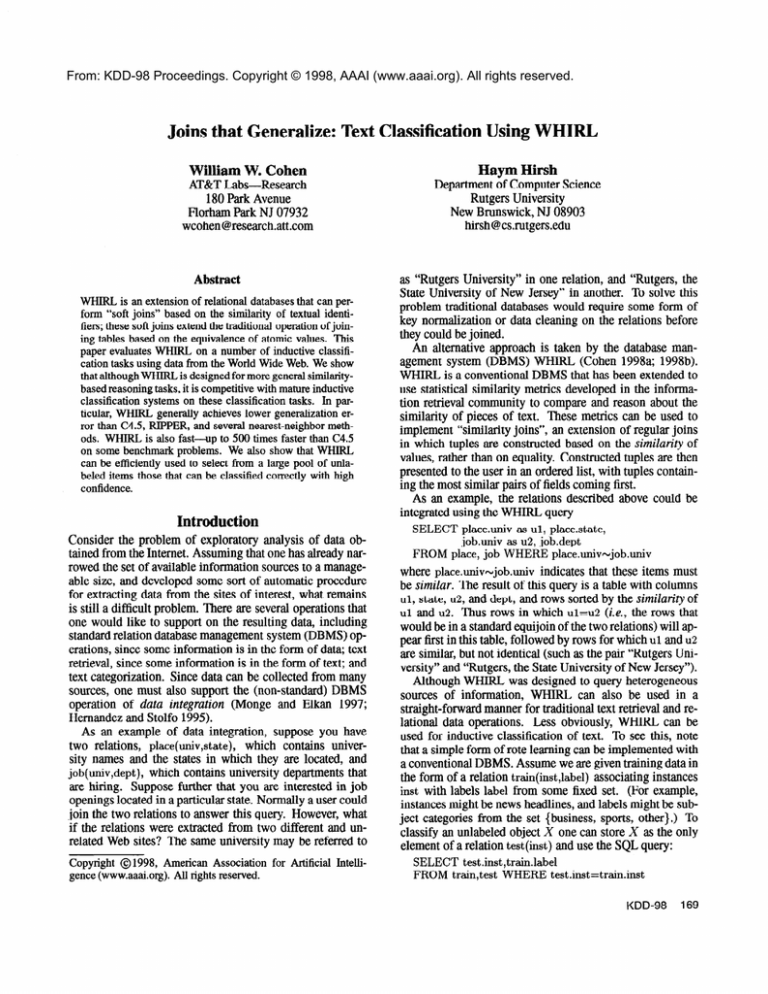

The accuracy of WHIRL and each baseline method, as well as the accuracy of the “default rule” (always labeling an item with the most frequent class in the training data), are shown in Table 2; the highest accuracy for each problem is shown in boldface. The same information is also shown graphically in Figure 1, where each point represents one of the nine test problems.⁴ With respect to accuracy, WHIRL uniformly outperforms K-NN_M, outperforms both 1-NN and C4.5 eight of nine times, and outperforms RIPPER seven of nine times. WHIRL was never significantly worse than any other learner on any individual problem.⁵

⁴The y-value of a point indicates the accuracy of WHIRL on a problem, and the x-value indicates the accuracy of a competing method; points that lie above the line y = x are cases where WHIRL was better. The upper graph compares WHIRL to C4.5 and RIPPER, and the lower graph compares WHIRL to the nearest-neighbor methods.

⁵We used McNemar’s test to test for significant differences on the problems for which a single holdout was used, and a paired t-test on the folds of the cross-validation when 10cv was used.

Figure 1: Scatterplots of learner accuracies (two panels titled “Relative accuracy”; x-axis: accuracy of second learner; lower-panel series: (1NN, whirl), (KNN-sum, whirl), (KNN-maj, whirl))

The algorithm that comes closest to WHIRL is K-NN_S. WHIRL outperforms K-NN_S eight of nine times, but all of these eight differences are small. However, five are statistically significant, indicating that WHIRL represents a small but statistically real improvement over K-NN_S.

Using WHIRL for Selective Classification

Although the error rates are still quite high on some tasks, this is not too surprising given the nature of the data. For example, consider the problem of labeling company names with the industry in which they operate (as in hfine and hcoarse). It is fairly easy to guess the business of “Watson Pharmaceuticals, Inc.”, but correctly labeling “Adolph Coors Company” or “AT&T” requires domain knowledge.

Of course, in many applications, it is not necessary to label every test instance; often it is enough to find a subset of the test data that can be reliably labeled. For example, in constructing a mailing list, it is not necessary to determine if every household in the US is likely to buy the advertised product; one needs only to find a relatively small set of households that are likely customers. The basic problem can be stated as follows: given M test cases, give labels to N of these, for N < M, where N is set by the user. We call this problem selective classification.

One way to perform selective classification is to use a learner’s hypothesis to make a prediction on each test item, and then pick the N items predicted with highest confidence. Associating a confidence with a learner’s prediction is usually straightforward.⁶

| problem | #train | #test | #classes | #terms | text-valued field | label |
|---|---|---|---|---|---|---|
| memos | 334 | 10cv | | | document title | category |
| cdroms | 798 | 10cv | | 1133 | CDRom game name | category |
| birdcom | 914 | 10cv | | 674 | common name of bird | phylogenic order |
| birdsci | 914 | 10cv | | 1738 | common + scientific name of bird | phylogenic order |
| hcoarse | 1875 | 600 | | 2098 | company name | industry (coarse grain) |
| hfine | 1875 | 600 | | 2098 | company name | industry (fine grain) |
| books | 3501 | 1800 | | 7019 | book title | subject heading |
| species | 3119 | 1600 | | 7231 | animal name | phylum |
| netvet | 3596 | 2000 | | 5460 | URL title | category |

Table 1: Description of benchmark problems

default RIPPER C4.5

memos

57.5

19.8

50.9

40.5

cdrom

26.4

38.3 39.2

70.4

88.8 79.6

42.8

birdcom

42.8

91.0

83.3

82.8

birdsci

hcoarse

11.3

28.0 30.2

20.2

12.7

hfine

4.4

16.5 17.2

53.0

books

5.7

42.3

52.2

87.5

90.6

89.4

species

51.8

50.9

netvet

67.1 68.8

22.4

57.52 57.41 53.76 1

1 average 25.24

I

-7mRy WHIRL

66.5

64.4

43.0

78.9

81.5

29.5

17.8

54.7

90.7

64.8

56.64 1

I

47.5

47.2

83.9

82.3

90.3

89.2

32.9

32.7

20.8

21.0

61.4

60.1

943

93.6

68.1

67.8

62.00 62.90

Table 2: Experimental results for accuracy

However, WHIRL also suggests another approach to selective classification. One can again use the query

SELECT test.inst, train.label
FROM train, test WHERE test.inst~train.inst

but let test contain all unlabeled test cases, rather than a single test case. The result of this query will be a table of tuples $(X_i, L_j)$ where $X_i$ is a test case and $L_j$ is a label associated with one or more training instances that are similar to $X_i$.

In implementing this approach we used the score of a tuple (as computed by WHIRL) as a measure of confidence. To avoid returning the same example multiple times with different labels, we also discarded pairs (X, L) such that (X, L′) (with L′ ≠ L) also appears in the table with a higher score; this guarantees that each example is labeled at most once.

Rather than uniformly selecting K nearest neighbors for each test example, this approach (henceforth the “join method”) finds the K pairs of training and test examples that are most similar, and then projects the final table from this intermediate result. Hence, the join method is not identical to repeating a K-NN style classification for every test query (for any value of K).
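The join method can be sketched by brute force as follows. This is an illustration under stated assumptions rather than WHIRL's algorithm: it enumerates the full Cartesian product instead of using indexing and search, `token_overlap` is a stand-in for the paper's TF-IDF SIM, and the names `join_classify` and `select_top` are hypothetical.

```python
import heapq
from collections import defaultdict

def token_overlap(a, b):
    """Stand-in similarity in [0, 1]; NOT the TF-IDF SIM used by WHIRL."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0

def join_classify(test_items, train, sim, k=5000):
    """Keep the K most similar (test, train) pairs overall, project onto
    (test item, label) with Equation (1), and keep the best label per item.
    Returns (item, label, score) triples sorted by decreasing score."""
    pairs = ((sim(x, y), x, label) for x in test_items for y, label in train)
    top = heapq.nlargest(k, (p for p in pairs if p[0] > 0.0), key=lambda p: p[0])
    miss = defaultdict(lambda: 1.0)  # running product of (1 - p_j)
    for p, x, label in top:
        miss[(x, label)] *= 1.0 - p
    best = {}
    for (x, label), m in miss.items():
        score = 1.0 - m
        # Discard (X, L) when some (X, L') already has a higher score
        if x not in best or score > best[x][1]:
            best[x] = (label, score)
    ranked = [(x, label, score) for x, (label, score) in best.items()]
    return sorted(ranked, key=lambda r: r[2], reverse=True)

def select_top(ranked, n):
    """Selective classification: the N most confident labelings."""
    return ranked[:n]

# Hypothetical data
train = [
    ("Watson Pharmaceuticals Inc", "drugs"),
    ("Acme Pharmaceuticals", "drugs"),
    ("Coors Brewing Company", "beverages"),
    ("Adolph Coors Company", "beverages"),
]
test_items = ["Coors Company", "Zeta Pharmaceuticals", "Unknown Name"]
ranked = join_classify(test_items, train, token_overlap, k=3)
print(select_top(ranked, 1))
```

Because the K retained pairs are shared by all test items, an item with no sufficiently similar training instance ("Unknown Name" here) receives no label, and a small K can leave even moderately similar items unlabeled.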

An advantage of the join method is that, since only one query is executed for all the test instances, it is simpler and faster than using repeated K-NN queries. Table 3 compares the efficiency of the baseline learners to the efficiency of the join method. In each case we report the combined time to learn and classify the test cases. The left-hand side of the table gives the absolute runtime for each method, and the right-hand side gives the runtime for the baseline learners as a multiple of the runtime of the join method. The join method is much faster than symbolic methods, with average speedups of nearly 200 for C4.5 (500 in the best case) and nearly 30 for RIPPER (60 in the best case). It is also significantly faster than the straight-forward WHIRL approach, or even using 1-NN on this task.⁷ This speedup is obtained even though the size K of the intermediate table must be fairly large, since it is shared by all test instances. We used K = 5000 in the experiments below, and from preliminary experiments K = 1000 appears to give measurably worse performance.

⁶For C4.5 and RIPPER, a confidence measure can be obtained using the proportion of each class of the training examples that reached the terminal node or rule used for classifying the test case. For nearest-neighbor approaches a confidence measure can be obtained from the score associated with the method’s combination rule.

A potential disadvantage of the join method is that test cases which are not near any training cases will not be given any classification; for the five larger problems, for example, only 1/4 to 1/2 of the test examples receive labels. In selective classification, however, this is not a problem. If one considers only the N top-scoring classifications for small N, there is no apparent loss in accuracy in using the join method rather than WHIRL; indeed, there appears to be a slight gain. The upper graph in Figure 2 plots the error on the N selected predictions (also known as the precision at rank N) against N for the join method, and compares this to the baseline learners that perform best, according to this metric. All points are averages across all nine datasets. On average, the join method tends to outperform WHIRL for N less than about 200; after this point WHIRL’s performance begins to dominate. Generally, the join method is very competitive with C4.5 and RIPPER.⁸ However, these averages conceal a lot of individual variation; while the join method is best on average, there are many individual cases for which its performance is not best. This is shown in the lower scatter plot in Figure 2.⁹

⁷Runtimes for the K-NN variants are comparable to WHIRL’s.

⁸RIPPER performs better than the join method, on average, for values of N < 60, but the difference is small; for instance, the join method averages only 1.3 more errors than RIPPER for N = 50.

⁹Each point here compares the join method with some other baseline method at N = 100, a point at which all four methods are close in average performance.

Table 3: Run time of selected learning methods

Figure 2: Experimental results for selective classification (upper panel: “Average over all datasets”; lower panel: “Precision at N=100”, x-axis: precision of other learner)

Summary

WHIRL extends relational databases to reason about the similarity of text-valued fields using information-retrieval technology. This paper evaluates WHIRL on inductive classification tasks, where it can be applied in a very natural way; in fact, a single unlabeled example can be classified using one simple WHIRL query, and a closely related WHIRL query can be used to “selectively classify” a pool of examples. Our experiments show that, despite its greater generality, WHIRL is extremely competitive with state-of-the-art methods for inductive classification. WHIRL is fast, since special indexing methods can be used to find the neighbors of an instance, and no time is spent building a model. WHIRL was also shown to be generally superior in generalization performance to existing methods.

References

W. W. Cohen. 1995. Fast effective rule induction. In Machine Learning: Proceedings of the Twelfth International Conference. Morgan Kaufmann.

W. W. Cohen. 1996. Learning with set-valued features. In Proceedings of the Thirteenth National Conference on Artificial Intelligence. AAAI Press.

W. W. Cohen. 1998a. Integration of heterogeneous databases without common domains using queries based on textual similarity. In Proceedings of the 1998 ACM SIGMOD International Conference on Management of Data. ACM Press.

W. W. Cohen. 1998b. A Web-based information system that reasons with structured collections of text. In Proceedings of the Second International ACM Conference on Autonomous Agents. ACM Press.

W. W. Cohen and Y. Singer. 1996. Context-sensitive learning methods for text categorization. In Proceedings of the 19th Annual International ACM Conference on Research and Development in Information Retrieval, pages 307-315. ACM Press.

M. Hernandez and S. Stolfo. 1995. The merge/purge problem for large databases. In Proceedings of the 1995 ACM SIGMOD, May 1995.

A. Monge and C. Elkan. 1997. An efficient domain-independent algorithm for detecting approximately duplicate database records. In The proceedings of the SIGMOD 1997 workshop on data mining and knowledge discovery, May 1997.

M. F. Porter. 1980. An algorithm for suffix stripping. Program, 14(3):130-137.

J. R. Quinlan. 1994. C4.5: Programs for machine learning. Morgan Kaufmann.

G. Salton, editor. 1989. Automatic Text Processing. Addison Wesley.

Y. Yang and C. G. Chute. 1994. An example-based mapping method for text classification and retrieval. ACM Transactions on Information Systems, 12(3).