Journal of Artificial Intelligence Research 31 (2008) 33-82

Submitted 02/07; published 01/08

Planning with Durative Actions in Stochastic Domains

Mausam

Daniel S. Weld

MAUSAM @ CS . WASHINGTON . EDU

WELD @ CS . WASHINGTON . EDU

Dept of Computer Science and Engineering

Box 352350, University of Washington

Seattle, WA 98195 USA

Abstract

Probabilistic planning problems are typically modeled as a Markov Decision Process (MDP).

MDPs, while an otherwise expressive model, allow only for sequential, non-durative actions. This

poses severe restrictions in modeling and solving a real world planning problem. We extend the

MDP model to incorporate — 1) simultaneous action execution, 2) durative actions, and 3) stochastic durations. We develop several algorithms to combat the computational explosion introduced by

these features. The key theoretical ideas used in building these algorithms are — modeling a complex problem as an MDP in extended state/action space, pruning of irrelevant actions, sampling

of relevant actions, using informed heuristics to guide the search, hybridizing different planners

to achieve benefits of both, approximating the problem and replanning. Our empirical evaluation

illuminates the different merits in using various algorithms, viz., optimality, empirical closeness to

optimality, theoretical error bounds, and speed.

1. Introduction

Recent progress achieved by planning researchers has yielded new algorithms which relax, individually, many of the classical assumptions. For example, successful temporal planners like SGPlan,

SAPA, etc. (Chen, Wah, & Hsu, 2006; Do & Kambhampati, 2003) are able to model actions that take

time, and probabilistic planners like GPT, LAO*, SPUDD, etc. (Bonet & Geffner, 2005; Hansen &

Zilberstein, 2001; Hoey, St-Aubin, Hu, & Boutilier, 1999) can deal with actions with probabilistic

outcomes, etc. However, in order to apply automated planning to many real-world domains we must

eliminate larger groups of the assumptions in concert. For example, NASA researchers note that

optimal control for a NASA Mars rover requires reasoning about uncertain, concurrent, durative

actions and a mixture of discrete and metric fluents (Bresina, Dearden, Meuleau, Smith, & Washington, 2002). While today’s planners can handle large problems with deterministic concurrent

durative actions, and MDPs provide a clear framework for non-concurrent durative actions in the

face of uncertainty, few researchers have considered concurrent, uncertain, durative actions — the

focus of this paper.

As an example consider the NASA Mars rovers, Spirit and Oppurtunity. They have the goal of

gathering data from different locations with various instruments (color and infrared cameras, microscopic imager, Mossbauer spectrometers etc.) and transmitting this data back to Earth. Concurrent

actions are essential since instruments can be turned on, warmed up and calibrated, while the rover

is moving, using other instruments or transmitting data. Similarly, uncertainty must be explicitly

confronted as the rover’s movement, arm control and other actions cannot be accurately predicted.

Furthermore, all of their actions, e.g., moving between locations and setting up experiments, take

time. In fact, these temporal durations are themselves uncertain — the rover might lose its way and

c

2008

AI Access Foundation. All rights reserved.

M AUSAM & W ELD

take a long time to reach another location, etc. To be able to solve the planning problems encountered by a rover, our planning framework needs to explicitly model all these domain constructs —

concurrency, actions with uncertain outcomes and uncertain durations.

In this paper we present a unified formalism that models all these domain features together.

Concurrent Markov Decision Processes (CoMDPs) extend MDPs by allowing multiple actions per

decision epoch. We use CoMDPs as the base to model all planning problems involving concurrency.

Problems with durative actions, concurrent probabilistic temporal planning (CPTP), are formulated

as CoMDPs in an extended state space. The formulation is also able to incorporate the uncertainty

in durations in the form of probabilistic distributions.

Solving these planning problems poses several computational challenges: concurrency, extended durations, and uncertainty in those durations all lead to explosive growth in the state space,

action space and branching factor. We develop two techniques, Pruned RTDP and Sampled RTDP to

address the blowup from concurrency. We also develop the “DUR” family of algorithms to handle

stochastic durations. These algorithms explore different points in the running time vs. solutionquality tradeoff. The different algorithms propose several speedup mechanisms such as — 1) pruning of provably sub-optimal actions in a Bellman backup, 2) intelligent sampling from the action

space, 3) admissible and inadmissible heuristics computed by solving non-concurrent problems, 4)

hybridizing two planners to obtain a hybridized planner that finds good quality solution in intermediate running times, 5) approximating stochastic durations by their mean values and replanning, 6)

exploiting the structure of multi-modal duration distributions to achieve higher quality approximations.

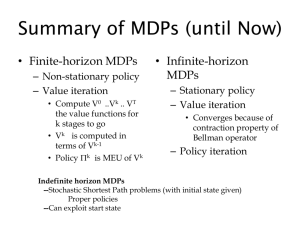

The rest of the paper is organized as follows: In section 2 we discuss the fundamentals of

MDPs and the real-time dynamic programming (RTDP) solution method. In Section 3 we describe

the model of Concurrent MDPs. Section 4 investigates the theoretical properties of the temporal

problems. Section 5 explains our formulation of the CPTP problem for deterministic durations. The

algorithms are extended for the case of stochastic durations in Section 6. Each section is supported

with an empirical evaluation of the techniques presented in that section. In Section 7 we survey the

related work in the area. We conclude with future directions of research in Sections 8 and 9.

2. Background

Planning problems under probabilistic uncertainty are often modeled using Markov Decision Processes (MDPs). Different research communities have looked at slightly different formulations of

MDPs. These versions typically differ in objective functions (maximizing reward vs. minimizing

cost), horizons (finite, infinite, indefinite) and action representations (DBN vs. parametrized action

schemata). All these formulations are very similar in nature, and so are the algorithms to solve

them. Though, the methods proposed in the paper are applicable to all the variants of these models,

for clarity of explanation we assume a particular formulation, known as the stochastic shortest path

problem (Bertsekas, 1995).

We define a Markov decision process (M) as a tuple S, A, Ap, Pr, C, G, s0 in which

• S is a finite set of discrete states. We use factored MDPs, i.e., S is compactly represented in

terms of a set of state variables.

• A is a finite set of actions.

34

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

State variables : x1 , x2 , x3 , x4 , p12

Action

Precondition

Effect

toggle-x1

¬p12

x1 ← ¬x1

toggle-x2

p12

x2 ← ¬x2

toggle-x3

true

x3 ← ¬x3

no change

toggle-x4

true

x4 ← ¬x4

no change

toggle-p12

true

p12 ← ¬p12

Goal : x1 = 1, x2 = 1, x3 = 1, x4 = 1

Probability

1

1

0.9

0.1

0.9

0.1

1

Figure 1: Probabilistic STRIPS definition of a simple MDP with potential parallelism

• Ap defines an applicability function. Ap : S → P(A), denotes the set of actions that can be

applied in a given state (P represents the power set).

• Pr : S × A × S → [0, 1] is the transition function. We write Pr(s |s, a) to denote the

probability of arriving at state s after executing action a in state s.

• C : S × A × S → + is the cost model. We write C(s, a, s ) to denote the cost incurred when

the state s is reached after executing action a in state s.

• G ⊆ S is a set of absorbing goal states, i.e., the process ends once one of these states is

reached.

• s0 is a start state.

We assume full observability, i.e., the execution system has complete access to the new state

after an action has been performed. We seek to find an optimal, stationary policy — i.e., a function

π: S → A that minimizes the expected cost (over an indefinite horizon) incurred to reach a goal

state. Note that any cost function, J: S → , mapping states to the expected cost of reaching a goal

state defines a policy as follows:

πJ (s) = argmin

a∈Ap(s) s ∈S

Pr(s |s, a) C(s, a, s ) + J(s )

(1)

The optimal policy derives from the optimal cost function, J ∗ , which satisfies the following pair

of Bellman equations.

J ∗ (s) = 0, if s ∈ G else

J ∗ (s) = min

a∈Ap(s)

Pr(s |s, a) C(s, a, s ) + J ∗ (s )

(2)

s ∈S

For example, Figure 1 defines a simple MDP where four state variables (x1 , . . . , x4 ) need to be

set using toggle actions. Some of the actions, e.g., toggle-x3 are probabilistic.

Various algorithms have been developed to solve MDPs. Value iteration is a dynamic programming approach in which the optimal cost function (the solution to equations 2) is calculated as the

limit of a series of approximations, each considering increasingly long action sequences. If Jn (s)

35

M AUSAM & W ELD

is the cost of state s in iteration n, then the cost of state s in the next iteration is calculated with a

process called a Bellman backup as follows:

Jn+1 (s) = min

a∈Ap(s)

Pr(s |s, a) C(s, a, s ) + Jn (s )

(3)

s ∈S

Value iteration terminates when ∀s ∈ S, |Jn (s) − Jn−1 (s)| ≤ , and this termination is guaranteed for > 0. Furthermore, in the limit, the sequence of {Ji } is guaranteed to converge to the

optimal cost function, J ∗ , regardless of the initial values as long as a goal can be reached from every reachable state with non-zero probability. Unfortunately, value iteration tends to be quite slow,

since it explicitly updates every state, and |S| is exponential in the number of domain features. One

optimization restricts search to the part of state space reachable from the initial state s0 . Two algorithms exploiting this reachability analysis are LAO* (Hansen & Zilberstein, 2001) and our focus:

RTDP (Barto, Bradtke, & Singh, 1995).

RTDP, conceptually, is a lazy version of value iteration in which the states get updated in proportion to the frequency with which they are visited by the repeated executions of the greedy policy.

An RTDP trial is a path starting from s0 , following the greedy policy and updating the costs of

the states visited using Bellman backups; the trial ends when a goal is reached or the number of

updates exceeds a threshold. RTDP repeats these trials until convergence. Note that common states

are updated frequently, while RTDP wastes no time on states that are unreachable, given the current

policy. RTDP’s strength is its ability to quickly produce a relatively good policy; however, complete

convergence (at every relevant state) is slow because less likely (but potentially important) states get

updated infrequently. Furthermore, RTDP is not guaranteed to terminate. Labeled RTDP (LRTDP)

fixes these problems with a clever labeling scheme that focuses attention on states where the value

function has not yet converged (Bonet & Geffner, 2003). Labeled RTDP is guaranteed to terminate,

and is guaranteed to converge to the -approximation of the optimal cost function (for states reachable using the optimal policy) if the initial cost function is admissible, all costs (C) positive and a

goal reachable from all reachable states with non-zero probability.

MDPs are a powerful framework to model stochastic planning domains. However, MDPs make

two unrealistic assumptions — 1) all actions need to be executed sequentially, and 2) all actions

are instantaneous. Unfortunately, there are many real-world domains where these assumptions are

unrealistic. For example, concurrent actions are essential for a Mars rover, since instruments can

be turned on, warmed up and calibrated while the rover is moving, and using other instruments

for transmitting data. Moreover, the action durations are non-zero and stochastic — the rover might

lose its way while navigating and may take a long time to reach its destination; it may make multiple

attempts before finding the accurate arm placement. In this paper we successively relax these two

assumptions and build models and algorithms that can scale up in spite of the additional complexities

imposed by the more general models.

3. Concurrent Markov Decision Processes

We define a new model, Concurrent MDP (CoMDP), which allows multiple actions to be executed

in parallel. This model is different from semi-MDPs and generalized state semi-MDPs (Younes

& Simmons, 2004b) in that it does not incorporate action durations explicitly. CoMDPs focus on

adding concurrency in an MDP framework. The input to a CoMDP is slightly different from that of

an MDP – S, A, Ap , Pr , C , G, s0 . The new applicability function, probability model and cost

36

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

(Ap , Pr and C respectively) encode the distinction between allowing sequential executions of

single actions versus the simultaneous executions of sets of actions.

3.1 The Model

The set of states (S), set of actions (A), goals (G) and the start state (s0 ) follow the input of an MDP.

The difference lies in the fact that instead of executing only one action at a time, we may execute

multiple of them. Let us define an action combination, A, as a set of one or more actions to be

executed in parallel. With an action combination as a new unit operator available to the agent, the

CoMDP takes the following new inputs

• Ap defines the new applicability function. Ap : S → P(P(A)), denotes the set of action

combinations that can be applied in a given state.

• Pr : S × P(A) × S → [0, 1] is the transition function. We write Pr (s |s, A) to denote the

probability of arriving at state s after executing action combination A in state s.

• C : S × P(A) × S → + is the cost model. We write C (s, A, s ) to denote the cost incurred

when the state s is reached after executing action combination A in state s.

In essence, a CoMDP takes an action combination as a unit operator instead of a single action.

Our approach is to convert a CoMDP into an equivalent MDP (M ) that can be specified by the

tuple S, P(A), Ap , Pr , C , G, s0 and solve it using the known MDP algorithms.

3.2 Case Study: CoMDP over Probabilistic STRIPS

In general a CoMDP could require an exponentially larger input than does an MDP, since the transition model, cost model and the applicability function are all defined in terms of action combinations

as opposed to actions. A compact input representation for a general CoMDP is an interesting, open

research question for the future. In this work, we consider a special class of compact CoMDP

– one that is defined naturally via a domain description very similar to the probabilistic STRIPS

representation for MDPs (Boutilier, Dean, & Hanks, 1999).

Given a domain encoded in probabilistic STRIPS we can compute a safe set of co-executable

actions. Under this safe semantics, the probabilistic dynamics gets defined in a consistent way as

we describe below.

3.2.1 A PPLICABILITY F UNCTION

We first discuss how to compute the sets of actions that can be executed in parallel since some

actions may conflict with each other. We adopt the classical planning notion of mutual exclusion (Blum & Furst, 1997) and apply it to the factored action representation of probabilistic STRIPS.

Two distinct actions are mutex (may not be executed concurrently) if in any state one of the following occurs:

1. they have inconsistent preconditions

2. an outcome of one action conflicts with an outcome of the other

3. the precondition of one action conflicts with the (possibly probabilistic) effect of the other.

37

M AUSAM & W ELD

4. the effect of one action possibly modifies a feature upon which another action’s transition

function is conditioned upon.

Additionally, an action is never mutex with itself. In essence, the non-mutex actions do not interact — the effects of executing the sequence a1 ; a2 equals those for a2 ; a1 — and so the semantics

for parallel executions is clear.

Example: Continuing with Figure 1, toggle-x1 , toggle-x3 and toggle-x4 can execute in parallel but

toggle-x1 and toggle-x2 are mutex as they have conflicting preconditions. Similarly, toggle-x1 and

toggle-p12 are mutex as the effect of toggle-p12 interferes with the precondition of toggle-x1 . If

toggle-x4 ’s outcomes depended on toggle-x1 then they would be mutex too, due to point 4 above.

For example, toggle-x4 toggle-x1 will be mutex if the effect of toggle-x4 was as follows: “if togglex1 then the probability of x4 ← ¬x4 is 0.9 else 0.1”. 2

The applicability function is defined as the set of action-combinations, A, such that each action

in A is independently applicable in s and all of the actions are pairwise non-mutex with each other.

Note that pairwise concurrency is sufficient to ensure problem-free concurrency of all multiple

actions in A. Formally Ap can be defined in terms of our original definition Ap as follows:

Ap (s) = {A ⊆ A|∀a, a ∈ A, a, a ∈ Ap(s) ∧ ¬mutex(a, a )}

(4)

3.2.2 T RANSITION F UNCTION

Let A = {a1 , a2 , . . . , ak } be an action combination applicable in s. Since none of the actions are

mutex, the transition function may be calculated by choosing any arbitrary order in which to apply

them as follows:

Pr (s |s, A) =

...

Pr(s1 |s, a1 )Pr(s2 |s1 , a2 ) . . . Pr(s |sk−1 , ak )

(5)

s1 ,s2 ,...sk ∈S

While we define the applicability function and the transition function by allowing only a consistent set of actions to be executable concurrently, there are alternative definitions possible. For

instance, one might be willing to allow executing two actions together if the probability that they

conflict is very small. A conflict may be defined as two actions asserting contradictory effects or

one negating the precondition of the other. In such a case, a new state called failure could be created such that the system transitions to this state in case of a conflict. And the transition may be

computed to reflect a low probability transition to this failure state.

Although we impose that the model be conflict-free, most of our techniques don’t actually depend on this assumption explicitly and extend to general CoMDPs.

3.2.3 C OST MODEL

We make a small change to the probabilistic STRIPS representation. Instead of defining a single

cost (C) for each action, we define it additively as a sum of resource and time components as follows:

• Let t be the durative cost, i.e., cost due to time taken to complete the action.

• Let r be the resource cost, i.e., cost of resources used for the action.

38

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Assuming additivity we can think of cost of an action C(s, a, s ) = t(s, a, s ) + r(s, a, s ), to be

sum of its time and resource usage. Hence, the cost model for a combination of actions in terms of

these components may be defined as:

C (s, {a1 , a2 , ..., ak }, s ) =

k

r(s, ai , s ) + max {t(s, ai , s )}

i=1..k

i=1

(6)

For example, a Mars rover might incur lower cost when it preheats an instrument while changing

locations than if it executes the actions sequentially, because the total time is reduced while the

energy consumed does not change.

3.3 Solving a CoMDP with MDP Algorithms

We have taken a concurrent MDP that allowed concurrency in actions and formulated it as an equivalent MDP, M , in an extended action space. For the rest of the paper we will use the term CoMDP

to also refer to the equivalent MDP M .

3.3.1 B ELLMAN EQUATIONS

We extend Equations 2 to a set of equations representing the solution to a CoMDP:

J∗ (s) = 0, if s ∈ G else

J∗ (s) = min

A∈Ap (s)

s ∈S

Pr (s |s, A) C (s, A, s ) + J∗ (s )

(7)

These equations are the same as in a traditional MDP, except that instead of considering single

actions for backup in a state, we need to consider all applicable action combinations. Thus, only this

small change must be made to traditional algorithms (e.g., value iteration, LAO*, Labeled RTDP).

However, since the number of action combinations is worst-case exponential in |A|, efficiently

solving a CoMDP requires new techniques. Unfortunately, there is no structure to exploit easily,

since an optimal action for a state from a classical MDP solution may not even appear in the optimal

action combination for the associated concurrent MDP.

Theorem 1 All actions in an optimal combination for a CoMDP (M ) may be individually suboptimal for the MDP M.

Proof: In the domain of Figure 1 let us have an additional action toggle-x34 that toggles both x3

and x4 with probability 0.5 and toggles exactly one of x3 and x4 with probability 0.25 each. Let

all the actions take one time unit each, and therefore cost of any action combination is one as well.

Let the start state be x1 = 1, x2 = 1, x3 = 0, x4 = 0 and p12 = 1. For the MDP M the only optimal

action for the start state is toggle-x34 . However, for the CoMDP M the optimal combination is

{toggle-x3 , toggle-x4 }. 2

3.4 Pruned Bellman Backups

Recall that during a trial, Labeled RTDP performs Bellman backups in order to calculate the costs of

applicable actions (or in our case, action combinations) and then chooses the best action (combination); we now describe two pruning techniques that reduce the number of backups to be computed.

39

M AUSAM & W ELD

Let Q (s, A) be the expected cost incurred by executing an action combination A in state s and then

following the greedy policy, i.e.

Qn (s, A) =

s ∈S

Pr (s |s, A) C (s, A, s ) + Jn−1 (s )

(8)

A Bellman update can thus be rewritten as:

Jn (s) =

min

A∈Ap (s)

Qn (s, A)

(9)

3.4.1 C OMBO -S KIPPING

Since the number of applicable action combinations can be exponential, we would like to prune

suboptimal combinations. The following theorem imposes a lower bound on Q (s, A) in terms of

the costs and the Q -values of single actions. For this theorem the costs of the actions may depend

only on the action and not the starting or ending state, i.e., for all states ∀s, s C(s, a, s ) = C(a).

Theorem 2 Let A = {a1 , a2 , . . . , ak } be an action combination which is applicable in state s. For

a CoMDP over probabilistic STRIPS, if costs are dependent only on actions and Qn values are

monotonically non-decreasing then

Q (s, A) ≥ max Q (s, {ai }) + C (A) −

i=1..k

k

i=1

C ({ai })

Proof:

Qn (s, A) = C (A) +

⇒

s

s

Pr (s |s, A)Jn−1 (s )

(using Eqn. 8)

Pr (s |s, A)Jn−1 (s ) = Qn (s, A) − C (A)

Qn (s, {a1 }) = C ({a1 }) +

≤ C ({a1 }) +

s

s

Pr(s |s, a1 )Jn−1 (s )

Pr(s |s, a1 ) C ({a2 }) +

= C ({a1 }) + C ({a2 }) +

≤

=

k

i=1

k

i=1

C ({ai }) +

(10)

s

s

s

Pr(s |s , a2 )Jn−2 (s )

(using Eqns. 8 and 9)

Pr (s |s, {a1 , a2 })Jn−2 (s )

Pr (s |s, A)Jn−k (s )

C ({ai }) + [Qn−k+1 (s, A) − C (A)]

Replacing n by n + k − 1

40

(repeating for all actions in A)

(using Eqn. 10)

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Qn (s, A) ≥ Qn+k−1 (s, {a1 }) + C (A) −

≥ Qn (s, {a1 }) + C (A) −

≥

k

k

i=1

max Qn (s, {ai }) + C (A) −

i=1..k

2

i=1

C ({ai })

C ({ai })

k

i=1

(monotonicity of Qn )

C ({ai })

The proof above assumes equation 5 from probabilistic STRIPS. The following corollary can

be used to prune suboptimal action combinations:

Corollary 3 Let Jn (s) be an upper bound of Jn (s). If

Jn (s) < max Qn (s, {ai }) + C (A) −

k

i=1..k

i=1

C ({ai })

then A cannot be optimal for state s in this iteration.

Proof: Let A∗n = {a1 , a2 , . . . , ak } be the optimal combination for state s in this iteration n. Then,

Jn (s) ≥ Jn (s)

Jn (s) = Qn (s, A∗n )

Combining with Theorem 2

Jn (s) ≥ maxi=1..k Qn (s, {ai }) +

C (A∗n )

−

k

i=1

C ({ai }) 2

Corollary 3 justifies a pruning rule, combo-skipping, that preserves optimality in any iteration

algorithm that maintains cost function monotonicity. This is powerful because all Bellman-backup

based algorithms preserve monotonicity when started with an admissible cost function. To apply

combo-skipping, one must compute all the Q (s, {a}) values for single actions a that are applicable

in s. To calculate Jn (s) one may use the optimal combination for state s in the previous iteration

(Aopt ) and compute Qn (s, Aopt ). This value gives an upper bound on the value Jn (s).

Example: Consider Figure 1. Let a single action incur unit cost, and let the cost of an action combination be: C (A) = 0.5 + 0.5|A|. Let state s = (1,1,0,0,1) represent the ordered values x1 = 1, x2 =

1, x3 = 0, x4 = 0, and p12 = 1. Suppose, after the nth iteration, the cost function assigns the values:

Jn (s) = 1, Jn (s1 =(1,0,0,0,1)) = 2, Jn (s2 =(1,1,1,0,1)) = 1, Jn (s3 =(1,1,0,1,1)) = 1. Let Aopt for

state s be {toggle-x3 , toggle-x4 }. Now, Qn+1 (s, {toggle-x2 }) = C ({toggle-x2 }) + Jn (s1 ) = 3

and Qn+1 (s, Aopt ) = C (Aopt ) + 0.81×0 + 0.09×Jn (s2 ) + 0.09×Jn (s3 ) + 0.01×Jn (s) = 1.69.

So now we can apply Corollary 3 to skip combination {toggle-x2 , toggle-x3 } in this iteration, since

using toggle-x2 as a1 , we have Jn+1 (s) = Qn+1 (s, Aopt ) = 1.69 ≤ 3 + 1.5 - 2 = 2.5. 2

Experiments show that combo-skipping yields considerable savings. Unfortunately, comboskipping has a weakness — it prunes a combination for only a single iteration. In contrast, our

second rule, combo-elimination, prunes irrelevant combinations altogether.

41

M AUSAM & W ELD

3.4.2 C OMBO -E LIMINATION

We adapt the action elimination theorem from traditional MDPs (Bertsekas, 1995) to prove a similar

theorem for CoMDPs.

Theorem 4 Let A be an action combination which is applicable in state s. Let Q∗ (s, A) denote

a lower bound of Q∗ (s, A). If Q∗ (s, A) > J∗ (s) then A is never the optimal combination for

state s.

Proof: Because a CoMDP is an MDP in a new action space, the original proof for MDPs (Bertsekas,

1995) holds after replacing an action by an ‘action combination’. 2

In order to apply the theorem for pruning, one must be able to evaluate the upper and lower

bounds. By using an admissible cost function when starting RTDP search (or in value iteration,

LAO* etc.), the current cost Jn (s) is guaranteed to be a lower bound of the optimal cost; thus,

Qn (s, A) will also be a lower bound of Q∗ (s, A). Thus, it is easy to compute the left hand side

of the inequality. To calculate an upper bound of the optimal J∗ (s), one may solve the MDP M,

i.e., the traditional MDP that forbids concurrency. This is much faster than solving the CoMDP,

and yields an upper bound on cost, because forbidding concurrency restricts the policy to use a

strict subset of legal action combinations. Notice that combo-elimination can be used for all general

MDPs and is not restricted to only CoMDPs over probabilistic STRIPS.

Example: Continuing with the previous example, let A={toggle-x2 } then Qn+1 (s, A) = C (A) +

Jn (s1 ) = 3 and J∗ (s) = 2.222 (from solving MDP M). As 3 > 2.222, A can be eliminated for

state s in all remaining iterations. 2

Used in this fashion, combo-elimination requires the additional overhead of optimally solving

the single-action MDP M. Since algorithms like RTDP exploit state-space reachability to limit

computation to relevant states, we do this computation incrementally, as new states are visited by

our algorithm.

Combo-elimination also requires computation of the current value of Q (s, A) (for the lower

bound of Q∗ (s, A)); this differs from combo-skipping which avoids this computation. However,

once combo-elimination prunes a combination, it never needs to be reconsidered. Thus, there is

a tradeoff: should one perform an expensive computation, hoping for long-term pruning, or try a

cheaper pruning rule with fewer benefits? Since Q-value computation is the costly step, we adopt

the following heuristic: “First, try combo-skipping; if it fails to prune the combination, attempt

combo-elimination; if it succeeds, never consider it again”. We also tried implementing some other

heuristics, such as: 1) If some combination is being skipped repeatedly, then try to prune it altogether with combo-elimination. 2) In every state, try combo-elimination with probability p. Neither

alternative performed significantly better, so we kept our original (lower overhead) heuristic.

Since combo-skipping does not change any step of labeled RTDP and combo-elimination removes provably sub-optimal combinations, pruned labeled RTDP maintains convergence, termination, optimality and efficiency, when used with an admissible heuristic.

3.5 Sampled Bellman Backups

Since the fundamental challenge posed by CoMDPs is the explosion of action combinations, sampling is a promising method to reduce the number of Bellman backups required per state. We

describe a variant of RTDP, called sampled RTDP, which performs backups on a random set of

42

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

action combinations1 , choosing from a distribution that favors combinations that are likely to be

optimal. We generate our distribution by:

1. using combinations that were previously discovered to have low Q -values (recorded by memoizing the best combinations per state, after each iteration)

2. calculating the Q -values of all applicable single actions (using current cost function) and

then biasing the sampling of combinations to choose the ones that contain actions with low

Q -values.

Algorithm 1 Sampled Bellman Backup(state, m)

1:

2:

3:

4:

5:

6:

7:

8:

9:

10:

11:

Function 2 SampleComb(state, i, l)

1:

2:

3:

4:

5:

6:

7:

8:

9:

10:

11:

12:

13:

14:

15:

//returns the best combination found

list l = ∅ //a list of all applicable actions with their values

for all action ∈ A do

compute Q (state, {action})

insert a, 1/Q (state, {action}) in l

for all i ∈ [1..m] do

newcomb = SampleComb(state, i, l);

compute Q (state, newcomb)

clear memoizedlist[state]

compute Qmin as the minimum of all Q values computed in line 7

store all combinations A with Q (state, A) = Qmin in memoizedlist[state]

return the first entry in memoizedlist[state]

//returns ith combination for the sampled backup

if i ≤ size(memoizedlist[state]) then

return ith entry in memoizedlist[state] //return the combination memoized in previous iteration

newcomb = ∅

repeat

randomly sample an action a from l proportional to its value

insert a in newcomb

remove all actions mutex with a from l

if l is empty then

done = true

else if |newcomb| == 1 then

done = false //sample at least 2 actions per combination

else

|newcomb|

done = true with prob. |newcomb|+1

until done

return newcomb

This approach exposes an exploration / exploitation trade-off. Exploration, here, refers to testing a wide range of action combinations to improve understanding of their relative merit. Exploitation, on the other hand, advocates performing backups on the combinations that have previously

been shown to be the best. We manage the tradeoff by carefully maintaining the distribution over

combinations. First, we only memoize best combinations per state; these are always backed-up

1. A similar action sampling approach was also used in the context of space shuttle scheduling to reduce the number of

actions considered during value function computation (Zhang & Dietterich, 1995).

43

M AUSAM & W ELD

in a Bellman update. Other combinations are constructed by an incremental probabilistic process,

which builds a combination by first randomly choosing an initial action (weighted by its individual Q -value), then deciding whether to add a non-mutex action or stop growing the combination.

There are many implementations possible for this high level idea. We tried several of those and

found the results to be very similar in all of them. Algorithm 1 describes the implementation used

in our experiments. The algorithm takes a state and a total number of combinations m as an input

and returns the best combination obtained so far. It also memoizes all the best combinations for the

state in memoizedlist. Function 2 is a helper function that returns the ith combination that is either

one of the best combinations memoized in the previous iteration or a new sampled combination.

Also notice line 10 in Function 2. It forces the sampled combinations to be at least size 2, since all

individual actions have already been backed up (line 3 of Algo 1).

3.5.1 T ERMINATION AND O PTIMALITY

Since the system does not consider every possible action combination, sampled RTDP is not guaranteed to choose the best combination to execute at each state. As a result, even when started with

an admissible heuristic, the algorithm may assign Jn (s) a cost that is greater than the optimal J∗ (s)

— i.e., the Jn (s) values are no longer admissible. If a better combination is chosen in a subsequent

iteration, Jn+1 (s) might be set a lower value than Jn (s), thus sampled RTDP is not monotonic.

This is unfortunate, since admissibility and monotonicity are important properties required for termination2 and optimality in labeled RTDP; indeed, sampled RTDP loses these important theoretical

properties. The good news is that it is extremely useful in practice. In our experiments, sampled

RTDP usually terminates quickly, and returns costs that are extremely close to the optimal.

3.5.2 I MPROVING S OLUTION Q UALITY

We have investigated several heuristics in order to improve the quality of the solutions found by

sampled RTDP. Our heuristics compensate for the errors due to partial search and lack of admissibility.

• Heuristic 1: Whenever sampled RTDP asserts convergence of a state, do not immediately

label it as converged (which would preclude further exploration (Bonet & Geffner, 2003));

instead first run a complete backup phase, using all the admissible combinations, to rule out

any easy-to-detect inconsistencies.

• Heuristic 2: Run sampled RTDP to completion, and use the cost function it produces, J s (),

as the initial heuristic estimate, J0 (), for a subsequent run of pruned RTDP. Usually, such a

heuristic, though inadmissible, is highly informative. Hence, pruned RTDP terminates quite

quickly.

• Heuristic 3: Run sampled RTDP before pruned RTDP, as in Heuristic 2, except instead of

using the J s () cost function directly as an initial estimate, scale linearly downward — i.e.,

use J0 () := cJ s () for some constant c ∈ (0, 1). While there are no guarantees we hope that

this lies on the admissible side of the optimal. In our experience this is often the case for

c = 0.9, and the run of pruned RTDP yields the optimal policy very quickly.

2. To ensure termination we implemented the policy: if number of trials exceeds a threshold, force monotonicity on the

cost function. This will achieve termination but will reduce quality of solution.

44

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Experiments showed that Heuristic 1 returns a cost function that is close to optimal. Adding

Heuristic 2 improves this value moderately, and a combination of Heuristics 1 and 3 returns the

optimal solution in our experiments.

3.6 Experiments: Concurrent MDP

Concurrent MDP is a fundamental formulation, modeling concurrent actions in a general planning

domain. We first compare the various techniques to solve CoMDPs, viz., pruned and sampled RTDP.

In following sections we use these techniques to model problems with durative actions.

We tested our algorithms on problems in three domains. The first domain was a probabilistic

variant of NASA Rover domain from the 2002 AIPS Planning Competition (Long & Fox, 2003), in

which there are multiple objects to be photographed and various rocks to be tested with resulting

data communicated back to the base station. Cameras need to be focused, and arms need to be

positioned before usage. Since the rover has multiple arms and multiple cameras, the domain is

highly parallel. The cost function includes both resource and time components, so executing multiple actions in parallel is cheaper than executing them sequentially. We generated problems with

20-30 state variables having up to 81,000 reachable states and the average number of applicable

combinations per state, Avg(Ap(s)), which measures the amount of concurrency in a problem, is

up to 2735.

We also tested on a probabilistic version of a machineshop domain with multiple subtasks (e.g.,

roll, shape, paint, polish etc.), which need to be performed on different objects using different

machines. Machines can perform in parallel, but not all are capable of every task. We tested

on problems with 26-28 state variables and around 32,000 reachable states. Avg(Ap(s)) ranged

between 170 and 2640 on the various problems.

Finally, we tested on an artificial domain similar to the one shown in Figure 1 but much more

complex. In this domain, some Boolean variables need to be toggled; however, toggling is probabilistic in nature. Moreover, certain pairs of actions have conflicting preconditions and thus, by

varying the number of mutex actions we may control the domain’s degree of parallelism. All the

problems in this domain had 19 state variables and about 32,000 reachable states, with Avg(Ap(s))

between 1024 and 12287.

We used Labeled RTDP, as implemented in GPT (Bonet & Geffner, 2005), as the base MDP

solver. It is implemented in C++. We implemented3 various algorithms, unpruned RTDP (U RTDP), pruned RTDP using only combo skipping (Ps -RTDP), pruned RTDP using both combo

skipping and combo elimination (Pse -RTDP), sampled RTDP using Heuristic 1 (S-RTDP) and sampled RTDP using both Heuristics 1 and 3, with value functions scaled with 0.9 (S3 -RTDP). We tested

all of these algorithms on a number of problem instantiations from our three domains, generated by

varying the number of objects, degrees of parallelism, and distances to goal. The experiments were

performed on a 2.8 GHz Pentium processor with a 2 GB RAM.

We observe (Figure 2(a,b)) that pruning significantly speeds the algorithm. But the comparison of Pse -RTDP with S-RTDP and S3 -RTDP (Figure 3(a,b)) shows that sampling has a dramatic

speedup with respect to the pruned versions. In fact, pure sampling, S-RTDP, converges extremely

quickly, and S3 -RTDP is slightly slower. However, S3 -RTDP is still much faster than Pse -RTDP.

The comparison of qualities of solutions produced by S-RTDP and S3 -RTDP w.r.t. optimal is shown

in Table 1. We observe that solutions produced by S-RTDP are always nearly optimal. Since the

3. The code may be downloaded at http://www.cs.washington.edu/ai/comdp/comdp.tgz

45

M AUSAM & W ELD

Comparison of Pruned and Unpruned RTDP for Rover domain

Comparison of Pruned and Unpruned RTDP for Factory domain

12000

y=x

Ps-RTDP

Pse-RTDP

25000

Times for Pruned RTDP (in sec)

Times for Pruned RTDP (in sec)

30000

20000

15000

10000

5000

y=x

Ps-RTDP

Pse-RTDP

10000

8000

6000

4000

2000

0

0

0

5000

10000

15000

20000

25000

30000

0

Times for Unpruned RTDP (in sec)

2000

4000

6000

8000

10000

12000

Times for Unpruned RTDP (in sec)

Figure 2: (a,b): Pruned vs. Unpruned RTDP for Rover and MachineShop domains respectively. Pruning

non-optimal combinations achieves significant speedups on larger problems.

Comparison of Pruned and Sampled RTDP for Rover domain

Comparison of Pruned and Sampled RTDP for Factory domain

8000

y=x

S-RTDP

S3-RTDP

8000

Times for Sampled RTDP (in sec)

Times for Sampled RTDP (in sec)

10000

6000

4000

2000

0

y=x

S-RTDP

S3-RTDP

7000

6000

5000

4000

3000

2000

1000

0

0

2000 4000 6000 8000 1000012000140001600018000

Times for Pruned RTDP (Pse-RTDP) - (in sec)

0

1000 2000 3000 4000 5000 6000 7000 8000

Times for Pruned RTDP (Pse-RTDP) - (in sec)

Figure 3: (a,b): Sampled vs Pruned RTDP for Rover and MachineShop domains respectively. Random

sampling of action combinations yields dramatic improvements in running times.

46

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Comparison of algorithms with size of problem for Rover domain

30000

S-RTDP

S3-RTDP

Pse-RTDP

U-RTDP

25000

S-RTDP

S3-RTDP

Pse-RTDP

U-RTDP

15000

20000

Times (in sec)

Times (in sec)

Comparison of different algorithms for Artificial domain

20000

15000

10000

10000

5000

5000

0

0

0

5e+07

1e+08

1.5e+08

Reach(|S|)*Avg(Ap(s))

2e+08

2.5e+08

0

2000

4000

6000 8000

Avg(Ap(s))

10000 12000 14000

Figure 4: (a,b): Comparison of different algorithms with size of the problems for Rover and Artificial domains. As the problem size increases, the gap between sampled and pruned approaches widens

considerably.

Results on varying the Number of samples for Rover Problem#4

300

250

200

150

100

50

0

Running times

Values of start state

300

100

200

300 400 500 600 700

Concurrency : Avg(Ap(s))/|A|

800

900

12.8

250

12.79

200

12.78

150

12.77

100

12.76

50

J*(s0)

0

0

12.81

Value of the start state

350

S-RTDP/Pse-RTDP

Times for Sampled RTDP (in sec)

Speedup : Sampled RTDP/Pruned RTDP

Speedup vs. Concurrency for Artificial domain

350

10

20

30

40 50 60 70 80

Number of samples

12.74

90 100

Figure 5: (a): Relative Speed vs. Concurrency for Artificial domain. (b) : Variation of quality of solution

and efficiency of algorithm (with 95% confidence intervals) with the number of samples in Sampled RTDP for one particular problem from the Rover domain. As number of samples increase,

the quality of solution approaches optimal and time still remains better than Pse -RTDP (which

takes 259 sec. for this problem).

47

M AUSAM & W ELD

Problem

Rover1

Rover2

Rover3

Rover4

Rover5

Rover6

Rover7

Artificial1

Artificial2

Artificial3

MachineShop1

MachineShop2

MachineShop3

MachineShop4

MachineShop5

J(s0 ) (S-RTDP)

10.7538

10.7535

11.0016

12.7490

7.3163

10.5063

12.9343

4.5137

6.3847

6.5583

15.0859

14.1414

16.3771

15.8588

9.0314

J ∗ (s0 ) (Optimal)

10.7535

10.7535

11.0016

12.7461

7.3163

10.5063

12.9246

4.5137

6.3847

6.5583

15.0338

14.0329

16.3412

15.8588

8.9844

Error

<0.01%

0

0

0.02%

0

0

0.08%

0

0

0

0.35%

0.77%

0.22%

0

0.56%

Table 1: Quality of solutions produced by Sampled RTDP

error of S-RTDP is small, scaling it by 0.9 makes it an admissible initial cost function for the pruned

RTDP; indeed, in all experiments, S3 -RTDP produced the optimal solution.

Figure 4(a,b) demonstrates how running times vary with problem size. We use the product of

the number of reachable states and the average number of applicable action combinations per state

as an estimate of the size of the problem (the number of reachable states in all artificial domains is

the same, hence the x-axis for Figure 4(b) is Avg(Ap(s))). From these figures, we verify that the

number of applicable combinations plays a major role in the running times of the concurrent MDP

algorithms. In Figure 5(a), we fix all factors and vary the degree of parallelism. We observe that the

speedups obtained by S-RTDP increase as concurrency increases. This is a very encouraging result,

and we can expect S-RTDP to perform well on large problems inolving high concurrency, even if

the other approaches fail.

In Figure 5(b), we present another experiment in which we vary the number of action combinations sampled in each backup. While solution quality is inferior when sampling only a few

combinations, it quickly approaches the optimal on increasing the number of samples. In all other

experiments we sample 40 combinations per state.

4. Challenges for Temporal Planning

While the CoMDP model is powerful enough to model concurrency in actions, it still assumes each

action to be instantaneous. We now incorporate actual action durations in the modeling the problem.

This is essential to increase the scope of current models to real world domains.

Before we present our model and the algorithms we discuss several new theoretical challenges

imposed by explicit action durations. Note that the results in this section apply to a wide range of

planning problems:

• regardless of whether durations are uncertain or fixed

• regardless of whether effects are stochastic or deterministic.

48

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Actions of uncertain duration are modeled by associating a distribution (possibly conditioned

on the outcome of stochastic effects) over execution times. We focus on problems whose objective

is to achieve a goal state while minimizing total expected time (make-span), but our results extend

to cost functions that combine make-span and resource usage. This raises the question of when a

goal counts as achieved. We require that:

Assumption 1 All executing actions terminate before the goal is considered achieved.

Assumption 2 An action, once started, cannot be terminated prematurely.

We start by asking the question “Is there a restricted set of time points such that optimality is

preserved even if actions are started only at these points?”

Definition 1 Any time point when a new action is allowed to start execution is called a decision

epoch. A time point is a pivot if it is either 0 or a time when a new effect might occur (e.g., the

end of an action’s execution) or a new precondition may be needed or an existing precondition may

no longer be needed. A happening is either 0 or a time when an effect actually occurs or a new

precondition is definitely needed or an existing precondition is no longer needed.

Intuitively, a happening is a point where a change in the world state or action constraints actually

“happens” (e.g., by a new effect or a new precondition). When execution crosses a pivot (a possible

happening), information is gained by the agent’s execution system (e.g., did or didn’t the effect

occur) which may “change the direction” of future action choices. Clearly, if action durations are

deterministic, then the set of pivots is the same as the set of happenings.

Example: Consider an action a whose durations follow a uniform integer duration between 1 and

10. If it is started at time 0 then all timepoints 0, 1, 2,. . ., 10 are pivots. If in a certain execution it

finishes at time 4 then 4 (and 0) is a happening (for this execution). 2

Definition 2 An action is a PDDL2.1 action (Fox & Long, 2003) if the following hold:

• The effects are realized instantaneously either (at start) or (at end), i.e., at the beginning or

the at the completion of the action (respectively).

• The preconditions may need to hold instaneously before the start (at start), before the end (at

end) or over the complete execution of the action (over all).

(:durative-action a

:duration (= ?duration 4)

:condition (and (over all P ) (at end Q))

:effect (at end Goal))

(:durative-action b

:duration (= ?duration 2)

:effect (and (at start Q) (at end (not P ))))

Figure 6: A domain to illustrate that an expressive action model may require arbitrary decision epochs for a

solution. In this example, b needs to start at 3 units after a’s execution to reach Goal.

49

M AUSAM & W ELD

Theorem 5 For a PDDL2.1 domain restricting decision epochs to pivots causes incompleteness

(i.e., a problem may be incorrectly deemed unsolvable).

Proof: Consider the deterministic temporal planning domain in Figure 6 that uses PDDL2.1 notation

(Fox & Long, 2003). If the initial state is P =true and Q=false, then the only way to reach Goal is

to start a at time t (e.g., 0), and b at some timepoint in the open interval (t + 2, t + 4). Clearly, no

new information is gained at any of the time points in this interval and none of them is a pivot. Still,

they are required for solving the problem. 2

Intuitively, the instantaneous start and end effects of two PDDL2.1 actions may require a certain

relative alignment within them to achieve the goal. This alignment may force one action to start

somewhere (possibly at a non-pivot point) in the midst of the other’s execution, thus requiring

intermediate decision epochs to be considered.

Temporal planners may be classified as having one of two architectures: constraint-posting

approaches in which the times of action execution are gradually constrained during planning (e.g.,

Zeno and LPG, see Penberthy and Weld, 1994; Gerevini and Serina, 2002) and extended statespace methods (e.g., TP4 and SAPA, see Haslum and Geffner, 2001; Do and Kambhampati, 2001).

Theorem 5 holds for both architectures but has strong computational implications for state-space

planners because limiting attention to a subset of decision epochs can speed these planners. The

theorem also shows that planners like SAPA and Prottle (Little, Aberdeen, & Thiebaux, 2005) are

incomplete. Fortunately, an assumption restricts the set of decision epochs considerably.

Definition 3 An action is a TGP-style action4 if all of the following hold:

• The effects are realized at some unknown point during action execution, and thus can be used

only once the action has completed.

• The preconditions must hold at the beginning of an action.

• The preconditions (and the features on which its transition function is conditioned) must not

be changed during an action’s execution, except by an effect of the action itself.

Thus, two TGP-style actions may not execute concurrently if they clobber each other’s preconditions or effects. For the case of TGP-style actions the set of happenings is nothing but the set of

time points when some action terminates. TGP pivots are the set of points when an action might

terminate. (Of course both these sets additionally include zero).

Theorem 6 If all actions are TGP-style, then the set of decision epochs may be restricted to pivots

without sacrificing completeness or optimality.

Proof Sketch: By contradiction. Suppose that no optimal policy satisfies the theorem; then there

must exist a path through the optimal policy in which one must start an action, a, at time t even

though there is no action which could have terminated at t. Since the planner hasn’t gained any

information at t, a case analysis (which requires actions to be TGP-style) shows that one could

have started a earlier in the execution path without increasing the make-span. The detailed proof is

discussed in the Appendix. 2

In the case of deterministic durations, the set of happenings is same as the set of pivots; hence

the following corollary holds:

4. While the original TGP (Smith & Weld, 1999) considered only deterministic actions of fixed duration, we use the

phrase “TGP-style” in a more general way, without these restrictions.

50

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Probabillity: 0.5

a0

s0

a2

G

a1

Make−span: 3

Probability 0.5

a0

s0

a1

G

b0

Make−span: 9

Time

0

2

4

6

8

Figure 7: Pivot decision epochs are necessary for optimal planning in face of nonmonotonic continuation. In this domain, Goal can be achieved by {a0 , a1 }; a2 or b0 ; a0 has duration 2

or 9; and b0 is mutex with a1 . The optimal policy starts a0 and then, if a0 does not finish

at time 2, it starts b0 (otherwise it starts a1 ).

Corollary 7 If all actions are TGP-style with deterministic durations, then the set of decision

epochs may be restricted to happenings without sacrificing completeness or optimality.

When planning with uncertain durations there may be a huge number of pivots; it is useful to

further constrain the range of decision epochs.

Definition 4 An action has independent duration if there is no correlation between its probabilistic

effects and its duration.

Definition 5 An action has monotonic continuation if the expected time until action termination is

nonincreasing during execution.

Actions without probabilistic effects, by nature, have independent duration. Actions with monotonic continuations are common, e.g. those with uniform, exponential, Gaussian, and many other duration distributions. However, actions with bimodal or multi-modal distributions don’t have monotonic continuations. For example consider an action with uniform distribution over [1,3]. If the

action doesn’t terminate until 2, then the expected time until completion is calculated as 2, 1.5,

and 1 for times 0, 1, and 2 respectively, which is monotonically decreasing. For an example of

non-monotonic continuation see Figure 18.

Conjecture 8 If all actions are TGP-style, have independent duration and monotonic continuation,

then the set of decision epochs may be restricted to happenings without sacrificing completeness or

optimality.

If an action’s continuation is nonmonotonic then failure to terminate can increase the expected

time remaining and cause another sub-plan to be preferred (see Figure 7). Similarly, if an action’s

duration isn’t independent then failure to terminate changes the probability of its eventual effects

and this may prompt new actions to be started.

By exploiting these theorems and conjecture we may significantly speed planning since we are

able to limit the number of decision epochs needed for decision-making. We use this theoretical

understanding in our models. First, for simplicity, we consider only the case of TGP-style actions

with deterministic durations. In Section 6, we relax this restriction by allowing stochastic durations,

both unimodal as well as multimodal.

51

M AUSAM & W ELD

toggle−p12

p12 (effect)

conflict

¬p12 (Precondition)

toggle−x1

0

2

4

6

8

10

Figure 8: A sample execution demonstrating conflict due to interfering preconditions and effects. (The

actions are shaded to disambiguate them with preconditions and effects)

5. Temporal Planning with Deterministic Durations

We use the abbreviation CPTP (short for Concurrent Probabilistic Temporal Planning) to refer to

the probabilistic planning problem with durative actions. A CPTP problem has an input model

similar to that of CoMDPs except that action costs, C(s, a, s ), are replaced by their deterministic

durations, Δ(a), i.e., the input is of the form S, A, Pr, Δ, G, s0 . We study the objective of minimizing the expected time (make-span) of reaching a goal. For the rest of the paper we make the

following assumptions:

Assumption 3 All action durations are integer-valued.

This assumption has a negligible effect on expressiveness because one can convert a problem

with rational durations into one that satisfies Assumption 3 by scaling all durations by the g.c.d. of

the denominators. In case of irrational durations, one can always find an arbitrarily close approximation to the original problem by approximating the irrational durations by rational numbers.

For reasons discussed in the previous section we adopt the TGP temporal action model of Smith

and Weld (1999), rather than the more complex PDDL2.1 (Fox & Long, 2003). Specifically:

Assumption 4 All actions follow the TGP model.

These restrictions are consistent with our previous definition of concurrency. Specifically, the

mutex definitions (of CoMDPs over probabilistic STRIPS) hold and are required under these assumptions. As an illustration, consider Figure 8. It describes a situation in which two actions with

interfering preconditions and effects can not be executed concurrently. To see why not, suppose

initially p12 was false and two actions toggle-x1 and toggle-p12 were started at time 2 and 4, respectively. As ¬p12 is a precondition of toggle-x1 , whose duration is 5, it needs to remain false

until time 7. But toggle-p12 may produce its effects anytime between 4 and 9, which may conflict

with the preconditions of the other executing action. Hence, we forbid the concurrent execution of

toggle-x1 and toggle-p12 to ensure a completely predictable outcome distribution.

Because of this definition of concurrency, the dynamics of our model remains consistent with

Equation 5. Thus the techniques developed for CoMDPs derived from probabilistic STRIPS actions

may be used.

52

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

An Aligned Epoch policy execution

(takes 9 units)

toggle−x1

t3

f

f

f

f

t3 t3 t3 t3

0

5

s

10

time

toggle−x1

f

f

f

f

t3 t3 t3 t3 t3

s

An Interwoven Epoch policy execution

(takes 5 units)

Figure 9: Comparison of times taken in a sample execution of an interwoven-epoch policy and an alignedepoch policy. In both trajectories the toggle-x3 (t3) action fails four times before succeeding.

Because the aligned policy must wait for all actions to complete before starting any more, it takes

more time than the interwoven policy, which can start more actions in the middle.

5.1 Formulation as a CoMDP

We can model a CPTP problem as a CoMDP, and thus as an MDP, in more than one way. We list

the two prominent formulations below. Our first formulation, aligned epoch CoMDP models the

problem approximately but solves it quickly. The second formulation, interleaved epochs models

the problem exactly but results in a larger state space and hence takes longer to solve using existing

techniques. In subsequent subsections we explore ways to speed up policy construction for the

interleaved epoch formulation.

5.1.1 A LIGNED E POCH S EARCH S PACE

A simple way to formulate CPTP is to model it as a standard CoMDP over probabilistic STRIPS,

in which action costs are set to their durations and the cost of a combination is the maximum

duration of the constituent actions (as in Equation 6). This formulation introduces a substantial

approximation to the CPTP problem. While this is true for deterministic domains too, we illustrate

this using our example involving stochastic effects. Figure 9 compares the trajectories in which the

toggle-x3 (t3) actions fails for four consecutive times before succeeding. In the figure, “f” and “s”

denote failure and success of uncertain actions, respectively. The vertical dashed lines represent the

time-points when an action is started.

Consider the actual executions of the resulting policies. In the aligned-epoch case (Figure 9

top), once a combination of actions is started at a state, the next decision can be taken only when

the effects of all actions have been observed (hence the name aligned-epochs). In contrast, Figure 9

bottom shows that at a decision epoch in the optimal execution for a CPTP problem, many actions

may be midway in their execution. We have to explicitly take into account these actions and their

remaining execution times when making a subsequent decision. Thus, the actual state space for

CPTP decision making is substantially different from that of the simple aligned-epoch model.

Note that due to Corollary 7 it is sufficient to consider a new decision epoch only at a happening,

i.e., a time-point when one or more actions complete. Thus, using Assumption 3 we infer that these

decision epochs will be discrete (integer). Of course, not all optimal policies will have this property.

53

M AUSAM & W ELD

State variables : x1 , x2 , x3 , x4 , p12

Action

Δ(a) Precondition

toggle-x1

5

¬p12

toggle-x2

5

p12

toggle-x3

1

true

Effect

x1 ← ¬x1

x2 ← ¬x2

x3 ← ¬x3

no change

toggle-x4

1

true

x4 ← ¬x4

no change

toggle-p12

5

true

p12 ← ¬p12

Goal : x1 = 1, x2 = 1, x3 = 1, x4 = 1

Probability

1

1

0.9

0.1

0.9

0.1

1

Figure 10: The domain of Example 1 extended with action durations.

But it is easy to see that there exists at least one optimal policy in which each action begins at a

happening. Hence our search space reduces considerably.

5.1.2 I NTERWOVEN E POCH S EARCH S PACE

We adapt the search space representation of Haslum and Geffner (2001), which is similar to that

in other research (Bacchus & Ady, 2001; Do & Kambhampati, 2001). Our original state space S

in Section 2 is augmented by including the set of actions currently executing and the times passed

since they were started. Formally, let the new interwoven state5 s ∈ S –- be an ordered pair X, Y where:

• X∈S

• Y = {(a, δ)|a ∈ A, 0 ≤ δ < Δ(a)}

Here X represents the values of the state variables (i.e. X is a state in the original state space)

and Y denotes the set of ongoing actions “a” and the times

passed since their start “δ”. Thus the

overall interwoven-epoch search space is S –- = S × a∈A {a} × ZΔ(a) , where ZΔ(a) represents

the set {0, 1, . . . , Δ(a) − 1} and

denotes the Cartesian product over multiple sets.

Also define As to be the set of actions already in execution. In other words, As is a projection

of Y ignoring execution times in progress:

As = {a|(a, δ) ∈ Y ∧ s = X, Y }

Example: Continuing our example with the domain of Figure 10, suppose state s1 has all state

variables false, and suppose the action toggle-x1 was started 3 units ago from the current time. Such

a state would be represented as X1 , Y1 with X1 =(F, F, F, F, F ) and Y1 ={(toggle-x1 ,3)} (the five

state variables are listed in the order: x1 , x2 , x3 , x4 and p12 ). The set As1 would be {toggle-x1 }.

To allow the possibility of simply waiting for some action to complete execution, that is, deciding at a decision epoch not to start any additional action, we augment the set A with a no-op action,

which is applicable in all states s = X, Y where Y = ∅ (i.e. states in which some action is still

being executed). For a state s, the no-op action is mutex with all non-executing actions, i.e., those in

A \ As . In other words, at any decision epoch either a no-op will be started or any combination not

5. We use the subscript –- to denote the interwoven state space (S –- ), value function (J –- ), etc..

54

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

involving no-op. We define no-op to have a variable duration6 equal to the time after which another

already executing action completes (δnext (s, A) as defined below).

The interwoven applicability set can be defined as:

Ap –- (s) =

Ap (X) if Y = ∅ else

{noop}∪{A|A∪As ∈ Ap (X) and A∩As = ∅}

Transition Function: We also need to define the probability transition function, Pr –- , for the

interwoven state space. At some decision epoch let the agent be in state s = (X, Y ). Suppose

that the agent decides to execute an action combination A. Define Ynew as the set similar to Y

but consisting of the actions just starting; formally Ynew = {(a, Δ(a))|a ∈ A}. In this system, the

next decision epoch will be the next time that an executing action terminates. Let us call this time

δnext (s, A). Notice that δnext (s, A) depends on both executing and newly started actions. Formally,

δnext (s, A) =

min

(a,δ)∈Y ∪Ynew

Δ(a) − δ

Moreover, multiple actions may complete simultaneously. Define Anext (s, A) ⊆ A ∪ As to be

the set of actions that will complete exactly in δnext (s, A) timesteps. The Y -component of the state

at the decision epoch after δnext (s, A) time will be

Ynext (s, A) = {(a, δ + δnext (s, A))|(a, δ) ∈ Y ∪ Ynew , Δ(a) − δ > δnext (s, A)}

Let s=X, Y and let s =X , Y . The transition function for CPTP can now be defined as:

Pr –- (s |s, A)=

Pr (X |X, Anext (s, A)) if Y = Ynext (s, A)

0

otherwise

In other words, executing an action combination A in state s = X, Y takes the agent to a

decision epoch δnext (s, A) ahead in time, specifically to the first time when some combination

Anext (s, A) completes. This lets us calculate Ynext (s, A): the new set of actions still executing

with their times elapsed. Also, because of TGP-style actions, the probability distribution of different

state variables is modified independently. Thus the probability transition function due to CoMDP

over probabilistic STRIPS can be used to decide the new distribution of state variables, as if the

combination Anext (s, A) were taken in state X.

Example: Continuing with the previous example, let the agent in state s1 execute the action combination A = {toggle-x4 }. Then δnext (s1 , A) = 1, since toggle-x4 will finish the first. Thus,

Anext (s1 , A)= {toggle-x4 }. Ynext (s1 , A) = {(toggle-x1 ,4)}. Hence, the probability distribution of

states after executing the combination A in state s1 will be

• ((F, F, F, T, F ), Ynext (s1 , A)) probability = 0.9

• ((F, F, F, F, F ), Ynext (s1 , A)) probability = 0.1

6. A precise definition of the model will create multiple no-opt actions with different constant durations t and the no-opt

applicable in an interwoven state will be the one with t = δnext (s, A).

55

M AUSAM & W ELD

Start and Goal States: In the interwoven space, the start state is s0 , ∅ and the new set of goal

states is G –- = {X, ∅|X ∈ G}.

By redefining the start and goal states, the applicability function, and the probability transition

function, we have finished modeling a CPTP problem as a CoMDP in the interwoven state space.

Now we can use the techniques of CoMDPs (and MDPs as well) to solve our problem. In particular,

we can use our Bellman equations as described below.

Bellman Equations: The set of equations for the solution of a CPTP problem can be written as:

J ∗- (s) = 0, if s ∈ G –- else

–

⎧

∗

⎪

⎨

⎫

⎪

⎬

(11)

J - (s) = min

δnext (s, A) + Pr –- (s |s, A)J ∗- (s )

–

–

⎪

A∈Ap - (s) ⎪

⎩

⎭

s ∈S –

–

We will use DURsamp to refer to the sampled RTDP algorithm over this search space. The main

bottleneck in naively inheriting algorithms like DURsamp is the huge size of the interwoven state

space. In the worst case (when all actions can be executed concurrently) the size of the state space is

|S| × ( a∈A Δ(a)). We get this bound by observing that for each action a, there are Δ(a) number

of possibilities: either a is not executing or it is and has remaining times 1, 2, . . . , Δ(a) − 1.

Thus we need to reduce or abstract/aggregate our state space in order to make the problem

tractable. We now present several heuristics which can be used to speed the search.

5.2 Heuristics

We present both an admissible and an inadmissible heuristics that can be used as the initial cost

function for DURsamp algorithm. The first heuristic (maximum concurrency) solves the underlying MDP and is thus quite efficient to compute. The second heuristic (average concurrency) is

inadmissible, but tends to be more informed than the maximum concurrency heuristic.

5.2.1 M AXIMUM C ONCURRENCY H EURISTIC

We prove that the optimal expected cost in a traditional (serial) MDP divided by the maximum

number of actions that can be executed in parallel is a lower bound for the expected make-span of

reaching a goal in a CPTP problem. Let J(X) denote the value of a state X ∈ S in a traditional

MDP with costs of an action equal to its duration. Let Q(X, A) denote the expected cost to reach the

goal if initially all actions in the combination A are executed and the greedy serial policy is followed

thereafter. Formally, Q(X, A) = X ∈S Pr (X |X, A)J(X ). Let J –- (s) be the value for equivalent

CPTP problem with s as in our interwoven-epoch state space. Let concurrency of a state be the

maximum number of actions that could be executed in the state concurrently. We define maximum

concurrency of a domain (c) as the maximum number of actions that can be concurrently executed

in any world state in the domain. The following theorem can be used to provide an admissible

heuristic for CPTP problems.

Theorem 9 Let s = X, Y ,

J ∗- (s) ≥

–

J ∗- (s) ≥

–

J ∗ (X)

for Y = ∅

c

Q∗ (X, As )

for Y = ∅

c

56

(12)

P LANNING WITH D URATIVE ACTIONS IN S TOCHASTIC D OMAINS

Proof Sketch: Consider any trajectory of make-span L (from a state s = X, ∅ to a goal state) in a

CPTP problem using its optimal policy. We can make all concurrent actions sequential by executing

them in the chronological order of being started. As all concurrent actions are non-interacting, the

outcomes at each stage will have similar probabilities. The maximum make-span of this sequential

trajectory will be cL (assuming c actions executing at all points in the semi-MDP trajectory). Hence

J(X) using this (possibly non-stationary) policy would be at most cJ ∗- (s). Thus J ∗ (X) ≤ cJ ∗- (s).

–

–

The second inequality can be proven in a similar way. 2

There are cases where these bounds are tight. For example, consider a deterministic planning

problem in which the optimal plan is concurrently executing c actions each of unit duration (makespan = 1). In the sequential version, the same actions would be taken sequentially (make-span =

c).

Following this theorem, the maximum concurrency (MC) heuristic for a state s = X, Y is

defined as follows:

J ∗ (X)

Q∗ (X, As )

else HM C (s) =

if Y = ∅ HM C (s) =

c

c

The maximum concurrency c can be calculated by a static analysis of the domain and is a onetime expense. The complete heuristic function can be evaluated by solving the MDP for all states.

However, many of these states may never be visited. In our implementation, we do this calculation

on demand, as more states are visited, by starting the MDP from the current state. Each RTDP run

can be seeded by the previous value function, thus no computation is thrown away and only the

relevant part of the state space is explored. We refer to DURsamp initiated with the MC heuristic by

DURMC

samp .

5.2.2 AVERAGE C ONCURRENCY H EURISTIC

Instead of using maximum concurrency c in the above heuristic we use the average concurrency

in the domain (ca ) to get the average concurrency (AC) heuristic. We call the resulting algorithm

DURAC

samp . The AC heuristic is not admissible, but in our experiments it is typically a more informed

heuristic. Moreover, in the case where all the actions have the same duration, the AC heuristic equals

the MC heuristic.

5.3 Hybridized Algorithm

We present an approximate method to solve CPTP problems. While there can be many kinds of

possible approximation methods, our technique exploits the intuition that it is best to focus computation on the most probable branches in the current policy’s reachable space. The danger of this

approach is the chance that, during execution, the agent might end up in an unlikely branch, which

has been poorly explored; indeed it might blunder into a dead-end in such a case. This is undesirable, because such an apparently attractive policy might have a true expected make-span of infinity.

Since, we wish to avoid dead-ends, we explore the desirable notion of propriety.

Definition 6 Propriety: A policy is proper at a state if it is guaranteed to lead, eventually, to the goal

state (i.e., it avoids all dead-ends and cycles) (Barto et al., 1995). We define a planning algorithm

proper if it always produces a proper policy (when one exists) for the initial state.

We now describe an anytime approximation algorithm, which quickly generates a proper policy

and uses any additional available computation time to improve the policy, focusing on the most

likely trajectories.

57

M AUSAM & W ELD

5.3.1 H YBRIDIZED P LANNER

Our algorithm, DURhyb , is created by hybridizing two other policy creation algorithms. Indeed,

our novel notion of hybridization is both general and powerful, applying to many MDP-like problems; however, in this paper we focus on the use of hybridization for CPTP. Hybridization uses an

anytime algorithm like RTDP to create a policy for frequently visited states, and uses a faster (and

presumably suboptimal) algorithm for the infrequent states.

For the case of CPTP, our algorithm hybridizes the RTDP algorithms for interwoven-epoch and

aligned-epoch models. With aligned-epochs, RTDP converges relatively quickly, because the state

space is smaller, but the resulting policy is suboptimal for the CPTP problem, because the policy

waits for all currently executing actions to terminate before starting any new actions. In contrast,

RTDP for interwoven-epochs generates the optimal policy, but it takes much longer to converge.

Our insight is to run RTDP on the interwoven space long enough to generate a policy which is

good on the common states, but stop well before it converges in every state. Then, to ensure that the

rarely explored states have a proper policy, we substitute the aligned policy, returning this hybridized

policy.

Algorithm 3 Hybridized Algorithm DURhyb (r, k, m)

1: for all s ∈ S - do

–

2:

initialize J –- (s) with an admissible heuristic

3: repeat

4:

perform m RTDP trials

5:

compute hybridized policy (πhyb ) using interwoven-epoch policy for k-familiar states and aligned-

epoch policy otherwise

clean πhyb by removing all dead-ends and cycles

J π- s0 , ∅ ← evaluation of πhyb from the start state

– π

J - (s0 ,∅)−J - (s0 ,∅)

–

–

8: until

<r

J - (s0 ,∅)

–

9: return hybridized policy πhyb

6:

7:

Thus the key question is how to decide which states are well explored and which are not. We

define the familiarity of a state s to be the number of times it has been visited in previous RTDP

trials. Any reachable state whose familiarity is less than a constant, k, has an aligned policy created