DEVELOPMENTAL EVALUATION: USING ASSESSMENT RESULTS TO INFORM INNOVATIVE AND PROGRAMMATIC CHANGES

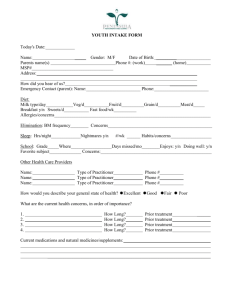

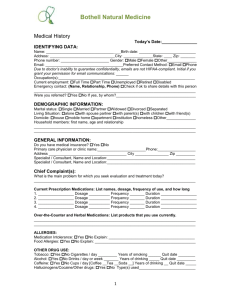

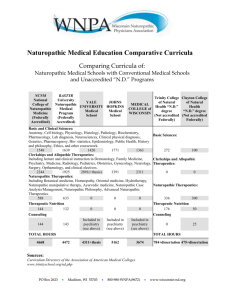

Dr. Nancy Stackhouse
Director of Academic Assessment
Southwest College of Naturopathic Medicine, Tempe, Arizona

California State University, Fullerton Academic Assessment Conference
“Navigating Assessment Results: Riding the Waves of Change”
March 20, 2015

DEVELOPMENTAL EVALUATION DEFINED
• Developmental Evaluation (DE) falls under the larger umbrella of Utilization-Focused Evaluation, which is used to maximize the usefulness of the results of an assessment research analysis for its intended users or stakeholders.
• The goal of DE is to make complexity manageable, all for the purpose of improved student learning. There is a need to become “journey-oriented” vs. “outcome-oriented” (Patton, 2014).
• DE produces more than improvements; it supports program development (Patton, 2011).

FORMATIVE VS. SUMMATIVE VS. DEVELOPMENTAL
• https://www.youtube.com/watch?v=Wg3IL-XjmuM
• The above three-minute video is of Michael Quinn Patton discussing participatory, formative, summative, and developmental evaluation.

MORE ABOUT DEVELOPMENTAL EVALUATION (DE)
DE is used to:
1) evaluate processes
2) ask evaluative questions
3) apply evaluation logic
4) support program, product, staff, and/or organizational development
-Michael Quinn Patton (2011)

ASSESSMENT VS. EVALUATION
ASSESSMENT
• Process of gathering evidence of student learning to inform instructional decisions
• Accurate, timely evidence used to support student learning
• Consists of all the tools that teachers use to collect information about student learning and instructional effectiveness (Burke, 2010)
EVALUATION
• Procedure for collecting information and making a judgment about it (Burke, 2010)
• Renders definitive judgments of success or failure
• Measures success against predetermined goals
• Often serves compliance and accountability functions (Patton, 2014)

DEVELOPMENTAL EVALUATION (DE)…
• Supports innovation and adaptation
• Provides feedback and generates learnings
• Supports direction or affirms changes in direction in real time
• Develops new measures and monitoring mechanisms as goals emerge and evolve
• Is designed to capture system dynamics, interdependencies, and emergent connections (Patton, 2014)

DE… (CONTINUED)
• Aims to produce context-specific understandings that inform ongoing innovation
• Focuses on accountability centered on the innovators’ deep sense of fundamental values and commitments – and learning
• Values the need to learn to respond to lack of control and stay in touch with what’s unfolding and thereby respond strategically
• Views evaluation as a leadership function where results-focused, learning-oriented leadership is developed
• Supports a hunger for learning (Patton, 2014)

IMPROVEMENT VS. DEVELOPMENT
• Improvement is focused on doing what you are currently doing better or more efficiently.
• Improving implies “making current practices better.”
• Development is focused on making fundamental changes informed by data that are innovative and transformative.
• Developing implies “intentionally changing and adapting based on complex, highly-contextualized circumstances or situations” (Patton, 2014).

EXAMPLES OF IMPROVEMENT VS. DEVELOPMENT
Improvement:
• Expand the recruitment effort to a wider target area
• Fine-tune program delivery based on participant feedback
• Add a new topic to existing curriculum
• Provide staff training to enhance skills of current staff
Development:
• Fundamentally change the recruitment efforts/plan
• Change program delivery from face-to-face to online
• Change entire scope, sequence, and delivery of the curriculum
• Change job descriptions, qualifications, and competencies of staff
(Patton, 2014)

SIMPLE: FOLLOWING A RECIPE
• The recipe is essential
• Recipes are tested to assure replicability of later efforts
• No particular expertise; knowing how to cook increases success
• Recipe notes the quantity and nature of “parts” needed
• Recipes produce standard products
• Certainty of same results every time

COMPLICATED: A ROCKET TO THE MOON
• Formulae are critical and necessary
• Sending one rocket increases assurance that next will be okay
• High level of expertise in many specialized fields and coordination
• Separate into parts and then coordinate
• Rockets similar in critical ways
• High degree of certainty of outcome

COMPLEX: RAISING A CHILD
• Formulae have only a limited application
• Raising one child gives no assurance of success with the next
• Expertise can help but is not sufficient; relationships are key
• Can’t separate parts from the whole
• Every child is unique
• Uncertainty of outcome remains
(Patton, 2014)

FIVE TYPES OF DE
Each type below lists the primary DE purpose, followed by its complex system challenges, implications, and an example.

1. ONGOING DEVELOPMENT
• Complex system challenges: Being implemented in a complex and dynamic environment
• Implications: No intention to become fixed/standardized model; Identifies effective principles
• Example: Visionary hopes and emerging ideas that you want to develop into an intervention

2. ADAPTING EFFECTIVE PRINCIPLES TO A NEW CONTEXT
• Complex system challenges: Innovative initiative; Develop “own” version based on adaptation of effective principles and knowledge
• Implications: Top-down – general principles and knowledge disseminated; Bottom-up – sensitive to context, experience, capabilities and priorities; adaptation vs. adoption
• Example: An intervention model that worked and you want to adapt its general principles to a new context, navigating top-down and bottom-up forces for change

3. RAPID RESPONSE IN TURBULENT CRISIS CONDITIONS
• Complex system challenges: Existing initiatives and responses no longer effective as conditions change suddenly
• Implications: Planning, execution and evaluation occur simultaneously
• Example: Sudden major change or crisis requires real-time solutions and innovative interventions for those in need

4. PREFORMATIVE DEVELOPMENT
• Complex system challenges: Changing/dynamic situations require innovative solutions; Model does not exist or needs to be developed
• Implications: Models may move into formative and summative evaluation while others remain in developmental mode and inform scaling options
• Example: An innovative intervention you want to explore and shape into a potential model to ready for formative and eventually summative evaluation

5. MAJOR SYSTEMS CHANGE
• Complex system challenges: Disrupt existing system; Changing scale reflecting new levels of complexity
• Implications: Adaptive cross-scale innovations assume complex, nonlinear dynamics, agility, and responsiveness
• Example: Project a successful intervention in one system to a different system
(Patton, 2014)

USING DE: CRITICAL SUPPORTS AND ENHANCEMENTS
• High tolerance for ambiguity
• Find out what existing subgroups believe
• Identify “critical incidents” leading to new interventions and directions
• Leave behind ineffective strategies and replace with new, emergent strategies/plans
• Look at unanticipated consequences
• Understand the difference between adapting/developing vs. improving
• Document what did not work (i.e., failure report). This sparks innovation.

USING DE: CRITICAL SUPPORTS AND ENHANCEMENTS (CONTINUED)
• Goal is to make complexity manageable and figure out what you can do.
• Do lots of little things and make connections/improve communications/networks.
• Never set targets where you don’t know how to get there.
• Be sure you have clear and measurable outcomes.
• Critical supports and enhancements include: 1) training, 2) communication, 3) engagement, and 4) establishing and supporting a culture of assessment and evaluation at your institution (Patton, 2014).

SPECIFIC ASSESSMENT EXAMPLES
• Assessment examples at Southwest College of Naturopathic Medicine:
Primary Status Milestone Exam (both developments and improvements)
Student Learning Outcome: “The student will demonstrate clinical knowledge, skills, and attitudes in the care and treatment of patients.”
Students did not meet the criterion for success in the previous academic year.
ACTIONS:
1) Advanced scoring rubric developed
2) Course changed from one credit to two credits
3) Two five-hour “exam prep immersion” days scheduled
4) Full-day physician/examiner training held to improve scoring consistency between examiners, cases, and standardized patients; it will be held annually

PRIMARY STATUS MILESTONE EXAM (PARTIAL)
Student Name: __________ CC: __________ Examiner: __________ Date: __________ SP: __________
Start Time: __________ End Time: ___________

HISTORY TAKING (____/25 Points)
Scoring columns: Yes, No, Pts
General HPI
1. Introduced self and asked patient’s name
2. Age and DOB
3. Chief concern
4. Onset (what, when)
5. Preceding events
6. Location/region of symptom
7. Sensation/quality of the symptom
8. Radiation/referral if pain is present (if no pain, free point)
9. Severity/intensity/degree symptom is impacting life
10. Timing/progression of symptom (open/closed ended)
11. Better (open/closed ended)
12. Worse (open/closed ended)
13. Previous episodes of symptom
14. Treatments patient has tried (and their effectiveness)
Review of Systems (must cover at least two systems relevant to the case)
1. Covered Review of Systems pertinent to case: 1st system covered: _______________
2. Covered Review of Systems pertinent to case: 2nd system covered: _______________

STUDENT SURVEY ON PRINCIPLES OF NATUROPATHIC MEDICINE
• Issues include: pre-post administration resulted in lowering of ratings; alignment problem between student learning outcome and survey items. Instrument needs improvements and developments.
• This assessment is given in year one and in year four as a pre/post measure of improvement on the following Student Learning Outcome: “The student will demonstrate a commitment to the principles of naturopathic medicine.”
• Success will be achieved if 80% of students respond “strongly agree” or “agree” with each statement in year one and 90% of students respond “strongly agree” or “agree” with each statement in year four to demonstrate growth throughout the program.
• For year one students (pre/2012), the criterion was met on all five items.
• For year four students (post/2015), the criterion was partially met (on three of five items).

STUDENT SURVEY ON PRINCIPLES OF NATUROPATHIC MEDICINE
• Fewer than 90% of students responded “strongly agree” or “agree” with Q3 and Q5 in year four. Scores actually went down on all five items from year one to year four.
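The success criterion described above (a minimum percentage of students answering “agree” or “strongly agree” on each item) can be checked with a few lines of code. The following is a minimal sketch, not the college's actual procedure: the function names are hypothetical, and the response lists are made-up illustration data, not the SCNM survey results.

```python
from typing import Dict, List

# On the survey's 5-point scale, 4 = Agree and 5 = Strongly Agree.
AGREE_LEVELS = {4, 5}


def percent_agree(responses: List[int]) -> float:
    """Percentage of responses that are Agree (4) or Strongly Agree (5)."""
    if not responses:
        raise ValueError("no responses for this item")
    agree = sum(1 for r in responses if r in AGREE_LEVELS)
    return 100.0 * agree / len(responses)


def items_meeting_criterion(
    by_item: Dict[str, List[int]], threshold: float
) -> Dict[str, bool]:
    """Map each item to True if its percent-agree reaches the threshold."""
    return {item: percent_agree(r) >= threshold for item, r in by_item.items()}


# Hypothetical responses for illustration only; NOT the SCNM survey data.
year_four = {
    "Q1": [5, 5, 4, 4, 5, 4, 5, 4, 4, 5],  # 10 of 10 agree
    "Q3": [5, 4, 4, 3, 4, 2, 5, 4, 4, 3],  # 7 of 10 agree
}

# Year-four criterion from the slide: 90% agree or strongly agree.
results = items_meeting_criterion(year_four, threshold=90.0)
for item, met in results.items():
    pct = percent_agree(year_four[item])
    print(f"{item}: {pct:.0f}% agree, criterion met: {met}")
```

Running the same function with `threshold=80.0` on year-one responses gives the pre-measure check, so one routine covers both administrations of the survey.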
[Figure: bar chart “Comparison of the Means”. Items: Q1: Understanding of principles; Q2: Application of principles; Q3: Ability to explain principles; Q4: Future use of principles; Q5: Integration of principles. Series: Spring/Summer 2012 and Winter 2015. Vertical axis: 0.00 to 5.00. Bar labels as extracted: 4.91, 4.79, 4.41, 4.51, 4.66, 4.61, 4.36, 4.30, 4.19, 2.85.]

Survey for CLTR 4900
Date/Quarter: ____________________________________________________
Please respond to the following items by placing an X in the box that represents your response:
Response scale: 5 Strongly Agree, 4 Agree, 3 Neutral, 2 Disagree, 1 Strongly Disagree
Item
1. I feel confident in my understanding of the six principles of naturopathic medicine.
2. I have applied the principles of naturopathic medicine in my personal life.
3. I am confident in my ability to explain the principles of naturopathic medicine to my future patients.
4. I am confident I will use the principles of naturopathic medicine as the foundation of my future naturopathic medical practice.
5. The principles of naturopathic medicine are integrated consistently in the curriculum at SCNM.
Please add any additional comments in the space below or on the back of this page:
Comments

HOW TO APPLY DE TO ACADEMIC ASSESSMENT SITUATIONS
STEP 1: Identify an assessment at your institution that has proven to be unsuccessful that you are now tasked with improving or developing.
STEP 2: Brainstorm ideas for improvements, developments, and/or innovations for the assessment. This can include replacing the assessment altogether. Determine alignment between the assessment and the Student Learning Outcome.
STEP 3: Decide how you will measure/determine the success and value of the refined or new assessment.
STEP 4: Decide how you will implement the refined or new assessment at your institution.

IN YOUR GROUP:
1. Discuss an assessment example at your institution (or please use the Student Survey on Principles of Naturopathic Medicine example) that needs (or needed) improvement and/or development.
2. Brainstorm ideas/rationales for improvements, developments, or innovations for the instrument.
3. Select someone from your group to briefly share the assessment example and one or two ideas for improvement, development, or innovation.

TIME FOR SHARING:

QUESTIONS?

REFERENCES
Burke, K. (2010). Balanced assessment: From formative to summative. Solution Tree Press.
Patton, M. Q. (2011). Developmental evaluation: Applying complexity concepts to enhance innovation and use. Guilford Press.
Patton, M. Q. (October, 2014). Developmental evaluation: Systems thinking and complexity science. Professional development workshop at American Evaluation Association Conference, Denver, CO.

CONTACT INFORMATION
Nancy Stackhouse, Ed.D.
Director of Academic Assessment
Southwest College of Naturopathic Medicine
2140 East Broadway Road
Tempe, AZ 85282
Email: n.stackhouse@scnm.edu