AN EAGER REGRESSION METHOD BASED ON SELECTING APPROPRIATE FEATURES

Tolga Aydın and H. Altay Güvenir

Department of Computer Engineering

Bilkent University, Ankara, TURKEY

Abstract

This paper describes a machine learning method called Regression by Selecting Best Features (RSBF). RSBF consists of two phases. The first phase aims to find the predictive power of each feature by constructing simple linear regression lines: one for each continuous feature, and one for each category of each categorical feature. Although the predictive power of a continuous feature is constant, that of a categorical feature varies across its distinct values. The second phase constructs multiple linear regression lines among the continuous features, each time excluding the worst feature in the current set. Finally, these multiple linear regression lines and the categorical features' simple linear regression lines are sorted according to their predictive power. In the querying phase of learning, the best linear regression line and the features constructing that line are selected to make predictions.

Keywords: Prediction, Feature Projection, Regression.

1 INTRODUCTION

Prediction has been one of the most common problems researched in data mining and machine learning. Predicting the values of categorical features is known as classification, whereas predicting the values of continuous features is known as regression. From this point of view, classification can be considered as a special case of regression. In machine learning, much research has been performed for classification. Recently, however, the focus of researchers has moved towards regression, since many real-life problems can be modeled as regression problems.

There are two different approaches for regression in the machine learning community: eager and lazy learning. Eager regression methods construct rigorous models by using the training data, and the prediction task is based on these models. The advantage of eager regression methods is not only the ability to obtain the interpretation of the underlying data, but also the reduced query time. On the other hand, the main disadvantage is their long training time requirement. Lazy regression methods, on the other hand, do not construct models by using the training data. Instead, they delay all processing to the prediction phase. The most important disadvantage of lazy regression methods is the fact that they do not provide an interpretable model of the training data, because the model is usually the training data itself. It is not a compact description of the training data, when compared to the models constructed by eager regression methods, such as regression trees and rule-based regression.

In the literature, many eager and lazy regression methods exist. Among eager regression methods, CART [1], RETIS [7], M5 [5], DART [2], and Stacked Regressions [9] induce regression trees, FORS [6] uses inductive logic programming for regression, RULE [3] induces regression rules, and MARS [8] constructs mathematical models. Among lazy regression methods, kNN [4, 10, 15] is the most popular nonparametric instance-based approach.

In this paper, we describe an eager learning method, namely Regression by Selecting Best Features (RSBF) [13, 14]. This method makes use of linear least squares regression.

A preprocessing phase is required to increase the predictive power of the method. According to Chebyshev's result [12], for any positive number k, at least $(1 - 1/k^2) \times 100\%$ of the values in any population of numbers are within k standard deviations of the mean. We find the standard deviation of the target values of the training data, and discard the training instances whose target value is not within k standard deviations of the mean target. Empirically, we reach the best prediction by taking k as $\sqrt{2}$.
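The preprocessing step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `filter_by_target` and `chebyshev_lower_bound` are hypothetical, the population standard deviation is an assumption (the text does not say whether the sample or population form is used), and the value `k=2` in the usage lines is chosen only for the demonstration.

```python
from statistics import mean, pstdev


def chebyshev_lower_bound(k):
    """Fraction of any population guaranteed to lie within k std. deviations."""
    return 1.0 - 1.0 / (k * k)


def filter_by_target(instances, targets, k):
    """Discard training instances whose target is not within k standard
    deviations of the mean target (population std. dev. is an assumption)."""
    mu = mean(targets)
    sigma = pstdev(targets)
    kept = [(x, t) for x, t in zip(instances, targets)
            if abs(t - mu) <= k * sigma]
    return [x for x, _ in kept], [t for _, t in kept]


# Illustrative data: one extreme target value among otherwise similar ones.
targets = [10, 11, 9, 10, 12, 8, 10, 100]
instances = list(range(len(targets)))  # stand-ins for feature vectors
kept_x, kept_t = filter_by_target(instances, targets, k=2)
```

With these illustrative values the instance with target 100 is discarded and the other seven are kept.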

In the first phase, RSBF constructs projections of the training data on each feature, and then constructs simple linear regression lines: one for each continuous feature, and one for each category of each categorical feature. Then, the simple linear regression lines belonging to continuous features are sorted according to their prediction ability. The second phase constructs multiple linear regression lines among the continuous features, each time excluding the worst feature in the current set. Then these multiple linear regression lines, together with the categorical features' simple linear regression lines, are sorted according to their predictive power. In the querying phase of learning, the target value of a query instance is predicted using the linear regression line, multiple or simple, having the minimum relative error, i.e. having the maximum predictive power. If this linear regression line is not suitable for our query instance, we keep searching for the best linear regression line among the ordered list of linear regression lines.

From: FLAIRS-01 Proceedings. Copyright © 2001, AAAI (www.aaai.org). All rights reserved.

In this paper, RSBF is compared with three eager methods (RULE, MARS, DART) and one lazy method (kNN) in terms of predictive power and computational complexity. RSBF is better not only in terms of predictive power but also in terms of computational complexity, when compared to these well-known methods. For most data mining or knowledge discovery applications, where very large databases are concerned, its low computational complexity makes RSBF a practical solution. RSBF is also noted to be powerful in the presence of missing feature values, target noise and irrelevant features.

In Section 2, we review the kNN, RULE, MARS and DART methods for regression. Section 3 gives a detailed description of RSBF. Section 4 is devoted to the empirical evaluation of RSBF and its comparison with other methods. Finally, in Section 5, conclusions are presented.

2 REGRESSION OVERVIEW

kNN is the most commonly used lazy method for both classification and regression problems. The underlying idea behind the kNN method is that the closest instances to the query point have similar target values to the query. Hence, the kNN method first finds the closest instances to the query point in the instance space according to a distance measure. Generally, the Euclidean distance metric is used to measure the similarity between two points in the instance space. Therefore, by using the Euclidean distance metric as our distance measure, the k closest instances to the query point are found. Then kNN outputs the distance-weighted average of the target values of those closest instances as the prediction for that query instance.
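The kNN prediction rule described above can be sketched as follows. The text says only that the average is distance-weighted; the inverse-distance weighting used here, the small constant `eps` that avoids division by zero, and the name `knn_predict` are assumptions made for illustration.

```python
import math


def knn_predict(train_x, train_t, query, k=10, eps=1e-12):
    """Distance-weighted average of the targets of the k nearest instances.

    Euclidean distance is used, as in the text; weighting each neighbor by
    1 / (distance + eps) is an assumption of this sketch.
    """
    by_distance = sorted(
        (math.dist(x, query), t) for x, t in zip(train_x, train_t)
    )
    nearest = by_distance[:k]
    weights = [1.0 / (d + eps) for d, _ in nearest]
    weighted_sum = sum(w * t for w, (_, t) in zip(weights, nearest))
    return weighted_sum / sum(weights)


# Illustrative one-dimensional data.
train_x = [(0.0,), (2.0,), (10.0,)]
train_t = [1.0, 3.0, 100.0]
prediction = knn_predict(train_x, train_t, (1.0,), k=2)
```

In this example the two nearest neighbors are equally distant from the query, so their targets are averaged with equal weight.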

In machine learning, inducing rules from the given training data is also popular. Weiss and Indurkhya adapted the rule-based classification algorithm [11], Swap-1, for regression. Swap-1 learns decision rules in Disjunctive Normal Form (DNF). Since Swap-1 is designed for the prediction of categorical features, the numeric feature to be predicted in regression is transformed to a nominal one by a preprocessing procedure. For this transformation, the P-class algorithm is used [3]. If we let {y} be a set of output values, this transformation can be regarded as a one-dimensional clustering of training instances on the response variable y, in order to form classes. The purpose is to make y values within one class similar, and across classes dissimilar. The assignment of these values to classes is done in such a way that the distance between each yi and its class mean is minimum. After formation of pseudo-classes and the application of Swap-1, a pruning and optimization procedure can be applied to construct an optimum set of regression rules.

The MARS [8] method partitions the training set into regions by splitting the features recursively into two regions, by constructing a binary regression tree. MARS is continuous at the borders of the partitioned regions. It is an eager, partitioning, interpretable and adaptive method.

DART, also an eager method, is the latest regression tree induction program developed by Friedman [2]. It avoids limitations of disjoint partitioning, used for other tree-based regression methods, by constructing overlapping regions with increased training cost.

3 REGRESSION BY SELECTING BEST FEATURES (RSBF)

The RSBF method tries to determine a subset of the features such that this subset consists of the best features. The next subsection describes the training phase for RSBF, and then we describe the querying phase.

3.1 Training

Training in RSBF begins simply by storing the training data set as projections to each feature separately. A copy of the target values is associated with each projection and the training data set is sorted for each feature dimension according to their feature values. If a training instance includes missing values, it is not simply ignored as in many regression algorithms. Instead, that training instance is stored for the features on which its value is given. The next step involves constructing the simple linear regression lines for each feature. This step differs for categorical and continuous features. In the case of continuous features, exactly one simple linear regression line per feature is constructed. On the other hand, the number of simple linear regression lines per each categorical feature is the number of distinct values of that feature. For any categorical feature, the parametric form of any simple regression line is constant, and it is equal to the average target value of the training instances whose corresponding feature value is equal to that categorical value.
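The first training step can be sketched as follows: project the data on one feature while skipping instances whose value for that feature is missing, then fit either one least-squares line (continuous feature) or one constant per category (categorical feature). The representation of instances as dictionaries, the use of `None` for a missing value, and all function names are assumptions of this sketch.

```python
def project(instances, targets, feature):
    """(value, target) pairs for one feature, sorted by feature value.
    Instances whose value for this feature is missing (None) are skipped."""
    pairs = [(inst[feature], t) for inst, t in zip(instances, targets)
             if inst[feature] is not None]
    return sorted(pairs, key=lambda p: p[0])


def fit_simple_line(pairs):
    """Least-squares (slope, intercept) for one continuous feature."""
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    mt = sum(t for _, t in pairs) / n
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    if sxx == 0:  # constant feature: fall back to the mean target
        return 0.0, mt
    slope = sum((x - mx) * (t - mt) for x, t in pairs) / sxx
    return slope, mt - slope * mx


def fit_category_lines(pairs):
    """One constant line per category: the average target of that category."""
    groups = {}
    for value, t in pairs:
        groups.setdefault(value, []).append(t)
    return {value: sum(ts) / len(ts) for value, ts in groups.items()}


# Illustrative data with missing values.
instances = [
    {"f1": 1.0, "f4": "A"},
    {"f1": 2.0, "f4": "B"},
    {"f1": None, "f4": "A"},
    {"f1": 3.0, "f4": None},
]
targets = [3.0, 5.0, 9.0, 7.0]
slope, intercept = fit_simple_line(project(instances, targets, "f1"))
category_lines = fit_category_lines(project(instances, targets, "f4"))
```

Note that the third instance still contributes to the f4 projection even though its f1 value is missing, which is the behavior the paragraph above describes.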

The training phase continues by constructing multiple linear regression lines among continuous features, each time excluding the worst one. Then these lines, together with the categorical features' simple linear regression lines, are sorted according to their predictive power. The training phase can be illustrated through an example.
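The construction of multiple linear regression lines by repeatedly excluding the worst feature can be sketched as follows. This is an illustrative pure-Python version that solves the normal equations by Gaussian elimination; the paper does not state how the least squares problem is solved, and the sketch assumes complete rows (no missing values) and a feature list already ranked from best to worst. All names are hypothetical.

```python
def solve(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_multiple_line(rows, targets):
    """Least-squares coefficients followed by the intercept."""
    design = [list(r) + [1.0] for r in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)]
           for i in range(p)]
    xtt = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(p)]
    return solve(xtx, xtt)


def backward_lines(data, targets, ranked_features):
    """Fit on all ranked features, then drop the worst one each time."""
    lines = []
    current = list(ranked_features)  # best first, worst last
    while current:
        rows = [[inst[f] for f in current] for inst in data]
        lines.append((tuple(current), fit_multiple_line(rows, targets)))
        current.pop()  # exclude the worst feature of the current set
    return lines


# Illustrative data generated from target = -f1 + 8*f2 + f3 + 3.
points = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 0), (0, 1, 1), (1, 1, 1)]
data = [{"f1": a, "f2": b, "f3": c} for a, b, c in points]
targets = [-a + 8 * b + c + 3 for a, b, c in points]
lines = backward_lines(data, targets, ["f2", "f3", "f1"])
```

For three ranked continuous features this yields three lines, the last of which uses a single feature and is therefore a simple linear regression line.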

Let our example domain consist of five features, f1, f2, f3, f4 and f5, where f1, f2 and f3 are continuous and f4, f5 are categorical. For categorical features, No_categories[f] is defined to give the number of distinct categories of feature f. In our example domain, let the following values be observed:

No_categories[f4] = 2 (values: A, B)

No_categories[f5] = 3 (values: X, Y, Z)

For this example domain, 8 simple linear regression lines are constructed: 1 for f1, 1 for f2, 1 for f3, 2 for f4, and finally 3 for f5. Let the following be the parametric form of the simple linear regression lines:

Simple linear regression line for f1: target = 2f1 - 5

Simple linear regression line for f2: target = -4f2 + 7

Simple linear regression line for f3: target = 5f3 + 1

Simple linear regression line for A category of f4: target = 6

Simple linear regression line for B category of f4: target = -5

Simple linear regression line for X category of f5: target = 10

Simple linear regression line for Y category of f5: target = 1

Simple linear regression line for Z category of f5: target = 12
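Each of these lines is ranked by its relative error (RE). The sketch below assumes that RE is the mean absolute distance (MAD) between actual and predicted targets, divided by the mean absolute distance of the actual targets from their median; this form is inferred from the definitions of Q, the median target and MAD given in the text, and the function names are hypothetical.

```python
from statistics import median


def relative_error(actual, predicted):
    """RE of one regression line over the Q instances used to build it.

    Assumed form: MAD(actual, predicted) divided by the mean absolute
    distance of the actual targets from their median.
    """
    q = len(actual)
    mad = sum(abs(t - p) for t, p in zip(actual, predicted)) / q
    t_median = median(actual)
    baseline = sum(abs(t - t_median) for t in actual) / q
    if baseline == 0:
        raise ValueError("all target values are identical")
    return mad / baseline


def rank_lines(scored_lines):
    """Sort (name, RE) pairs from the strongest line to the weakest."""
    return sorted(scored_lines, key=lambda item: item[1])


# Illustrative check: a perfect line and a line that always predicts the median.
actual = [1, 2, 3, 4, 5]
re_perfect = relative_error(actual, [1, 2, 3, 4, 5])
re_median = relative_error(actual, [3, 3, 3, 3, 3])
ranking = rank_lines([("median line", re_median), ("perfect line", re_perfect)])
```

Under this assumed definition, a line that is no better than always predicting the median target has an RE of 1, and smaller values indicate stronger predictive power.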

The training phase continues by sorting the simple linear regression lines belonging to continuous features according to their predictive accuracy. The relative error (RE) of the regression lines is used as the indicator of predictive power: the smaller the RE, the stronger the predictive power. The RE of a simple linear regression line is computed by the following formula:

$$RE = \frac{MAD}{\frac{1}{Q}\sum_{i=1}^{Q}\left| t(q_i) - \bar{t} \right|}$$

where Q is the number of training instances used to construct the simple linear regression line, $\bar{t}$ is the median of the target values of the Q training instances, and $t(q_i)$ is the actual target value of the ith training instance. The MAD (Mean Absolute Distance) is defined as follows:

$$MAD = \frac{1}{Q}\sum_{i=1}^{Q}\left| t(q_i) - \hat{t}(q_i) \right|$$

Here, $\hat{t}(q_i)$ denotes the predicted target value of the ith training instance according to the induced simple linear regression line.

Let's assume that continuous features are sorted as f2, f3, f1 according to their predictive power. The second step of the training phase begins by employing multiple linear least squares regression on all 3 features. The output of this process is a multiple linear regression line involving contributions of all three features. This line is denoted by MLRL_{1,2,3}. Then we exclude the worst feature, namely f1, and run multiple linear least squares regression to obtain MLRL_{2,3}. In the final step, we exclude the next worst feature of the current set, namely f3, and obtain MLRL_2. Actually, the multiple linear least squares regression reduces to simple linear least squares regression in the final step, since we deal with exactly one feature. Let the following be the parametric form of the multiple linear regression lines:

MLRL_{1,2,3}: target = -f1 + 8f2 + f3 + 3

MLRL_{2,3}: target = 6f2 + 6f3 - 9

MLRL_2: target = -4f2 + 7

The second phase of training is completed by sorting the MLRLs together with the categorical features' simple linear regression lines according to their predictive power: the smaller the RE of a regression line, the stronger the predictive power of that regression line.

Let's suppose that the linear regression lines are sorted in the following order, from the best predictive to the worst one:

MLRL_{2,3} > f4=A > MLRL_2 > f5=X > MLRL_{1,2,3} > f5=Y > f5=Z > f4=B.

This shows that any categorical feature's predictive power may vary among its categories. For the above sorting schema, categorical feature f4's predictions are reliable for its category A, although they are very poor for category B.

3.2 Querying

In order to predict the target value of a query instance t_i, the RSBF method uses exactly one linear regression line. This line may not always be the best one. The reason for this situation is explained via an example. Let the feature values of the query instance t_i be the following:

f1(t_i) = 5, f2(t_i) = 10, f3(t_i) = missing, f4(t_i) = B, f5(t_i) = missing

Although the best linear regression line is MLRL_{2,3}, this line cannot be used for our t_i, since f3(t_i) = missing. The next best linear regression line, which is worse than only MLRL_{2,3}, is f4=A. This line is also inappropriate for our t_i, since f4(t_i) ≠ A. Therefore, the search for the best linear regression line continues. The line constructed by f2 comes next. Since f2(t_i) ≠ missing, we succeed in finding the best linear regression line. So the prediction made for the target value of t_i is (-4 * f2(t_i) + 7) = (-4 * 10 + 7) = -33. Once the appropriate linear regression line is found, the remaining linear regression lines need not be considered.
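The querying procedure can be sketched with the running example: the sorted list of lines is scanned from best to worst, and the first line applicable to the query makes the prediction. The tuple layout, the helper names `has` and `equals`, and the use of `None` for a missing value are assumptions of this sketch; the coefficients and the ordering are those of the example above.

```python
def has(*features):
    """Applicable when every listed continuous feature is present."""
    return lambda q: all(q.get(f) is not None for f in features)


def equals(feature, value):
    """Applicable when the categorical feature takes the given value."""
    return lambda q: q.get(feature) == value


def predict(ordered_lines, query):
    """Use the first (best) line in the sorted list that fits the query."""
    for name, applies, line in ordered_lines:
        if applies(query):
            return name, line(query)
    return None, None  # no line is applicable


# Lines of the running example, sorted from best to worst by RE.
ordered_lines = [
    ("MLRL_2,3", has("f2", "f3"), lambda q: 6 * q["f2"] + 6 * q["f3"] - 9),
    ("f4=A", equals("f4", "A"), lambda q: 6),
    ("MLRL_2", has("f2"), lambda q: -4 * q["f2"] + 7),
    ("f5=X", equals("f5", "X"), lambda q: 10),
    ("MLRL_1,2,3", has("f1", "f2", "f3"),
     lambda q: -q["f1"] + 8 * q["f2"] + q["f3"] + 3),
    ("f5=Y", equals("f5", "Y"), lambda q: 1),
    ("f5=Z", equals("f5", "Z"), lambda q: 12),
    ("f4=B", equals("f4", "B"), lambda q: -5),
]

query = {"f1": 5, "f2": 10, "f3": None, "f4": "B", "f5": None}
name, value = predict(ordered_lines, query)
print(name, value)  # MLRL_2 -33
```

The scan stops at the first applicable line, so the cost of a query is bounded by the length of the sorted list.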


4

EMPIRICAL

the best on 11 data files in the presenceof 30 irrelevant

features (Table5, 6, 7).

EVALUATION

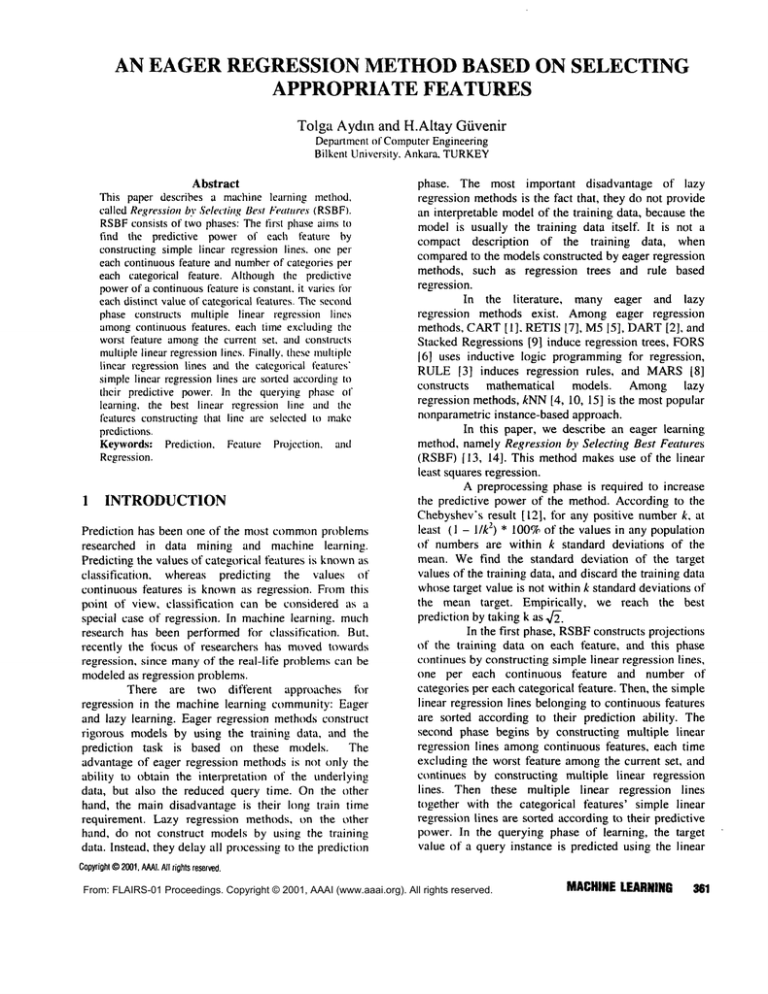

The RSBFmethod was compared with the other wellknownmethodsmentioned above, in terms of predictive

accuracy and time complexity. We have used a

repository consisting of 27 data files in our experiments.

The characteristics of the data files are summarizedin

Table 1. Most of these data files are used for the

experimental analysis of function approximation

techniques and for training and demonstration by the

machinelearning and statistics community.

A 10-fold cross-validation

technique was

employedin the experiments. For the lazy regression

methodthe value of parameter k was set to 10, where k

denotes the number of nearest neighbors considered

aroundthe query instance.

In terms of predictive

accuracy, RSBF

pertbrmed the best on 13 data files amongthe 27, and

obtained the lowest meanrelative error (Table 2).

In terms of time complexity, RSBFperformed

the best in the total (training + querying) execution

time, and becamethe fastest method(Table 3, 4).

In machinelearning, it is very important for an

algorithm to still perform well whennoise, the missing

feature value and irrelevant features are added to the

system. Experimental results showed that RSBFwas

again the best method whenever we added 20%target

noise, 20%missing feature value and 30 irrelevant

features to the system. RSBFperformed the best on 12

data files in the presence of 20%missing value, the best

on 21 data files in the presence of 20%target noise and

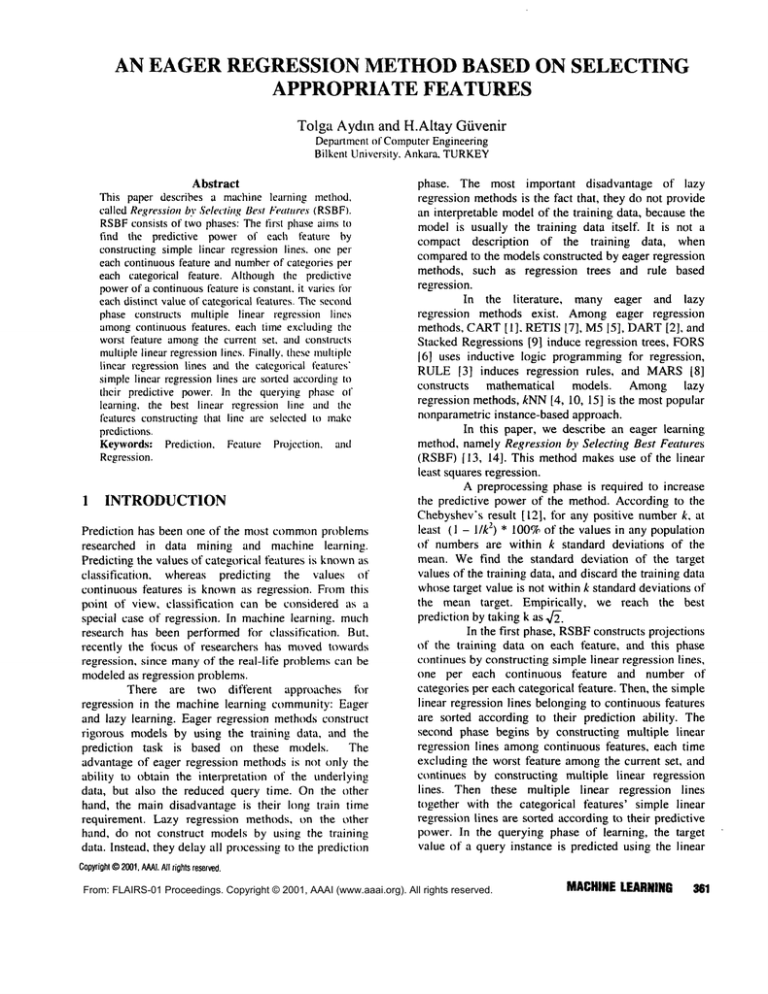

| Dataset | Original Name | Instances | Features (C + N) | Missing Values |
|---|---|---|---|---|
| AB | Abalone | 4177 | 8 (7 + 1) | None |
| AI | Airport | 135 | 4 (4 + 0) | None |
| AU | Auto-mpg | 398 | 7 (6 + 1) | 6 |
| BA | Baseball | 337 | 16 (16 + 0) | None |
| BU | Buying | 100 | 39 (39 + 0) | 27 |
| CL | College | 236 | 25 (25 + 0) | 381 |
| CO | Country | 122 | 20 (20 + 0) | 34 |
| CP | Cpu | 209 | 7 (1 + 6) | None |
| ED | Education | 1500 | 43 (43 + 0) | 2918 |
| EL | Electric | 240 | 12 (10 + 2) | 58 |
| FA | Fat | 252 | 17 (17 + 0) | None |
| FI | Fishcatch | 158 | 7 (6 + 1) | 87 |
| FL | Flare2 | 1066 | 10 (0 + 10) | None |
| FR | Fruitfly | 125 | 4 (3 + 1) | None |
| HO | Home Run Race | 163 | 19 (19 + 0) | None |
| HU | Housing | 506 | 13 (12 + 1) | None |
| NO | Normal Temp. | 130 | 2 (2 + 0) | None |
| NR | Northridge | 2929 | 10 (10 + 0) | None |
| PL | Plastic | 1650 | 2 (2 + 0) | None |
| PO | Poverty | 97 | 6 (5 + 1) | 6 |
| RE | Read | 681 | 25 (24 + 1) | 1097 |
| SC | Schools | 62 | 19 (19 + 0) | 1 |
| SE | Servo | 167 | 4 (0 + 4) | None |
| ST | Stock Prices | 950 | 9 (9 + 0) | None |
| TE | Televisions | 40 | 4 (4 + 0) | None |
| UN | Usnews Coll. | 1269 | 31 (31 + 0) | 769-4 |
| VL | Villages | 766 | 32 (29 + 3) | 3986 |

Table 1. Characteristics of the data files used in the empirical evaluations. C: Continuous, N: Nominal


5 CONCLUSIONS

In this paper, we have presented an eager regression method based on selecting appropriate features. RSBF selects the best feature(s) and forms a parametric model for use in the querying phase. This parametric model is either a multiple linear regression line involving the contribution of continuous features, or a simple linear regression line of a categorical value of any categorical feature. The multiple linear regression line reduces to a simple linear regression line if exactly one continuous feature constructs the multiple linear regression line.

RSBF is better than other well-known eager and lazy regression methods in terms of prediction accuracy and computational complexity. It also enables the interpretation of the training data. That is, the method clearly states the most appropriate features that are powerful enough to determine the value of the target feature.

The robustness of any regression method can be determined by analyzing the predictive power of that method in the presence of target noise, irrelevant features and missing feature values. These three factors are common in real-life databases, and it is important for a learning algorithm to give promising results in the presence of those factors. Empirical results indicate that the RSBF regression approach is also a robust method.

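| Dataset | RSBF | KNN | RULE | MARS | DART |
|---|---|---|---|---|---|
| AB | 0.678 | **0.661** | 0.899 | 0.683 | 0.678 |
| AI | **0.532** | 0.612 | 0.744 | 0.720 | 0.546 |
| AU | 0.413 | **0.321** | 0.451 | 0.333 | 0.346 |
| BA | 0.570 | **0.443** | 0.666 | 0.493 | 0.508 |
| BU | **0.732** | 0.961 | 0.946 | 0.947 | 0.896 |
| CL | 1.554 | 0.764 | 0.290 | 1.854 | **0.252** |
| CO | **1.469** | 1.642 | 6.307 | 5.110 | 1.695 |
| CP | 0.606 | 0.944 | 0.678 | 0.735 | **0.510** |
| ED | 0.461 | 0.654 | **0.218** | 0.359 | 0.410 |
| EL | **1.020** | 1.194 | 1.528 | 1.066 | 1.118 |
| FA | **0.177** | 0.785 | 0.820 | 0.305 | 0.638 |
| FI | 0.638 | 0.697 | 0.355 | **0.214** | 0.415 |
| FL | **1.434** | 2.307 | 1.792 | 1.556 | 1.695 |
| FR | 1.013 | 1.201 | 1.558 | **1.012** | 1.077 |
| HO | **0.707** | 0.907 | 0.890 | 0.769 | 0.986 |
| HU | 0.589 | 0.600 | 0.641 | 0.526 | **0.522** |
| NO | **0.977** | 1.232 | 1.250 | 1.012 | 1.112 |
| NR | 0.938 | 1.034 | 1.217 | 0.928 | **0.873** |
| PL | 0.444 | 0.475 | 0.477 | **0.404** | 0.432 |
| PO | 0.715 | 0.796 | 0.916 | 1.251 | **0.691** |
| RE | **1.001** | 1.062 | 1.352 | 1.045 | 1.189 |
| SC | **0.175** | 0.388 | 0.341 | 0.223 | 0.352 |
| SE | 0.868 | 0.619 | **0.229** | 0.432 | 0.337 |
| ST | 1.101 | **0.599** | 0.906 | 0.781 | 0.754 |
| TE | **1.175** | 1.895 | 4.195 | 7.203 | 2.690 |
| UN | **0.385** | 0.480 | 0.550 | 0.412 | 0.623 |
| VL | **0.930** | 1.017 | 1.267 | 1.138 | 1.355 |
| Mean | **0.789** | 0.900 | 1.166 | 1.167 | 0.841 |

Table 2. Relative errors (RE) of algorithms. Best REs are shown in bold font.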

Damset

RSBF

KNN

RULE

MARS

AB

AI

At:

BA

BU

CL

CO

CP

ED

EL

FA

FI

FL

FR

HO

tlU

NO

NR

PL

PO

RE

SC

SE

ST

TE

UN

VL

211.5

2

12.5

35.3

45.5

34.1

17.9

6.3

691.1

13.3

36.4

4.2

40.8

I

19

36.3

0.2

189.9

13.7

2.1

104.3

8.I

2.2

57.1

11.2

245

136.8

8.9

0

0.6

0

0

11,5

0,1

0

13.5

0.2

II

11

3.5

11

0

1

0

7.4

11.2

0

3

II

0

!.4

0

7.4

4.4

32t9

911.8

248.9

181.8

67.1

148.2

108.6

52.7

862.8

69.5

161.I

47.8

108.8

34.1

57.5

264.9

311.6

3493

175.3

411.9

196

45.3

37

365.1

30.9

2547.1

513.6

10270

159.2

5711.5

915.1

761.7

1274.3

475.3

575.3

10143.9

4117.5

985

240,2

667.2

99.5

616.3

1413.9

69.3

57119.9

82.-!..8

127.3

27.14.6

2611.8

116.4

2281.4

3I. I

8435.2

3597.8

Mean

72.84

1.9296

488.83

1991.61

DART

Damsel

RSBF

KNN

RULE

MARS

477775

62

18911.1

3171.1

794.4

717.6

481

286

27266

1017

1773.9

201.4

’)71.4

45.9

893.9

8119.7

18.9

87815

10024.4

44

331144.1",

84.4

83.4

17341.,.4

3.I

168169

234115

AB

AI

At;

BA

BU

CL

CO

CP

ED

EL

FA

FI

FL

FR

HO

11U

NO

NR

PL

P(I

RE

SC

SE

ST

TE

UN

VL

21.3

1

2.1

2.2

0

1

0.3

1

8. l

1.6

1.4

1.2

4.2

0.1

11.6

3

0

12.6

9.5

0

3.2

0.3

0. I

5.4

0

7.3

5.8

6547

3.4

64.5

54.6

11.6

38.2

8.4

11.6

2699.7

21

33.I

7.9

407.8

2

13.3

107.8

1.9

3399.4

571.9

2.2

265.6

2

4.2

303.2

0

1383.2

439

14433.1

141.7

462.2

244.8

32.I

40.3

98.4

87.3

312.3

117.5

96.4

48.8

"Y~--3.6

45.4

43

410.5

30.8

11326.8

2192.7

37.1

627.2

27.8

49.1

1090.9

24

1877.3

1118.2

7.9

0

0

0

0

I

0

0

2.7

0

0

0

0.4

0

0

0

0

4.7

0.2

I)

0

3.7

0

0. I

0

7

0.3

6.1

0

0

0

0

0

0.1

0

1.7

0

0

0

0

0

0

0

0

1.75

1.2

(1

I

0

0

0

0

2

11

321155.7

Mean

3.456

6117.574

1.03704

0.513

1305.16

DART

Table 3. Train time of algorithms in milliseconds. Best results are shown in bold font.

Table 4. Query time of algorithms in milliseconds. Best results are shown in bold font.

Dalaset

RSBF

KNN

RULE

:MARS

I)ART

Dataset

RSBF

KNN

RULE

MARS

DART

AB

AI

AU

BA

BII

CL

CO

(T

El)

El,

FA

FI

FL

FR

HO

|IU

NO

NR

PL

PO

RE

SC

SE

ST

TE

[’N

VL

0.720

11.496

I).499

0.714

I).682

0.622

1.399

0.719

I).572

1.019

11.739

11.631

1.429

1.1134

0.725

¢)

0.72

1.006

I).951

0.515

11.767

11.995

11.281

11.879

1.228

1.4118

11.388

11.947

11.7511

0.726

0.414

11.553

0.951

11.942

1.856

11.922

11.743

i .097

0.849

0.675

1.851

1.711

11.910

0.761

1.229

I.II72

11.733

I).976

I .(159

0.449

0.921

0.744

4.398

0.558

1.1156

0.961

0.676

11.526

0.833

0.878

(I.399

3.698

0.832

11.497

1.537

11.948

11.543

1.751

1.557

1.040

0.748

1.363

1.272

0.686

I. 189

I. 364

0.500

0.849

0.9114

3.645

11.620

1.4111

11.748

I).798

0.414

11.637

0.862

I).801

3.733

11.747

11.595

1.073

11.731

11.537

1.557

1.1112

0.836

0.649

11.989

0.972

0.679

1.026

I .(148

(I.31)3

0.746

0.930

16.50

I).497

1.090

11.688

11.546

0.363

11.576

I .t)2b

0.o,35

2.377

11.(R|8

0.536

I. 19I

11.735

11.4111

1.421

1.347

0.974

0.51R1

1.222

*

0.420

0.792

1.229

t1,370

0.495

11.7117

2.512

0.844

*

AB

AI

AI.!

BA

BU

CL

CO

CP

El)

EL

FA

FI

FL

FR

110

HU

NO

NR

PL

PO

RE

SC

SE

ST

TE

(IN

VL

11.726

0.906

0.398

0.675

0.935

0,834

1.702

0.720

0.4311

11.995

0.1711

11.653

2.366

1.036

0.754

0.575

11.91)9

0.947

0.411

11.692

11.958

0.533

11.697

0.646

1.747

0.634

0.976

7.592

0.807

1.832

11.457

12.66

8.283

1.676

0.930

2.166

1.465

2.525

0.710

73.89

2.394

7.853

2.801

1.403

38.84

5.492

9.429

6.597

0.583

21.29

1.921

2.087

0.636

1.030

9.301

1.122

2.531

0.712

12.92

I 1.24

3.102

0.782

2.384

1.899

3.208

0.528

77.21

3.247

I 1.53

3.635

2.220

42.32

5.777

9.456

I(1.33

0.968

27.77

3.887

4.569

0.865

1.513

7.6112

0.856

2.107

0.537

13.30

9.393

5.874

0.745

2.164

1.148

2.447

0.501

70.90

1.7111

10.29

2.893

1.037

37.66

4.921

4.213

6.759

0.700

22.01

1.966

7.267

11.541

0.977

6.603

0.785

1.981

0.556

10.67

6.127

2.040

11.636

2.276

1.431

2.1158

(I.387

71.40

2.089

6. 115

2.61I

1.196

31.54

5.107

6.038

7.108

0.627

21.72

1.871

2.671

0.764

1.518

Mean

0.818

1.071

I. 157

1.5011

0.896

Mean

0.853

8.0511

9.446

8.167

7.331

Table 5. Relative errors (RE) of algorithms, where 20% missing feature values are added. Best REs are shown in bold font. (* means the result isn't available due to a singular variance/covariance matrix)

Table 6. Relative errors (RE) of algorithms, where 20% target noise is added. Best REs are shown in bold font. (* means the result isn't available due to a singular variance/covariance matrix)


| Dataset | RSBF | KNN | RULE | MARS | DART |
|---|---|---|---|---|---|
| AB | **0.677** | 0.873 | 0.934 | 0.682 | * |
| AI | 0.794 | 1.514 | 0.723 | 0.682 | **0.657** |
| AU | 0.429 | 0.538 | 0.491 | 0..~8 | 0.511 |
| BA | 0.603 | 0.568 | 0.574 | **0.536** | 0.628 |
| BU | 1.325 | 0.968 | 1.073 | **0.877** | 0.969 |
| CL | 1.111 | 1.162 | **0.284** | 2.195 | 0.306 |
| CO | 2.119 | 2.854 | 1.794 | 4.126 | **1.662** |
| CP | 0.676 | 1.107 | 0.753 | **0.613** | 0.668 |
| ED | 0.461 | 0.802 | **0.268** | 0.404 | 0.573 |
| EL | **1.010** | 1.037 | 1.367 | 1.134 | 1.236 |
| FA | **0.204** | 1.026 | 1.039 | 0.249 | 0.877 |
| FI | 0.694 | 0.917 | 0.456 | **0.247** | 0.420 |
| FL | **1.429** | 1.454 | 1.765 | 1.629 | 1.490 |
| FR | 1.096 | **1.067** | 1.513 | 1.777 | 1.430 |
| HO | **0.800** | 0.932 | 1.049 | 0.847 | 1.165 |
| HU | 0.601 | 0.920 | 0.701 | **0.521** | 0.653 |
| NO | **1.070** | 1.079 | 1.484 | 1.370 | 1.156 |
| NR | 0.938 | 1.076 | 1.284 | **0.916** | * |
| PL | 0.450 | 0.961 | 0.575 | **0.407** | 0.734 |
| PO | **0.838** | 0.855 | 0.934 | 1.005 | 1.013 |
| RE | **1.014** | 1.045 | 1.380 | 1.042 | 1.311 |
| SC | 0.672 | 0.582 | 0.386 | **0.305** | 0.391 |
| SE | 1.036 | 0.835 | **0.471** | 0.798 | 0.641 |
| ST | 1.104 | 1.188 | 0.914 | 0.817 | **0.756** |
| TE | **2.222** | 3.241 | 5.572 | 5.614 | 2.709 |
| UN | **0.385** | 0.757 | 0.557 | 0.394 | 0.916 |
| VL | **0.930** | 1.050 | 1.454 | 1.257 | 1.307 |
| Mean | **0.914** | 1.126 | 1.104 | 1.141 | 0.967 |

Table 7. Relative errors (RE) of algorithms, where 30 irrelevant features are added. Best REs are shown in bold font. (* means the result isn't available due to a singular variance/covariance matrix)

References

[1] Breiman, L, Friedman, J H, Olshen, R A and Stone, C J 'Classification and Regression Trees' Wadsworth, Belmont, California (1984)

[2] Friedman, J H 'Local Learning Based on Recursive Covering' Department of Statistics, Stanford University (1996)

[3] Weiss, S and Indurkhya, N 'Rule-based Machine Learning Methods for Functional Prediction' Journal of Artificial Intelligence Research Vol 3 (1995) pp 383-403

[4] Aha, D, Kibler, D and Albert, M 'Instance-based Learning Algorithms' Machine Learning Vol 6 (1991) pp 37-66

[5] Quinlan, J R 'Learning with Continuous Classes' Proceedings AI'92 Adams and Sterling (Eds) Singapore (1992) pp 343-348

[6] Bratko, I and Karalic, A 'First Order Regression' Machine Learning Vol 26 (1997) pp 147-176

[7] Karalic, A 'Employing Linear Regression in Regression Tree Leaves' Proceedings of ECAI'92 Vienna, Austria, Bernd Neumann (Ed.) (1992) pp 440-441

[8] Friedman, J H 'Multivariate Adaptive Regression Splines' The Annals of Statistics Vol 19 No 1 (1991) pp 1-141

[9] Breiman, L 'Stacked Regressions' Machine Learning Vol 24 (1996) pp 49-64

[10] Kibler, D, Aha, D W and Albert, M K 'Instance-based Prediction of Real-valued Attributes' Comput. Intell. Vol 5 (1989) pp 51-57

[11] Weiss, S and Indurkhya, N 'Optimized Rule Induction' IEEE Expert Vol 8 No 6 (1993) pp 61-69

[12] Graybill, F, Iyer, H and Burdick, R 'Applied Statistics' Upper Saddle River, NJ (1998)

[13] Aydın, T 'Regression by Selecting Best Feature(s)' M.S. Thesis, Computer Engineering, Bilkent University, September (2000)

[14] Aydın, T and Güvenir, H A 'Regression by Selecting Appropriate Features' Proceedings of TAINN'2000, Izmir, June 21-23 (2000) pp 73-82

[15] Uysal, İ and Güvenir, H A 'Regression on Feature Projections' Knowledge-Based Systems Vol 13 No 4 (2000) pp 207-214