From: FLAIRS-00 Proceedings. Copyright © 2000, AAAI (www.aaai.org). All rights reserved.

Validation of Cryptographic Protocols by Efficient Automated Testing

Sigrid Gürgens

René Peralta *

GMD - German National Research Center for Information Technology

Rheinstrasse 75, 64295 Darmstadt, Germany

guergens@darmstadt.gmd.de

René Peralta

Computer Science Department

Yale University

New Haven, CT. 06520-8285

peralta-rene@cs.yale.edu

Abstract

We present a method for validating cryptographic protocols. The method can find flaws which are inherent in the design of the protocol as well as flaws arising from the particular implementation of the protocol. The latter possibility arises from the fact that there is no universally accepted standard for describing either the cryptographic protocols themselves or their implementation. Thus, security can (and in practice does) depend on decisions made by the implementer, who may not have the necessary expertise. Our method relies on automatic theorem proving tools. Specifically, we used "Otter", an automatic theorem proving software developed at Argonne National Laboratories.

Keywords: Security analysis, authentication protocol, automatic theorem proving, protocol validation

Introduction

Cryptographic protocols play a key role in communication via open networks such as the Internet. These networks are insecure in the sense that any adversary with the necessary technical background can monitor and even modify the messages. Cryptographic protocols are used to provide confidentiality and authenticity of the communication. Messages are encrypted to ensure that only the intended recipient can understand them. Message authentication codes or digital signatures are used so that modifications can be detected. All of these communication "services" can be provided as long as the cryptographic protocols themselves are correct and secure. However, the literature provides many examples of protocols that were first considered to be secure and were later found to contain flaws. Hence validation of cryptographic protocols is an important issue.

* Supported in part by NSF Grant CCR-9207204 and by the German National Research Center for Information Technology.

In the last ten to fifteen years, active areas of research have focused on:

1. Developing design methodologies which yield cryptoprotocols for which security properties can be formally proven.

2. Formal specification and verification/validation of cryptoprotocols.

An early paper which addresses the first of these issues is (Berger, Kannan, & Peralta 1985), where it is argued that protocols should not be defined simply as communicating programs but rather as sequences of messages with verifiable properties; i.e. security proofs cannot be based on unverifiable assumptions about how an opponent constructs its messages. As with much of the work done by people in the "cryptography camp", this research does not adopt the framework of formal language specification. More recent work along these lines includes that of Bellare and Rogaway (Bellare & Rogaway 1995), Shoup and Rubin (Shoup & Rubin 1996) (which extends the model of Bellare and Rogaway to smart card protocols), and Bellare, Canetti and Krawczyk (Bellare, Canetti, & Krawczyk 1998).

The second issue, formal specification and automatic verification or validation methods for cryptographic protocols, has developed into a field of its own. Partial overviews of this area can be found in (Meadows 1995), (Marrero, Clarke, & Jha 1997) and (Paulson 1998). Most of the work in this field can be categorized as either development of logics for reasoning about security (so-called authentication logics) or as development of model checking tools. Both techniques aim at verifying security properties of formally specified cryptoprotocols.

A seminal paper on logics of authentication is (Burrows, Abadi, & Needham 1989). Work in this area has produced significant results in finding protocol flaws, but also appears to have limitations which will be hard to overcome within the paradigm.

Model checking involves the definition of a state space (typically modeling the "knowledge" of different participants in a protocol) and transition rules which define the protocol being analyzed, the network properties, and the capabilities of an enemy. Initial work in this area can be traced to (Dolev & Yao 1983).

Much has already been accomplished. A well-known software tool is the NRL Protocol Analyzer (Meadows 1991).¹ Other promising work includes Lowe's use of the Failures Divergences Refinement Checker in CSP (Lowe 1996); Schneider's use of CSP (Schneider 1997); the model checking algorithms of Marrero, Clarke, and Jha (Marrero, Clarke, & Jha 1997); Paulson's use of induction and the theorem prover Isabelle (Paulson 1998); and the work of Heintze and Tygar considering compositions of cryptoprotocols (Heintze & Tygar 1994).

As far as we know, the model checking approach has been used almost exclusively for verification of cryptoprotocols. Verification can be achieved efficiently if simplifying assumptions are made in order to obtain a sufficiently small state space. Verification tools which can handle infinite state spaces must simplify the notions of security and correctness to the point that proofs can be obtained using induction or other logical tools to reason about infinitely many states. Both methods have produced valuable insight into ways in which cryptoprotocols can fail. The problem, however, is not only complex but also evolving. The early work centered around proving security of key-distribution protocols. Currently, it is fair to say that other, much more complex protocols are being deployed in E-commerce applications without the benefit of comprehensive validation.

In this paper we present a validation without verification approach. That is, we do not attempt to formally prove that a protocol is secure. Rather, our tools are designed to find flaws in cryptoprotocols. We reason that by not attempting the very difficult task of proving security and correctness, we can produce an automated tool that is good at finding flaws when they exist. We use the theorem prover Otter (see (Wos et al. 1992)) as our search engine. Some of the results we have obtained to date are presented in the following sections.

Cryptographic protocols

In what follows, we restrict ourselves to the study of key-distribution protocols. The participants of such a protocol will be called "agents". Agents are not necessarily people. They can be, for example, computers acting autonomously or processes in an operating system. In general, the secrecy of cryptographic keys cannot be assumed to last forever. Pairs of agents must periodically replace their keys with new ones. Thus the aim of a key-distribution protocol is to produce and securely distribute what are called "session" keys. This is often achieved with the aid of a trusted key distribution server S (this is the mechanism of the widely used Kerberos System).

¹ The work in (Meadows & Syverson 1998) expands NRL to include some specification capabilities. The resulting tool was powerful enough to analyze the SET protocol used by Mastercard and Visa (Mastercard and VISA 1996).

The general format of these protocols is the following:

• Agent A wants to obtain a session key for communicating with agent B.

• It then initiates a protocol which involves S, A, and B.

• The protocol involves a sequence of messages which, in theory, culminate in A and B sharing a key $K_{AB}$.

• The secrecy of $K_{AB}$ is supposed to be guaranteed despite the fact that all communication occurs over insecure channels.

To illustrate the need for security analysis of such protocols we describe a protocol introduced in (Needham & Schroeder 1978) and an attack on this protocol first shown in (Denning & Sacco 1982). In what follows, a message is an ordered tuple $(m_1, m_2, \ldots, m_r)$ of concatenated message blocks $m_i$, viewed at an abstract level where the agents can distinguish between different blocks. The notation $\{m\}_K$ denotes the encryption of the message $m$ using key $K$. The notation $n.\ A \rightarrow B : m$ denotes that in step $n$ of a protocol execution (which we will call a protocol run throughout this paper) A sends message $m$ to B. The protocol assumes that symmetric encryption and decryption functions are used (where encryption and decryption are performed with the same key).

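1. $A \rightarrow S : A, B, R_A$

2. $S \rightarrow A : \{R_A, B, K_{AB}, \{K_{AB}, A\}_{K_{BS}}\}_{K_{AS}}$

3. $A \rightarrow B : \{K_{AB}, A\}_{K_{BS}}$

4. $B \rightarrow A : \{R_B\}_{K_{AB}}$

5. $A \rightarrow B : \{R_B - 1\}_{K_{AB}}$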

In the first message agent A sends to S its name A, the name of the desired communication partner (B) and a random number $R_A$ which it generates for this protocol run.² S then generates a ciphertext for A, using the key $K_{AS}$ that it shares with A. This ciphertext includes A's random number, B's name, the new key $K_{AB}$, and a ciphertext intended for B. The usage of the key $K_{AS}$ shall prove to agent A that the message was generated by S. The inclusion of $R_A$ ensures A that this ciphertext and in particular the key $K_{AB}$ is generated after the generation of $R_A$, i.e. during the current protocol run. Agent A also checks that the ciphertext includes B's name, making sure that S sends the new key to B and not to someone else, and then forwards $\{K_{AB}, A\}_{K_{BS}}$ to B.

² In this paper, "random numbers" are abstract objects with the property that they cannot be generated twice and they cannot be predicted. It is not trivial to implement these objects, and protocol implementations may very well fail because the properties are not met. However, tackling this problem is beyond the scope of this paper.

For B the situation is slightly different, as it learns from A's name who it is going to share the new key with, but nothing in this ciphertext can prove to B that this is indeed a new key. According to the protocol description in (Needham & Schroeder 1978) this shall be achieved with the last two messages: The fact that B's random number, decremented to $R_B - 1$, is enciphered using the key $K_{AB}$ shall convince B that this is a newly generated key. However, there is a well-known attack on this protocol, first shown in (Denning & Sacco 1982), which makes clear that this conclusion cannot be drawn. In fact, all that B can deduce from message 5 is that the key $K_{AB}$ is used by someone other than B in the current protocol run.

The attack assumes that an eavesdropper E monitors one run of the protocol and stores $\{K_{AB}, A\}_{K_{BS}}$. Since we assume the secrecy of agents' keys holds only for a limited time period, we consider the point at which E gets to know the key $K_{AB}$. Then it can start a new protocol run with B by sending it the old ciphertext $\{K_{AB}, A\}_{K_{BS}}$ as message 3. Since B has no means to notice that this is an old ciphertext, it proceeds according to the protocol description above. After the protocol run has finished, B believes that it shares $K_{AB}$ as a new session key with A, when in fact it shares this key with E.
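The replay described above can be traced with a small symbolic model. The following Python sketch is only an illustration and is not the Otter encoding used in this paper: ciphertexts are modeled as tagged tuples, and the names `fresh`, `enc`, `dec`, `server_msg2`, `B` and `demo` are hypothetical helpers introduced here. It assumes perfect symmetric encryption and a responder B that keeps no record of old keys.

```python
from itertools import count

_fresh = count(1)

def fresh(prefix):
    """Return a new symbolic value that is never produced twice."""
    return f"{prefix}{next(_fresh)}"

def enc(key, *blocks):
    """Symbolic ciphertext {blocks}_key (assumption: perfect encryption)."""
    return ("enc", key, blocks)

def dec(key, ciphertext):
    """Recover the blocks; fails unless the same symmetric key is used."""
    tag, used_key, blocks = ciphertext
    if tag != "enc" or used_key != key:
        raise ValueError("cannot decrypt")
    return blocks

def server_msg2(keys, a, b, r_a):
    """Step 2: S creates K_AB and the inner ciphertext {K_AB, A}_K_BS."""
    k_ab = fresh("K_AB_")
    return enc(keys[a], r_a, b, k_ab, enc(keys[b], k_ab, a))

class B:
    """Responder that does not remember keys from earlier runs."""
    def __init__(self, k_bs):
        self.k_bs = k_bs
        self.pending = None

    def on_msg3(self, ticket):
        k_ab, peer = dec(self.k_bs, ticket)
        r_b = fresh("R_B_")
        self.pending = (k_ab, peer, r_b)
        return enc(k_ab, r_b)                       # message 4

    def on_msg5(self, msg5):
        k_ab, peer, r_b = self.pending
        if dec(k_ab, msg5) != (("minus1", r_b),):   # expects {R_B - 1}_K_AB
            raise ValueError("handshake failed")
        return peer, k_ab                           # what B now believes

def demo():
    keys = {"A": "K_AS", "B": "K_BS"}
    b = B(keys["B"])
    # Honest run between A and B via S.
    r_a = fresh("R_A_")
    msg2 = server_msg2(keys, "A", "B", r_a)
    got_r, got_b, k_ab, ticket = dec(keys["A"], msg2)
    assert (got_r, got_b) == (r_a, "B")             # A's checks on message 2
    (r_b,) = dec(k_ab, b.on_msg3(ticket))
    honest = b.on_msg5(enc(k_ab, ("minus1", r_b)))
    # E recorded the ticket and later learned the old session key.
    stolen_ticket, leaked_key = ticket, k_ab
    (r_b2,) = dec(leaked_key, b.on_msg3(stolen_ticket))
    replayed = b.on_msg5(enc(leaked_key, ("minus1", r_b2)))
    return honest, replayed
```

In this sketch B ends the second run with the same belief as after the honest run, namely that it shares the old key with A, although A took no part in the second run.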

There is no way to repair this flaw without changing the messages of the protocol if we do not want to make the (unrealistic) assumption that B stores all keys ever used. So, this is an example of a flaw inherent in the protocol design.

Another type of flaw can be due to the underspecification of a cryptoprotocol. Many protocol descriptions are vague about what checks are to be performed by the recipient of a message. Thus, a protocol implementation may be secure or insecure depending exclusively on whether or not a particular check is performed.

The communication and attack model

The security analysis of protocols does not deal with weaknesses of the encryption and decryption functions used. In what follows we assume that the following security properties hold:

1. Messages encrypted with a secret key $K$ can only be decrypted with the inverse key $K^{-1}$.

2. A key cannot be guessed.

3. Given $m$, it is not possible to find the corresponding ciphertext for any message containing $m$ without knowledge of the key.

While the first two items describe generally accepted properties of encryption functions, the last one, which Boyd called the "cohesive property" in (Boyd 1990), does not hold in general. Boyd and also Clark and Jacob (see (Clark & Jacob 1995)) show that under certain conditions, particular modes of some cryptographic algorithms allow the generation of a ciphertext without knowledge of the key.

These papers were important in that they drew attention to hidden cryptographic assumptions in "proofs" of security of cryptoprotocols. In fact, it is clear now that the number (and types) of hidden assumptions usually present in security proofs is much broader than what Boyd and Clark and Jacob point out.


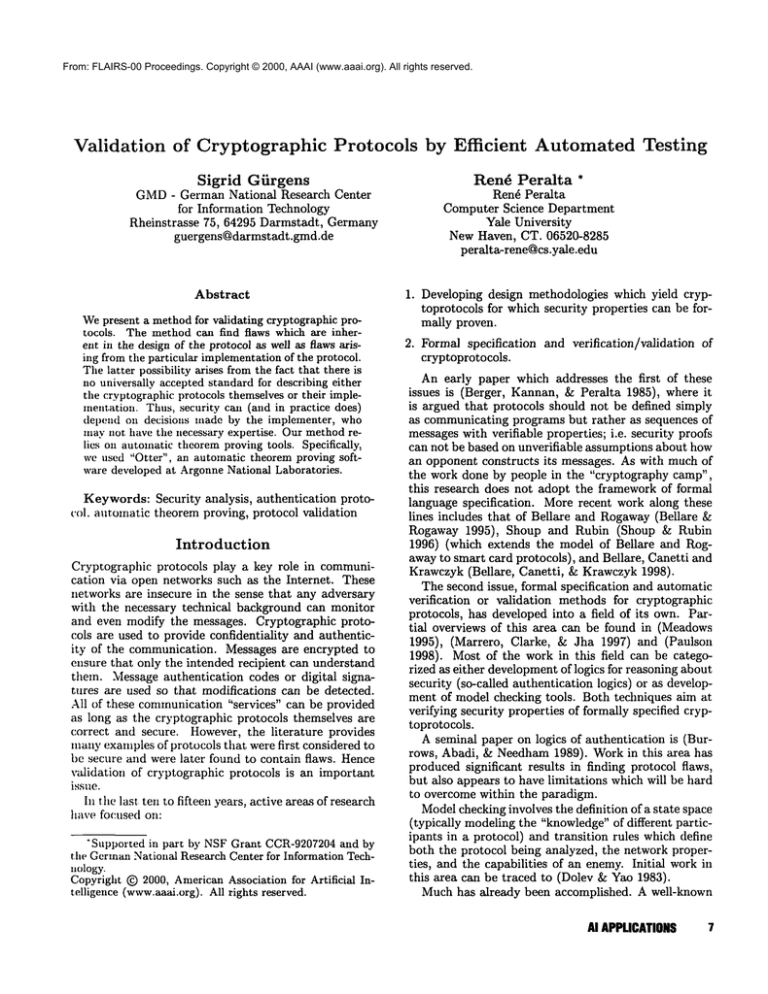

Figure 1: The communication model.

All communication is assumed to occur over insecure communication channels. What an insecure communication channel is can be defined in several ways. In our model we assume that there is a third agent E with the ability to intercept messages. After intercepting, E can change the message to anything it can compute. This includes changing the destination of the message and the supposed identity of the sender. Furthermore, E is considered a valid agent, and thus also shares a symmetric key $K_{ES}$ with S and/or owns a key pair $(K_E, K_E^{-1})$ for an asymmetric algorithm, and is allowed to initiate communication either with other agents or S. Figure 1 depicts our communication model.

E intercepts all messages, and the agents and S only receive messages sent by E. What E can send depends on what it is able to generate, and this in turn depends on what E knows.

The knowledge of E can be recursively defined as follows:

1. E knows the names of the agents.

2. E knows the keys it owns.

3. E knows every message it has received.

4. E knows every part of a message received (where ciphertext is considered one message part).

5. E knows everything it can generate by enciphering data it knows using data it knows as a key.

6. E knows every plaintext it can generate by deciphering a ciphertext it knows, provided it knows the inverse of the key used as well.

7. E knows every concatenation of data it knows.

8. E knows the origin and destination of every message.

9. At every instance of the protocol run, E knows the "state" of every agent, i.e. E knows the next protocol step they will perform, the structure of a message necessary to be accepted, what they will send after having received (and accepted) a message, etc.
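Rules 1 to 7 above can be sketched as a closure computation. The Python fragment below is a minimal illustration under stated assumptions, not the internal procedure used with Otter: terms are tagged tuples, keys are symmetric (each key is its own inverse), and the function names `analyze` and `can_build` as well as the parameter `max_enc_depth` are hypothetical. Decomposition (rules 3, 4, 6) is computed to a fixed point, while composition (rules 5, 7) is checked on demand with a bound on nested encryption so that the search stays finite.

```python
def enc(key, payload):
    """Symbolic ciphertext {payload}_key."""
    return ("enc", key, payload)

def cat(*parts):
    """Concatenation of message blocks."""
    return ("cat",) + parts

def analyze(initial):
    """Close a set of terms under splitting and decryption with known keys."""
    known = set(initial)
    changed = True
    while changed:
        changed = False
        for term in list(known):
            parts = ()
            if isinstance(term, tuple) and term and term[0] == "cat":
                parts = term[1:]                     # rule 4: message parts
            elif (isinstance(term, tuple) and term and term[0] == "enc"
                  and term[1] in known):
                parts = (term[2],)                   # rule 6: decipher
            for part in parts:
                if part not in known:
                    known.add(part)
                    changed = True
    return known

def can_build(term, known, max_enc_depth=2):
    """Can E generate `term` by concatenating and enciphering known data?"""
    if term in known:
        return True
    if not isinstance(term, tuple) or not term:
        return False                                 # unknown atom
    if term[0] == "cat":                             # rule 7
        return all(can_build(p, known, max_enc_depth) for p in term[1:])
    if term[0] == "enc":                             # rule 5, bounded nesting
        if max_enc_depth == 0:
            return False
        return (can_build(term[1], known, max_enc_depth)
                and can_build(term[2], known, max_enc_depth - 1))
    return False
```

With the default bound of two, a triply nested ciphertext that E has not received is rejected, which loosely mirrors the ad-hoc restriction on ciphertexts of level 3 discussed in this section.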

At every state in a possible run, after having received an agent's message, E has the possibility to forward this message or to send whatever message it can generate to one of the agents or S. Obviously, the state space will grow enormously. Thus, we must limit the concept of knowledge if the goal is to produce a validation tool which can be used in practice. Therefore we restrict the definition in various ways in order to make E's knowledge set finite. For example, we do not allow E to know ciphertexts of level 3 (e.g. $\{\{\{m\}_K\}_K\}_K$). We do this on an ad-hoc basis. This problem needs further exploration.³

Note that the above knowledge rules imply that E never forgets. In contrast, the other agents forget everything which they are not specifically instructed to remember by the protocol description.

Automatic testing of protocol instantiations

As the title of this section suggests, we make a distinction between a protocol and a "protocol instantiation". We deem this a necessary distinction because protocols in the literature are often under-specified. A protocol may be implemented in any of several ways by the applications programmer.

Otter is based on first order logic. Thus for describing the protocol to be analyzed, the environment, and the possible state transitions, we use first order functions, predicates, implications and the usual connectives. At any step of a protocol run, predicates like

    state(xevents, xEknows, ..., xwho, xto, xmess, ...)

are used to describe the state of the protocol run, given the list xevents of actions already performed and the list xEknows of data known by E. These predicates are also used to describe the states of the agents (e.g. data like session keys, nonces, etc. the agents have stored so far for later use, the protocol runs they assume to be taking part in, and the next step they will perform in each of these runs). In general the predicates can include as many agents' states and protocol runs as desired, but so far we have restricted this information to agents A, B, E and S in the context of two interleaving protocol runs. Furthermore, the predicates contain, among other data used to direct the search, the agent xwho which sent the current message, the intended recipient xto and the message xmess sent.

³ We note, however, that leaving this part of our model open allows for a very general tool. This is in part responsible for the success of the tool, as documented in later sections.

Using these predicates we write formulas which describe possible state transitions. The action of S when receiving message 1 of the protocol described in the second section can be formalized as follows:

    sees(xevents, xEknows, ..., xwho, xto, xmess, ...)
      ∧ xto = S
      ∧ is_agent(el(1, xmess))
      ∧ is_agent(el(2, xmess))
    →
    send(update(xevents), xEknows, ..., S, el(1, xmess),
         [enc(key(el(1, xmess)), [el(3, xmess), el(2, xmess), new_key,
              enc(key(el(2, xmess)), [new_key, el(1, xmess)])])], ...)

where update(xevents) includes the current send action and el(k, xmess) returns the kth block of the message xmess just received.
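For readers less familiar with clause notation, the transition rule for S can be mirrored as an ordinary function. The sketch below is a hedged Python rendering, not Otter input: the dictionary layout of the state, the function name `server_transition`, the tuple encoding of `key(...)` and `enc(...)`, and the explicit length guard on `xmess` are all assumptions made here for illustration. The names `el`, `is_agent`, `xevents`, `xEknows`, `xwho`, `xto` and `xmess` follow the formula.

```python
AGENTS = {"A", "B", "E"}

def el(k, mess):
    """Return the k-th block (1-based) of a message."""
    return mess[k - 1]

def is_agent(x):
    return x in AGENTS

def key(agent):
    """Symbolic long-term key shared between `agent` and S."""
    return ("key", agent)

def enc(k, blocks):
    """Symbolic ciphertext of a list of blocks under key k."""
    return ("enc", k, tuple(blocks))

def server_transition(state, new_key):
    """If the premises of the rule hold, return the successor state."""
    xmess = state["xmess"]
    # Premises: message addressed to S, first two blocks are agent names.
    # The length guard is an addition of this sketch so that el(3, ...) exists.
    if state["xto"] != "S" or len(xmess) < 3:
        return None
    if not (is_agent(el(1, xmess)) and is_agent(el(2, xmess))):
        return None
    inner = enc(key(el(2, xmess)), [new_key, el(1, xmess)])
    reply = enc(key(el(1, xmess)),
                [el(3, xmess), el(2, xmess), new_key, inner])
    return {
        # update(xevents): record the current send action
        "xevents": state["xevents"] + [("send", "S", el(1, xmess), reply)],
        "xEknows": state["xEknows"],
        "xwho": "S",
        "xto": el(1, xmess),
        "xmess": (reply,),
    }
```

A state whose message is not addressed to S, or whose first two blocks are not agent names, yields `None`, which corresponds to the rule not firing.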

Whenever E receives a message (indicated by a formula sees_E(...)), an internal procedure adds all that E can learn from the message to the knowledge list xEknows. Implications

    sees_E(...) → send_E(..., message1, ...) ∧ send_E(..., message2, ...) ∧ ...

formalize that after having received a particular message, E has several possibilities to proceed with the protocol run, i.e. each of the predicates send_E(..., message, ...) constitutes a possible continuation of the protocol run. The messages E sends to the agents are constructed using the basic data in xEknows and the specification of E's knowledge as well as some restrictions as discussed in the previous section.

We use equations to describe the properties of the encryption and decryption functions, the keys, the nonces, etc. For example, the equation

    dec(x, enc(x, y)) = y

formalizes symmetric encryption and decryption functions where every key is its own inverse. These equations are used as demodulators in the deduction process.
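Using an equation as a demodulator means applying it as a left-to-right rewrite rule wherever it matches. The following Python sketch illustrates the idea on tuple-encoded terms; it is not Otter's demodulation mechanism, and the function name `demodulate` and the term encoding are assumptions of this example.

```python
def enc(k, m):
    return ("enc", k, m)

def dec(k, m):
    return ("dec", k, m)

def demodulate(term):
    """Rewrite dec(x, enc(x, y)) to y everywhere, innermost first."""
    if not isinstance(term, tuple) or not term:
        return term                                   # atoms are unchanged
    term = tuple(demodulate(t) for t in term)         # normalize subterms
    if (term[0] == "dec" and len(term) == 3
            and isinstance(term[2], tuple) and len(term[2]) == 3
            and term[2][0] == "enc"
            and term[1] == term[2][1]):               # same key x on both sides
        return term[2][2]                             # the plaintext y
    return term
```

Because subterms are normalized before the rule is tried at the root, nested occurrences such as `dec(K, dec(J, enc(J, enc(K, y))))` reduce fully in one call.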

The above formulas constitute the set of axioms describing the particular protocol instantiation to be analyzed. An additional set of formulas, the so-called "set of support", includes formulas that are specific for a particular analysis. In particular, we use a predicate describing the initial state of a protocol run (the agent that starts the run, the initial knowledge of the enemy E, etc.) and a formula describing a security goal. An example of such a formula is "if agents A and B share a session key, then this key is not known by E".

Using these two formula sets and logical inference rules (specifically, we make extensive use of hyper resolution), Otter derives new valid formulas which correspond to possible states of a protocol run. The process stops if either no more formulas can be deduced or Otter finds a contradiction which involves the formula describing the security goal. Having proved a contradiction, Otter lists all formulas involved in the proof. An attack on the protocol can be easily deduced from this list.

Otter has a number of facilities for directing the search; for a detailed description see (Wos et al. 1992).

Known and new attacks

We used Otter to analyze a number of protocols. Whenever we model a protocol we are confronted with the problem that usually the checks performed by the parties upon receiving the messages are not specified completely. This means that we must place ourselves in the situation of the implementer and simply encode the obvious checks. For example, consider step 3 of the Needham-Schroeder protocol explained in the second section.

3. $A \rightarrow B : \{K_{AB}, A\}_{K_{BS}}$

Here is a case where we have to decide what B's checks are. We did the obvious:

• B decrypts using $K_{BS}$.

• B checks that the second component of the decrypted message is some valid agent's name.

• B assumes, temporarily, that the first component of the decrypted message is the key to be shared with this agent.

To our great surprise, Otter determined that this was a fatal flaw in the implementation.

We call the type of attack found the "arity" attack, since it depends on an agent not checking the number of message blocks embedded in a ciphertext. The arity attack found by Otter works as follows: in the first message E impersonates B (denoted by $E_B$) and sends to S:

1. $E_B \rightarrow S : B, A, R_E$

Then, S answers according to the protocol description and sends the following message to B:

2. $S \rightarrow B : \{R_E, A, K_{AB}, \{K_{AB}, B\}_{K_{AS}}\}_{K_{BS}}$

This is passed on by E, and B interprets it as being the third message of a new protocol run. Since this message passes the checks described above, B takes $R_E$ to be a new session key to be shared with A and the last two protocol steps are as follows:

4. $B \rightarrow E_A : \{R_B\}_{R_E}$

5. $E_A \rightarrow B : \{R_B - 1\}_{R_E}$

where the last message can easily be generated by E since it knows its random number sent in the first protocol step (see (Gürgens & Peralta 1999) for a formalization of B's actions).
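The message flow of the arity attack can be replayed in a few lines. The Python sketch below is an illustration under the same symbolic assumptions as the rest of the model (perfect symmetric encryption, ciphertexts as tagged tuples); the helper names `server_reply`, `b_naive_msg3` and `arity_attack` are hypothetical, and B's checks are exactly the three "obvious" ones listed earlier, with no check on the number of blocks.

```python
AGENTS = {"A", "B", "E"}

def enc(key, *blocks):
    """Symbolic ciphertext {blocks}_key."""
    return ("enc", key, blocks)

def dec(key, ciphertext):
    tag, used_key, blocks = ciphertext
    if tag != "enc" or used_key != key:
        raise ValueError("cannot decrypt")
    return blocks

def server_reply(keys, sender, peer, nonce, new_key):
    """S's answer to message 1, built as the protocol prescribes."""
    inner = enc(keys[peer], new_key, sender)
    return enc(keys[sender], nonce, peer, new_key, inner)

def b_naive_msg3(k_bs, msg3):
    """B's checks on message 3 without an arity check."""
    blocks = dec(k_bs, msg3)                 # B decrypts using K_BS
    if blocks[1] not in AGENTS:              # second component: agent name?
        return None
    return blocks[0], blocks[1]              # (assumed session key, peer)

def arity_attack():
    keys = {"A": "K_AS", "B": "K_BS"}
    # 1. E_B -> S : B, A, R_E   (E knows R_E because E chose it)
    msg2 = server_reply(keys, "B", "A", "R_E", "K_AB")
    # 2. S -> B is intercepted and handed to B as if it were message 3.
    accepted = b_naive_msg3(keys["B"], msg2)
    if accepted is None:
        return None
    session_key, peer = accepted
    # 4. B -> E_A : {R_B}_{R_E}
    msg4 = enc(session_key, "R_B")
    (r_b,) = dec("R_E", msg4)                # E decrypts with its own R_E
    # 5. E_A -> B : {R_B - 1}_{R_E}
    msg5 = enc("R_E", ("minus1", r_b))
    if dec(session_key, msg5) != (("minus1", "R_B"),):
        return None
    return session_key, peer
```

In this run B finishes believing that it shares the "key" `R_E` with A, a value that E chose itself.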

It is interesting to note that an applications programmer is quite likely to not include an "arity-check" in the code. This is because modular programming naturally leads to the coding of routines of the type

    get(cyphertext, key, i)

which decodes cyphertext with key and returns the i-th element of the plaintext.
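To make the point concrete, here is a hedged Python sketch of such a routine next to a variant that also checks the arity. Only the signature `get(cyphertext, key, i)` comes from the text; the symbolic `enc` and `decrypt` helpers and the name `get_checked` with its `arity` parameter are assumptions introduced for this example.

```python
def enc(key, *blocks):
    """Symbolic ciphertext {blocks}_key."""
    return ("enc", key, blocks)

def decrypt(cyphertext, key):
    tag, used_key, blocks = cyphertext
    if tag != "enc" or used_key != key:
        raise ValueError("cannot decrypt")
    return blocks

def get(cyphertext, key, i):
    """Return the i-th plaintext block (1-based); block count is ignored."""
    return decrypt(cyphertext, key)[i - 1]

def get_checked(cyphertext, key, i, arity):
    """As get(), but reject plaintexts with an unexpected number of blocks."""
    blocks = decrypt(cyphertext, key)
    if len(blocks) != arity:
        raise ValueError(f"expected {arity} blocks, got {len(blocks)}")
    return blocks[i - 1]
```

A caller that reads message 3 with `get(..., 1)` and `get(..., 2)` cannot tell a two-block ciphertext from the four-block message 2, whereas `get_checked(..., arity=2)` raises an error on the latter.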

By including the arity check in B's actions when receiving message 3, we analyzed a different instantiation of the same protocol. This time Otter found the Denning-Sacco attack.

It seems that many protocols may be subject to the "arity" attack: our methods found that this attack is also possible on the Otway-Rees protocol (see (Gürgens & Peralta 1999) for a detailed description of the protocol and its analysis) and on the Yahalom protocol, as described in (Burrows, Abadi, & Needham 1989).

Furthermore, we analyzed an authentication protocol for smartcards with a digital signature application being standardized by the German standardization organisation DIN (DIN NI-17.4 1998) and found the proposed standard to be subject to some interpretations which would make it insecure (see (Gürgens, Lopez, & Peralta 1999)).

Among the known attacks which were also found by our methods are the Lowe (Lowe 1996) and Meadows (Meadows 1996) attacks on the Needham-Schroeder public key protocol and the Simmons attack on the TMN protocol (see (Tatebayashi, Matsuzaki, & Newman 1991)). The latter is noteworthy since this attack uses the homomorphism property of some asymmetric algorithms.

Conclusions

As is well known, there does not exist an algorithm which can infallibly decide whether a theorem is true or not. Thus the use of Otter, and perforce of any theorem proving tool, is an art as well as a science. The learning curve for using Otter is quite long, which is a disadvantage. Furthermore, one can easily write Otter input which would simply take too long to find a protocol failure even if one exists and is in the search path of Otter's heuristic. Thus, using this kind of tool involves the usual decisions regarding the search path to be followed. Nevertheless, our approach has shown itself to be a powerful and flexible way of analyzing protocols. In particular, our methods found protocol weaknesses not previously found by other formal methods.

We do not yet have a tool which can be used by applications programmers. Analyzing protocols with our methods requires some expertise in the use of Otter as well as some expertise in cryptology. Although it is clear that much of what we now do can be automated (e.g. a compiler could be written which maps protocol descriptions to Otter input), it is not clear how much of the search strategy can be automated.

Our work with Otter has led us to the conclusion that it is not sufficient to ask protocol designers to follow basic design principles in order to avoid security flaws. One reason for this is that those principles might not be appropriate for particular applications (e.g. security considerations in smart card applications are quite different from, say, security considerations in network applications). Adherence to some basic design principles, although a good idea, will not guarantee security in the real world. The new attack we found on the Needham-Schroeder protocol, for example, would not be prevented by using additional message fields to indicate the direction of a message. Our methodology can be useful for deciding what implementation features are necessary in a particular environment for a given protocol to be secure.


References

Bellare, M., and Rogaway, P. 1995. Provably secure session key distribution - the three party case. In Annual Symposium on the Theory of Computing, 57-66. ACM.

Bellare, M.; Canetti, R.; and Krawczyk, H. 1998. A Modular Approach to the Design and Analysis of Authentication and Key Exchange Protocols. In Annual Symposium on the Theory of Computing. ACM.

Berger, R.; Kannan, S.; and Peralta, R. 1985. A framework for the study of cryptographic protocols. In Advances in Cryptology - CRYPTO '85, Lecture Notes in Computer Science, 87-103. Springer-Verlag.

Boyd, C. 1990. Hidden assumptions in cryptographic protocols. In IEEE Proceedings, volume 137, 433-436.

Burrows, M.; Abadi, M.; and Needham, R. 1989. A Logic of Authentication. Report 39, Digital Systems Research Center, Palo Alto, California.

Clark, J., and Jacob, J. 1995. On the Security of Recent Protocols. Information Processing Letters 56:151-155.

Denning, D., and Sacco, G. 1982. Timestamps in key distribution protocols. Communications of the ACM 24:533-536.

DIN NI-17.4. 1998. Spezifikation der Schnittstelle zu Chipkarten mit Digitaler Signatur-Anwendung / Funktion nach SigG und SigV, Version 1.0 (Draft).

Dolev, D., and Yao, A. 1983. On the security of public-key protocols. IEEE Transactions on Information Theory 29:198-208.

Gürgens, S., and Peralta, R. 1999. Efficient Automated Testing of Cryptographic Protocols. Technical Report 45-1998, GMD - German National Research Center for Information Technology.

Gürgens, S.; Lopez, J.; and Peralta, R. 1999. Efficient Detection of Failure Modes in Electronic Commerce Protocols. In DEXA'99 10th International Workshop on Database and Expert Systems Applications, 850-857. IEEE Computer Society.

Heintze, N., and Tygar, J. 1994. A Model for Secure Protocols and their Compositions. In 1994 IEEE Computer Society Symposium on Research in Security and Privacy, 2-13. IEEE Computer Society Press.

Lowe, G. 1996. Breaking and fixing the Needham-Schroeder public-key protocol using CSP and FDR. In Second International Workshop, TACAS '96, volume 1055 of LNCS, 147-166. SV.

Marrero, W.; Clarke, E. M.; and Jha, S. 1997. A Model Checker for Authentication Protocols. In DIMACS Workshop on Cryptographic Protocol Design and Verification.

1996. Mastercard and VISA Corporations, Secure Electronic Transactions (SET) Specification. http://www.setco.org.


Meadows, C., and Syverson, P. 1998. A formal specification of requirements for payment transactions in the SET protocol. In Proceedings of Financial Cryptography.

Meadows, C. 1991. A system for the specification and verification of key management protocols. In IEEE Symposium on Security and Privacy, 182-195. IEEE Computer Society Press, New York.

Meadows, C. 1995. Formal Verification of Cryptographic Protocols: A Survey. In Advances in Cryptology - Asiacrypt '94, volume 917 of LNCS, 133-150. SV.

Meadows, C. 1996. Analyzing the Needham-Schroeder Public Key Protocol: A Comparison of Two Approaches. In Proceedings of ESORICS. Naval Research Laboratory: Springer.

Needham, R., and Schroeder, M. 1978. Using encryption for authentication in large networks of computers. Communications of the ACM 993-999.

Paulson, L. C. 1998. The inductive approach to verifying cryptographic protocols. Journal of Computer Security 6:85-128.

Schneider, S. 1997. Verifying authentication protocols with CSP. In IEEE Computer Security Foundations Workshop. IEEE.

Shoup, V., and Rubin, A. 1996. Session key distribution using smart card. In Advances in Cryptology - EUROCRYPT '96, volume 1070 of LNCS, 321-331. SV.

Tatebayashi, M.; Matsuzaki, N.; and Newman. 1991. Key Distribution Protocol for Digital Mobile Communication Systems. In Brassard, G., ed., Advances in Cryptology - CRYPTO '89, volume 435 of LNCS, 324-333. SV.

Wos, L.; Overbeek, R.; Lusk, E.; and Boyle, J. 1992. Automated Reasoning - Introduction and Applications. McGraw-Hill, Inc.