Proceedings of the Twenty-Third AAAI Conference on Artificial Intelligence (2008)

Semi-supervised Classification Using Local and Global Regularization

Fei Wang¹, Tao Li², Gang Wang³, Changshui Zhang¹

¹ Department of Automation, Tsinghua University, Beijing, China
² School of Computing and Information Sciences, Florida International University, Miami, FL, USA
³ Microsoft China Research, Beijing, China

Abstract

In this paper, we propose a semi-supervised learning (SSL) algorithm based on local and global regularization. In the local regularization part, our algorithm constructs a regularized classifier for each data point using its neighborhood, while the global regularization part adopts a Laplacian regularizer to smooth the data labels predicted by those local classifiers. We show that some existing SSL algorithms can be derived from our framework. Finally, we present experimental results that show the effectiveness of our method.

Introduction

Semi-supervised learning (SSL), which aims at learning from partially labeled data sets, has received considerable interest from the machine learning and data mining communities in recent years (Chapelle et al., 2006b). One reason for the popularity of SSL is that in many real-world applications, acquiring sufficient labeled data is expensive and time-consuming, whereas large amounts of unlabeled data are far easier to obtain.

Many SSL methods have been proposed in recent decades (Chapelle et al., 2006b). Among them, graph-based approaches, such as Gaussian Random Fields (Zhu et al., 2003), Learning with Local and Global Consistency (Zhou et al., 2004) and Tikhonov Regularization (Belkin et al., 2004), have become one of the most active research areas in the SSL field. The common denominator of these algorithms is that they model the whole data set as an undirected weighted graph, whose vertices correspond to the data points and whose edges reflect the relationships between pairs of data points. In the SSL setting, some of the vertices on the graph are labeled while the remaining ones are unlabeled, and the goal of graph-based SSL is to predict the labels of the unlabeled data points (and even of new test points that are not in the graph) such that the predicted labels are sufficiently smooth with respect to the data graph.

One common strategy for realizing graph-based SSL is to minimize a criterion composed of two parts: the first part is a loss measuring the difference between the predictions and the initial data labels, and the second part is a smoothness penalty measuring the smoothness of the predicted labels over the whole data graph. Most past work concentrates on deriving different forms of smoothness regularizers, such as those using the combinatorial graph Laplacian (Zhu et al., 2003)(Belkin et al., 2006), the normalized graph Laplacian (Zhou et al., 2004), the exponential/iterative graph Laplacian (Belkin et al., 2004), local linear regularization (Wang & Zhang, 2006) and local learning regularization (Wu & Schölkopf, 2007), but rarely addresses the problem of how to derive a more efficient loss function.

In this paper, we argue that rather than applying a global loss function based on a single global predictor constructed from the whole data set, it is more desirable to measure such loss locally, by building local predictors for different regions of the input data space. According to (Vapnik, 1995), it is usually difficult to find a predictor with good predictive ability over the entire input space, whereas it is much easier to find a good predictor restricted to a local region of the input space. Such a divide-and-conquer scheme has been shown to be much more effective in some real-world applications (Bottou & Vapnik, 1992). One problem with this local strategy is that the number of data points in each region is usually too small to train a good predictor; we therefore also apply a global smoother so that the predicted data labels comply better with the intrinsic data distribution.

A Brief Review of Manifold Regularization

Before going into the details of our algorithm, we first review the basic idea of manifold regularization (Belkin et al., 2006), since it is closely related to this paper.

In semi-supervised learning, we are given a set of data points $\mathcal{X} = \{x_1, \cdots, x_l, x_{l+1}, \cdots, x_n\}$, where $\mathcal{X}_l = \{x_i\}_{i=1}^{l}$ are labeled and $\mathcal{X}_u = \{x_j\}_{j=l+1}^{n}$ are unlabeled. Each $x_i \in \mathcal{X}$ is drawn from a fixed but usually unknown distribution $p(x)$. Belkin et al. (Belkin et al., 2006) proposed a general geometric framework for semi-supervised learning called manifold regularization, which seeks an optimal classification function $f$ by minimizing the following objective

$$J_g = \sum_{i=1}^{l} L\big(y_i, f(x_i, w)\big) + \gamma_A \|f\|_{\mathcal{F}}^2 + \gamma_I \|f\|_I^2, \tag{1}$$

where $y_i$ represents the label of $x_i$, $f(x, w)$ denotes the classification function $f$ with parameter $w$, $\|f\|_{\mathcal{F}}$ penalizes the complexity of $f$ in the functional space $\mathcal{F}$, $\|f\|_I$ reflects the intrinsic geometric information of the marginal distribution $p(x)$, and $\gamma_A$, $\gamma_I$ are the regularization parameters.

The reason we penalize the geometric information of $f$ is that in semi-supervised learning we only have a small portion of labeled data (i.e., $l$ is small), which is not enough to train a good learner by purely minimizing the structural loss of $f$. Therefore, we need some prior knowledge to guide the learning of a good $f$, and $p(x)$ provides exactly this type of prior information. Moreover, it is usually assumed (Belkin et al., 2006) that there is a direct relationship between $p(x)$ and $p(y|x)$: if two points $x_1$ and $x_2$ are close in the intrinsic geometry of $p(x)$, then the conditional distributions $p(y|x_1)$ and $p(y|x_2)$ should be similar. In other words, $p(y|x)$ should vary smoothly along the geodesics in the intrinsic geometry of $p(x)$.

Specifically, (Belkin et al., 2006) also showed that $\|f\|_I^2$ can be approximated by

$$\hat{S} = \frac{1}{2} \sum_{i,j} \big(f(x_i) - f(x_j)\big)^2 W_{ij} = \mathbf{f}^T L \mathbf{f}, \tag{2}$$

where $n$ is the total number of data points, $W_{ij}$ are the edge weights in the data adjacency graph, and $\mathbf{f} = (f(x_1), \cdots, f(x_n))^T$; the factor $\frac{1}{2}$ compensates for each pair being counted twice in the double sum over $i, j$. $L = D - W \in \mathbb{R}^{n \times n}$ is the graph Laplacian, where $W$ is the graph weight matrix with $(i,j)$-th entry $W(i,j) = W_{ij}$, and $D$ is a diagonal degree matrix with $D(i,i) = \sum_j W_{ij}$. There has been extensive discussion showing that, under certain conditions, choosing Gaussian weights for the adjacency graph leads to convergence of the graph Laplacian to the Laplace-Beltrami operator $\triangle_{\mathcal{M}}$ (or its weighted version) on the manifold $\mathcal{M}$ (Belkin & Niyogi, 2005)(Hein et al., 2005).

Copyright © 2008, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

The Algorithm

In this section we introduce our approach of learning with local and global regularization in detail, starting with the motivation of this work.

Why Local Learning

Although (Belkin et al., 2006) provides an excellent framework for learning from labeled and unlabeled data, the loss $J_g$ is defined in a global way, i.e., for the whole data set we only pursue one classification function $f$ that minimizes $J_g$. According to (Vapnik, 1995), selecting a good $f$ in such a global way might not be a good strategy, because the function set $f(x, w)$, $w \in \mathcal{W}$, may not contain a good predictor for the entire input space. However, it is much easier for the set to contain some functions that produce good predictions on specific regions of the input space. Therefore, if we split the whole input space into $C$ local regions, it is usually more effective to minimize a separate local cost function for each region.

Nevertheless, pure local learning algorithms still have a problem: there might not be enough data points in each local region to train the local classifiers. Therefore, we propose to apply a global smoother that smooths the predicted data labels with respect to the intrinsic data manifold, so that the predicted data labels become more reasonable and accurate.

The Construction of Local Classifiers

In this subsection, we introduce how to construct the local classifiers. Specifically, we split the whole input data space into $n$ overlapping regions $\{\mathcal{R}_i\}_{i=1}^{n}$, such that $\mathcal{R}_i$ is the $k$-nearest neighborhood of $x_i$. We further construct a classification function $g_i$ for region $\mathcal{R}_i$, which, for simplicity, is assumed to be linear. Then $g_i$ predicts the label of $x$ by

$$g_i(x) = w_i^T (x - x_i) + b_i, \tag{3}$$

where $w_i$ and $b_i$ are the weight vector and bias term of $g_i$¹. A general approach for obtaining the optimal parameter set $\{(w_i, b_i)\}_{i=1}^{n}$ is to minimize the following structural loss

$$\hat{J}_l = \sum_{i=1}^{n} \Big[ \sum_{x_j \in \mathcal{R}_i} \big(w_i^T (x_j - x_i) + b_i - y_j\big)^2 + \gamma_A \|w_i\|^2 \Big].$$

However, in the semi-supervised learning scenario we only have a few labeled points, i.e., we do not know the corresponding $y_i$ for most of the points. To alleviate this problem, we associate each $y_i$ with a "hidden label" $f_i$, such that $y_i$ is directly determined by $f_i$. We can then minimize the following loss function instead to obtain the optimal parameters:

$$J_l = \sum_{i=1}^{l} (y_i - f_i)^2 + \lambda \hat{J}_l = \sum_{i=1}^{l} (y_i - f_i)^2 + \lambda \sum_{i=1}^{n} \Big[ \sum_{x_j \in \mathcal{R}_i} \big(w_i^T (x_j - x_i) + b_i - f_j\big)^2 + \gamma_A \|w_i\|^2 \Big]. \tag{4}$$

Let $J_{li} = \sum_{x_j \in \mathcal{R}_i} \big(w_i^T (x_j - x_i) + b_i - f_j\big)^2 + \gamma_A \|w_i\|^2$, which can be rewritten in matrix form as

$$J_{li} = \left\| G_i \begin{bmatrix} w_i \\ b_i \end{bmatrix} - \tilde{\mathbf{f}}_i \right\|^2,$$

where

$$G_i = \begin{bmatrix} x_{i1}^T - x_i^T & 1 \\ x_{i2}^T - x_i^T & 1 \\ \vdots & \vdots \\ x_{in_i}^T - x_i^T & 1 \\ \sqrt{\gamma_A}\, I_d & \mathbf{0} \end{bmatrix}, \qquad \tilde{\mathbf{f}}_i = \begin{bmatrix} f_{i1} \\ f_{i2} \\ \vdots \\ f_{in_i} \\ \mathbf{0} \end{bmatrix},$$

$x_{ij}$ represents the $j$-th neighbor of $x_i$, $n_i$ is the cardinality of $\mathcal{R}_i$, $\mathbf{0}$ is a $d \times 1$ zero vector, and $d$ is the dimensionality of the data vectors. By setting $\partial J_{li} / \partial (w_i, b_i) = 0$, we get

$$\begin{bmatrix} w_i^* \\ b_i^* \end{bmatrix} = (G_i^T G_i)^{-1} G_i^T \tilde{\mathbf{f}}_i. \tag{5}$$
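Eq.(3)-(5) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names `build_G`, `fit_local_classifier` and `g`, the toy neighborhood and its hidden labels are all hypothetical. The sketch stacks $G_i$ and $\tilde{\mathbf{f}}_i$ as defined above and solves the normal equations of Eq.(5) with a linear solve instead of forming the inverse explicitly.

```python
import numpy as np

def build_G(x_i, neighbors, gamma_A):
    """G_i as defined above: a row [x_ij^T - x_i^T, 1] for every neighbor, followed by
    the d rows [sqrt(gamma_A) I_d, 0] that turn the ridge penalty into extra residuals."""
    n_i, d = neighbors.shape
    top = np.hstack([neighbors - x_i, np.ones((n_i, 1))])
    bottom = np.hstack([np.sqrt(gamma_A) * np.eye(d), np.zeros((d, 1))])
    return np.vstack([top, bottom])

def fit_local_classifier(x_i, neighbors, f_neighbors, gamma_A):
    """Eq.(5): [w_i; b_i] = (G_i^T G_i)^{-1} G_i^T f~_i, computed with a linear solve."""
    d = neighbors.shape[1]
    G = build_G(x_i, neighbors, gamma_A)
    f_tilde = np.concatenate([f_neighbors, np.zeros(d)])  # append the d x 1 zero vector
    theta = np.linalg.solve(G.T @ G, G.T @ f_tilde)
    return theta[:d], theta[d]

def g(x, x_i, w_i, b_i):
    """Eq.(3): local linear prediction, centered at x_i."""
    return w_i @ (x - x_i) + b_i

# Illustrative neighborhood: n_i = 6 neighbors of x_i in d = 2 dimensions.
rng = np.random.default_rng(0)
x_i = np.zeros(2)
neighbors = rng.normal(scale=0.5, size=(6, 2))
f_neighbors = np.sign(neighbors[:, 0])  # hypothetical hidden labels f_ij
w_i, b_i = fit_local_classifier(x_i, neighbors, f_neighbors, gamma_A=0.25)
print(w_i, b_i, g(np.array([0.3, -0.1]), x_i, w_i, b_i))
```

In the transductive setting the hidden labels would come from the solved label vector rather than being fixed by hand; the same per-neighborhood solve is what the induction procedure described later applies to every region.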

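Substituting Eq.(5) back into $J_{li}$, the total loss we want to minimize becomes

$$\hat{J}_l = \sum_i J_{li} = \sum_i \tilde{\mathbf{f}}_i^T \tilde{G}_i^T \tilde{G}_i \tilde{\mathbf{f}}_i, \tag{6}$$

where $\tilde{G}_i = I - G_i (G_i^T G_i)^{-1} G_i^T$. Since $\tilde{G}_i$ is symmetric and idempotent (it projects onto the orthogonal complement of the column space of $G_i$), $\tilde{G}_i^T \tilde{G}_i = \tilde{G}_i$. If we partition $\tilde{G}_i$ into four blocks as

$$\tilde{G}_i = \begin{bmatrix} A_i^{n_i \times n_i} & B_i^{n_i \times d} \\ C_i^{d \times n_i} & D_i^{d \times d} \end{bmatrix}$$

and let $\mathbf{f}_i = [f_{i1}, f_{i2}, \cdots, f_{in_i}]^T$, then

$$\tilde{\mathbf{f}}_i^T \tilde{G}_i \tilde{\mathbf{f}}_i = \begin{bmatrix} \mathbf{f}_i^T & \mathbf{0}^T \end{bmatrix} \begin{bmatrix} A_i & B_i \\ C_i & D_i \end{bmatrix} \begin{bmatrix} \mathbf{f}_i \\ \mathbf{0} \end{bmatrix} = \mathbf{f}_i^T A_i \mathbf{f}_i.$$

Thus

$$\hat{J}_l = \sum_i \mathbf{f}_i^T A_i \mathbf{f}_i. \tag{7}$$

Furthermore, we have the following theorem.

Theorem 1. Let $X_i = [x_{i1} - x_i, \cdots, x_{in_i} - x_i] \in \mathbb{R}^{d \times n_i}$. Then

$$A_i = I_{n_i} - X_i^T H_i^{-1} X_i - \frac{X_i^T H_i^{-1} X_i \mathbf{1}\mathbf{1}^T X_i^T H_i^{-1} X_i}{n_i - c} + \frac{X_i^T H_i^{-1} X_i \mathbf{1}\mathbf{1}^T}{n_i - c} + \frac{\mathbf{1}\mathbf{1}^T X_i^T H_i^{-1} X_i}{n_i - c} - \frac{\mathbf{1}\mathbf{1}^T}{n_i - c},$$

where $H_i = X_i X_i^T + \gamma_A I_d$, $c = \mathbf{1}^T X_i^T H_i^{-1} X_i \mathbf{1}$, $\mathbf{1} \in \mathbb{R}^{n_i \times 1}$ is an all-one vector, and $A_i \mathbf{1} = \mathbf{0}$.

Equivalently, with $Q_i = I_{n_i} - X_i^T H_i^{-1} X_i$, we have $A_i = Q_i - Q_i \mathbf{1}\mathbf{1}^T Q_i / (n_i - c)$; since $\mathbf{1}^T Q_i \mathbf{1} = n_i - c$, this form makes $A_i \mathbf{1} = \mathbf{0}$ immediate.

Proof. See the supplemental material.

Then we can define the label vector $\mathbf{f} = [f_1, f_2, \cdots, f_n]^T \in \mathbb{R}^{n \times 1}$, the concatenated label vector $\hat{\mathbf{f}} = [\mathbf{f}_1^T, \mathbf{f}_2^T, \cdots, \mathbf{f}_n^T]^T$ and the concatenated block-diagonal matrix

$$\hat{G} = \begin{bmatrix} A_1 & 0 & \cdots & 0 \\ 0 & A_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & A_n \end{bmatrix},$$

which is of size $\sum_i n_i \times \sum_i n_i$. Then from Eq.(7) we can derive that $\hat{J}_l = \hat{\mathbf{f}}^T \hat{G} \hat{\mathbf{f}}$. Define the selection matrix $S \in \{0, 1\}^{\sum_i n_i \times n}$, a 0-1 matrix with exactly one 1 in each row, such that $\hat{\mathbf{f}} = S\mathbf{f}$. Then $\hat{J}_l = \mathbf{f}^T S^T \hat{G} S \mathbf{f}$. Let

$$M = S^T \hat{G} S \in \mathbb{R}^{n \times n}, \tag{8}$$

which is a square matrix; then we can rewrite $\hat{J}_l$ as

$$\hat{J}_l = \mathbf{f}^T M \mathbf{f}. \tag{9}$$

¹ Since there are only a few data points in each neighborhood, the structural penalty term $\|w_i\|$ will pull the weight vector $w_i$ toward some arbitrary origin. For isotropy reasons, we translate the origin of the input space to the neighborhood medoid $x_i$ by subtracting $x_i$ from the training points $x_j \in \mathcal{R}_i$.

SSL with Local & Global Regularizations

As stated in section 3.1, we also need to apply a global smoother to smooth the predicted hidden labels $\{f_i\}$. Here we apply the same smoothness regularizer as in Eq.(2); the predicted labels can then be obtained by minimizing

$$J = \sum_{i=1}^{l} (y_i - f_i)^2 + \lambda \mathbf{f}^T M \mathbf{f} + \frac{\gamma_I}{n^2} \mathbf{f}^T L \mathbf{f}. \tag{10}$$

Writing the first term as $(\mathbf{f} - \mathbf{y})^T J (\mathbf{f} - \mathbf{y})$, with $J$ and $\mathbf{y}$ defined below, and using the symmetry of $M$ and $L$, setting $\partial J / \partial \mathbf{f} = 2J(\mathbf{f} - \mathbf{y}) + 2\lambda M \mathbf{f} + \frac{2\gamma_I}{n^2} L \mathbf{f} = 0$ gives

$$\mathbf{f} = \Big(J + \lambda M + \frac{\gamma_I}{n^2} L\Big)^{-1} J \mathbf{y}, \tag{11}$$

where $J \in \mathbb{R}^{n \times n}$ is a diagonal matrix with its $(i,i)$-th entry

$$J(i,i) = \begin{cases} 1, & \text{if } x_i \text{ is labeled;} \\ 0, & \text{otherwise,} \end{cases} \tag{12}$$

and $\mathbf{y}$ is an $n \times 1$ column vector with its $i$-th entry

$$\mathbf{y}(i) = \begin{cases} y_i, & \text{if } x_i \text{ is labeled;} \\ 0, & \text{otherwise.} \end{cases}$$

Induction

To predict the label of an unseen test point, i.e., one that has not appeared in $\mathcal{X}$, we propose a three-step approach:

Step 1. Solve for the optimal label vector $\mathbf{f}^*$ using LGReg.

Step 2. Solve for the parameters $\{w_i^*, b_i^*\}$ of the optimal local classification functions using Eq.(5).

Step 3. For a new test point $x$, first identify the local regions that $x$ falls in (e.g., by computing the Euclidean distance between $x$ and the region medoids and selecting the nearest one), then apply the local prediction functions of the corresponding regions to predict its label.

Discussions

In this section, we discuss the relationships between the proposed framework and some existing related approaches, and present a mixed-regularization viewpoint on the algorithm presented above.

Relationship with Related Approaches

There have already been several semi-supervised learning algorithms based on different regularizations. In this subsection, we discuss the relationships between our algorithm and those existing approaches.

Relationship with Gaussian-Laplacian Regularized Approaches. Most traditional graph-based SSL algorithms (e.g., (Belkin et al., 2004; Zhou et al., 2004; Zhu et al., 2003)) are based on the following framework

$$\mathbf{f} = \arg\min_{\mathbf{f}} \sum_{i=1}^{l} (f_i - y_i)^2 + \zeta \mathbf{f}^T L \mathbf{f}, \tag{13}$$

where $\mathbf{f} = [f_1, f_2, \cdots, f_l, \cdots, f_n]^T$ and $L$ is the graph Laplacian constructed by Gaussian functions. Clearly, the above framework is just a special case of our algorithm if we set $\lambda = 0$ and $\gamma_I = n^2 \zeta$ in Eq.(10).

Relationship with Local Learning Regularized Approaches. Recently, Wu & Schölkopf (Wu & Schölkopf, 2007) proposed a novel transduction method based on local learning, which aims to solve the following optimization problem

$$\mathbf{f} = \arg\min_{\mathbf{f}} \sum_{i=1}^{l} (f_i - y_i)^2 + \zeta \sum_{i=1}^{n} \|f_i - o_i\|^2, \tag{14}$$

where $o_i$ is the label of $x_i$ predicted by the local classifier constructed on the neighborhood of $x_i$, and the parameters of the local classifier can be represented by $\mathbf{f}$ via minimizing some local structural loss functions as in Eq.(5).

This approach can be understood as a two-step approach for optimizing Eq.(10) with $\gamma_I = 0$: in the first step, it optimizes the classifier parameters by minimizing the local structural loss (Eq.(4)); in the second step, it minimizes the prediction loss of each data point by the local classifier constructed on its neighborhood.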
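The full transductive procedure of Eq.(8)-(12), together with the reduction to Eq.(13) discussed above, can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code: all function names are hypothetical; $\mathcal{R}_i$ is taken to be the $k$ points closest to $x_i$ (including $x_i$ itself); $A_i$ is computed from the compact form $A_i = Q_i - Q_i\mathbf{1}\mathbf{1}^T Q_i/(n_i - c)$ with $Q_i = I_{n_i} - X_i^T H_i^{-1} X_i$; $M$ is accumulated block by block, which equals $S^T \hat{G} S$ without forming $S$; and the Gaussian graph is fully connected with a hand-picked width `sigma` rather than the width selection of (Zhu et al., 2003).

```python
import numpy as np

def sq_dists(X):
    """Pairwise squared Euclidean distances between the rows of X."""
    return ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)

def local_A(X_nbrs, x_i, gamma_A):
    """A_i via A_i = Q_i - Q_i 1 1^T Q_i / (n_i - c), where X_i holds the
    centered neighbors as columns (d x n_i)."""
    X = (X_nbrs - x_i).T
    d, n_i = X.shape
    H = X @ X.T + gamma_A * np.eye(d)              # H_i = X_i X_i^T + gamma_A I_d
    Q = np.eye(n_i) - X.T @ np.linalg.solve(H, X)  # Q_i = I - X_i^T H_i^{-1} X_i
    Q1 = Q @ np.ones(n_i)
    return Q - np.outer(Q1, Q1) / Q1.sum()         # Q1.sum() = 1^T Q_i 1 = n_i - c

def build_M(X, k, gamma_A):
    """Eq.(8): M = S^T G^ S, accumulated block by block instead of forming S."""
    n = X.shape[0]
    D2 = sq_dists(X)
    M = np.zeros((n, n))
    for i in range(n):
        idx = np.argsort(D2[i])[:k]                # R_i: the k closest points, x_i included
        M[np.ix_(idx, idx)] += local_A(X[idx], X[i], gamma_A)
    return M

def gaussian_laplacian(X, sigma):
    """L = D - W on a fully connected graph with Gaussian edge weights."""
    W = np.exp(-sq_dists(X) / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    return np.diag(W.sum(axis=1)) - W

def lgreg(X, y, labeled, k=5, gamma_A=0.25, lam=0.5, gamma_I=None, sigma=1.0):
    """Eq.(11): f = (J + lam M + gamma_I / n^2 L)^{-1} J y."""
    n = X.shape[0]
    if gamma_I is None:
        gamma_I = (1.0 - lam) * n ** 2             # mirrors lam + gamma_I / n^2 = 1
    J = np.diag(labeled.astype(float))             # Eq.(12)
    y0 = np.where(labeled, y, 0.0)                 # zero-padded label vector y
    system = J + lam * build_M(X, k, gamma_A) + (gamma_I / n ** 2) * gaussian_laplacian(X, sigma)
    return np.linalg.solve(system, J @ y0)

# Toy problem (illustrative only): two Gaussian blobs, two labeled points per blob.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2.0, 0.6, size=(20, 2)), rng.normal(2.0, 0.6, size=(20, 2))])
y = np.concatenate([-np.ones(20), np.ones(20)])
labeled = np.zeros(40, dtype=bool)
labeled[[0, 1, 20, 21]] = True

f = lgreg(X, y, labeled)
print("unlabeled accuracy:", np.mean(np.sign(f[~labeled]) == y[~labeled]))
```

With `lam=0` and `gamma_I=zeta * n**2`, `lgreg` returns $(J + \zeta L)^{-1} J \mathbf{y}$, the minimizer of Eq.(13), which is one concrete way to see the reduction claimed above. Because every $A_i$ annihilates $\mathbf{1}$, the assembled $M$ also satisfies $M\mathbf{1} = \mathbf{0}$, the property used in the mixed-regularization viewpoint.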

Table 1: Descriptions of the datasets

Datasets

Sizes Classes Dimensions

g241c

1500

2

241

g241n

1500

2

241

USPS

1500

2

241

COIL

1500

6

241

digit1

1500

2

241

cornell

827

7

4134

texas

814

7

4029

wisconsin

1166

7

4189

washington 1210

7

4165

BCI

400

2

117

diabetes

768

2

8

ionosphere

351

2

34

A Mixed-Regularization Viewpoint

In section 3.3 we have stated that our algorithm aims to minimize

J =

l

X

i=1

(yi − fi )2 + λf T Mf +

γI T

f Lf

n2

(15)

where M is defined in Eq.(8) and L is the conventional

graph Laplacian constructed by Gaussian functions. It is

easy to prove that M has the following property.

Theorem 2. M1 = 0, where 1 ∈ Rn×1 is a column vector

with all its elements equaling to 1.

Proof. From the definition of M (Eq.(8)), we have

M1 = ST ĜS1 = ST Ĝ1 = 0,

Methods & Parameter Settings

Besides our method, we also implement some other competing methods for experimental comparison. For all the methods, their hyperparameters were set by 5-fold cross validation from some grids introduced in the following.

• Local and Global Regularization (LGReg). In the

implementation the neighborhood size is searched

from {5, 10, 50}, γA and λ are all searched from

{4−3 , 4−2 , 4−1 , 1, 41 , 42 , 43 } and we set λ + nγI2 = 1, the

width of the Gaussian similarity when constructing the

graph is set by the method in (Zhu et al., 2003).

• Local Learning Regularization (LLReg).

The implementation of this algorithm is the same as in

(Wu & Schölkopf, 2007), in which we also adopt

the mutual neighborhood with its size search from

{5, 10, 50}. The regularization parameter of the local classifier and the tradeoff parameter between the

loss and local regularization term are searched from

{4−3 , 4−2 , 4−1 , 1, 41 , 42 , 43 }.

• Laplacian Regularized Least Squares (LapRLS).

The implementation code is downloaded from

http://manifold.cs.uchicago.edu/

manifold_regularization/software.html,,

in which the width of the Gaussian similarity is also set

by the method in (Zhu et al., 2003), and the extrinsic

and intrinsic regularization parameters are searched from

{4−3 , 4−2 , 4−1 , 1, 41 , 42 , 43 }. We adopt the linear kernel

since our algorithm is locally linear.

• Learning with Local and Global Consistency (LLGC).

The implementation of the algorithm is the same as in

(Zhou et al., 2004), in which the width of the Gaussian similarity is also by the method in (Zhu et al.,

2003), and the regularization parameter is searched from

{4−3 , 4−2 , 4−1 , 1, 41 , 42 , 43 }.

• Gaussian Random Fields (GRF). The implementation of

the algorithm is the same as in (Zhu et al., 2003).

• Support Vector Machine (SVM). We use libSVM (Fan

et al., 2005) to implement the SVM algorithm with a

linear kernel, and the cost parameter is searched from

{10−4, 10−3 , 10−2 , 10−1 , 1, 101 , 102 , 103 , 104 }.

Therefore, M can also be viewed as a Laplacian matrix.

That is, the last two terms of Rl can all be viewed as regularization terms with different Laplacians, one is derived

from local learning, the other is derived from the heat kernel. Hence our algorithm can also be understood from a

mixed regularization viewpoint (Chapelle et al., 2006a)(Zhu

& Goldberg, 2007). Just like the multiview learning algorithm, which trains the same type of classifier using different

data features, our method trains different classifiers using the

same data features. Different types of Laplacians may better reveal different (maybe complementary) information and

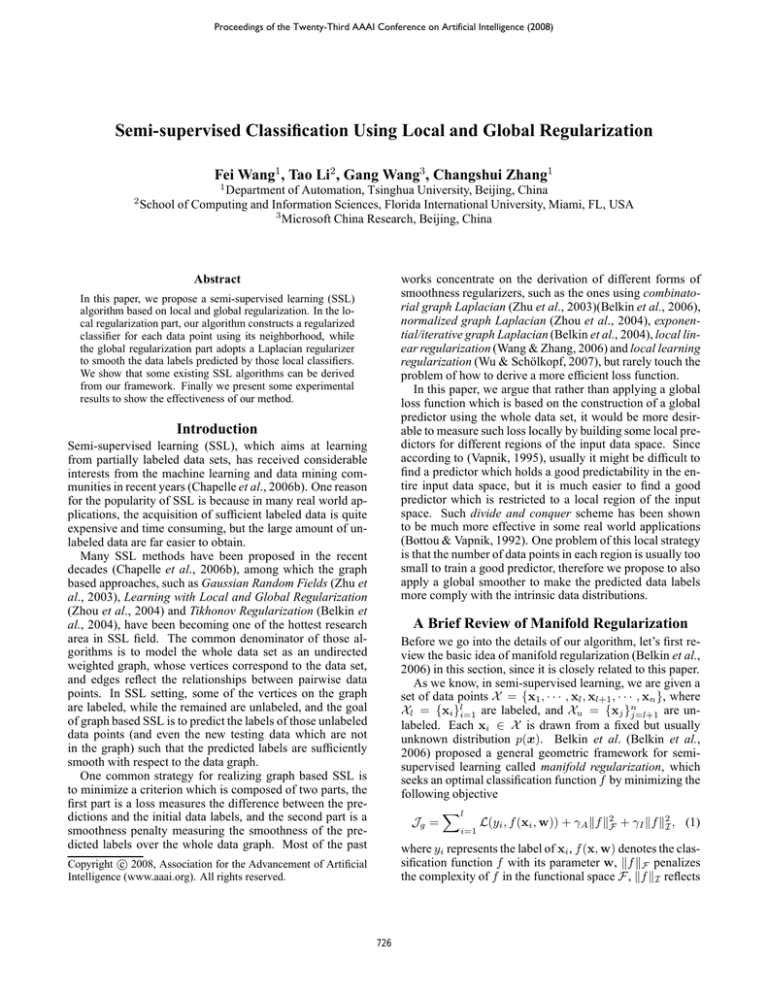

thus provide a more powerful classifier.

Experiments

In this section, we present a set of experiments to show the

effectiveness of our method. First let’s describe the basic

information of the data sets.

The Data Sets

We adopt 12 data sets in our experiments, including 2 artificial data sets g241c and g241n, three image data sets USPS,

COIL, digit1, one BCI data set2 , four text data sets cornell, texas, wisconsin and washington from the WebKB

data set3 , and two UCI data sets diabetes and ionosphere4 .

Table 1 summarizes the characteristics of the datasets.

2

All these former 6 data sets can be downloaded from

http://www.kyb.tuebingen.mpg.de/ssl-book/

benchmarks.html.

3

http://www.cs.cmu.edu/˜WebKB/.

4

http://www.ics.uci.edu/mlearn/

MLRepository.html.

[Figure 1: Experimental results of different algorithms. Panels: (a) g241c, (b) g241n, (c) USPS, (d) COIL, (e) digit1, (f) cornell, (g) texas, (h) wisconsin, (i) washington, (j) BCI, (k) diabetes, (l) ionosphere. Each panel plots the average classification accuracy (y-axis) of LGReg, LLReg, LapRLS, LLGC, GRF and SVM against the percentage of randomly labeled points, from 10 to 50 (x-axis).]

Experimental Results

The experimental results are shown in Figure 1. In all the figures, the x-axis represents the percentage of randomly labeled points, and the y-axis is the average classification accuracy over 50 independent runs. From the figures we can observe that:

• The LapRLS algorithm works very well on the toy and text data sets, but not very well on the image and UCI data sets.
• The LLGC and GRF algorithms work well on the image data sets, but not very well on the other data sets.
• The LLReg algorithm works well on the image and text data sets, but not very well on the BCI and toy data sets.
• SVM works well when the data sets are not well structured, e.g., the toy, UCI and BCI data sets.
• LGReg works very well on almost all the data sets, except for the toy data sets.

To better illustrate the experimental results, we also provide the numerical results of these algorithms on all the data sets with 10% of the points randomly labeled. The values in Table 2 are the mean classification accuracies and standard deviations over 50 independent runs, from which we can also see the advantage of the LGReg algorithm: it achieves the highest mean accuracy on six of the twelve data sets (USPS, cornell, texas, washington, BCI and ionosphere) and ties with SVM on diabetes.

Table 2: Experimental results with 10% of the data points randomly labeled (mean classification accuracy in % ± standard deviation over 50 independent runs)

Dataset      SVM             GRF             LLGC            LLReg           LapRLS          LGReg
g241c        75.46 ± 1.1383  56.34 ± 2.1665  77.13 ± 2.5871  65.31 ± 2.1220  80.44 ± 1.0746  72.29 ± 0.1347
g241n        75.10 ± 1.7155  55.06 ± 1.9519  49.75 ± 0.2570  73.25 ± 0.2466  76.89 ± 1.1350  73.20 ± 0.5983
USPS         88.23 ± 1.1087  94.87 ± 1.7490  96.19 ± 0.7588  95.79 ± 0.6804  88.80 ± 1.0087  99.21 ± 1.1290
COIL         78.95 ± 1.9936  91.23 ± 1.8321  92.04 ± 1.9170  86.86 ± 2.2190  73.35 ± 1.8921  89.61 ± 1.2197
digit1       92.08 ± 1.4818  96.95 ± 0.9601  95.49 ± 0.5638  97.64 ± 0.6636  92.79 ± 1.0960  97.10 ± 1.0982
cornell      70.62 ± 0.4807  71.43 ± 0.8564  76.30 ± 2.5865  79.46 ± 1.6336  80.59 ± 1.6665  81.39 ± 0.8968
texas        69.60 ± 0.5612  70.03 ± 0.8371  75.93 ± 3.6708  79.44 ± 1.7638  78.15 ± 1.5667  80.75 ± 1.2513
wisconsin    74.10 ± 0.3988  74.65 ± 0.4979  80.57 ± 1.9062  83.62 ± 1.5191  84.21 ± 0.9656  84.05 ± 0.5421
washington   69.45 ± 0.4603  78.26 ± 0.4053  80.23 ± 1.3997  86.37 ± 1.5516  86.58 ± 1.4985  88.01 ± 1.1369
BCI          59.77 ± 4.1279  50.49 ± 1.9392  53.07 ± 2.9037  51.56 ± 2.8277  61.84 ± 2.8177  65.31 ± 2.5354
diabetes     72.36 ± 1.5924  70.69 ± 2.6321  67.15 ± 1.9766  68.38 ± 2.1772  64.95 ± 1.1024  72.36 ± 1.3223
ionosphere   75.25 ± 1.2622  70.21 ± 2.2778  67.31 ± 2.6155  68.15 ± 2.3018  65.17 ± 0.6628  84.05 ± 0.5421

Conclusions

In this paper we proposed a general learning framework based on local and global regularization. We showed that many existing learning algorithms can be derived from our framework. Finally, experiments were conducted to demonstrate the effectiveness of our method.

References

Belkin, M., Matveeva, I., and Niyogi, P. (2004). Regularization and Semi-supervised Learning on Large Graphs. In COLT 17.

Belkin, M., and Niyogi, P. (2005). Towards a Theoretical Foundation for Laplacian-Based Manifold Methods. In COLT 18.

Belkin, M., Niyogi, P., and Sindhwani, V. (2006). Manifold Regularization: A Geometric Framework for Learning from Labeled and Unlabeled Examples. Journal of Machine Learning Research 7(Nov): 2399-2434.

Bottou, L., and Vapnik, V. (1992). Local Learning Algorithms. Neural Computation 4: 888-900.

Chapelle, O., Chi, M., and Zien, A. (2006a). A Continuation Method for Semi-Supervised SVMs. In ICML 23, 185-192.

Chapelle, O., Schölkopf, B., and Zien, A. (2006b). Semi-Supervised Learning. 508, MIT Press, Cambridge, USA.

Fan, R.-E., Chen, P.-H., and Lin, C.-J. (2005). Working Set Selection Using Second Order Information for Training SVM. Journal of Machine Learning Research 6.

Golub, G. H., and Van Loan, C. F. (1983). Matrix Computations. Johns Hopkins University Press, Baltimore.

Hein, M., Audibert, J.-Y., and von Luxburg, U. (2005). From Graphs to Manifolds: Weak and Strong Pointwise Consistency of Graph Laplacians. In COLT 18, 470-485.

Lal, T. N., Schröder, M., Hinterberger, T., Weston, J., Bogdan, M., Birbaumer, N., and Schölkopf, B. (2004). Support Vector Channel Selection in BCI. IEEE TBE 51(6).

Schölkopf, B., and Smola, A. (2002). Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. The MIT Press, Cambridge, MA.

Smola, A. J., Bartlett, P. L., Schölkopf, B., and Schuurmans, D. (2000). Advances in Large Margin Classifiers. The MIT Press.

Vapnik, V. N. (1995). The Nature of Statistical Learning Theory. Berlin: Springer-Verlag.

Wang, F., and Zhang, C. (2006). Label Propagation Through Linear Neighborhoods. In ICML 23.

Wu, M., and Schölkopf, B. (2007). Transductive Classification via Local Learning Regularization. In AISTATS 11.

Zhou, D., Bousquet, O., Lal, T. N., Weston, J., and Schölkopf, B. (2004). Learning with Local and Global Consistency. In NIPS 16.

Zhu, X., Ghahramani, Z., and Lafferty, J. (2003). Semi-Supervised Learning Using Gaussian Fields and Harmonic Functions. In ICML 20.

Zhu, X., and Goldberg, A. (2007). Kernel Regression with Order Preferences. In AAAI.