Error Bounds for Approximate Value Iteration

Rémi Munos

Centre de Mathématiques Appliquées,

Ecole Polytechnique, 91128 Palaiseau Cedex, France.

remi.munos@polytechnique.fr

Abstract

Approximate Value Iteration (AVI) is a method for solving a Markov Decision Problem by making successive calls to a supervised learning (SL) algorithm. A sequence of value representations Vn is processed iteratively by Vn+1 = AT Vn, where T is the Bellman operator and A an approximation operator. Bounds on the error between the performance of the policies induced by the algorithm and the optimal policy are given as a function of weighted Lp-norms (p ≥ 1) of the approximation errors. The results extend the usual analysis in L∞-norm, and make it possible to relate the performance of AVI to the approximation power (usually expressed in Lp-norm, for p = 1 or 2) of the SL algorithm. We illustrate the tightness of these bounds on an optimal replacement problem.

Introduction

We study the resolution of Markov Decision Processes

(MDPs) (Puterman 1994) using approximate value function representations Vn . The Approximate Value Iteration

(AVI) algorithm is defined by the iteration

Vn+1 = AT Vn ,

(1)

where T is the Bellman operator and A an approximation operator or a supervised learning (SL) algorithm. AVI

is very popular and has been successfully implemented

in many different settings in Dynamic Programming (DP)

(Bertsekas & Tsitsiklis 1996) and Reinforcement Learning

(RL) (Sutton & Barto 1998).

A simple version is: at stage n, select a sample of states $(x_k)_{k=1 \dots K}$ from some distribution µ, compute the backed-up values $v_k := T V_n(x_k)$, then make a call to a SL algorithm. This returns a function $V_{n+1}$ minimizing some average empirical loss

$$V_{n+1} = \arg\min_f \frac{1}{K} \sum_{k} l\big(f(x_k) - v_k\big).$$

Most SL algorithms use squared (L2 ) or absolute (L1 )

loss functions (or variants) thus perform a minimization

problem in weighted L1 or L2 , in which the weights are defined by µ. It is therefore crucial to estimate the performance

of AVI as a function of the weighted Lp - norms (p ≥ 1) used

by the SL algorithm. The goal of this paper is to extend usual

results in L∞ -norm to similar results in weighted Lp -norms.

The performance achieved by such a resolution of the MDP may then be directly related to the approximation power of the SL algorithm.

Copyright © 2005, American Association for Artificial Intelligence (www.aaai.org). All rights reserved.

Alternative results in approximate DP with weighted

norms include Linear Programming (de Farias & Roy 2003)

and Policy Iteration (Munos 2003).

Let X be the state space assumed to be finite with N states

(although the results given in this paper extend easily to continuous spaces) and A a finite action space. Let p(x, a, y) be

the probability that the next state is y given that the current

state is x and the action is a. Let r(x, a, y) be the reward

received when a transition (x, a) → y occurs.

A policy π is a mapping from X to A. We write $P^\pi$ the N × N matrix with elements $P^\pi(x, y) := p(x, \pi(x), y)$ and $r^\pi$ the vector with components $r^\pi(x) := \sum_y p(x, \pi(x), y)\, r(x, \pi(x), y)$.

For a policy π, we define the value function $V^\pi$ which, in the discounted and infinite horizon setting studied here, is the expected discounted sum of future rewards

$$V^\pi(x) := E\Big[\sum_{t=0}^{\infty} \gamma^t r(x_t, a_t, x_{t+1}) \,\Big|\, x_0 = x,\ a_t = \pi(x_t)\Big],$$

where γ ∈ [0, 1) is a discount factor. $V^\pi$ is the fixed point of the operator $T^\pi : \mathbb{R}^N \to \mathbb{R}^N$ defined, for any vector $W \in \mathbb{R}^N$, by $T^\pi W := r^\pi + \gamma P^\pi W$.

The optimal value function $V^* := \sup_\pi V^\pi$ is the fixed point of the Bellman operator T defined, for any $W \in \mathbb{R}^N$, by

$$T W(x) = \max_{a \in A} \sum_{y} p(x, a, y)\,[r(x, a, y) + \gamma W(y)].$$

We say that a policy π is greedy with respect to $W \in \mathbb{R}^N$ if, for all x ∈ X,

$$\pi(x) \in \arg\max_{a \in A} \sum_{y} p(x, a, y)\,[r(x, a, y) + \gamma W(y)].$$

An optimal policy π ∗ is a policy greedy w.r.t. V ∗ .

An exact resolution method for computing V ∗ is the Value

Iteration algorithm defined by the iteration Vn+1 = T Vn .

Due to the contraction property in L∞ −norm of the operator T , the iterates Vn converge to V ∗ as n → ∞. However,

problems with a large number of states prevent us from using such exact resolution methods; we need to represent the

AAAI-05 / 1006

functions with a moderate number of coefficients and perform approximate iterations such as (1).
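As a concrete reference point for the exact method, here is a minimal Python sketch of the Bellman operator T, a greedy policy, and Value Iteration for a finite MDP stored as nested lists `p[x][a][y]` and `r[x][a][y]`. The two-state MDP, the function names, and the stopping tolerance are illustrative assumptions, not taken from the paper.

```python
from typing import List

Tensor3 = List[List[List[float]]]  # indexed [x][a][y]


def q_value(p: Tensor3, r: Tensor3, gamma: float, w: List[float], x: int, a: int) -> float:
    """sum_y p(x,a,y) [r(x,a,y) + gamma w(y)]."""
    return sum(p[x][a][y] * (r[x][a][y] + gamma * w[y]) for y in range(len(w)))


def bellman(p: Tensor3, r: Tensor3, gamma: float, w: List[float]) -> List[float]:
    """Apply the Bellman operator T to the vector w."""
    return [max(q_value(p, r, gamma, w, x, a) for a in range(len(p[x])))
            for x in range(len(w))]


def greedy(p: Tensor3, r: Tensor3, gamma: float, w: List[float]) -> List[int]:
    """Return a policy that is greedy with respect to w."""
    return [max(range(len(p[x])), key=lambda a: q_value(p, r, gamma, w, x, a))
            for x in range(len(w))]


def value_iteration(p: Tensor3, r: Tensor3, gamma: float, tol: float = 1e-10) -> List[float]:
    """Iterate V <- T V until successive iterates differ by less than tol in sup-norm."""
    v = [0.0] * len(p)
    while True:
        tv = bellman(p, r, gamma, v)
        if max(abs(s - t) for s, t in zip(tv, v)) < tol:
            return tv
        v = tv


# Hypothetical two-state MDP: action 0 leads to state 0, action 1 leads to state 1,
# and a reward of 1 is received whenever the next state is state 1.
P = [[[1.0, 0.0], [0.0, 1.0]],
     [[1.0, 0.0], [0.0, 1.0]]]
R = [[[0.0, 0.0], [0.0, 1.0]],
     [[0.0, 0.0], [0.0, 1.0]]]
V_STAR = value_iteration(P, R, gamma=0.5)
PI_STAR = greedy(P, R, 0.5, V_STAR)
```

In this toy problem the optimal policy always picks action 1 and collects a reward of 1 at every step, so V* equals 1/(1 − γ) = 2 in both states. The loop terminates because T is a γ-contraction in L∞-norm.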

The paper is organized as follows. We first recall some approximation results in L∞-norm, then give componentwise bounds and use them to derive error bounds in Lp-norms. Finally we detail some practical implementations and provide a numerical experiment for an optimal replacement problem. The main result of this paper is Theorem 1. All proofs are detailed in the Appendix.

We recall the definition of the norms: let $u \in \mathbb{R}^N$. Its L∞-norm is $\|u\|_\infty := \sup_x |u(x)|$. Let µ be a distribution on X. The weighted Lp-(semi)norm (denoted by Lp,µ) is

$$\|u\|_{p,\mu} := \Big[\sum_x \mu(x)\,|u(x)|^p\Big]^{1/p}.$$

Let us denote by $\|\cdot\|_p$ the unweighted Lp-norm (i.e. when µ is uniform).
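The norms just defined can be computed directly. The short Python sketch below uses hypothetical function names and an arbitrary example vector. It also checks numerically the inequality ||u||∞ ≤ N^{1/p} ||u||p for uniform µ, which is used later when discussing the constant C.

```python
from typing import Sequence


def weighted_lp_norm(u: Sequence[float], mu: Sequence[float], p: float) -> float:
    """Weighted L_{p,mu} (semi)norm: (sum_x mu(x) |u(x)|^p)^(1/p)."""
    return sum(m * abs(v) ** p for v, m in zip(u, mu)) ** (1.0 / p)


def sup_norm(u: Sequence[float]) -> float:
    """L-infinity norm: max_x |u(x)|."""
    return max(abs(v) for v in u)


# Arbitrary example vector with a uniform distribution mu over N = 4 states.
N = 4
U = [3.0, -1.0, 0.0, 2.0]
MU = [1.0 / N] * N

L1 = weighted_lp_norm(U, MU, 1)   # (3 + 1 + 0 + 2) / 4
L2 = weighted_lp_norm(U, MU, 2)   # sqrt((9 + 1 + 0 + 4) / 4)
```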

Approximation results in L∞ -norm

Consider the AVI algorithm defined by (1) and write $\varepsilon_n = T V_n - V_{n+1}$ the approximation error at stage n. In general, this algorithm does not converge, but its asymptotic behavior may be analyzed. If the approximation errors are uniformly bounded, $\|\varepsilon_n\|_\infty \le \varepsilon$, then a bound on the error between the asymptotic performance of the policies πn greedy w.r.t. Vn and the optimal policy is (Bertsekas & Tsitsiklis 1996):

$$\limsup_{n \to \infty} \|V^* - V^{\pi_n}\|_\infty \le \frac{2\gamma}{(1 - \gamma)^2}\, \varepsilon. \tag{2}$$

This L∞-bound requires a uniformly low approximation error over all states, which is difficult to guarantee in practice, especially for large-scale problems. Most function approximation methods (exceptions include (Gordon 1995; Guestrin, Koller, & Parr 2001)), such as those described in the next section, solve a minimization problem in weighted L1 or L2 norm.

Approximation operators

A supervised learning algorithm A returns a good fit g (within a given class of functions F) of the data $(x_k, v_k) \in X \times \mathbb{R}$, $k = 1 \dots K$, with the $x_k$ being sampled from some distribution µ and the values $v_k$ being unbiased estimates of some function $f(x_k)$, by minimizing an average empirical loss $\frac{1}{K} \sum_{k=1}^{K} l(v_k - g(x_k))$ using mainly L1 or L2 loss functions (or variants). If the values are not perturbed (i.e. $v_k = f(x_k)$), A may be considered as an approximation operator that returns a compact representation g ∈ F of a general function f by minimizing some L1,µ or L2,µ-norm. Approximation theory studies the approximation error as a function of the smoothness of f (DeVore 1997).

The projection onto the span of a fixed family of functions (called features) is called linear approximation and includes Splines, Radial Basis, Fourier or Wavelet decomposition. A better approximation is reached when choosing the features according to f (i.e. feature selection). This nonlinear approximation is particularly efficient when f has piecewise regularities (e.g. in adaptive wavelet basis (Mallat 1997) such functions are compactly represented with few non-zero coefficients). Greedy algorithms for selecting the best features among a given dictionary of functions include the Matching Pursuit and variants (Davies, Mallat, & Avellaneda 1997).

In Statistical Learning (Hastie, Tibshirani, & Friedman 2001), other SL algorithms include Neural Networks, Locally Weighted Learning and Kernel Regression (Atkeson, Schaal, & Moore 1997), Support Vectors and Reproducing Kernels (Vapnik, Golowich, & Smola 1997).

We call A an ε-approximation operator if A returns an ε-approximation g of f: ||f − g|| ≤ ε.

Componentwise error bounds

Here we provide componentwise bounds that will be used in the next section. We consider the AVI algorithm defined by (1) and write $\varepsilon_n = T V_n - V_{n+1}$ the approximation error at stage n. A componentwise bound on the error between the asymptotic performance of the policies πn greedy w.r.t. Vn and the optimal policy is given now (and proved in the Appendix).

Lemma 1. We have

$$\limsup_{n \to \infty} V^* - V^{\pi_n} \le \limsup_{n \to \infty}\, (I - \gamma P^{\pi_n})^{-1} \sum_{k=0}^{n-1} \gamma^{n-k} \Big[ (P^{\pi^*})^{n-k} + P^{\pi_n} P^{\pi_{n-1}} \dots P^{\pi_{k+1}} \Big] |\varepsilon_k|, \tag{3}$$

where $|\varepsilon_k|$ is the vector whose components are the absolute values of $\varepsilon_k$.

Approximation results in Lp-norms

We use the componentwise result of the previous section to extend the error bound (2) in Lp-norm, under one of the two following assumptions.

Let µ be a distribution over X.

Assumption A1 [Smooth transition probabilities]. There exists a constant C > 0 such that, for all states x, y ∈ X and all policies π,

$$P^\pi(x, y) \le C \mu(y).$$

Assumption A2 [Smooth future state distribution]. There exists a distribution ρ and coefficients c(m) such that for all m ≥ 1 and all policies π1, π2, . . . , πm,

$$\rho P^{\pi_1} P^{\pi_2} \dots P^{\pi_m} \le c(m)\, \mu. \tag{4}$$

Then we define the smoothness constant C of the discounted future state distribution

$$C := (1 - \gamma)^2 \sum_{m \ge 1} m \gamma^{m-1} c(m).$$
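To get a feeling for this definition, the following Python sketch evaluates C numerically from a given coefficient function c(m) by truncating the series. The function name and the truncation length are assumptions made for illustration. Since $\sum_{m \ge 1} m \gamma^{m-1} = 1/(1-\gamma)^2$, a constant c(m) = c gives C = c.

```python
from typing import Callable


def smoothness_constant(c: Callable[[int], float], gamma: float, terms: int = 10_000) -> float:
    """Truncated evaluation of C = (1 - gamma)^2 * sum_{m>=1} m gamma^(m-1) c(m)."""
    return (1.0 - gamma) ** 2 * sum(
        m * gamma ** (m - 1) * c(m) for m in range(1, terms + 1)
    )


# Constant coefficients c(m) = 1 give C = 1; c(m) = N gives C = N (here N = 50).
C_UNIFORM = smoothness_constant(lambda m: 1.0, gamma=0.9)
C_WORST = smoothness_constant(lambda m: 50.0, gamma=0.9)
```

The truncation is harmless here because the neglected tail decays geometrically; for γ very close to 1 more terms would be needed.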

Assumption A1 was introduced in (Munos 2003) for deriving performance bounds in policy iteration algorithms.

We notice that A1 is stronger than A2 since when A1 holds,

A2 also holds for any distribution ρ (with the same constant C). A1 concerns the immediate transition probabilities (an example for which A1 holds is the optimal replacement problem described below) whereas A2 expresses some

smoothness property of the future state distribution w.r.t. µ

when initial state is drawn from some distribution ρ. Indeed,

in terms of Markov chains, assumption A2 implies that for any sequence of policies π1, . . . , πm, the discounted future state distribution starting from ρ is bounded by a constant C times µ: for all y in X,

$$(1 - \gamma)^2 \sum_{m=1}^{\infty} m \gamma^{m-1} \Pr\big(x_m = y \,\big|\, x_0 \sim \rho,\ x_i \sim p(x_{i-1}, \pi_i(x_{i-1}), \cdot),\ 1 \le i \le m\big) \le C \mu(y).$$

1.0

i≥0

j≥0

This is the worst case.

In that case, (5)

may be derived from the L∞ bound (2) since

lim supn→∞ ||V ∗ − V πn ||p,ρ ≤ lim supn→∞ ||V ∗ −

2γ

1/p

V πn ||∞ ≤ (1−γ)

ε.

2N

• The lowest possible value of C is obtained in a MDP

with uniform transition probabilities P π (x, y) = 1/N (or

more generally if ρ = µ is the steady-state distribution of

P π ). Here c(m) = 1 and C = 1.

Thus the smoothness constant expresses how picky the discounted future state distribution is, compared to µ. A low

C means that the mass of the future state distribution starting from ρ does not accumulate on few specific states for

which the distribution µ is low. For that purpose, it is

desirable that µ be somehow uniformly distributed (this

condition was already mentioned in (Koller & Parr 2000;

Kakade & Langford 2002; Munos 2003) to secure Policy

Improvement steps in Approximate Policy Iteration).

0.1

r=1

0.9

1.0

0.9

0.9

1

Lp error bounds

At each iteration of the AVI algorithm the new function

Vn+1 is obtained by approximating T Vn via a call to a SL

algorithm A, which solves a minimization problem in Lp,µ norm. Our main result relates the performance of AVI as a

function of the approximation errors using the same norm as

that used by the SL algorithm.

Theorem 1. Let µ be a distribution on X. Let A be an

ε−approximation operator in Lp,µ -norm (p ≥ 1) (i.e. for

all n ≥ 0, ||εn ||p,µ ≤ ε). Then:

• Given assumption A1, we have

2γ

lim sup ||V ∗ − V πn ||∞ ≤

Cε.

(5)

(1 − γ)2

n→∞

• Given assumption A2, we have

2γ

lim sup ||V ∗ − V πn ||p,ρ ≤

C 1/p ε. (6)

(1

−

γ)2

n→∞

Let us give some insight about the constant C for a uniform distribution µ = ( N1 . . . N1 ). Here assumption A1 may

always be satisfied by choosing C = N (but then, (5) is not

better than (2), since ||εn ||∞ ≤ N 1/p ||εn ||p ). We are thus

interested in finding a constant C N . An interesting case

for which this happens is when the state space is continuous

and the transition densities are upper bounded (this will be

illustrated in the numerical experiments below).

Let us give some insight about the constant C in the case

of assumption A2 when ρ and µ are uniform.

• The largest possible value of C is obtained in a MDP

where for a specific policy π all states jump to a given

state -say state 1- with probability 1. Thus ρ(P π )m =

(1 0 . . . 0) ≤ N µ. Thus for all m, c(m) = N and

X X

C = (1 − γ 2 )

γi

γ j h(i + j + 1) = N.

0.1

0.1

2

3

0.1

0.1

0.9

N−1

N

r=1

0.9

0.1

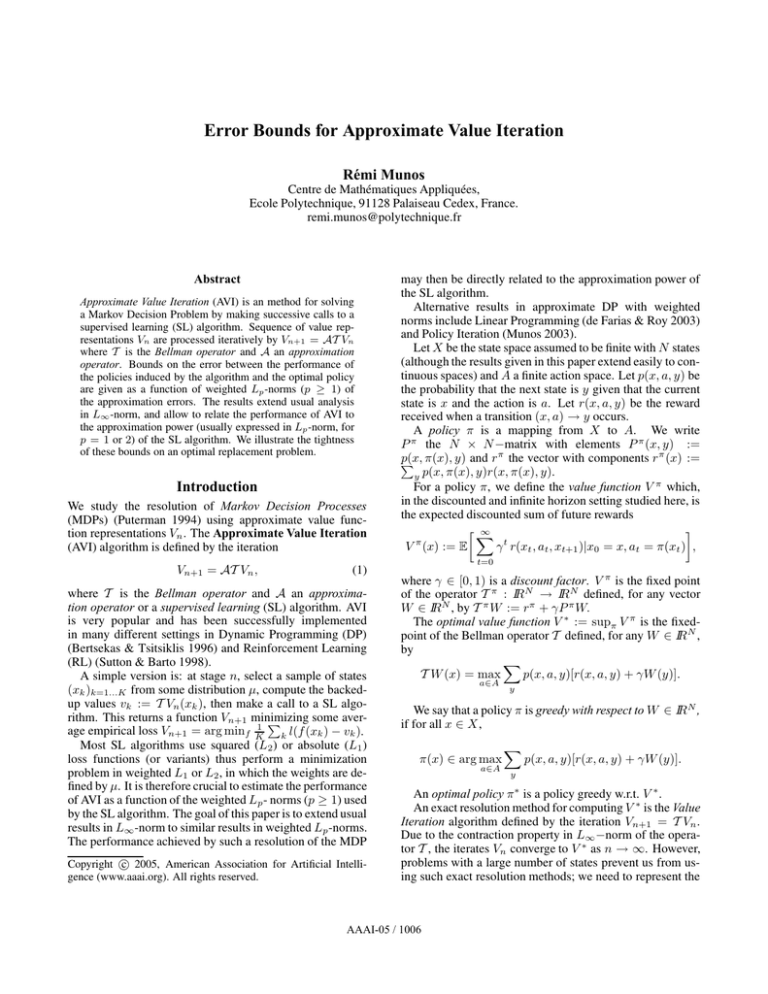

Figure 1: The chain walk MDP.

Illustration on the chain walk MDP

We illustrate the fact that the Lp -norm (for p = 1 and 2)

bounds given in Theorem 1 under assumption A2 may be

much tighter than the L∞ −norm (2) on the chain walk MDP

defined in (Lagoudakis & Parr 2003) (see Figure 1).

This is a linear chain with N states with two dead-end

states: states 1 and N . On each of the interior states

2 ≤ x ≤ N − 1 there are two possible actions: right or

left, which changes the state in the intended direction with

probability 0.9, and fails with probability 0.1 changing the

state in the opposite direction. The reward simply depends on the current state and is 1 at boundary states and 0 elsewhere: $r = (1\ 0 \dots 0\ 1)'$.

We consider an approximation of the value function of the form $V_n(x) = \alpha_n + \beta_n x$ where x ∈ {1, . . . , N} is the state number. Assume that the initial approximation is zero: $V_0 = (0 \dots 0)'$. Then $T V_0 = (1\ 0 \dots 0\ 1)'$. The best fit in L∞-norm is a constant function $V_1 = (\tfrac{1}{2} \dots \tfrac{1}{2})'$ which produces an error $\|V_1 - T V_0\|_\infty = \tfrac{1}{2}$.

Let us choose uniform distributions µ = (1/N . . . 1/N). In L1-norm we find that the best fit is $V_1 = (0 \dots 0)'$ (for N > 4) and the resulting error is $\|V_1 - T V_0\|_1 = \tfrac{2}{N}$. In L2-norm the best fit is also constant, $V_1 = (\tfrac{2}{N} \dots \tfrac{2}{N})'$, and the error is $\|V_1 - T V_0\|_2 = \tfrac{\sqrt{2N - 4}}{N}$.

In the three cases, by induction, we observe that the successive approximations Vn are constant, thus $T V_n = r + \gamma V_n$ and the approximation errors remain the same as in the first iteration: $\|V_{n+1} - T V_n\|_\infty = \tfrac{1}{2}$, $\|V_{n+1} - T V_n\|_1 = \tfrac{2}{N}$, and $\|V_{n+1} - T V_n\|_2 = \tfrac{\sqrt{2N - 4}}{N}$.
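The first-iteration errors quoted above can be checked numerically. The Python sketch below takes N = 10 as an arbitrary example, uses uniform weights, and uses helper names that are illustrative assumptions. It evaluates the three stated fits and recomputes the L2 fit in closed form; it does not search for the L∞ or L1 minimizers, which are taken from the text.

```python
import math
from typing import List, Tuple


def lp_error(fit: List[float], target: List[float], p: float) -> float:
    """Uniformly weighted L_p distance between two vectors (p may be math.inf)."""
    diffs = [abs(f - t) for f, t in zip(fit, target)]
    if p == math.inf:
        return max(diffs)
    return (sum(d ** p for d in diffs) / len(diffs)) ** (1.0 / p)


def least_squares_line(target: List[float]) -> Tuple[float, float]:
    """Closed-form L2 fit of alpha + beta * x over state numbers x = 1..N."""
    n = len(target)
    xs = range(1, n + 1)
    x_mean = sum(xs) / n
    t_mean = sum(target) / n
    beta = (sum((x - x_mean) * (t - t_mean) for x, t in zip(xs, target))
            / sum((x - x_mean) ** 2 for x in xs))
    return t_mean - beta * x_mean, beta


N = 10
TV0 = [1.0] + [0.0] * (N - 2) + [1.0]          # T V_0 = (1 0 ... 0 1)'

ALPHA, BETA = least_squares_line(TV0)           # expected: alpha = 2/N, beta = 0
ERR_INF = lp_error([0.5] * N, TV0, math.inf)    # stated L-infinity fit: constant 1/2
ERR_1 = lp_error([0.0] * N, TV0, 1)             # stated L1 fit: zero function
ERR_2 = lp_error([ALPHA + BETA * x for x in range(1, N + 1)], TV0, 2)
```

For N = 10 the three errors are 1/2, 2/N = 0.2 and sqrt(2N − 4)/N = 0.4, in agreement with the formulas in the text.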

Since Vn is constant, any policy πn is greedy w.r.t. Vn .

Hence for πn = π ∗ the l.h.s. of (2) and (6) are equal to zero.

Now, in order to compare the r.h.s. of these inequalities, let

us calculate the constant C under Assumptions A1 and A2.

Since state 1 jumps to itself with probability 1, under A1,

we have no better constant than C = N .

Under A2, the worst case in (4) is obtained when the mass

of the future state distribution is mostly concentrated on one

boundary state -say state 1- which corresponds to a policy

πLeft that chooses everywhere action left. We see that for

ρ = µ,

$$\rho (P^{\pi_{\text{Left}}})^m (x) \le \rho (P^{\pi_{\text{Left}}})^m (1) \le (1 + 0.9m)\, \mu(x),$$

for all states x, thus Assumption A2 is satisfied with c(m) = 1 + 0.9m. We deduce that the constant $C = (1 - \gamma)^2 \sum_{m \ge 1} m \gamma^{m-1} (1 + 0.9m)$ is independent of the number of states N.
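For reference, the series defining this constant can be summed in closed form. The worked computation below is an addition to the text; it only uses the standard identities for $\sum m\gamma^{m-1}$ and $\sum m^2\gamma^{m-1}$, and the value γ = 0.9 in the last line is an arbitrary illustration.

```latex
\begin{align*}
\sum_{m \ge 1} m \gamma^{m-1} &= \frac{1}{(1-\gamma)^2},
\qquad
\sum_{m \ge 1} m^2 \gamma^{m-1} = \frac{1+\gamma}{(1-\gamma)^3}, \\
C &= (1-\gamma)^2 \sum_{m \ge 1} m \gamma^{m-1} (1 + 0.9\,m)
   = 1 + 0.9\,\frac{1+\gamma}{1-\gamma}, \\
\gamma = 0.9 &\;\Longrightarrow\; C = 1 + 0.9 \cdot \frac{1.9}{0.1} = 18.1 .
\end{align*}
```

The closed form makes explicit that C depends on γ only, and not on N.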

Thus, when the number of states N is large, the L1-norm bound provides an approximation of order $O(N^{-1})$, the L2-norm bound is of order $O(N^{-1/2})$, whereas the L∞-norm bound (2) is only of order O(1).

Notice that here, we used the same norms for the minimization problem (function fitting) as those used for the bounds. If, say, an L2-norm were used for minimization, then this would provide even worse L∞ error bounds.

Practical algorithms

Model-based AVI

Let µ be a distribution over X, and consider ε > 0 and an ε-approximation operator A in Lp,µ-norm (for p ≥ 1). A model-based version of AVI would consider, at each iteration, the following steps:

1. Select a set of states $x_k \in X$, $k = 1 \dots K$, sampled from the distribution µ,
2. Compute the backed-up values $v_k = T V_n(x_k)$,
3. Make a call to the supervised learning algorithm A with the data $\{x_k; v_k\}$, which returns an ε-approximation $V_{n+1}$.

Reinforcement Learning

Step 2 in the preceding algorithm requires the knowledge of a model of the transition probabilities (as well as a way to compute the expectations in the operator T). If this is not the case, one may consider using a Reinforcement Learning (RL) algorithm (Sutton & Barto 1998). Let us introduce the Q-values and the operator R defined on X × A,

$$RQ(x, a) := \sum_{y \in X} p(x, a, y) \Big[ r(x, a, y) + \gamma \max_{b \in A} Q(y, b) \Big].$$

The AVI algorithm is equivalent to defining successive approximations Qn according to

$$Q_{n+1} = A R Q_n,$$

where A is an approximation operator on X × A. Thus, a model-free RL algorithm would be defined by the steps:

1. Observe a set of transitions $(x_k, a_k) \xrightarrow{r_k} y_k$, $k = 1 \dots K$, where for current state $x_k$ and action $a_k$, $y_k \sim p(x_k, a_k, \cdot)$ is the next observed state and $r_k$ the received reward,
2. Compute the values $v_k = r_k + \gamma \max_b Q_n(y_k, b)$,
3. Make a call to the supervised learning algorithm A with the data $\{(x_k, a_k); v_k\}$, which returns an ε-approximation estimate $\hat{Q}_{n+1}$.

An interesting case is when A is a linear operator in the values $\{v_k\}$ (which implies that the operators A and E commute) such as in Least Squares Regression, k-Nearest Neighbors, Locally Weighted Learning. Then the approximation $\hat{Q}_{n+1}$ returned by A is an unbiased estimate of $A R Q_n$ (since the values $\{v_k\}$ are unbiased estimates of $R Q_n(x_k, a_k)$). Thus when K is large, such an iteration acts like a (model-based) AVI iteration, and bounds similar to those of Theorem 1 may be derived.

Notice that the policy derived from the approximate Q-values, $\pi'_n(x) \in \arg\max_a Q_n(x, a)$, is different from the policy πn greedy w.r.t. Vn, defined by $V_n(x) := \max_a Q_n(x, a)$. However, bounds similar to (2), (5), and (6) on the performance of these policies $\pi'_n$ may be derived analogously. For example, one may prove that the L∞ bound is

$$\limsup_{n \to \infty} \|V^* - V^{\pi'_n}\|_\infty \le \frac{2}{(1 - \gamma)^2}\, \varepsilon$$

(there is an additional error of 2ε/(1 − γ) compared to (2)).
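The three steps of model-based AVI can be sketched in a few lines of Python on the chain walk MDP, with the linear class $V(x) = \alpha + \beta x$ fitted by least squares. This is a simplified illustration, not the paper's implementation: step 1 uses every state once instead of sampling from µ, the dead-end states are assumed to loop on themselves and to be rewarded at every step, and all function names are hypothetical.

```python
from typing import List, Tuple


def chain_walk(n: int) -> Tuple[List[List[List[float]]], List[float]]:
    """Transition probabilities p[x][a][y] and state rewards r[x] (0-based states)."""
    p = [[[0.0] * n for _ in range(2)] for _ in range(n)]
    r = [1.0 if x in (0, n - 1) else 0.0 for x in range(n)]
    for x in range(n):
        for a, step in enumerate((-1, +1)):       # a = 0: left, a = 1: right
            if x in (0, n - 1):
                p[x][a][x] = 1.0                   # assumed: dead-end states self-loop
            else:
                p[x][a][x + step] = 0.9            # intended direction
                p[x][a][x - step] = 0.1            # failure
    return p, r


def backup(p, r, gamma: float, v: List[float], x: int) -> float:
    """Backed-up value (T v)(x) when the reward depends only on the current state."""
    return r[x] + gamma * max(
        sum(pxy * vy for pxy, vy in zip(p[x][a], v)) for a in range(len(p[x]))
    )


def fit_line(xs: List[float], vs: List[float]) -> Tuple[float, float]:
    """Least-squares fit of alpha + beta * x (the approximation operator A here)."""
    n = len(xs)
    x_mean, v_mean = sum(xs) / n, sum(vs) / n
    beta = (sum((x - x_mean) * (v - v_mean) for x, v in zip(xs, vs))
            / sum((x - x_mean) ** 2 for x in xs))
    return v_mean - beta * x_mean, beta


def avi(n: int, gamma: float, iterations: int) -> List[float]:
    p, r = chain_walk(n)
    states = list(range(n))                        # step 1 (simplified: all states)
    v = [0.0] * n                                  # V_0 = 0
    for _ in range(iterations):
        targets = [backup(p, r, gamma, v, x) for x in states]      # step 2
        alpha, beta = fit_line([x + 1 for x in states], targets)   # step 3
        v = [alpha + beta * (x + 1) for x in range(n)]
    return v


V_LIMIT = avi(n=10, gamma=0.5, iterations=60)
```

Under these assumptions the iterates stay constant, $V_{n+1} = 2/N + \gamma V_n$, so with N = 10 and γ = 0.5 they approach (2/N)/(1 − γ) = 0.4, which matches the behavior described for the chain walk.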

Experiment: an optimal replacement problem

This experiment illustrates the respective tightness of the L∞, L1, and L2 norm bounds on a discretization (for several resolutions) of a continuous space control problem derived from (Rust 1996).

A one-dimensional continuous variable xt ∈ IR+ measures the accumulated utilization (such as the odometer

reading on a car) of a durable. xt = 0 denotes a brand

new durable. At each discrete time t, there are two possible decisions: either keep ($a_t = K$) or replace ($a_t = R$), in which case an additional cost $C_{replace}$ (of selling the existing durable and replacing it with a new one) is incurred.

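The transition density functions are exponential with parameter β:

$$p(x, a = K, y) = \begin{cases} \beta e^{-\beta (y - x)} & \text{if } y \ge x \\ 0 & \text{if } y < x, \end{cases} \tag{7}$$

$$p(x, a = R, y) = \begin{cases} \beta e^{-\beta y} & \text{if } y \ge 0 \\ 0 & \text{if } y < 0. \end{cases}$$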

Moreover, if the next state y is greater than a maximal value

xmax (e.g. the car breaks down because it is too damaged) then a new state is immediately redrawn according

to p(x, a = R, ·) and a penalty Cdead > Creplace occurs.

The current cost (opposite of a reward) c(x) is the sum of a

slowly increasing function (maintenance cost) and a shortterm discontinuous and periodic additional cost (e.g. which

may represent car insurance fees).

The current cost function and the value function (computed by a discretization on a high resolution grid) are shown in Figure 2.

We choose the numerical values γ = 0.6, β = 0.6,

Creplace = 50, Cdead = 70, and xmax = 10. Two finite state space MDPs (with N = 200 or 2000 points)

are generated by discretizing the continuous space problem over the domain [0, xmax ] using uniform grids {xk :=

kxmax /N }0≤k<N with N points.

We consider a linear approximation on the space spanned by the truncated cosine basis

$$F := \Big\{ f(x) = \sum_{m=1}^{M} \alpha_m \cos\Big(m \pi \frac{x}{x_{max}}\Big) \Big\},$$

with M = 20 coefficients. We start with initial values V0 = 0. At each iteration, the fitted function $V_{n+1} \in F$ is chosen to minimize the L2 error:

$$V_{n+1} = \arg\min_{f \in F} \frac{1}{N} \sum_{k=1}^{N} \big[f(x_k) - T V_n(x_k)\big]^2.$$
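The L2 fit in the cosine basis is an ordinary linear least-squares problem. The Python sketch below solves its normal equations with a small Gaussian elimination; the factor 1/N cancels and is omitted. The grid size, the number of coefficients, the target function, and all names are illustrative assumptions (the experiment itself uses M = 20 and N = 200 or 2000); the target is chosen inside the span so that the recovered coefficients are known.

```python
import math
from typing import List


def cosine_features(x: float, x_max: float, m_max: int) -> List[float]:
    """Feature vector (cos(m pi x / x_max)) for m = 1..m_max."""
    return [math.cos(m * math.pi * x / x_max) for m in range(1, m_max + 1)]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for i in range(col + 1, n):
            factor = aug[i][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[i][j] -= factor * aug[col][j]
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (aug[i][n] - sum(aug[i][j] * z[j] for j in range(i + 1, n))) / aug[i][i]
    return z


def fit_cosine(xs: List[float], targets: List[float], x_max: float, m_max: int) -> List[float]:
    """Coefficients alpha minimizing sum_k [f(x_k) - target_k]^2 over the cosine span."""
    phi = [cosine_features(x, x_max, m_max) for x in xs]
    gram = [[sum(row[i] * row[j] for row in phi) for j in range(m_max)]
            for i in range(m_max)]
    rhs = [sum(row[i] * t for row, t in zip(phi, targets)) for i in range(m_max)]
    return solve(gram, rhs)


# Small illustrative instance: uniform grid x_k = k x_max / N, 0 <= k < N.
X_MAX, N, M = 10.0, 50, 4
GRID = [k * X_MAX / N for k in range(N)]
TARGET = [2.0 * math.cos(math.pi * x / X_MAX) - math.cos(3.0 * math.pi * x / X_MAX)
          for x in GRID]
COEFFS = fit_cosine(GRID, TARGET, X_MAX, M)
```

Because the target lies in the span, the fit recovers the coefficients (2, 0, −1, 0) up to rounding. In an AVI iteration the target would instead be the vector of backed-up values $T V_n(x_k)$.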

In Figure 3 we show the first iteration (for the grid with 200 points): the backed-up values T V0 (indicated with crosses) and the corresponding approximation V1 (best fit of T V0 in the cosine approximation space). The approximate value function computed after 20 iterations (when there is no more improvement in the approximations) is also plotted.

Figure 2: Cost and value functions. (Horizontal axis: accumulated utilization, from 0 to 10; vertical axis: from 0 to 70.)

Figure 3: T V0 (crosses), V1 and V20. (Horizontal axis: accumulated utilization, from 0 to 10; vertical axis: from 0 to 70.)

Here, the highest peak in the future state distribution occurs at x = 0 for a policy that would always choose action R. By our choice of $x_{max}$, $\int_{x_{max}}^{\infty} p(0, R, y)\, dy$ is negligible. Thus, Assumption A1 is satisfied (as well as A2) with $C = \beta x_{max} = 6$.
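The value of this constant can be traced back to the densities (7). The short derivation below is an added sketch; it assumes that µ is the uniform distribution on $[0, x_{max}]$, with density $1/x_{max}$, and neglects the mass redrawn beyond $x_{max}$, as the text does.

```latex
\begin{align*}
p(x, a, y) &\le \beta
  = (\beta\, x_{max}) \cdot \frac{1}{x_{max}}
  = (\beta\, x_{max})\, \mu(y)
  \qquad \text{for all } x, y \in [0, x_{max}],\ a \in \{K, R\}, \\
C &= \beta\, x_{max} = 0.6 \times 10 = 6 .
\end{align*}
```

The bound is attained at y = x for action K and at y = 0 for action R, where the exponential densities take their maximal value β.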

| | N = 200 | N = 2000 |
|---|---|---|
| $\|\varepsilon_n\|_\infty$ | 12.4 | 12.4 |
| $C \|\varepsilon_n\|_1$ | 0.367 | 0.0552 |
| $C^{1/2} \|\varepsilon_n\|_2$ | 1.16 | 0.897 |

Table 1: Comparison of the L∞, L1 and L2 bounds.

Table 1 compares the right hand side of equations (2), (5), and (6) (up to the constant $2\gamma/(1 - \gamma)^2$). We notice that the L1 and L2 bounds are much tighter than the L∞ one, and decrease when the number of grid points tends to infinity (asymptotic behavior similar to the chain walk MDP), whereas the L∞ bound does not. Indeed, when the number of states N goes to infinity, the discrete MDP gets closer to the continuous problem, and since the cost function is discontinuous, the L∞ approximation error (using continuous function approximation such as the cosine basis) will never be lower than half the value of the largest discontinuity.

Conclusion

Theorem 1 provides a useful tool to relate the performance of AVI to the approximation power of the SL algorithm. Expressing the performance of AVI in the same norm as that used by the supervised learner to minimize the approximation error guarantees the tightness of this bound.

Extension to other loss functions l, such as ε-insensitive (used in Support Vectors) or Huber loss function (for robust regression) is straightforward (as long as l is an increasing and convex function over $\mathbb{R}^+$).

Other possible extensions include Markov games and online reinforcement learning.

Appendix: proof of the results

Proof of Lemma 1

Since $T V_k \ge T^{\pi^*} V_k$ and $T V^* \ge T^{\pi_k} V^*$, we have

$$\begin{aligned}
V^* - V_{k+1} &= T^{\pi^*} V^* - T^{\pi^*} V_k + T^{\pi^*} V_k - T V_k + \varepsilon_k \\
&\le \gamma P^{\pi^*} (V^* - V_k) + \varepsilon_k, \\
V^* - V_{k+1} &= T V^* - T^{\pi_k} V^* + T^{\pi_k} V^* - T V_k + \varepsilon_k \\
&\ge \gamma P^{\pi_k} (V^* - V_k) + \varepsilon_k,
\end{aligned}$$

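from which we deduce by induction

$$V^* - V_n \le \sum_{k=0}^{n-1} \gamma^{n-k-1} (P^{\pi^*})^{n-k-1} \varepsilon_k + \gamma^n (P^{\pi^*})^n (V^* - V_0), \tag{8}$$

$$V^* - V_n \ge \sum_{k=0}^{n-1} \gamma^{n-k-1} (P^{\pi_{n-1}} P^{\pi_{n-2}} \dots P^{\pi_{k+1}}) \varepsilon_k + \gamma^n (P^{\pi_n} P^{\pi_{n-1}} \dots P^{\pi_1}) (V^* - V_0). \tag{9}$$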

Now, from the definition of πn and since $T V_n \ge T^{\pi^*} V_n$, we have:

$$\begin{aligned}
V^* - V^{\pi_n} &= T^{\pi^*} V^* - T^{\pi^*} V_n + T^{\pi^*} V_n - T V_n + T V_n - T^{\pi_n} V^{\pi_n} \\
&\le \gamma P^{\pi^*} (V^* - V_n) + \gamma P^{\pi_n} (V_n - V^* + V^* - V^{\pi_n}),
\end{aligned}$$

thus $(I - \gamma P^{\pi_n})(V^* - V^{\pi_n}) \le \gamma (P^{\pi^*} - P^{\pi_n})(V^* - V_n)$. Now, since $(I - \gamma P^{\pi_n})$ is invertible and its inverse $\sum_{k \ge 0} (\gamma P^{\pi_n})^k$ has positive elements, we deduce

$$V^* - V^{\pi_n} \le \gamma (I - \gamma P^{\pi_n})^{-1} (P^{\pi^*} - P^{\pi_n})(V^* - V_n).$$

This, combined with (8) and (9), and after taking the absolute value, yields

$$V^* - V^{\pi_n} \le (I - \gamma P^{\pi_n})^{-1} \Big\{ \sum_{k=0}^{n-1} \gamma^{n-k} \big[ (P^{\pi^*})^{n-k} + (P^{\pi_n} P^{\pi_{n-1}} \dots P^{\pi_{k+1}}) \big] |\varepsilon_k| + \gamma^{n+1} \big[ (P^{\pi^*})^{n+1} + (P^{\pi_n} P^{\pi_n} P^{\pi_{n-1}} \dots P^{\pi_1}) \big] |V^* - V_0| \Big\}. \tag{10}$$

We deduce (3) by taking the upper limit.

Proof of Theorem 1

We have seen that if assumption A1 holds, then A2 also

holds for any distribution ρ. Now, for p = 1, if the bound

(6) holds for any ρ, then (5) also holds. Thus, we only need

to prove (6) in the case of assumption A2.

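We may rewrite (10) as

$$V^* - V^{\pi_n} \le \frac{2\gamma (1 - \gamma^{n+1})}{(1 - \gamma)^2} \Big[ \sum_{k=0}^{n-1} \alpha_k A_k |\varepsilon_k| + \alpha_n A_n |V^* - V_0| \Big],$$

with the positive coefficients (0 ≤ k < n)

$$\alpha_k := \frac{(1 - \gamma)\gamma^{n-k-1}}{1 - \gamma^{n+1}} \quad \text{and} \quad \alpha_n := \frac{(1 - \gamma)\gamma^{n}}{1 - \gamma^{n+1}},$$

(defined such that they sum to 1) and the stochastic matrices

$$A_k := \frac{1 - \gamma}{2} (I - \gamma P^{\pi_n})^{-1} \big[ (P^{\pi^*})^{n-k} + (P^{\pi_n} \dots P^{\pi_{k+1}}) \big],$$

$$A_n := \frac{1 - \gamma}{2} (I - \gamma P^{\pi_n})^{-1} \big[ (P^{\pi^*})^{n+1} + (P^{\pi_n} P^{\pi_n} \dots P^{\pi_1}) \big].$$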
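The claim that the coefficients sum to 1 follows from a geometric sum; the short verification below is added for completeness and uses only the definitions of $\alpha_k$ and $\alpha_n$.

```latex
\begin{align*}
\sum_{k=0}^{n-1} \alpha_k + \alpha_n
  &= \frac{1-\gamma}{1-\gamma^{n+1}}
     \Big[ \sum_{k=0}^{n-1} \gamma^{\,n-k-1} + \gamma^{n} \Big]
   = \frac{1-\gamma}{1-\gamma^{n+1}}
     \Big[ \frac{1-\gamma^{n}}{1-\gamma} + \gamma^{n} \Big] \\
  &= \frac{(1-\gamma^{n}) + (1-\gamma)\gamma^{n}}{1-\gamma^{n+1}}
   = \frac{1-\gamma^{n+1}}{1-\gamma^{n+1}} = 1 .
\end{align*}
```

The matrices are stochastic for a similar reason: each bracket is a sum of two stochastic matrices, and $(1-\gamma)(I - \gamma P^{\pi_n})^{-1} = (1-\gamma)\sum_{k \ge 0} (\gamma P^{\pi_n})^k$ has rows summing to 1.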

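We have

$$\begin{aligned}
\|V^* - V^{\pi_n}\|_{p,\rho}^p &\le \Big[ \frac{2\gamma (1 - \gamma^{n+1})}{(1 - \gamma)^2} \Big]^p \sum_{x \in X} \rho(x) \Big[ \sum_{k=0}^{n-1} \alpha_k A_k |\varepsilon_k| + \alpha_n A_n |V^* - V_0| \Big]^p (x) \\
&\le \Big[ \frac{2\gamma (1 - \gamma^{n+1})}{(1 - \gamma)^2} \Big]^p \sum_{x \in X} \rho(x) \Big[ \sum_{k=0}^{n-1} \alpha_k A_k |\varepsilon_k|^p + \alpha_n A_n |V^* - V_0|^p \Big] (x),
\end{aligned} \tag{11}$$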

by using Jensen's inequality twice (since the coefficients $\{\alpha_k\}_{0 \le k \le n}$ sum to 1 and the matrices $A_k$ are stochastic), i.e. by convexity of $x \to |x|^p$. The second term in the brackets will disappear when taking the upper limit. Now, from assumption A2, $\rho A_k \le (1 - \gamma) \sum_{m \ge 0} \gamma^m c(m + n - k)\, \mu$, thus the first term $\sum_x \rho(x) \sum_{k=0}^{n-1} \alpha_k A_k |\varepsilon_k|^p (x)$ in (11) is bounded by

$$\begin{aligned}
\sum_{k=0}^{n-1} \alpha_k (1 - \gamma) \sum_{m \ge 0} \gamma^m c(m + n - k) \|\varepsilon_k\|_{p,\mu}^p
&\le \frac{(1 - \gamma)^2}{1 - \gamma^{n+1}} \sum_{m \ge 0} \sum_{k=0}^{n-1} \gamma^{m+n-k-1} c(m + n - k)\, \varepsilon^p \\
&\le \frac{1}{1 - \gamma^{n+1}}\, C \varepsilon^p,
\end{aligned}$$

where we replaced $\alpha_k$ by their values, and used the fact that $\|\varepsilon_k\|_{p,\mu} \le \varepsilon$. By taking the upper limit in (11), we deduce (6).

References

Atkeson, C. G.; Schaal, S. A.; and Moore, A. W. 1997. Locally weighted learning. AI Review 11.

Bertsekas, D. P., and Tsitsiklis, J. 1996. Neuro-Dynamic Programming. Athena Scientific.

Davies, G.; Mallat, S.; and Avellaneda, M. 1997. Adaptive greedy approximations. J. of Constr. Approx. 13:57–98.

de Farias, D., and Roy, B. V. 2003. The linear programming approach to approximate dynamic programming. Operations Research 51(6).

DeVore, R. 1997. Nonlinear Approximation. Acta Numerica.

Gordon, G. 1995. Stable function approximation in dynamic programming. Proceedings of the International Conference on Machine Learning.

Guestrin, C.; Koller, D.; and Parr, R. 2001. Max-norm projections for factored mdps. Proceedings of the International Joint Conference on Artificial Intelligence.

Hastie, T.; Tibshirani, R.; and Friedman, J. 2001. The Elements of Statistical Learning. Springer Series in Statistics.

Kakade, S., and Langford, J. 2002. Approximately optimal approximate reinforcement learning. Proceedings of the 19th International Conference on Machine Learning.

Koller, D., and Parr, R. 2000. Policy iteration for factored mdps. Proceedings of the 16th conference on Uncertainty in Artificial Intelligence.

Lagoudakis, M., and Parr, R. 2003. Least-squares policy iteration. Journal of Machine Learning Research 4:1107–1149.

Mallat, S. 1997. A Wavelet Tour of Signal Processing. Academic Press.

Munos, R. 2003. Error bounds for approximate policy iteration. 19th International Conference on Machine Learning.

Puterman, M. L. 1994. Markov Decision Processes, Discrete Stochastic Dynamic Programming. A Wiley-Interscience Publication.

Rust, J. 1996. Numerical Dynamic Programming in Economics. In Handbook of Computational Economics. Elsevier, North Holland.

Sutton, R. S., and Barto, A. G. 1998. Reinforcement learning: An introduction. Bradford Book.

Vapnik, V.; Golowich, S. E.; and Smola, A. 1997. Support vector method for function approximation, regression estimation and signal processing. In Advances in Neural Information Processing Systems 281–287.