From: AAAI-87 Proceedings. Copyright ©1987, AAAI (www.aaai.org). All rights reserved.

PROLEARN: Towards a Prolog Interpreter that Learns

Armand E. Prieditis and Jack Mostow

Department of Computer Science
Rutgers University, New Brunswick, NJ 08903

Abstract

An adaptive interpreter for a programming language adapts to particular applications by learning from execution experience. This paper describes PROLEARN, a prototype adaptive interpreter for a subset of Prolog. It uses two methods to speed up a given program: explanation-based generalization and partial evaluation. The generalization of computed results differentiates PROLEARN from programs that cache and reuse specific values. We illustrate PROLEARN on several simple programs and evaluate its capabilities and limitations. The effects of adding a learning component to Prolog can be summarized as follows: the more search and subroutine calling in the original query, the more speedup after learning; a learned subroutine may slow down queries that match its head but fail its body.

I

How could an interpreter adapt to its execution environment by learning from execution experience and thereby customize itself toward particular applications? This paper describes PROLEARN, a prototype adaptive interpreter for a subset of Prolog. PROLEARN handles all of Prolog except for the cut symbol (used to control backtracking) and side-effects (primitives that cause input or output or change the database). PROLEARN learns new subroutines that represent justifiable generalizations of example executions. (We will use "subroutine" to mean a user-defined Prolog rule, as opposed to a primitive or a fact.)

Search reduction is an important way to increase the performance of search-based problem-solving systems [Mitchell et al., 1986, Mitchell et al., 1983, Minton, 1985, Langley, 1983, Laird et al., 1986b, Fikes et al., 1972, Mahadevan, 1985, Korf, 1985]. Because Prolog uses search as a basic mechanism and has built-in unification, it is a natural vehicle for developing adaptive interpreters. PROLEARN combines explanation-based generalization (EBG) [Mitchell et al., 1986] with partial evaluation [Kahn and Carlsson, 1984, Kahn, 1984] to learn from execution experience and thereby reduce future search. As PROLEARN interprets each subroutine call, it uses EBG to compute the general class of queries solved by the same execution trace. Partial evaluation techniques are used to simplify the generalized execution trace for more efficient execution.

The rest of this paper is organized as follows. Section II describes EBG in PROLEARN and Section III describes partial evaluation in PROLEARN. PROLEARN is only a prototype for a practical adaptive interpreter; Section IV discusses some of its shortcomings and suggests possible improvements. Section V summarizes the empirical results. Section VI discusses related work. Finally, Section VII summarizes the research contributions of this work.

¹This work is supported by NSF under Grant Number DMC-8810507, and by the Rutgers Center for Computer Aids to Industrial Productivity, as well as by DARPA under Contract Number N00014-85-K-0116.

Machine Learning & Knowledge Acquisition

As it executes a program, PROLEARN treats every subroutine call as a goal concept for EBG to operationalize in terms of primitives and facts. Using the specific execution trace as a template, it constructs a customized version of the subroutine, as follows. PROLEARN records all calls to primitive subroutines and user-defined facts performed in the course of executing the subroutine, generalizes them to remove the dependence on the particular arguments passed to the subroutine, and conjoins the generalized calls. The resulting conjunction is used to define a new special case version of the original subroutine.
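The record, generalize, and conjoin procedure described above can be sketched as a small Prolog meta-interpreter. This is only an illustrative sketch, not PROLEARN's actual implementation: the predicate names ebg/3 and learn/2 are hypothetical, safe_to_stack is written with underscores so that it is a legal atom, and the database is a cut-down version of the safe-to-stack example from [Mitchell et al., 1986] with the not(fragile(Y)) alternative omitted. It assumes a Prolog system (such as SWI-Prolog) that provides predicate_property/2 and allows clause/2 on dynamic predicates.

```prolog
% Cut-down facts and rules (assumed names; see the lead-in).
:- dynamic volume/2, density/2, isa/2.
:- dynamic safe_to_stack/2, lighter/2, weight/2.

volume(box1, 10).
density(box1, 10).
isa(box1, box).
isa(table1, endtable).

safe_to_stack(X, Y) :- lighter(X, Y).
lighter(X, Y) :- weight(X, W1), weight(Y, W2), W1 < W2.
weight(X, W) :- volume(X, V), density(X, D), W is V * D.
weight(X, 500) :- isa(X, endtable).

% ebg(+Goal, ?GenGoal, -Conds): solve the specific Goal while building,
% in parallel, the generalized operational conditions Conds for GenGoal.
ebg((A, B), (GA, GB), (CA, CB)) :- !,
    ebg(A, GA, CA),
    ebg(B, GB, CB).
ebg(A, GA, GA) :-                    % primitive: run it, keep the general call
    predicate_property(A, built_in), !,
    call(A).
ebg(A, GA, clause(GA, true)) :-      % stored fact: record a fact lookup
    clause(A, true).
ebg(A, GA, Conds) :-                 % rule: unfold the same clause for both goals
    clause(GA, GB),
    GB \== true,
    copy_term((GA :- GB), (A :- B)),
    ebg(B, GB, Conds).

% learn(+Query, -Rule): Rule is a special-case version of Query's subroutine.
learn(Query, (Gen :- Conds)) :-
    functor(Query, Name, Arity),
    functor(Gen, Name, Arity),
    ebg(Query, Gen, Conds).
```

Under these assumptions, the query `?- learn(safe_to_stack(box1, table1), Rule).` should bind Rule to a clause whose body looks up volume, density and isa through clause/2, computes the weight with is, and compares it with 500. Facts are recorded as clause(Fact, true) without unifying the generalized goal against them, which is what keeps the learned rule from being tied to box1 and table1.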

To illustrate, consider the following database (from [Mitchell et al., 1986]):

    on(box1,table1).
    volume(box1,10).
    isa(box1,box).
    isa(table1,endtable).
    color(box1,red).
    color(table1,blue).
    density(box1,10).
    safe-to-stack(X,Y) :- lighter(X,Y); not(fragile(Y)).
    lighter(X,Y) :- weight(X,W1), weight(Y,W2), W1 < W2.
    weight(X,Y) :- volume(X,V), density(X,D), Y is V * D.
    weight(X,500) :- isa(X,endtable).

Given the query safe-to-stack(box1,table1), PROLEARN learns the subroutine

    safe-to-stack(X,Y) :-
        clause(volume(X,V),true),
        clause(density(X,D),true),
        W1 is V * D,
        clause(isa(Y,endtable),true),
        W1 < 500.

That is, object X is safe to stack on object Y if the product of X's volume and density is less than 500 and Y is an endtable. The learned subroutine uses clause to ensure that user-defined predicates like volume, density, and isa are evaluated solely by matching stored facts; otherwise the learned subroutine might invoke arbitrarily expensive subroutines for these predicates, thereby defeating its efficiency. PROLEARN also learns similar specialized versions of each subroutine invoked in the course of executing safe-to-stack (e.g., lighter).

EBG in PROLEARN amounts to subroutine unfolding with generalization. Unfolding a query into its primitive calls effectively caches the result of searching for the intermediate subroutines needed to answer the query. For example, when the query weight(table1,W2) is evaluated in the course of executing safe-to-stack(box1,table1), PROLEARN must determine which definition of weight to use. As it turns out, the first definition fails and it must use the second one instead, namely isa(obj2,endtable). The learned subroutine, which is unfolded in terms of primitive calls like isa(Y,endtable), eliminates this search. While subroutine unfolding provides some speedup in procedural languages, speedup in Prolog can be much greater because of this search reduction.

While the learned subroutine is a special case of the original definition, it is a generalization of the execution trace generated for the specific query. Therefore it will apply to queries other than the one that led to its creation, and should speed up interpretation for the entire class of such queries. This class is restricted to queries whose execution would have followed the same execution path as the original query. Relaxing this restriction would require allowing learned subroutines to call user-defined subroutines, which would make learned subroutines more general but possibly less efficient.

PROLEARN relies on EBG to guarantee that this generalization is justifiable. An execution trace constitutes a proof of a query in the "theory" defined by the subroutines and facts in the program. EBG generalizes away only those details of the trace ignored by this "proof." The learned subroutine should therefore be correct for any query it matches, that is, produce the same behavior as the original program. (Section IV discusses the problem of preserving correctness of the original program.)

EBG sometimes produces a conjunction of terms that can be simplified by exploiting constraints in the definition of the learned subroutine. This simplification is similar to partial evaluation without execution as described in [Kahn and Carlsson, 1984] and [Bloch, 1984].

The need for partial evaluation of the generalization becomes evident in the following example. The program below solves the Towers of Hanoi puzzle, where N ≥ 0 disks are moved from the From pole to the To pole via the Using pole. The program uses a standard recursive formulation of the solution: move N-1 disks from the From pole to the Using pole, move one disk from the From pole to the To pole, and finally move N-1 disks from the Using pole to the To pole. The resulting plan is bound to the variable Plan.

    move(0,_,_,_,[]).
    move(N,From,To,Using,Plan) :- N > 0, M is N-1,
        move(M,From,Using,To,Subplan1),
        move(M,Using,To,From,Subplan2),
        append(Subplan1,[[From,To]],Frontplan),
        append(Frontplan,Subplan2,Plan).
    append([],L,L).
    append([H|T],L,[H|U]) :- append(T,L,U).

Given the query move(3,left,right,center,Plan), Plan is bound to:

    [[left,right],[left,center],[right,center],[left,right],
     [center,left],[center,right],[left,right]]

Since move contains recursive calls to move, PROLEARN learns three move subroutines. In particular it learns the following subroutine:

    move(N,From,To,Using,[[From,To],[From,Using],[To,Using],[From,To],
                          [Using,From],[Using,To],[From,To]]) :-
        N>0, M is N-1, M>0, L is M-1,
        L>0, 0 is L-1, L>0, 0 is L-1,
        M>0, K is M-1, K>0, 0 is K-1,
        K>0, 0 is K-1.

The move definition learned by EBG represents a specialized subroutine for moving 3 disks from the From pole to the To pole via the Using pole. The newly learned move subroutines for two disks and one disk are similarly verbose. Worse still, future queries with move of N > 3 disks will match the learned rule and not fail until they reach the conjunct 0 is L-1.

The term 0 is K-1 (in the above conjunction) could be eliminated by binding K to 1 instead. In general, if two of the unknowns in an expression like X is Y - Z are known then the third can be deduced. PROLEARN uses such rules to simplify primitive subroutine calls. PROLEARN actually learns the above move as:

    move(3,From,To,Using,[[From,To],[From,Using],[To,Using],[From,To],
                          [Using,From],[Using,To],[From,To]]).
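The simplification of the learned move body can be illustrated with a small sketch. It is not PROLEARN's partial evaluator: the predicates simplify/2, pass/2 and redundant/1 are hypothetical names, the conjunction is represented as a list for convenience, and only three of the rules mentioned in the text are covered (comparisons between known numbers, and deducing the third value of X is Y - Z from the other two).

```prolog
% simplify(+Terms0, -Terms): repeat passes until no more terms can be removed.
% Removing a term may bind variables, which can make other terms removable.
simplify(Terms0, Terms) :-
    pass(Terms0, Terms1),
    (   Terms1 == Terms0
    ->  Terms = Terms1
    ;   simplify(Terms1, Terms)
    ).

% pass(+Terms, -Kept): one left-to-right sweep over the conjunction.
pass([], []).
pass([T|Ts], Out) :-
    (   redundant(T)
    ->  Out = Rest
    ;   Out = [T|Rest]
    ),
    pass(Ts, Rest).

% redundant(+Term): Term can be dropped, possibly after binding a variable.
redundant(A < B) :- number(A), number(B), A < B.     % always true
redundant(A > B) :- number(A), number(B), A > B.     % always true
redundant(X is Y - Z) :-                             % deduce Y from X and Z
    number(X), number(Z), var(Y), Y is X + Z.
redundant(X is Y - Z) :-                             % deduce Z from X and Y
    number(X), number(Y), var(Z), Z is Y - X.
redundant(X is Y - Z) :-                             % evaluate or check X
    number(Y), number(Z), X0 is Y - Z, X = X0.

% The body of the EBG-learned three-disk move rule, as a list.
learned_body(N, [N > 0, M is N-1, M > 0, L is M-1,
                 L > 0, 0 is L-1, L > 0, 0 is L-1,
                 M > 0, K is M-1, K > 0, 0 is K-1,
                 K > 0, 0 is K-1]).
```

With these definitions, `?- learned_body(N, Body), simplify(Body, Rest).` should leave Rest empty and bind N to 3, which corresponds to the bodiless move(3,...) fact shown above. Terms that are ground but false are deliberately left in place, so a rule that can never succeed still fails at run time rather than being silently changed.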

PROLEARN

partially

evaluates

the following

between

down

are eliminated

terms like 12 is

X * 4 are eliminated

3. Terms like 4 is

y/3

by binding

kinds of

following

are simplified

((Y > 119, (Y < 1599to exploit PROLEARN’s

division.

Early cutoff occurs

After

partial

PROLEARN

inserts

The

integer

is

that it is tried before

subroutines.

a number

Search

of shortcomings

architectures

common

PaobBem

As

PROLEARN

learns more subroutines,

search time

As an example,

consider member, a

gradually increases.

commonly-used

Prolog subroutine that tests for member-

[Xi-

member(X,

C-IT])

Giventhe

length

[b,c,d,al),

PROLEARN

third

and second

member(X,

member(X,

[TI,T2,XlCTl,XlI).

V-IY,M) .

.

equiw(X

A Y,M A I)

:-

equiv(X,M),

equiv(X

V Y,M V N)

:-

equiv(X,M),equiv(Y,N).

equiv

are equivalent

is used to test whether

boolean

expressions

of 1, A and V).

operators

V b)

equiv(Y,N).

A -(c

V d) s(a

its two

(with

the

Consider

the

V b)

A (c

V d))

(A) to find relevant subroutines.

Here the only match is the

subroutine whose head is equiv(X

A Y ,M A N) . In fact,

there is only one relevant subroutine

at each node of the

entire search tree for this query, so its branching factor is

1. When branching factor is this low, there is no search to

eliminate. Here, speedup derives only from elimination

of

subroutine calls in the learned rules, namely:

A-lY,X

equiw(llX

A Y) .

9.

a query

is worth

cost (search

learning

from

and subroutine

learning, the cost of the learning process,

bution of subsequent queries. PROLEARN

from every execution

without

considering

depends

calling)

on

without

and the distrisimply learns

these factors.

positions

6.

list.

[Tl,TB,T3,Xl-

AlY,M)

equiv(lX

its execution

three member definitions (since zaexnber

three times). They represent member-

mmber(X,

equiv(lX

:-

Whether

xnember(X,T).

ship of an item at the fourth,

in an arbitrary

:-

logical

query:

the

equiv(X,Y).

A Y) ,M)

equiv(llX,X

querymember(a,

learns the following

is called recursively

consider

19851):

equiv(l(X

standard

following

I).

:-

[Mahadevan,

V Y) ,M)

arguments

ship of an item in a list:

member(X,

and remembering?

learning,

equiw(l(X

equiw(ll(a

that learn.

Bottleneck

:-

at the cost of slowing

learning

worthless

(after

beand

Prolog interpreters

typically

index on the subroutine’s

name (equiv)

and the first argument’s

principal functor

Limitations

exhibits

of possibly

database

queries

is worth

The subroutine

subroutine

version from which it was derived,

to other problem-solving

A.

learned

it above existing

V

PROLEARN

the

To ensure

the less efficient original

like

when Y 5 11.

evaluation,

added to the database.

X to

to conjunctions

rth Problem

up some

what

equiv(X,X).

equiw(llX,Y)

X to Y, and

by binding

speeding

others,

As an example

terms: terms like 3 < 5 (that are always true) are eliminated, terms like X == Y (a test on whether X and Y are

the same variable) and X = Y (a test on whether X has the

same value as Y)

The Learning

Given the tradeoffs between storing and computing,

tween the cost of learning and the resulting speedup,

The

Correctness

and

I).

1).

Is the set of programs

new subroutines?

still correct

That

after PROLEARN

is, does the new program

learns

preserve

Given the query mexnber(a, [bpc,d,e,al),

PROLEARN must search through all the learned member defi-

the user-intended

behavior?

Two problems,

redundancy

and over-generalization,

make this question difficult to an-

nitions to get to the general case for member. A query testing the membership

of an object in the list of a hundred

swer.

items may generate ninety-nine

new member definitionsclogging up the interpreter if most of them are executed

to avoid

again.

ular query,

A number

of researchers

have observed

that

uncon-

trolled learning of macro-operators

can cause search bottlenecks [Minton,

1985, Iba, 1985, Fikes et al., 19721.

Minton’s programused

the frequency of use and the heuristic usefulness of macro-operators

as a filter to control learning. Iba’s program learned only those operator sequences

leading from one state with peak heuristic value to another.

4%

Machine Learning & Knowledge Acquisition

PROLEARN

disallows

subroutines

with

side-effects

redundancy.

Since every learned subroutine

in

re p resents another way to execute a particPROLEARN

the database

of original

subroutines

describes how to execute the query (perhaps

cient manner). The danger lies in side-effects

repeated and thus change program behavior

violates the intent of the original program.

PROLEARN

also disallows

already

in a less effithat may be

in a way that

the cut symbol

(used

to

control backtracking

and define negation-as-failure).

If

PROLEARN

allowed the cut symbol, learning new subroutines would, in general, be impossible

without considering

how much

the context of other subroutines

(e.g., cut and fail

combinations that were executed during a particular query).

While applying PROLEARN

to a program with side

effects is not guaranteed to preserve its behavior, neither

does it necessarily

tive interpreters

lead to disaster.

preserve

Demanding

program

behavior

that adap-

penalty,

is unnecessar-

to characterize

and expand

PROLEARN

is also theoretically

subject

ecution

rule that didn’t

apply

this problem

diately

unwieldy

by placing

above

in Prolog)

each learned

the subroutine

from

because

Although

It ap-

problem-solving.

to overcome

member

equiv

2

2

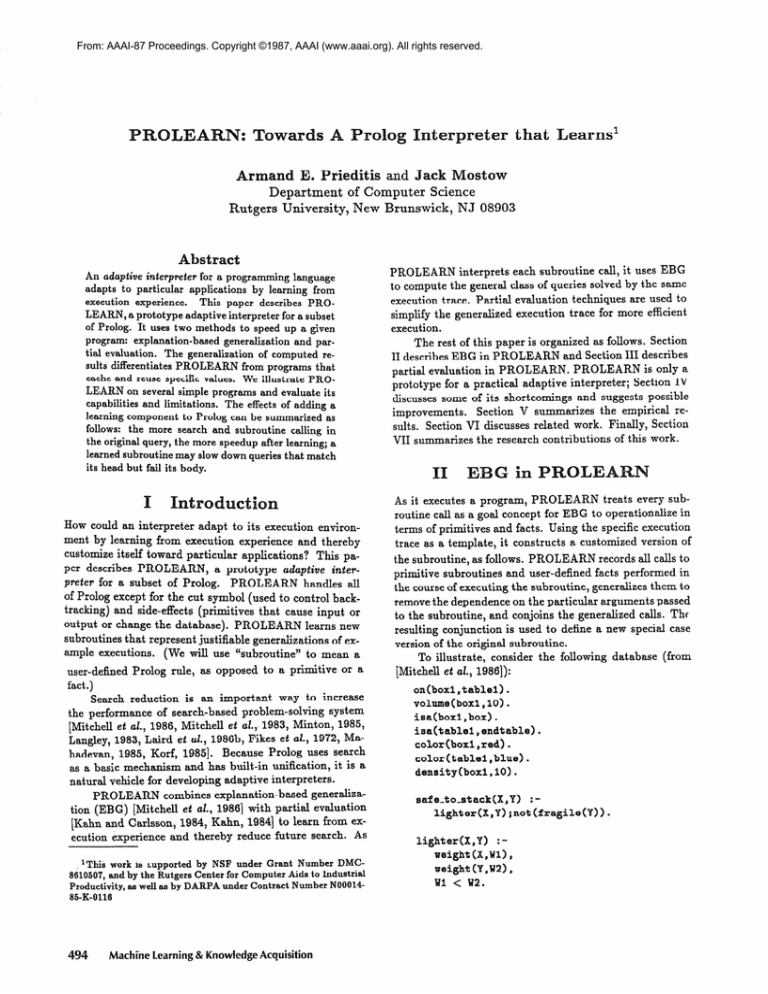

Table 1: Speedup

Table

Factors

While

imme-

1 lists the speedup

original query in Prolog

it was derived

For each example,

1.3

1

6

36

1

1

3

6

factors

the table

time

for the original

follows:

The results

alone,

age branching

greatest

the example

factor

speedup.

from eliminating

The

user subroutine

speedup.

routine

with

above.

with

branching

speedup

averthe

resulted

calls (member and equiv)

arithmetic

1 and few

had the least

resulted

from sub-

not search reduction.

operations

were eliminated.

,

Since each learned subroutine applies to a whole class

of queries, the speedup results apply to the entire class.

However, the results presented above are incomplete,

since

they do not show the slowdown

for queries outside this

class.

Such

slowdown

occurs

when

a query

matches

learned subroutine and then fails after executing

the terms in its definition.

We measured

speedup

does

not

values [Mostow

and

learned

that cache specific

chunks.

et al., 19791, PROLEARN

the execution frequency of different control paths in a program, they do not generalize as PROLEARN

does.

by comparing

time before and after learning.

This paper has shown how an interpreter can adapt to its

execution environment

and thus customize itself to a particular application.

PROLEARN,

a

some of

execution

This comparison

measures

The generalization

sults differentiates

PROLEARN

and reuse specific

values.

The effects of adding

can be summarized

proto-

of computed

from programs

a learning

component

as follows:

The more search

and subroutine

to Prolog -

calls in the original

after learning:

and backtracking

by search are eliminated,

caused

well as the overhead

of subroutine

re-

that cache

query, the more speedup

the indexing

as

calls.

The same speedup applies to the class of queries that

match the learned subroutine, not just the query from

which the subroutine was learned. This class includes

queries that would have followed the same execution

path

as the original

e A learned

Prolog

an implemented

type of an adaptive Prolog interpreter, uses two methods to

increase its performance:

explanation-based

generalization

and partial evaluation.

calls (move).

factor

from EBG

as

a The one example

(move) where partial evaluation

helped was sped up by a factor of 8.5 over EBG because costly

which avoids this

mechanism

subroutines

produced

The next greatest

Any speedup

uses EBG,

chunking

to simplify

occur-

of the same variable,

that are more specific

19841. While some optimizers

use data about the execution environment

[Cohen, 19861 or collect statistics about

over the

the largest

(safe-to-stack)

ca3l elimination,

that multiple

vironments more dynamically

than typical program optimizers [Aho et al., 1986, Kahn and Carlsson, 1984, Bloch,

can be summarized

the user subroutine

two examples

Soar’s

evaluation

cached subroutines

Soar assumes

also lists the average branch-

of calls to user-defined

B For EBG

4

stores genBoth approaches

pay for decreased

execution time with increased space costs and lookup time.

PROLEARN

adapts programs to their execution en-

presented

ing factor and number

query.

exfrom

54 to 156 times slower

PROLEARN’s

PROLEARN

Also,

Cohen, 1985, Lenat

eralized procedures.

Query in Prolog

in CPU

for the examples

ranging

by the interpretation

rences of a constant are instances

which can cause it to learn chunks

than necessary.

Over Original

by factors

is outweighed

resemble Soar’s chunks,

Unlike systems

23

94

4

queries

of Prolog’s

up PROLEARN’s

et a!., 1986b], PROLEARN

is an s’ncbdental learning system because it learns as a side-effect of

use partid

23

11

4

speeds

Like Soar [Laird

assumption.

safe-to-staclt

it loses the efficiency

learning

of the example

The extra

performance

than Prolog.

rather than at the top of the database.

RlOVB

if PROLEARN’s

to the same

subroutine

which

achieved

penalty, which renders PRCLEARN

changing

when the rule was learned.

(though

be

Prolog meta-interpreter.

imposes a considerable

to 32, this speedup

over-generalization

problem as SOAR [Laird et al, 1986a],

in which a learned rule masks a pre-existing

special-case

pears possible

largely

indexing.

the class

of programs PROLEARN

can interpret without

program behavior in unacceptable

ways.

would

simple but inefficient

level of interpretation

ily restrictive,

since not all behavior

changes are unacceptable [Mostow and Cohen, 1985, Cohen, 19861. Further work is needed

speedup

techniques were eficiently

implemented

in the Prolog interpreter itself. PROLEARN

is actually implemented

as a

subroutine

query.

may

slow

down

queries

that

match its head but fail its body.

Prieditis and Mostow

497
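The last point can be made concrete with the Towers of Hanoi example. The sketch below is illustrative only: learned_move/5 and solve/5 are hypothetical names, learned_move is the three-disk rule as learned by EBG before partial evaluation, and solve simply mimics placing the learned rule above the original definition. It assumes a Prolog system whose library(lists) provides append/3.

```prolog
:- use_module(library(lists)).   % append/3

% Original program, as in the paper's Towers of Hanoi example.
move(0, _, _, _, []).
move(N, From, To, Using, Plan) :-
    N > 0, M is N-1,
    move(M, From, Using, To, Subplan1),
    move(M, Using, To, From, Subplan2),
    append(Subplan1, [[From,To]], Frontplan),
    append(Frontplan, Subplan2, Plan).

% EBG-only learned rule for three disks (no partial evaluation).
% Its head matches any N when Plan is unbound; only the body rejects N =\= 3.
learned_move(N, From, To, Using,
             [[From,To],[From,Using],[To,Using],[From,To],
              [Using,From],[Using,To],[From,To]]) :-
    N > 0, M is N-1, M > 0, L is M-1,
    L > 0, 0 is L-1, L > 0, 0 is L-1,
    M > 0, K is M-1, K > 0, 0 is K-1,
    K > 0, 0 is K-1.

% Hypothetical combined entry point: learned rule first, original second.
solve(N, From, To, Using, Plan) :- learned_move(N, From, To, Using, Plan).
solve(N, From, To, Using, Plan) :- move(N, From, To, Using, Plan).
```

For a query such as `?- solve(4, a, b, c, Plan).`, the learned rule's head unifies and six conjuncts are evaluated before 0 is L-1 fails; only then does the interpreter fall back to the original definition. That wasted work is the slowdown described above. With the partially evaluated head move(3,...), the same query would be rejected at head unification instead.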

Acknowledgments

Many thanks go to Tony Bonner, Steve Minton, Sridhar Mahadevan, Prasad Tadepalli, Tom Fawcett, and Smadar Kedar-Cabelli for their comments on this paper. Thanks also go to Chun Liew and Milind Deshpande for their help with LaTeX.

[Aho et al., 1986] A. Aho, R. Sethi, and J. Ullman. Compilers: Principles, Techniques, and Tools. Addison-Wesley, Reading, Mass., 1986.

[Bloch, 1984] C. Bloch. Source-to-Source Transformations of Logic Programs. Technical Report CS84-22, Weizmann Institute of Science, November 1984.

[Cohen, 1986] D. Cohen. Automatic compilation of logical specifications into efficient programs. In Proceedings AAAI-87, American Association for Artificial Intelligence, Seattle, WA, August 1986.

[Fikes et al., 1972] R. Fikes, P. Hart, and N. J. Nilsson. Learning and executing generalized robot plans. Artificial Intelligence, 3(4):251-288, 1972. Also in Readings in Artificial Intelligence, Webber, B. L. and Nilsson, N. J., (Eds.).

[Iba, 1985] G. Iba. Learning by discovering macros in problem-solving. In Proceedings IJCAI-9, International Joint Conferences on Artificial Intelligence, Los Angeles, CA, August 1985.

[Kahn, 1984] K. Kahn. Partial evaluation, programming methodology and artificial intelligence. AI Magazine, 5(1):53-57, Spring 1984.

[Kahn and Carlsson, 1984] K. Kahn and M. Carlsson. The compilation of prolog programs without the use of a prolog compiler. In Proceedings of the International Conference on Fifth Generation Computer Systems, ICOT, Tokyo, Japan, 1984.

[Kedar-Cabelli and McCarty, 1987] S. T. Kedar-Cabelli and L. T. McCarty. Explanation-based generalization as resolution theorem proving. In Proceedings of the Fourth International Machine Learning Workshop, Irvine, CA, June 1987.

[Korf, 1985] R. Korf. Learning to Solve Problems by Searching for Macro-Operators. Pitman, Marshfield, MA, 1985.

[Laird et al., 1986a] J. E. Laird, P. S. Rosenbloom, and A. Newell. Overgeneralization during knowledge compilation in Soar. In Workshop on Knowledge Compilation, Oregon State University, Corvallis, OR, September 1986.

[Laird et al., 1986b] J. E. Laird, P. S. Rosenbloom, and A. Newell. Soar: the architecture of a general learning mechanism. Machine Learning, 1(1):11-46, 1986.

[Langley, 1983] P. Langley. Learning effective search heuristics. In Proceedings IJCAI-8, International Joint Conferences on Artificial Intelligence, Karlsruhe, West Germany, August 1983.

[Lenat et al., 1979] D. B. Lenat, F. Hayes-Roth, and P. Klahr. Cognitive economy in artificial intelligence systems. In Proceedings IJCAI-6, International Joint Conferences on Artificial Intelligence, Tokyo, Japan, August 1979.

[Mahadevan, 1985] S. Mahadevan. Verification-based learning: a generalization strategy for inferring problem-decomposition methods. In Proceedings IJCAI-9, International Joint Conferences on Artificial Intelligence, Los Angeles, CA, August 1985.

[Minton, 1985] S. Minton. Selectively generalizing plans for problem-solving. In Proceedings IJCAI-9, International Joint Conferences on Artificial Intelligence, Los Angeles, CA, August 1985.

[Mitchell et al., 1983] T. M. Mitchell, P. E. Utgoff, and R. B. Banerji. Learning by experimentation: acquiring and refining problem-solving heuristics. In Machine Learning, Tioga, Palo Alto, CA, 1983.

[Mitchell et al., 1986] T. Mitchell, R. Keller, and S. Kedar-Cabelli. Explanation-based generalization: a unifying view. Machine Learning, 1(1):47-80, 1986.

[Mostow and Cohen, 1985] J. Mostow and D. Cohen. Automating program speedup by deciding what to cache. In Proceedings IJCAI-9, International Joint Conferences on Artificial Intelligence, Los Angeles, CA, August 1985.