From: AAAI-83 Proceedings. Copyright ©1983, AAAI (www.aaai.org). All rights reserved.

Episodic Learning

Dennis Kibler

Bruce Porter

Information and Computer Science Department

University of California at Irvine

Irvine, California

Abstract

A system is described which learns to compose sequences of operators into episodes for problem solving. The system incrementally learns when and why operators are applied. Episodes are segmented so that they are generalizable and reusable. The idea of augmenting the instance language with higher level concepts is introduced. The technique of perturbation is described for discovering the essential features for a rule with minimal teacher guidance. The approach is applied to the domain of solving simultaneous linear equations.

1. Introduction

With the aid of a teacher, junior high school students can learn to solve simultaneous linear equations. Operators that are applied in solving these problems include multiplying an equation by a constant and combining like terms. The students are already familiar with how these operators are applied. Moreover, the teacher assumes that the students understand basic concepts about numbers, such as a number being positive, negative, or non-zero.

Our system, nicknamed PET,² incrementally (defined in [7]) induces correct rules from the training instances presented. The rules are correct in the sense that at any point in the learning process:

- the knowledge is consistent with all past training instances.

- sequences of rules (episodes) are guaranteed to simplify the problem state if they apply.

Learning rules for applying operators involves two stages of learning:

- Stage 1 learning involves understanding when each available operator should be applied. The concern here is with learning the enabling conditions for individual operators, without knowledge of the other operators in the solution path to provide context.

- Stage 2 learning involves understanding why each operator is applied with emphasis on the sequencing of operators. We refer to this as episodic learning. Episodic segmentation is the grouping of operators to form an episode. Episodes are discrete, reusable components for plan generation and each simplifies the problem state.

The main features of our approach to episodic learning are:

- segmentation of operator sequences into meaningful, reusable episodes.

- augmentation of the instance language to include higher-order concepts not present in the training instance itself.

- perturbation of a training instance to create new instances.

¹This research was supported by the Naval Ocean System Center under contract NO6123-81-C-1165.

²Please refer to [4] for a complete description of our approach including a PDL description of the learning algorithm and multiple examples of its use. PET is implemented in Prolog on a DEC 2020. Available upon request.

2. Related Work

Related work in stage 1 learning includes Neves's [10] system which learned to solve one equation in one unknown from textbook traces. The system learned both the context (preconditions) of an operator as well as which operator was applied, although the operator had to be known to the system. His generalization language was simpler than ours in that a constant could only be generalized to a variable. The program LEX [8] uses version spaces to describe the current hypothesis space as well as concept trees to direct or bias the generalizations. As it is not the main point of our work, we keep only the minimal (maximally specific) generalization [11] of the examples.

MACROPS [2] is an exampleof stage 2, or

episodiclearning. This system remembersrobot

plans that have been generatedso that the plan

can be reusedwithout re-generation.The plans

are stored in triangletableswhich recordthe

191

variablesin the instancelanguage.

order of applicationof operatorsin the plan and

how their pre-conditions

are satisfied. The plans

are generalizedto be applicableto other

instances(as are episodes).

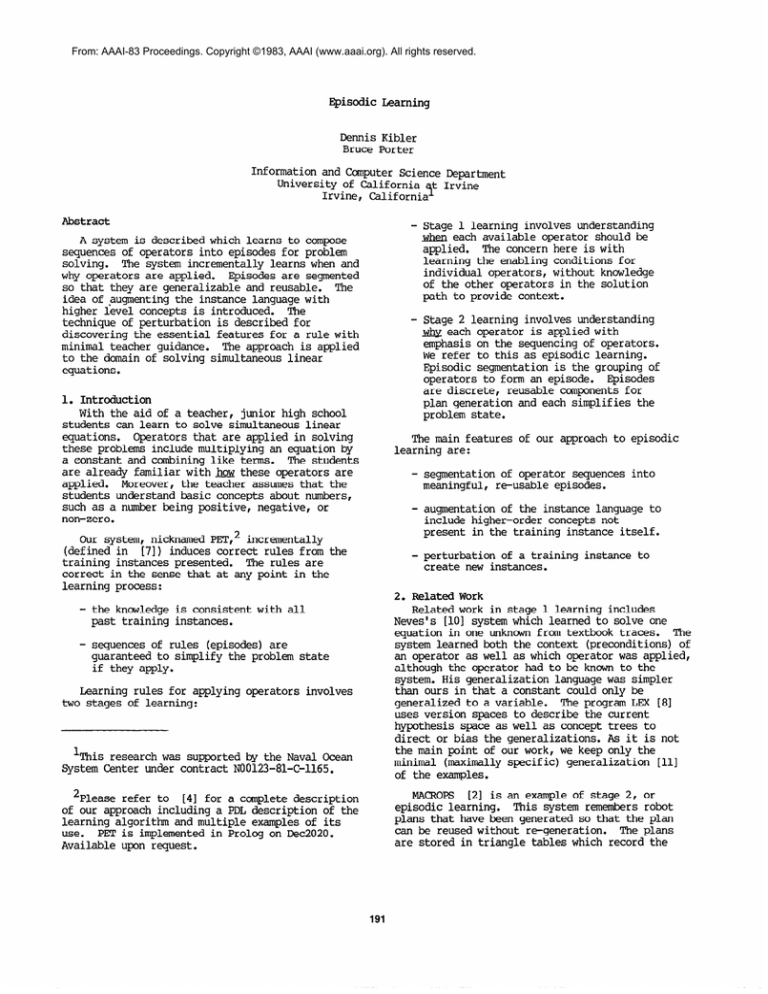

4.2. Generalization

Language

FollowingMitchell [81 and Michalski[61 we

have concepttrees for integers,equationlabels,

and variables(figure4-l). Basicallywe are

using the typed variablesof Michalski[61.

While effectivein learningplans, MACROPShas

difficultyapplyingits acquiredknowledge [l].

The centralproblem is that the operatorsin a

MACROPSplan are not segmentedinto meaningful

sequences. Any sequenceof operatorscan be

extractedfrom the triangletable and reused as a

macro operator. A sequenceof lengthN defines

N(N-1)/2macros. However,few of these sequences

are useful. MACROPSoffers no assistancein

selectingthe useful sequencesfrom a plan. If

sequencesare not extractedfrom the triangle

table then the entire plan must be consideredan

episode. This resultsin a large collectionof

opaque,single-purpose,

macro operators.

Branchingwithin an episode is made impossible.

In either case, combinatorialexplosignmakes

planningwith the macros impractical.

integer

/

\

non-zero

zero

/

\

\

positive negative

0

/I\

/I

1 2 3 ..e -1 -2 ...

Figure 4-l:

b

variable

/\

x Y

a

Concepttrees

We permit generalizations

by 1) deleting

conditions,2) replacingconstantsby variables

(typed),and 3) climbingtree generalization.

Disjunctivegeneralizationis allowedby adding

additionalrules.This coversall the

generalizationrules discussedby Michalski 161

except for closed intervalgeneralization.
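Climbing tree generalization over the integer tree of figure 4-1 can be sketched as follows. This is a minimal illustration in Python, not PET's Prolog implementation; the names `PARENT`, `concept_of`, `path_to_root`, and `generalize` are hypothetical.

```python
# Parent links for the integer concept tree of Figure 4-1.
PARENT = {
    "positive": "non-zero",
    "negative": "non-zero",
    "non-zero": "integer",
    "zero": "integer",
}


def concept_of(n):
    """Lowest interior node of the tree that covers the integer n."""
    if n > 0:
        return "positive"
    if n < 0:
        return "negative"
    return "zero"


def path_to_root(concept):
    """Concepts from `concept` up to the root, most specific first."""
    path = [concept]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def generalize(m, n):
    """Minimal (maximally specific) generalization of two integer constants."""
    if m == n:
        return m  # identical constants stay as they are
    covers_n = set(path_to_root(concept_of(n)))
    # Climb from m's concept until reaching a node that also covers n.
    for concept in path_to_root(concept_of(m)):
        if concept in covers_n:
            return concept
    raise AssertionError("unreachable: the root 'integer' covers every value")


print(generalize(3, 4))   # positive
print(generalize(2, -5))  # non-zero
print(generalize(2, 0))   # integer
```

Returning the first common ancestor keeps the generalization maximally specific, which matches the choice stated in the related-work discussion of keeping only the minimal generalization of the examples.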

3. Operators for Solving Linear Equations

The operators applicable to solving simultaneous linear equations are described in the following table:

| Operator | Semantics |
|---|---|
| combinex(Eq) | Combine x-terms in Eq. |
| combiney(Eq) | Combine y-terms in Eq. |
| combinec(Eq) | Combine constant terms in Eq. |
| deletezero(Eq) | Delete term with 0 coeff. or 0 constant from Eq. |
| sub(Eq1,Eq2) | Replace Eq2 by the result of subtracting Eq1 from Eq2 |
| add(Eq1,Eq2) | Replace Eq2 by the result of adding Eq1 and Eq2 |
| mult(Eq,N) | Replace Eq by the result of multiplying Eq by N |

4. Description Languages

4.1. Instance Language

The instance language serves as "internal form" for training instances. We adopt a relational description of each equation, so the training instance:

    a: 2x-5y=-1
    b: 3x+4y=10

is stored as:

    {term(a,2*x), term(a,-5*y), term(a,1),
     term(b,3*x), term(b,4*y), term(b,-10)}

where a, b are equation labels and x, y are variables in the instance language.

4.3. Rule Language

Knowledge is encoded in rules which suggest operators to apply. Rules are of the form:

    <score> -- <bag of terms expressed in generalization language> => <operator>

(The score of a rule is described in section 6.2.) PET does not learn "negative" rules to prune the search tree, as in [3].

5. Perturbation

Perturbation is a technique for stage 1 learning which enables a learning system to discover the essential features of a rule with minimal teacher involvement. A perturbation of a training instance is created by:

- deleting a feature of the instance to determine whether its presence is essential.

- if a feature is essential, modifying it slightly to determine if it can be generalized. Perturbation operators, which are added to the concept tree used for generalization, make these minor modifications.

For example, given the problem (a) 2x+3y=7 (b) 2x+3x-5y=5, the advice to combinex(b), and an empty rule base, PET first describes the rule as:

    {term(a,2*x), term(a,3*y), term(a,-7),
     term(b,2*x), term(b,3*x), term(b,-5*y),
     term(b,-5)} => combinex(b).

³It should be recognized that MACROPS was designed to control a physical robot, not a simulation. For this reason, the designers thought it important to permit the planner to skip ahead in a plan if the situation permits or to repeat a step in a plan if the operation failed due to physical difficulties.

Now PET perturbs the instance by modifying each of the coefficients individually. This is done by zeroing, incrementing and decrementing each coefficient. Some of the instances created by perturbation are:


    (i)   3y=7
          2x+3x-5y=5

    (ii)  2x+3y=7
          3x-5y=5

    (iii) 2x+3y=7
          2x+4x-5y=5

Since combinex(b) is effective in example i, PET generalizes (minimally) its current rule conditions with this example yielding the new rule:

    {term(a,3*y), term(a,-7),
     term(b,2*x), term(b,3*x), term(b,-5*y),
     term(b,-5)} => combinex(b).

The major effect is to delete the condition on the x-term of equation (a).

The operator is not effective for example ii, and the negative information is (in the current system) unused. Generalizing with example iii, the rule becomes:

    {term(a,3*y), term(a,-7),
     term(b,2*x), term(b,pos(N)*x), term(b,-5*y),
     term(b,-5)} => combinex(b).

And after all positive instances of the operator combinex(b) have been generated by the perturbation technique and generalized, the rule is formed:

    {term(b,pos(N)*x),
     term(b,pos(M)*x)}
    => combinex(b).

Essentially, perturbation is a technique for creating near-examples and near-misses [13] with minimal teacher involvement upon which standard generalization techniques can be applied. Mitchell's LEX system [9] uses a similar method for creating training instances. Note that in our technique we perturb an instance and try the same operator that worked before. The perturbation is used to guide the generalization process. In LEX, perturbation is used to generate new, possibly solvable, problems. Heuristics are needed to select appropriate problems as the cost of applying the problem solver is high. See [5] for more detail on our perturbation technique.

PET understands⁴ two reasons for selecting an operator:

1. By applying the operator, the problem state is simplified. In the domain of algebra problems, a state is simplified if the number of terms in the equations is reduced.

2. By applying the operator, the preconditions of an existing rule are satisfied. The rule being formed for the operator is then loosely linked with the rule that the operator enables. If more than one rule is enabled, then multiple branches through the episode are allowed.

PET adds a rule to the rulebase when the purpose of the rule's action (the "why" component of the operator) is understood. The first operators for which rules can be learned are those which simplify the problem state, such as combining like terms. Any operators applied before combine cannot be understood and PET must "bear with" the teacher. After rules are formed for the combine operators, subtract can be learned. For instance, sub(a,b) applied to:

    a: 2x+3y=5
    b: 2x-y=1

yields:

    a: 2x+3y=5
    b: 2x-2x-1y-3y=1-5

Now PET can learn sub(a,b) for reason (2) above: a rule which is already understood (for a combine operator) is enabled by the subtraction. An episode can be formed connecting the rules for sub(a,b) and combine(b).
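The perturbation step of Section 5 (zeroing, incrementing and decrementing each coefficient, then retrying the same operator) can be sketched as below. This is an illustrative Python sketch, not PET's code. The tuple encoding of terms, the function names, and the effectiveness test for combinex are assumptions made for the example; a zeroed coefficient is treated as deleting the term, as in perturbed instance (i).

```python
def perturbations(instance):
    """Yield ((kind, index), new_instance) for every single-coefficient change."""
    for i, (eq, coeff, var) in enumerate(instance):
        for kind, new in (("zero", 0), ("inc", coeff + 1), ("dec", coeff - 1)):
            before, after = instance[:i], instance[i + 1:]
            if new == 0:
                changed = before + after  # a zero coefficient removes the term
            else:
                changed = before + [(eq, new, var)] + after
            yield (kind, i), changed


def combinex_effective(instance, eq):
    """Assumed test: combinex(eq) reduces the term count only if eq has two or more x-terms."""
    return sum(1 for e, _, v in instance if e == eq and v == "x") >= 2


# (a) 2x+3y=7  (b) 2x+3x-5y=5, constants moved to the left as in the instance language.
instance = [("a", 2, "x"), ("a", 3, "y"), ("a", -7, ""),
            ("b", 2, "x"), ("b", 3, "x"), ("b", -5, "y"), ("b", -5, "")]

results = list(perturbations(instance))
positives = [key for key, inst in results if combinex_effective(inst, "b")]
negatives = [key for key, inst in results if not combinex_effective(inst, "b")]
# Terms whose deletion breaks the operator are the essential features.
essential = [instance[i] for kind, i in negatives if kind == "zero"]
print(essential)  # [('b', 2, 'x'), ('b', 3, 'x')]
```

Only the two x-terms of equation b turn out to be essential, which agrees with the final rule for combinex(b) keeping just those two terms. Generalizing the surviving coefficients over the positive instances would then be done with the concept tree.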

6. Episodic Learning

6.1. Importance for Learning

As we noted in the MACROPS use of operator sequences, unless the system can select meaningfully useful sequences from the set of candidate sequences, combinatorial explosion makes reuse of generalized plans infeasible. We define an episode to be a sequence of rules which, when applied, simplifies a problem state. Our episodes are "loosely packaged" to allow branching. Rather than storing an entire plan for reaching a goal state from the start state, we segment the solution path into small, re-usable, generalizable episodes, each accomplishing a simplification of the problem.
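To make "simplifies a problem state" concrete, the sketch below counts terms while a sequence of operators is applied to a: 2x+3y=5, b: 2x+4y=6. It is a hedged Python illustration: the state encoding (lists of coefficient/variable pairs with constants moved to the left-hand side) and all function names are assumptions, and the operators follow the semantics given in the operator table.

```python
def sub(state, e1, e2):
    """sub(e1, e2): replace e2 by e2 - e1, leaving like terms uncombined."""
    new = dict(state)
    new[e2] = state[e2] + [(-c, v) for c, v in state[e1]]
    return new


def combine(state, eq, var):
    """combinex / combiney / combinec: merge all terms of eq in `var` ('' = constant)."""
    total = sum(c for c, v in state[eq] if v == var)
    new = dict(state)
    new[eq] = [(c, v) for c, v in state[eq] if v != var] + [(total, var)]
    return new


def deletezero(state, eq):
    """Delete terms of eq with a zero coefficient or zero constant."""
    new = dict(state)
    new[eq] = [(c, v) for c, v in state[eq] if c != 0]
    return new


def term_count(state):
    """Number of terms in all equations; fewer terms means a simpler state."""
    return sum(len(terms) for terms in state.values())


# a: 2x+3y=5, b: 2x+4y=6, constants moved to the left-hand side.
state = {"a": [(2, "x"), (3, "y"), (-5, "")],
         "b": [(2, "x"), (4, "y"), (-6, "")]}
steps = [lambda s: sub(s, "a", "b"),
         lambda s: combine(s, "b", "x"),
         lambda s: combine(s, "b", "y"),
         lambda s: combine(s, "b", ""),
         lambda s: deletezero(s, "b")]
counts = [term_count(state)]
for step in steps:
    state = step(state)
    counts.append(term_count(state))
print(counts)      # [6, 9, 8, 7, 6, 5]
print(state["b"])  # [(1, 'y'), (-1, '')]
```

The subtraction alone raises the count from six to nine, so it cannot be justified by simplification. Each later step lowers the count, and the sequence as a whole ends below the starting count with b reduced to y-1=0.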

6.2. Scoring Operators

A simple scoring scheme connects rules into episodes and resolves conflicts when more than one rule is enabled. A natural scheme is to score each rule by its position in an episode. The rules for the combine operators are given a score of 0. The rule for subtract, which enables a combine operator, is given a score of 1 (0+1). Intuitively, the score is the length of the episode before something good happens (i.e. the equations get simplified). When selecting a rule, PET selects the one with the lowest score among those enabled. Ties are resolved arbitrarily.
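The scoring scheme can be sketched as follows. This is a minimal Python illustration under stated assumptions: the `Rule` class, the predicate-style `enabled` test, and the state flags are hypothetical, and taking the minimum when a new rule enables several existing rules is an assumption, since the text only gives the single-rule case.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Rule:
    name: str
    score: int
    enabled: Callable[[dict], bool]  # hypothetical precondition test on a state


def new_rule_score(simplifies: bool, enabled_rule_scores: List[int]) -> Optional[int]:
    """Score for a rule being formed, or None if its purpose is not understood."""
    if simplifies:
        return 0  # the operator simplifies the state directly
    if enabled_rule_scores:
        # Assumption: link to the enabled rule closest to a simplification.
        return 1 + min(enabled_rule_scores)
    return None  # neither reason holds, so no rule is formed


def select_rule(rules: List[Rule], state: dict) -> Optional[Rule]:
    """Pick the enabled rule with the lowest score; min() breaks ties by list order."""
    candidates = [r for r in rules if r.enabled(state)]
    return min(candidates, key=lambda r: r.score) if candidates else None


rules = [
    Rule("mult", 2, lambda s: True),
    Rule("sub", 1, lambda s: s["same_x_coefficient"]),
    Rule("combinex", 0, lambda s: s["two_x_terms"]),
]
state = {"same_x_coefficient": True, "two_x_terms": False}
print(select_rule(rules, state).name)  # sub
```

With these scores, a rule for subtract that enables a combine rule gets 0+1 = 1, and a rule for multiply that enables subtract gets 2, as in the examples of Section 8.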

⁴"Understand" is used here to mean "know-how" encoded in production rules. We do not mean to suggest a deep model of understanding which might include causality and analogy.

Episodic, or stage 2, learning is concerned with these sequences of rules and their connections. These sequences are learned incrementally. Learning a rule for an operator depends on an understanding of why the operator is applied.


7. Augmentation

A description in the instance language is basically a translation of a training instance. This description is in an instance language more appropriate to computation than the surface language used to input the instance. In complex domains, more knowledge needs to be represented than is captured in a literal translation of a training instance into the instance language.

In the domain of algebra problems, augmentation serves to relate terms in the instance language. For example, a relevant relation between coefficients is productof(N,M,P) (the product of N and M is P). Augmentation of the instance language is necessary when the terms or values necessary for an operation (the RHS of a rule) are not present in the pre-conditions for the operation (the LHS of the rule). For example, consider the training instance:

    a: 6x-15y=-3
    b: 3x+4y=10

with the teacher advice to apply mult(b,2). The problem is that the rule formed by PET will have a 2 on the RHS (for the operation) but no 2 on the LHS. In this case, we say that the rule is not predictive of the operator.

An augmentation of the instance language is needed to relate the 2 on the RHS with some term on the LHS. In this case, the additional knowledge needed is the 3-ary predicate productof, specifically productof(2,3,6). Now the rule to cover the training instance can be formed (after perturbation and augmentation):

    {term(a,6*x), term(b,3*x),
     productof(2,3,6)}
    => mult(b,2)
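The predictiveness test and the search for an augmenting concept can be sketched as below. This is an illustrative Python sketch, not PET's implementation. The concept list uses the three concepts named in the paper (sumof, productof, squareof), while the function names and the flat list of LHS constants are assumptions, as is the choice to place the RHS constant in the first argument of each concept.

```python
# Concepts of the augmentation search space, tried in list order.
CONCEPTS = [
    ("sumof", 2, lambda l, m: l + m),      # sumof(L,M,N): L + M = N
    ("productof", 2, lambda l, m: l * m),  # productof(L,M,N): L * M = N
    ("squareof", 1, lambda m: m * m),      # squareof(M,N): M * M = N
]


def is_predictive(lhs_constants, rhs_constants):
    """A rule is predictive when every RHS constant already occurs on the LHS."""
    return set(rhs_constants) <= set(lhs_constants)


def augment(lhs_constants, rhs_constant):
    """Return the first concept instance tying rhs_constant to LHS constants, else None."""
    for name, arity, fn in CONCEPTS:
        if arity == 1:
            n = fn(rhs_constant)
            if n in lhs_constants:
                return (name, rhs_constant, n)
        else:
            for m in lhs_constants:
                n = fn(rhs_constant, m)
                if n in lhs_constants:
                    return (name, rhs_constant, m, n)
    return None


lhs = [6, 3]  # coefficients kept after perturbation: term(a,6*x), term(b,3*x)
print(is_predictive(lhs, [2]))  # False
print(augment(lhs, 2))          # ('productof', 2, 3, 6)
```

Running the search on the coefficients that survive perturbation matters here: on the full instance the constants 4 and 6 would also satisfy sumof(2,4,6), which illustrates the over-specialization risk of pulling in a concept too early.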

Concepts in the augmentation language form a second-order search space (figure 7-1) for generalizing an operation. The space consists of a (partial) list of concepts that a student might rely on for understanding relations between numbers. When a predictive rule cannot be found in the first-order search space then PET tries to form a rule using the augmentation as well. Concepts are pulled from the list and added to a developing rule. If the concept makes the rule predictive, then it is retained. Otherwise, it is removed and another concept is tried. If no predictive rule can be found then PET ignores the training instance.

    sumof(L,M,N)      (sum of L and M is N)
    productof(L,M,N)  (product of L and M is N)
    squareof(M,N)     (square of M is N)

Figure 7-1: augmentation search space

Vere [12] has also addressed the problem of learning in the presence of "background information." For example, learning a general rule for a straight in a poker hand requires knowledge of the next number in sequence. This is considered background to the knowledge in the poker domain. Vere describes an "association chain" which links together each term in a rule. If a term in the rule is not linked in the chain (analogous to our test for predictiveness), then more background information must be "pulled in" until it is associated.

Augmentation is similar to selecting background knowledge. One problem with both approaches is determining how much background knowledge to incorporate. Incorporating too little knowledge, which results in an over-generalized rule, can be detected by an association chain violation or, in PET, by a non-predictive rule. However, detecting when too much knowledge has been pulled in is difficult. In this case, the rule formed will be over-specialized. We overcome this problem (to a large extent) by perturbation. Vere relies solely on forming a disjunction of rules (each overly specialized) for the correct generalization.

Vere allows only one concept in the background knowledge. This further simplifies the task of knowing how much knowledge to pull in. However, as the complexity of problem domains increases, more background knowledge must be brought to bear. Our augmentation addresses some of the problems of managing this knowledge.

8. Examples of System Performance

This section discusses highlights from PET's episodic learning for problem solving in the domain of linear equations.

8.1. Example 1 - Learning Combine

The rulebase is initially empty and, as PET learns, rules are added, generalized, and supplanted. PET requests advice whenever the current rules do not apply to the problem state. The teacher presents the training instance:

    a: 2x+3y=5
    b: 2x+4y=6

with the advice sub(a,b). PET applies the operator which yields:

    a: 2x+3y=5
    b: 2x-2x+4y-3y=6-5

PET must understand why an operator is useful before a rule is formed. The operator failed to simplify the equations (in fact the number of terms in the equations went from six to nine) and did not enable any other rules (since the rulebase is empty). PET cannot form a rule and waits for something understandable to happen.

The teacher now suggests that combinex(b) be applied, yielding:

    a: 2x+3y=5
    b: 0x+4y-3y=6-5

The number of terms is reduced, so PET hypothesizes a rule. Perturbation tests each term in the equations to determine which are essential and which can be generalized. PET forms the rule:

    {term(b,pos(N)*x),
     term(b,neg(M)*x)}
    => combinex(b)

which means:

given a problem state, whenever equation b contains an x-term with a positive coefficient and an x-term with a negative coefficient, then combine the two terms.

PET is unable to apply current knowledge (i.e. the rule for combinex(b)) to the current problem state so the teacher suggests combiney(b) which yields:

    a: 2x+3y=5
    b: 0x+1y=6-5

Stage 1 learning produces the rule:

    {term(b,pos(N)*y),
     term(b,neg(M)*y)}
    => combiney(b)

Learning rules for the operators combinec(b) and deletezero are similar and will be assumed to be completed.

Stage 2 learning of the combine operators involves relating them to episodes, or sequences of rules. Since combine simplifies a problem state immediately, it is given a score of zero. The current rulelist (with scores) is:

    0 -- {term(b,pos(N)*x), term(b,neg(M)*x)} => combinex(b)
    0 -- {term(b,pos(N)*y), term(b,neg(M)*y)} => combiney(b)
    0 -- {term(b,pos(N)), term(b,neg(M))} => combinec(b)
    0 -- {term(b,0*x)} => deletezero
    0 -- {term(b,0)} => deletezero

With further training instances for the combine operators, PET forms the rules:

    0 -- {term(eqn(L),int(N)*x), term(eqn(L),int(M)*x)} => combinex(eqn(L))
    0 -- {term(eqn(L),int(N)*y), term(eqn(L),int(M)*y)} => combiney(eqn(L))
    0 -- {term(eqn(L),int(N)), term(eqn(L),int(M))} => combinec(eqn(L))
    0 -- {term(eqn(L),0*var(X))} => deletezero(eqn(L))
    0 -- {term(eqn(L),0)} => deletezero(eqn(L))

⁵deletezero(b) could also be suggested, but we continue with a combine operator for continuity.

8.2. Example 2 - Learning Subtract

Now that the combine operators are partially learned, PET can begin to learn subtract. Since our episodes are loosely packaged, there is not a problem with further generalizing the rules for the combine operators once subtract is affiliated with them. Learning for each operator can proceed independently.

Now the training instance above which PET had to ignore can be understood. The instance with teacher advice sub(a,b) is:

    a: 2x+3y=5
    b: 2x+4y=6

The operator enables the partially-learned rule for combinex(b), so PET employs perturbation to form the rule:

    {term(a,2*x), term(b,2*x),
     term(b,pos(N)*y)} => sub(a,b)

The rule is assigned a score of 1, loosely linking it with the rules for combine and deletezero.

Presented with further training instances, PET induces the rule:

    {term(eqn(L1),nonzero(N)*var(X)),
     term(eqn(L2),nonzero(M)*var(X)),
     term(eqn(L2),nonzero(O)*var(Y))}
    => sub(eqn(L1),eqn(L2))

This rule cannot be generalized with the current rulelist and is simply added.

8.3. Example 3 - Learning Multiply

The teacher presents PET with an example of multiply with the training instance:

    a: 3x+4y=7
    b: 6x+2y=8

No rule in the knowledge base applies, so PET requests advice. mult(a,2) is suggested which yields:

    a: 6x+8y=14
    b: 6x+2y=8

Now sub(a,b) applies so the rule for mult(a,2) can be learned. Mult(a,2) is given a score of 2 (one more than the score of the rule enabled). After perturbation PET forms the rule:

    {term(a,3*x), term(b,6*x),
     term(b,pos(N)*y)} => mult(a,2)

At this point PET realizes that it has over-generalized since the rule is non-predictive (the 2 on the RHS does not occur on the LHS). PET augments the instance description and forms the candidate rule:

    {term(a,3*x), term(b,6*x), term(b,pos(N)*y),
     productof(2,3,6)}
    => mult(a,2)

After additional examples, PET forms the correct rule:

    2 -- {term(a,pos(K)*x), term(b,pos(L)*x),
          term(b,pos(M)*y),
          productof(pos(N),pos(K),pos(L))}
    => mult(a,pos(N))

which supplants the more specific rule in the rulebase.

8.4. Example 4 - Learning "Cross Multiply"

The teacher presents the training instance:

    a: 2x+6y=8
    b: 3x+4y=7

Since no rule is enabled, the teacher advice to apply mult(a,3) yields:

    a: 6x+18y=24
    b: 3x+4y=7

Since mult(b,2) is enabled, mult(a,3) can be learned. After perturbation, PET acquires the rule:

    {term(a,2*x), term(b,3*x), term(b,pos(N)*y)}
    => mult(a,3)

This rule is given a score of 3 since it enables a rule with score 2. The rule will be generalized (with subsequent training instances) to:

    3 -- {term(eqn(I),nonzero(N)*var(X)),
          term(eqn(J),nonzero(M)*var(X)),
          term(eqn(J),nonzero(L)*var(Y))}
    => mult(eqn(I),nonzero(M))

9. Limitations and Extensions

As with most learning programs we require that the concept to be learned be representable in our generalization language. In addition PET has to be supplied with some coarse notion of when an operator has been effective in simplifying the current state. Furthermore we assume that the teacher gives only appropriate advice and there is no "noise."

Extensions that we are considering are:

- Learning from negative instances as well as positive ones.

- Improving the use of augmentation by introducing structured concepts. These would permit climbing tree generalizations for this second-order knowledge. Another improvement would be allowing multiple concepts to be pulled into a rule from the augmentation search space. This requires a requisite change in the test for predictiveness.

- Applying the theory to learning operators in other domains. Integration problems have been attempted [8]. We would like to try our approach in the calculus problem domain.

10. Conclusions

A system, PET, has been described which learns sequences of rules, or episodes, for problem solving. The learning is incremental and thorough. The system learns when and why operators are applied. Although PET starts with an extremely general and coarse notion of why an operator should be applied, its representation becomes increasingly fine and complete as it forms rules from examples. PET detects when rules are non-predictive and augments the generalization language with higher level concepts. Due to the power of perturbation, our system can learn episodes with minimal teacher interaction. The episodes are segmented into discrete, re-usable segments, each accomplishing a recognizable simplification of the problem state. The approach is shown effective in the domain of solving simultaneous linear equations. We suspect the technique will also work for solving problems in symbolic integration and differential equations.

REFERENCES

1. Carbonell, J.G. Learning by Analogy: Formulating and Generalizing Plans from Past Experience. In Michalski, R.S., Carbonell, J.G., Mitchell, T.M., Ed., Machine Learning, Tioga Publishing, 1983.

2. Fikes, R.E. and Nilsson, N.J. STRIPS: A new approach to the application of theorem proving to problem solving. Artificial Intelligence 2 (1971), 189-208.

3. Kibler, D.F. and Morris, P.H. Don't be Stupid. IJCAI (1981), 345-347.

4. Kibler, D.F. and Porter, B.W. Episodic Learning. 194, University of California, Irvine, 1983.

5. Kibler, D.F. and Porter, B.W. Perturbation: A Means for Guiding Generalization. IJCAI (1983).

6. Michalski, R.S., Dietterich, T.G. Learning and Generalization of Characteristic Descriptions: Evaluation Criteria and Comparative Review of Selected Methods. IJCAI 6 (1979), 223-231.

7. Michalski, R.S., Carbonell, J.G., Mitchell, T.M. Machine Learning. Tioga Publishing, 1983.

8. Mitchell, T.M., Utgoff, P.E., Nudel, B., and Banerji, R. Learning Problem-Solving Heuristics Through Practice. IJCAI 7 (1981), 127-134.

9. Mitchell, T.M., Utgoff, P.E., Nudel, B., and Banerji, R. Learning by Experimentation: Acquiring and Refining Problem-Solving Heuristics. In Michalski, R.S., Carbonell, J.G., Mitchell, T.M., Ed., Machine Learning, Tioga Publishing, 1983.

10. Neves, D.M. A computer program that learns algebraic procedures by examining examples and working problems in a textbook. CSCSI (1978), 191-195.

11. Vere, S.A. Induction of concepts in the predicate calculus. IJCAI 4 (1975), 281-287.

12. Vere, S.A. Induction of Relational Productions in the Presence of Background Information. IJCAI 5 (1977), 349-355.

13. Winston, P.H. Learning structural descriptions from examples. In Winston, P.H., Ed., The Psychology of Computer Vision, McGraw-Hill, 1975.