Existence and Finiteness Conditions for Risk-Sensitive Planning: First Results

Yaxin Liu (yxliu@cc.gatech.edu)
College of Computing, Georgia Institute of Technology

Sven Koenig (skoenig@usc.edu)
Computer Science Department, University of Southern California

Abstract

Decision-theoretic planning with risk-sensitive planning objectives is important for building autonomous agents or decision-support agents for real-world applications. However, this line of research has been largely ignored in the artificial intelligence and operations research communities since planning with risk-sensitive planning objectives is much more complex than planning with risk-neutral planning objectives. To remedy this situation, we develop conditions that guarantee the existence and finiteness of the expected utilities of the total plan-execution reward for risk-sensitive planning with totally observable Markov decision process models. In case of Markov decision process models with both positive and negative rewards, our results hold for stationary policies only, but we conjecture that they can be generalized to hold for all policies.

Introduction

Decision-theoretic planning is important since real-world applications need to cope with uncertainty. Many decision-theoretic planners use totally observable Markov decision process (MDP) models from operations research (Puterman 1994)

to represent planning problems under uncertainty. However,

most of them minimize the expected total plan-execution cost

or, synonymously, maximize the expected total reward (MER).

This planning objective and similar simplistic planning objectives often do not take the preferences of human decision makers sufficiently into account, for example, their risk attitudes in

planning domains with huge wins or losses of money, equipment or human life. This means that they are not well suited

for real-world planning domains such as space applications

(Zilberstein et al. 2002), environmental applications (Blythe

1997), and business applications (Goodwin, Akkiraju, & Wu

2002). In this paper, we provide a first step towards a comprehensive foundation of risk-sensitive planning. In particular,

we develop sets of conditions that guarantee the existence and

finiteness of the expected utilities when maximizing the expected utility (MEU) of the total reward for risk-sensitive planning with totally observable Markov decision process models

and non-linear utility functions.

Risk Attitudes and Utility Theory

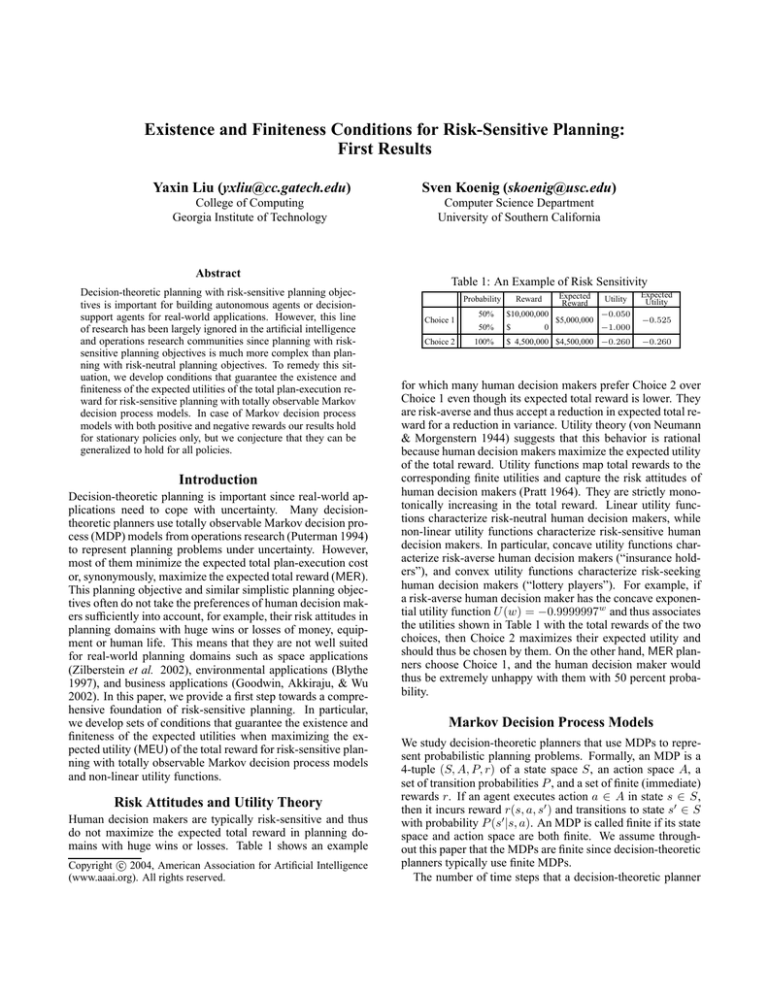

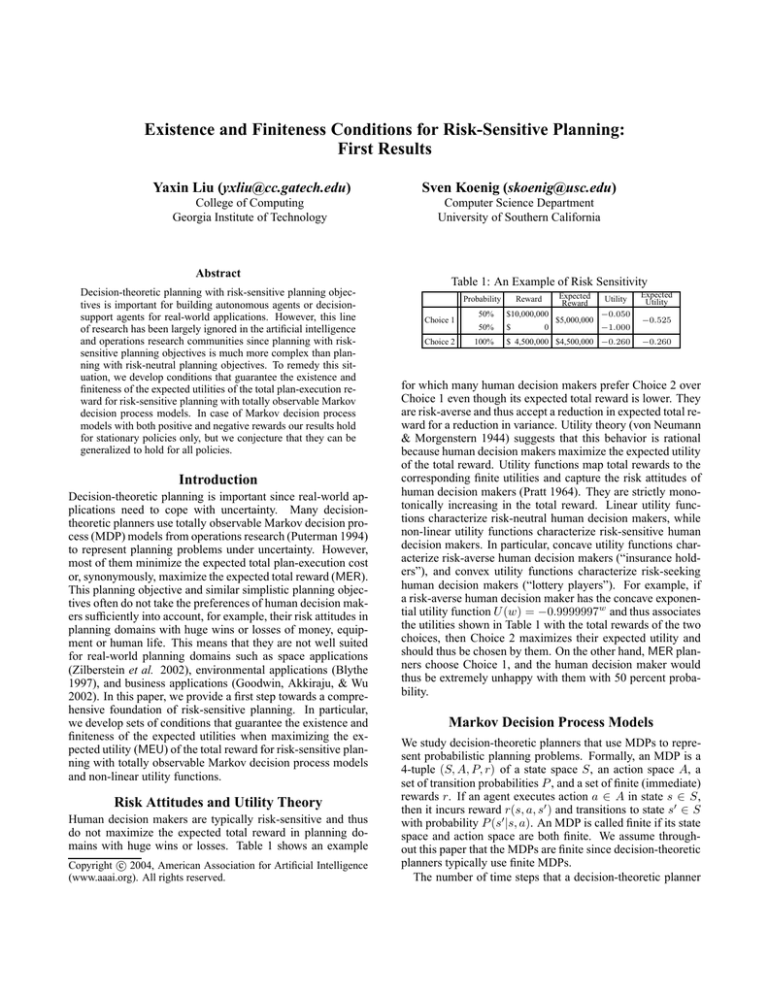

Copyright © 2004, American Association for Artificial Intelligence (www.aaai.org). All rights reserved.

Table 1: An Example of Risk Sensitivity

| Choice | Probability | Reward | Expected Reward | Utility | Expected Utility |
|---|---|---|---|---|---|
| Choice 1 | 50% | $10,000,000 | $5,000,000 | −0.050 | −0.525 |
| | 50% | $0 | | −1.000 | |
| Choice 2 | 100% | $4,500,000 | $4,500,000 | −0.260 | −0.260 |

Human decision makers are typically risk-sensitive and thus do not maximize the expected total reward in planning domains with huge wins or losses. Table 1 shows an example for which many human decision makers prefer Choice 2 over

Choice 1 even though its expected total reward is lower. They

are risk-averse and thus accept a reduction in expected total reward for a reduction in variance. Utility theory (von Neumann

& Morgenstern 1944) suggests that this behavior is rational

because human decision makers maximize the expected utility

of the total reward. Utility functions map total rewards to the

corresponding finite utilities and capture the risk attitudes of

human decision makers (Pratt 1964). They are strictly monotonically increasing in the total reward. Linear utility functions characterize risk-neutral human decision makers, while

non-linear utility functions characterize risk-sensitive human

decision makers. In particular, concave utility functions characterize risk-averse human decision makers (“insurance holders”), and convex utility functions characterize risk-seeking

human decision makers (“lottery players”). For example, if

a risk-averse human decision maker has the concave exponential utility function $U(w) = -0.9999997^w$ and thus associates

the utilities shown in Table 1 with the total rewards of the two

choices, then Choice 2 maximizes their expected utility and

should thus be chosen by them. On the other hand, MER planners choose Choice 1, and the human decision maker would

thus be extremely unhappy with them with 50 percent probability.
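The comparison in Table 1 can be reproduced with a few lines of Python. This is only an illustrative sketch: the helper names `utility` and `expected` and the list-of-pairs encoding of the two choices are assumptions made here, not part of the paper.

```python
# Utility function from the text: U(w) = -0.9999997**w (concave, risk-averse).
def utility(w: float) -> float:
    return -(0.9999997 ** w)


def expected(lottery, f=lambda w: w):
    """Expectation of f(reward) over a list of (probability, reward) pairs."""
    return sum(p * f(w) for p, w in lottery)


# The two choices of Table 1 as (probability, reward) pairs.
choice_1 = [(0.5, 10_000_000), (0.5, 0)]
choice_2 = [(1.0, 4_500_000)]

for name, lottery in [("Choice 1", choice_1), ("Choice 2", choice_2)]:
    # Print the expected reward (MER criterion) and expected utility (MEU criterion).
    print(name, expected(lottery), round(expected(lottery, utility), 3))
```

Under the MER criterion the first number decides and Choice 1 wins; under the MEU criterion the second number decides and Choice 2 wins.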

Markov Decision Process Models

We study decision-theoretic planners that use MDPs to represent probabilistic planning problems. Formally, an MDP is a

4-tuple $(S, A, P, r)$ of a state space $S$, an action space $A$, a set of transition probabilities $P$, and a set of finite (immediate) rewards $r$. If an agent executes action $a \in A$ in state $s \in S$, then it incurs reward $r(s, a, s')$ and transitions to state $s' \in S$ with probability $P(s' \mid s, a)$. An MDP is called finite if its state

space and action space are both finite. We assume throughout this paper that the MDPs are finite since decision-theoretic

planners typically use finite MDPs.

The number of time steps that a decision-theoretic planner

plans for is called its (planning) horizon. A history at time step $t$ is the sequence $h_t = (s_0, a_0, \ldots, s_{t-1}, a_{t-1}, s_t)$ of states and actions from the initial state to the current state. The set of all histories at time step $t$ is $H_t = (S \times A)^t \times S$. A trajectory is an element of $H_\infty$ for infinite horizons and $H_T$ for finite horizons, where $T \ge 1$ denotes the last time step of the finite

horizon.

Decision-theoretic planners determine a decision rule for

every time step within the horizon. A decision rule determines which action the agent should execute in its current

state. A deterministic history-dependent (HD) decision rule at time step $t$ is a mapping $d_t : H_t \to A$. A randomized history-dependent (HR) decision rule at time step $t$ is a mapping $d_t : H_t \to \mathcal{P}(A)$, where $\mathcal{P}(A)$ is the set of probability distributions over $A$. Markovian decision rules are history-dependent decision rules whose actions depend only on the current state rather than the complete history at the current time step. A deterministic Markovian (MD) decision rule at time step $t$ is a mapping $d_t : S \to A$. A randomized Markovian (MR) decision rule at time step $t$ is a mapping $d_t : S \to \mathcal{P}(A)$. A policy $\pi$ is a sequence of decision rules $d_t$, one for every time step $t$ within the horizon. We use $\Pi^K$ to denote the set of all policies whose decision rules all belong to the same class, where $K \in \{\mathrm{HR}, \mathrm{HD}, \mathrm{MR}, \mathrm{MD}\}$. The set of all possible policies $\Pi$ is the same as $\Pi^{\mathrm{HR}}$. Decision-theoretic planners typically determine stationary policies. A Markovian policy $\pi \in \Pi$ is stationary if $d_t = d$ for all time steps $t$, and we write $\pi(s) = d(s)$. We use $\Pi^{\mathrm{SD}}$ to denote the set of all deterministic stationary (SD) policies and $\Pi^{\mathrm{SR}}$ to denote the set of all randomized stationary (SR) policies.

The state transitions resulting from stationary policies are determined by Markov chains. A state of a Markov chain and

thus also a state of an MDP under a stationary policy is called

recurrent iff the expected number of time steps between visiting the state is finite. A recurrent class is a maximal set of

states that are recurrent and reachable from each other. These

concepts play an important role in the proofs of our results.

Planning Objectives

MEU planners determine policies that maximize the expected

utility of the total reward for a given utility function U . One

difference between the MER and MEU objective is that the

MEU objective can result in planning problems that are not

decomposable, which makes it impossible to use the divide-and-conquer principle (such as dynamic programming) to efficiently find policies that maximize the expected utility. Therefore, we need to re-examine the basic properties of decision-theoretic planning when switching from the MER to the MEU

objective.

For planning problems with finite horizons $T$, the expected utility of the total reward starting from $s \in S$ under $\pi \in \Pi$ is

$$v^\pi_{U,T}(s) = E^{s,\pi}\left[ U\left( \sum_{t=0}^{T-1} r_t \right) \right],$$

where the expectation $E^{s,\pi}$ is taken over all possible trajectories. The expected utilities exist (that is, are well-defined) under all policies and are bounded because the number of trajectories is finite for finite MDPs. MEU planners then need to determine the maximal expected utilities of the total reward $v^*_{U,T}(s) = \sup_{\pi \in \Pi} v^\pi_{U,T}(s)$ and a policy that achieves them. The maximal expected utilities exist and are finite because the expected utilities are bounded under all policies.

Figure 1: Example 1 (transitions labeled reward/probability. In state $s^1$, the top action is labeled −1/0.5 and the bottom action −2/0.5 on each of its two transitions, one back to $s^1$ and one to $s^2$; in state $s^2$, the only action is a self-loop labeled 0/1.0.)
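The finite-horizon definition can be evaluated directly by tracking the joint distribution of the current state and the total reward accumulated so far. The sketch below does this for a stationary deterministic policy. The dictionary encoding `MDP`, the action names `top`, `bottom` and `stay`, and the function name `finite_horizon_value` are assumptions introduced for illustration; the transition data is read off the labels of Figure 1.

```python
from collections import defaultdict

# Assumed encoding of the MDP in Figure 1:
# MDP[state][action] = list of (probability, reward, next_state).
MDP = {
    "s1": {
        "top": [(0.5, -1, "s1"), (0.5, -1, "s2")],
        "bottom": [(0.5, -2, "s1"), (0.5, -2, "s2")],
    },
    "s2": {"stay": [(1.0, 0, "s2")]},
}


def finite_horizon_value(mdp, policy, utility, start, horizon):
    """E[U(total reward over `horizon` steps)] under a stationary deterministic policy."""
    # dist maps (state, total reward so far) to the probability of that pair.
    dist = {(start, 0): 1.0}
    for _ in range(horizon):
        nxt = defaultdict(float)
        for (s, w), prob in dist.items():
            for p, r, s_next in mdp[s][policy[s]]:
                nxt[(s_next, w + r)] += prob * p
        dist = nxt
    # The utility is applied to the total reward, not to individual rewards.
    return sum(prob * utility(w) for (_, w), prob in dist.items())


policy_top = {"s1": "top", "s2": "stay"}
print(finite_horizon_value(MDP, policy_top, lambda w: w, "s1", 3))
print(finite_horizon_value(MDP, policy_top, lambda w: -(0.5 ** w), "s1", 3))
```

Carrying the accumulated reward alongside the state is what makes the computation work for non-linear utility functions, at the price of a distribution that can grow with the horizon.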

For planning problems with infinite horizons, the expected utility of the total reward starting from $s \in S$ under policy $\pi$ is

$$v^\pi_U(s) = \lim_{T \to \infty} v^\pi_{U,T}(s) = \lim_{T \to \infty} E^{s,\pi}\left[ U\left( \sum_{t=0}^{T-1} r_t \right) \right]. \tag{1}$$

The expected utilities exist iff the limit converges on the extended real line, that is, the limit results in a finite number, positive infinity or negative infinity. MEU planners then need to determine the maximal expected utilities of the total reward $v^*_U(s) = \sup_{\pi \in \Pi} v^\pi_U(s)$ and a policy that achieves them.

To simplify our terminology, we refer to the expected utilities $v^\pi_U(s)$ for all $s \in S$ as the values under policy $\pi \in \Pi$ and to the maximal expected utilities $v^*_U(s)$ for all $s \in S$ as the optimal values. A policy is optimal if its values equal the optimal values for all states. A policy is K-optimal if it is optimal and it is in the class of policies $\Pi^K$, where $K \in \{\mathrm{HR}, \mathrm{HD}, \mathrm{MR}, \mathrm{MD}, \mathrm{SR}, \mathrm{SD}\}$.

Discussion of Assumptions

So far, we have motivated why decision-theoretic planners

should maximize the values for non-linear utility functions.

The kinds of MDPs that decision-theoretic planners typically

use tend to have goal states that need to be reached (Boutilier,

Dean, & Hanks 1999). The MDP in Figure 1 gives an example. Its transitions are labeled with their rewards followed

by their probabilities. The rewards of the two actions in state

s1 are negative because they correspond to the costs of the

actions. State s2 is the goal state, in which only one action

can be executed, namely an action with zero reward whose

execution leaves the state unchanged. The optimal value of

a state then corresponds to the largest expected utility of the

plan-execution cost for reaching the goal state from the given

state. To achieve generality, however, we do not make any

assumptions in this paper about the structure of the MDPs or

their rewards. For example, we do not make any assumptions

about how the structure of the MDPs and their rewards encode

the goal states. Neither do we make any assumptions about

whether all of the rewards are positive, negative or zero. We

avoid such assumptions because MDPs can mix positive rewards (which model, for example, rewards for reaching a goal

state) and negative rewards (which model, for example, action

costs).

Figure 2: Example 2 (transitions labeled reward/probability. The only action in state $s^1$ leads to $s^2$ and is labeled +1/1.0; the only action in state $s^2$ leads back to $s^1$ and is labeled −1/1.0.)

The results of this paper would be trivial if we used discounting, that is, assumed that a reward obtained at some time step is worth only a fraction of the same reward obtained one time step earlier. Discounting is a way of modeling interest on investments. In our case, there is typically no way to invest resources and thus no reason to use discounting. This is fortunate because discounting makes it difficult to find optimal policies for the MEU objective even though it guarantees that the expected utilities exist and are finite (White 1987). For example, all optimal policies can

be non-stationary for the MEU objective if the utility function is exponential and discounting is used (Chung & Sobel

1987), which makes it very difficult to find an optimal policy. On the other hand, there always exists an SD-optimal

policy for the MEU objective if the utility function is exponential and discounting is not used (Ávila-Godoy 1999;

Cavazos-Cadena & Montes-de-Oca 2000). Thus, there is an

advantage to not using discounting for the MEU objective.

This advantage does not exist for the MER objective because

there always exists an SD-optimal policy for the MER objective whether discounting is used or not (Puterman 1994).

Existence and Finiteness Conditions

In this paper, we study conditions that guarantee that the values of all states exist and are finite for the MEU objective.

It is important that the optimal values exist since MEU

planners determine a policy that achieves the optimal values.

There are cases where the optimal values do not exist, as the

MDP in Figure 2 illustrates. An agent that starts in state s1

receives the following sequence of rewards for its only policy: +1, −1, +1, −1, . . . , and consequently the following sequence of total rewards: +1, 0, +1, 0, . . . , which oscillates.

Thus, the limit in Formula (1) does not exist for any utility

function under this policy, and the optimal value of state s1

does not exist either. A similar argument holds for state s2 as

well.

It is also important that the optimal values be finite. There

are cases where the optimal values are not finite. The MDP in

Figure 1 illustrates such a case and the problem that it poses

for MEU planners. The MDP has two SD policies. Policy π1

assigns the top action to state s1 , and policy π2 assigns the

bottom action to state $s^1$. The values of both states are the same under both policies and utility function $U(w) = -0.5^w$, namely

$$v^{\pi_1}_U(s^1) = \sum_{t=1}^{\infty} \left[ -0.5^{(-1)t} \cdot 0.5^t \right] = -\sum_{t=1}^{\infty} 1 = -\infty,$$

$$v^{\pi_2}_U(s^1) = \sum_{t=1}^{\infty} \left[ -0.5^{(-2)t} \cdot 0.5^t \right] = -\sum_{t=1}^{\infty} 2^t = -\infty,$$

and $v^{\pi_1}_U(s^2) = v^{\pi_2}_U(s^2) = -1$. Thus, the optimal value of state $s^1$ exists but is negative infinity. All trajectories have identical

probabilities under both policies, but the total reward and thus

also the utility of each trajectory is larger under policy π1 than

under policy π2 . Thus, policy π1 should be preferred over policy π2 for all utility functions. Policy π2 of this example shows

that a policy that achieves the optimal values and thus is optimal according to our definition is not always the best one.

The problem is that the values of the states under policy π1 are

guaranteed to dominate the values of the states under policy

π2 , but they are guaranteed to strictly dominate the values of

the states under policy π2 only if the optimal values are finite.

This example also shows that the optimal values are not guaranteed to be finite even if all policies reach a goal state with

probability one. Furthermore, the optimal values of both states

are, for example, finite for the utility function U (w) = w and

thus any policy that achieves the optimal values is indeed the

best one for this utility function, which shows that the problem

can exist for some utility functions but not others, depending

on whether the optimal values are finite for the given MDP.
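The contrast between the two utility functions can be made concrete by computing partial sums of the series for the MDP in Figure 1. The sketch below assumes, as in the formulas of this section, that a trajectory reaching the goal state at step t has probability 0.5**t and total reward equal to t times the per-step reward; the function name `partial_value` is introduced here for illustration only.

```python
def partial_value(reward, horizon, utility):
    """Partial sum over trajectories that reach the goal within `horizon` steps.

    A trajectory that reaches the goal at step t has probability 0.5**t
    and total reward reward * t (assumed reading of Figure 1).
    """
    return sum(0.5 ** t * utility(reward * t) for t in range(1, horizon + 1))


def exp_utility(w):
    """U(w) = -0.5**w, the exponential utility function used in the text."""
    return -(0.5 ** w)


def linear_utility(w):
    """U(w) = w, the risk-neutral utility function."""
    return w


for T in (10, 20, 40):
    print(
        T,
        partial_value(-1, T, exp_utility),     # policy pi_1: equals -T
        partial_value(-2, T, exp_utility),     # policy pi_2: equals -(2**(T+1) - 2)
        partial_value(-1, T, linear_utility),  # policy pi_1: approaches -2
        partial_value(-2, T, linear_utility),  # policy pi_2: approaches -4
    )
```

With the exponential utility function both columns decrease without bound, so the two policies cannot be told apart by their values even though the partial sums for the first policy are larger at every horizon. With the linear utility function both columns converge and the first policy is strictly better.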

Existing Results

The easiest way to guarantee that the optimal values exist and

are finite is to impose conditions that guarantee that the values

of all states exist for all policies and are finite. We first review

such conditions that have been obtained primarily for MDPs

with linear and exponential utility functions. We then use these

results to identify similar conditions for more general MDPs.

Linear Utility Functions

Linear utility functions characterize risk-neutral human decision makers. In this case, the MEU objective is the same as

the MER objective. We omit the subscript U for linear utility

functions.

Positive MDPs MDPs for which Condition 1 holds are

called positive (Puterman 1994). The values exist for positive MDPs under all policies since $v^\pi_T(s)$ is monotonic in $T$. Thus, the optimal values exist as well. They are finite if Condition 2 holds (Puterman 1994). In fact, the optimal values are finite even if $\Pi$ is replaced with $\Pi^{\mathrm{SD}}$ in Condition 2, since there exists an SD-optimal policy for the MER objective (Puterman 1994). Note that the values of all recurrent states under a policy are zero if Condition 2 holds.

Condition 1: For all $s, s' \in S$ and all $a \in A$, $r(s, a, s') \ge 0$.

Condition 2: For all $\pi \in \Pi$ and all $s \in S$, $v^\pi(s)$ is finite.

Negative MDPs MDPs for which Condition 3 holds are

called negative (Puterman 1994). Similar to positive MDPs,

the values exist for negative MDPs under all policies and thus

the optimal values exist as well. The optimal values are finite

if Condition 4 holds (Puterman 1994). Again, the optimal values are finite even if $\Pi$ is replaced with $\Pi^{\mathrm{SD}}$ in Condition 4 since there exists an SD-optimal policy for the MER objective (Puterman 1994).

Condition 3: For all $s, s' \in S$ and all $a \in A$, $r(s, a, s') \le 0$.

Condition 4: There exists $\pi \in \Pi$ such that for all $s \in S$, $v^\pi(s)$ is finite.

General MDPs In general, MDPs can have both positive and negative (as well as zero) rewards. We define the positive part of a real number $r$ to be $r^+ = \max(r, 0)$ and its negative part to be $r^- = \min(r, 0)$. We then obtain the positive part of an MDP by replacing every reward of the MDP with its positive part. We use $v^{+\pi}(s)$ to denote the values of the positive part of an MDP under policy $\pi \in \Pi$. We define the negative part of an MDP and the values $v^{-\pi}(s)$ in an analogous way. The values exist under all policies if Condition 5 holds (Feinberg 2002). Thus, the optimal values exist as well if Condition 5 holds. They are finite if Condition 5 and Condition 6 hold (Feinberg 2002). Again, the optimal values are finite even if $\Pi$ is replaced with $\Pi^{\mathrm{SD}}$ in Condition 5 and Condition 6, since there exists an SD-optimal policy for the MER objective (Puterman 1994).

Condition 5: For all $\pi \in \Pi$ and all $s \in S$, $v^{+\pi}(s)$ is finite.

Condition 6: There exists $\pi \in \Pi$ such that for all $s \in S$, $v^{-\pi}(s)$ is finite.

Figure 3: Example 3 (transitions labeled reward/probability. The only action in state $s^1$ leads to $s^2$ and is labeled +2/1.0; the only action in state $s^2$ leads back to $s^1$ and is labeled −1/1.0.)

Condition 7 is the weakest condition that we use in this paper. It is more general than Condition 1, Condition 3, and Condition 5, since, for example, Condition 1 implies that for all $\pi \in \Pi$ and all $s \in S$, $v^{-\pi}(s) = 0$. The values exist under all policies and $v^\pi(s) = v^{+\pi}(s) + v^{-\pi}(s)$ for all $s \in S$ and all $\pi \in \Pi$ if Condition 7 holds (Puterman 1994). Thus, the optimal values exist as well if Condition 7 holds, but they are not guaranteed to be finite (Puterman 1994).

Condition 7: For all $\pi \in \Pi$ and all $s \in S$, at least one of $v^{+\pi}(s)$ and $v^{-\pi}(s)$ is finite.

The MDPs in Figures 2 and 3 illustrate Condition 7. The MDP in Figure 2 does not satisfy Condition 7. The values of its states do not exist under its only policy $\pi$, as we have argued earlier. It is easy to see that $v^{+\pi}(s^1) = +\infty$ and $v^{-\pi}(s^1) = -\infty$, which violates Condition 7 and illustrates that Condition 7 indeed rules out MDPs whose values do not exist under all policies. The MDP in Figure 3 is another MDP that does not satisfy Condition 7. The values of its states, however, exist under its only policy $\pi'$. For example, an agent that starts in state $s^1$ receives the following sequence of rewards: $+2, -1, +2, -1, \ldots$, and consequently the following sequence of total rewards: $+2, +1, +3, +2, +4, +3, \ldots$, which diverges to positive infinity. Thus, the limit in Formula (1) exists under $\pi'$, and the value of state $s^1$ thus exists as well under $\pi'$. However, it is easy to see that $v^{+\pi'}(s^1) = +\infty$ and $v^{-\pi'}(s^1) = -\infty$, which violates Condition 7 and demonstrates that Condition 7 is not a necessary condition for the values to exist under all policies.
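The running totals for the two examples, together with the totals of their positive and negative parts, can be listed with a short script. The function name `partial_sums` and the encoding of each example as a repeating reward cycle are assumptions made for this sketch; the reward sequences themselves are the ones stated in the text.

```python
from itertools import accumulate, cycle, islice


def partial_sums(rewards_cycle, steps):
    """Running totals of the rewards, of their positive parts, and of their negative parts."""
    rewards = list(islice(cycle(rewards_cycle), steps))
    total = list(accumulate(rewards))
    positive = list(accumulate(max(r, 0) for r in rewards))  # r+ = max(r, 0)
    negative = list(accumulate(min(r, 0) for r in rewards))  # r- = min(r, 0)
    return total, positive, negative


# Reward sequences received when starting in the first state of each example.
for name, seq in [("Figure 2", [+1, -1]), ("Figure 3", [+2, -1])]:
    total, positive, negative = partial_sums(seq, 8)
    print(name, "total:", total)
    print(name, "positive part:", positive)
    print(name, "negative part:", negative)
```

In both examples the positive-part totals grow without bound and the negative-part totals decrease without bound, which is the violation of Condition 7. Only the totals of the second example have a limit on the extended real line.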

Exponential Utility Functions

Exponential utility functions are the most widely used non-linear utility functions (Corner & Corner 1995). They are of the form $U_{\exp}(w) = \iota \gamma^w$, where $\iota = \operatorname{sign}(\ln \gamma)$. If $\gamma > 1$, then the exponential utility function is convex and characterizes risk-seeking human decision makers. If $0 < \gamma < 1$, then the utility function is concave and characterizes risk-averse human decision makers. We use the subscript exp instead of $U$ for exponential utility functions and use $\mathrm{MEU}_{\exp}$ instead of MEU to refer to the planning objective.
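As a small illustration of this definition, the following sketch builds the exponential utility function for a given γ. The factory name `exponential_utility` and the example values 2.0 and 0.5 are assumptions chosen for this example.

```python
import math


def exponential_utility(gamma: float):
    """Return the function w -> iota * gamma**w with iota = sign(ln gamma)."""
    if gamma <= 0 or gamma == 1:
        raise ValueError("gamma must be positive and different from 1")
    iota = math.copysign(1.0, math.log(gamma))
    return lambda w: iota * gamma ** w


risk_seeking = exponential_utility(2.0)  # gamma > 1: convex
risk_averse = exponential_utility(0.5)   # 0 < gamma < 1: concave

for w in (-1, 0, 1, 2):
    print(w, risk_seeking(w), risk_averse(w))
```

The sign factor keeps both functions strictly increasing in the total reward, as required of utility functions.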

Positive MDPs The values exist for positive MDPs under all policies since $v^\pi_{\exp,T}(s)$ is monotonic in $T$. Thus, the optimal values exist as well. They are finite if $0 < \gamma < 1$ or if $\gamma > 1$ and Condition 8 holds (Cavazos-Cadena & Montes-de-Oca 2000). Again, the optimal values are finite even if $\Pi$ is replaced with $\Pi^{\mathrm{SD}}$ in Condition 8 since there exists an SD-optimal policy for the $\mathrm{MEU}_{\exp}$ objective (Cavazos-Cadena & Montes-de-Oca 2000).

Condition 8: For all $\pi \in \Pi$ and all $s \in S$, $v^\pi_{\exp}(s)$ is finite.

Negative MDPs The values exist for negative MDPs under all policies since $v^\pi_{\exp,T}(s)$ is monotonic in $T$. Thus, the optimal values exist as well. They are finite if $\gamma > 1$ or if $0 < \gamma < 1$ and Condition 9 holds (Ávila-Godoy 1999). Again, the optimal values are finite even if $\Pi$ is replaced with $\Pi^{\mathrm{SD}}$ in Condition 9 since there exists an SD-optimal policy for the $\mathrm{MEU}_{\exp}$ objective (Ávila-Godoy 1999).

Condition 9: There exists $\pi \in \Pi$ such that for all $s \in S$, $v^\pi_{\exp}(s)$ is finite.

Some Useful Lemmata

The following lemmata are key to proving Theorem 4, Theorem 6, Theorem 9, and Theorem 10. Because of the space

limit, we state these lemmata and the following theorems without proof. Lemma 1 describes the behavior of the agent after

entering a recurrent class, and Lemma 2 describes its behavior

before entering a recurrent class. The idea of splitting trajectories according to whether the agent is in a recurrent class is

key to the proofs of the results in the following sections.

Lemma 1 states that one can only receive all non-negative

rewards, all non-positive rewards or all zero rewards if one

enters a recurrent class and Condition 7 holds.

Lemma 1. Assume that Condition 7 holds. Consider an arbitrary $\pi \in \Pi^{\mathrm{SR}}$ and an arbitrary $s \in S$ that is recurrent under $\pi$. Let $A^\pi(s) \subseteq A$ denote the set of actions whose probability is positive under the probability distribution $\pi(s)$.

a. If $v^\pi(s) = +\infty$, then for all $a \in A^\pi(s)$ and all $s' \in S$ with $P(s' \mid s, a) > 0$, $r(s, a, s') \ge 0$.

b. If $v^\pi(s) = -\infty$, then for all $a \in A^\pi(s)$ and all $s' \in S$ with $P(s' \mid s, a) > 0$, $r(s, a, s') \le 0$.

c. If $v^\pi(s) = 0$, then for all $a \in A^\pi(s)$ and all $s' \in S$ with $P(s' \mid s, a) > 0$, $r(s, a, s') = 0$.

Lemma 2 concerns the well-known geometric rate of state

evolution (Kemeny & Snell 1960) and its corollaries. We use

this lemma, together with the fact that the rewards accumulate

at a linear rate, to show that the limit in (1) converges on the

extended real line under various conditions.

Lemma 2. For all $\pi \in \Pi^{\mathrm{SR}}$, let $R^\pi$ denote the set of recurrent states under $\pi$. Then for all $s \in S$, there exists $0 < \rho < 1$ such that for all $t \ge 0$,

a. there exists $a > 0$ such that $P^{s,\pi}(s_t \notin R^\pi) \le a\rho^t$,

b. there exists $b > 0$ such that $P^{s,\pi}(s_t \notin R^\pi, s_{t+1} \in R^\pi) \le b\rho^t$, and

c. for any recurrent class $R^\pi_i$, there exists $c > 0$ such that $P^{s,\pi}(s_t \notin R^\pi, s_{t+1} \in R^\pi_i) \le c\rho^t$,

where $P^{s,\pi}$ is a shorthand for the probability under $\pi$ conditional on $s_0 = s$.

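The MDP in Figure 4 illustrates Lemma 2. State $s^2$ is the only recurrent state under its only policy if $p > 0$. For this policy $\pi$ and all $t \ge 0$, $P^{s^1,\pi}(s_t \ne s^2) = (1 - p)^t$ (which illustrates Lemma 2a) and $P^{s^1,\pi}(s_t \ne s^2, s_{t+1} = s^2) = p(1 - p)^t$ (which illustrates Lemma 2b and Lemma 2c).

Figure 4: Example 4 (transitions labeled reward/probability. The only action in state $s^1$ stays in $s^1$ with label $a/(1 - p)$ and moves to $s^2$ with label $a/p$; the only action in state $s^2$ is a self-loop labeled $b/1.0$.)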
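The geometric decay in this example can be checked numerically by iterating the transition matrix of the induced Markov chain. The sketch below is illustrative only: the function name `state_distribution`, the list-of-lists matrix encoding, and the value p = 0.3 are assumptions.

```python
def state_distribution(P, start, t):
    """Distribution over states after t steps of a Markov chain with transition matrix P."""
    n = len(P)
    dist = [1.0 if i == start else 0.0 for i in range(n)]
    for _ in range(t):
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return dist


p = 0.3  # hypothetical value of the parameter p in Figure 4
P = [
    [1 - p, p],  # first state: stay with probability 1 - p, move on with probability p
    [0.0, 1.0],  # second state: absorbing, hence the only recurrent state
]

for t in range(5):
    not_recurrent = state_distribution(P, 0, t)[0]
    # Columns: t, P(s_t not recurrent), closed form (1 - p)**t, P(enter at step t + 1).
    print(t, not_recurrent, (1 - p) ** t, not_recurrent * p)
```

For this chain the bounds of Lemma 2 hold with equality for ρ = 1 − p, a = 1 and b = c = p.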

Exponential Utility Functions and General MDPs

We discuss convex and concave exponential utility functions separately for MDPs with both positive and negative rewards. We use $v^{+\pi}_{\exp}(s)$ to denote the values of the positive part of an MDP with exponential utility functions under policy $\pi \in \Pi$ and $v^{+*}_{\exp}(s)$ to denote its optimal values. We define the values $v^{-\pi}_{\exp}(s)$ and $v^{-*}_{\exp}(s)$ in an analogous way.

Convex Exponential Utility Functions

Theorem 4 shows that the values exist for convex exponential utility functions under all SR policies if Condition 10 holds. Condition 10 is analogous to Condition 7, and Lemma 3 relates them.

Condition 10: For all $\pi \in \Pi$ and all $s \in S$, at least one of $v^{+\pi}_{\exp}(s)$ and $v^{-\pi}(s)$ is finite.

Lemma 3. If $\gamma > 1$, Condition 10 implies Condition 7.

Theorem 4. Assume that Condition 10 holds and $\gamma > 1$. For all $\pi \in \Pi^{\mathrm{SR}}$ and all $s \in S$, $v^\pi_{\exp}(s)$ exists.

However, it is still an open problem whether there exists an SD-optimal policy for MDPs with both positive and negative rewards for the $\mathrm{MEU}_{\exp}$ objective. Therefore, it is currently unknown whether the optimal values exist.

Assume that Condition 10 holds and the optimal values exist. The optimal values are finite if for all $\pi \in \Pi$ and all $s \in S$, $v^{+\pi}_{\exp}(s)$ is finite. This is so because Condition 8 implies that for all $s \in S$, $v^{+*}_{\exp}(s)$ is finite. Furthermore, for all $\pi \in \Pi$ and all $s \in S$,

$$v^\pi_{\exp,T}(s) = E^{s,\pi}\left[ U_{\exp}\left( \sum_{t=0}^{T-1} r_t \right) \right] \le E^{s,\pi}\left[ U_{\exp}\left( \sum_{t=0}^{T-1} r^+_t \right) \right] = v^{+\pi}_{\exp,T}(s).$$

Taking the limit as $T$ approaches infinity shows that $v^\pi_{\exp}(s) \le v^{+\pi}_{\exp}(s) < +\infty$. Therefore, $v^*_{\exp}(s) \le v^{+*}_{\exp}(s) < +\infty$.

The MDP in Figure 4 illustrates Theorem 4. Its probabilities and rewards are parameterized, where $p > 0$. We will show that the values of both states under its only policy $\pi$ exist for all parameter values if Condition 10 holds and $\gamma > 1$. Assume that the premise is true. We distinguish two cases: either $v^{-\pi}(s^1)$ is finite or $v^{-\pi}(s^1)$ is negative infinity.

If $v^{-\pi}(s^1)$ is finite, then $v^{-\pi}(s^2)$ is finite as well. If $v^{-\pi}(s^2)$ is finite, then it is zero since state $s^2$ is recurrent. If $v^{-\pi}(s^2)$ is zero, then $b \ge 0$, that is, either $b > 0$ or $b = 0$. If $b > 0$, then $v^\pi_{\exp}(s^1) = v^\pi_{\exp}(s^2) = +\infty$, that is, the values of both states exist. If $b = 0$ (Case X), then $v^\pi_{\exp}(s^2) = 1$ and

$$v^\pi_{\exp}(s^1) = \sum_{t=0}^{\infty} \gamma^{(t+1)a} \, p (1 - p)^t.$$

If $\gamma^a (1 - p) < 1$, then the above sum can be simplified to

$$v^\pi_{\exp}(s^1) = \frac{p \gamma^a}{1 - \gamma^a (1 - p)},$$

otherwise it is positive infinity. Thus, in either case, the values of both states exist.

If $v^{-\pi}(s^1)$ is negative infinity, then $v^{-\pi}(s^2)$ is negative infinity as well. If $v^{-\pi}(s^2)$ is negative infinity, then $b < 0$ and $v^\pi_{\exp}(s^2) = 0$. We distinguish two cases: either $a \le 0$ or $a > 0$. If $a \le 0$, then the total rewards of all trajectories are negative infinity and thus $v^\pi_{\exp}(s^1) = 0$, that is, the values of both states exist. If $a > 0$, then $\gamma^a (1 - p) < 1$ because Condition 10 requires that $v^{+\pi}_{\exp}(s^1)$ be finite if $v^{-\pi}(s^1)$ is negative infinity and the positive part of the MDP satisfies the conditions of Case X above. If the agent enters state $s^2$ at time step $t + 1$, then it receives reward $b$ from then on. Thus,

$$v^\pi_{\exp,T}(s^1) = \sum_{t=0}^{T-1} \gamma^{(t+1)a + (T-t-1)b} \, p (1 - p)^t + \gamma^{Ta} (1 - p)^T = p \gamma^{a-b} \, \frac{\gamma^{bT} - [\gamma^a (1 - p)]^T}{1 - \gamma^{a-b} (1 - p)} + [\gamma^a (1 - p)]^T.$$

Since $\gamma > 1$ and $\gamma^a (1 - p) < 1$, it holds that

$$v^\pi_{\exp}(s^1) = \lim_{T \to \infty} v^\pi_{\exp,T}(s^1) = 0.$$

Thus, in all cases, Condition 10 indeed guarantees that the values of both states exist for the MDP in Figure 4.
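The closed form for the finite-horizon value in the last case can be cross-checked against the explicit sum. The sketch below uses hypothetical parameter values that satisfy the assumptions of that case (γ > 1, a > 0, b < 0, γ^a(1 − p) < 1); the function names are introduced here for illustration. The closed form additionally assumes γ^(a−b)(1 − p) ≠ 1 so that the denominator is non-zero.

```python
def value_by_sum(gamma, a, b, p, T):
    """Finite-horizon value of the first state of Figure 4, summed over the entry time into the second state."""
    entered = sum(
        gamma ** ((t + 1) * a + (T - t - 1) * b) * p * (1 - p) ** t
        for t in range(T)
    )
    never_entered = gamma ** (T * a) * (1 - p) ** T
    return entered + never_entered


def value_closed_form(gamma, a, b, p, T):
    """The same quantity via the closed form of the geometric sum."""
    x = gamma ** a * (1 - p)
    ratio = gamma ** (a - b) * (1 - p)  # assumed to differ from 1
    return p * gamma ** (a - b) * (gamma ** (b * T) - x ** T) / (1 - ratio) + x ** T


# Hypothetical parameters: gamma > 1, a > 0, b < 0 and gamma**a * (1 - p) = 0.75 < 1.
gamma, a, b, p = 1.5, 1.0, -1.0, 0.5
for T in (1, 5, 20, 80):
    print(T, value_by_sum(gamma, a, b, p, T), value_closed_form(gamma, a, b, p, T))
```

For these parameters both columns agree and shrink toward zero as the horizon grows, in line with the limit stated above.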

Concave Exponential Utility Functions

The results and proofs for concave exponential utility functions are analogous to the ones for convex exponential utility

functions.

Condition 11: For all $\pi \in \Pi$ and all $s \in S$, at least one of $v^{+\pi}(s)$ and $v^{-\pi}_{\exp}(s)$ is finite.

Lemma 5. If $0 < \gamma < 1$, Condition 11 implies Condition 7.

Theorem 6. Assume that Condition 11 holds and $0 < \gamma < 1$. For all $\pi \in \Pi^{\mathrm{SR}}$ and all $s \in S$, $v^\pi_{\exp}(s)$ exists.

Assume that Condition 11 holds and the optimal values exist. The optimal values are finite if there exists $\pi \in \Pi$ such that for all $s \in S$, $v^{-\pi}_{\exp}(s)$ is finite. This is so because for this $\pi$ and all $s \in S$,

$$v^\pi_{\exp,T}(s) = E^{s,\pi}\left[ U_{\exp}\left( \sum_{t=0}^{T-1} r_t \right) \right] \ge E^{s,\pi}\left[ U_{\exp}\left( \sum_{t=0}^{T-1} r^-_t \right) \right] = v^{-\pi}_{\exp,T}(s).$$

Taking the limit as $T$ approaches infinity shows that $v^*_{\exp}(s) \ge v^\pi_{\exp}(s) \ge v^{-\pi}_{\exp}(s) > -\infty$.

General Utility Functions

We now consider non-linear utility functions that are more

general than exponential utility functions. Such utility functions are necessary to model risk attitudes that change with

the total reward.

Positive and Negative MDPs

The values exist for positive and negative MDPs under all policies since $v^\pi_{U,T}(s)$ is monotonic in $T$. Thus, the optimal values exist as well. Theorem 7 gives a condition under which the optimal values are finite for positive MDPs, and Theorem 8 gives a condition under which they are finite for negative MDPs.

Theorem 7. Assume that Condition 1 and Condition 8 hold for some $\gamma > 1$. If the utility function $U$ satisfies $U(w) = O(\gamma^w)$ as $w \to +\infty$, then for all $\pi \in \Pi$ and all $s \in S$, $v^\pi_U(s)$ and $v^*_U(s)$ are finite.

Theorem 8. Assume that Condition 3 and Condition 9 hold for some $\pi \in \Pi$ and some $\gamma$ with $0 < \gamma < 1$. If the utility function $U$ satisfies $U(w) = O(\gamma^w)$ as $w \to -\infty$, then for this $\pi$ and all $s \in S$, $v^\pi_U(s)$ and $v^*_U(s)$ are finite.

General MDPs

The following sections suggest conditions that constrain MDPs with both positive and negative rewards and the utility functions to ensure that the values exist. The first part gives conditions that constrain the MDPs but not the utility functions. The second part gives conditions that constrain the utility functions but require the MDPs to satisfy only Condition 7. The last part, finally, gives conditions that mediate between the two extremes.

Bounded Total Rewards We first consider the case where the total reward is bounded. We use $H^{s,\pi}$ to denote the set of trajectories starting from $s \in S$ under policy $\pi \in \Pi$. We define $w(h) = \sum_{t=0}^{\infty} r_t(h)$ for $h \in H^{s,\pi}$, $v^\pi_{\max}(s) = \max_{h \in H^{s,\pi}} w(h)$ and $v^\pi_{\min}(s) = \min_{h \in H^{s,\pi}} w(h)$. The total reward $w(h)$ exists if Condition 7 holds. The optimal values exist and are finite if Condition 7 holds and for all $s \in S$,

$$\sup_{\pi \in \Pi} v^\pi_{\max}(s) < +\infty \quad \text{and} \quad \inf_{\pi \in \Pi} v^\pi_{\min}(s) > -\infty.$$

These conditions are, for example, satisfied for acyclic MDPs if plan execution ends in absorbing states but are satisfied for some cyclic MDPs as well. Unfortunately, it can be difficult to check the conditions directly. However, the optimal values also exist and are finite if for all $s \in S$,

$$\sup_{\pi \in \Pi} v^{+\pi}_{\max}(s) < +\infty \quad \text{and} \quad \inf_{\pi \in \Pi} v^{-\pi}_{\min}(s) > -\infty.$$

In fact, the optimal values also exist even if $\Pi$ is replaced with $\Pi^{\mathrm{SD}}$ in this condition, which allows one to check the condition with a dynamic programming procedure.

Bounded Utility Functions We now consider the case where the utility functions are bounded. In this case, $v^\pi_{U,T}(s)$ is bounded as $T$ approaches infinity but the limit might not exist since the values can oscillate. Theorem 9 provides a condition that guarantees that the values exist under stationary policies.

Theorem 9. Assume that Condition 7 holds. If $\lim_{w \to -\infty} U(w) = U^-$ and $\lim_{w \to +\infty} U(w) = U^+$ with $U^+ \ne U^-$ being finite, then for all $\pi \in \Pi^{\mathrm{SR}}$ and all $s \in S$, $v^\pi_U(s)$ exists and is finite.

Linearly Bounded Utility Functions Finally, we consider the case where the utility functions are bounded by linear functions. Theorem 10 shows that the values exist under conditions that are, in part, similar to those for the MER objective.

Theorem 10. Assume Condition 7 holds. If $U(w) = O(w)$ as $w \to \pm\infty$, then for all $\pi \in \Pi^{\mathrm{SR}}$ and all $s \in S$, $v^\pi_U(s)$ exists.

Conclusions and Future Work

We have discussed conditions that guarantee the existence and finiteness of the expected utilities of the total plan-execution reward for risk-sensitive planning with totally observable Markov decision process models. Our results are only a first step towards a comprehensive foundation of risk-sensitive planning. In future work, we will study the existence of optimal and $\epsilon$-optimal policies, the structure of such policies, and basic computational procedures for obtaining them.

Acknowledgments

This research was partly supported by NSF awards to Sven

Koenig under contracts IIS-9984827 and IIS-0098807 and an

IBM fellowship to Yaxin Liu. The views and conclusions contained in this document are those of the authors and should

not be interpreted as representing the official policies, either

expressed or implied, of the sponsoring organizations, agencies, companies or the U.S. government.

References

Ávila-Godoy, M. G. 1999. Controlled Markov Chains with Exponential Risk-Sensitive Criteria: Modularity, Structured Policies

and Applications. Ph.D. Dissertation, Department of Mathematics,

University of Arizona.

Blythe, J. 1997. Planning under Uncertainty in Dynamic Domains.

Ph.D. Dissertation, School of Computer Science, Carnegie Mellon

University.

Boutilier, C.; Dean, T.; and Hanks, S. 1999. Decision-theoretic

planning: Structural assumptions and computational leverage. Journal of Artificial Intelligence Research 11:1–94.

Cavazos-Cadena, R., and Montes-de-Oca, R. 2000. Nearly optimal policies in risk-sensitive positive dynamic programming on discrete spaces. Mathematical Methods of Operations Research 52:133–167.

Chung, K.-J., and Sobel, M. J. 1987. Discounted MDP's: Distribution functions and exponential utility maximization. SIAM Journal on Control and Optimization 25(1):49–62.

Corner, J. L., and Corner, P. D. 1995. Characteristics of decisions in decision analysis practice. Journal of the Operational Research Society 46:304–314.

Feinberg, E. A. 2002. Total reward criteria. In Feinberg, E. A.,

and Shwartz, A., eds., Handbook of Markov Decision Processes:

Methods and Applications. Kluwer. chapter 6, 173–208.

Goodwin, R. T.; Akkiraju, R.; and Wu, F. 2002. A decision-support system for quote-generation. In Proceedings of the Fourteenth Conference on Innovative Applications of Artificial Intelligence (IAAI-02), 830–837.

Kemeny, J. G., and Snell, J. L. 1960. Finite Markov Chains. D. Van

Nostrand Company.

Pratt, J. W. 1964. Risk aversion in the small and in the large. Econometrica 32(1-2):122–136.

Puterman, M. L. 1994. Markov Decision Processes: Discrete

Stochastic Dynamic Programming. John Wiley & Sons.

von Neumann, J., and Morgenstern, O. 1944. Theory of Games and

Economic Behavior. Princeton University Press.

White, D. J. 1987. Utility, probabilistic constraints, mean and variance of discounted rewards in Markov decision processes. OR Spektrum 9:13–22.

Zilberstein, S.; Washington, R.; Bernstein, D. S.; and Mouaddib,

A.-I. 2002. Decision-theoretic control of planetary rovers. In Beetz,

M.; Hertzberg, J.; Ghallab, M.; and Pollack, M. E., eds., Advances

in Plan-Based Control of Robotic Agents, volume 2466 of Lecture

Notes in Computer Science. Springer. 270–289.