Cooperative Solving of a Children’s Jigsaw Puzzle between Human and Robot: First Results

Catherina Burghart and Christian Gaertner and Heinz Woern

Institute of Process Control and Robotics

University of Karlsruhe

D-76128 Karlsruhe, Germany

Abstract

Intelligent human-robot cooperation is still a challenging field of research. At the Institute of Process Control and Robotics at the University of Karlsruhe, we intend to have an anthropomorphic robot learn how to solve a children’s jigsaw puzzle together with a human tutor. Instead of choosing a straightforward engineering approach, we have decided to combine the views of a psychologist and a computer scientist; the first results are presented in this paper.

Introduction

Epigenetic robots require a given set of senses, some basic skills that can be adapted and combined, a knowledge base that is continuously changed, enlarged and improved, and some kind of attention and/or learning mode in order to learn a specific behavior. Most research groups take young infants as a model for their robotic systems. The robots learn either social behavior (Breazeal, Hoffman, & Lockerd 2004; Kozima, Nakagawa, & Yano 2003; Kawamura et al. 2005), words or names of objects (Kozima, Nakagawa, & Yano 2003), or faces and voices of people or basic movements (Billard 2001).

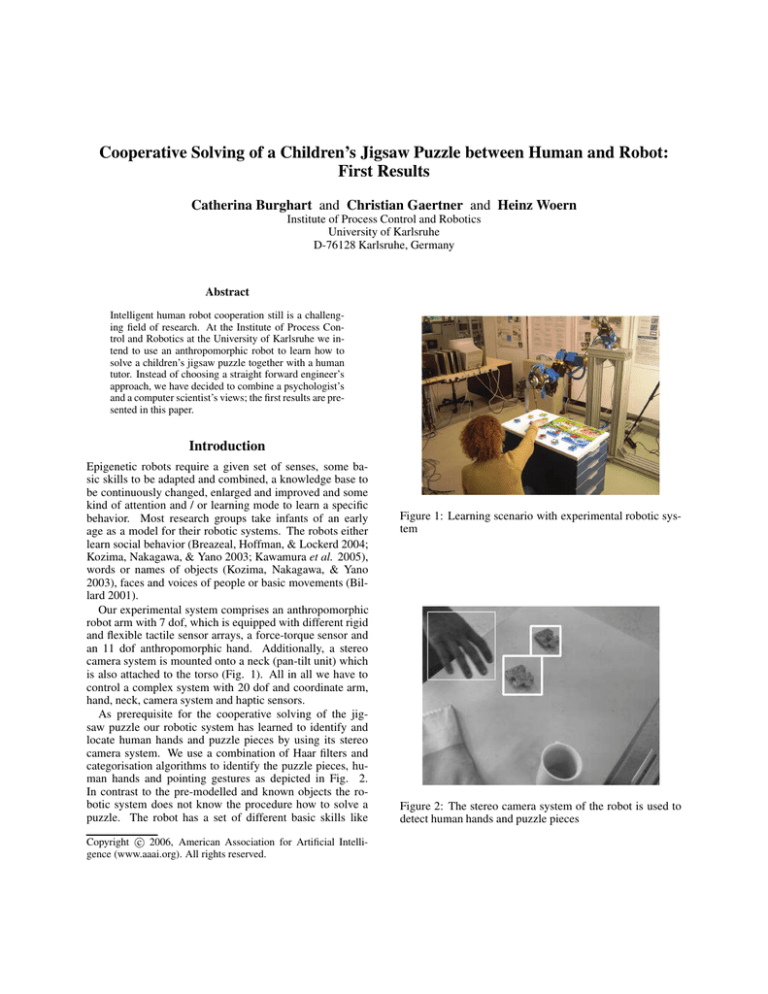

Our experimental system comprises an anthropomorphic robot arm with 7 dof, which is equipped with different rigid and flexible tactile sensor arrays, a force-torque sensor and an 11 dof anthropomorphic hand. Additionally, a stereo camera system is mounted onto a neck (pan-tilt unit), which is also attached to the torso (Fig. 1). All in all, we have to control a complex system with 20 dof and coordinate arm, hand, neck, camera system and haptic sensors.
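As a quick consistency check on the 20 dof figure, the minimal Python sketch below sums the actuated components. It assumes, since the text does not spell this out, that the pan-tilt neck contributes 2 dof (pan and tilt) and that the stereo cameras are not actuated themselves; the dictionary keys are hypothetical names.

```python
# Hypothetical tally of the actuated degrees of freedom (dof).
# Assumption: the pan-tilt neck contributes 2 dof (pan + tilt) and the
# stereo cameras are not actuated themselves.
DOF = {
    "arm": 7,            # anthropomorphic robot arm
    "hand": 11,          # anthropomorphic hand
    "neck_pan_tilt": 2,  # pan-tilt unit carrying the stereo cameras
}


def total_dof(components: dict[str, int]) -> int:
    """Return the summed dof of all actuated components."""
    return sum(components.values())


print(total_dof(DOF))  # 20
```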

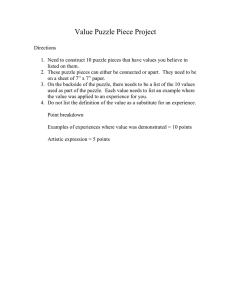

As a prerequisite for cooperatively solving the jigsaw puzzle, our robotic system has learned to identify and locate human hands and puzzle pieces using its stereo camera system. We use a combination of Haar filters and categorisation algorithms to identify puzzle pieces, human hands and pointing gestures, as depicted in Fig. 2.
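Haar filters compare pixel sums of adjacent rectangles and are usually evaluated in constant time on an integral image (summed-area table). The Python sketch below illustrates only this generic building block for one two-rectangle feature on a tiny greyscale patch; it is not the detector used on the robot, the categorisation stage is not shown, and all function names are hypothetical.

```python
# Generic two-rectangle Haar-like feature evaluated on an integral image.
# Illustration only; not the detector used on the robot.


def integral_image(img: list[list[int]]) -> list[list[int]]:
    """Summed-area table with a zero border: ii[y][x] = sum of img[r][c] for r < y, c < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: list[list[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle with top-left corner (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_left_right(ii: list[list[int]], x: int, y: int, w: int, h: int) -> int:
    """Left half minus right half: large for vertical light/dark edges (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# A 2 x 4 patch that is bright on the left and dark on the right.
patch = [[9, 9, 0, 0],
         [9, 9, 0, 0]]
ii = integral_image(patch)
print(haar_left_right(ii, 0, 0, 4, 2))  # 36
```

A uniform patch yields a feature value of 0, so the feature responds only to the contrast it is shaped to detect.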

Copyright © 2006, American Association for Artificial Intelligence (www.aaai.org). All rights reserved.

Figure 1: Learning scenario with experimental robotic system

Figure 2: The stereo camera system of the robot is used to detect human hands and puzzle pieces

Figure 3: Approach for the cooperative puzzle solving

Figure 4: Experimental set-up for study

In contrast to the pre-modelled and known objects, the robotic system does not know the procedure for solving a puzzle. The robot has a set of different basic skills such as autonomous movements, gripping an object, and being manually guided either at the robot hand or at the forearm, elbow or upper arm (Yigit, Burghart, & Woern 2003; 2004; Kerpa et al. 2003). These basic skills can be monitored and stored as a sequence of parameterizable actions in a database.
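One way to picture a database of parameterizable actions is sketched below in Python. The schema (a skill name plus a dictionary of numeric parameters, stored per task) and the names `Action`, `SkillDatabase` and `place_piece` are assumptions for illustration, not the authors' data model.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """A basic skill together with its parameters (hypothetical schema)."""
    skill: str                                    # e.g. "grip", "move", "guided"
    params: dict[str, float] = field(default_factory=dict)

    def with_params(self, **updates: float) -> "Action":
        """Return a copy of this action with some parameters re-bound."""
        return Action(self.skill, {**self.params, **updates})


@dataclass
class SkillDatabase:
    """Stores monitored action sequences under a task name."""
    sequences: dict[str, list[Action]] = field(default_factory=dict)

    def record(self, task: str, action: Action) -> None:
        """Append a monitored action to the sequence stored for `task`."""
        self.sequences.setdefault(task, []).append(action)

    def sequence(self, task: str) -> list[Action]:
        """Return a copy of the stored sequence (empty if unknown)."""
        return list(self.sequences.get(task, []))


db = SkillDatabase()
db.record("place_piece", Action("grip", {"force": 2.0}))
db.record("place_piece", Action("move", {"x": 0.40, "y": 0.30}))
# Reuse the stored sequence with a different target position.
steps = db.sequence("place_piece")
steps[1] = steps[1].with_params(x=0.55)
```

Re-binding parameters on a copy keeps the recorded sequence intact, so the same monitored demonstration can be reused for different pieces.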

Solving a children’s jigsaw puzzle in cooperation with a robotic system allows different forms of cooperation. First of all, the context and the environment have to be considered. The context in this case is the task of cooperatively fitting the puzzle pieces together, which can be regarded as the enclosing context coupling human and robot. Besides this overall task, different subtasks can be identified as contexts forming a basis for the human-robot team. These subtasks can be: turning all jigsaw pieces to the correct side at the beginning, sorting pieces, looking for specific pieces, or building the edge or a designated part of the puzzle. As our robotic system is intended to learn how to solve a puzzle by tutelage, further forms of cooperation have to be taken into account. As the robot is not equipped with speech recognition at present, only actions involving manual guiding of the robot arm and hand, or the interpretation of the appropriate action using the camera system, are considered. We do not use learning by imitation or demonstration. In the two cases considered, we have either a direct physical coupling between robot and human partner or a visual coupling via the stereo camera system, in addition to the coupling by a given context or task.

Instead of using a straightforward engineering approach to realize the cooperative solving of a jigsaw puzzle, we have decided to also take a psychological approach into account and to combine both methods (Fig. 3). Therefore, we started by looking at the different forms of knowledge representation, actions and facts in psychology, as well as at the learning procedures of children. We performed an actual puzzle-solving experiment with a child in order to gain information on how a child tackles the puzzle problem. Possible actions of a learning robotic system were then modelled and are used as input for a simulation in ACT-R.

This paper is organised as follows: Section II describes the learning experiment with the selected child. The modelling of the robot learning scenario in ACT-R is presented in Section III, and the integration of ACT-R as a planning module in the cognitive architecture of our robot is described in Section IV. Section V concludes this paper.

Learning Experiment

As a first step towards describing and modelling behavior when learning to solve a puzzle and when solving it cooperatively, we performed an experiment with a three-year-old child. The idea was to closely watch the child, analyze and describe her behavior, and try to transfer appropriate models, ways of learning and behaviors onto our robotic system. Although watching only one child is not representative in terms of a psychological experiment, the analyzed behavior can still serve as a model for appropriate behavior of the robotic system.

Experimental Set-Up

For our experiment we chose a three-year-old girl who was familiar with solving puzzles (Fig. 4); that is, she knew what to do with different pieces of a puzzle that seemed to fit together. The girl was given a jigsaw puzzle of 54 pieces, which is also intended for the robotic experiments. The puzzle shows a natural scene of animals (horses, goats, cows and pigs) on a mountain pasture. The material is foam rubber, 1 cm thick, blue on the back and coloured (printed picture) on the front. There are only nine different types of pieces, which makes solving the puzzle slightly more difficult for the child. Both the puzzle and its picture were unknown to the child, and the picture was not shown during the puzzling process.

The girl solved the puzzle four times with her mother acting as tutor. Each time the mother helped less, until in the last two sessions there was practically no interference in the puzzling process. All sessions were recorded on video and later analyzed in detail with the help of the software “Programs for the Collection and Analysis of Observational Data” by William L. Roberts.

Figure 5: Results of the puzzle solving experiment

For this purpose, all persons and objects involved in the process were described, as well as all single actions performed by the child and/or the mother. All observable actions have to be coded as tuples of the following form:

(Acting person, action, goal)

We coded the actions listed in Table 1, which can be divided into actions concerning the complete puzzle or all pieces, actions used to find or fit a piece, and actions for interacting with the other person present. All these actions were observed and analyzed as they were performed by the mother and/or the daughter. During the coding process, related actions were grouped in order to achieve a clear representation for the analysis. Additionally, all actors and objects had to be coded, as listed in Table 2.

Coding the action sequence “the child grips a piece, turns it, positions it and fits it” yields the following tuples:

(1, 11, 5)

(1, 12, 5)

(1, 40, 5)

(1, 20, 5)

Table 1: Coded Actions

| Code | Action |
|------|--------|
| 00 | Empty puzzle box |
| 01 | Accumulate pieces |
| 02 | Dismantling puzzle |
| 03 | Shuffling pieces |
| 04 | Put away puzzle box |
| 05 | Stacking pieces |
| 10 | Searching |
| 11 | Gripping |
| 12 | Turning around |
| 13 | Holding |
| 20 | Fitting |
| 21 | Incorrect fitting |
| 22 | Fitting at other place |
| 30 | Putting back |
| 31 | Loosening piece |
| 32 | Dropping |
| 40 | Positioning |
| 41 | Rotating |
| 42 | Translation of piece |
| 50 | Requesting |
| 51 | Receiving |
| 52 | Handing over |
| 53 | Showing |
| 60 | Questioning |
| 61 | Looking |
| 63 | Talking |
| 64 | Being obstinate, defying |
| 65 | Sitting down |
| 66 | Counting |
| 67 | Getting up |
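To make the coding scheme concrete, the following Python sketch decodes the example tuples into readable form using the codes of Tables 1 and 2. It assumes that actors and objects are written without leading zeros in the tuples (1 for “Child”, 5 for “Puzzle piece”); only the entries needed for the example are included, and the names `Observation` and `decode` are hypothetical.

```python
from collections import namedtuple

# Excerpts of Table 1 (actions) and Table 2 (actors and objects).
ACTIONS = {11: "Gripping", 12: "Turning around", 20: "Fitting", 40: "Positioning"}
ACTORS_OBJECTS = {1: "Child", 2: "Mother", 5: "Puzzle piece"}

# One observation in the (acting person, action, goal) form used for coding.
Observation = namedtuple("Observation", ["actor", "action", "goal"])


def decode(obs: Observation) -> str:
    """Translate a coded tuple back into a readable description."""
    return f"{ACTORS_OBJECTS[obs.actor]}: {ACTIONS[obs.action]} -> {ACTORS_OBJECTS[obs.goal]}"


example = [Observation(1, 11, 5), Observation(1, 12, 5),
           Observation(1, 40, 5), Observation(1, 20, 5)]
for obs in example:
    print(decode(obs))
# Child: Gripping -> Puzzle piece
# Child: Turning around -> Puzzle piece
# Child: Positioning -> Puzzle piece
# Child: Fitting -> Puzzle piece
```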

Experimental Results

The analysis of the four sessions shows distinct progress in the learning process. First of all, the time the child needed to solve the puzzle was clearly reduced, from 14.57 minutes with the mother acting as tutor in the first session to 10.58 minutes in the second session. In the third and fourth sessions the mother hardly helped at all; save for a small number of actions, the child solved the puzzle by herself. Here, she also clearly took the lead, as a sociological analysis of the video recordings showed. In the third and fourth sessions the child no longer wanted to be the pupil. She even went as far as testing her mother in the third session: the child deliberately misplaced a piece several times in a row and watched for her mother’s reaction. The number of actions performed by child and mother also decreased throughout the four sessions, from 697 at the beginning to 385 in the fourth session. In her first attempt, the daughter often gripped pieces and put them back again. She also often had to rotate or reposition a piece before fitting it, and the number of misfits was relatively high, as depicted in Figure 5. As soon as the child had learned that pieces with blue belonged to the sky printed on the puzzle and pieces with green to the meadow, her speed increased and the number of rotations and wrong fittings decreased.

Table 2: Coded Actors and Objects

| Code | Actor / object |
|------|----------------|
| 01 | Child |
| 02 | Mother |
| 03 | Edge piece |
| 04 | Corner piece |
| 05 | Puzzle piece |
| 06 | Puzzle combination |
| 07 | Ceiling |
| 08 | Chair |
| 09 | Puzzle box |
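The drop in coded actions reported above corresponds to a relative reduction of roughly 45 percent. The short Python sketch below makes this arithmetic explicit and adds a hypothetical helper that tallies coded tuples per actor; it only illustrates how such counts could be aggregated and is not the Roberts software used for the analysis.

```python
from collections import Counter


def relative_reduction(before: int, after: int) -> float:
    """Fraction by which a count dropped from `before` to `after`."""
    return (before - after) / before


def actions_per_actor(observations: list[tuple[int, int, int]]) -> Counter:
    """Count coded (actor, action, goal) tuples per actor code."""
    return Counter(actor for actor, _action, _goal in observations)


# Session totals reported in the text: 697 actions (session 1), 385 (session 4).
print(f"{relative_reduction(697, 385):.1%}")  # 44.8%

# Toy log (hypothetical): two child actions, one mother action.
print(actions_per_actor([(1, 11, 5), (1, 20, 5), (2, 52, 5)]))
```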

The child’s progress can also be seen in the decreasing

number of the mother’s helping actions like helping with the

correct rotation, position or loosening of a wrongly fitted

piece (help puzzling in Figure 5) or like helping the child to

select the correct piece by giving it to her, indicating it or

taking wrong pieces away (help choosing in Figure 5).

The child’s actions involving no interaction with the mother increased slightly in the third and fourth trials compared with the second session. We attribute this to two factors. On the one hand, the child did not really want any help any more and was also testing her mother. On the other hand, she got slightly bored in the third and fourth sessions, as she already knew the puzzle and longed to go to the university cafeteria.

This psychological experiment helped to identify all individual actions involved in teaching a child to solve a puzzle by tutelage. It also indicated that learning by tutelage is a successful way to learn a certain procedure. In order to obtain generalised statements about learning to solve jigsaw puzzles by tutelage, this experiment should be repeated as a field study involving a representative number of children aged three and four.

Modelling the Scenario in ACT-R

Cognitive architectures are theories about the functioning of the human mind. They try to derive intelligent behavior from fundamental assumptions about how the human mind works. These assumptions are based on knowledge gained from psychological experiments. Cognitive architectures are used by psychologists to reconstruct the results of psychological experiments in order to gain insight into the internal processes of the human mind.

ACT-R (Anderson 1996) is a cognitive architecture that tries to describe the functioning of the human mind with an integrated approach. Following an integrated approach means that the ACT-R theory does not try to describe a specific feature or part of human cognition. Rather, it aims to emulate the interaction of the mind’s main components in order to simulate consciousness. Together with assumptions about the functioning of the human mind, ACT-R also describes how visual and auditory perception as well as manual actions work.

The ACT-R framework includes a programming language to describe knowledge and the human mind’s basic mode of operation. The programming language is used to describe declarative and procedural knowledge. Declarative knowledge is knowledge that describes facts and features of objects. In ACT-R, declarative knowledge is represented by so-called “chunks”. A chunk can describe, for example, the colour or the position of an object. Furthermore, declarative knowledge has the property that human consciousness is aware of it. The second kind of knowledge in ACT-R is procedural knowledge. This type of knowledge describes procedures, i.e. how something is done. Examples of procedural knowledge are how to look at something or how to press a key on the keyboard. This sort of knowledge has the property that human consciousness is not aware of it. In ACT-R, procedural knowledge is represented by so-called “productions”.

Additionally, ACT-R describes interfaces for visual and auditory perception as well as an interface for manual actions. These interfaces consist of buffers, which contain declarative knowledge in the form of chunks. Knowledge transfer and modification are performed by applying productions to these buffers. By transferring chunks into interface buffers or reading new information from them, interaction with an environment can be implemented.
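The interplay of chunks, buffers and productions can be illustrated with a toy production system. The Python sketch below is not ACT-R (which is written in Lisp and has its own syntax); it merely assumes that a chunk is a slot-value dictionary, that buffers map names such as `goal`, `visual` and `manual` to chunks, and that the first matching production fires, whereas ACT-R selects among matching productions by utility. Production names and slots are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A chunk is modelled as a slot -> value dict; buffers map names to chunks.
Buffers = dict[str, dict[str, object]]


@dataclass
class Production:
    """Procedural knowledge: IF the buffers match THEN modify them."""
    name: str
    condition: Callable[[Buffers], bool]
    action: Callable[[Buffers], None]


def cycle(productions: list[Production], buffers: Buffers) -> Optional[str]:
    """Fire the first matching production and return its name (or None).

    Simplification: real ACT-R chooses among matching productions by utility.
    """
    for p in productions:
        if p.condition(buffers):
            p.action(buffers)
            return p.name
    return None


def attend_condition(b: Buffers) -> bool:
    return b["goal"].get("state") == "find" and "id" in b["visual"]


def attend_action(b: Buffers) -> None:
    b["goal"]["state"] = "grip"


def grip_condition(b: Buffers) -> bool:
    return b["goal"].get("state") == "grip"


def grip_action(b: Buffers) -> None:
    # Place a request chunk into the manual (motor) buffer.
    b["manual"].update(cmd="grip", target=b["visual"]["id"])
    b["goal"]["state"] = "done"


rules = [Production("attend-piece", attend_condition, attend_action),
         Production("grip-piece", grip_condition, grip_action)]
buffers = {"goal": {"state": "find"},
           "visual": {"id": "piece-7", "colour": "blue"},
           "manual": {}}
fired = [cycle(rules, buffers) for _ in range(3)]
print(fired)  # ['attend-piece', 'grip-piece', None]
```

Interaction with the environment happens only through the buffers: the `visual` chunk is what the model perceives, and the chunk written to `manual` is what an environment would read and execute.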

Apart from modelling the internal functions of the mind, ACT-R also supports the creation of an environment for the experiments. In ACT-R, an environment can be created in the form of a screen window in which text objects can be displayed. Like participants in psychological experiments, the ACT-R model is able to look at the screen window and move its attention to objects inside the window. Together with the internal model of the mind, this model of cognition and environment makes it possible to simulate psychological experiments. In addition, the ACT-R framework supplies information about the duration and the accuracy of a model’s responses. Thus it is possible to compare statistical data from experiments with human subjects with statistical data obtained from multiple runs of the model. This is intended to permit conclusions about the internal processes of the human mind.
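Comparing model and human data typically means averaging several model runs per condition and measuring how closely the averages track the human means. The Python sketch below assumes two common fit measures, root-mean-square deviation and Pearson’s r, and uses made-up numbers; ACT-R supplies the latencies, but these helper functions are our own illustration.

```python
import math
from statistics import mean


def model_means(runs_per_condition: list[list[float]]) -> list[float]:
    """Average the latencies of repeated model runs for each condition."""
    return [mean(runs) for runs in runs_per_condition]


def rmsd(model: list[float], human: list[float]) -> float:
    """Root-mean-square deviation between paired condition means."""
    if len(model) != len(human):
        raise ValueError("model and human data must cover the same conditions")
    return math.sqrt(mean((m - h) ** 2 for m, h in zip(model, human)))


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical mean response times (s) of human subjects in three conditions.
human = [1.0, 2.0, 3.0]
# Hypothetical latencies from two model runs per condition.
model = model_means([[1.1, 0.9], [2.0, 2.2], [3.1, 2.9]])
print(round(rmsd(model, human), 3), round(pearson_r(model, human), 3))
```

A low RMSD indicates that the model reproduces absolute response times, while a high correlation indicates that it reproduces the pattern across conditions; the two are usually reported together.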

State of the Art

In recent years, ACT-R has been used for modelling and simulation in a number of different applications. Examples are problem solving and decision making (Gunzelmann & Anderson 2004; Taatgen 1999), such as mathematical problem solving (Koedinger & Terao 2002; Koedinger & MacLaren 1997), choice and strategy selection, and language processing. As far as we know, the only applications of ACT-R in robotics are those by (Hipp et al. 2005) and (Sofge, Traftton, & Schultz 2002). Hipp et al. (2005) want to simplify the handling of a wheelchair by disabled people. They use ACT-R to interpret given commands in correlation with the current situation. The user’s intention is inferred from the results obtained with ACT-R and from the data supplied by the wheelchair’s sensor systems. In contrast, Sofge, Traftton, and Schultz (2002) use ACT-R to simulate human communicative behavior in human-robot cooperation. To our knowledge, however, no research group has yet used ACT-R to actually control an intelligent robotic system at the top level.

Models

In our set-up, the ACT-R framework is used to model the cooperative solving of a jigsaw puzzle. The first part of the model describes the environment of the experiment. The virtual environment for the simulated experiment consists of a screen window; a screenshot is shown in Figure 6. Puzzle pieces and possible positions of the puzzle pieces are displayed inside the window. The environment describes the features of the puzzle pieces and the positions where they can be placed. Initially, the puzzle pieces are placed in the upper left area of the screen window. The puzzle pieces can be joined together in the upper right area of the window. Furthermore, commands from the tutor and reactions from the model are displayed in the lower area of the window. Since commands and reactions are always placed at the same location, the robot can easily find new orders.

This environmental model is necessary to allow interaction between the robot and the tutor. It represents the work surface and the puzzle parts. Both tutor and model can look at the screen window. Tutor and robot give instructions to the environmental model by keyboard commands. The environmental model interprets these instructions and displays them in the window, so that puzzle parts can be moved and joined. The environmental model recognises the different actions; these are the same actions that occurred in the jigsaw puzzle experiment with tutor and child. After confirmation by the tutor, the actions are executed by the environment.
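The environmental model described above can be approximated by a small data structure. In the Python sketch below, the window size, the region coordinates and the `join <piece>` command syntax are hypothetical; it only shows the two properties named in the text: fixed regions for heap, assembly and dialogue, and commands that are displayed immediately but executed only after the tutor confirms them.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical window size and regions (x0, y0, x1, y1) in pixels:
# heap upper left, assembly upper right, commands/responses in the lower half.
WIDTH, HEIGHT = 800, 600
REGIONS = {
    "heap": (0, 0, WIDTH // 2, HEIGHT // 2),
    "assembly": (WIDTH // 2, 0, WIDTH, HEIGHT // 2),
    "dialogue": (0, HEIGHT // 2, WIDTH, HEIGHT),
}


def region_at(x: int, y: int) -> Optional[str]:
    """Name of the window region containing pixel (x, y), if any."""
    for name, (x0, y0, x1, y1) in REGIONS.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return name
    return None


@dataclass
class Environment:
    """Toy environmental model: shows commands, executes them once confirmed."""
    pieces: dict[str, str] = field(default_factory=dict)  # piece id -> region
    dialogue: list[str] = field(default_factory=list)     # lines in the dialogue area

    def submit(self, command: str, confirmed: bool) -> bool:
        """Display a command such as 'join p1'; execute it only if confirmed."""
        self.dialogue.append(command)
        if not confirmed:
            return False
        verb, piece = command.split()
        if verb == "join" and self.pieces.get(piece) == "heap":
            self.pieces[piece] = "assembly"
            return True
        return False


env = Environment({"p1": "heap", "p2": "heap"})
env.submit("join p1", confirmed=False)  # shown in the dialogue area only
env.submit("join p1", confirmed=True)   # tutor confirmed: the piece moves
```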

Besides the environmental model, ACT-R is also used to create a cognitive model of the robot. This model consists of a number of chunks and productions which provide fundamental actions, such as the selection of a suitable action and a suitable object, as well as the interaction with the environment. The system of productions is organized modularly, so it scales well to additional actions. The productions do not encode ways in which the jigsaw puzzle can be solved. They only encode ways of retrieving new actions or objects and of reporting commands to the environmental model. The selection of the right sequence of actions is not coded within the model, so it has to be acquired by learning. We plan to evaluate both symbolic and subsymbolic learning. As a first step, we implemented and evaluated the learning of action sequences by concatenation. Reinforcement learning and heuristic action selection are currently being implemented in our system.

Simulation

The jigsaw puzzle is solved iteratively by robot and tutor. The main sections of the iteration process are shown in Figure 7. During each iteration step, a fundamental operation is performed to solve the puzzle, for example grabbing a specific puzzle part or moving a puzzle piece to a position.

Each iteration step is divided into seven sub-steps. In the first sub-step, the robot tries to determine an applicable action and an applicable object, and then suggests the action and the object to the tutor. During the second sub-step, the tutor decides whether the selected action shall be executed with the object. If the tutor evaluates the action as negative, he demonstrates an action to the robot in the third sub-step, possibly in connection with an object. This action is a hint for the robot to find the right action and object; for example, the tutor points to an object to tell the robot which part to take next. In addition, the tutor has the option of performing actions himself: he may insert some parts, after which the robot may continue with its actions.

Figure 6: Screenshot of the simulation window: upper left: heap where the puzzle pieces are initially placed; upper right: puzzle pieces which are already joined together; lower half: area for commands by the tutor and responses by the robot

The robot considers the type of action and the object and returns to the first sub-step. It selects a new action and a new object within the context of the action given by the tutor. In the second sub-step, the newly selected action is again presented to the tutor. In case of a positive evaluation, the robot proceeds to the fourth sub-step.

In the fourth sub-step, the robot tries to select a proper destination for the puzzle piece. The suggested destination is presented to the tutor, who evaluates it in the fifth sub-step. If the destination is evaluated as negative, the tutor demonstrates an action as well as a position to the robot in the sixth sub-step. The robot returns to the fourth sub-step and selects a new destination within the context of the action demonstrated by the tutor. In case of a positive evaluation of the destination by the tutor, the robot transmits the action, the object and the destination to the environmental model in the seventh sub-step. The model of the environment executes the action by changing the position and state of the puzzle piece appropriately. Afterwards, the next iteration step begins. The iteration continues until the jigsaw puzzle is completed.
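The seven sub-steps can be summarised as two nested propose-and-evaluate loops followed by execution. The Python sketch below assumes that the tutor either accepts a proposal (returning `None`) or returns a hint, and uses a toy two-piece puzzle with a simulated tutor; all names, the naive guessing strategy and the target slots are hypothetical.

```python
from typing import Callable, Optional


def iteration_step(select_action: Callable, select_destination: Callable,
                   tutor: Callable, execute: Callable, max_tries: int = 10):
    """One iteration step of the tutor-in-the-loop scheme (sub-steps 1-7).

    `tutor(kind, proposal)` returns None to accept a proposal, or a hint
    (the tutor's demonstration) that the robot uses for its next proposal.
    """
    hint = None
    for _ in range(max_tries):
        proposal = select_action(hint)           # 1: robot proposes action + object
        hint = tutor("action", proposal)         # 2: tutor evaluates the proposal
        if hint is None:
            break                                # accepted
        # 3: rejected; `hint` now holds the tutor's demonstration
    else:
        raise RuntimeError("no action accepted")

    hint = None
    for _ in range(max_tries):
        destination = select_destination(proposal, hint)  # 4: robot proposes destination
        hint = tutor("destination", destination)          # 5: tutor evaluates it
        if hint is None:
            break
        # 6: rejected; `hint` holds the demonstrated position
    else:
        raise RuntimeError("no destination accepted")

    execute(proposal, destination)               # 7: environment executes the action
    return proposal, destination


# Toy run with two pieces and hypothetical target slots.
solution = {"p1": (0, 0), "p2": (0, 1)}
placed: dict = {}
rejections: list = []


def select_action(hint: Optional[str]):
    remaining = [p for p in solution if p not in placed]
    return ("grip", hint if hint is not None else remaining[-1])  # naive guess


def select_destination(proposal, hint):
    return (proposal[1], hint if hint is not None else (9, 9))    # naive guess


def tutor(kind, proposal):
    if kind == "action":
        wanted = next(p for p in solution if p not in placed)
        hint = None if proposal[1] == wanted else wanted
    else:
        piece, slot = proposal
        hint = None if slot == solution[piece] else solution[piece]
    if hint is not None:
        rejections.append((kind, hint))
    return hint


def execute(proposal, destination):
    piece, slot = destination
    placed[piece] = slot


while len(placed) < len(solution):
    iteration_step(select_action, select_destination, tutor, execute)
```

In this toy run the robot needs three corrections (one for the piece, two for destinations) before both pieces are placed.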

Figure 7: Solving the puzzle together: Tutor and robot solve the puzzle together by iteration. During every iteration step a fundamental operation is executed. An iteration step is divided into 7 sub-steps within which the applicable actions are retrieved by the robot, evaluated by the tutor and finally executed by the model of the environment.

Figure 8 plot: time in s over number of loops; curves: duration without learning, duration with learning of productions.

The chosen structure of the simulation permits the use of as many types of actions as desired. The succession of choosing an action by the robot and evaluation by the tutor corresponds to the situation in which the tutor corrects a child or gives small assistance while the child solves a puzzle. The better the robot selects actions and objects, the more independently it solves the puzzle. In a first step, we allowed the concatenation of productions (actions) in order to simulate progress in learning. Instead of asking the tutor after every single iteration step, the simulated robot starts to perform the iteration steps forming a specific action without asking the tutor, once it has already performed these steps successfully before. Figure 8 compares action sequences performed with and without the concatenation of iteration (action) steps. Performance improves by up to 30 percent, and the interaction between robot and tutor becomes smoother. In order to improve the selection of actions and objects, several learning procedures besides mere concatenation of productions are to be implemented and examined in the near future. Learning is possible by resolving the ambiguity among the applicable actions and by demonstrating successions of interactions.
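Learning by concatenation, as described above, can be sketched as caching tutor-approved step sequences. In the Python sketch below, a sequence is concatenated after all of its steps have been approved once, matching the text’s condition that the steps were “already successfully performed before”; the class name, the step names and this one-success threshold are assumptions, and the sketch is not ACT-R’s own production compilation mechanism.

```python
from typing import Callable


class ConcatenatingRobot:
    """Learning by concatenation (sketch): once the tutor has approved every
    step of a sequence, the sequence is stored and later run without asking."""

    def __init__(self) -> None:
        self.macros: set = set()        # concatenated step sequences
        self.queries = 0                # how often the tutor was consulted
        self.executed: list = []        # steps actually performed

    def perform(self, steps: tuple, tutor_approves: Callable[[str], bool]) -> bool:
        if steps in self.macros:
            self.executed.extend(steps)     # run the learned sequence silently
            return True
        for step in steps:
            self.queries += 1
            if not tutor_approves(step):
                return False                # sequence interrupted; nothing learned
            self.executed.append(step)
        self.macros.add(steps)              # all steps approved: concatenate
        return True


robot = ConcatenatingRobot()
seq = ("select_piece", "select_destination", "place")
robot.perform(seq, lambda step: True)  # first time: the tutor is asked 3 times
robot.perform(seq, lambda step: True)  # afterwards: no further questions
```

The saving in tutor queries grows with the number of repeated sequences, which mirrors the smoother interaction reported for the concatenation experiment.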

Integration into the Robotic System

As the Puzzle Project uses the same experimental robotic system that is also used within some subprojects of the German Humanoid Project SFB 588 (Dillmann, Becher, & Steinhaus 2004), the cognitive architecture to be used is already stipulated (Burghart et al. 2005). It is a modified hierarchical three-layered architecture which also uses components tailored towards different cognitive functions. The individual cognitive modules communicate and interact with each other across all layers. Robot tasks are coordinated with the help of Petri nets, and the actual flow of actions is also to be coded with Petri nets.
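For readers unfamiliar with Petri nets, the Python sketch below implements the standard firing rule for a place/transition net with unit arc weights: a transition is enabled when each input place holds a token, and firing moves tokens from input to output places. The net for gripping and placing a piece is a hypothetical example, not the robot’s actual task net.

```python
from collections import Counter


class PetriNet:
    """Minimal place/transition net with unit arc weights."""

    def __init__(self, marking: dict) -> None:
        self.marking = Counter(marking)          # place -> number of tokens
        self.transitions: dict = {}              # name -> (input places, output places)

    def add_transition(self, name: str, inputs: list, outputs: list) -> None:
        self.transitions[name] = (inputs, outputs)

    def enabled(self, name: str) -> bool:
        """A transition is enabled if every input place holds enough tokens."""
        inputs, _ = self.transitions[name]
        return all(self.marking[p] >= n for p, n in Counter(inputs).items())

    def fire(self, name: str) -> None:
        """Consume one token per input arc and produce one per output arc."""
        if not self.enabled(name):
            raise ValueError(f"transition {name!r} is not enabled")
        inputs, outputs = self.transitions[name]
        for p in inputs:
            self.marking[p] -= 1
        for p in outputs:
            self.marking[p] += 1


# Hypothetical net for one pick-and-place cycle of the robot hand.
net = PetriNet({"piece_located": 1, "hand_free": 1})
net.add_transition("grip", ["piece_located", "hand_free"], ["piece_gripped"])
net.add_transition("place", ["piece_gripped"], ["piece_placed", "hand_free"])
```

Because a transition can only fire when its preconditions hold, the net enforces the order grip-then-place without any explicit sequencing code.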

Figure 8: Concatenating actions clearly improves the interaction and reduces time

In order to achieve a fast translation of the simulated scenario onto the real robotic system we have started to combine ACT-R and the robot control as depicted in Figure 9.

The ACT-R scenario as presented in this paper is used as a component for task planning, attention control, higher-level execution supervision, dialogue management and the creation or optimisation of productions (learning). At present, all relevant productions for the scenario are stored in the ACT-R component; later on they are to be stored in the global knowledge base. The programming of interfaces between the ACT-R component and the robot control has already started. A production as coded within the ACT-R simulated scenario is translated into a robot command, which is coded in the form of a Petri net. Conversely, inputs to the robotic system, e.g. acknowledgements by the human or the detection of the human’s hand or of a jigsaw puzzle piece, are correlated with productions in the ACT-R scenario.

Conclusion

In this paper we have presented a novel approach to realizing a cooperative task between a human and a robotic system by combining the approaches of an engineer and a social scientist. For this purpose, a three-year-old child was closely watched in her effort to solve a children’s jigsaw puzzle with her mother acting as tutor. All actions were described, analyzed and transferred into productions applicable to the robotic system. As the robotic system does not use speech recognition, other senses like haptics and vision are used instead: rather than receiving spoken instructions, the robotic arm can be manually guided, or instructions can be given by pointing to a puzzle piece or an empty position in the puzzle. These aspects have been considered when designing the productions simulating the behavior of the robotic system. As the robot is to learn the procedure for solving a jigsaw puzzle with the help of a human tutor using the coded productions (basic robot skills as well as declarative knowledge about the puzzle and the human hand), the robot learning scenario has been simulated in ACT-R by allowing a concatenation of successfully performed robotic actions. Additionally, a visual output in ACT-R has been created simulating the environment, the focus of attention and the execution of actions by robot and human. Next steps include the application of further learning modes like reinforcement learning and heuristic selection of actions. Instead of generating a specific robotic cognitive structure, we intend to integrate the designed ACT-R component into our robotic architecture.

Figure 9: Integration of the ACT-R puzzle component into the robot architecture (diagram labels: top-, mid- and low-level; task knowledge, environment model, object models, ACT-R puzzle component; task coordination, task execution; puzzle piece recognition, hand detection, puzzle piece detection; mid- and low-level perception; robot movements; FTS, tactile and position sensor data; actuators, sensors; software, robot hardware, environment)

Acknowledgments

This research has been performed at the Institute of Process

Control and Robotics headed by Prof. H. Woern at the University of Karlsruhe.

References

Anderson, J. 1996. ACT: A simple theory of complex cognition. American Psychologist 51:355–365.

Billard, A. 2001. Learning motor skills by imitation: A biologically inspired robotic model. Int. Journal of Cybernetics and Systems 155–193.

Breazeal, C.; Hoffman, G.; and Lockerd, A. 2004. Teaching and working with robots as a collaboration. In Third International Joint Conference on Autonomous Agents and Multiagent Systems.

Burghart, C.; Mikut, R.; Holzapfel, H.; Steinhaus, P.; Asfour, T.; and Dillmann, R. 2005. A cognitive architecture for a humanoid robot: A first approach. In Proc. IEEE Int. Conf. on Humanoids.

Dillmann, R.; Becher, R.; and Steinhaus, P. 2004. ARMAR II - a learning and cooperative multimodal humanoid robot system. International Journal of Humanoid Robotics 1:143–155.

Gunzelmann, G., and Anderson, J. 2004. Spatial orientation using map displays: A model of the influence of target location. In Proceedings of the 26th Annual Conference of the Cognitive Science Society.

Hipp, M.; Badreddin, E.; Bartolein, C.; Wagner, A.; and Wittmann, W. 2005. Cognitive modeling to enhance usability of complex technical systems in rehabilitation technology on the example of a wheelchair. In Proceedings of the 2nd International Conference on Mechatronics.

Kawamura, K.; Dodd, W.; Ratanaswasd, P.; and Gutierrez, R. 2005. Development of a robot with a sense of self. In Proceedings of CIRA 2005.

Kerpa, O.; Osswald, D.; Yigit, S.; Burghart, C.; and Woern, H. 2003. Arm-hand-control by tactile sensing for human robot co-operation. In Proc. of Humanoids’2003.

Koedinger, K., and MacLaren, B. A. 1997. Implicit strategies and errors in an improved model of early algebra problem solving. In Proceedings of the Nineteenth Annual Conference of the Cognitive Science Society.

Koedinger, K., and Terao, A. 2002. A cognitive task analysis of using pictures to support pre-algebraic reasoning. In Proceedings of the Twenty-Fourth Annual Conference of the Cognitive Science Society.

Kozima, H.; Nakagawa, C.; and Yano, H. 2003. Can a robot empathize with people? In International Symposium “Artificial Life and Robotics” (AROB-98, Beppu).

Sofge, D.; Traftton, J.; and Schultz, A. 2002. Human robot collaboration and cognition with an autonomous mobile robot. In Proceedings of the International Conference on Intelligent Autonomous Systems IAS 8.

Taatgen, N. 1999. Explicit learning in ACT-R. In Mind modelling: A cognitive science approach to reasoning.

Yigit, S.; Burghart, C.; and Woern, H. 2003. Specific combined control mechanisms for human robot co-operation. In Proceedings ISC 2003.

Yigit, S.; Burghart, C.; and Woern, H. 2004. Co-operative carrying using pump-like constraints. In Proc. of Intelligent Robots and Systems (IROS 2004).