From: AAAI Technical Report WS-02-06. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

WhiteBear: An Empirical Study of Design Tradeoffs for Autonomous Trading Agents

Ioannis A. Vetsikas and Bart Selman

Department of Computer Science

Cornell University

Ithaca, NY 14853

{vetsikas,selman}@cs.cornell.edu

Abstract

This paper presents WhiteBear, one of the top-scoring agents in the 2nd International Trading Agent Competition (TAC). TAC was designed as a realistic, complex test-bed for designing agents trading in e-marketplaces. Our architecture is an adaptive, robust agent architecture combining principled methods and empirical knowledge. The agent faced several technical challenges. Deciding the optimal quantities to buy and sell, the desired prices and the time of bid placement was only part of its design. Other important issues that were solved were balancing the aggressiveness of the agent's bids against the cost of obtaining increased flexibility, and the integration of domain-specific knowledge with general agent design techniques. We present our observations and back our conclusions with empirical results.

Introduction

The Trading Agent Competition (TAC) was designed and organized by a group of researchers at the University of Michigan, led by Michael Wellman (Wellman et al. 2001). In earlier work, e.g. (Anthony et al. 2001), (Greenwald, Kephart, & Tesauro 1999), (Preist, Bartolini, & Phillips 2001), researchers tested their ideas about agent design in smaller market games that they designed themselves. Time was spent on the design and implementation of the market, but there was no common market scenario that researchers could focus on and use to compare strategies. TAC provides such a common framework. It is a challenging benchmark domain which incorporates several elements found in real marketplaces in the realistic setup of travel agents that organize trips for their clients. It includes several complementary and substitutable goods¹ traded in a variety of auctions by autonomous agents that seek to maximize their profit while minimizing expenses. These agents must decide the bids to be placed. The problem is isomorphic to variants of the winner determination problem in combinatorial auctions (Greenwald & Boyan 2001). However, instead of bidding for bundles and letting the auctioneer determine the final allocation that maximizes income (for this problem see (Fujishima, Leyton-Brown, & Shoham 1999), (Sandholm & Suri 2000)), in this setting the computational cost is moved to the agents, who have to deal with complementarities and substitutabilities of goods.

¹ The various entertainment tickets are substitutable, while hotels, plane and entertainment tickets are complementary goods.

Participating in TAC gave us the opportunity to experiment with general agent design methodologies. We study design tradeoffs applicable to most market domains. We examine the tradeoff of the agent paying a premium, either for acquiring information or for having a wider range of actions available, against the increased flexibility that this information provides. In addition, using the information known about the individual auctions and modelling the prices helps the agent to judge its flexibility more accurately and allows it to strike a balance between the cost of having an increased flexibility and the benefit obtained from it, which in turn improves overall performance even more. We also examine how agents of varying degrees of bidding aggressiveness perform in a range of environments against other agents of different aggressiveness. We find that there is a certain optimal level of "aggressiveness": an agent that is just aggressive enough to implement its plan outperforms agents who are either not aggressive enough or too aggressive. As we will see, the resulting agent strategy is quite robust and performs well in a range of agent environments.

We also show that even though generating a good plan is crucial for the agent to maximize its utility, it is not necessary to compute the optimal plan. We will see that a randomized greedy search method produces plans that are very close to optimal and more than good enough for effective bidding. In fact, most of the time the agent has only rather incomplete knowledge of the various price levels (auctions are still open), therefore solving the optimization task optimally does not necessarily lead to better overall performance. Our results show that it is actually more important to be able to quickly update the current plan whenever new information comes in. Our fast planning strategy allows us to do so (plan generation generally takes less than 1 second).

One substantial benefit of our modular agent architecture is that it is also able to combine seamlessly both principled methods and methods based on empirical knowledge. Overall our agent is adaptive, versatile, fast and robust, and its elements are general enough to work well under any situation that requires bidding in multiple simultaneous auctions. We have demonstrated this not only in the controlled experiments but also in practice by performing consistently among the top scoring agents in the TAC.

The paper is organized as follows. In the next section, we describe the TAC market game formally. After that we present our agent design architecture. In the sections afterwards we present the results of the competition and we explain the experiments performed to validate our conclusions. Finally we discuss possible directions for future work and conclude.

TAC: A Description of the Market Game

In the Trading Agent Competition, an autonomous trading agent competes against 7 other agents in each game. Each agent is a travel agent with the goal of arranging a trip to Tampa for CUST customers. To do so it must purchase plane tickets, hotel rooms and entertainment tickets. This is done in simultaneous online auctions. The agents send bids to the central server running at the University of Michigan and are informed about price quotes and transaction information. Each type of commodity (tickets, rooms) is sold in separate auctions (for a survey of auction theory see (Klemperer 1999)). Each game lasts for 12 minutes (720 seconds).

There is only one flight per day each way with an infinite amount of tickets, which are sold in separate, continuously clearing auctions in which prices follow a random walk. For each flight a hidden parameter $z$ is chosen uniformly in $[10, 90]$. The initial price is chosen uniformly in $[250, 400]$ and is perturbed by a value drawn uniformly in $[-10, 10 + \frac{t}{720} \cdot (z - 10)]$, where $t$ (sec) is the time elapsed since the game started. The agents may not resell tickets.
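As an illustration of the flight price process just described, the following is a minimal simulation sketch. The update interval `step` (30 seconds here), the use of continuous draws, and the function name `simulate_flight_price` are assumptions made for the example; the game description above does not state how often prices are perturbed.

```python
import random

def simulate_flight_price(z, rng, step=30, horizon=720):
    """Return a list of (time, price) pairs for one flight.

    z       : hidden parameter of the flight, in [10, 90]
    step    : seconds between perturbations (hypothetical value)
    horizon : game length in seconds (720 in TAC)
    """
    price = rng.uniform(250, 400)          # initial price
    path = [(0, price)]
    for t in range(step, horizon + 1, step):
        upper = 10 + (t / 720) * (z - 10)  # upper bound grows with time
        price += rng.uniform(-10, upper)   # perturbation in [-10, upper]
        path.append((t, price))
    return path

rng = random.Random(0)
path = simulate_flight_price(z=rng.uniform(10, 90), rng=rng)
print(path[0], path[-1])
```

Because the upper bound of the perturbation grows with $t$ while the lower bound stays at $-10$, flights with a large hidden parameter drift upward more and more quickly as the game progresses.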

There are two hotels in Tampa: the Tampa Towers (cleaner and more convenient) and the Shoreline Shanties (the not so good and expected to be cheaper hotel). There are 16 rooms available each night at each hotel. Rooms for each of the days are sold by each hotel in separate, ascending, open-cry, multi-unit, 16th-price auctions. A customer must be given a hotel room for every night between the arrival date and the night before the departure date, and customers are not allowed to change hotel during their stay. These auctions close at randomly determined times: at minute 4 (4:00) a randomly selected auction closes, then another at 5:00, and so on until the last one closes at 11:00. No prior knowledge of the closing order exists and agents may not resell rooms or bids.

Entertainment tickets are traded (bought and sold) among the agents in continuous double auctions (stock market type auctions) that close when the game ends. Bids match as soon as possible. Each agent starts with an endowment of 12 random tickets and these are the only tickets available in the game.

Each customer $i$ has a preferred arrival date $PR_i^{arr}$ and a preferred departure date $PR_i^{dep}$. She also has a preference for staying at the good hotel, represented by a utility bonus $UH_i$, as well as individual preferences for each entertainment event $j$, represented by utility bonuses $UENT_{i,j}$. Let DAYS be the total number of days and ET the number of different entertainment types.

The parameters of customer $i$'s itinerary that an agent has to decide upon are the assigned arrival and departure dates, $AA_i$ and $AD_i$ respectively, whether the customer is placed in the good hotel, $GH_i$ (which takes value 1 if she is placed in the Towers and 0 otherwise), and $ENT_{i,j}$, which is the day that a ticket of the event $j$ is assigned to customer $i$ (this is e.g. 0 if no such ticket is assigned).

The utility that the travel plan has for each customer $i$ is:

$$util_i = 1000 + UH_i \cdot GH_i + \sum_{d=AA_i}^{AD_i} \max_j \{ UENT_{i,j} \cdot I(ENT_{i,j} = d) \} - 100 \cdot \left( |PR_i^{arr} - AA_i| + |PR_i^{dep} - AD_i| \right) \qquad (1)$$

if $1 \le AA_i < AD_i \le DAYS$, else $util_i = 0$, because the plan is not feasible.

It should be noted that only one entertainment ticket can be assigned each day and this is modeled by taking the maximum utility from each entertainment type on each day. We assume that an unfeasible plan means no plan (e.g. $AA_i = AD_i = 0$). The function $I(bool\_expr)$ is 1 if $bool\_expr$ = TRUE and 0 otherwise.
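The utility in equation (1) can be sketched as a small function. The argument names and the default of 5 days are assumptions made for this illustration (the text later refers to flights on days 1 and 5); the summation range over days follows equation (1) as written.

```python
def customer_utility(pref_arr, pref_dep, hotel_bonus, ent_bonus,
                     arr, dep, good_hotel, ent_day, days=5):
    """Utility of one customer's itinerary, following equation (1).

    ent_bonus[j] : utility bonus UENT for entertainment type j
    ent_day[j]   : day on which a ticket of type j is assigned (0 = none)
    good_hotel   : 1 if the customer stays in the Towers, 0 otherwise
    """
    if not (1 <= arr < dep <= days):
        return 0                      # infeasible plan means no plan
    util = 1000 + hotel_bonus * good_hotel
    for d in range(arr, dep + 1):
        # at most one ticket counts per day: take the best one assigned to day d
        util += max((ent_bonus[j] for j in range(len(ent_bonus))
                     if ent_day[j] == d), default=0)
    util -= 100 * (abs(pref_arr - arr) + abs(pref_dep - dep))
    return util

# Preferred trip 2..4 granted, good hotel, two tickets on different days.
print(customer_utility(2, 4, 100, [50, 120, 80], 2, 4, 1, [2, 3, 0]))
```

Assigning two tickets to the same day only earns the larger bonus, which is why a planner has an incentive to spread entertainment over the days of the stay.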

The total income for an agent is equal to the sum of its clients' utilities. Each agent searches for a set of itineraries (represented by the parameters $AA_i$, $AD_i$, $GH_i$ and $ENT_{i,j}$) that maximize this profit while minimizing its expenses.

The WhiteBear Architecture

The optimization problem in the 2nd TAC is the same as the one in the first one, so we knew strategies that worked well in that setting and had some idea of where to start in our implementation (Greenwald & Stone 2001). However, the new auction rules were different enough to introduce several issues that markedly changed the game and made it impossible to import agents without making extensive modifications. For an agent architecture to be useful in a general setting, it must be adaptive, flexible and easily modifiable, so that it is possible to make modifications on the fly and adapt the agent to the system in which it is operating. These were lessons that we incorporated into the design of our architecture.²

In addition, as information is gained by participation in the system, the agent architecture must allow and facilitate the incorporation of the knowledge obtained. During the competition we changed many parts of our agent to reflect the knowledge that we obtained. For example, we initially experimented with bidding based mainly on heuristics, but it did not take us too long to realize that this approach was not able to adapt fast enough to the swift pace required by bidding in simultaneous auctions. Bidding without an overall plan is quite inefficient, because of the complementarities and substitutabilities between goods. We therefore decided to formulate and solve the optimization problem of maximizing the utility of our agent (see Planner section). Such a fundamental change would be difficult and quite time-consuming if our architecture did not support interchangeable parts.

² The original architecture proposed by the TAC team seemed an appropriate starting point (with a number of necessary modifications).

The agent architecture is summarized as follows:

while (not end of game) {
    1. Get price quotes and transaction information
    2. Calculate price estimates
    3. Planner: Form and solve optimization problem
    4. Bidder: Bid according to plan and price estimates
}

Our architecture is highly modular and each component of the agent can be replaced by another or modified. In fact several parts of the components themselves are also substitutable. This highly modular and substitutable architecture allowed us to test several different strategies and to run controlled experiments as well. Also note that our agent repeatedly reevaluates its plan and bids accordingly, since in most domains it is crucial to react fast to information on the domain and to other agents' actions, and the TAC game is no exception to this rule. We furthermore designed our components to be as fast and adaptive as possible without sacrificing efficiency.

In the next subsection we describe how the agent generates the price estimate vectors. In the subsection afterwards we explain how the agent forms and then solves the optimization problem of maximizing its utility. In the last subsection we present the bidding strategies used by the bidder to implement the generated plan.

Price Estimate Vectors

In order to formulate the optimization problem that the planner solves, it is necessary to estimate the prices at which commodities are expected to be bought or sold. We started from the "priceline" idea presented in (Greenwald & Boyan 2001) and we simplified and extended it where appropriate. We implemented a module which calculates price estimate vectors (PEV). These contain the value (price) of the $x$th unit for each commodity. For some goods this price is the same for all units, but for others it is not; e.g. buying more hotel rooms usually increases the price one has to pay.

Let $O_d^{arr}$, $O_d^{dep}$, $O_d^{goodh}$, $O_d^{badh}$, $O_{d,t}^{ent}$ and $PEV_d^{arr}(x)$, $PEV_d^{dep}(x)$, $PEV_d^{goodh}(x)$, $PEV_d^{badh}(x)$, $PEV_{d,t}^{ent}(x)$ be respectively the quantities owned and the price estimates of the $x$th unit for the plane tickets, the hotel rooms and the entertainment tickets for event $t$ for each day $d$. Let us also define the operator $\sigma(f(x), z) = \sum_{i=1}^{z} f(i)$.

The price estimate vectors are

$$PEV_{d,t}^{ent}(x) \begin{cases} \le \text{current bid price} & \text{if } x \le O_{d,t}^{ent} \\ \ge \text{current ask price} & \text{if } x > O_{d,t}^{ent} \end{cases} \qquad (2)$$

$$PEV_d^{type}(x) \begin{cases} = 0 & \text{if } x \le O_d^{type} \\ \ge \text{current ask price} & \text{if } x > O_d^{type} \end{cases} \qquad (3)$$

where $type \in \{arr, dep, goodh, badh\}$.

Since, once bought, an agent cannot sell plane tickets or hotel rooms to anyone else, their value is 0 for the agent; it has to treat the money that it has already paid for them as a "sunk cost". The agent must buy all rooms that it is currently winning if the auction closes, so these are also considered owned by the planner. For the plane tickets the PEV's are equal to the current ask price, for tickets not yet bought, but for hotel rooms it is higher than that. In fact, we estimate the price of the next room bought as a function of the current price, and for each additional room the price estimate increases by an amount proportional to the current price and to the day and type of the room (rooms on days 2 and 3 or in the good hotel are estimated to cost more than rooms on days 1 and 4 or in the bad hotel). We also set $PEV_{d,t}^{ent}(x) = 0$ if $x < O_{d,t}^{ent}$ and $PEV_{d,t}^{ent}(x) = 200$ if $x > O_{d,t}^{ent} + 1$, because all we are certain of from observing the current ask and bid prices is that there is at least one ticket up for buy and sale, but we are not sure if there are any more. Also note that entertainment tickets owned by the agent do not have a value of 0, since they can be sold. By using them, the agent loses a value equal to the price at which it could have sold them. Therefore the PEV of owned tickets is equal to the price at which they are expected to be sold.

Planner

The planner is another module in our architecture. It uses the PEV's to generate the customers' itineraries that maximize the agent's utility. Therefore, it also provides the type and total quantity of commodities that should be purchased or sold to achieve this. In order to do this the planner formulates the following optimization problem:

$$\max_{AA_c, AD_c, GH_c, ENT_{c,t}} \left\{ \sum_{c=1}^{CUST} util_c - COST \right\} \qquad (4)$$

where the cost of buying the resources is

$$COST = \sum_{d=1}^{DAYS} \Bigg[ \sigma\Big(PEV_d^{arr}(x), \sum_{c=1}^{CUST} I(AA_c = d)\Big) + \sigma\Big(PEV_d^{dep}(x), \sum_{c=1}^{CUST} I(AD_c = d)\Big) + \sigma\Big(PEV_d^{goodh}(x), \sum_{c=1}^{CUST} \big[GH_c \cdot I(AA_c \le d < AD_c)\big]\Big) + \sigma\Big(PEV_d^{badh}(x), \sum_{c=1}^{CUST} \big[(1 - GH_c) \cdot I(AA_c \le d < AD_c)\big]\Big) + \sum_{t=1}^{ET} \sigma\Big(PEV_{d,t}^{ent}(x), \sum_{c=1}^{CUST} I(ENT_{c,t} = d)\Big) \Bigg] \qquad (5)$$
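To make the role of the summation operator and of the price estimate vectors in the cost term more concrete, here is a minimal sketch. The function names and the coefficients `markup` and `step_frac` are hypothetical; the text only states that hotel estimates start above the current price and grow with each additional room, without giving the actual function.

```python
def sigma(pev, n):
    """The summation operator: sum of pev(i) for i = 1..n."""
    return sum(pev(i) for i in range(1, n + 1))

def make_flight_pev(owned, ask):
    """Owned tickets are a sunk cost (0); every further ticket costs the ask price."""
    return lambda x: 0.0 if x <= owned else ask

def make_hotel_pev(owned, ask, markup=1.1, step_frac=0.2):
    """Hotel rooms: each additional room is estimated to cost more.

    markup and step_frac are illustrative values, not the agent's parameters.
    """
    def pev(x):
        if x <= owned:
            return 0.0
        extra = x - owned - 1               # rooms already added to the plan
        return ask * (markup + step_frac * extra)
    return pev

# Cost of needing 3 arrival tickets on a day when 1 is owned and the ask is 300.
print(sigma(make_flight_pev(owned=1, ask=300.0), 3))
# Cost of needing 2 rooms when none is owned and the current price is 100.
print(round(sigma(make_hotel_pev(owned=0, ask=100.0), 2), 2))
```

Summing such terms over all days and commodity types gives the COST of a candidate set of itineraries, so a plan that concentrates demand on one hotel night is charged progressively more per room.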

Once the problem has been formulated, the next step is solving it. This problem is NP-complete, but for the size of the TAC problem an optimal solution usually can be produced fast. In order to create a more general algorithm we realized that it should scale well with the size of the problem and should not include elaborate heuristics applicable only to the TAC problem. Thus we chose to implement a greedy algorithm: the order of customers is randomized and then each customer's utility is optimized separately. This is done a few hundred times in order to maximize the chances that the solution will be optimal most of the time. In practice we have found the following additions to be quite useful:

(i) Compute the utility of the plan $\mathcal{P}_1$ from the previous loop before considering other plans. Thus the algorithm always finds a plan $\mathcal{P}_2$ that is at least as good as $\mathcal{P}_1$ and there are relatively few radical changes in plans between loops. We observed empirically that this prevented some radical bid changes and improved efficiency.

(ii) We added a constraint that dispersed the bids of the agent for resources in limited quantities (hotel rooms in TAC). Plans which demanded, for a single day, more than 4 rooms in the same hotel, or more than 6 rooms in total, were not considered. This leads to some utility loss in rare cases. However, bidding heavily for one room type means that overall demand will very likely be high and therefore prices will skyrocket, which in turn will lower the agent's score significantly. We observed empirically that the utility loss from not obtaining the best plan tends to be quite small compared to the expected utility loss from rising prices.
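The randomized greedy search and addition (i) above can be sketched on a toy problem as follows. This is an illustration under simplifying assumptions, not the agent's planner: each customer picks one of a few precomputed candidate itineraries (a value plus the resources it uses), each itinerary uses a resource at most once, and the room-dispersion constraint of addition (ii) is omitted. All names are hypothetical.

```python
import random

def plan_score(plan, options, pev):
    """Total itinerary value minus cost, where the nth unit of a resource costs pev[r](n)."""
    demand, value = {}, 0.0
    for cust, k in plan.items():
        v, resources = options[cust][k]
        value += v
        for r in resources:
            demand[r] = demand.get(r, 0) + 1
    cost = sum(pev[r](i) for r, n in demand.items() for i in range(1, n + 1))
    return value - cost

def greedy_once(order, options, pev):
    """Optimize each customer separately, in the given order."""
    demand, plan = {}, {}
    for cust in order:
        def gain(k):
            v, resources = options[cust][k]
            # marginal cost of one more unit of each resource used
            return v - sum(pev[r](demand.get(r, 0) + 1) for r in resources)
        best = max(range(len(options[cust])), key=gain)
        plan[cust] = best
        for r in options[cust][best][1]:
            demand[r] = demand.get(r, 0) + 1
    return plan

def randomized_greedy(options, pev, previous_plan=None, n_orders=500, seed=0):
    rng = random.Random(seed)
    customers = list(options)
    best_plan = previous_plan
    # addition (i): the previous plan is the baseline that must be beaten
    best = plan_score(previous_plan, options, pev) if previous_plan is not None else float("-inf")
    for _ in range(n_orders):
        rng.shuffle(customers)
        plan = greedy_once(customers, options, pev)
        score = plan_score(plan, options, pev)
        if score > best:
            best_plan, best = plan, score
    return best_plan, best

options = {
    "c1": [(0.0, ()), (1000.0, ("room_d1",)), (900.0, ("room_d2",))],
    "c2": [(0.0, ()), (1000.0, ("room_d1",)), (700.0, ("room_d2",))],
}
pev = {"room_d1": lambda x: 100.0 * x, "room_d2": lambda x: 100.0 * x}
print(randomized_greedy(options, pev, n_orders=20))
```

Because the previous plan seeds the search, the returned plan never scores worse than it under the current price estimates, which matches the stability property described in addition (i).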

We have also verified that this randomized greedy algorithm gives solutions which are often optimal and never far from optimal. We checked the plans (at the game's end) that were produced by 100 randomly selected runs and observed that over half of the plans were optimal and on average the utility loss was about 15 points (out of 9800 to 10000 usually³), namely close to 0.15%. Compared to the usual utility of 2000 to 3000 that our agents score in most games, they achieved about 99.3% to 99.5% of optimal. These observations are consistent with the ones about a related greedy strategy in (Stone et al. 2001). Considering that at the beginning of the game the optimization problem is based on inaccurate values, since the closing hotel prices are not known, a 100%-optimal solution is not necessary and can be replaced by our almost optimal approximation. As commodities are bought and the prices approach their closing values, most of the commodities needed are already bought and we have observed empirically that bidding is rarely affected by the generation of approximately optimal solutions instead of optimal ones.

This algorithm takes approximately 1 second to run through 500 different randomized orders and compute an allocation for each. Our test bed was a cluster of 8 Pentium III 550 MHz CPUs, with each agent using no more than one CPU. This system was used for all our experiments and our participation in the TAC.⁴ Hence it is verified that our goal to provide a planner that is fast and not domain specific is accomplished, and not at the expense of the overall agent performance.

Bidding Strategies

Once the plan has been generated the bidder places separate bids for all the commodities needed to implement the plan. During the competition every team, including ours, used empirical observations from the games it participated in (over 1000) in order to improve its strategy. We experimented with several different approaches. In the next sections we describe the possible bidding strategies for the different goods and the tradeoffs that we faced.

³ These were the scores of the allocation at the end of the game (no expenses were considered).

⁴ During the competition only one processor was used, but during the experimentations we used all 8, since 8 different instantiations of the agent were running at the same time.

Paying for adaptability. The purchase of flight tickets presents a very interesting dilemma. We have verified that ticket prices are expected to increase approximately in proportion to the square of the time elapsed since the start of the game. This means that the more one waits the higher the prices will get, and the increase is more dramatic towards the end of the game. From that point of view, if an agent knows accurately the plan that it wishes to implement, it should buy the plane tickets immediately. On the other hand, if the plan is not known accurately (which is usually the case), the agent should wait until the prices for hotel rooms have been determined. This is because buying plane tickets early restricts the flexibility (adaptability) that the agent has in forming plans: e.g. if some hotel room that the agent needs becomes too expensive, then if it has already bought the corresponding plane tickets, it must either waste these, or pay a high price to get the room. An obvious tradeoff exists in this case, since delaying the purchase of plane tickets increases the flexibility of the agent and hence provides the potential for a higher income at the expense of some monetary penalty.
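The quadratic growth mentioned above can be recovered from the perturbation rule in the game description with a short calculation. The update interval $\Delta$ (seconds between perturbations) is an assumption introduced for this sketch, since the text does not state it, and any bounds on the price are ignored. The mean of a value drawn uniformly in $[-10, 10 + \frac{t}{720}(z - 10)]$ is

$$E[\text{perturbation at time } t] = \frac{-10 + 10 + \frac{t}{720}(z - 10)}{2} = \frac{t}{1440}(z - 10).$$

Summing over the $n$ perturbations at times $\Delta, 2\Delta, \ldots, n\Delta = t$ gives the expected total increase

$$E[p(t) - p(0)] = \sum_{k=1}^{n} \frac{k\Delta}{1440}(z - 10) = \frac{(z - 10)\,\Delta\, n(n+1)}{2880} \approx \frac{(z - 10)\, t^2}{2880\, \Delta},$$

so under these assumptions the expected price grows with the square of the elapsed time, at a rate proportional to $z - 10$.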

Our initial attempt was similar to some of our competitors (as we found out later, during the finals): we decided to bid for the tickets between 4:00 and 5:00. This is because most agents bid for the hotel rooms that they need right before 4:00, therefore after this time the room prices sufficiently approximate their closing prices and a plan created at that time is usually similar to the optimal plan for known closing prices. We tried waiting for a later minute, but we empirically observed that the price increase was more than the benefit from waiting. A further improvement is to buy some tickets at the start of the game. These tickets are bought based on the prices and the preferences of the customers (preference is given to tickets on days 1 and 5, to cheaper tickets and to customers with shorter itineraries). About 50% of the tickets are bought in this way and we have observed that these tickets are rarely wasted. Another improvement is to bid for 2 fewer tickets per flight than required by the plan until 4:00 and for 1 fewer until 5:00; this provides greater flexibility and it is highly unlikely that the final optimal plan will not make use of these tickets.

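A further improvement was obtained by estimating the hidden parameter $x$ of each flight. Let $\{y_i, t_i\}, \forall i \in \{1, \ldots, N\}$ be the pairs of the price changes $y_i$ observed at times $t_i$ and let $N$ be the number of such pairs. Let $A = x - 10$. Then $A \in \{0, \ldots, 80\}$ and $P[A = z] = \frac{1}{81}, \forall z \in \{0, \ldots, 80\}$ and $P[A = z] = 0, \forall z \notin \{0, \ldots, 80\}$, since $x$ is uniformly chosen among the integers in $[10, 90]$. Since $A$ is independent of the times $\{t_i = T_i\}$ when the changes occur, $P[A = z \mid \bigwedge_{i=1}^{N}(t_i = T_i)] = P[A = z]$. Also $y_i$ is uniformly chosen in $\{-10, \ldots, 10 + \lfloor A \frac{t_i}{720} \rfloor\}$, therefore $P[y_i = Y_i \mid (A = z) \wedge (t_i = T_i)] = \frac{1}{\lfloor z T_i / 720 \rfloor + 21}$ if $Y_i$ is an integer in $[-10, 10 + z \frac{T_i}{720}]$, and $P[y_i = Y_i \mid (A = z) \wedge (t_i = T_i)] = 0$ otherwise. In addition the price change $y_i$ only depends on time $t_i$ and is independent of all $t_j, \forall j \ne i$, therefore $P[y_i = Y_i \mid (A = z) \wedge (t_i = T_i)] = P[y_i = Y_i \mid (A = z) \wedge \{\bigwedge_{i=1}^{N}(t_i = T_i)\}]$. Given the observations made so far, the probability that $A = z$ for any $z \in \{0, \ldots, 80\}$ is

$$P\Big[A = z \,\Big|\, \Big\{\bigwedge_{i=1}^{N}(t_i = T_i)\Big\} \wedge \Big\{\bigwedge_{i=1}^{N}(y_i = Y_i)\Big\}\Big] = \frac{P\big[(A = z) \wedge \{\bigwedge_{i=1}^{N}(y_i = Y_i)\} \,\big|\, \bigwedge_{i=1}^{N}(t_i = T_i)\big]}{P\big[\bigwedge_{i=1}^{N}(y_i = Y_i) \,\big|\, \bigwedge_{i=1}^{N}(t_i = T_i)\big]} = \frac{P\big[A = z \,\big|\, \bigwedge_{i=1}^{N}(t_i = T_i)\big] \cdot p_z}{\sum_{\zeta=0}^{80} \big\{P\big[A = \zeta \,\big|\, \bigwedge_{i=1}^{N}(t_i = T_i)\big] \cdot p_\zeta\big\}} = \frac{\frac{1}{81} \cdot p_z}{\sum_{\zeta=0}^{80} \frac{1}{81} \cdot p_\zeta} = \frac{p_z}{\sum_{\zeta=0}^{80} p_\zeta}$$

where $p_z = P\big[\bigwedge_{i=1}^{N}(y_i = Y_i) \,\big|\, (A = z) \wedge \{\bigwedge_{i=1}^{N}(t_i = T_i)\}\big]$.

Given the value of $A$, the events $\{y_i = Y_i\}$ are independent of each other, thus $p_z = \prod_{i=1}^{N} P[y_i = Y_i \mid (A = z) \wedge \{\bigwedge_{i=1}^{N}(t_i = T_i)\}] = \prod_{i=1}^{N} P[y_i = Y_i \mid (A = z) \wedge (t_i = T_i)]$. Since $p_z$ is a product, if any of the conditional probabilities that make up the product is zero then $p_z = 0$ as well. Therefore for $p_z \ne 0$ it must be that $\forall i, -10 \le Y_i \le 10 + A \frac{T_i}{720} \Rightarrow \forall i, A \ge \frac{720 \cdot (Y_i - 10)}{T_i} \Rightarrow A \ge \max_i \frac{720 \cdot (Y_i - 10)}{T_i}$. Therefore $p_z = \prod_{i=1}^{N} \frac{1}{\lfloor z T_i / 720 \rfloor + 21}$ if $z \ge \max_i \frac{720 \cdot (Y_i - 10)}{T_i}$, and $p_z = 0$ otherwise. So we conclude that

$$P\Big[x = z \,\Big|\, \Big\{\bigwedge_{i=1}^{N}(t_i = T_i)\Big\} \wedge \Big\{\bigwedge_{i=1}^{N}(y_i = Y_i)\Big\}\Big] = \frac{p_z}{\sum_{\zeta=10}^{90} p_\zeta}$$

where, now indexing by the value $z$ of $x$, $p_z = \prod_{i=1}^{N} \frac{1}{\lfloor (z - 10) T_i / 720 \rfloor + 21}$ if $z \ge \max_i \frac{720 \cdot (Y_i - 10)}{T_i} + 10$ and $z \le 90$. Otherwise $p_z = 0$.

In practice we have observed that just the knowledge of the smallest value of $z$ for which $p_z > 0$ is enough to estimate the price increase and that we obtain this knowledge quite fast (around 2:00 to 3:00 usually). This information is then used to bid earlier for tickets whose price is very likely to increase and to wait more for tickets whose price is expected to increase little or not at all (they are bought when their usefulness⁵ for the agent is almost certain).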
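The posterior over the hidden parameter derived above can be computed directly from the observed price changes. The following is a minimal sketch of that calculation; the function name, the representation of observations as `(Y_i, T_i)` pairs with integer values, and the error raised for inconsistent data are assumptions of the example.

```python
def posterior_hidden_parameter(observations):
    """Posterior P[x = z | observations] for z in 10..90.

    observations : list of (Y_i, T_i) pairs, an integer price change Y_i
                   observed at integer time T_i (seconds since game start)
    """
    weights = {}
    for z in range(10, 91):
        a = z - 10
        p = 1.0
        for y, t in observations:
            k = (a * t) // 720            # floor(A * T_i / 720)
            if -10 <= y <= 10 + k:
                p *= 1.0 / (k + 21)       # {-10, ..., 10 + k} has k + 21 values
            else:
                p = 0.0                   # this z cannot explain the change
                break
        weights[z] = p
    total = sum(weights.values())
    if total == 0.0:
        raise ValueError("observations are inconsistent with every z in 10..90")
    return {z: w / total for z, w in weights.items()}

post = posterior_hidden_parameter([(25, 360), (-3, 120)])
smallest = min(z for z, p in post.items() if p > 0)
print(smallest)
```

In this example a change of 25 at $T = 360$ requires $A \ge 720 \cdot (25 - 10)/360 = 30$, so the smallest feasible value of the hidden parameter is 40, which is the quantity the text reports as being sufficient in practice.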

[Figure 1 plot: series Experiment 1 and Experiment 2; x-axis: Agent Types (WB-N2L, WB-M2L, WB-M2M, WB-M2H); y-axis ticks from 1500 to 4000.]

Figure 1: Average scores for some agents that do and some that do not model the flight ticket prices in two experiments

Bid Aggressiveness. Bidding for hotel rooms poses some interesting questions as well. The main issue in this case seems to be how aggressively each agent should bid (the level of the prices it submits in its bids). Originally we decided to have the agent bid an increment higher than the current price. The agent also bids progressively higher for each consecutive unit of a commodity for which it wants more than one unit. E.g. if the agent wants to buy 3 units of a hotel room, it might bid 210 for the first, 250 for the second and 290 for the third. This is the lowest (L) possible aggressiveness we have tried. Another bidding strategy that we have used is that the agent bids progressively closer to the marginal utility $\delta U$ as time passes⁶. This is the highest (H) aggressiveness level that we have tried. As a compromise between the two extreme levels we also implemented a version of the agent that bids like the aggressive (H) agent for rooms that have a high value of $\delta U$ and bids like the non-aggressive (L) agent otherwise. This is the agent of medium (M) aggressiveness. For more details on the behavior of these variants we ran several experiments and the results are presented later in the paper.

⁵ If this procedure is not used, then the agent pays an average extra cost of 240 to 300 because it waits until minutes 4 and 5 to buy the last tickets. If it is used, then this cost is almost halved.

⁶ The marginal utility $\delta U$ for a particular hotel room is the change in utility that occurs if the agent fails to acquire it. In fact for each customer $i$ that needs a particular room we bid $\frac{\delta U}{z}$ instead of $\delta U$, where $z$ is the number of rooms which are still needed to complete her itinerary. We do this in order not to drive the prices up prematurely.
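The three aggressiveness levels described in the Bid Aggressiveness paragraph can be sketched as below. Only the general shape comes from the text: the increment and step sizes are chosen to reproduce the 210/250/290 example for a current price of 200, while the linear time schedule in `high_bid` and the `threshold` that separates "high" marginal utility are hypothetical choices made for illustration.

```python
def low_bids(current_price, units, increment=10.0, step=40.0):
    """(L) Bid just above the current price, progressively higher per extra unit."""
    return [current_price + increment + k * step for k in range(units)]

def high_bid(current_price, delta_u, rooms_needed, t, t_end=720.0, increment=10.0):
    """(H) Move from the low bid towards delta_u / rooms_needed as time passes.

    The linear schedule in t is an assumption, not the agent's actual rule.
    """
    target = delta_u / rooms_needed
    base = current_price + increment
    frac = min(max(t / t_end, 0.0), 1.0)
    return max(base, base + frac * (target - base))

def medium_bid(current_price, delta_u, rooms_needed, t, threshold=600.0):
    """(M) Bid like H for rooms with high marginal utility, like L otherwise."""
    if delta_u >= threshold:
        return high_bid(current_price, delta_u, rooms_needed, t)
    return low_bids(current_price, 1)[0]

print(low_bids(200.0, 3))
print(high_bid(200.0, 900.0, 3, t=720.0))
print(medium_bid(200.0, 300.0, 1, t=720.0))
```

Dividing the marginal utility by the number of rooms still needed keeps an aggressive agent from committing the whole value of an itinerary to each individual room.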

As far as the timing of the bids is concerned, there is little ambiguity about what a very good strategy is. The agent waits until 3:00 and then, before 4:00, places its first bids based on the plan generated by the planner. The reason for this is that it does not wish to increase the prices earlier than necessary nor to give away information to the other agents. We also observed empirically that an added feature which increases performance is to place bids for a small number of rooms at the beginning of the game at a very low price (whether they are needed or not). In case these rooms are eventually bought, the agent pays only a very small price and gains increased flexibility in implementing its plan.

Entertainment. The agent buys (sells) the entertainment tickets that it needs (does not need) for implementing its plan at a price equal to the current price plus (minus) a small increment. The only exceptions to this rule are:

(i) At the game's start, and depending on how many tickets the agent begins with, it will offer to buy tickets at low prices, in order to increase its flexibility at a small cost. Even if these tickets are not used the agent often manages to sell them for a profit.

(ii) The agent will not buy (sell) at a high (low) price, even if this is beneficial to its utility, because otherwise it helps other agents. This restriction is somewhat relaxed at 11:00, in order for the agent to improve its score further, but it will still avoid some beneficial deals if these would be very profitable for another agent.

Experimental Results

To verify our observations and show the generality of our conclusions, we decided to perform several controlled experiments. Thus we gain more precise knowledge of the behavior of different types of agents in various situations.

            Agent Scores                                              Average Scores            Statistically Significant Difference?
#WB-M2H     1      2      3      4      5      6      7      8       WB-M2L  WB-M2M  WB-M2H    M2L/M2M  M2M/M2H  M2L/M2H
0 (178)     2614   2638   2490   2463   2421   2442   2526   2455    2466    2626    N/A       ✓
2 (242)     2339   2350   2269   2265   2229   2241 | 2347   2371    2251    2344    2359      ✓        ×        ✓
4 (199)     2130   2073   2072   2029 | 2046   2098   2048   2033    2051    2101    2056      ×        ×        ×
6 (100)     1112   1165 | 796    843    920    884    848    898     N/A     1138    865                ✓

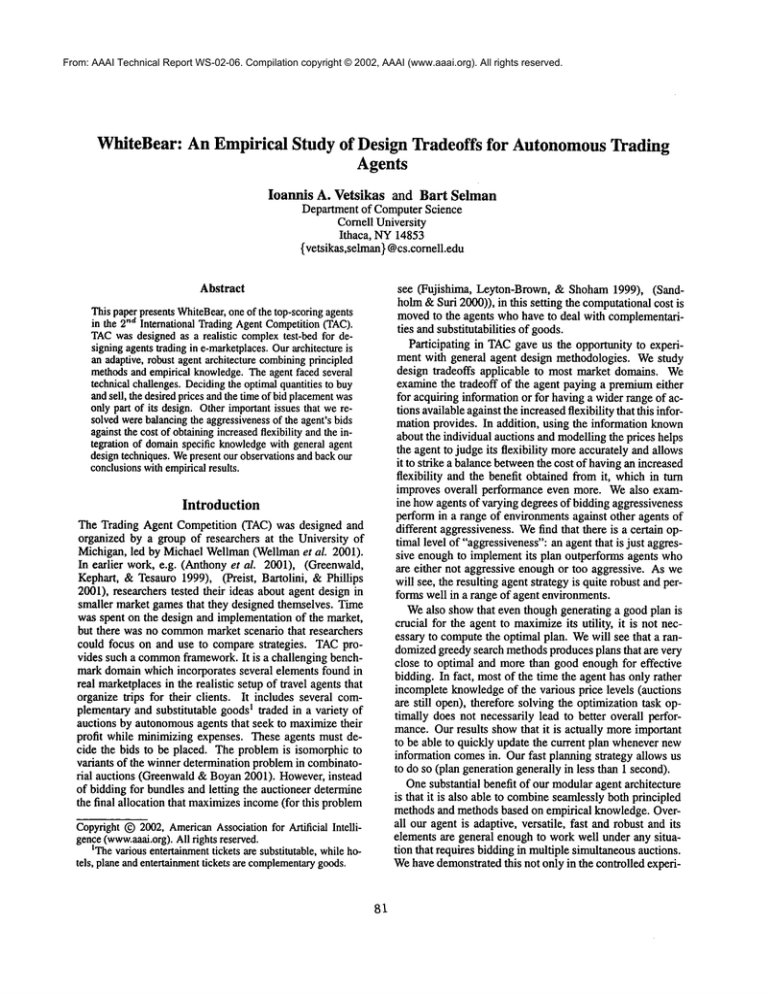

Table 2: Scores for agents WB-M2L, WB-M2M and WB-M2H as the number of aggressive agents (WB-M2H) participating increases. In each experiment agents 1 and 2 are instances of WB-M2M. The agents above the stair-step line are WB-M2L, while the ones below are WB-M2H. The average scores for each agent type are presented in the next columns. In the last columns, ✓ indicates a statistically significant difference in the scores of the corresponding agents, while × indicates similar scores (no statistically significant difference).

[Figure 2 plot: legend WB-M2H, WB-M2M, WB-M2L; x-axis: # Aggressive Agents (WB-M2H), 0 to 6; y-axis ticks from 800 to 2800.]

Figure 2: Changes in agents' average scores as the number of very aggressive agents participating in the game increases

Experiments              WB-N2L  WB-M2L  WB-M2M  WB-M2H
Exp 1 (144)  agent 1     2087    2238    2387    2429
             agent 2     2087    2274    2418    2399
             average     2087    2256    2402    2414
Exp 2 (200)              3519    3581    3661    3656

Table 1: Average scores of agents WB-N2L, WB-M2L, WB-M2M and WB-M2H. For experiment 1 the scores of the 2 instances of each agent type are also averaged. The number inside the parentheses is the total number of games for each experiment and this will be the case for every table.

To distinguish between the different versions of the agent, we use the notation WB-xyz, where (i) x is M if the agent models the plane ticket prices and N if this feature is not used, (ii) y takes values 0, 1 or 2 and is equal to the maximum number of plane tickets per flight which are not bought before 4:00, in order not to restrict the planner's options⁷, and (iii) z characterizes the aggressiveness with which the agent bids for hotel rooms and takes values L, M and H for low, medium and high degree of aggressiveness respectively.

To formally evaluate whether an agent type outperforms another type, we use paired t-tests for all our experiments; values of less than 10% are considered to indicate a statistically significant difference (in most of the experiments the values are actually well below 5%). If more than one instance of a certain agent type participates in an experiment, we compute the t-test for all possible combinations of instances.⁸

⁷ In the bidding section, we mentioned that until 4:00 the agent buys $PL_d^{arr} - y$ and $PL_d^{dep} - y$ plane tickets, where $PL_d^{arr}$, $PL_d^{dep}$ are the total numbers of plane tickets needed to implement the plan. In that case we used $y = 2$. Other options are $y = 0$ (the agent buys everything at the beginning) and $y = 1$ (one ticket per flight is not bought until after 4:00).
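The evaluation procedure described above, a paired t-test for every combination of instances of two agent types, can be sketched as follows. The sketch computes only the t statistic with the standard library; turning it into the percentage values mentioned in the text requires the CDF of Student's t distribution (for example via `scipy.stats.ttest_rel`, if SciPy is available). The data layout, per-game scores keyed by instance name, and the function names are assumptions of the example.

```python
from itertools import product
from math import sqrt
from statistics import mean, stdev

def paired_t_statistic(a, b):
    """t statistic of the per-game score differences between two agent instances."""
    diffs = [x - y for x, y in zip(a, b)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))

def all_pairings(scores_a, scores_b):
    """One paired test per (instance of type A, instance of type B)."""
    return {(i, j): paired_t_statistic(scores_a[i], scores_b[j])
            for i, j in product(scores_a, scores_b)}

# Toy per-game scores for 2 instances of one type and 4 of another.
type_a = {"A1": [2600, 2500, 2700], "A2": [2650, 2480, 2690]}
type_b = {f"B{k}": [2400 + 10 * k, 2450 - 5 * k, 2500 + k] for k in range(1, 5)}
tests = all_pairings(type_a, type_b)
print(len(tests))
```

With 2 instances of one type and 4 of the other this produces 8 tests, and the difference between the types would be called significant only if almost all of them fall below the chosen threshold.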

The first set of experiments was aimed at verifying our observation that modeling the plane ticket prices improves the performance of the agent. This is something that we expected, since the agent uses this information to bid later for tickets whose price will not increase much (therefore achieving a greater flexibility at low cost), while bidding early for tickets whose price increases faster (therefore saving a considerable amount of money). We ran 2 experiments with the following 4 agent types: WB-N2L, WB-M2L, WB-M2M and WB-M2H. In the first we ran 2 instances of each agent, while in the second we ran only one and the other 4 slots were filled with the standard agent provided by the TAC support team. The results of these experiments are presented in Table 1. The other agents, which model the plane ticket prices, perform better than agent WB-N2L, which does not do so (Figure 1). The differences between WB-N2L and the other agents are statistically significant, except for the one between WB-N2L and WB-M2L in experiment 2. We also observe that WB-M2L is outperformed by agents WB-M2M and WB-M2H, which in turn achieve similar scores; these results are statistically significant for experiment 1.

Having determined that modeling of plane ticket prices leads to significant improvement, we concentrated our attention on agents WB-M2L, WB-M2M and WB-M2H in the next series of experiments. For all experiments, the number of instances of agent WB-M2M was 2, while the number of aggressive agents WB-M2H was increased from 0 to 6. The rest of the slots were filled with instances of agent WB-M2L. The results of these experiments are presented in table 2. As the number of agents which bid more aggressively increases, there is more competition between agents and the hotel room prices increase, leading to a decrease in scores.
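The setup of this series can be written down compactly. The sketch below is illustrative only: the names `AgentType`, `parse_agent_type` and `aggressiveness_lineups` are hypothetical, and the code assumes the naming scheme WB-xyz used in the text (x is M or N for modeling plane ticket prices or not, y is the number of tickets per flight held back until after 4:00, z is the aggressiveness level) and eight agent slots per game.

```python
from dataclasses import dataclass

SLOTS_PER_GAME = 8  # assumed: eight agents take part in each game


@dataclass(frozen=True)
class AgentType:
    models_prices: bool   # x: 'M' models plane ticket prices, 'N' does not
    late_tickets: int     # y: tickets per flight not bought until after 4:00
    aggressiveness: str   # z: 'L', 'M' or 'H'


def parse_agent_type(name):
    """Decode a name such as 'WB-M2H' into its three parameters."""
    prefix, _, code = name.partition("-")
    if prefix != "WB" or len(code) != 3:
        raise ValueError(f"not a WhiteBear variant name: {name!r}")
    x, y, z = code
    if x not in "MN" or y not in "012" or z not in "LMH":
        raise ValueError(f"unknown parameter value in {name!r}")
    return AgentType(x == "M", int(y), z)


def aggressiveness_lineups(n_medium=2, max_high=6):
    """One lineup per experiment: fixed WB-M2M count, 0..max_high WB-M2H,
    and WB-M2L filling the remaining slots."""
    lineups = []
    for n_high in range(max_high + 1):
        n_low = SLOTS_PER_GAME - n_medium - n_high
        lineups.append(["WB-M2M"] * n_medium + ["WB-M2H"] * n_high + ["WB-M2L"] * n_low)
    return lineups


for lineup in aggressiveness_lineups():
    print(lineup.count("WB-M2H"), "aggressive:", lineup)
```

This yields seven lineups, from six WB-M2L agents and no WB-M2H agents up to six WB-M2H agents and no WB-M2L agents.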

While the number of aggressive agents #WB-M2H ≤ 4, the decrease in score is relatively small for all agents and is approximately linear in #WB-M2H. The aggressive agents (WB-M2H) do relatively better in less competitive environments and non-aggressive agents (WB-M2L) do relatively better in more competitive environments, but still not well enough compared to WB-M2M and WB-M2H agents. Overall, WB-M2M (medium aggressiveness) performs comparably to or better than the other agents in every instance (figure 2).

[8] This means that 8 t-tests will be computed if we have 2 instances of type A and 4 of type B, etc. We consider the difference between the scores of A and B to be significant if almost all the tests produce values below 10%.

Agents WB-M2L are at a disadvantage compared to the other agents because they do not bid aggressively enough to acquire the hotel rooms that they need. When an agent fails to get a hotel room it needs, its score suffers a double penalty: (i) it will have to buy at least one more plane ticket at a high price in order to complete the itinerary, or else it will end up wasting at least some of the other commodities it has already bought for that itinerary, and (ii) since the arrival and/or departure date will probably be further away from the customer's preference and the stay will be shorter (hence fewer entertainment tickets can be assigned), there is a significant utility penalty for the new itinerary. On the other hand, aggressive agents (WB-M2H) will not face this problem and they will score well in the case that prices do not go up. If there are many of them in the game, though, the price wars will hurt them more than other agents. The reasons for this are: (i) aggressive agents will pay more than other agents, since the prices will rise faster for the rooms that they need the most in comparison to other rooms, which are needed mostly by less aggressive agents, and (ii) the utility penalty for losing a hotel room becomes comparable to the price paid for buying the room, so non-aggressive agents suffer only a relatively small penalty for being outbid. Agent WB-M2M performs well in every situation, since it bids enough to maximize the probability that it is not outbid for critical rooms, and avoids "price wars" to a larger degree than WB-M2H.
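The argument about price wars can be made concrete with a small calculation. The sketch below is only an illustration under assumed numbers: the function name `regret_of_losing` and all prices and penalties are hypothetical and do not come from the experiments. It treats the double penalty as the sum of the extra plane ticket cost and the utility loss, and compares it with the room price the agent would have paid.

```python
def regret_of_losing(room_price, extra_ticket_cost, utility_loss):
    """How much worse off an agent is when it misses a room than when it buys it.

    The double penalty (extra plane ticket plus lost utility) is what losing
    costs; the room price is what winning costs.
    """
    double_penalty = extra_ticket_cost + utility_loss
    return double_penalty - room_price


# Hypothetical values, chosen only to illustrate the two regimes.
EXTRA_TICKET_COST = 150.0
UTILITY_LOSS = 120.0

calm_market = regret_of_losing(60.0, EXTRA_TICKET_COST, UTILITY_LOSS)
price_war = regret_of_losing(250.0, EXTRA_TICKET_COST, UTILITY_LOSS)

print(f"regret when rooms are cheap: {calm_market:.0f}")
print(f"regret during a price war:   {price_war:.0f}")
```

With cheap rooms, missing a room costs far more than paying for it, which favors aggressive bidding. Once the room price approaches the penalty, being outbid costs little, which is the situation described in point (ii).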

The last experiments were intended to further explore the tradeoff of bidding early for plane tickets against waiting longer in order to gain more flexibility in planning. Initially we ran an experiment with 2 instances of each of the following agents: WB-M2M and WB-M2H (since they performed best in the previous experiments) together with WB-M1M (an agent that bids for most of its tickets at the beginning) and WB-M0H (this agent bids at the beginning for all the plane tickets it needs and bids aggressively for hotel rooms) [9]. We ran 78 games and observed that WB-M2M scores slightly higher than the other agents, while WB-M2H scores slightly lower. These results are, however, not statistically significant. For the next experiment we ran 2 instances of WB-M2M against 6 of WB-M0H to see how the latter (an early-bidding agent) performs in the system when there is a lot of competition. We observed that instances of WB-M2M try to stay clear of rooms whose price increases too much (usually, but not always, successfully), while the early bidders do not have this choice due to their reduced flexibility in changing their plans. The WB-M2M's averaged a score of 1586 against 1224, the average score of the WB-M0H's; we ran 69 games and the differences in the scores were statistically significant. We then changed the roles and ran 6 WB-M2M's against 2 WB-M0H's, to see how the latter performed in a setting where they were the minority. We ran 343 games and the score differences were not statistically significant. We observed that the WB-M0H's scored on average close to the score of the WB-M2M's and that, in comparison to the previous experiment, the scores for the WB-M2M's had decreased a little (1540) while the scores for the WB-M0H's had increased significantly (1517). These results allow us to conclude that it is usually beneficial not to bid for everything at the beginning of the game.

We are continuing our last set of experiments in order to increase the statistical confidence in the interpretation of the results so far. This is quite a time-consuming process, since each game is run at 20-minute intervals [10]. It took close to 2000 runs (about 4 weeks of continuous running time) to get the controlled experiment results and some 1000 more for our observations during the competition.

[9] An early bidder must be aggressive, because if it fails to get a room, it will pay a substantial cost for changing its plan, due to the lack of flexibility in planning.

[10] This is a restriction of the game and the TAC server.

Results of the Trading Agent Competition

The semifinals and finals of the 2nd International TAC were held on October 12, 2001 during the 3rd ACM Conference on e-Commerce held in Tampa. The preliminary and seeding rounds were held the month before, so that teams would have the opportunity to improve their strategies over time. Out of 27 teams (belonging to 19 different institutions), 16 teams were invited to participate in the semifinals and the best 8 advanced to the finals. The 4 top-scoring agents (scores in parentheses) were: livingagents (3670), ATTac (3622), WhiteBear (3513) and Urlaub01 (3421). The WhiteBear variant we used in the competition was WB-M2M.

The scores in the finals were higher than in the previous rounds, because most teams had learned (as we also did) that it was generally better to have a more adaptive agent than to bid too aggressively [11]. A surprising exception to this rule was livingagents, which followed a strategy similar to WB-M0H with the addition that it used historical prices to approximate closing prices. This agent capitalized on the fact that the other agents were careful not to be very aggressive and that prices remained quite low. Despite bidding aggressively for rooms, since prices did not go up, it was not penalized for this behavior. The plans that it had formed at the beginning of the game were thus easy to implement, since all it had to do was to make sure that it got all the rooms it needed, which it accomplished by bidding aggressively. In an environment where at least some of the other agents would bid more aggressively, this agent would probably be penalized quite severely for its strategy. We observed this behavior in the controlled experiments that we ran. ATTac went much further in its learning effort: it learned a model for the prices of the entertainment tickets and the hotel rooms based on parameters like the participating agents and the closing order for hotel auctions in previous games. Of course this makes the agent design more tailored to the particular environment of the competition [12], but it does improve the performance of the agent.

[11] This was demonstrated by the second controlled experiment that we ran as well.

Conclusions

In this paper we have proposed an architecture for bidding strategically in simultaneous auctions. We have demonstrated how to maximize the flexibility (actions available, information etc.) of the agent while minimizing the cost that it has to pay for this benefit, and that by using simple knowledge of the domain (like modeling prices) it can make this choice more intelligently and improve its performance even more. We also showed that bidding aggressively is not a panacea and established that an agent that is just aggressive enough to implement its plan efficiently outperforms, overall, agents that are either not aggressive enough or too aggressive. Finally, we established that even though generating a good plan is crucial for the agent to maximize its utility, the greedy algorithm that we used was more than capable of helping the agent produce results comparable to those of other agents that use a slower, provably optimal algorithm. One of the primary benefits of our architecture is that it is able to seamlessly combine both principled methods and methods based on empirical knowledge, which is the reason why it performed so consistently well in the TAC. Overall, our agent is adaptive, versatile, fast and robust, and its elements are general enough to work well under any situation that requires bidding in multiple simultaneous auctions.

In the future we will continue to run experiments in order to further determine parameters that affect the performance of agents in multi-agent systems. We also intend to incorporate learning into our agent to evaluate how much this improves performance.

Acknowledgements We would like to thank the TAC team for their technical support during the running of our experiments and the competition (over 3000 games). This research was supported in part under grants IIS-9734128 (career award) and BR-3830 (Sloan).

[12] If the participants or their strategies change drastically, the price prediction would most likely become significantly less accurate.

References

Anthony, P.; Hall, W.; Dang, V.; and Jennings, N. R. 2001. Autonomous agents for participating in multiple on-line auctions. In Proc IJCAI Workshop on E-Business and Intelligent Web, 54-64.

Fujishima, Y.; Leyton-Brown, K.; and Shoham, Y. 1999. Taming the computational complexity of combinatorial auctions: Optimal and approximate approaches. In Proc of the 16th International Joint Conference on Artificial Intelligence (IJCAI-99), 548-553.

Greenwald, A. R., and Boyan, J. 2001. Bid determination for simultaneous auctions. In Proc of the 3rd ACM Conference on Electronic Commerce (EC-01), 115-124 and 210-212.

Greenwald, A., and Stone, P. 2001. Autonomous bidding agents in the trading agent competition. IEEE Internet Computing, April.

Greenwald, A. R.; Kephart, J. O.; and Tesauro, G. J. 1999. Strategic pricebot dynamics. In Proceedings of the ACM Conference on Electronic Commerce (EC-99), 58-67.

Klemperer, P. 1999. Auction theory: A guide to the literature. Journal of Economic Surveys Vol 13(3).

Preist, C.; Bartolini, C.; and Phillips, I. 2001. Algorithm design for agents which participate in multiple simultaneous auctions. In Agent Mediated Electronic Commerce III (LNAI), Springer-Verlag, Berlin 139-154.

Sandholm, T., and Suri, S. 2000. Improved algorithms for optimal winner determination in combinatorial auctions. In Proc 7th Conference on Artificial Intelligence (AAAI-00), 90-97.

Stone, P.; Littman, M. L.; Singh, S.; and Kearns, M. 2001. ATTac-2000: an adaptive autonomous bidding agent. In Proc 5th International Conference on Autonomous Agents, 238-245.

Wellman, M. P.; Wurman, P. R.; O'Malley, K.; Bangera, R.; de Lin, S.; Reeves, D.; and Walsh, W. E. 2001. Designing the market game for TAC. IEEE Internet Computing, April.