

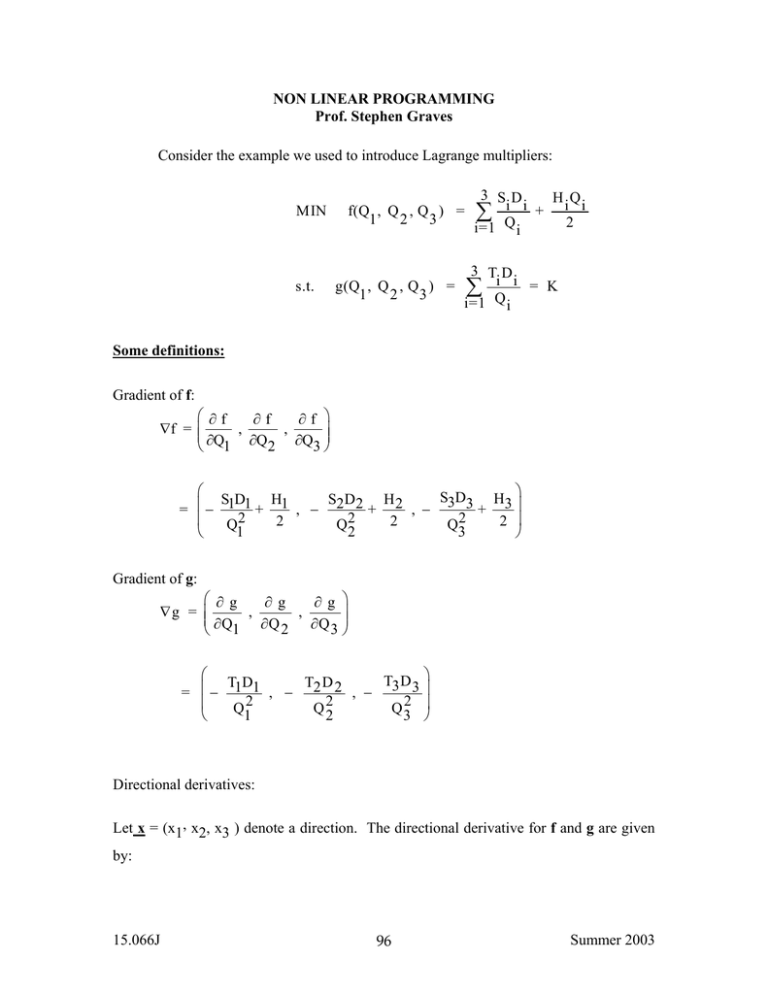

NON LINEAR PROGRAMMING

Prof. Stephen Graves

15.066J, Summer 2003

Consider the example we used to introduce Lagrange multipliers:

$$
\text{MIN} \quad f(Q_1, Q_2, Q_3) = \sum_{i=1}^{3} \left( \frac{S_i D_i}{Q_i} + \frac{H_i Q_i}{2} \right)
$$

$$
\text{s.t.} \quad g(Q_1, Q_2, Q_3) = \sum_{i=1}^{3} \frac{T_i D_i}{Q_i} = K
$$

Some definitions:

Gradient of f:

$$
\nabla f = \left( \frac{\partial f}{\partial Q_1}, \frac{\partial f}{\partial Q_2}, \frac{\partial f}{\partial Q_3} \right)
= \left( -\frac{S_1 D_1}{Q_1^2} + \frac{H_1}{2},\; -\frac{S_2 D_2}{Q_2^2} + \frac{H_2}{2},\; -\frac{S_3 D_3}{Q_3^2} + \frac{H_3}{2} \right)
$$

Gradient of g:

$$
\nabla g = \left( \frac{\partial g}{\partial Q_1}, \frac{\partial g}{\partial Q_2}, \frac{\partial g}{\partial Q_3} \right)
= \left( -\frac{T_1 D_1}{Q_1^2},\; -\frac{T_2 D_2}{Q_2^2},\; -\frac{T_3 D_3}{Q_3^2} \right)
$$

Directional derivatives:

Let $x = (x_1, x_2, x_3)$ denote a direction. The directional derivatives of f and g are given by:

$$
\frac{df}{dx} = \sum_{i=1}^{3} \left( \frac{\partial f}{\partial Q_i} \right) x_i = \sum_{i=1}^{3} \left( -\frac{S_i D_i}{Q_i^2} + \frac{H_i}{2} \right) x_i
$$

$$
\frac{dg}{dx} = \sum_{i=1}^{3} \left( \frac{\partial g}{\partial Q_i} \right) x_i = \sum_{i=1}^{3} \left( -\frac{T_i D_i}{Q_i^2} \right) x_i
$$

Note: the directional derivative is the "dot product" of the gradient and the direction vector.

For a given point, say $(Q_1, Q_2, Q_3)$, what is the direction of steepest ascent for the objective function f? That is, what direction provides the largest value for the directional derivative?

The direction of steepest ascent will be the solution to the following optimization problem:

$$
\text{MAX} \quad \frac{df}{dx} = \sum_{i=1}^{3} \left( -\frac{S_i D_i}{Q_i^2} + \frac{H_i}{2} \right) x_i
$$

$$
\text{s.t.} \quad x_1^2 + x_2^2 + x_3^2 = 1
$$

By using a Lagrange multiplier to solve this problem, you can show that the direction of steepest ascent is given by

$$
x^* = (x_1^*, x_2^*, x_3^*) = \frac{\nabla f}{\lVert \nabla f \rVert}
$$

That is, the "best" direction is the (normalized) gradient; for our example, the direction of steepest ascent (not normalized) is

$$
x^* = \left( -\frac{S_1 D_1}{Q_1^2} + \frac{H_1}{2},\; -\frac{S_2 D_2}{Q_2^2} + \frac{H_2}{2},\; -\frac{S_3 D_3}{Q_3^2} + \frac{H_3}{2} \right)
$$

Note that since the actual problem is a minimization, we actually want the direction of steepest descent, which would just be the negative of the gradient.

Now suppose that the given point $(Q_1, Q_2, Q_3)$ is feasible; that is, it satisfies the constraint:

$$
g(Q_1, Q_2, Q_3) = K
$$

What is the best feasible direction?
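Before taking up that question, the unconstrained quantities above can be checked numerically. The following is a minimal Python sketch, not part of the original notes: the values of `S`, `D`, `H` and the point `Q` are made up for illustration, and the function names are hypothetical. It evaluates the gradient of f, normalizes it to get the steepest-ascent direction, and compares the resulting directional derivative with that of randomly drawn unit directions.

```python
import numpy as np

# Hypothetical data for three items (illustrative values, not from the notes).
S = np.array([100.0, 50.0, 80.0])     # S_i
D = np.array([1000.0, 500.0, 200.0])  # D_i
H = np.array([2.0, 4.0, 1.0])         # H_i


def f(Q):
    """Objective: sum_i (S_i D_i / Q_i + H_i Q_i / 2)."""
    return np.sum(S * D / Q + H * Q / 2.0)


def grad_f(Q):
    """Gradient of f: component i is -S_i D_i / Q_i^2 + H_i / 2."""
    return -S * D / Q**2 + H / 2.0


def steepest_ascent(Q):
    """Unit-length steepest-ascent direction: grad f / ||grad f||."""
    g = grad_f(Q)
    return g / np.linalg.norm(g)


# An arbitrary (assumed) point at which to evaluate the directions.
Q = np.array([200.0, 100.0, 150.0])

x_star = steepest_ascent(Q)
best = grad_f(Q) @ x_star  # directional derivative along x*

# Draw random unit directions and compute their directional derivatives;
# none should exceed the value obtained along the normalized gradient.
rng = np.random.default_rng(0)
dirs = rng.normal(size=(1000, 3))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
others = dirs @ grad_f(Q)

print("steepest-ascent direction:", x_star)
print("directional derivative along x*:", best)
print("largest value among random unit directions:", others.max())
```

Because the problem is a minimization, a descent method would move along `-x_star` rather than `x_star`.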
The best feasible direction (for ascent) will be the solution to the following optimization problem:

$$
\text{MAX} \quad \frac{df}{dx} = \sum_{i=1}^{3} \left( -\frac{S_i D_i}{Q_i^2} + \frac{H_i}{2} \right) x_i
$$

$$
\text{s.t.} \quad x_1^2 + x_2^2 + x_3^2 = 1
$$

$$
\frac{dg}{dx} = \sum_{i=1}^{3} \left( -\frac{T_i D_i}{Q_i^2} \right) x_i = 0
$$

By using two Lagrange multipliers to solve the problem, you can find that the best feasible direction (called the reduced gradient) is given by:

$$
x^* = (x_1^*, x_2^*, x_3^*) = \nabla f - \left( \frac{\nabla f \cdot \nabla g}{\nabla g \cdot \nabla g} \right) \nabla g
$$

where

$$
\nabla f \cdot \nabla g = \sum_{i=1}^{3} \left( \frac{\partial f}{\partial Q_i} \, \frac{\partial g}{\partial Q_i} \right), \quad \text{and} \quad \nabla g \cdot \nabla g = \sum_{i=1}^{3} \left( \frac{\partial g}{\partial Q_i} \, \frac{\partial g}{\partial Q_i} \right)
$$

The reduced gradient can then be used as a search direction to improve the current solution.

To see that the reduced gradient is a feasible direction, we note that

$$
x^* \cdot \nabla g = \nabla f \cdot \nabla g - \left( \frac{\nabla f \cdot \nabla g}{\nabla g \cdot \nabla g} \right) \nabla g \cdot \nabla g = 0
$$

Besides determining the reduced gradient, an algorithm would also need to determine the "step size": namely, how far to move along the reduced gradient. An algorithm stops when the reduced gradient equals (approximately) the zero vector.
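The reduced-gradient formula and the feasibility check above can be illustrated with a short Python sketch. This is not part of the original notes: the data `S`, `D`, `H`, `T`, the point `Q`, and the fixed step size `t` are assumptions chosen for illustration, and `K` is simply set to `g(Q)` so that the starting point is feasible by construction. A real algorithm would choose the step size by some search procedure rather than fixing it.

```python
import numpy as np

# Hypothetical data for three items (illustrative values, not from the notes).
S = np.array([100.0, 50.0, 80.0])     # S_i
D = np.array([1000.0, 500.0, 200.0])  # D_i
H = np.array([2.0, 4.0, 1.0])         # H_i
T = np.array([0.5, 1.0, 2.0])         # T_i


def f(Q):
    """Objective: sum_i (S_i D_i / Q_i + H_i Q_i / 2)."""
    return np.sum(S * D / Q + H * Q / 2.0)


def g(Q):
    """Constraint function: sum_i T_i D_i / Q_i."""
    return np.sum(T * D / Q)


def grad_f(Q):
    """Gradient of f: component i is -S_i D_i / Q_i^2 + H_i / 2."""
    return -S * D / Q**2 + H / 2.0


def grad_g(Q):
    """Gradient of g: component i is -T_i D_i / Q_i^2."""
    return -T * D / Q**2


def reduced_gradient(Q):
    """grad f minus its projection onto grad g (not normalized)."""
    gf, gg = grad_f(Q), grad_g(Q)
    return gf - (gf @ gg) / (gg @ gg) * gg


Q = np.array([200.0, 100.0, 150.0])  # assumed current point
K = g(Q)                             # K chosen so that Q is feasible

r = reduced_gradient(Q)
print("reduced gradient:", r)
print("r . grad g (should be ~0):", r @ grad_g(Q))

# For the minimization we move against the reduced gradient.
t = 1.0                              # assumed fixed step size
Q_new = Q - t * r
print("f before / after:", f(Q), f(Q_new))
print("constraint drift g(Q_new) - K:", g(Q_new) - K)
```

Since g is nonlinear, a finite step along the reduced gradient keeps the constraint satisfied only to first order, so the printed drift is small but not exactly zero; a practical method would also need some way to restore feasibility after each step.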