Lecture 25

25.1 Goodness-of-fit for composite hypotheses.

(Textbook, Section 9.2)

Suppose that we have a sample of random variables $X_1, \ldots, X_n$ that can take a finite number of values $B_1, \ldots, B_r$ with unknown probabilities

$$p_1 = \mathbb{P}(X = B_1), \ldots, p_r = \mathbb{P}(X = B_r)$$

and suppose that we want to test the hypothesis that this distribution comes from a parametric family $\{\mathbb{P}_\theta : \theta \in \Theta\}$.

In other words, if we denote $p_j(\theta) = \mathbb{P}_\theta(X = B_j)$, we want to test:

$$\begin{aligned}
H_1 &: p_j = p_j(\theta) \text{ for all } j \le r \text{ for some } \theta \in \Theta \\
H_2 &: \text{otherwise.}
\end{aligned}$$

If we wanted to test $H_1$ for one particular fixed $\theta$ we could use the statistic

$$T = \sum_{j=1}^{r} \frac{(\nu_j - n p_j(\theta))^2}{n p_j(\theta)},$$

where $\nu_j$ is the number of observations equal to $B_j$, and use a simple $\chi^2$ test from last lecture. The situation now is more complicated because we want to test if $p_j = p_j(\theta)$, $j \le r$, at least for some $\theta \in \Theta$, which means that we have many candidates for $\theta$.

One way to approach this problem is as follows.

(Step 1) Assuming that hypothesis $H_1$ holds, i.e. $\mathbb{P} = \mathbb{P}_\theta$ for some $\theta \in \Theta$, we can find an estimate $\theta^*$ of this unknown $\theta$ and then

(Step 2) try to test whether indeed the distribution $\mathbb{P}$ is equal to $\mathbb{P}_{\theta^*}$ by using the statistic

$$T = \sum_{j=1}^{r} \frac{(\nu_j - n p_j(\theta^*))^2}{n p_j(\theta^*)}$$

in the $\chi^2$ test.


This approach looks natural; the only question is what estimate $\theta^*$ to use and how the fact that $\theta^*$ also depends on the data will affect the convergence of $T$.

It turns out that if we let $\theta^*$ be the maximum likelihood estimate, i.e. the $\theta$ that maximizes the likelihood function

$$\varphi(\theta) = p_1(\theta)^{\nu_1} \cdots p_r(\theta)^{\nu_r},$$

then the statistic

$$T = \sum_{j=1}^{r} \frac{(\nu_j - n p_j(\theta^*))^2}{n p_j(\theta^*)} \to \chi^2_{r-s-1}$$

converges to the $\chi^2_{r-s-1}$ distribution with $r - s - 1$ degrees of freedom, where $s$ is the dimension of the parameter set $\Theta$.
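The two steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the binomial family on $r = 4$ points, the counts, and the helper names (`pearson_statistic`, `grid_mle`, `family`) are all hypothetical, and the crude grid search stands in for whatever maximization method one would actually use.

```python
import math

def pearson_statistic(counts, probs):
    """T = sum_j (nu_j - n p_j)^2 / (n p_j)."""
    n = sum(counts)
    return sum((nu - n * p) ** 2 / (n * p) for nu, p in zip(counts, probs))

def log_likelihood(counts, probs):
    """log of p_1^nu_1 ... p_r^nu_r; cells with nu_j = 0 contribute 0."""
    return sum(nu * math.log(p) for nu, p in zip(counts, probs) if nu > 0)

def grid_mle(counts, family, lo, hi, steps=3000):
    """Crude grid-search MLE for a one-dimensional theta in (lo, hi).

    `family(theta)` returns [p_1(theta), ..., p_r(theta)].
    The endpoints are excluded so that no p_j(theta) is exactly 0.
    """
    grid = (lo + (hi - lo) * k / steps for k in range(1, steps))
    return max(grid, key=lambda t: log_likelihood(counts, family(t)))

# Hypothetical family: binomial(3, theta) on r = 4 points, so s = 1.
def family(theta):
    q = 1 - theta
    return [q ** 3, 3 * theta * q ** 2, 3 * theta ** 2 * q, theta ** 3]

counts = [20, 40, 30, 10]                          # made-up counts nu_1..nu_4
theta_star = grid_mle(counts, family, 0.0, 1.0)    # Step 1: estimate theta
T = pearson_statistic(counts, family(theta_star))  # Step 2: plug into T
dof = len(counts) - 1 - 1                          # r - s - 1
print(theta_star, T, dof)
```

The statistic `T` would then be compared with a quantile of the $\chi^2$ distribution with `dof` degrees of freedom.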

Of course, here we assume that s ≤ r − 2 so that we have at least one degree of freedom. Very informally, by dimension we understand the number of free parameters that describe the set Θ , which we illustrate by the following examples.

1. The family of Bernoulli distributions B ( p ) has only one free parameter p ∈ [0 , 1] so that the set Θ = [0 , 1] has dimension s = 1 .

2. The family of normal distributions $N(\mu, \sigma^2)$ has two free parameters $\mu \in \mathbb{R}$ and $\sigma^2 \ge 0$ and the set $\Theta = \mathbb{R} \times [0, \infty)$ has dimension $s = 2$.

3. Let us consider a family of all distributions on the set $\{0, 1, 2\}$. The distribution

$$\mathbb{P}(X = 0) = p_1, \quad \mathbb{P}(X = 1) = p_2, \quad \mathbb{P}(X = 2) = p_3$$

is described by parameters $p_1$, $p_2$ and $p_3$. But since they are supposed to add up to 1, $p_1 + p_2 + p_3 = 1$, one of these parameters is not free, for example, $p_3 = 1 - p_1 - p_2$.

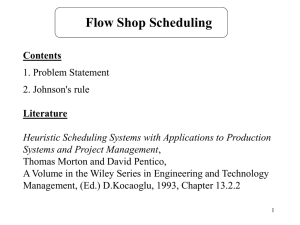

The remaining two parameters belong to the set

$$p_1 \in [0, 1], \quad p_2 \in [0, 1 - p_1]$$

shown in figure 25.1, since their sum should not exceed 1, and the dimension of this set is $s = 2$.


Figure 25.1: Free parameters of a three point distribution.


Example. (textbook, p.545) Suppose that a gene has two possible alleles $A_1$ and $A_2$ and the combinations of these alleles define three possible genotypes $A_1A_1$, $A_1A_2$ and $A_2A_2$. We want to test a theory that

Probability to pass $A_1$ to a child $= \theta$,
Probability to pass $A_2$ to a child $= 1 - \theta$,

and the probabilities of genotypes are given by

$$\begin{aligned}
p_1(\theta) &= \mathbb{P}(A_1A_1) = \theta^2 \\
p_2(\theta) &= \mathbb{P}(A_1A_2) = 2\theta(1 - \theta) \\
p_3(\theta) &= \mathbb{P}(A_2A_2) = (1 - \theta)^2
\end{aligned} \qquad (25.1)$$

Suppose that given the sample $X_1, \ldots, X_n$ of the population the counts of each genotype are $\nu_1$, $\nu_2$ and $\nu_3$.

To test the theory we want to test the hypotheses

$$\begin{aligned}
H_1 &: p_1 = p_1(\theta),\ p_2 = p_2(\theta),\ p_3 = p_3(\theta) \text{ for some } \theta \in [0, 1] \\
H_2 &: \text{otherwise.}
\end{aligned}$$

First of all, the dimension of the parameter set is $s = 1$ since the family of distributions in (25.1) is described by one parameter $\theta$.

To find the MLE $\theta^*$ we have to maximize the likelihood function

$$p_1(\theta)^{\nu_1} p_2(\theta)^{\nu_2} p_3(\theta)^{\nu_3}$$

or, equivalently, maximize the log-likelihood

$$\begin{aligned}
\log p_1(\theta)^{\nu_1} p_2(\theta)^{\nu_2} p_3(\theta)^{\nu_3}
&= \nu_1 \log p_1(\theta) + \nu_2 \log p_2(\theta) + \nu_3 \log p_3(\theta) \\
&= \nu_1 \log \theta^2 + \nu_2 \log 2\theta(1 - \theta) + \nu_3 \log (1 - \theta)^2 .
\end{aligned}$$

To find the critical point we take the derivative, set it equal to 0 and solve for $\theta$, which gives (we omit these simple steps):

$$\theta^* = \frac{2\nu_1 + \nu_2}{2n}.$$
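For readers who want the omitted steps, one way to fill them in is sketched below. It uses only the log-likelihood written above and the identity $n = \nu_1 + \nu_2 + \nu_3$; the name $\ell(\theta)$ for the log-likelihood is introduced here for convenience.

```latex
\begin{aligned}
\ell(\theta) &= \nu_1 \log \theta^2 + \nu_2 \log 2\theta(1-\theta) + \nu_3 \log (1-\theta)^2 \\
             &= (2\nu_1 + \nu_2) \log \theta + (\nu_2 + 2\nu_3) \log (1-\theta) + \nu_2 \log 2, \\
\ell'(\theta) &= \frac{2\nu_1 + \nu_2}{\theta} - \frac{\nu_2 + 2\nu_3}{1-\theta} = 0
  \;\Longrightarrow\; (2\nu_1 + \nu_2)(1-\theta) = (\nu_2 + 2\nu_3)\,\theta, \\
\theta^* &= \frac{2\nu_1 + \nu_2}{2\nu_1 + 2\nu_2 + 2\nu_3} = \frac{2\nu_1 + \nu_2}{2n}.
\end{aligned}
```

Since $\ell(\theta)$ is a sum of concave functions of $\theta$ on $(0, 1)$, this critical point is a maximum.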

Therefore, under the null hypothesis $H_1$ the statistic

$$T = \frac{(\nu_1 - np_1(\theta^*))^2}{np_1(\theta^*)} + \frac{(\nu_2 - np_2(\theta^*))^2}{np_2(\theta^*)} + \frac{(\nu_3 - np_3(\theta^*))^2}{np_3(\theta^*)} \to \chi^2_{r-s-1} = \chi^2_{3-1-1} = \chi^2_1$$

converges to the $\chi^2_1$ distribution with one degree of freedom. If we take the level of significance $\alpha = 0.05$ and find the threshold $c$ so that

$$0.05 = \alpha = \chi^2_1(T > c) \Rightarrow c = 3.841$$

then we can use the following decision rule:

$$\begin{aligned}
H_1 &: T \le c = 3.841 \\
H_2 &: T > c = 3.841
\end{aligned}$$
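As a worked illustration of the example, the short Python sketch below computes $\theta^*$, $T$ and the decision for two sets of genotype counts. The counts are made up for illustration (they are not the textbook's data), and the function name `genotype_test` is hypothetical. The tail probability uses the fact that a $\chi^2_1$ variable is the square of a standard normal, so $\chi^2_1(T > t) = \operatorname{erfc}(\sqrt{t/2})$.

```python
import math

C = 3.841  # threshold for alpha = 0.05 with one degree of freedom

def genotype_test(nu1, nu2, nu3, c=C):
    """Return (theta*, T, tail probability, accept H1?) for counts nu1, nu2, nu3.

    Assumes 0 < theta* < 1, so that every expected count n p_j(theta*) is positive.
    """
    n = nu1 + nu2 + nu3
    theta = (2 * nu1 + nu2) / (2 * n)                                # MLE theta*
    probs = [theta ** 2, 2 * theta * (1 - theta), (1 - theta) ** 2]  # (25.1)
    counts = [nu1, nu2, nu3]
    T = sum((nu - n * p) ** 2 / (n * p) for nu, p in zip(counts, probs))
    tail = math.erfc(math.sqrt(T / 2))      # chi^2_1 probability of exceeding T
    return theta, T, tail, T <= c

# Made-up counts that are close to the model's predictions.
close = genotype_test(30, 50, 20)
# Made-up counts with too few heterozygotes for any theta.
far = genotype_test(40, 20, 40)
print(close)
print(far)
```

In the second data set $\theta^* = 0.5$, the expected counts are 25, 50, 25, and $T = 9 + 18 + 9 = 36 > 3.841$, so $H_1$ is rejected.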

General families.

We could use a similar test when the distributions $\mathbb{P}_\theta$, $\theta \in \Theta$, are not necessarily supported by a finite number of points $B_1, \ldots, B_r$ (for example, continuous distributions). In this case if we want to test the hypotheses

$$\begin{aligned}
H_1 &: \mathbb{P} = \mathbb{P}_\theta \text{ for some } \theta \in \Theta \\
H_2 &: \text{otherwise}
\end{aligned}$$

we can discretize them as we did in the last lecture (see figure 25.2), i.e. consider a family of distributions

$$p_j(\theta) = \mathbb{P}_\theta(X \in I_j) \text{ for } j \le r,$$

and instead consider derivative hypotheses

$$\begin{aligned}
H_1 &: p_j = p_j(\theta) \text{ for some } \theta,\ j = 1, \ldots, r \\
H_2 &: \text{otherwise.}
\end{aligned}$$

[Figure: the distribution $\mathbb{P}_\theta$ with the line split into intervals $I_1, I_2, \ldots, I_r$.]

Figure 25.2: Goodness-of-fit for Composite Hypotheses.
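The discretization step can be sketched as follows for the normal family $N(\mu, \sigma^2)$. The cut points, the sample and the helper names (`normal_cdf`, `cell_probs`, `cell_counts`) are assumptions made for illustration; the intervals are taken to be $I_1 = (-\infty, c_1]$, $I_2 = (c_1, c_2]$, ..., $I_r = (c_{r-1}, \infty)$.

```python
import math

def normal_cdf(x, mu, sigma):
    """CDF of N(mu, sigma^2) at x, written with the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def cell_probs(cuts, mu, sigma):
    """p_j(theta) = P_theta(X in I_j) for theta = (mu, sigma)."""
    cdf = [0.0] + [normal_cdf(c, mu, sigma) for c in cuts] + [1.0]
    return [b - a for a, b in zip(cdf, cdf[1:])]

def cell_counts(sample, cuts):
    """nu_j = number of observations that fall in I_j."""
    counts = [0] * (len(cuts) + 1)
    for x in sample:
        j = sum(x > c for c in cuts)  # number of cut points strictly below x
        counts[j] += 1
    return counts

cuts = [-1.0, 0.0, 1.0]                  # hypothetical cut points, r = 4 intervals
probs = cell_probs(cuts, 0.0, 1.0)       # p_j(theta) at mu = 0, sigma = 1
counts = cell_counts([-2.0, -0.5, 0.0, 0.3, 2.5], cuts)  # made-up sample
print(probs, counts)
```

With these counts and the map $\theta \mapsto p_j(\theta)$, the test proceeds as in the finite case: $\theta^*$ maximizes $p_1(\theta)^{\nu_1} \cdots p_r(\theta)^{\nu_r}$, and here $r = 4$ and $s = 2$ would leave $r - s - 1 = 1$ degree of freedom.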