Charting Past, Present, and Future Research in Ubiquitous Computing

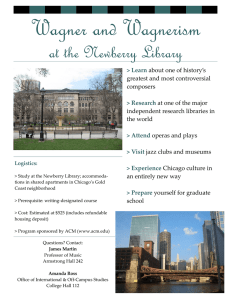
