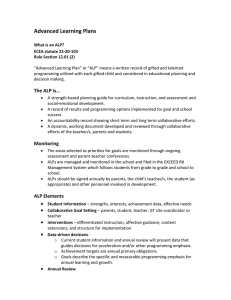

How to choose the state relevance weight in the approximate linear programming?

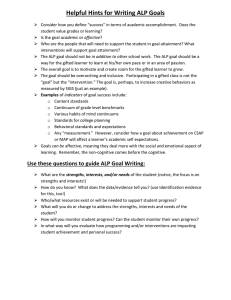

How to choose the state relevance weight in the approximate linear programming approach for dynamic programming?

Yann Le Tallec and Theophane Weber

Finite Markov chain framework

• Finite state space $X$
• For all $x \in X$, finite control space $U(x)$
• Bounded expected immediate cost $g_u(x)$ of control $u$ in state $x$
• Transition probability matrix under control $u$: $P_u$
• Proposition: Any finite Markov chain can be transformed into an equivalent finite Markov chain with $g_u(x) = g(x)$ for all $u \in U(x)$.

Linear programming

• Let $T$ be the DP operator for the $\alpha$-discounted problem: $TJ = \min_u \,(g + \alpha P_u J)$.
• By monotonicity of $T$, $J \le TJ \Rightarrow J \le TJ \le T^k J \le J^*$.
• Linear programming approach to DP: for all $c > 0$, $J^*$ is the unique optimal solution of

(LP): $\max_J \; c^T J$ s.t. $J(x) \le g(x) + \alpha \sum_y P_u(x,y)\, J(y), \quad \forall (x,u)$

Approximate linear program

• Curse of dimensionality. Approximate: $J^*(x) \approx \Phi(x)\, r$, $r \in \mathbb{R}^m$, $m \ll |X|$
• Approximate linear program, $c > 0$:

(ALP): $\max_r \; c^T \Phi r$ s.t. $\Phi r \le T \Phi r$

• Unlike (LP), $c$ matters: $r^* = r^*(c)$.
• $\Phi r \le T \Phi r \Rightarrow \Phi r \le T \Phi r \le J^*$

General performance bound

• Proposition: For all $J$ in $\mathbb{R}^{|X|}$,

$$E\big[\,|J_{u_J}(x) - J^*(x)|\,;\; x \sim \nu\,\big] = \|J_{u_J} - J^*\|_{1,\nu} \le \|J - J^*\|_{1,\mu_{\nu,u_J}}$$

where $\mu_{\nu,u}^T = (1-\alpha)\, \nu^T (I - \alpha P_u)^{-1}$ and $u_J$ is the greedy control with respect to $J$.
• In practice, $\nu$ is given by the application.

ALP approximation bound

• Proposition: Let $r^*$ be an optimal solution of (ALP). Then for all $v$ such that $\Phi v$ is a positive Lyapunov function,

$$\|J^* - \Phi r^*\|_{1,c} \le \frac{2\, c^T \Phi v}{1 - \beta_{\Phi v}} \, \min_r \|J^* - \Phi r\|_{\infty, 1/\Phi v}$$

• Compare with

$$\|J_{u_{\Phi r^*}} - J^*\|_{1,\nu} \le \|\Phi r^* - J^*\|_{1,\mu_{\nu,u_{\Phi r^*}}}$$

• Choose $c > 0$ to relate the two bounds in an efficient way.

Simple bounds

• We want $\|J^* - \Phi r^*\|_{1,\mu_{\nu,u_{\Phi r^*}}} \le K\, \|J^* - \Phi r^*\|_{1,c}$ for some $K > 0$, to yield

$$\|J^* - J_{u_{\Phi r^*}}\|_{1,\nu} \le K\, \frac{2\, c^T \Phi v}{1 - \beta_{\Phi v}} \, \min_r \|J^* - \Phi r\|_{\infty, 1/\Phi v}$$

• This relation follows from $\mu_{\nu,u_{\Phi r^*}} \le K c$.
• But $r^*$ depends implicitly on $c$ via (ALP).

1. Trivially, $c := 1$. But this gives a poor bound for a large state space.
2. Algorithm using $r^*(c) = r^*(Kc)$ for any $K > 0$:
   1. Solve (ALP) for any $c > 0$.
   2. Compute $\mu_{\nu,u_{\Phi r^*}}$.
   3. If possible, find the smallest $K > 0$ such that $\mu_{\nu,u_{\Phi r^*}} \le K c$.

Find pmf $c = \mu_{\nu,u_{\Phi r^*}}$

• If $c = \mu_{\nu,u_{\Phi r^*}} > 0$, $c$ cannot be big and we have $K = 1$.
• Naïve algorithm: $c_k \xrightarrow{\text{ALP}} r_k \xrightarrow{\text{greedy}} u_{\Phi r_k} \rightarrow \mu_{\nu,u_{\Phi r_k}} = c_{k+1}$.
• Fixed point? Convergence?
• Theoretical algorithm: relies on Brouwer's fixed point theorem for a continuous function on a convex compact subset of $\mathbb{R}^{|X|}$
   – $r_k$ is not well defined when (ALP) has multiple optima
   – $r_k$ is not continuous in $c$ $\Rightarrow$ randomize $c$ by Gaussian noise $N(0, vI)$, $v > 0$
   – greedy is not continuous in $r_k$ $\Rightarrow$ $\delta$-greedy: $P(u) \propto \exp\!\big(-\delta^{-1}(g + P_u \Phi r_k)\big)$
• For all $v$ and $\delta$, there is a fixed point to the naïve algorithm.

Reinforced ALP

• Would like to solve (ALP) with the additional constraint

$$c^T = \mu_{\nu,u_{\Phi r^*}}^T = (1-\alpha)\, \nu^T (I - \alpha P_{u_{\Phi r^*}})^{-1}$$

• Recall that $P_{u_{\Phi r^*}}$ is greedy w.r.t. $\Phi r^*$, i.e. $P_{u_{\Phi r^*}} \Phi r^* \le P_u \Phi r^*$ for all $u$.
• Hence,

$$\underbrace{(1-\alpha)\, \nu^T (I - \alpha P_{u_{\Phi r^*}})^{-1}}_{c^T} (I - \alpha P_u)\, \Phi r^* \le (1-\alpha)\, \nu^T \Phi r^*, \quad \forall u$$

• Add the necessary linear constraints to (ALP):

$$c^T (I - \alpha P_u)\, \Phi r^* \le (1-\alpha)\, \nu^T \Phi r^*, \quad \forall u$$

Conclusions

• Some simple bounds on the (ALP) policy, but not necessarily tight.
• Theoretical algorithm to find $c$ as a probability distribution.
• Some insight into the role of $c$ in (ALP).
• Need practical algorithms depending on $\nu$ and the Markov chain.
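
The weight $\mu_{\nu,u}$ that the naïve algorithm feeds back as the next $c$ can be computed directly on a small example. The sketch below is an illustration only, not the authors' implementation. It assumes a hypothetical 3-state, 2-control chain whose costs and transition probabilities are invented for the purpose, uses a control-independent cost $g$ as allowed by the framework proposition, and evaluates $\mu_{\nu,u}^T = (1-\alpha)\,\nu^T (I - \alpha P_u)^{-1}$ by summing the series $(1-\alpha)\sum_t \alpha^t \nu^T P_u^t$. Solving (ALP) itself requires an LP solver and is left out, so the greedy control is taken with respect to $J^*$ obtained by value iteration instead of $\Phi r^*$.

```python
"""Sketch: greedy control and state relevance weight mu_{nu,u} on a toy chain.

Every number here is hypothetical and chosen only for illustration.
"""

ALPHA = 0.9  # discount factor (assumed value)

# Control-independent cost g(x), as permitted by the framework proposition.
G = [1.0, 2.0, 0.0]

# P[u][x][y]: probability of moving from x to y under control u (toy values).
P = {
    0: [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]],
    1: [[0.9, 0.0, 0.1], [0.5, 0.0, 0.5], [0.1, 0.0, 0.9]],
}


def expected_next(J, row):
    """Expected value of J at the next state, given one transition row."""
    return sum(p * j for p, j in zip(row, J))


def value_iteration(alpha=ALPHA, iters=2000):
    """Approximate J* by repeatedly applying TJ = g + alpha * min_u P_u J."""
    J = [0.0] * len(G)
    for _ in range(iters):
        J = [G[x] + alpha * min(expected_next(J, P[u][x]) for u in P)
             for x in range(len(G))]
    return J


def greedy_policy(J):
    """u_J(x) = argmin_u sum_y P_u(x, y) J(y); g does not depend on u."""
    return [min(P, key=lambda u: expected_next(J, P[u][x]))
            for x in range(len(J))]


def policy_matrix(policy):
    """Transition matrix whose row x is taken from the control chosen at x."""
    return [P[u][x] for x, u in enumerate(policy)]


def relevance_weight(nu, policy, alpha=ALPHA, tol=1e-12):
    """mu^T = (1 - alpha) nu^T (I - alpha P_u)^{-1}, via the geometric series."""
    Pu = policy_matrix(policy)
    n = len(nu)
    term = [(1 - alpha) * v for v in nu]  # t = 0 term of the series
    mu = [0.0] * n
    while sum(term) > tol:
        mu = [m + t for m, t in zip(mu, term)]
        # Next term: alpha * (previous term as a row vector) * P_u.
        term = [alpha * sum(term[x] * Pu[x][y] for x in range(n))
                for y in range(n)]
    return mu


nu = [1 / 3, 1 / 3, 1 / 3]      # initial-state distribution (assumed uniform)
J_star = value_iteration()
u_star = greedy_policy(J_star)
mu = relevance_weight(nu, u_star)
print("greedy control:", u_star)
print("mu:", [round(m, 4) for m in mu])
```

Because $\nu$ is a probability distribution and $P_u$ is stochastic, the resulting $\mu_{\nu,u}$ is itself a probability distribution, which is why the slides can look for $c$ as a pmf. In a full version of the naïve iteration, `J_star` would be replaced by $\Phi r_k$ from an LP solve with weight $c_k$, and `mu` would become $c_{k+1}$.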