Automatic algorithms for time series forecasting Rob J Hyndman

Outline

1 Motivation

2 Exponential smoothing

3 ARIMA modelling

4 Automatic nonlinear forecasting?

5 Time series with complex seasonality

6 Hierarchical and grouped time series

7 The future of forecasting

Motivation

1

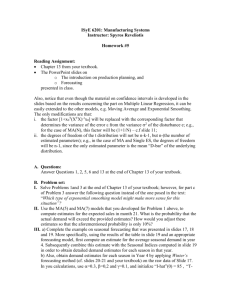

Common in business to have over 1000

products that need forecasting at least monthly.

2

Forecasts are often required by people who are

untrained in time series analysis.

Specifications

Automatic forecasting algorithms must:

å determine an appropriate time series model;

å estimate the parameters;

å compute the forecasts with prediction intervals.

Example: Asian sheep

[Figure: Numbers of sheep in Asia. Vertical axis: millions of sheep; horizontal axis: year, 1960 to 2010.]

[Figure: Automatic ETS forecasts for the same series.]

Example: Corticosteroid sales

[Figure: Monthly corticosteroid drug sales in Australia. Vertical axis: total scripts (millions); horizontal axis: year, 1995 to 2010.]

[Figure: Forecasts from ARIMA(3,1,3)(0,1,1)[12] for the same series.]

Exponential smoothing

Exponential smoothing methods

| Trend component | Seasonal N (None) | Seasonal A (Additive) | Seasonal M (Multiplicative) |
|---|---|---|---|
| N (None) | N,N | N,A | N,M |
| A (Additive) | A,N | A,A | A,M |
| Ad (Additive damped) | Ad,N | Ad,A | Ad,M |
| M (Multiplicative) | M,N | M,A | M,M |
| Md (Multiplicative damped) | Md,N | Md,A | Md,M |

- N,N: Simple exponential smoothing
- A,N: Holt's linear method
- Ad,N: Additive damped trend method
- M,N: Exponential trend method
- Md,N: Multiplicative damped trend method
- A,A: Additive Holt-Winters' method
- A,M: Multiplicative Holt-Winters' method

- There are 15 separate exponential smoothing methods.
- Each can have an additive or multiplicative error, giving 30 separate models.
- Only 19 models are numerically stable.
- Multiplicative trend models give poor forecasts, leaving 15 models.
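The counts 30, 19 and 15 can be reproduced by enumerating the combinations. The sketch below is illustrative only: the slides do not spell out which models are unstable, so the exclusion rule used here (additive errors combined with any multiplicative component, and multiplicative trend combined with additive seasonality) is an assumption that happens to reproduce the stated totals.

```r
# All error/trend/seasonal combinations: 2 x 5 x 3 = 30 models.
models <- expand.grid(
  error = c("A", "M"),
  trend = c("N", "A", "Ad", "M", "Md"),
  seasonal = c("N", "A", "M"),
  stringsAsFactors = FALSE
)

mult_trend <- models$trend %in% c("M", "Md")

# Assumed instability rule (not stated on the slides):
#  - additive error with a multiplicative trend or seasonal component
#  - multiplicative error with multiplicative trend and additive seasonality
unstable <- (models$error == "A" & (mult_trend | models$seasonal == "M")) |
  (models$error == "M" & mult_trend & models$seasonal == "A")

stable <- models[!unstable, ]
useful <- stable[!(stable$trend %in% c("M", "Md")), ]  # drop multiplicative trends

n_all <- nrow(models)
n_stable <- nrow(stable)
n_useful <- nrow(useful)
useful_labels <- paste0("ETS(", useful$error, ",", useful$trend, ",", useful$seasonal, ")")
print(c(all = n_all, stable = n_stable, useful = n_useful))
```

Printing `useful_labels` lists the remaining candidates under this assumed rule.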

General notation: ETS(Error, Trend, Seasonal), which also abbreviates ExponenTial Smoothing.

Examples:

- A,N,N: Simple exponential smoothing with additive errors
- A,A,N: Holt's linear method with additive errors
- M,A,M: Multiplicative Holt-Winters' method with multiplicative errors

Innovations state space models

- All ETS models can be written in innovations state space form (IJF, 2002).
- Additive and multiplicative versions give the same point forecasts but different prediction intervals.

ETS state space model

[Diagram: a chain in which the state $x_{t-1}$ and the innovation $\varepsilon_t$ produce the observation $y_t$ and the next state $x_t$; the pattern repeats for $y_{t+1}, \dots, y_{t+4}$ with states $x_{t+1}, \dots, x_{t+3}$.]

State space model: $x_t = (\text{level}, \text{slope}, \text{seasonal})$

Estimation: compute the likelihood $L$ from $\varepsilon_1, \varepsilon_2, \dots, \varepsilon_T$, then optimize $L$ with respect to the model parameters.

Innovations state space models

Let $x_t = (\ell_t, b_t, s_t, s_{t-1}, \dots, s_{t-m+1})$ and $\varepsilon_t \overset{\text{iid}}{\sim} N(0, \sigma^2)$.

Observation equation:

$$y_t = \underbrace{h(x_{t-1})}_{\mu_t} + \underbrace{k(x_{t-1})\varepsilon_t}_{e_t}$$

State equation:

$$x_t = f(x_{t-1}) + g(x_{t-1})\varepsilon_t$$

Additive errors: $k(x_{t-1}) = 1$, so $y_t = \mu_t + \varepsilon_t$.

Multiplicative errors: $k(x_{t-1}) = \mu_t$, so $y_t = \mu_t(1 + \varepsilon_t)$, and $\varepsilon_t = (y_t - \mu_t)/\mu_t$ is the relative error.
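To make the two error forms concrete, here is a minimal sketch for the simplest case, a level-only model with no trend or seasonality (ETS(A,N,N) and ETS(M,N,N)). The function name `ets_nn_levels`, the toy data and the parameter values are all hypothetical. With the same `alpha` and initial level, both versions trace out the same level (and hence the same point forecasts), while the innovations they imply differ.

```r
# Run the level-only recursions for additive ("A") or multiplicative ("M") errors.
ets_nn_levels <- function(y, alpha, l0, error = c("A", "M")) {
  error <- match.arg(error)
  level <- l0
  levels <- numeric(length(y))
  eps <- numeric(length(y))
  for (t in seq_along(y)) {
    mu <- level                               # mu_t = h(x_{t-1}) = previous level
    if (error == "A") {
      eps[t] <- y[t] - mu                     # k(x_{t-1}) = 1
      level <- level + alpha * eps[t]
    } else {
      eps[t] <- (y[t] - mu) / mu              # k(x_{t-1}) = mu_t, relative error
      level <- level * (1 + alpha * eps[t])
    }
    levels[t] <- level                        # one-step point forecast for t + 1
  }
  list(levels = levels, eps = eps)
}

y <- c(10, 12, 11, 13, 14, 13, 15)            # toy data, illustration only
add <- ets_nn_levels(y, alpha = 0.3, l0 = 10, error = "A")
mul <- ets_nn_levels(y, alpha = 0.3, l0 = 10, error = "M")
print(cbind(additive = add$levels, multiplicative = mul$levels))
```

In practice the two versions would be estimated separately and could end up with different parameter values; the equality shown here holds for fixed, shared parameters.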

Innovations state space models

- All models can be written in state space form.
- Additive and multiplicative versions give the same point forecasts but different prediction intervals.

Estimation

$$L^*(\theta, x_0) = n \log\Big(\sum_{t=1}^{n} \varepsilon_t^2 / k^2(x_{t-1})\Big) + 2 \sum_{t=1}^{n} \log|k(x_{t-1})| = -2\log(\text{Likelihood}) + \text{constant}$$

Minimize with respect to $\theta = (\alpha, \beta, \gamma, \phi)$ and the initial states $x_0 = (\ell_0, b_0, s_0, s_{-1}, \dots, s_{-m+1})$.
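As a rough illustration of this minimization, the sketch below fits the level-only additive-error model, where $k(x_{t-1}) = 1$ and the second term of $L^*$ vanishes. It reads the numerator of the first term as the raw one-step error $y_t - \mu_t$, which is an interpretation on my part. The function name `ann_criterion`, the synthetic data and the starting values are hypothetical, and base R's `optim` stands in for whatever optimizer a real implementation uses.

```r
# L*(theta, x0) for a level-only model with additive errors: n * log(sum of squared errors).
ann_criterion <- function(par, y) {
  alpha <- par[1]
  level <- par[2]                 # initial state l_0
  sse <- 0
  for (t in seq_along(y)) {
    err <- y[t] - level           # one-step error; equals the innovation when k = 1
    sse <- sse + err^2
    level <- level + alpha * err  # state update
  }
  length(y) * log(sse)            # log|k| = 0, so the second term drops out
}

set.seed(1)
y <- 20 + cumsum(rnorm(60, sd = 0.5)) + rnorm(60)   # synthetic series, illustration only

start <- c(0.5, y[1])
fit <- optim(
  par = start, fn = ann_criterion, y = y,
  method = "L-BFGS-B",
  lower = c(1e-4, -Inf), upper = c(0.9999, Inf)     # keep alpha inside (0, 1)
)
alpha_hat <- fit$par[1]
l0_hat <- fit$par[2]
print(c(alpha = alpha_hat, l0 = l0_hat, criterion = fit$value))
```

Models with trend or seasonality add more entries to `par` (further smoothing parameters and initial states) but follow the same pattern.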

Q: How to choose between the 15 useful ETS models?

Cross-validation

[Figure: three schematic panels of observations along a time axis.]

- Traditional evaluation: the series is split once into training data followed by test data.
- Standard cross-validation.
- Time series cross-validation, also known as "evaluation on a rolling forecast origin".
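A rolling forecast origin can be sketched in a few lines: fit on the first i observations, forecast observation i + 1, record the error, then move the origin forward by one. The helper names (`ses_forecast`, `ts_cv_mse`), the fixed `alpha`, the minimum training length and the synthetic series are all assumptions made for illustration.

```r
# One-step forecast from simple exponential smoothing with a fixed alpha.
ses_forecast <- function(train, alpha = 0.3) {
  level <- train[1]
  for (obs in train[-1]) level <- level + alpha * (obs - level)
  level
}

# Time series cross-validation: train on y[1:i], test on y[i + 1], for each origin i.
ts_cv_mse <- function(y, forecast_fn, min_train = 10) {
  origins <- min_train:(length(y) - 1)
  errors <- vapply(
    origins,
    function(i) y[i + 1] - forecast_fn(y[1:i]),
    numeric(1)
  )
  mean(errors^2)
}

set.seed(42)
y <- 50 + cumsum(rnorm(80))   # synthetic random walk, illustration only

cv_ses <- ts_cv_mse(y, ses_forecast)
cv_naive <- ts_cv_mse(y, function(train) train[length(train)])
print(c(ses = cv_ses, naive = cv_naive))
```

Every test observation lies strictly after its training window, which is what separates this scheme from standard cross-validation.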

Akaike's Information Criterion

$$\text{AIC} = -2\log(L) + 2k$$

where L is the likelihood and k is the number of estimated parameters in the model.

- This is a penalized likelihood approach.
- If L is Gaussian, then $\text{AIC} \approx c + T \log \text{MSE} + 2k$, where c is a constant, MSE is from one-step forecasts on the training set, and T is the length of the series.
- Minimizing the Gaussian AIC is asymptotically equivalent (as $T \to \infty$) to minimizing MSE from one-step forecasts on the test set via time series cross-validation.
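The Gaussian relationship can be checked numerically. The sketch below fits an AR(1) by least squares with `lm`, so the residuals are one-step training errors, and compares R's `AIC()` with `c + T log(MSE) + 2k`. Two assumptions are mine, not the slides': k counts the error variance as a parameter (R's convention for `lm`), and for this particular fit the constant is `c = T (log(2 * pi) + 1)`, which makes the relation exact.

```r
set.seed(123)
y <- as.numeric(arima.sim(list(ar = 0.6), n = 200))   # synthetic AR(1) series

# Regress y_t on y_{t-1}: residuals are one-step errors on the training set.
fit <- lm(y[-1] ~ y[-length(y)])

T_eff <- length(residuals(fit))        # number of one-step errors
mse <- mean(residuals(fit)^2)          # training MSE (maximum likelihood variance)
k <- length(coef(fit)) + 1             # intercept, AR coefficient, error variance
c_const <- T_eff * (log(2 * pi) + 1)   # the constant c for a Gaussian likelihood

aic_from_mse <- c_const + T_eff * log(mse) + 2 * k
print(c(AIC = AIC(fit), from_mse = aic_from_mse))
```

Because `c` and `T` are the same for every candidate fitted to the same data, ranking models by AIC here amounts to ranking them by `T log(MSE) + 2k`.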

Corrected AIC

For small T, AIC tends to over-fit. Bias-corrected version:

$$\text{AICc} = \text{AIC} + \frac{2(k+1)(k+2)}{T-k}$$

Bayesian Information Criterion

$$\text{BIC} = \text{AIC} + k[\log(T) - 2]$$

- BIC penalizes terms more heavily than AIC.
- Minimizing BIC is consistent if there is a true model.
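The claim that BIC penalizes more heavily follows from the BIC formula: the extra penalty is k[log(T) − 2], which is positive once log(T) > 2, that is for T of 8 or more. A small sketch, with hypothetical helper names and arbitrary example values:

```r
# Extra penalty that BIC adds on top of AIC, taken from BIC = AIC + k * (log(T) - 2).
bic_extra_penalty <- function(k, n_obs) k * (log(n_obs) - 2)

# BIC computed from an AIC value using the same relation.
bic_from_aic <- function(aic, k, n_obs) aic + bic_extra_penalty(k, n_obs)

n_obs <- c(5, 8, 50, 200)                 # example series lengths
extra <- bic_extra_penalty(k = 3, n_obs = n_obs)
names(extra) <- paste0("T=", n_obs)
print(round(extra, 3))
```

For very short series (T below 8) the sign flips and AIC is the stricter criterion, although series that short are rarely modeled with many parameters.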

What to use?

Choice: AIC, AICc, BIC, CV-MSE

- CV-MSE is too time-consuming for most automatic forecasting purposes. It also requires large T.
- As T → ∞, BIC selects the true model if there is one. But that is never true!
- AICc focuses on forecasting performance, can be used on small samples and is very fast to compute.
- Empirical studies in forecasting show AIC is better than BIC for forecast accuracy.

ets algorithm in R

Based on Hyndman, Koehler, Snyder & Grose (IJF 2002):

- Apply each of 15 models that are appropriate to the data. Optimize parameters and initial values using MLE.
- Select best method using AICc.
- Produce forecasts using best method.
- Obtain prediction intervals using underlying state space model.
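The steps above can be sketched in base R for a deliberately tiny candidate set. This is not the `ets` implementation: it covers only two additive-error models (level only, and level plus additive trend), uses `optim` as the optimizer, and every function name and starting value is hypothetical. The AICc line transcribes the correction as printed earlier in these slides, with k counting smoothing parameters and initial states.

```r
# Sum of squared one-step errors for an additive-error model, with optional additive trend.
ets_sse <- function(par, y, trend) {
  alpha <- par[1]
  level <- par[2]
  beta <- if (trend) par[3] else 0
  slope <- if (trend) par[4] else 0
  sse <- 0
  for (obs in y) {
    err <- obs - (level + slope)          # one-step forecast error
    sse <- sse + err^2
    level <- level + slope + alpha * err  # level update
    slope <- slope + beta * err           # slope update (unchanged when beta = 0)
  }
  sse
}

# Fit one candidate by minimizing n * log(SSE), then attach AIC and AICc.
fit_candidate <- function(y, trend) {
  n <- length(y)
  start <- if (trend) c(0.5, y[1], 0.1, y[2] - y[1]) else c(0.5, y[1])
  lower <- if (trend) c(1e-4, -Inf, 1e-4, -Inf) else c(1e-4, -Inf)
  upper <- if (trend) c(0.9999, Inf, 0.9999, Inf) else c(0.9999, Inf)
  opt <- optim(start, function(p) n * log(ets_sse(p, y, trend)),
               method = "L-BFGS-B", lower = lower, upper = upper)
  k <- length(start)                      # smoothing parameters plus initial states
  aic <- opt$value + 2 * k                # -2 log-likelihood (up to a constant) + 2k
  aicc <- aic + 2 * (k + 1) * (k + 2) / (n - k)   # correction as printed on the slides
  list(par = opt$par, aic = aic, aicc = aicc)
}

set.seed(7)
y <- 10 + 2 * seq_len(40) + rnorm(40, sd = 0.3)   # synthetic trending series

candidates <- list(ANN = fit_candidate(y, trend = FALSE),
                   AAN = fit_candidate(y, trend = TRUE))
aicc <- vapply(candidates, function(m) m$aicc, numeric(1))
best <- names(which.min(aicc))
print(aicc)
print(best)
```

On this strongly trending toy series the trend model should be preferred; the full algorithm repeats the same fit-and-compare loop over all admissible models.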

Automatic algorithms for time series forecasting

Exponential smoothing

17

ets algorithm in R

Based on Hyndman, Koehler,

Snyder & Grose (IJF 2002):

Apply each of 15 models that are

appropriate to the data. Optimize

parameters and initial values

using MLE.

Select best method using AICc.

Produce forecasts using best

method.

Obtain prediction intervals using

underlying state space model.

Automatic algorithms for time series forecasting

Exponential smoothing

17

ets algorithm in R

Based on Hyndman, Koehler,

Snyder & Grose (IJF 2002):

Apply each of 15 models that are

appropriate to the data. Optimize

parameters and initial values

using MLE.

Select best method using AICc.

Produce forecasts using best

method.

Obtain prediction intervals using

underlying state space model.

Automatic algorithms for time series forecasting

Exponential smoothing

17

ets algorithm in R

Based on Hyndman, Koehler,

Snyder & Grose (IJF 2002):

Apply each of 15 models that are

appropriate to the data. Optimize

parameters and initial values

using MLE.

Select best method using AICc.

Produce forecasts using best

method.

Obtain prediction intervals using

underlying state space model.

Automatic algorithms for time series forecasting

Exponential smoothing

17
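The selection step on this slide can be sketched as a loop that fits several candidate models and keeps the one with the smallest AICc. This is an illustration under assumptions, not the package's internal code: it uses the `Nile` series that ships with R instead of data from the talk, a hand-picked set of four non-seasonal models instead of the full candidate set, and it assumes the forecast package is installed.

```r
library(forecast)  # provides ets() and forecast(); assumed to be installed

y <- Nile  # example annual series from base R (an assumption, not from the talk)

# Hand-picked candidates (error, trend, seasonal); the real algorithm
# considers every model that is appropriate to the data.
candidates <- c("ANN", "AAN", "MNN", "MAN")

# Fit each candidate; parameters and initial states are estimated by ets().
fits <- lapply(candidates, function(m) ets(y, model = m, damped = FALSE))

# Collect the AICc of each fitted model and pick the smallest.
aicc <- sapply(fits, function(f) f$aicc)
names(aicc) <- candidates
best <- fits[[which.min(aicc)]]

print(aicc)
print(best$method)

# Point forecasts and prediction intervals from the selected model.
fcast <- forecast(best, h = 10)
```

Calling `ets(y)` without a `model` argument runs the package's own search, which is what the examples in this talk rely on.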

Exponential smoothing

fit <- ets(livestock)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from ETS(M,A,N). y-axis: millions of sheep; x-axis: Year, 1960–2010.]

Exponential smoothing

fit <- ets(h02)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from ETS(M,N,M). y-axis: Total scripts (millions); x-axis: Year, 1995–2010.]

Exponential smoothing

> fit
ETS(M,N,M)

  Smoothing parameters:
    alpha = 0.4597
    gamma = 1e-04

  Initial states:
    l = 0.4501
    s = 0.8628 0.8193 0.7648 0.7675 0.6946 1.2921
        1.3327 1.1833 1.1617 1.0899 1.0377 0.9937

  sigma:  0.0675

       AIC       AICc        BIC
-115.69960 -113.47738  -69.24592
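The three criteria in this printout are linked by simple formulas, so AICc and BIC can be recomputed from the AIC. The sketch below does that in base R. The parameter count `k = 14` and the series length `n_obs = 204` are assumptions inferred from the printout and the monthly h02 data; neither is stated on the slide.

```r
# Assumed inputs (inferred, not stated on the slide).
k     <- 14          # assumed number of estimated parameters
n_obs <- 204         # assumed number of observations in h02
aic   <- -115.69960  # AIC as printed

# AIC = -2 logL + 2k, so the log-likelihood can be backed out.
loglik <- (2 * k - aic) / 2

# Small-sample correction and the BIC penalty.
aicc <- aic + 2 * k * (k + 1) / (n_obs - k - 1)
bic  <- -2 * loglik + k * log(n_obs)

print(round(c(AIC = aic, AICc = aicc, BIC = bic), 5))
```

Under these assumptions the recomputed AICc and BIC agree with the printed values to the precision shown, which is a quick way to see how many parameters a fitted model is being charged for.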

M3 comparisons

Method          MAPE   sMAPE  MASE
Theta           17.42  12.76  1.39
ForecastPro     18.00  13.06  1.47
ForecastX       17.35  13.09  1.42
Automatic ANN   17.18  13.98  1.53
B-J automatic   19.13  13.72  1.54
ETS             17.38  13.13  1.43
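For readers unfamiliar with the three column headings, the following base R sketch computes MAPE, sMAPE and MASE for a single series. The definitions used are the common ones (MASE scaled by the in-sample naive forecast error); the function name `accuracy_measures` and the toy numbers are hypothetical, and the exact variants used in the M3 comparison may differ in detail.

```r
# Hypothetical helper: forecast accuracy measures for one series.
# actual: held-out observations; pred: forecasts for the same periods;
# train: in-sample data used to scale MASE; m: seasonal period (1 = none).
accuracy_measures <- function(actual, pred, train, m = 1) {
  e <- actual - pred
  # In-sample mean absolute error of the (seasonal) naive method.
  scale <- mean(abs(diff(train, lag = m)))
  c(
    MAPE  = mean(abs(100 * e / actual)),
    sMAPE = mean(200 * abs(e) / (abs(actual) + abs(pred))),
    MASE  = mean(abs(e)) / scale
  )
}

# Toy data (made up for illustration only).
train  <- c(100, 110, 105, 115, 120)
actual <- c(125, 130)
pred   <- c(120, 120)

res <- accuracy_measures(actual, pred, train)
print(round(res, 3))
```

A MASE below 1 means the forecasts beat the in-sample naive method on average; the table reports averages of such measures across the M3 series.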

Exponential smoothing

www.OTexts.org/fpp

3 ARIMA modelling

ARIMA models

[Diagram: inputs on the left feed a model that produces the output $y_t$.]

Autoregression (AR) model: inputs $y_{t-1}$, $y_{t-2}$, $y_{t-3}$ and $\varepsilon_t$; output $y_t$.

Autoregression moving average (ARMA) model: inputs $y_{t-1}$, $y_{t-2}$, $y_{t-3}$, $\varepsilon_t$, $\varepsilon_{t-1}$ and $\varepsilon_{t-2}$; output $y_t$.

ARIMA model: autoregression moving average (ARMA) model applied to differences.

Estimation

Compute likelihood $L$ from $\varepsilon_1, \varepsilon_2, \dots, \varepsilon_T$.

Use optimization algorithm to maximize $L$.
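The estimation step can be tried out in base R, where `arima()` with `method = "ML"` maximises the Gaussian likelihood numerically. The sketch below simulates an ARMA(1,1) series with known coefficients and re-estimates them; the coefficient values, sample size and seed are illustrative choices, not taken from the talk.

```r
set.seed(1)

# Simulate an ARMA(1,1) series with known (illustrative) coefficients.
y <- arima.sim(model = list(ar = 0.6, ma = 0.3), n = 500)

# Fit by maximum likelihood; a numerical optimiser searches over the parameters.
fit <- arima(y, order = c(1, 0, 1), method = "ML")

print(coef(fit))    # estimates of ar1, ma1 and the mean ("intercept")
print(fit$loglik)   # maximised log-likelihood

# The residuals are the estimated innovations epsilon_1, ..., epsilon_T.
eps <- residuals(fit)
```

With 500 observations the estimates should land close to the simulated values; changing `order` to `c(1, 1, 1)` would fit the same ARMA structure to the differenced series, which is the ARIMA case.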


Auto ARIMA

fit <- auto.arima(livestock)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from ARIMA(0,1,0) with drift. y-axis: millions of sheep; x-axis: Year, 1960–2010.]

Auto ARIMA

fit <- auto.arima(h02)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from ARIMA(3,1,3)(0,1,1)[12]. y-axis: Total scripts (millions); x-axis: Year, 1995–2010.]

Auto ARIMA

> fit
Series: h02
ARIMA(3,1,3)(0,1,1)[12]

Coefficients:
          ar1      ar2     ar3      ma1     ma2     ma3     sma1
      -0.3648  -0.0636  0.3568  -0.4850  0.0479  -0.353  -0.5931
s.e.   0.2198   0.3293  0.1268   0.2227  0.2755   0.212   0.0651

sigma^2 estimated as 0.002706:  log likelihood=290.25
AIC=-564.5   AICc=-563.71   BIC=-538.48

How does auto.arima() work?

A non-seasonal ARIMA process

$\phi(B)(1 - B)^d y_t = c + \theta(B)\varepsilon_t$

Need to select appropriate orders p, q, d, and whether to include c.

Hyndman & Khandakar (JSS, 2008) algorithm:

Select no. differences d via KPSS unit root test.

Select p, q, c by minimising AICc.

Use stepwise search to traverse model space, starting with a simple model and considering nearby variants.

Algorithm choices driven by forecast accuracy.
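The two ingredients of the non-seasonal algorithm, a unit root test for d and an AICc comparison for p and q, can be sketched as follows. Note the swap: this sketch uses an exhaustive grid over small orders rather than the stepwise search of the actual algorithm, and it does not search over the constant c. It assumes the forecast package is installed and uses the `WWWusage` series from base R, which is not data from the talk.

```r
library(forecast)  # ndiffs(), Arima(), auto.arima(); assumed to be installed

y <- WWWusage  # example non-seasonal series from base R (an assumption)

# Step 1: choose the number of differences with the KPSS test.
d <- ndiffs(y, test = "kpss")

# Step 2: compare ARIMA(p, d, q) models by AICc over a small grid.
# (A plain grid search, not the stepwise traversal used by auto.arima().)
best <- NULL
for (p in 0:3) {
  for (q in 0:3) {
    fit <- tryCatch(Arima(y, order = c(p, d, q)), error = function(e) NULL)
    if (!is.null(fit) && is.finite(fit$aicc) &&
        (is.null(best) || fit$aicc < best$aicc)) {
      best <- fit
    }
  }
}
print(best)

# For comparison: the package's own stepwise search with the same criterion.
auto_fit <- auto.arima(y, ic = "aicc", stepwise = TRUE)
print(auto_fit)
```

The grid fits 16 models here; the stepwise search usually fits fewer, which matters when thousands of series have to be processed.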

How does auto.arima() work?

A seasonal ARIMA process

$\Phi(B^m)\phi(B)(1 - B)^d (1 - B^m)^D y_t = c + \Theta(B^m)\theta(B)\varepsilon_t$

Need to select appropriate orders p, q, d, P, Q, D, and whether to include c.

Hyndman & Khandakar (JSS, 2008) algorithm:

Select no. differences d via KPSS unit root test.

Select D using OCSB unit root test.

Select p, q, P, Q, c by minimising AICc.

Use stepwise search to traverse model space, starting with a simple model and considering nearby variants.

M3 comparisons

Method          MAPE   sMAPE  MASE
Theta           17.42  12.76  1.39
ForecastPro     18.00  13.06  1.47
B-J automatic   19.13  13.72  1.54
ETS             17.38  13.13  1.43
AutoARIMA       19.12  13.85  1.47

4 Automatic nonlinear forecasting?

Automatic nonlinear forecasting

Automatic ANN in M3 competition did poorly.

Linear methods did best in the NN3 competition!

Very few machine learning methods get published in the IJF because authors cannot demonstrate their methods give better forecasts than linear benchmark methods, even on supposedly nonlinear data.

Some good recent work by Kourentzes and Crone on automated ANN for time series.

Watch this space!

5 Time series with complex seasonality

Examples

[Figure: US finished motor gasoline products. y-axis: Thousands of barrels per day; x-axis: Weeks, 1992–2004.]

[Figure: Number of calls to large American bank (7am−9pm). y-axis: Number of call arrivals; x-axis: 5 minute intervals, 3 March to 12 May.]

[Figure: Turkish electricity demand. y-axis: Electricity demand (GW); x-axis: Days, 2000–2008.]

TBATS model

TBATS

Trigonometric terms for seasonality

Box-Cox transformations for heterogeneity

ARMA errors for short-term dynamics

Trend (possibly damped)

Seasonal (including multiple and non-integer periods)

Automatic algorithm described in AM De Livera, RJ Hyndman, and RD Snyder (2011). “Forecasting time series with complex seasonal patterns using exponential smoothing”. In: Journal of the American Statistical Association 106.496, pp. 1513–1527. URL: http://pubs.amstat.org/doi/abs/10.1198/jasa.2011.tm09771.

TBATS model

$y_t$ = observation at time $t$

Box-Cox transformation:

$$y_t^{(\omega)} = \begin{cases} (y_t^{\omega} - 1)/\omega & \text{if } \omega \neq 0; \\ \log y_t & \text{if } \omega = 0. \end{cases}$$

M seasonal periods:

$$y_t^{(\omega)} = \ell_{t-1} + \phi b_{t-1} + \sum_{i=1}^{M} s_{t-m_i}^{(i)} + d_t$$

Global and local trend:

$$\ell_t = \ell_{t-1} + \phi b_{t-1} + \alpha d_t$$

$$b_t = (1 - \phi) b + \phi b_{t-1} + \beta d_t$$

ARMA error:

$$d_t = \sum_{i=1}^{p} \phi_i d_{t-i} + \sum_{j=1}^{q} \theta_j \varepsilon_{t-j} + \varepsilon_t$$

Fourier-like seasonal terms:

$$s_t^{(i)} = \sum_{j=1}^{k_i} s_{j,t}^{(i)}$$

$$s_{j,t}^{(i)} = s_{j,t-1}^{(i)} \cos \lambda_j^{(i)} + s_{j,t-1}^{*(i)} \sin \lambda_j^{(i)} + \gamma_1^{(i)} d_t$$

$$s_{j,t}^{*(i)} = -s_{j,t-1}^{(i)} \sin \lambda_j^{(i)} + s_{j,t-1}^{*(i)} \cos \lambda_j^{(i)} + \gamma_2^{(i)} d_t$$
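Two pieces of the TBATS model are easy to check numerically in base R: the Box-Cox transformation and the trigonometric seasonal recursion. The sketch below is illustrative only. The helper names are hypothetical, the error term $d_t$ is set to zero so that only the rotation remains, and the frequency is taken as $\lambda_j^{(i)} = 2\pi j / m_i$, the definition in the De Livera, Hyndman and Snyder paper, which is not shown on the slide.

```r
# Box-Cox transformation and its inverse (hypothetical helper names).
box_cox <- function(y, omega) {
  if (omega == 0) log(y) else (y^omega - 1) / omega
}
inv_box_cox <- function(z, omega) {
  if (omega == 0) exp(z) else (omega * z + 1)^(1 / omega)
}

y <- c(1, 10, 100)
z <- box_cox(y, 0.5)
print(z)
print(all.equal(inv_box_cox(z, 0.5), y))  # round trip recovers y

# One harmonic of the seasonal component with d_t = 0:
# (s, s*) is rotated by lambda at every time step.
m <- 7                     # seasonal period (illustrative)
j <- 1                     # harmonic index
lambda <- 2 * pi * j / m   # assumed definition of lambda_j

rot <- matrix(c( cos(lambda), sin(lambda),
                -sin(lambda), cos(lambda)), nrow = 2, byrow = TRUE)

state <- c(1, 0)           # initial (s, s*)
for (t in seq_len(m)) {
  state <- as.vector(rot %*% state)
}
print(round(state, 10))    # back at the starting point after m steps
```

Because the period enters only through `lambda`, nothing in the recursion requires `m` to be an integer, which is how the model accommodates periods such as 52.18 weeks or 365.25 days.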

Examples

fit <- tbats(gasoline)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from TBATS(0.999, {2,2}, 1, {<52.1785714285714,8>}). y-axis: Thousands of barrels per day; x-axis: Weeks, 1995–2005.]

fit <- tbats(callcentre)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from TBATS(1, {3,1}, 0.987, {<169,5>, <845,3>}). y-axis: Number of call arrivals; x-axis: 5 minute intervals, 3 March to 9 June.]

fit <- tbats(turk)
fcast <- forecast(fit)
plot(fcast)

[Figure: Forecasts from TBATS(0, {5,3}, 0.997, {<7,3>, <354.37,12>, <365.25,4>}). y-axis: Electricity demand (GW); x-axis: Days, 2000–2010.]

6 Hierarchical and grouped time series

Hierarchical time series

A hierarchical time series is a collection of several time series that are linked together in a hierarchical structure.

Total
  A: AA, AB, AC
  B: BA, BB, BC
  C: CA, CB, CC

Examples

Net labour turnover

Tourism by state and region

Hierarchical time series

Total
  A
  B
  C

$Y_t$: observed aggregate of all series at time $t$.

$Y_{X,t}$: observation on series $X$ at time $t$.

$\mathbf{b}_t$: vector of all series at bottom level in time $t$.

$$\mathbf{y}_t = [Y_t, Y_{A,t}, Y_{B,t}, Y_{C,t}]' = \underbrace{\begin{pmatrix} 1 & 1 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}}_{\mathbf{S}} \underbrace{\begin{pmatrix} Y_{A,t} \\ Y_{B,t} \\ Y_{C,t} \end{pmatrix}}_{\mathbf{b}_t}$$

$$\mathbf{y}_t = \mathbf{S}\mathbf{b}_t$$
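The relation between the full vector of series and the bottom-level series can be checked with a few lines of base R. The bottom-level values below are made up for illustration.

```r
# Summing matrix for the hierarchy Total -> A, B, C.
S <- rbind(c(1, 1, 1), diag(3))
rownames(S) <- c("Total", "A", "B", "C")
colnames(S) <- c("A", "B", "C")

# Bottom-level observations at one time point (illustrative values).
b_t <- c(A = 10, B = 20, C = 5)

# All series in the hierarchy: y_t = S b_t.
y_t <- as.vector(S %*% b_t)
names(y_t) <- rownames(S)
print(y_t)
```

The first row of `S` adds up every bottom-level series to give the total, and the identity block below it copies each bottom-level series through unchanged.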

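Hierarchical time series

Total
  A: AX, AY, AZ
  B: BX, BY, BZ
  C: CX, CY, CZ

$$\mathbf{y}_t = \begin{pmatrix} Y_t \\ Y_{A,t} \\ Y_{B,t} \\ Y_{C,t} \\ Y_{AX,t} \\ Y_{AY,t} \\ Y_{AZ,t} \\ Y_{BX,t} \\ Y_{BY,t} \\ Y_{BZ,t} \\ Y_{CX,t} \\ Y_{CY,t} \\ Y_{CZ,t} \end{pmatrix} = \underbrace{\begin{pmatrix} 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 1 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 \\ 1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 \end{pmatrix}}_{\mathbf{S}} \underbrace{\begin{pmatrix} Y_{AX,t} \\ Y_{AY,t} \\ Y_{AZ,t} \\ Y_{BX,t} \\ Y_{BY,t} \\ Y_{BZ,t} \\ Y_{CX,t} \\ Y_{CY,t} \\ Y_{CZ,t} \end{pmatrix}}_{\mathbf{b}_t}$$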
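For a balanced two-level hierarchy such as this one, the summing matrix has a regular block structure and need not be typed out by hand. The base R sketch below builds it with a Kronecker product; the variable names and bottom-level values are hypothetical.

```r
n_groups <- 3   # A, B, C
n_per    <- 3   # X, Y, Z within each group
n_bottom <- n_groups * n_per

S <- rbind(
  rep(1, n_bottom),                                             # Total
  kronecker(diag(n_groups), matrix(1, nrow = 1, ncol = n_per)), # A, B, C
  diag(n_bottom)                                                # bottom level
)

bottom <- c("AX", "AY", "AZ", "BX", "BY", "BZ", "CX", "CY", "CZ")
rownames(S) <- c("Total", "A", "B", "C", bottom)
colnames(S) <- bottom
print(dim(S))

# Bottom-level values at one time point (illustrative), aggregated upwards.
b_t <- setNames(1:9, bottom)
y_t <- as.vector(S %*% b_t)
names(y_t) <- rownames(S)
print(y_t[c("Total", "A", "B", "C")])
```

The same construction extends to more groups or more series per group by changing `n_groups` and `n_per`; unbalanced hierarchies need the middle block written out explicitly.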
