Lecture 41 Application of the entropy tensorization technique. 18.465



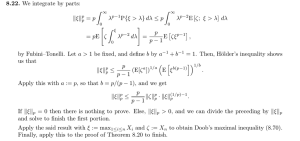

Recall the tensorization of entropy lemma we proved previously. Let $x_1, \cdots, x_n$ be independent random variables, $x_1', \cdots, x_n'$ be their independent copies, $Z = Z(x_1, \cdots, x_n)$, $Z^i = Z(x_1, \cdots, x_{i-1}, x_i', x_{i+1}, \cdots, x_n)$, and $\phi(x) = e^x - x - 1$. We have

$$\mathbb{E}\left[\lambda Z e^{\lambda Z}\right] - \mathbb{E}e^{\lambda Z} \log \mathbb{E}e^{\lambda Z} \le \mathbb{E}\left[e^{\lambda Z} \sum_{i=1}^n \phi\left(-\lambda(Z - Z^i)\right)\right].$$

We will use the tensorization of entropy technique to prove the following Hoeffding-type inequality. This theorem is Theorem 9 of

Pascal Massart. About the constants in Talagrand's concentration inequalities for empirical processes. The Annals of Probability, 2000, Vol 28, No. 2, 863-884.

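Theorem 41.1. Let $\mathcal{F}$ be a finite set of functions, $|\mathcal{F}| < \infty$. For any $f = (f_1, \cdots, f_n) \in \mathcal{F}$, let $a_i \le f_i \le b_i$, $L = \sup_f \sum_{i=1}^n (b_i - a_i)^2$, and $Z = \sup_f \sum_{i=1}^n f_i$. Then $\mathbb{P}\left(Z \ge \mathbb{E}Z + \sqrt{2Lt}\right) \le e^{-t}$.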

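Proof. Let

$$Z^i = \sup_{f \in \mathcal{F}} \Big(a_i + \sum_{j \ne i} f_j\Big), \qquad Z = \sup_{f \in \mathcal{F}} \sum_{i=1}^n f_i \stackrel{\text{def.}}{=} \sum_{i=1}^n f_i^\circ.$$

It follows that

$$0 \le Z - Z^i \le \sum_{j=1}^n f_j^\circ - \sum_{j \ne i} f_j^\circ - a_i = f_i^\circ - a_i \le b_i(f^\circ) - a_i(f^\circ).$$

Since $\frac{\phi(x)}{x^2} = \frac{e^x - x - 1}{x^2}$ is increasing on $\mathbb{R}$ and $\lim_{x \to 0} \frac{\phi(x)}{x^2} = \frac{1}{2}$, it follows that $\phi(x) \le \frac{1}{2}x^2$ for all $x < 0$, and for $\lambda \ge 0$

$$\begin{aligned}
\mathbb{E}\left[\lambda Z e^{\lambda Z}\right] - \mathbb{E}e^{\lambda Z} \log \mathbb{E}e^{\lambda Z}
&\le \mathbb{E}\Big[e^{\lambda Z} \sum_i \phi\left(-\lambda(Z - Z^i)\right)\Big] \\
&\le \frac{1}{2}\,\mathbb{E}\Big[e^{\lambda Z} \sum_i \lambda^2 (Z - Z^i)^2\Big] \\
&\le \frac{1}{2} L \lambda^2\, \mathbb{E}e^{\lambda Z}.
\end{aligned}$$

Center $Z$, and we get

$$\mathbb{E}\left[\lambda(Z - \mathbb{E}Z)\, e^{\lambda(Z - \mathbb{E}Z)}\right] - \mathbb{E}e^{\lambda(Z - \mathbb{E}Z)} \log \mathbb{E}e^{\lambda(Z - \mathbb{E}Z)} \le \frac{1}{2} L \lambda^2\, \mathbb{E}e^{\lambda(Z - \mathbb{E}Z)}.$$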


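Let $F(\lambda) = \mathbb{E}e^{\lambda(Z - \mathbb{E}Z)}$. It follows that $F'_\lambda(\lambda) = \mathbb{E}\left[e^{\lambda(Z - \mathbb{E}Z)}(Z - \mathbb{E}Z)\right]$ and

$$\begin{aligned}
\lambda F'_\lambda(\lambda) - F(\lambda) \log F(\lambda) &\le \frac{1}{2} L \lambda^2 F(\lambda) \\
\frac{1}{\lambda} \frac{F'_\lambda(\lambda)}{F(\lambda)} - \frac{1}{\lambda^2} \log F(\lambda) &\le \frac{1}{2} L \\
\Big(\frac{1}{\lambda} \log F(\lambda)\Big)'_\lambda &\le \frac{1}{2} L \\
\frac{1}{\lambda} \log F(\lambda) = \lim_{t \to 0^+} \frac{1}{t} \log F(t) + \int_0^\lambda \Big(\frac{1}{t} \log F(t)\Big)'_t \, dt &\le \frac{1}{2} L \lambda \\
F(\lambda) &\le \exp\Big(\frac{1}{2} L \lambda^2\Big).
\end{aligned}$$

The limit above is zero because $\frac{1}{t} \log F(t) \to F'(0)/F(0) = \mathbb{E}(Z - \mathbb{E}Z) = 0$ as $t \to 0^+$.

By Chebyshev's inequality,

$$\mathbb{P}(Z \ge \mathbb{E}Z + t) \le e^{-\lambda t}\, \mathbb{E}e^{\lambda(Z - \mathbb{E}Z)} \le e^{-\lambda t} e^{\frac{1}{2} L \lambda^2},$$

and minimizing over $\lambda$ (the minimum is attained at $\lambda = t/L$), we get

$$\mathbb{P}(Z \ge \mathbb{E}Z + t) \le e^{-t^2/(2L)}.$$

Replacing $t$ by $\sqrt{2Lt}$ gives the statement of the theorem.

□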
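The two analytic facts used in the proof above can be sanity-checked numerically. The following is a minimal sketch and not part of the original notes; the helper names `phi` and `chernoff_exponent`, the grids, and the values of `t` and `L` are illustrative assumptions. It checks that $\phi(x) \le x^2/2$ on a grid of non-positive $x$, and that the minimum over $\lambda$ of $-\lambda t + L\lambda^2/2$ agrees with $-t^2/(2L)$.

```python
import math

def phi(x):
    """phi(x) = e^x - x - 1, as defined in the notes."""
    return math.expm1(x) - x

def chernoff_exponent(lam, t, L):
    """Exponent -lam*t + L*lam^2/2 of the bound e^{-lam t} e^{L lam^2 / 2}."""
    return -lam * t + 0.5 * L * lam * lam

# Check phi(x) <= x^2 / 2 on a grid of x in [-10, 0] (illustrative grid).
xs = [-10.0 * k / 1000 for k in range(1001)]
phi_bound_holds = all(phi(x) <= 0.5 * x * x + 1e-12 for x in xs)

# Minimize the exponent over a grid of lambda in [0, 2] and compare with -t^2/(2L).
t, L = 1.5, 4.0  # hypothetical values, chosen only for illustration
lams = [k / 10000 for k in range(20001)]
grid_min = min(chernoff_exponent(lam, t, L) for lam in lams)
closed_form = -t * t / (2 * L)

print(phi_bound_holds)
print(abs(grid_min - closed_form) < 1e-6)
```

A grid search is used instead of a symbolic minimization so the check depends only on the standard library.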

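If $\mathcal{F}$ consists of a single function and the $f_i$ above are Rademacher random variables, Hoeffding's inequality gives $\mathbb{P}\left(Z \ge \mathbb{E}Z + \sqrt{Lt/2}\right) \le e^{-t}$. Comparing the deviation terms $\sqrt{Lt/2}$ and $\sqrt{2Lt}$, Theorem 41.1 recovers Hoeffding's inequality up to a factor of 2 in the deviation, while also covering the supremum over a finite class.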
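For a single function with Rademacher coordinates, $Z$ is the sum itself and $\mathbb{E}Z = 0$, so the tail can be computed exactly and compared with $e^{-t}$ at both deviation levels $\sqrt{Lt/2}$ and $\sqrt{2Lt}$. The following is a minimal sketch under that single-function assumption; the helper `rademacher_tail` and the values of `n` and `t` are illustrative and not from the notes.

```python
import math

def rademacher_tail(n, s):
    """Exact P(eps_1 + ... + eps_n >= s) for i.i.d. Rademacher signs."""
    # The sum equals 2k - n when exactly k of the n signs are +1.
    count = sum(math.comb(n, k) for k in range(n + 1) if 2 * k - n >= s)
    return count / 2 ** n

n = 50      # hypothetical sample size
L = 4 * n   # sum of (b_i - a_i)^2 with a_i = -1, b_i = 1
rows = []
for t in [0.5, 1.0, 2.0, 3.0]:
    s_hoeffding = math.sqrt(L * t / 2)  # deviation level from Hoeffding's inequality
    s_theorem = math.sqrt(2 * L * t)    # deviation level from Theorem 41.1
    rows.append((t,
                 rademacher_tail(n, s_hoeffding),
                 rademacher_tail(n, s_theorem),
                 math.exp(-t)))

for t, p_h, p_t, bound in rows:
    print(f"t={t}: tail at sqrt(Lt/2) = {p_h:.4g}, "
          f"tail at sqrt(2Lt) = {p_t:.4g}, e^-t = {bound:.4g}")
```

Both exact tails stay below $e^{-t}$ for these values, and the tail at the larger level $\sqrt{2Lt}$ is the smaller of the two.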

The following Bennett-type concentration inequality is Theorem 10 of

Pascal Massart. About the constants in Talagrand's concentration inequalities for empirical processes. The Annals of Probability, 2000, Vol 28, No. 2, 863-884.

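Theorem 41.2. Let $\mathcal{F}$ be a finite set of functions, $|\mathcal{F}| < \infty$. For all $f = (f_1, \cdots, f_n) \in \mathcal{F}$, let $0 \le f_i \le 1$, $Z = \sup_f \sum_{i=1}^n f_i$, and define $h$ as $h(u) = (1 + u)\log(1 + u) - u$ where $u \ge 0$. Then $\mathbb{P}(Z \ge \mathbb{E}Z + x) \le e^{-\mathbb{E}Z \cdot h(x/\mathbb{E}Z)}$.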

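Proof. Let

$$Z = \sup_{f \in \mathcal{F}} \sum_{i=1}^n f_i \stackrel{\text{def.}}{=} \sum_{i=1}^n f_i^\circ, \qquad Z^i = \sup_{f \in \mathcal{F}} \sum_{j \ne i} f_j.$$

It follows that $0 \le Z - Z^i \le f_i^\circ \le 1$. Since $\phi(x) = e^x - x - 1$ is a convex function of $x$ with $\phi(0) = 0$,

$$\phi\left(-\lambda(Z - Z^i)\right) = \phi\left(-\lambda \cdot (Z - Z^i) + 0 \cdot (1 - (Z - Z^i))\right) \le (Z - Z^i)\,\phi(-\lambda)$$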


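and

$$\begin{aligned}
\mathbb{E}\left[\lambda Z e^{\lambda Z}\right] - \mathbb{E}e^{\lambda Z} \log \mathbb{E}e^{\lambda Z}
&\le \mathbb{E}\Big[e^{\lambda Z} \sum_{i=1}^n \phi\left(-\lambda(Z - Z^i)\right)\Big] \\
&\le \mathbb{E}\Big[e^{\lambda Z} \phi(-\lambda) \sum_i \left(Z - Z^i\right)\Big] \\
&\le \phi(-\lambda)\, \mathbb{E}\Big[e^{\lambda Z} \cdot \sum_i f_i^\circ\Big] \\
&= \phi(-\lambda)\, \mathbb{E}\left[Z \cdot e^{\lambda Z}\right].
\end{aligned}$$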

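Set $\tilde{Z} = Z - \mathbb{E}Z$ (i.e., center $Z$), and we get

$$\mathbb{E}\left[\lambda \tilde{Z} e^{\lambda \tilde{Z}}\right] - \mathbb{E}e^{\lambda \tilde{Z}} \log \mathbb{E}e^{\lambda \tilde{Z}} \le \phi(-\lambda)\, \mathbb{E}\left[Z \cdot e^{\lambda \tilde{Z}}\right] = \phi(-\lambda)\, \mathbb{E}\left[\left(\tilde{Z} + \mathbb{E}Z\right) \cdot e^{\lambda \tilde{Z}}\right]$$

$$(\lambda - \phi(-\lambda))\, \mathbb{E}\left[\tilde{Z} e^{\lambda \tilde{Z}}\right] - \mathbb{E}e^{\lambda \tilde{Z}} \log \mathbb{E}e^{\lambda \tilde{Z}} \le \phi(-\lambda) \cdot \mathbb{E}Z \cdot \mathbb{E}e^{\lambda \tilde{Z}}.$$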

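Let $v = \mathbb{E}Z$, $F(\lambda) = \mathbb{E}e^{\lambda \tilde{Z}}$, $\Psi = \log F$, and we get

$$(\lambda - \phi(-\lambda)) \frac{F'_\lambda(\lambda)}{F(\lambda)} - \log F(\lambda) \le v\phi(-\lambda)$$

$$(\lambda - \phi(-\lambda)) \left(\log F(\lambda)\right)'_\lambda - \log F(\lambda) \le v\phi(-\lambda). \tag{41.1}$$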

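Solving the differential equation

$$(\lambda - \phi(-\lambda))\, \Psi_0'(\lambda) - \Psi_0(\lambda) = v\phi(-\lambda), \tag{41.2}$$

yields $\Psi_0 = v \cdot \phi(\lambda)$. We will proceed to show that $\Psi$ satisfying (41.1) has the property $\Psi \le \Psi_0$. Subtract (41.2) from (41.1), let $\Psi_1 = \Psi - \Psi_0$, and note that $\lambda - \phi(-\lambda) = 1 - e^{-\lambda}$:

$$(1 - e^{-\lambda})\, \Psi_1' - \Psi_1 \le 0.$$

Since $(e^\lambda - 1)(1 - e^{-\lambda}) \frac{1}{e^\lambda - 1} = 1 - e^{-\lambda}$ and $(e^\lambda - 1)(1 - e^{-\lambda}) \frac{e^\lambda}{(e^\lambda - 1)^2} = 1$, this can be rewritten as

$$(e^\lambda - 1)(1 - e^{-\lambda}) \underbrace{\left(\frac{1}{e^\lambda - 1} \Psi_1' - \frac{e^\lambda}{(e^\lambda - 1)^2} \Psi_1\right)}_{\left(\frac{\Psi_1}{e^\lambda - 1}\right)'_\lambda} \le 0.$$

For $\lambda > 0$ the prefactor is positive, so $\Psi_1(\lambda)/(e^\lambda - 1)$ is non-increasing and

$$\frac{\Psi_1(\lambda)}{e^\lambda - 1} \le \lim_{\lambda \to 0} \frac{\Psi_1(\lambda)}{e^\lambda - 1} = 0.$$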
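As a numerical sanity check of equation (41.2), the sketch below plugs $\Psi_0 = v\phi(\lambda)$ and its derivative $v(e^\lambda - 1)$ into the left-hand side and compares with $v\phi(-\lambda)$ on a grid. It also checks the identity $\lambda - \phi(-\lambda) = 1 - e^{-\lambda}$. The function names, the value of `v`, and the grid are illustrative assumptions, not part of the notes.

```python
import math

def phi(x):
    """phi(x) = e^x - x - 1."""
    return math.expm1(x) - x

def psi0(lam, v):
    """Candidate solution Psi_0(lam) = v * phi(lam)."""
    return v * phi(lam)

def psi0_prime(lam, v):
    """Derivative of v * (e^lam - lam - 1) with respect to lam."""
    return v * math.expm1(lam)

def residual(lam, v):
    """Left-hand side minus right-hand side of (41.2) evaluated at Psi_0."""
    coeff = lam - phi(-lam)  # should equal 1 - e^{-lam}
    return coeff * psi0_prime(lam, v) - psi0(lam, v) - v * phi(-lam)

v = 2.5  # hypothetical value of EZ
lams = [0.01 * k for k in range(1, 501)]  # lambda in (0, 5]
max_residual = max(abs(residual(lam, v)) for lam in lams)
coeff_gap = max(abs((lam - phi(-lam)) - (1 - math.exp(-lam))) for lam in lams)

print(max_residual < 1e-9, coeff_gap < 1e-12)
```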

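It follows that $\Psi \le v\phi(\lambda)$, and $F = \mathbb{E}e^{\lambda \tilde{Z}} \le e^{v\phi(\lambda)}$. By Chebyshev's inequality, $\mathbb{P}(Z \ge \mathbb{E}Z + t) \le e^{-\lambda t + v\phi(\lambda)}$. Minimizing over all $\lambda > 0$ (the minimum is attained at $\lambda = \log(1 + t/v)$), we get $\mathbb{P}(Z \ge \mathbb{E}Z + t) \le e^{-v \cdot h(t/v)}$ where $h(x) = (1 + x) \cdot \log(1 + x) - x$.

□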
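The last minimization step can be checked numerically. The sketch below, which is not part of the original notes, evaluates the exponent $-\lambda t + v\phi(\lambda)$ at the stationary point $\lambda = \log(1 + t/v)$ and compares it with $-v \cdot h(t/v)$ and with a grid search over $\lambda$. The names `exponent`, `lam_star`, and the values of `v` and `t` are illustrative assumptions.

```python
import math

def phi(x):
    """phi(x) = e^x - x - 1."""
    return math.expm1(x) - x

def h(u):
    """h(u) = (1 + u) log(1 + u) - u, as in Theorem 41.2."""
    return (1 + u) * math.log1p(u) - u

def exponent(lam, t, v):
    """Exponent of the Chernoff bound e^{-lam t + v phi(lam)}."""
    return -lam * t + v * phi(lam)

v, t = 3.0, 2.0  # hypothetical values of EZ and of the deviation t

# Setting the derivative -t + v (e^lam - 1) to zero gives lam = log(1 + t/v).
lam_star = math.log1p(t / v)
value_at_star = exponent(lam_star, t, v)
closed_form = -v * h(t / v)

# Grid search over lambda in (0, 3] as an independent check of the minimum.
grid_min = min(exponent(k / 1000, t, v) for k in range(1, 3001))

print(abs(value_at_star - closed_form) < 1e-12)
print(0 <= grid_min - value_at_star < 1e-5)
```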

The following sub-additive increments bound can be found as Theorem 2.5 in

Olivier Bousquet. Concentration Inequalities and Empirical Processes Theory Applied to the Analysis of Learning Algorithms. PhD thesis, Ecole Polytechnique, 2002.


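Theorem 41.3. Let $Z = \sup_{f \in \mathcal{F}} \sum_{i=1}^n f_i$, $\mathbb{E}f_i = 0$, $\sup_{f \in \mathcal{F}} \mathrm{var}(f) \stackrel{\text{def.}}{=} \sup_{f \in \mathcal{F}} \sum_{i=1}^n f_i^2 = \sigma^2$, and $f_i \le u \le 1$ for all $i \in \{1, \cdots, n\}$. Then $\mathbb{P}\left(Z \ge \mathbb{E}Z + \sqrt{\left((1 + u)\mathbb{E}Z + n\sigma^2\right)x} + \frac{x}{3}\right) \le e^{-x}$.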

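Proof. Let

$$\begin{aligned}
Z &= \sup_{f \in \mathcal{F}} \sum_{i=1}^n f_i \stackrel{\text{def.}}{=} \sum_{i=1}^n f_i^\circ \\
Z_k &= \sup_{f \in \mathcal{F}} \sum_{i \ne k} f_i \\
Z_k' &= f_k \ \text{ such that } \ Z_k = \sup_{f \in \mathcal{F}} \sum_{i \ne k} f_i,
\end{aligned}$$

that is, $Z_k'$ is the $k$-th coordinate of the function attaining the supremum that defines $Z_k$.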

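It follows that $Z_k' \le Z - Z_k \le u$. Let $\psi(x) = e^{-x} + x - 1$. Then

$$\begin{aligned}
e^{\lambda Z} \psi(\lambda(Z - Z_k))
&= e^{\lambda Z_k} - e^{\lambda Z} + \lambda(Z - Z_k) e^{\lambda Z} \\
&= f(\lambda)(Z - Z_k) e^{\lambda Z} + (\lambda - f(\lambda))(Z - Z_k) e^{\lambda Z} + e^{\lambda Z_k} - e^{\lambda Z} \\
&= f(\lambda)(Z - Z_k) e^{\lambda Z} + g(Z - Z_k)\, e^{\lambda Z_k}.
\end{aligned}$$

In the above, $g(x) = 1 - e^{\lambda x} + (\lambda - f(\lambda))\, x e^{\lambda x}$, and we define $f(\lambda) = \left(1 - e^\lambda + \lambda e^\lambda\right) / \left(e^\lambda + \alpha - 1\right)$ where $\alpha = 1/(1 + u)$. We will need the following lemma to make use of the bound on the variance.

Lemma 41.4. For all $x \le 1$, $\lambda \ge 0$ and $\alpha \ge \frac{1}{2}$, $g(x) \le f(\lambda)\left(\alpha x^2 - x\right)$.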
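The lemma is stated here without proof, so a numerical spot check may be useful. The sketch below evaluates the gap $f(\lambda)(\alpha x^2 - x) - g(x)$ on a finite grid. It is only a check on the chosen grid, not a proof, and the grid ranges (with $\alpha$ restricted to $[1/2, 1]$, i.e. $u \in [0, 1]$) and the helper name `lemma_gap` are illustrative assumptions.

```python
import math

def f(lam, alpha):
    """f(lam) = (1 - e^lam + lam e^lam) / (e^lam + alpha - 1)."""
    return (1 - math.exp(lam) + lam * math.exp(lam)) / (math.exp(lam) + alpha - 1)

def g(x, lam, alpha):
    """g(x) = 1 - e^{lam x} + (lam - f(lam)) x e^{lam x}."""
    return 1 - math.exp(lam * x) + (lam - f(lam, alpha)) * x * math.exp(lam * x)

def lemma_gap(x, lam, alpha):
    """Right-hand side minus left-hand side of Lemma 41.4; expected to be >= 0."""
    return f(lam, alpha) * (alpha * x * x - x) - g(x, lam, alpha)

alphas = [0.5, 0.6, 0.75, 0.9, 1.0]     # alpha = 1/(1+u) for u in [0, 1]
lams = [k / 20 for k in range(101)]     # lambda in [0, 5]
xs = [k / 20 for k in range(-100, 21)]  # x in [-5, 1]

worst_gap = min(lemma_gap(x, lam, a) for a in alphas for lam in lams for x in xs)

# The inequality is tight at x = 1, so allow for floating-point rounding.
print(worst_gap > -1e-9)
```

At $x = 1$ the two sides coincide, since $g(1) - f(\lambda)(\alpha - 1) = (1 - e^\lambda + \lambda e^\lambda) - f(\lambda)(e^\lambda + \alpha - 1) = 0$, which is why a small tolerance is used.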

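Continuing the proof, we have

$$\begin{aligned}
e^{\lambda Z} \psi(\lambda(Z - Z_k))
&= f(\lambda)(Z - Z_k) e^{\lambda Z} + g(Z - Z_k)\, e^{\lambda Z_k} \\
&\le f(\lambda)(Z - Z_k) e^{\lambda Z} + e^{\lambda Z_k} f(\lambda)\left(\alpha (Z - Z_k)^2 - (Z - Z_k)\right) \\
&\le f(\lambda)(Z - Z_k) e^{\lambda Z} + e^{\lambda Z_k} f(\lambda)\left(\alpha (Z_k')^2 - Z_k'\right).
\end{aligned}$$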

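Summing over all $k = 1, \cdots, n$, using $\sum_k (Z - Z_k) \le \sum_k f_k^\circ = Z$, and taking expectations, we get

$$e^{\lambda Z} \sum_k \psi(\lambda(Z - Z_k)) \le f(\lambda) Z e^{\lambda Z} + f(\lambda) \sum_k e^{\lambda Z_k}\left(\alpha (Z_k')^2 - Z_k'\right)$$

$$\mathbb{E}\, e^{\lambda Z} \sum_k \psi(\lambda(Z - Z_k)) \le f(\lambda)\, \mathbb{E} Z e^{\lambda Z} + f(\lambda) \sum_k \mathbb{E}\, e^{\lambda Z_k}\left(\alpha (Z_k')^2 - Z_k'\right).$$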


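Since $\mathbb{E}Z_k' = 0$ and $\mathbb{E}_{X_k}(Z_k')^2 = \mathbb{E}f_k^2 = \mathrm{var}(f_k) \le \sup_{f \in \mathcal{F}} \mathrm{var}(f) \le \sigma^2$, it follows that

$$\begin{aligned}
\mathbb{E}\, e^{\lambda Z_k}\left(\alpha (Z_k')^2 - Z_k'\right)
&= \mathbb{E}\, e^{\lambda Z_k}\left(\alpha\, \mathbb{E}_{f_k}(Z_k')^2 - \mathbb{E}_{f_k} Z_k'\right) \\
&\le \alpha \sigma^2\, \mathbb{E}\, e^{\lambda Z_k} \\
&\le \alpha \sigma^2\, \mathbb{E}\, e^{\lambda Z_k + \lambda \mathbb{E} Z_k'} \\
&\le \alpha \sigma^2\, \mathbb{E}\, e^{\lambda Z_k + \lambda Z_k'} \qquad \text{(Jensen's inequality)} \\
&\le \alpha \sigma^2\, \mathbb{E}\, e^{\lambda Z}.
\end{aligned}$$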

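Thus

$$\begin{aligned}
\mathbb{E}\left[\lambda Z e^{\lambda Z}\right] - \mathbb{E}e^{\lambda Z} \log \mathbb{E}e^{\lambda Z}
&\le \mathbb{E}\, e^{\lambda Z} \sum_k \psi(\lambda(Z - Z_k)) \\
&\le f(\lambda)\, \mathbb{E} Z e^{\lambda Z} + f(\lambda)\, \alpha n \sigma^2\, \mathbb{E}e^{\lambda Z}.
\end{aligned}$$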

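Let $Z_0 = Z - \mathbb{E}Z$ (i.e., center $Z$), and we get

$$\mathbb{E}\left[\lambda Z_0 e^{\lambda Z_0}\right] - \mathbb{E}e^{\lambda Z_0} \log \mathbb{E}e^{\lambda Z_0} \le f(\lambda)\, \mathbb{E} Z_0 e^{\lambda Z_0} + f(\lambda)\left(\alpha n \sigma^2 + \mathbb{E}Z\right) \mathbb{E}e^{\lambda Z_0}.$$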

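Let $F(\lambda) = \mathbb{E}e^{\lambda Z_0}$ and $\Psi(\lambda) = \log F(\lambda)$. We get

$$(\lambda - f(\lambda))\, F'(\lambda) - F(\lambda) \log F(\lambda) \le f(\lambda)\left(\alpha n \sigma^2 + \mathbb{E}Z\right) F(\lambda)$$

$$(\lambda - f(\lambda)) \underbrace{\frac{F'(\lambda)}{F(\lambda)}}_{\Psi'(\lambda)} - \underbrace{\log F(\lambda)}_{\Psi(\lambda)} \le f(\lambda)\left(\alpha n \sigma^2 + \mathbb{E}Z\right).$$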

Solving this inequality, we get $F(\lambda) \le e^{v\psi(-\lambda)}$ where $v = n\sigma^2 + (1 + u)\mathbb{E}Z$.

□
