AN ABSTRACT OF THE THESIS OF

Leslie M. McDonald for the degree of Master of Science in Mathematics presented on

March 11, 2015.

Title: Probabilities of Voting Paradoxes with Three Candidates

Abstract approved:

Mina E. Ossiander

Paradoxes in voting have been of interest to voting theorists since the late 1700s, when Condorcet

demonstrated the key example of a voting paradox: voters with individually transitive

rankings produce an election outcome which is not transitive. With Arrow’s Impossibility

Theorem, the hope of finding a fair voting method which accurately reflected society’s

preferences seemed unworkable. Recent results, however, have shown that paradoxes are

unlikely under certain assumptions. In this paper, we corroborate results found by Gehrlein for the probabilities of paradoxes, but also give results which indicate paradoxes are

extremely likely under the right conditions. We use simulations to show there can be many

situations where paradoxes can arise, dependent upon the variability of voters’ preferences,

which echo Saari’s statements on the topic.

© Copyright

by Leslie M. McDonald

March 11, 2015

All Rights Reserved

Probabilities of Voting Paradoxes with Three Candidates

by

Leslie M. McDonald

A THESIS

submitted to

Oregon State University

in partial fulfillment of

the requirements for the

degree of

Master of Science

Presented March 11, 2015

Commencement June 2015

Master of Science thesis of Leslie M. McDonald presented on March 11, 2015.

APPROVED:

Major Professor, representing Mathematics

Chair of the Department of Mathematics

Dean of the Graduate School

I understand that my thesis will become part of the permanent collection of Oregon State

University libraries. My signature below authorizes release of my thesis to any reader

upon request.

Leslie M. McDonald, Author

ACKNOWLEDGEMENTS

Academic

I would like to thank my friends and professors at the University of South Alabama for

urging me to pursue graduate mathematics and thesis work. Many thanks also go to my

advisor Mina Ossiander for her excellent suggestions and her patience. Last, but not least,

thanks to all my mathematical friends at Oregon State University for helping me out along

the way and generally being superb mathematicians.

Personal

I thank my parents for creating opportunities for me. My Mother has ever been my

biggest supporter. I would not be where I am without her encouragement and guidance,

and my Father’s natural diligence in his endeavors inspires me to continue. Thanks to the

rest of the world for thinking I must be a genius to be doing mathematics.

TABLE OF CONTENTS

1 Introduction

1.1 History

1.2 Basic Definitions and Setting

1.3 Recent Results and Methods

2 Notation and Methods

2.1 Key Definitions

2.2 Central Limit Theorem Approximations

2.3 Simulation methods

2.4 Simple Examples

2.4.1 The probability that A wins by the pairwise method

2.4.2 Condorcet’s Example

2.4.3 Single-peaked profiles

3 Results

3.1 Main Analyses

3.1.1 Plurality Winner is the Pairwise Loser

3.1.2 Plurality Winner is Borda Loser

3.2 Summary of Results

4 Conclusion

4.1 Further Work

5 Appendix

Bibliography

LIST OF FIGURES

Figure

Page

1

6

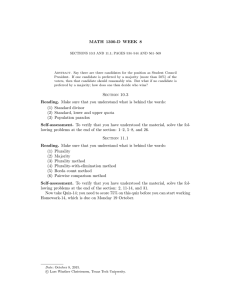

2.1

Limiting behavior in simulation when pk =

for all k . . . . . . . . . . . .

23

2.2

Probability of A being the pairwise winner under conditions p1 + p2 + p4 = 12

and p1 + p2 + p3 = 12 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

24

1

6

3.1

Limiting behavior in simulation when pk =

for all k . . . . . . . . . . . .

31

3.2

Limiting behavior in simulation when p1 = p2 = 0.20, p3 = p4 = 0.05,

p5 = 0.35, and p6 = 0.15 . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

32

Limiting behavior in simulation when p1 = p2 = 0.167, p3 = p4 = 0.05,

p5 = 0.284, and p6 = 0.282 . . . . . . . . . . . . . . . . . . . . . . . . . . . .

33

1

Limiting behavior in simulation when p1 = p2 = 16 , p3 = p4 = 20

, and

17

p5 = p6 = 60 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

35

Limiting behavior in simulation when p1 = p2 = 0.2, p3 = p4 = p5 = 0.1,

and p6 = 0.3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

38

Limiting behavior in simulation when p1 = p2 = 0.2, p3 = p4 = 0, and

p5 = p6 = 0.3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

39

Limiting behavior in simulation when p1 = p2 = 0.2, p3 = p4 = 0.08, and

p5 = p6 = 0.22 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

40

Limiting behavior in simulation when p1 = p2 = 0.21, p3 = p4 = 0.07, and

p5 = p6 = 0.22 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

40

Limiting behavior in simulation when p1 = p2 = 0.21, p3 = p4 = 0.078, and

p5 = p6 = 0.212 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

41

3.3

3.4

3.5

3.6

3.7

3.8

3.9

1

6

for all k . . . . . . . . . . . .

45

3.11 Limiting behavior in simulation when p1 = 0.2, p2 = 0.15, p3 = 0.11, p4 =

0.09, p5 = 0.24, and p6 = 0.21. . . . . . . . . . . . . . . . . . . . . . . . . .

46

3.12 Limiting behavior in simulation when p1 = 0.28, p2 = 0.07, p3 = 0.11,

p4 = 0.09, p5 = 0.24, and p6 = 0.21. . . . . . . . . . . . . . . . . . . . . . . .

47

3.13 Limiting behavior in simulation when p1 = 0.29, p2 = 0.06, p3 = 0.11,

p4 = 0.09, p5 = 0.24, and p6 = 0.21. . . . . . . . . . . . . . . . . . . . . . . .

50

3.10 Limiting behavior in simulation when pk =

LIST OF FIGURES (Continued)

Figure

Page

1

3.14 Limiting behavior in simulation when p1 = p3 = 12

, p2 = p5 = 14 , and

p4 = p6 = 16 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3.15 Limiting behavior in simulation when p1 = p3 = p4 = p6 =

5

36

51

and p2 = p5 = 29 . 52

3.16 Limiting behavior in simulation when p1 = p2 = 0.2, p3 = p4 = 0.05, and

p5 = p6 = 0.25, candidate A is the Plurality winner and Borda loser. . . .

53

3.17 Limiting behavior in simulation when p1 = p2 = 0.2, p3 = p4 = 0.05, and

p5 = p6 = 0.25, candidate A is the Plurality winner and Pairwise loser. . .

54

LIST OF TABLES

1.1 Borda’s example

1.2 Calculating the pairwise tallies from Borda’s example

1.3 Summary of results from Gehrlein et al.

2.1 Condorcet’s example

5.1 Plurality winner is the Pairwise loser, limiting probabilities for different constraint categories (table continued over three pages)

5.2 Plurality winner is the Borda loser, limiting probabilities for different constraint categories (table continued over five pages)

Chapter 1

Introduction

1.1 History

The analysis of how a group of individuals’ preferences are combined to create a collective

decision is called social choice theory. Social choice theory is predominantly comprised of

welfare economics and voting theory. The main question of social choice theorists in studying voting is whether there exists a voting method which accurately reflects the preferences

of the voters. Mathematical analysis of this question began during the French Revolution,

most notably with the writings of Jean-Charles de Borda, Marie Jean Antoine Nicolas

Caritat (Marquis de Condorcet), and Pierre-Simon Marquis de Laplace. Condorcet introduced the method of pairwise voting and a criterion for choosing the winning candidate:

The candidate who wins against any other candidate head to head should be the societal

choice. He also discovered a troublesome effect that can occur with pairwise voting, that

candidates can be ranked cyclically. For example, with three candidates {A, B, C}, there

are situations where the pairwise voting tallies produce a ranking that puts A ≻ B,

then B ≻ C, but also C ≻ A. Another voting method was suggested by Borda. Voters

rank the candidates, and points are assigned to each candidate based on

how each voter ranks them; these points are then totalled for each candidate. So for our three

candidates {A, B, C}, if a voter has preference B ≻ A ≻ C, then B will get 1 point, A will

get half a point, and C will get no points. Both Condorcet and Laplace, among others,

felt that the Borda rule showed promise as a fair voting method. Indeed, Borda’s method

of voting was used by the Academy of Sciences in France from 1784 until 1800. It was

discontinued at the behest of new member Napoleon Bonaparte [3].

Other mathematicians such as E. J. Nanson, Francis Galton, and the well-known fiction

writer Rev. C. L. Dodgson, proposed other methods and mathematical analyses of voting

theory. However, the first successful axiomatization of voting theory came from Kenneth

Arrow. The well-known Arrow’s Theorem (see Section 1.2 Theorem 1.1) gives the surprising

result that any voting method which satisfies his axioms must be a dictatorship. That is to say, there is no fair voting method which satisfies all of Arrow’s axioms [1].


Alternatives to Arrow’s axioms have been proposed, most of which are restrictions on the voter profiles (a profile is the collection of all the voters’ preferences). Duncan Black showed that if the voter profile satisfies a condition known as single-peakedness, then all of Arrow’s axioms are satisfied by simple majority rule [1]. Amartya Sen gives a similar statement about value-restricted profiles [24]. Most recently, D. G. Saari proposed relaxing the condition of Independence of Irrelevant Alternatives to Intensity of Binary Independence. He shows that the Borda rule then satisfies these new axioms and is the only rule which does so. Saari also showed that the Borda rule satisfies Arrow’s original axioms when a preference profile has a certain subset of voters removed [23].

In addition to Arrow’s disturbing result, we also have paradoxes in voting. Borda illustrated a voting situation where the pairwise rule ranking C ≻ B ≻ A is totally reversed by the plurality rule ranking A ≻ B ≻ C (see Table 1.1). Condorcet gave an example

where no positional voting method (such as the Borda rule and the plurality rule) elects

the pairwise method winner [3, 25]. Innumerable other examples can be created, as Saari

shows in [21]. Studying voting paradoxes has been of interest since the beginnings of

voting theory, but powerful mathematical tools were not used on the problem until the

late 1970s. Researchers such as Richard Niemi, Peter Fishburn, and William Gehrlein applied

probability, combinatorics, and computer algorithms. Their main results give probabilities

for finding paradoxes, often with conditioning on the measure of single-peakedness and

other similar parameters [20, 7, 14]. Work in this vein continues to the present and is

used extensively in this paper. In addition, we consider the vector space approach developed

by Saari which illuminates underlying structure and completely explains paradoxes between

voting methods [23].

Typically, Condorcet’s pairwise criterion was used as a measuring stick against other

voting methods to show how well they represented the voters’ preferences. Arguments made

by Saari and others point out flaws with this pairwise voting method and present evidence

for the Borda rule as the best measure of preferences [21]. Saari identifies subspaces of the

space of profiles (the space of all possible ways a set of voters can have voting preferences)

which are responsible for all discrepancies between voting rules. By using this vector space

approach, an intuitive understanding of these paradoxes is brought to light. He also gives

a number of theorems on the wild variability that can arise in profiles, from which the Borda

rule is mostly exempt, further supporting the Borda rule [23, 21].

Recent results show that paradoxes are unlikely to show up when the number of candidates is small and the number of voters is large. However, as the number of candidates, or alternative choices, gets bigger, with ten being considered big, discrepancies among different voting methods become more of an issue. Thus, it would seem that large elections like

that of the US president are probably exempt from these specific paradoxes due to the large

number of voters (but of course are susceptible to other types of manipulation). In many

instances where a choice must be made amongst several alternatives by a small number of

individuals, such as a university choosing a new faculty member or the judging of a sports

competition, we should be wary of paradoxes. In these situations we see many complicated

and varied voting methods which we should view with suspicion, and which, some would

argue, should be replaced with the Borda rule. Our results indicate that paradoxes can

occur with high probability with only three candidates if certain conditions occur.

1.2 Basic Definitions and Setting

Individuals in a group faced with making a choice amongst a set of alternatives are called

voters, and those alternatives are called the candidates (other social choice situations can

be analogously defined). A voter’s transitive ranking of the candidates is called a voter

preference, and the set of all the voters’ preferences is called the voter profile or also the

voting situation. The voting method is a map from the voter profile to a voting tally or

voting count. The voting method specifies how to assign points to each candidate based on a

voter’s preference; the tally is then found by summing each candidate’s points, expressed

as a vector (|A|, |B|, |C|). From the voting tally we get the ranking (more generally called

the societal outcome) by simply listing the candidates from largest to smallest number of

votes obtained.

Table 1.1: Borda’s example

| Voter preference | Number of voters | Voter preference | Number of voters |
|---|---|---|---|
| ABC | 0 | CAB | 0 |
| ACB | 5 | BCA | 4 |
| BAC | 0 | CBA | 3 |

For example, take Borda’s own example with three candidates shown in Table 1.1. The Borda method (also called the Borda count) assigns one point to a candidate every time they are ranked first and 1/2 point every time they are ranked second. Using the Borda count, the voting tally is (|A|, |B|, |C|) = (5, 5.5, 7.5), which gives the ranking C ≻ B ≻ A. The Plurality method assigns one point to a candidate every time they are ranked first. Using this method the voting tally is then (|A|, |B|, |C|) = (5, 4, 3), which gives the ranking A ≻ B ≻ C, the total reverse of the Borda ranking! Thus, one is led to the question: which voting method truly reflects the voters’ preferences?

The Borda count and the plurality count are each a kind of positional voting method.

For three candidates, any positional procedure can be expressed as a vector $w_\lambda = (1, \lambda, 0)$, which specifies that for each voter’s preference, the first ranked candidate gets one point, the second ranked candidate gets 0 ≤ λ ≤ 1 points, and the lowest ranked candidate gets zero points. Thus, the Borda count is represented by the vector $w_{1/2}$ and the plurality count is represented by $w_0$. Negative plurality count is represented by $w_1$, and is equivalent

to assigning one point to the lowest ranked candidate, then ranking candidates based on

the smallest number of points.
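The positional tallies above can be reproduced with a few lines of code. The following is a minimal sketch, not part of the original analysis: the names `PREFERENCES`, `positional_tally`, and `ranking` are illustrative, and the profile is stored as counts in the order ABC, ACB, BAC, CAB, BCA, CBA.

```python
# Illustrative sketch: positional tallies under w_lam = (1, lam, 0).
# Assumed ordering of the six strict preferences (first choice listed first).
PREFERENCES = ["ABC", "ACB", "BAC", "CAB", "BCA", "CBA"]


def positional_tally(profile, lam):
    """Return the tally (|A|, |B|, |C|) for a profile of six counts."""
    weights = (1.0, lam, 0.0)
    tally = {"A": 0.0, "B": 0.0, "C": 0.0}
    for pref, count in zip(PREFERENCES, profile):
        for position, candidate in enumerate(pref):
            tally[candidate] += weights[position] * count
    return (tally["A"], tally["B"], tally["C"])


def ranking(tally):
    """List candidates from largest to smallest tally (ties alphabetical)."""
    return "".join(sorted("ABC", key=lambda c: (-tally["ABC".index(c)], c)))


borda_example = (0, 5, 0, 0, 4, 3)  # counts taken from Table 1.1

borda = positional_tally(borda_example, 0.5)      # Borda count, lam = 1/2
plurality = positional_tally(borda_example, 0.0)  # plurality, lam = 0
print(borda, ranking(borda))
print(plurality, ranking(plurality))
```

Changing `lam` to 1 gives the negative plurality tally for the same profile.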

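Table 1.2: Calculating the pairwise tallies from Borda’s example

| Preference | No. of voters | Preference | No. of voters | Preference | No. of voters |
|---|---|---|---|---|---|
| A ≻ B | 5 | A ≻ C | 5 | B ≻ C | 4 |
| B ≻ A | 7 | C ≻ A | 7 | C ≻ B | 8 |
| ⇒ B ≻ A | | ⇒ C ≻ A | | ⇒ C ≻ B | |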

The other voting method which features prominently in voting theory is the pairwise

method. The winner of the pairwise method is the candidate who wins every head-to-head contest against each other candidate. The pairwise loser is the candidate who loses every such contest. The rest of the ranking is then found from the remaining two-candidate plurality elections. For example, the pairwise ranking from Borda’s example is found by comparing the six plurality tallies from the three 2-candidate subsets (see Table 1.2). We see that the pairwise winner is C and the pairwise loser is A. The rankings C ≻ B and B ≻ A tell us that B is middle

ranked. Clearly, the pairwise method is not a positional method, but it is the standard in

the field for measuring whether a voting method is satisfactory, often dubbed the Condorcet

criterion.
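The pairwise method can be sketched in the same way. This is an illustrative implementation under the same assumed preference ordering (ABC, ACB, BAC, CAB, BCA, CBA); the function names are hypothetical and chosen only for this example.

```python
from itertools import combinations

# Assumed ordering of the six strict preferences (first choice listed first).
PREFERENCES = ["ABC", "ACB", "BAC", "CAB", "BCA", "CBA"]


def pairwise_tallies(profile):
    """Map each pair (X, Y) to (voters with X above Y, voters with Y above X)."""
    total = sum(profile)
    result = {}
    for x, y in combinations("ABC", 2):
        x_over_y = sum(count for pref, count in zip(PREFERENCES, profile)
                       if pref.index(x) < pref.index(y))
        result[(x, y)] = (x_over_y, total - x_over_y)
    return result


def pairwise_winner_loser(profile):
    """Return (winner, loser); an entry is None when no candidate qualifies."""
    wins = {c: 0 for c in "ABC"}
    losses = {c: 0 for c in "ABC"}
    for (x, y), (votes_x, votes_y) in pairwise_tallies(profile).items():
        if votes_x > votes_y:
            wins[x] += 1
            losses[y] += 1
        elif votes_y > votes_x:
            wins[y] += 1
            losses[x] += 1
    winner = next((c for c in "ABC" if wins[c] == 2), None)
    loser = next((c for c in "ABC" if losses[c] == 2), None)
    return winner, loser


print(pairwise_tallies((0, 5, 0, 0, 4, 3)))       # tallies of Table 1.2
print(pairwise_winner_loser((0, 5, 0, 0, 4, 3)))  # Borda's example
print(pairwise_winner_loser((1, 0, 0, 1, 1, 0)))  # one voter each: ABC, CAB, BCA
```

The last call uses a three-voter cyclic profile, for which neither a pairwise winner nor a pairwise loser exists.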

The following famous theorem combines common sense restrictions on voters’ preferences and conditions that should define a voting method which accurately reflects voters’

preferences as a whole. The theorem, however, gives a rather unsatisfactory conclusion. It

basically says that no fair voting method satisfies all of the conditions.

Theorem 1.1. Arrow’s Impossibility Theorem [1, 21]

If the voting method used in an election with 3 or more candidates satisfies the following


conditions, then it must be a dictatorship. That is, one voter’s preference decides the

societal outcome.

1. Each voter’s rankings of the candidates form a complete, strict, transitive ranking.

2. There are no restrictions on how the voters can rank the candidates.

3. If all voters share the same ranking of a pair of candidates, then that should be the

societal outcome.

4. The societal ranking of a particular pair of candidates depends only on how the voters

rank that pair and not how they rank the other candidates.

Condition 1 is called individual rationality and means that a voter does not have a

cyclic preference ordering such as A ≻ B ≻ C ≻ A. Condition 2 reflects freedom of choice,

desirable in any democratic society. Condition 3 is called Pareto and is equivalent to saying

that if we have a new election and a certain individual ranks a candidate higher, with all

other voters voting the same way as in the old election, the new social outcome should not

then have that candidate ranked lower; the societal outcome should either not change or

should also rank that candidate higher. Condition 4 is called the Independence of Irrelevant

Alternatives. This condition reflects the belief that the pairwise voting method is the

preferred method, that is, if a method satisfies this condition, it will give the same ranking

as the pairwise method. The theorem gives the irritating result that no fair voting method

satisfies all of these conditions because there is some voter whose preference determines

the ranking. If that one voter changes their preference, then the whole ranking is changed

to match it.

Subsequent to this result, many believed the endeavor of finding a fair voting method

was useless. Take the much quoted W. H. Riker: “the choice of a positional voting method

is subjective”. And this quote from D. G. Saari [23]:

A naïve, false belief is that the choice of an election procedure does not matter

because the outcome is essentially the same no matter which method is used,

no matter what candidates are added or dropped. While this belief identifies

a tacit but major goal, it is so stringent that it cannot be satisfied even with

an unanimity profile. (The three candidate unanimity plurality outcome differs

from the BC outcome which differs from the outcome where each voter votes


for two candidates.) Indeed, there is no reason to expect these conditions ever

to be satisfied.

At the very least, only a winner could be reliably found, but not a full ranking. The findings of Gehrlein et al. [8, 4, 7], though, seem to indicate that paradoxes are hard to

come by in large elections, and when conditioned upon measures of group coherence, are

even rarer. Duncan Black showed that, for three candidates, if the voters’ preferences are

single-peaked, then the majority rule satisfies Theorem 1.1’s four conditions [3]. A profile

is single-peaked if there exists some candidate who is never ranked last. This means that

all candidates are evaluated along some common scale, for example in modern politics

there is the scale of conservatism vs. liberalism [3]. This implies that the ranking will be

transitive, that is, it will not be cyclic (see Fact 2.3). Other conditions of group coherence

such as single-troughed and perfectly polarizing also ensure that cyclic pairwise rankings

do not occur. A profile is single-troughed when some candidate is never ranked first. A

profile is perfectly polarizing if there is some candidate who is never ranked second place.

These conditions guarantee that there is a pairwise winner and pairwise loser. However,

paradoxes can still occur where the Borda winner (loser) is not the pairwise winner (loser), even though the Borda count must rank the pairwise winner above the pairwise loser [19] (see Fact 1.6).

Such discrepancies between the positional methods and the pairwise method are due

to a specific subspace of the space of all voter profiles. D. G. Saari constructs the profile

decomposition based on which parts of the profile space are affected by different voting

methods. He shows that by removing a certain subspace of the profile, Borda count will

satisfy all the conditions of Arrow’s Theorem. D. G. Saari also showed [21] that the Borda

count satisfies the following modified version of Arrow’s theorem:

Theorem 1.2. Arrow’s Modified Theorem

If the Independence of Irrelevant Alternatives condition in Arrow’s Theorem is modified to

the Intensity of Binary Independence condition,

• The societal ranking for a pair only depends on how voters rank that pair AND the

intensity of that ranking, i.e. how many rankings are between the pair.

then the Borda Count is the only positional voting method which satisfies the conditions.

Here, the condition of Independence of Irrelevant Alternatives is strengthened to require

that voting methods consider the information lost by using the pairwise voting method.


In using the pairwise method, voter rationality is lost because several voting profiles can

give the same pairwise tallies. Consider the following profile where 2 voters have the irrational preference A ≻ B ≻ C ≻ A and one voter has the irrational preference A ≻ C ≻ B ≻ A (incidentally, these are the only cyclic preferences for three candidates). By looking at the 2-candidate subsets, we arrive at the following pairwise preference profile

$$
\begin{aligned}
|A \succ B| &= 2, & |B \succ C| &= 2, & |C \succ A| &= 2,\\
|A \succ C| &= 1, & |B \succ A| &= 1, & |C \succ B| &= 1,
\end{aligned}
$$

from which we can recombine the pairwise orderings to obtain the rational profile

$$
|A \succ B \succ C| = 1, \quad |B \succ C \succ A| = 1, \quad |C \succ A \succ B| = 1.
$$

This profile, of course, gives a positional method tally of a complete tie while the pairwise ranking is the cyclic A ≻ B ≻ C ≻ A.

1.3 Recent Results and Methods

From the literature, we consider two major avenues of analysis. The first is the calculation of probabilities of finding paradoxes, conditioned upon certain profile restrictions

or assumptions. William Gehrlein has written extensively in this area [11, 10, 9]. The

second is the characterization of the structure of voting profiles with respect to the voting

methods, spearheaded by Donald Saari [23]. It is the aim of this exposition to corroborate

Gehrlein’s numerical findings and use simulations to explore frequencies of paradoxes for

finite numbers of voters. We will see a connection between Saari’s profile decomposition

and the probabilities of finding paradoxes.

We start by defining the assumptions on the voter profiles. We assume that voters have

strictly transitive preferences on the candidates {A, B, C}. Thus, the set of preferences is

{A ≻ B ≻ C, A ≻ C ≻ B, B ≻ A ≻ C, C ≻ A ≻ B, B ≻ C ≻ A, C ≻ B ≻ A}.

The number of voters is n and the distribution of these n voters amongst the 6 preferences

can be given in a number of ways. The model we use here is the multinomial distribution

where $n_k$ is the number of voters with the kth preference and the probability that a voter has the kth preference is $p_k$. A voting profile is obtained by making n sequential random assignments of the possible preference rankings to voters according to the probabilities $p_k$.

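The probability of a profile $\vec{p} = (n_1, n_2, n_3, n_4, n_5, n_6)$ is then

$$
P\left(\begin{array}{l}
|A \succ B \succ C| = n_1,\ |A \succ C \succ B| = n_2,\ |B \succ A \succ C| = n_3,\\
|C \succ A \succ B| = n_4,\ |B \succ C \succ A| = n_5,\ |C \succ B \succ A| = n_6
\end{array}\right)
= \binom{n}{n_1\, n_2 \cdots n_6} \prod_{k=1}^{6} p_k^{n_k}.
$$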

When $p_k = 1/6$ for all k, the above distribution is called Impartial Culture (IC). This

model represents complete independence between the voters’ preferences [4, 14].

Another model for the voter profiles is that of Impartial Anonymous Culture (IAC)

where each voting profile has equal probability of being observed. Thus, $P(\vec{p}) = 1/\binom{n+5}{5}$ for any profile $\vec{p}$. In this model, there is a degree of dependence between the voters’

preferences. It is termed anonymous because unlike the IC assumption, if we were to

change the preferences of some voters, the probability of the profile would be the same.

The multinomial counts the different ways n non-anonymous voters can be arranged, which

is precisely why the multinomial coefficient shows up in the distribution function.
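To make the difference between the two models concrete, the sketch below draws a random profile under each. It is only an illustration using the Python standard library, not the simulation code used in this thesis; the function names are hypothetical. The multinomial draw assigns each voter a preference independently, while the IAC draw picks one of the $\binom{n+5}{5}$ profiles uniformly by a stars-and-bars construction.

```python
import random
from collections import Counter


def sample_multinomial_profile(n, probs, rng):
    """Draw (n1, ..., n6): each of n voters independently picks preference k
    with probability probs[k]. With probs = [1/6] * 6 this is the IC model."""
    draws = rng.choices(range(6), weights=probs, k=n)
    counts = Counter(draws)
    return tuple(counts.get(k, 0) for k in range(6))


def sample_iac_profile(n, rng):
    """Draw (n1, ..., n6) uniformly from all profiles with n voters (IAC).

    Stars and bars: choose 5 bar positions among n + 5 slots; the gaps
    between consecutive bars are the six counts."""
    bars = sorted(rng.sample(range(n + 5), 5))
    edges = [-1] + bars + [n + 5]
    return tuple(edges[i + 1] - edges[i] - 1 for i in range(6))


rng = random.Random(2015)  # fixed seed so the run is repeatable
print(sample_multinomial_profile(101, [1 / 6] * 6, rng))
print(sample_iac_profile(101, rng))
```

Under IC the counts concentrate near n/6 each as n grows, whereas IAC gives lopsided profiles the same weight as balanced ones.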

For both assumptions about voting preferences, as n → ∞, all candidates will tie in

head to head comparisons. Thus more voter profiles will have a small degree of group

cohesiveness, and thus conditioning upon IC or IAC will overestimate any paradoxical

behavior [4]. However, Gehrlein shows that the probability of a profile being single-peaked reaches a finite value, 15/16, as n → ∞ for odd n (for even n, there is a similar limiting value) [11].

Gehrlein also uses the voter model of IAC*, where all profiles which are consistent with

single-peaked preferences are equally likely. There are n(n + 1)(n + 5)/2 of these profiles.

The ratio of IAC* to IAC profiles is (60n)/((n + 4)(n + 3)(n + 2)), so the proportion of

profiles which are single-peaked goes to zero as n → ∞ [11].
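The two counting formulas quoted above can be checked by brute force for small n. The sketch below is an illustrative check, not taken from [11]; it enumerates every profile $(n_1, \dots, n_6)$ and treats a profile as single-peaked when some candidate is never ranked last, using the assumed ordering ABC, ACB, BAC, CAB, BCA, CBA.

```python
from math import comb


def profiles(n, parts=6):
    """Yield every tuple of `parts` non-negative integers summing to n."""
    if parts == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in profiles(n - first, parts - 1):
            yield (first,) + rest


def is_single_peaked(profile):
    """True when some candidate is never ranked last."""
    n1, n2, n3, n4, n5, n6 = profile
    # A is last in BCA and CBA; B is last in ACB and CAB; C is last in ABC and BAC.
    return min(n5 + n6, n2 + n4, n1 + n3) == 0


def count_single_peaked(n):
    return sum(1 for p in profiles(n) if is_single_peaked(p))


for n in range(1, 8):
    total = comb(n + 5, 5)  # number of IAC profiles
    single_peaked = count_single_peaked(n)
    # Count of single-peaked profiles: n(n + 1)(n + 5) / 2.
    assert single_peaked == n * (n + 1) * (n + 5) // 2
    # Ratio to all profiles: 60n / ((n + 4)(n + 3)(n + 2)), cross-multiplied.
    assert single_peaked * (n + 4) * (n + 3) * (n + 2) == 60 * n * total
    print(n, single_peaked, total)
```

The enumeration grows quickly with n, so this is a sanity check of the formulas rather than a way to compute them.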

Preferences which are single-peaked can be said to be unidimensional [20]. For three

candidates, this is equivalent to saying that there is some candidate who is never ranked last.

This creates a continuum in one dimension on which all voters rank the three candidates.

Unidimensional rankings therefore prevent cyclic pairwise outcomes and paradoxes [21, 3,

1]. Measuring how close a profile is to being single-peaked, single-troughed, or polarizing

correlates with the probability of not getting certain paradoxes. Gehrlein defines these three

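measures of group coherence, b, t, and m, respectively single-peaked, single-troughed, and polarizing:

$$
\begin{aligned}
b &= \min\{\, |B \succ C \succ A| + |C \succ B \succ A|,\ |A \succ C \succ B| + |C \succ A \succ B|,\ |A \succ B \succ C| + |B \succ A \succ C| \,\},\\
t &= \min\{\, |A \succ B \succ C| + |A \succ C \succ B|,\ |B \succ A \succ C| + |B \succ C \succ A|,\ |C \succ B \succ A| + |C \succ A \succ B| \,\},\\
m &= \min\{\, |B \succ A \succ C| + |C \succ A \succ B|,\ |A \succ B \succ C| + |C \succ B \succ A|,\ |A \succ C \succ B| + |B \succ C \succ A| \,\}.
\end{aligned}
$$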

We say that a candidate who is never ranked last is the positively unifying candidate and

the candidate who is never ranked first is the negatively unifying candidate. The candidate

who is never ranked in the middle is the polarizing candidate since this candidate is either

first or last in everyone’s preferences [13].
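As a small worked illustration, the three measures can be computed directly from the six counts. The function name `coherence_measures` and the ordering of the counts (ABC, ACB, BAC, CAB, BCA, CBA) are assumptions made for this sketch.

```python
def coherence_measures(profile):
    """Return (b, t, m) for a profile given as counts of
    ABC, ACB, BAC, CAB, BCA, CBA (in that order)."""
    n1, n2, n3, n4, n5, n6 = profile
    # b: fewest last-place rankings received by any candidate (A, B, C).
    b = min(n5 + n6, n2 + n4, n1 + n3)
    # t: fewest first-place rankings received by any candidate (A, B, C).
    t = min(n1 + n2, n3 + n5, n6 + n4)
    # m: fewest middle rankings received by any candidate (A, B, C).
    m = min(n3 + n4, n1 + n6, n2 + n5)
    return b, t, m


print(coherence_measures((0, 5, 0, 0, 4, 3)))  # Borda's example, Table 1.1
print(coherence_measures((1, 0, 0, 1, 1, 0)))  # cyclic three-voter profile
```

For Borda’s example, b = 0 because C is never ranked last and m = 0 because A is never ranked in the middle, so C is positively unifying and A is polarizing in that profile.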

In a series of papers, W. V. Gehrlein and others provide closed form representations

and limiting representations for various probabilities involving voting paradoxes. He uses

a brute-force algorithm to calculate conditional probabilities for so-called Borda paradoxes

conditioned upon b, t, or m, and IC, IAC, or IAC*. The general conclusion is that the

probabilities are small as n increases (less than 5% in most cases), and that probabilities

become smaller as b, t, or m tend toward zero. A summary of some of these results is given

in Table 1.3 at the end of this section.

The following bizarre theorem from Saari [21] guarantees the existence of paradoxical

profiles but gives no indication of their frequency.

Theorem 1.3. Ranking and dropping candidates

Let N represent the number of candidates and suppose there are at least 3 candidates.

• Rank the candidates in any way and select a positional election method to tally the

ballots.

• There are N ways to drop one candidate. For each of these, rank the remaining

(N − 1) candidates in any way and select a positional election method to tally the

ballots.

• Continue for each subset of 3 or more candidates.


• For each pair of candidates assign a ranking and use the plurality method to tally the

ballots.

For almost all choices of positional voting methods, there exists a profile with the above

choices for rankings and methods at each stage. The only method which does not guarantee

this is the Borda count.

Saari’s profile decomposition breaks the space of all profiles into orthogonal subspaces

in the sense that the rankings of certain voting methods are affected by one subspace and

not another. In Section 2.1 we explain the decomposition in more detail. The following

theorem gives a general sense of how these subspaces behave.

Theorem 1.4. Profile decomposition [22]

All profiles can be expressed as a linear combination of vectors from the following subspaces:

• All voting methods yield a complete tie for profiles from the Kernel.

• The Basic subspace gives identical rankings for all positional methods and the pairwise

method.

• Every positional method gives a zero tie on the Condorcet subspace, while the pairwise

method gives the cyclic ranking A ≻ B ≻ C ≻ A.

• On Reversal profiles, different positional methods give different rankings but the pairwise method and Borda count give a zero tie.

The Condorcet portion of a profile is completely responsible for all discrepancies between positional methods and the pairwise method. The Reversal portion of a profile is

responsible for all differences between different positional methods. Necessary and sufficient conditions for agreement between the various methods are given in [22] and are found

by simple algebraic manipulations. Saari also gives illuminating geometric interpretations

of these subspaces and their actions. To summarize, we give the following theorem.

Theorem 1.5. Ranking possibilities for three candidates [22]

Choose any ranking of the three candidates and any ranking for the pairs. If the Borda

ranking is not equal to any of the positional rankings, then there is a profile where the

pairwise rankings and the positional rankings are as described. Only the Borda ranking

must be related to the pairwise rankings.

The last part of this theorem is referring to the fact that the Borda count must rank

the pairwise winner above the pairwise loser.

Fact 1.6. Borda count always ranks the pairwise winner above the pairwise loser.

Proof. Suppose without loss of generality that the pairwise ranking is A ≻ B ≻ C. We must then have that

\[
\begin{aligned}
|ABC| + |ACB| + |CAB| &> |BAC| + |BCA| + |CBA| && \text{(that is, } A \succ B\text{)},\\
|ABC| + |BAC| + |BCA| &> |ACB| + |CAB| + |CBA| && \text{(that is, } B \succ C\text{)},\\
|ABC| + |ACB| + |BAC| &> |CAB| + |BCA| + |CBA| && \text{(that is, } A \succ C\text{)}.
\end{aligned}
\]

On the other hand, the Borda tallies are

\[
\begin{aligned}
\text{A's tally} &= |ABC| + |ACB| + \tfrac12\big(|BAC| + |CAB|\big),\\
\text{B's tally} &= |BAC| + |BCA| + \tfrac12\big(|ABC| + |CBA|\big),\\
\text{C's tally} &= |CAB| + |CBA| + \tfrac12\big(|ACB| + |BCA|\big).
\end{aligned}
\]

Recall that

\[
n_1 = |ABC|,\quad n_2 = |ACB|,\quad n_3 = |BAC|,\quad n_4 = |CAB|,\quad n_5 = |BCA|,\quad n_6 = |CBA|.
\]

If we sum the inequalities which come from the pairwise conditions for A ≻ B and A ≻ C, and sum the inequalities which arise from the conditions B ≻ C and A ≻ C, we get

\[
\begin{aligned}
2(n_1 + n_2) + n_3 + n_4 &> 2(n_5 + n_6) + n_3 + n_4 &&\Rightarrow\quad n_1 + n_2 > n_5 + n_6,\\
2(n_1 + n_3) + n_2 + n_5 &> 2(n_4 + n_6) + n_2 + n_5 &&\Rightarrow\quad n_1 + n_3 > n_4 + n_6.
\end{aligned}
\]

Now add the resulting inequalities and then add $n_2 + n_4$ to both sides,

\[
2n_1 + n_2 + n_3 > n_4 + n_5 + 2n_6 \quad\Rightarrow\quad 2n_1 + 2n_2 + n_3 + n_4 > 2n_4 + 2n_6 + n_2 + n_5,
\]

which, after dividing by 2, is equivalent to saying that the Borda count ranks A over C.
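The inequality just derived can also be checked by brute force on small profiles. The following Python sketch is not part of the thesis code (which is written in MATLAB); the function names and the vote cap `max_votes` are illustrative assumptions. It enumerates every profile with at most `max_votes` voters per preference and counts those where the pairwise ranking is A ≻ B ≻ C but the Borda tally fails to place A strictly above C.

```python
from itertools import product

# Profile order used in the text: (n1..n6) = (ABC, ACB, BAC, CAB, BCA, CBA).

def pairwise_margins(n):
    """Return the margins (A over B, B over C, A over C); positive means the first wins."""
    n1, n2, n3, n4, n5, n6 = n
    a_b = (n1 + n2 + n4) - (n3 + n5 + n6)
    b_c = (n1 + n3 + n5) - (n2 + n4 + n6)
    a_c = (n1 + n2 + n3) - (n4 + n5 + n6)
    return a_b, b_c, a_c

def borda_doubled(n):
    """Return twice the Borda tallies of A, B, C so that all values stay integers."""
    n1, n2, n3, n4, n5, n6 = n
    return (2 * (n1 + n2) + n3 + n4,
            2 * (n3 + n5) + n1 + n6,
            2 * (n4 + n6) + n2 + n5)

def count_violations(max_votes=3):
    """Count profiles with pairwise ranking A > B > C where Borda does not rank A above C."""
    violations = 0
    for n in product(range(max_votes + 1), repeat=6):
        a_b, b_c, a_c = pairwise_margins(n)
        if a_b > 0 and b_c > 0 and a_c > 0:
            borda_a, _, borda_c = borda_doubled(n)
            if borda_a <= borda_c:
                violations += 1
    return violations

print(count_violations())  # prints 0, in line with Fact 1.6
```

An exhaustive check of this kind is only a sanity test on a finite range; the algebraic argument above is what covers all profiles.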

Table 1.3: Summary of results from Gehrlein et al.

| Voter distribution | Event | Result/Range | Method used | Reference |
|---|---|---|---|---|
| IC | Pairwise winner exists | Prb = 0.91226 | multivariate normal | [15] |
| IC | Borda winner = Plurality winner | Prb = 0.758338 | multivariate normal | [15] |
| IC | Pairwise loser = Plurality winner | Prb = 0.033843 | multivariate normal | [15] |
| IC | Borda winner = Pairwise winner | Prb = 0.822119 | multivariate normal | [15] |
| IC | Plurality winner = Pairwise winner | Prb = 0.690763 | multivariate normal | [15] |
| IC | Pairwise creates a cyclic outcome, given $b \in [\tfrac{n}{3}, 0]$ | Prb: 0.25 to 0 | computer | [20] |
| IC | λ-rule winner = Pairwise loser, given one exists, n → ∞ | λ: 0 to 1/2 to 1; Prb: 0.0371 to 0 to 0.0371 | multivariate normal | [4] |
| IAC | Pairwise winner exists, odd n | Prb = $\frac{15(n+3)^2}{16(n+2)(n+4)}$ | computer | [12] |
| IAC | λ-rule winner = Pairwise loser, n → ∞ | Prb = $\frac{(2\lambda-1)^3(12-9\lambda-2\lambda^2)}{405(\lambda-1)^3}$ | polyhedra volume | [4] |
| IAC | λ-rule winner = Pairwise winner, given one exists, n → ∞ | λ: 0, 1, 1/2; Prb: 119/135, 68/108, 123/135 | EUPIA2 | [11] |
| IAC | all λ-rule winners = Pairwise winner, n → ∞, given one exists | Prb = 3437/6480 ≈ 0.53040 | EUPIA2 | [11] |
| IAC* | λ-rule winner = Pairwise winner, given one exists, n → ∞ | λ: 0, 1, 1/2; Prb: 31/36, 3/4, 11/12 | EUPIA2 | [11] |
| IAC* | all λ-rule winners = Pairwise winner, n → ∞, given one exists | Prb = 11/18 | EUPIA2 | [11] |

Chapter 2

Notation and Methods

In the subsequent analyses, the set of candidates is {A, B, C}. We assume the distribution

of voter profiles follows a multinomial distribution as explained in Section 1.3 and that

voters’ preferences are strictly transitive. The set of preferences is S = {A ≻ B ≻ C, A ≻ C ≻ B, B ≻ A ≻ C, C ≻ A ≻ B, B ≻ C ≻ A, C ≻ B ≻ A}, which is the sample space. The set of voters’ preferences is denoted as $\{v^i\}_{i=1}^n$, where $v^i \in S$. We will shorten a preference in S by leaving off the ≻’s; for example, we will write ACB in place of A ≻ C ≻ B. The following describes the various voting methods in terms of sums

of binomial random variables and calculates expectation, variance, and covariance for the

general case. By presenting the basic components of our probabilistic events in this way,

it is easily seen how one can use the Central Limit Theorem (see Theorem 2.1) to obtain

limiting probabilities which serve as approximations of the likelihoods of voting paradoxes.

2.1 Key Definitions

A voting sum will be denoted as $S_n(E) = \sum_{i=1}^{n} 1_E(v^i)$, where $E \subset S$. The characteristic function $1_E(v^i)$ takes its argument from the sample space and is equal to 1 when $v^i \in E$ and 0 when $v^i \notin E$. Based on our assumptions about the voting profiles, $S_n(E)$ is a binomial random variable with mean $nP(E)$ and variance $nP(E)(1 - P(E))$. All of the different positional voting method outcomes and the pairwise method can be defined in terms of $S_n(E)$. For example, the plurality sums are

\[
P_A = \sum_{i=1}^{n} 1_{\{ABC,ACB\}}(v^i), \qquad
P_B = \sum_{i=1}^{n} 1_{\{BAC,BCA\}}(v^i), \qquad
P_C = \sum_{i=1}^{n} 1_{\{CAB,CBA\}}(v^i).
\]

Then, the plurality tally is the vector (PA , PB , PC ). The ranking is given by listing the

candidates in order of largest to smallest of the PK , for K ∈ {A, B, C}.

We also define the negative plurality sums and the middle sums, NK and MK , respectively.

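\[
N_A = \sum_{i=1}^{n} 1_{\{BCA,CBA\}}(v^i), \qquad
N_B = \sum_{i=1}^{n} 1_{\{ACB,CAB\}}(v^i), \qquad
N_C = \sum_{i=1}^{n} 1_{\{ABC,BAC\}}(v^i)
\]

and

\[
M_A = \sum_{i=1}^{n} 1_{\{BAC,CAB\}}(v^i), \qquad
M_B = \sum_{i=1}^{n} 1_{\{ABC,CBA\}}(v^i), \qquad
M_C = \sum_{i=1}^{n} 1_{\{ACB,BCA\}}(v^i).
\]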

The Borda method sum, $B_K$, is then a combination of the plurality and middle sums, with $\lambda = \tfrac12$: $B_K = P_K + \tfrac12 M_K$.

Recall, pk is the probability that a voter has preference k. Specifically we have,

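\[
\begin{aligned}
p_1 &= P(v^i = A \succ B \succ C), & p_2 &= P(v^i = A \succ C \succ B), & p_3 &= P(v^i = B \succ A \succ C),\\
p_4 &= P(v^i = C \succ A \succ B), & p_5 &= P(v^i = B \succ C \succ A), & p_6 &= P(v^i = C \succ B \succ A).
\end{aligned}
\]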

For each of the six voting preferences on three candidates, Gehrlein uses nk for the

total number of voters who hold that preference:

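\[
\begin{aligned}
n_1 &= \sum_{i=1}^{n} 1_{\{ABC\}}(v^i), & n_2 &= \sum_{i=1}^{n} 1_{\{ACB\}}(v^i), & n_3 &= \sum_{i=1}^{n} 1_{\{BAC\}}(v^i),\\
n_4 &= \sum_{i=1}^{n} 1_{\{CAB\}}(v^i), & n_5 &= \sum_{i=1}^{n} 1_{\{BCA\}}(v^i), & n_6 &= \sum_{i=1}^{n} 1_{\{CBA\}}(v^i).
\end{aligned}
\]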

These nk are each binomial random variables and will be the fundamental random variables

that we use in this analysis. We restate the voting sums in terms of these nk ,

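\[
\begin{aligned}
P_A &= \sum_{i=1}^{n} 1_{\{ABC,ACB\}}(v^i) = n_1 + n_2, \qquad
P_B = \sum_{i=1}^{n} 1_{\{BAC,BCA\}}(v^i) = n_3 + n_5,\\
P_C &= \sum_{i=1}^{n} 1_{\{CAB,CBA\}}(v^i) = n_4 + n_6
\end{aligned}
\]

are the plurality sums. The Borda sums are just

\[
\begin{aligned}
B_A &= P_A + \tfrac12 M_A = n_1 + n_2 + \tfrac12 (n_3 + n_4),\\
B_B &= P_B + \tfrac12 M_B = n_3 + n_5 + \tfrac12 (n_1 + n_6),\\
B_C &= P_C + \tfrac12 M_C = n_4 + n_6 + \tfrac12 (n_2 + n_5).
\end{aligned}
\]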
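The sums above translate directly into a few lines of code. The following Python sketch is illustrative only (the thesis code is in MATLAB); the function names `tallies` and `ranking`, the alphabetical tie-break, and the sample profile are assumptions made for this example.

```python
def tallies(profile):
    """Compute plurality, middle, negative plurality and Borda sums.

    `profile` is (n1, ..., n6) in the order (ABC, ACB, BAC, CAB, BCA, CBA).
    """
    n1, n2, n3, n4, n5, n6 = profile
    plurality = {"A": n1 + n2, "B": n3 + n5, "C": n4 + n6}   # first-place counts
    middle = {"A": n3 + n4, "B": n1 + n6, "C": n2 + n5}      # second-place counts
    negative = {"A": n5 + n6, "B": n2 + n4, "C": n1 + n3}    # last-place counts
    borda = {k: plurality[k] + middle[k] / 2 for k in "ABC"}  # B_K = P_K + M_K / 2
    return plurality, middle, negative, borda

def ranking(tally):
    """List candidates from largest to smallest tally (ties broken alphabetically here)."""
    return sorted(tally, key=lambda k: (-tally[k], k))

# Hypothetical nine-voter profile, chosen so that plurality and Borda disagree.
plurality, middle, negative, borda = tallies((3, 1, 2, 0, 1, 2))
print(ranking(plurality), ranking(borda))
```

Since every voter ranks each candidate first, second, or last, the three sums for a given candidate always add up to the number of voters, which gives a quick consistency check.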

For a general voting sum Sn (F ) for F ⊂ S, we can calculate the expectation, variance,

and covariance:

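\[
E[S_n(F)] = E\Big[\sum_{i=1}^{n} 1_F(v^i)\Big] = \sum_{i=1}^{n} E\big[1_F(v^i)\big] = nE\big[1_F(v^1)\big] = nP[F],
\]

and since $S_n(F)$ is a binomial random variable with mean $nP[F]$,

\[
\mathrm{Var}[S_n(F)] = E\big[(S_n(F))^2\big] - \big(E[S_n(F)]\big)^2 = nP[F](1 - P[F]);
\]

\[
\mathrm{Cov}\big[S_n(F), S_n(G)\big] = \mathrm{Cov}\Big[\sum_{i=1}^{n} 1_F(v^i), \sum_{j=1}^{n} 1_G(v^j)\Big] = \sum_{i=1}^{n}\sum_{j=1}^{n} \mathrm{Cov}\big[1_F(v^i), 1_G(v^j)\big]
\]

where, because different voters are independent,

\[
\mathrm{Cov}\big[1_F(v^i), 1_G(v^j)\big] =
\begin{cases}
P[F \cap G] - P[F]P[G] & \text{if } i = j,\\
0 & \text{if } i \neq j,
\end{cases}
\]

so that we get

\[
\begin{aligned}
\mathrm{Cov}\big[S_n(F), S_n(G)\big] &= \sum_{i=1}^{n} \mathrm{Cov}\big[1_F(v^i), 1_G(v^i)\big] = n\,\mathrm{Cov}\big[1_F(v^1), 1_G(v^1)\big]\\
&= n\Big(E\big[1_F(v^1)\,1_G(v^1)\big] - E\big[1_F(v^1)\big]E\big[1_G(v^1)\big]\Big)\\
&= n\Big(E\big[1_{F\cap G}(v^1)\big] - P[F]P[G]\Big) = n\big(P[F \cap G] - P[F]P[G]\big).
\end{aligned}
\]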

As an example, take F = “A is ranked first place”. Then $S_n(F) = \sum_{i=1}^{n} 1_{\{ABC,ACB\}}(v^i) = \sum_{i=1}^{n} 1_{\{ABC\}}(v^i) + \sum_{i=1}^{n} 1_{\{ACB\}}(v^i)$ counts the number of times A is first place in all of the voters’ preferences. We calculate the expectation to be

\[
\begin{aligned}
E\big[S_n(\{ABC, ACB\})\big] &= E\Big[\sum_{i=1}^{n} 1_{\{ABC\}}(v^i) + \sum_{i=1}^{n} 1_{\{ACB\}}(v^i)\Big] = \sum_{i=1}^{n} E\big[1_{\{ABC\}}(v^i)\big] + \sum_{i=1}^{n} E\big[1_{\{ACB\}}(v^i)\big]\\
&= \sum_{i=1}^{n} P(v^i = ABC) + \sum_{i=1}^{n} P(v^i = ACB) = \sum_{i=1}^{n} p_1 + \sum_{i=1}^{n} p_2 = n(p_1 + p_2),
\end{aligned}
\]

and the variance is then

\[
\mathrm{Var}\big[S_n(\{ABC, ACB\})\big] = nP[\{ABC, ACB\}]\big(1 - P[\{ABC, ACB\}]\big) = n(p_1 + p_2)\big(1 - (p_1 + p_2)\big).
\]
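A covariance can be worked out the same way. As an illustration (the choice of the second event is ours, not the thesis’s), take F = {ABC, ACB}, “A is ranked first”, and G = {ABC, BAC}, “C is ranked last”, so that F ∩ G = {ABC}. The general formula then gives

\[
\mathrm{Cov}\big[S_n(F), S_n(G)\big] = n\big(P[F \cap G] - P[F]P[G]\big) = n\big(p_1 - (p_1 + p_2)(p_1 + p_3)\big),
\]

and in the uniform case $p_k = \tfrac16$ for all k this evaluates to

\[
n\Big(\tfrac16 - \tfrac13 \cdot \tfrac13\Big) = \frac{n}{18}.
\]

The positive sign is what one would expect: profiles in which A is often first tend to be profiles in which C is often last.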

Next we explain Saari’s profile decomposition. The space of all voter profiles where preferences are strictly transitive can be viewed as an n!-dimensional vector subspace in $\mathbb{R}^{n!}$, denoted $P^n = \{(n_1, n_2, n_3, n_4, n_5, n_6) : \sum_{k=1}^{6} n_k = n,\ n_k \in \mathbb{Z}\}$. Here n denotes the number of candidates, except in the constraint $\sum_k n_k = n$, which is written for three candidates and where n is the number of voters. Basis vectors of this space are called profile differentials. The Kernel is spanned by the profile K = (1, 1, 1, 1, 1, 1) for n = 3 candidates. For n ≥ 3, there is a space called the Universal Kernel, $UK^n$, of dimension $n! - 2^{n-1}(n - 2) - 2$ in which $K^n = (1, 1, \dots, 1) \in \mathbb{R}^{n!}$ is contained. All the profiles of this space result in complete ties for all positional methods and the pairwise method. It is interesting to note that for n ≥ 5, the dimension of this space is more than half the dimension of $P^n$, and approaches the dimension of $P^n$ as n → ∞. In essence this is an illustration of the law of large numbers since profiles which have no effect on election outcomes become the largest part of the profile space [23].

The Basic subspace of profiles gives the same tally for any positional procedure and the pairwise ranking agrees with the positional ranking. For n = 3, this is a 2-dimensional subspace spanned by any two of the profile differentials $\{B_A, B_B, B_C\}$ where

\[
\begin{aligned}
B_A &= (1, 1, 0, 0, -1, -1), & B_B &= (0, -1, 1, -1, 1, 0),\\
B_C &= (-1, 0, -1, 1, 0, 1), & B_A + B_B + B_C &= (0, 0, 0, 0, 0, 0).
\end{aligned}
\]

The Condorcet subspace is spanned by the profile differential C = (1, −1, −1, 1, 1, −1). For n ≥ 3, this subspace has dimension $\tfrac12 (n - 1)!$ and the Basic subspace has dimension (n − 1). On the Condorcet subspace, the tallies of all positional methods are zero ties, and for the pairwise method, the ranking is the cyclic A ≻ B ≻ C ≻ A.

The last subspace is the 2-dimensional Reversal subspace (for n ≥ 3 this space is (n − 1)-dimensional). The profiles of this space result in a Borda count zero tie but positional procedures other than the Borda rule have non-zero tallies which differ for each rule. These profiles are

\[
R_A = (1, 1, -2, -2, 1, 1), \qquad R_B = (-2, 1, 1, 1, 1, -2),
\]

and also

\[
R_C = (1, -2, 1, 1, -2, 1), \qquad R_A + R_B + R_C = 0.
\]

We shall write a decomposition of a profile $\vec p = (n_1, n_2, n_3, n_4, n_5, n_6)$ as $\vec p = aB_A + bB_B + r_A R_A + r_B R_B + \gamma C + kK$ and the vector $\vec v = (a, b, r_A, r_B, \gamma, k)$ as the vector of decomposition coefficients. Saari gives a way to find all the coefficients for the decomposition. The matrix T is

\[
T = \frac{1}{6}
\begin{pmatrix}
2 & 1 & 1 & -1 & -1 & -2\\
1 & -1 & 2 & -2 & 1 & -1\\
0 & 1 & -1 & -1 & 1 & 0\\
-1 & 1 & 0 & 0 & 1 & -1\\
1 & -1 & -1 & 1 & 1 & -1\\
1 & 1 & 1 & 1 & 1 & 1
\end{pmatrix}.
\]

It is the inverse of the matrix $(B_A, B_B, R_A, R_B, C, K)$, where the columns are the basis vectors expressed in the canonical basis of $\mathbb{R}^6$.

We can do matrix multiplication to get $T\vec p = \vec v$, that is, we can find the decomposition coefficients by multiplying the profile by T [22]. Conversely, we can get the decomposition from the coefficients simply by using the definition of the profile decomposition vectors.
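Both directions of this computation can be sketched in a few lines. The Python code below is an illustration, not the thesis’s MATLAB code; the names `SIX_T`, `BASIS`, `decompose` and `recompose` are assumptions, and exact rational arithmetic is used so that the round trip can be compared without rounding error. The rows of `SIX_T` are the rows of 6T, which can be cross-checked against the coefficient formulas stated for single-peaked profiles in Section 2.4.3.

```python
from fractions import Fraction

# Rows of 6*T in the order (a, b, r_A, r_B, gamma, k); columns follow the
# profile order (ABC, ACB, BAC, CAB, BCA, CBA).
SIX_T = [
    [2, 1, 1, -1, -1, -2],
    [1, -1, 2, -2, 1, -1],
    [0, 1, -1, -1, 1, 0],
    [-1, 1, 0, 0, 1, -1],
    [1, -1, -1, 1, 1, -1],
    [1, 1, 1, 1, 1, 1],
]

# Basis vectors B_A, B_B, R_A, R_B, C, K as given in the text.
BASIS = [
    [1, 1, 0, 0, -1, -1],
    [0, -1, 1, -1, 1, 0],
    [1, 1, -2, -2, 1, 1],
    [-2, 1, 1, 1, 1, -2],
    [1, -1, -1, 1, 1, -1],
    [1, 1, 1, 1, 1, 1],
]

def decompose(profile):
    """Return the coefficients (a, b, r_A, r_B, gamma, k) = T * profile."""
    return [Fraction(sum(t * n for t, n in zip(row, profile)), 6) for row in SIX_T]

def recompose(coeffs):
    """Rebuild the profile as a linear combination of the basis vectors."""
    return [sum(c * vec[i] for c, vec in zip(coeffs, BASIS)) for i in range(6)]

# Condorcet's profile from Section 2.4.2.
coeffs = decompose([30, 1, 29, 10, 10, 1])
print([str(6 * c) for c in coeffs])
print(recompose(coeffs))
```

Recomposing the coefficients returns the original profile, which is one way to confirm that the matrix used really is the inverse of the basis matrix.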

2.2 Central Limit Theorem Approximations

Theorem 2.1. Central Limit Theorem in $\mathbb{R}^m$ [2]

For each i, let $X_i = (X_{i1}, \dots, X_{im})^T$ be an independent random vector, where all $X_i$ have the same distribution. Suppose that $E[X_{ik}^2] < \infty$ for 1 ≤ k ≤ m; let the vector of means be $c = (c_1, \dots, c_m)$ where $c_k = E[X_{ik}]$, and let the covariance matrix be $\Sigma = [\sigma_{jk}]$ where $\sigma_{jk} = E[(X_{1j} - c_j)(X_{1k} - c_k)]$. Put $S_n = \sum_{i=1}^{n} X_i$. Then the distribution of the random vector $\frac{1}{\sqrt n}(S_n - nc)$ converges weakly to the centered multivariate normal distribution with covariance matrix Σ and density

\[
f_Z(z) = \frac{1}{(2\pi)^{m/2} |\Sigma|^{1/2}}\, e^{-\frac12 z^T \Sigma^{-1} z}.
\]

Each $n_k$ is a sum of Bernoulli random variables $1_{E_k}$, where $E_k$ is the kth preference, with mean $p_k$. Applying Theorem 2.1 to the vector $\big(\frac{n_k - np_k}{\sqrt n}\big)^T_{k=1,\dots,5}$ gives convergence to a centered multivariate normal distribution,

\[
\begin{pmatrix}
\frac{n_1 - np_1}{\sqrt n}\\
\frac{n_2 - np_2}{\sqrt n}\\
\vdots\\
\frac{n_5 - np_5}{\sqrt n}
\end{pmatrix}
\xrightarrow{\;D\;}
\begin{pmatrix}
Z_1\\ Z_2\\ \vdots\\ Z_5
\end{pmatrix}
\]

where $Z = (Z_1, \dots, Z_5)^T$ is a multidimensional normal random variable with density

\[
f_Z(z) = \frac{1}{(2\pi)^{5/2} |\Sigma|^{1/2}}\, e^{-\frac12 z^T \Sigma^{-1} z},
\]

and where $\Sigma = \big[E[(1_{E_i} - p_i)(1_{E_j} - p_j)]\big]_{1 \le i,j \le 5}$ is the covariance matrix

\[
\Sigma =
\begin{pmatrix}
p_1(1 - p_1) & -p_1 p_2 & \cdots & -p_1 p_5\\
-p_2 p_1 & p_2(1 - p_2) & \cdots & -p_2 p_5\\
\vdots & \vdots & \ddots & \vdots\\
-p_5 p_1 & -p_5 p_2 & \cdots & p_5(1 - p_5)
\end{pmatrix}.
\]

Note that we can calculate $Z_6$, since $\sum_{k=1}^{6} n_k = n$ and $\sum_{k=1}^{6} p_k = 1$ gives $Z_6 = -(Z_1 + \cdots + Z_5)$.

Subsequently, we give probabilities for two different paradoxes where a winner in one

method is the loser in another method. In calculating the paradoxes, we find that changing the probabilities pk in the voter model yields different values for the probabilities of

paradoxes. The following lemma will be used to calculate some of these probabilities.

Lemma 2.2. A Bivariate Normal Probability

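Let $(X_1, X_2)^T$ be a centered bivariate normal random vector with covariance matrix $\Sigma = [\sigma_{i,j}]_{i,j=1,2}$, $\sigma_{1,2} = \sigma_{2,1}$. Then

\[
P(X_1 > 0, X_2 > 0) = \frac14 + \frac{1}{2\pi} \sin^{-1}\!\left(\frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22}}}\right).
\]

Proof. Let $\frac{X_1}{\sqrt{\mathrm{Var}[X_1]}} = X$ and $\frac{X_2}{\sqrt{\mathrm{Var}[X_2]}} = aX + bY$, where $a, b \in \mathbb{R}$ and b > 0. We will require that Var[X] = Var[Y] = 1 and Cov[X, Y] = 0 so that (X, Y) is a bivariate normal random vector with mean zero and covariance matrix equal to the identity matrix. We need to find the values for a and b:

\[
1 = \mathrm{Var}\!\left[\frac{X_2}{\sqrt{\mathrm{Var}[X_2]}}\right] = \mathrm{Var}[aX + bY] = a^2 + b^2 \quad\Longrightarrow\quad a^2 + b^2 = 1.
\]

\[
\mathrm{Cov}[X, aX + bY] = \mathrm{Cov}\!\left[\frac{X_1}{\sqrt{\mathrm{Var}[X_1]}}, \frac{X_2}{\sqrt{\mathrm{Var}[X_2]}}\right] = \frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22}}}, \qquad \mathrm{Cov}[X, aX + bY] = a
\]

\[
\Longrightarrow\quad a = \frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22}}} \quad\text{and}\quad b = \sqrt{1 - a^2} = \sqrt{1 - \frac{\sigma_{12}^2}{\sigma_{11}\sigma_{22}}} = \sqrt{\frac{\sigma_{11}\sigma_{22} - \sigma_{12}^2}{\sigma_{11}\sigma_{22}}}.
\]

Also, we will need that

\[
-\frac{a}{b} = -\frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22} - \sigma_{12}^2}}.
\]

So with these we get that

\[
\begin{aligned}
P(X_1 > 0, X_2 > 0) &= P\!\left(\frac{X_1}{\sqrt{\mathrm{Var}[X_1]}} > 0,\ \frac{X_2}{\sqrt{\mathrm{Var}[X_2]}} > 0\right) = P\!\left(X > 0,\ Y > -\frac{a}{b}X\right)\\
&= \int_0^{\infty}\!\!\int_{-\frac{a}{b}x}^{\infty} \frac{1}{2\pi}\, e^{-(x^2 + y^2)/2}\, dy\, dx.
\end{aligned}
\]

Next, make a change of variables to polar coordinates. The integral becomes

\[
\int_0^{\infty}\!\!\int_{\alpha}^{\pi/2} \frac{1}{2\pi}\, e^{-r^2/2}\, r\, d\theta\, dr
\]

where

\[
\alpha = \tan^{-1}\!\left(-\frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22} - \sigma_{12}^2}}\right) \quad\Rightarrow\quad \alpha = \sin^{-1}\!\left(-\frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22}}}\right).
\]

Thus the integral is

\[
\begin{aligned}
\int_0^{\infty}\!\!\int_{\alpha}^{\pi/2} \frac{1}{2\pi}\, e^{-r^2/2}\, r\, d\theta\, dr
&= \frac{1}{2\pi} \int_0^{\infty} e^{-r^2/2}\, r\, dr \int_{\alpha}^{\pi/2} d\theta\\
&= \frac{1}{2\pi}\left(\frac{\pi}{2} - \sin^{-1}\!\left(-\frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22}}}\right)\right)
= \frac14 + \frac{1}{2\pi} \sin^{-1}\!\left(\frac{\sigma_{12}}{\sqrt{\sigma_{11}\sigma_{22}}}\right).
\end{aligned}
\]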
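The closed form in Lemma 2.2 is easy to evaluate numerically. The Python sketch below is an illustration (the function name `orthant_probability` is an assumption, and the thesis itself works in MATLAB); it takes the three distinct entries of the covariance matrix and returns the probability that both components are positive.

```python
import math

def orthant_probability(s11, s22, s12):
    """P(X1 > 0, X2 > 0) for a centered bivariate normal, using Lemma 2.2.

    s11, s22 are the variances and s12 the covariance; both variances
    must be positive.
    """
    rho = s12 / math.sqrt(s11 * s22)  # correlation coefficient
    return 0.25 + math.asin(rho) / (2 * math.pi)

# Independent components give 1/4; correlation 1/3 gives about 0.3041.
print(orthant_probability(1.0, 1.0, 0.0))
print(round(orthant_probability(1.0, 1.0, 1.0 / 3.0), 4))
```

The two limiting cases are a useful sanity check: perfectly correlated components give 1/2, and perfectly anti-correlated components give 0.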

2.3 Simulation methods

Using MATLAB version 2013b, we have written algorithms to calculate the frequency of two voting paradoxes. The first is where the Plurality winner is the Pairwise loser and the second is where the Plurality winner is the Borda loser; see Algorithm 2.1 and Algorithm 2.2 below. The MATLAB code is included in the Appendix. We use two versions of these algorithms. In one version, we do not count ties between candidates. For example, if candidates A and B are both the Plurality winner but only candidate A is the Pairwise loser, we don’t count this as a paradox. Similarly, if two candidates are tied losers, or two candidates tie as Plurality winners and Pairwise losers, etc., we do not count this. The other version is where we do count these tied situations as a voting paradox. These two approaches do not appear to produce significantly different limiting behavior.

Algorithm 2.1. Estimates the frequency with which candidate A is the Plurality winner and Pairwise loser

• generate 10,000 trials of random preference profiles based on the multinomial distribution with probabilities pk

• set c = 0; c counts the trials in which a paradox occurred

• for i = 1 to 10000

– calculate the Plurality ranking and Pairwise ranking from the ith random preference profile using the definitions in Section 2.1

– test whether candidate A is the Plurality winner and whether candidate A is the Pairwise loser

– if both these occur, c = c + 1, increase the count by one

end for loop

• the fraction of trials in which a paradox occurred is then c/10000

Algorithm 2.2. Estimates the frequency with which candidate A is the Plurality winner and Borda loser

• generate 10,000 trials of random preference profiles based on the multinomial distribution with probabilities pk

• set c = 0; c counts the trials in which a paradox occurred

• for i = 1 to 10000

– calculate the Plurality ranking and Borda ranking from the ith random preference profile using the definitions in Section 2.1

– test whether candidate A is the Plurality winner and whether candidate A is the Borda loser

– if both these occur, c = c + 1, increase the count by one

end for loop

• the fraction of trials in which a paradox occurred is then c/10000

To get the random preference profiles, we use MATLAB’s function makedist to create the multinomial distribution using the six probabilities. Then using the function random, we can generate n preferences for the voters, from which we create the preference profile. This is repeated 10,000 times, the number of trials we wish to produce.
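For readers without MATLAB, the two algorithms can be sketched in Python using only the standard library. This is an illustrative re-implementation under stated assumptions, not the code from the Appendix: all function names are hypothetical, voters are drawn one at a time with `random.Random.choices` instead of `makedist`/`random`, and strict inequalities are used throughout, so the sketch corresponds to the version that does not count ties.

```python
import random

# Profile order: (n1..n6) = (ABC, ACB, BAC, CAB, BCA, CBA), matching p1..p6.

def random_profile(n_voters, probs, rng):
    """Draw one multinomial profile by sampling each voter's preference."""
    counts = [0] * 6
    for k in rng.choices(range(6), weights=probs, k=n_voters):
        counts[k] += 1
    return counts

def a_is_plurality_winner(n):
    n1, n2, n3, n4, n5, n6 = n
    return n1 + n2 > n3 + n5 and n1 + n2 > n4 + n6

def a_is_pairwise_loser(n):
    n1, n2, n3, n4, n5, n6 = n
    return n3 + n5 + n6 > n1 + n2 + n4 and n4 + n5 + n6 > n1 + n2 + n3

def a_is_borda_loser(n):
    n1, n2, n3, n4, n5, n6 = n
    a = 2 * (n1 + n2) + n3 + n4  # twice the Borda tallies, to stay in integers
    b = 2 * (n3 + n5) + n1 + n6
    c = 2 * (n4 + n6) + n2 + n5
    return a < b and a < c

def paradox_frequency(n_voters, probs, loser_test, trials=10_000, seed=0):
    """Fraction of trials in which A is the Plurality winner and passes `loser_test`."""
    rng = random.Random(seed)
    count = 0  # initialised once, before the loop over trials
    for _ in range(trials):
        profile = random_profile(n_voters, probs, rng)
        if a_is_plurality_winner(profile) and loser_test(profile):
            count += 1
    return count / trials

uniform = [1 / 6] * 6
print(paradox_frequency(51, uniform, a_is_pairwise_loser, trials=2000))
print(paradox_frequency(51, uniform, a_is_borda_loser, trials=2000))
```

Passing the loser test as a function keeps the two algorithms in one loop; a variant that counts ties would replace the strict inequalities with non-strict ones.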

Apart from these two algorithms, we also make use of the function mvncdf to calculate the limiting probabilities. Suppose we want to calculate P(Y1 > 0, Y2 > 0, Y3 > 0, Y4 > 0) where the centered and scaled normal variables $\{Y_i\}_{i=1}^{4}$ have the covariance matrix Σ which we name “Sigma” in our MATLAB work space. Then, mvncdf([0 0 0 0],[],Sigma) = P(Y1 < 0, Y2 < 0, Y3 < 0, Y4 < 0), which then equals P(−Y1 > 0, −Y2 > 0, −Y3 > 0, −Y4 > 0), and this equals P(Y1 > 0, Y2 > 0, Y3 > 0, Y4 > 0) since the variables are centered. We shall use this function for the three dimensional and four dimensional normal distribution probability calculations. For bivariate and trivariate distributions, mvncdf uses adaptive quadrature on a transformation of the t density, based on methods developed by Drezner and Wesolowsky and by Genz. The default absolute error tolerance for these cases is 1e-8. For four or more dimensions, mvncdf uses a quasi-Monte Carlo integration algorithm based on methods developed by Genz and Bretz, as described in the references. The default absolute error tolerance for these cases is 1e-4. [5, 6, 16, 17, 18]

2.4 Simple Examples

The following examples give a flavor of the content of Chapter 3, showing how the preceding methods work to give probabilities and simulations. We also give an example of a particular profile decomposition as well as how to compute a profile decomposition. Lastly, we look at a single-peaked profile and see what its profile decomposition tells us.

2.4.1 The probability that A wins by the pairwise method

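The candidate A wins by the pairwise method whenever $n_1 + n_2 + n_4 > n_3 + n_5 + n_6$ and $n_1 + n_2 + n_3 > n_4 + n_5 + n_6$, or equivalently, whenever $n_1 + n_2 + n_4 > \frac{n}{2}$ and $n_1 + n_2 + n_3 > \frac{n}{2}$. So then the probability of A being the pairwise winner is

\[
\begin{aligned}
&P\Big(n_1 + n_2 + n_4 > \tfrac{n}{2},\ n_1 + n_2 + n_3 > \tfrac{n}{2}\Big)\\
&\quad= P\left(
\begin{aligned}
\tfrac{n_1 - np_1}{\sqrt n} + \tfrac{n_2 - np_2}{\sqrt n} + \tfrac{n_4 - np_4}{\sqrt n} &> \tfrac{n}{2\sqrt n} - \tfrac{n(p_1 + p_2 + p_4)}{\sqrt n},\\
\tfrac{n_1 - np_1}{\sqrt n} + \tfrac{n_2 - np_2}{\sqrt n} + \tfrac{n_3 - np_3}{\sqrt n} &> \tfrac{n}{2\sqrt n} - \tfrac{n(p_1 + p_2 + p_3)}{\sqrt n}
\end{aligned}
\right)\\
&\quad= P\left(
\begin{aligned}
\tfrac{n_1 - np_1}{\sqrt n} + \tfrac{n_2 - np_2}{\sqrt n} + \tfrac{n_4 - np_4}{\sqrt n} &> \sqrt n\,\big(\tfrac12 - (p_1 + p_2 + p_4)\big),\\
\tfrac{n_1 - np_1}{\sqrt n} + \tfrac{n_2 - np_2}{\sqrt n} + \tfrac{n_3 - np_3}{\sqrt n} &> \sqrt n\,\big(\tfrac12 - (p_1 + p_2 + p_3)\big)
\end{aligned}
\right).
\end{aligned}
\]

Letting n → ∞ we get convergence to the multivariate normal in $\mathbb{R}^2$. Let $Z_1 + Z_2 + Z_4 = Y_1$ and $Z_1 + Z_2 + Z_3 = Y_2$. Then the vector $(Y_1, Y_2)^T$ has covariance matrix Σ,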

\[
\Sigma =
\begin{pmatrix}
p_1 + p_2 + p_4 - (p_1 + p_2 + p_4)^2 & (p_1 + p_2)(1 - (p_1 + p_2)) - (p_3 + p_4)(p_1 + p_2) - p_4 p_3\\
(p_1 + p_2)(1 - (p_1 + p_2)) - (p_3 + p_4)(p_1 + p_2) - p_4 p_3 & p_1 + p_2 + p_3 - (p_1 + p_2 + p_3)^2
\end{pmatrix}.
\]

We get a positive limiting probability whenever both $p_1 + p_2 + p_4 \ge \tfrac12$ and $p_1 + p_2 + p_3 \ge \tfrac12$. There will be four cases depending on whether we take equality or strict inequality. In the first case, if both $p_1 + p_2 + p_4 = \tfrac12$ and $p_1 + p_2 + p_3 = \tfrac12$, then the covariance matrix can be expressed more simply as

\[
\Sigma =
\begin{pmatrix}
\tfrac14 & \tfrac14 - p_3\\
\tfrac14 - p_3 & \tfrac14
\end{pmatrix},
\]

and the probability is

\[
P(Y_1 > 0, Y_2 > 0) = \int_{y_1 = 0}^{\infty}\int_{y_2 = 0}^{\infty} \frac{1}{2\pi |\Sigma|^{1/2}}\, e^{-\frac12 y^T \Sigma^{-1} y}\, dy.
\]

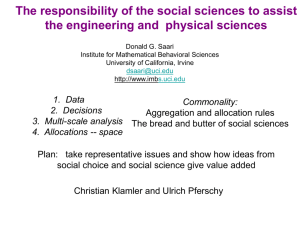

Figure 2.1: Limiting behavior in simulation when $p_k = \tfrac16$ for all k

Using Lemma 2.2, this integral reduces to $\frac14 + \frac{1}{2\pi}\sin^{-1}(1 - 4p_3)$. When we let $p_3 = 1/6$, we get $\frac14 + \frac{1}{2\pi}\sin^{-1}(1/3) \approx 0.3041$ as given in [4]. Therefore, the probability of getting a pairwise winner is 3 × 0.3041 ≈ 0.9123, whenever $p_k = 1/6$ for all k. Figure 2.1 shows the simulation results for these values of $p_k$. We see the convergence is practically immediate.

In Figure 2.2 we see that the limiting probability goes to zero as $p_3 \to \tfrac12$. When $p_3$ is 1/2 then by the condition $p_1 + p_2 + p_3 = 1/2$, we get that $p_1 = p_2 = 0$. This means that the probability that a random voter has preference ABC or ACB is zero, and so there is literally no chance that candidate A will get any first place votes, and thus A cannot be the pairwise winner.

Figure 2.2: Probability of A being the pairwise winner under conditions $p_1 + p_2 + p_4 = \tfrac12$ and $p_1 + p_2 + p_3 = \tfrac12$

Alternately, if $p_1 + p_2 + p_4 > \tfrac12$ and $p_1 + p_2 + p_3 = \tfrac12$, we get the probability

\[
P(Y_1 > -\infty, Y_2 > 0) = \int_{y_1 = -\infty}^{\infty}\int_{y_2 = 0}^{\infty} \frac{1}{2\pi |\Sigma|^{1/2}}\, e^{-\frac12 y^T \Sigma^{-1} y}\, dy,
\]

where now the covariance matrix, obtained by substituting $p_1 + p_2 = \tfrac12 - p_3$ into the general Σ above, is

\[
\Sigma =
\begin{pmatrix}
\tfrac14 - (p_4 - p_3)^2 & \tfrac14 - \tfrac12 (p_3 + p_4)\\
\tfrac14 - \tfrac12 (p_3 + p_4) & \tfrac14
\end{pmatrix},
\]

with the equivalent constraints

\[
p_4 > p_3, \qquad p_1 + p_2 + p_3 = \tfrac12, \qquad p_4 + p_5 + p_6 = \tfrac12.
\]

This probability is just $\tfrac12$ since the centered normal distribution is symmetric about the origin. Thus, these conditions on the $p_k$ force A to be the pairwise winner half the time. As an illustration, if we maximize $p_4 = p_{CAB}$ at 1/2 and make $p_3 = p_{BAC}$ close to 1/2, then $p_5 = p_6 = 0$ and also, there will be enough of a probability that A is ranked above B and C via the small but non-zero probabilities of $p_1 = p_{ABC}$ and $p_2 = p_{ACB}$. In addition, $p_5$ and $p_6$ are zero, which means there is no chance that A is last. One can deduce that the most likely ranking is then A ≻ B ≻ C.

This result is analogous for the similar conditions

\[
p_1 + p_2 + p_4 = \tfrac12, \qquad p_1 + p_2 + p_3 > \tfrac12,
\]

equivalent to

\[
p_4 < p_3, \qquad p_1 + p_2 + p_4 = \tfrac12, \qquad p_3 + p_5 + p_6 = \tfrac12,
\]

which give the probability

\[
P(Y_1 > 0, Y_2 > -\infty) = \tfrac12.
\]

If we maximize $p_3$ at 1/2 we can make similar arguments to find that the most likely ranking will be A ≻ C ≻ B.

Now, suppose we let $p_5 + p_6 = 1/2$ under the constraints $p_1 + p_2 + p_3 = \tfrac12$ and $p_4 + p_5 + p_6 = \tfrac12$ with either $p_4 > p_3$ or $p_3 > p_4$. Then we get that $p_4 = p_3 = 0$, which implies then that $p_1 + p_2 = 1/2$. In this situation, A is ranked first half of the time and last half of the time (so to speak). All of this makes sense because the $p_k$ are simply the frequencies with which voters will have preference k and thus directly relate to the actual numbers of those voters with preference k, to within the variance of the random variables, which depends on the underlying assumption about the distribution of voter preferences.

2.4.2 Condorcet’s Example

Condorcet created the example where no positional procedure ranks the pairwise winner

as the positional winner. The profile vector is p~ = (30, 1, 29, 10, 10, 1), and the table below

gives the profile in terms of the preferences.

Table 2.1: Condorcet’s example

| Preference | No. of votes | Preference | No. of votes | Preference | No. of votes |
|---|---|---|---|---|---|
| A ≻ B ≻ C | 30 | B ≻ A ≻ C | 29 | C ≻ A ≻ B | 10 |
| A ≻ C ≻ B | 1 | B ≻ C ≻ A | 10 | C ≻ B ≻ A | 1 |

Using the matrix T to find the vector of coefficients [23], we get that $\vec p = \frac16 (68B_A + 76B_B - 28R_A - 20R_B + 19C + 81K)$. The coefficients on $B_A$ and $B_B$ will ensure a Borda rule ranking of B ≻ A ≻ C since 76 is greater than 68. The plurality ranking is also B ≻ A ≻ C. The coefficients on $R_A$ and $R_B$ change only the anti-plurality ranking to A ∼ B ≻ C. Details on how the coefficients for these reversal terms give the desired rankings are complicated; see [22]. The Condorcet term 19C changes the pairwise winner to A to give the pairwise ranking A ≻ B ≻ C. Notice that the Borda count ranks the pairwise winner above the pairwise loser, as it must.

2.4.3 Single-peaked profiles

Fact 2.3. If a profile is single peaked, then there will be a Pairwise winner and a Pairwise

loser, that is, there cannot be a cyclic pairwise outcome.

Proof. Without loss of generality, take A as our candidate who is never ranked last. Then we have that |BCA| = 0 and |CBA| = 0. Suppose, by way of contradiction, that we have a cyclical outcome, A ≻ B ≻ C ≻ A. This is equivalent to the following three inequalities:

\[
\begin{aligned}
|ABC| + |ACB| + |CAB| &> |BAC| + |BCA| + |CBA|,\\
|BCA| + |BAC| + |ABC| &> |CBA| + |CAB| + |ACB|,\\
|CAB| + |CBA| + |BCA| &> |ACB| + |ABC| + |BAC|,
\end{aligned}
\]

which reduce to

\[
\begin{aligned}
|ABC| + |ACB| + |CAB| &> |BAC|,\\
|BAC| + |ABC| &> |CAB| + |ACB|,\\
|CAB| &> |ACB| + |ABC| + |BAC|.
\end{aligned}
\]

Adding the second and last inequalities, we get that |BAC| + |ABC| + |CAB| > |CAB| + |ACB| + |ACB| + |ABC| + |BAC| ⇒ 0 > 2|ACB|, which is impossible. The proof is similar for the other cyclic cases. Thus, for single peaked profiles, there cannot be a paradoxical Pairwise outcome.

Regardless of the above fact, we can construct a paradoxical profile even if it is unidimensional. We will investigate what the decomposition of a single-peaked profile looks like. Take as our profile $\vec p = (n_1, n_2, n_3, n_4, 0, 0)$ where the candidate A is never ranked last. Using the matrix T from earlier, the coefficients for the decomposition can be found,

\[
T\vec p = \frac16
\begin{pmatrix}
2 & 1 & 1 & -1 & -1 & -2\\
1 & -1 & 2 & -2 & 1 & -1\\
0 & 1 & -1 & -1 & 1 & 0\\
-1 & 1 & 0 & 0 & 1 & -1\\
1 & -1 & -1 & 1 & 1 & -1\\
1 & 1 & 1 & 1 & 1 & 1
\end{pmatrix}
\begin{pmatrix}
n_1\\ n_2\\ n_3\\ n_4\\ 0\\ 0
\end{pmatrix}
= \frac16
\begin{pmatrix}
2n_1 + n_2 + n_3 - n_4\\
n_1 - n_2 + 2n_3 - 2n_4\\
n_2 - n_3 - n_4\\
-n_1 + n_2\\
n_1 - n_2 - n_3 + n_4\\
n_1 + n_2 + n_3 + n_4
\end{pmatrix}.
\]

So, in the decomposition $\vec p = aB_A + bB_B + r_A R_A + r_B R_B + \gamma C + kK$, the coefficients are

\[
\begin{aligned}
6a &= 2n_1 + n_2 + n_3 - n_4, & 6b &= n_1 - n_2 + 2n_3 - 2n_4,\\
6r_A &= n_2 - n_3 - n_4, & 6r_B &= -n_1 + n_2,\\
6\gamma &= n_1 - n_2 - n_3 + n_4, & 6k &= n_1 + n_2 + n_3 + n_4.
\end{aligned}
\]

This illustrates that, for any n, there still exist profiles where γ, $r_A$, and $r_B$ are non-zero and thus where differences between voting methods will occur. For example, the profile $\vec p = (12, 6, 22, 2, 0, 0)$, with γ = −7/3, has the plurality and pairwise ranking as B ≻ A ≻ C while the Borda ranking is A ≻ B ≻ C.

Another profile with the same number of voters, $\vec p = (16, 2, 19, 5, 0, 0)$, has γ = 0 but $r_A = -11/3$ and $r_B = -7/3$. The ranking for all methods is A ≻ B ≻ C except for the plurality which has ranking B ≻ A ≻ C.

These two profiles are just examples. We can multiply each of them by a constant and ensure that for any number of voters N, there exists some profile with number of voters equal to or larger than N which exhibits a paradox. Thus, while single peaked profiles cannot have cyclic Pairwise rankings, they can still exhibit other voting paradoxes.
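As a worked check of the first profile, $\vec p = (12, 6, 22, 2, 0, 0)$, the tallies follow directly from the sums defined in Section 2.1 (the arithmetic below is ours and is offered only as a verification aid):

\[
\begin{aligned}
\text{Plurality:}\quad & P_A = 12 + 6 = 18, \quad P_B = 22 + 0 = 22, \quad P_C = 2 + 0 = 2,\\
\text{Borda:}\quad & B_A = 18 + \tfrac12(22 + 2) = 30, \quad B_B = 22 + \tfrac12(12 + 0) = 28, \quad B_C = 2 + \tfrac12(6 + 0) = 5,\\
\text{Pairwise:}\quad & A \text{ vs } B:\ 12 + 6 + 2 = 20 < 22, \quad A \text{ vs } C:\ 12 + 6 + 22 = 40 > 2, \quad B \text{ vs } C:\ 12 + 22 = 34 > 8.
\end{aligned}
\]

So plurality and the pairwise method both give B ≻ A ≻ C, while the Borda count gives A ≻ B ≻ C, even though A is never ranked last by any of the 42 voters.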

Chapter 3

Results

3.1 Main Analyses

3.1.1 Plurality Winner is the Pairwise Loser

The so-called Strong Borda Paradox occurs when one candidate is both the plurality winner

and the pairwise loser. Without loss of generality, we choose this candidate to be A. This

situation is then equivalent to these four inequalities:

\[
n_1 + n_2 > n_3 + n_5, \qquad n_1 + n_2 > n_4 + n_6, \qquad n_3 + n_5 + n_6 > n_4 + n_2 + n_1, \qquad n_4 + n_5 + n_6 > n_3 + n_2 + n_1.
\]

The first two inequalities ensure that A’s plurality count is larger than both B’s and C’s plurality counts. The last two inequalities give that the pairwise count for A ≻ B is less than that for B ≻ A, and that the pairwise count for A ≻ C is less than that for C ≻ A. This ensures that A is the pairwise loser. Since $\sum_{k=1}^{6} n_k = n$, these become

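\[
2(n_1 + n_2) + n_4 + n_6 > n, \qquad 2(n_1 + n_2) + n_3 + n_5 > n, \qquad n_3 + n_5 + n_6 > \frac{n}{2}, \qquad n_4 + n_5 + n_6 > \frac{n}{2}.
\]

To use the normal approximation, we center and scale the random variables $n_k$,

\[
\begin{aligned}
2\,\frac{n_1 - np_1}{\sqrt n} + 2\,\frac{n_2 - np_2}{\sqrt n} + \frac{n_4 - np_4}{\sqrt n} + \frac{n_6 - np_6}{\sqrt n} &> \sqrt n\,\big(1 - (2p_1 + 2p_2 + p_4 + p_6)\big),\\
2\,\frac{n_1 - np_1}{\sqrt n} + 2\,\frac{n_2 - np_2}{\sqrt n} + \frac{n_3 - np_3}{\sqrt n} + \frac{n_5 - np_5}{\sqrt n} &> \sqrt n\,\big(1 - (2p_1 + 2p_2 + p_3 + p_5)\big),\\
\frac{n_3 - np_3}{\sqrt n} + \frac{n_5 - np_5}{\sqrt n} + \frac{n_6 - np_6}{\sqrt n} &> \sqrt n\,\big(\tfrac12 - (p_3 + p_5 + p_6)\big),\\
\frac{n_4 - np_4}{\sqrt n} + \frac{n_5 - np_5}{\sqrt n} + \frac{n_6 - np_6}{\sqrt n} &> \sqrt n\,\big(\tfrac12 - (p_4 + p_5 + p_6)\big).
\end{aligned}
\]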

So then the probability of a Strong Borda Paradox is, writing $\hat n_k = \frac{n_k - np_k}{\sqrt n}$ for the centered and scaled counts,

\[
P\left(
\begin{aligned}
2\hat n_1 + 2\hat n_2 + \hat n_4 + \hat n_6 &> \sqrt n\,\big(1 - (2p_1 + 2p_2 + p_4 + p_6)\big),\\
2\hat n_1 + 2\hat n_2 + \hat n_3 + \hat n_5 &> \sqrt n\,\big(1 - (2p_1 + 2p_2 + p_3 + p_5)\big),\\
\hat n_3 + \hat n_5 + \hat n_6 &> \sqrt n\,\big(\tfrac12 - (p_3 + p_5 + p_6)\big),\\
\hat n_4 + \hat n_5 + \hat n_6 &> \sqrt n\,\big(\tfrac12 - (p_4 + p_5 + p_6)\big)
\end{aligned}
\right).
\]

Letting n → ∞ we get that

\[
\begin{pmatrix}
2\hat n_1 + 2\hat n_2 + \hat n_4 + \hat n_6\\
2\hat n_1 + 2\hat n_2 + \hat n_3 + \hat n_5\\
\hat n_3 + \hat n_5 + \hat n_6\\
\hat n_4 + \hat n_5 + \hat n_6
\end{pmatrix}
\xrightarrow{\;D\;}
\begin{pmatrix}
2(Z_1 + Z_2) + Z_4 + Z_6\\
2(Z_1 + Z_2) + Z_3 + Z_5\\
Z_3 + Z_5 + Z_6\\
Z_4 + Z_5 + Z_6
\end{pmatrix}
=:
\begin{pmatrix}
Y_1\\ Y_2\\ Y_3\\ Y_4
\end{pmatrix}
\]

where $Y = (Y_1, Y_2, Y_3, Y_4)^T$ is a multidimensional normal random vector with density $\frac{1}{(2\pi)^2 |\Sigma|^{1/2}} \exp\big(-\tfrac12 y^T \Sigma^{-1} y\big)$ and where Σ is the covariance matrix with the entries

\[
\begin{aligned}
\Sigma_{1,1} &= \mathrm{Var}[2(Z_1 + Z_2) + Z_4 + Z_6] = 4(p_1 + p_2) + (p_4 + p_6) - (2(p_1 + p_2) + p_4 + p_6)^2,\\
\Sigma_{2,2} &= \mathrm{Var}[2(Z_1 + Z_2) + Z_3 + Z_5] = 4(p_1 + p_2) + (p_3 + p_5) - (2(p_1 + p_2) + p_3 + p_5)^2,\\
\Sigma_{3,3} &= \mathrm{Var}[Z_3 + Z_5 + Z_6] = (p_3 + p_5 + p_6) - (p_3 + p_5 + p_6)^2,\\
\Sigma_{4,4} &= \mathrm{Var}[Z_4 + Z_5 + Z_6] = (p_4 + p_5 + p_6) - (p_4 + p_5 + p_6)^2,\\
\Sigma_{1,2} = \Sigma_{2,1} &= \mathrm{Cov}[2(Z_1 + Z_2) + Z_4 + Z_6,\ 2(Z_1 + Z_2) + Z_3 + Z_5]\\
&= 2(p_1 + p_2) - 2(p_1 + p_2)^2 - (p_4 + p_6)(p_3 + p_5),\\
\Sigma_{1,3} = \Sigma_{3,1} &= \mathrm{Cov}[Z_3 + Z_5 + Z_6,\ 2(Z_1 + Z_2) + Z_4 + Z_6]\\
&= p_6 - (2(p_1 + p_2) + p_4 + p_6)(p_3 + p_5 + p_6),\\
\Sigma_{1,4} = \Sigma_{4,1} &= \mathrm{Cov}[Z_4 + Z_5 + Z_6,\ 2(Z_1 + Z_2) + Z_4 + Z_6]\\
&= (p_4 + p_6) - (2(p_1 + p_2) + p_4 + p_6)(p_4 + p_5 + p_6),\\
\Sigma_{2,3} = \Sigma_{3,2} &= \mathrm{Cov}[Z_3 + Z_5 + Z_6,\ 2(Z_1 + Z_2) + Z_3 + Z_5]\\
&= (p_3 + p_5) - (2(p_1 + p_2) + p_3 + p_5)(p_3 + p_5 + p_6),\\
\Sigma_{2,4} = \Sigma_{4,2} &= \mathrm{Cov}[Z_4 + Z_5 + Z_6,\ 2(Z_1 + Z_2) + Z_3 + Z_5]\\
&= p_5 - (2(p_1 + p_2) + p_3 + p_5)(p_4 + p_5 + p_6),\\
\Sigma_{3,4} = \Sigma_{4,3} &= \mathrm{Cov}[Z_3 + Z_5 + Z_6,\ Z_4 + Z_5 + Z_6]\\
&= (p_5 + p_6) - (p_3 + p_5 + p_6)(p_4 + p_5 + p_6).
\end{aligned}
\]
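All ten entries above are instances of one rule: if $Y = \sum_k c_k Z_k$ and $Y' = \sum_k d_k Z_k$, then $\mathrm{Cov}[Y, Y'] = \sum_k c_k d_k p_k - (\sum_k c_k p_k)(\sum_k d_k p_k)$, because $\mathrm{Cov}[Z_i, Z_j] = p_i\delta_{ij} - p_i p_j$. The Python sketch below builds Σ from the coefficient vectors in this way; it is an illustration rather than the thesis’s MATLAB code, and the names `COEFFS` and `covariance_matrix` are assumptions.

```python
from fractions import Fraction

# Coefficients of (Z1, ..., Z6) in Y1, ..., Y4, as defined in the text.
COEFFS = [
    [2, 2, 0, 1, 0, 1],  # Y1 = 2(Z1 + Z2) + Z4 + Z6
    [2, 2, 1, 0, 1, 0],  # Y2 = 2(Z1 + Z2) + Z3 + Z5
    [0, 0, 1, 0, 1, 1],  # Y3 = Z3 + Z5 + Z6
    [0, 0, 0, 1, 1, 1],  # Y4 = Z4 + Z5 + Z6
]

def covariance_matrix(p):
    """Sigma[i][j] = sum_k c_ik * c_jk * p_k - (c_i . p) * (c_j . p)."""
    means = [sum(c * pk for c, pk in zip(row, p)) for row in COEFFS]
    return [
        [
            sum(ci * cj * pk for ci, cj, pk in zip(COEFFS[i], COEFFS[j], p))
            - means[i] * means[j]
            for j in range(4)
        ]
        for i in range(4)
    ]

# Uniform case p_k = 1/6, in exact arithmetic.
sigma = covariance_matrix([Fraction(1, 6)] * 6)
for row in sigma:
    print([str(x) for x in row])
```

Building the matrix from coefficient vectors avoids retyping the ten closed forms and makes it straightforward to reuse the same function for other paradoxes by swapping `COEFFS`.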

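The limiting probability is then

\[
P\left(
\begin{aligned}
2(Z_1 + Z_2) + Z_4 + Z_6 &> \lim_{n\to\infty} \sqrt n\,\big(1 - (2p_1 + 2p_2 + p_4 + p_6)\big),\\
2(Z_1 + Z_2) + Z_3 + Z_5 &> \lim_{n\to\infty} \sqrt n\,\big(1 - (2p_1 + 2p_2 + p_3 + p_5)\big),\\
Z_3 + Z_5 + Z_6 &> \lim_{n\to\infty} \sqrt n\,\big(\tfrac12 - (p_3 + p_5 + p_6)\big),\\
Z_4 + Z_5 + Z_6 &> \lim_{n\to\infty} \sqrt n\,\big(\tfrac12 - (p_4 + p_5 + p_6)\big)
\end{aligned}
\right).
\]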

This probability will be non-zero whenever each of the following four relations holds, either as a strict inequality or as an equality, in any mixture:

\[
2p_1 + 2p_2 + p_4 + p_6 \ge 1, \qquad 2p_1 + 2p_2 + p_3 + p_5 \ge 1, \qquad p_3 + p_5 + p_6 \ge \tfrac12, \qquad p_4 + p_5 + p_6 \ge \tfrac12.
\]

We shall henceforth refer to these four relations as the constraints on $p_k$. Table 5.1 in the Appendix shows all the possibilities that can arise, and their calculated probabilities. Below we look at each category of the constraints in more detail.

3.1.1.1 All Equality Constraints

When the constraints are all equality relations, we have

\[
2p_1 + 2p_2 + p_4 + p_6 = 1, \qquad 2p_1 + 2p_2 + p_3 + p_5 = 1, \qquad p_3 + p_5 + p_6 = \tfrac12, \qquad p_4 + p_5 + p_6 = \tfrac12,
\]

which corresponds to the limiting probability

\[
P\left(
\begin{aligned}
&2(Z_1 + Z_2) + Z_4 + Z_6 > 0, & Z_3 + Z_5 + Z_6 > 0,\\
&2(Z_1 + Z_2) + Z_3 + Z_5 > 0, & Z_4 + Z_5 + Z_6 > 0
\end{aligned}
\right).
\]

When we are in this case, a numerical computation yields the value of 0.0113, which is the same as Gehrlein’s value obtained in [7]. The equalities reduce to

\[
p_1 + p_2 = \tfrac13 \quad\text{and}\quad p_3 = p_4 = p_5 = p_6 = \tfrac16,
\]

which represent the most uniform situation we can have in the probabilities, aside from $p_1$ and $p_2$ being variable. So long as $p_1 + p_2 = \tfrac13$, we still get the value 0.0113 for the probability.

Figure 3.1: Limiting behavior in simulation when $p_k = \tfrac16$ for all k

Simulations for various finite values of the number of voters are shown in Figure 3.1. Two simulations are run, one where we count ties and one where we do not. The limiting probability is nearly attained for relatively low numbers of voters.
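The limiting probability itself can also be approximated without mvncdf. The following Python sketch uses plain Monte Carlo sampling, which is a different technique from the quasi-Monte Carlo integration that mvncdf performs; the function names, the sample size and the seed are assumptions made for illustration. It draws the limiting vector $(Z_1, \dots, Z_6)$ with covariance $\mathrm{diag}(p) - pp^T$ by projecting independent standard normals, forms $Y_1, \dots, Y_4$, and counts how often all four are positive.

```python
import math
import random

# Coefficients of (Z1, ..., Z6) in Y1, ..., Y4, as defined in Section 3.1.1.
COEFFS = [
    [2, 2, 0, 1, 0, 1],
    [2, 2, 1, 0, 1, 0],
    [0, 0, 1, 0, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

def sample_z(p, rng):
    """Draw Z ~ N(0, diag(p) - p p^T), the limiting law of the scaled counts.

    With g standard normal and u = sqrt(p) (a unit vector, since the p_k sum
    to 1), Z_k = sqrt(p_k) * (g_k - u_k * (u . g)) has exactly this covariance.
    """
    g = [rng.gauss(0.0, 1.0) for _ in p]
    root = [math.sqrt(pk) for pk in p]
    proj = sum(r * gk for r, gk in zip(root, g))
    return [r * (gk - r * proj) for r, gk in zip(root, g)]

def estimate_all_positive(p, samples=100_000, seed=1):
    """Monte Carlo estimate of P(Y1 > 0, Y2 > 0, Y3 > 0, Y4 > 0)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        z = sample_z(p, rng)
        if all(sum(c * zk for c, zk in zip(row, z)) > 0 for row in COEFFS):
            hits += 1
    return hits / samples

# All-equality case with p_k = 1/6; the text reports a value of 0.0113.
print(estimate_all_positive([1 / 6] * 6))
```

With 100,000 samples the standard error of such an estimate is roughly 0.0003, so it should agree with the reported value only to about two significant digits; it is meant as an independent sanity check, not a replacement for the numerical integration.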

3.1.1.2 One Equality Constraint

There are four different ways for the four constraints to be three strict inequalities and one equality. In all four of these cases, the probability will be $\tfrac12$. This is due to the fact that three of the centered and scaled normal random variables $Y_1$, $Y_2$, $Y_3$, or $Y_4$ are being taken over all real numbers. That is, we take $Y_j > -\infty$ in the limiting probability. This reduces the probability to $P(Y_j > 0)$ for j = 1, 2, 3, 4, which is $\tfrac12$.

Figure 3.2: Limiting behavior in simulation when p1 = p2 = 0.20, p3 = p4 = 0.05, p5 = 0.35, and p6 = 0.15

Figure 3.2 shows a simulation using p1 = p2 = 0.20, p3 = p4 = 0.05, p5 = 0.35, and

p6 = 0.15. This corresponds to the constraints

$$
\begin{aligned}
2p_1 + 2p_2 + p_4 + p_6 &= 1, \\
2p_1 + 2p_2 + p_3 + p_5 &> 1, \\
p_3 + p_5 + p_6 &> \tfrac{1}{2}, \\
p_4 + p_5 + p_6 &> \tfrac{1}{2},
\end{aligned}
$$

which yield the probability $P(2(Z_1 + Z_2) + Z_4 + Z_6 > 0)$. As we see, the probability converges to $\tfrac{1}{2}$.

Now, Figure 3.3 shows a simulation using $p_1 = p_2 = 0.167$, $p_3 = p_4 = 0.05$, $p_5 = 0.284$, and $p_6 = 0.282$. These probabilities are within 0.002 of satisfying case three of the two-equality constraints, which has limiting probability $\tfrac{1}{3}$. Notice that the simulated probability lingers around the value of $\tfrac{1}{3}$, with a very slow upward slope. Even though the probabilities fit the constraints for the one-equality case, their "closeness" to the other case slows the convergence. This is a typical phenomenon that we illustrate with other simulations.

Figure 3.3: Limiting behavior in simulation when $p_1 = p_2 = 0.167$, $p_3 = p_4 = 0.05$, $p_5 = 0.284$, and $p_6 = 0.282$

3.1.1.3 Two Equality Constraints

There are six different ways for the four constraints to be two strict inequalities and two

equalities. We will use Lemma 2.2 to find the probabilities in these six cases. Certain pairs

of cases will have the same probability due to the symmetry between p1 and p2 , p3 and p4 ,

or p5 and p6 .


The first two cases are when the conditions on the $p_k$ are

$$
\begin{aligned}
2p_1 + 2p_2 + p_4 + p_6 &= 1, \\
p_3 + p_5 + p_6 &= \tfrac{1}{2}, \\
2p_1 + 2p_2 + p_3 + p_5 &> 1, \\
p_4 + p_5 + p_6 &> \tfrac{1}{2},
\end{aligned}
$$

corresponding to the probability

$$
P\left(2(Z_1 + Z_2) + Z_4 + Z_6 > 0,\; Z_3 + Z_5 + Z_6 > 0\right),
$$

or when we have the conditions on the $p_k$ being

$$
\begin{aligned}
2p_1 + 2p_2 + p_4 + p_6 &> 1, \\
p_3 + p_5 + p_6 &> \tfrac{1}{2}, \\
2p_1 + 2p_2 + p_3 + p_5 &= 1, \\
p_4 + p_5 + p_6 &= \tfrac{1}{2},
\end{aligned}
$$

corresponding to the probability

$$
P\left(2(Z_1 + Z_2) + Z_3 + Z_5 > 0,\; Z_4 + Z_5 + Z_6 > 0\right).
$$

The second case results from the first by interchanging $p_3$ with $p_4$ and $p_5$ with $p_6$. Therefore, we will just look at the first case, $P\left(2(Z_1 + Z_2) + Z_4 + Z_6 > 0,\; Z_3 + Z_5 + Z_6 > 0\right)$. It will have covariance matrix

$$
\Sigma = \begin{pmatrix} 1 - 2p_4 & p_4 - \tfrac{1}{2} \\ p_4 - \tfrac{1}{2} & \tfrac{1}{4} \end{pmatrix}.
$$

The conditions restrict us to $0 \le p_4 < \tfrac{1}{6}$. Lemma 2.2 tells us that the probability will be $\tfrac{1}{4} - \tfrac{1}{2\pi} \arcsin\left(\sqrt{1 - 2p_4}\right)$, which is zero when $p_4 = 0$ and increases to 0.098 for $p_4 = \tfrac{1}{6}$.
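The two endpoint values can be reproduced numerically. The sketch below assumes that the expression quoted from Lemma 2.2 is an instance of the standard quadrant formula for a centered bivariate normal pair, $P(X > 0, Y > 0) = \tfrac{1}{4} + \tfrac{1}{2\pi}\arcsin\rho$. The function names are hypothetical, and the covariance entries are the ones stated in the text.

```python
import math

def quadrant_probability(var_x, var_y, cov_xy):
    """P(X > 0, Y > 0) for a centered bivariate normal pair (X, Y)."""
    rho = cov_xy / math.sqrt(var_x * var_y)
    rho = max(-1.0, min(1.0, rho))  # guard against rounding just outside [-1, 1]
    return 0.25 + math.asin(rho) / (2 * math.pi)

def case_one_probability(p4):
    # Covariance entries quoted in the text: 1 - 2*p4, p4 - 1/2, and 1/4.
    return quadrant_probability(1 - 2 * p4, 0.25, p4 - 0.5)

print(round(case_one_probability(0.0), 3))
print(round(case_one_probability(1 / 6), 3))
```

Here the correlation works out to $-\sqrt{1 - 2p_4}$, which is why the arcsine term enters with a minus sign.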

The third case is when the conditions on the pk are

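$$
\begin{aligned}
2p_1 + 2p_2 + p_4 + p_6 &= 1, \\
2p_1 + 2p_2 + p_3 + p_5 &= 1, \\
p_3 + p_5 + p_6 &> \tfrac{1}{2}, \\
p_4 + p_5 + p_6 &> \tfrac{1}{2},
\end{aligned}
$$

corresponding to the probability

$$
P\left(2(Z_1 + Z_2) + Z_4 + Z_6 > 0,\; 2(Z_1 + Z_2) + Z_3 + Z_5 > 0\right).
$$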

The covariance matrix for this case will be simply

$$
\Sigma = \begin{pmatrix} 2/3 & 1/3 \\ 1/3 & 2/3 \end{pmatrix},
$$

and Lemma 2.2 gives the value of $\tfrac{1}{3}$ regardless of how we distribute values for the $p_k$.

Figure 3.4 shows a simulation using $p_1 = p_2 = \tfrac{1}{6}$, $p_3 = p_4 = \tfrac{1}{20}$, and $p_5 = p_6 = \tfrac{17}{60}$. Compare this to Figure 3.3, which shows the simulation with these probabilities perturbed by 0.002 to make the second constraint $2p_1 + 2p_2 + p_3 + p_5 > 1$. The convergence here is practically immediate.

Figure 3.4: Limiting behavior in simulation when $p_1 = p_2 = \tfrac{1}{6}$, $p_3 = p_4 = \tfrac{1}{20}$, and $p_5 = p_6 = \tfrac{17}{60}$
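The claim that the case three covariance matrix does not depend on the particular $p_k$ can be checked with exact arithmetic. The sketch below assumes that $(Z_1, \dots, Z_6)$ has the covariance of a centered multinomial limit, $\mathrm{Cov}(Z_i, Z_j) = p_i \delta_{ij} - p_i p_j$. That assumption is not restated in this section, and the helper names are hypothetical.

```python
from fractions import Fraction as F

def limiting_covariance(p, a, b):
    """Cov(a.Z, b.Z) when Cov(Z) = diag(p) - p p^T (assumed multinomial limit)."""
    mean_a = sum(ai * pi for ai, pi in zip(a, p))
    mean_b = sum(bi * pi for bi, pi in zip(b, p))
    return sum(ai * bi * pi for ai, bi, pi in zip(a, b, p)) - mean_a * mean_b

# Coefficients of (Z1, ..., Z6) in the two linear forms of case three.
Y1 = (2, 2, 0, 1, 0, 1)  # 2(Z1 + Z2) + Z4 + Z6
Y2 = (2, 2, 1, 0, 1, 0)  # 2(Z1 + Z2) + Z3 + Z5

def case_three_covariance(p):
    return [[limiting_covariance(p, Y1, Y1), limiting_covariance(p, Y1, Y2)],
            [limiting_covariance(p, Y2, Y1), limiting_covariance(p, Y2, Y2)]]

# Probabilities used for Figure 3.4.
p_fig = [F(1, 6), F(1, 6), F(1, 20), F(1, 20), F(17, 60), F(17, 60)]
sigma = case_three_covariance(p_fig)
print(sigma)
```

Passing any other probability vector that satisfies the two equalities of case three, for instance the uniform vector with every entry equal to 1/6, should return the same matrix.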

The fourth and fifth cases have a symmetry analogous to the first and second cases. Case four is when the conditions on the $p_k$ are

$$
\begin{aligned}
2p_1 + 2p_2 + p_4 + p_6 &= 1, \\
2p_1 + 2p_2 + p_3 + p_5 &> 1, \\
p_3 + p_5 + p_6 &> \tfrac{1}{2}, \\
p_4 + p_5 + p_6 &= \tfrac{1}{2},
\end{aligned}
$$

giving the probability

$$
P\left(2(Z_1 + Z_2) + Z_4 + Z_6 > 0,\; Z_4 + Z_5 + Z_6 > 0\right).
$$

The fifth case has the conditions

$$
\begin{aligned}
2p_1 + 2p_2 + p_4 + p_6 &> 1, \\
2p_1 + 2p_2 + p_3 + p_5 &= 1, \\
p_3 + p_5 + p_6 &= \tfrac{1}{2}, \\
p_4 + p_5 + p_6 &> \tfrac{1}{2},
\end{aligned}
$$

yielding the probability

$$
P\left(2(Z_1 + Z_2) + Z_3 + Z_5 > 0,\; Z_3 + Z_5 + Z_6 > 0\right).
$$

The covariance matrices for case four, Σ4 , and for case five, Σ5 , are

Σ4 =

where

1

6

< p5 <

1

2

or

1

6

1

2

+ p5 −p5

< p6 <

(p6 = 0) and 0.183 when p5 =

and

1

4

−p5

1

6

!

1

2.

1

2

Σ5 =

+ p6 −p6

−p6

!

1

4

,

The resulting probability will be zero when p5 = 0

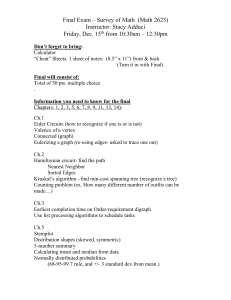

(p6 = 16 ).
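As a check on the endpoint values, the case four probability can be written out explicitly. This sketch assumes that Lemma 2.2 is the usual quadrant formula $P(X > 0, Y > 0) = \tfrac{1}{4} + \tfrac{1}{2\pi}\arcsin\rho$ for a centered bivariate normal pair with correlation $\rho$. The symbol $\rho_4$ is introduced here for illustration.

$$
\rho_4 = \frac{-p_5}{\sqrt{\left(\tfrac{1}{2} + p_5\right)\cdot\tfrac{1}{4}}} = -\frac{2p_5}{\sqrt{\tfrac{1}{2} + p_5}},
\qquad
P = \frac{1}{4} - \frac{1}{2\pi}\arcsin\left(\frac{2p_5}{\sqrt{\tfrac{1}{2} + p_5}}\right).
$$

At $p_5 = \tfrac{1}{6}$ this gives $\rho_4 = -1/\sqrt{6} \approx -0.408$ and $P \approx 0.183$. As $p_5 \to \tfrac{1}{2}$, $\rho_4 \to -1$ and $P \to 0$. Case five is identical with $p_6$ in place of $p_5$.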