New Tools for Tracking the Dynamics of Mental Representations

Sam Gershman

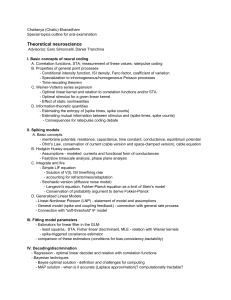


Princeton University, June 14, 2012 / OHBM

Resist the tyranny of the voxel!
Unit of measurement ≠ unit of analysis.

Problems with voxel-based approaches
- Voxels impose a discretization of anatomical space, and voxel-based models fold this measurement discretization into the model's parameterization, with parameters tied to individual voxels. Why should doubling the number of measurements require doubling the number of parameters?
- The number of parameters to estimate explodes.
- Results are hard to align across subjects.
- Parameters should reflect meaningful theoretical quantities.

A new approach
- Assume, as in latent factor models, that the observed neural data arise from a superposition of basis images. This lets us capture the underlying modes of the system parsimoniously.
- Assume, as in supervised models (e.g., GLMs), that the superposition is covariate-dependent: which basis images are active depends on which covariates are active.
- The main departure from these models is that the basis images are topographic and voxel-free.

Basis images
- Each basis image is specified by a function over space that is sampled at discrete locations to produce voxel activations.
- The function is parameterized by a center $\mu_k$ (in image-space coordinates) and a width $\lambda_k$:
  $$f_{kv} = \exp\left\{-\lambda_k^{-1} \sum_d (\mu_{kd} - r_{vd})^2\right\},$$
  where $f_k$ is the $k$th basis image and $r_{vd}$ is the coordinate of voxel $v$ in the $d$th dimension.
- We call each of these functions a latent source to emphasize its similarity to a physically extended region. Hence the model is called Topographic Latent Source Analysis (TLSA).

The generative process
[Figure: neural data $Y$ ($N \times V$) = design matrix $X$ ($N \times C$) × weights $W$ ($C \times K$) × basis images $F$ ($K \times V$).]

Modeling multi-subject data
[Figure panels: image space, subject-level sources, group template.]

How many sources?
- How do we decide how many sources to use?
- We can approach this probabilistically by defining a nonparametric prior on the weight matrix.
- This allows an unbounded number of sources, but with a bias toward a small number.
- Thus the effective number of sources can be determined automatically.
- Specifically, each weight is given a “spike and slab” prior, constructed from the beta process.

Construction of the spike and slab prior
- $w_{ck} = u_{ck} z_k$: loading of source $k$ on covariate $c$
- $u_{ck} \sim \mathcal{N}(0, \sigma_u^2)$: continuous “slab”
- $z_k \sim \mathrm{Bernoulli}(\pi_k)$: binary “spike”
- $\pi_k \sim \mathrm{Beta}(\alpha/K, 1)$: probability of spike
- As $K \to \infty$, we obtain a nonparametric prior, the beta process.
- Even though there is theoretically an infinite number of sources, the number of active sources follows a Poisson($\alpha$) distribution.

[Figure: Spike and slab prior. Density $P(r)$ versus $r$, comparing “Slab only” with “Spike & slab”.]

Inference
- Our goal is to compute the posterior over parameters $\theta$ given the neural data $Y$ and covariates $X$:
  $$P(\theta \mid X, Y) \propto P(Y \mid \theta, X)\, P(\theta).$$
  Computing this exactly is intractable, so we approximate it with a distribution $Q(\theta)$ taken from a family $\mathcal{Q}$ for which inference is tractable. The optimization problem is to find the distribution $Q^*$ that minimizes the KL divergence between $Q(\theta)$ and $P(\theta \mid X, Y)$:
  $$Q^* = \arg\min_{Q \in \mathcal{Q}} \mathrm{KL}\left[Q(\theta) \,\|\, P(\theta \mid X, Y)\right].$$
- This technique is known as variational Bayes. It is computationally efficient and involves closed-form updates that are guaranteed to converge to a local optimum.

Illustration: reconstructed maps
[Figure: reconstructed maps for Classes 1, 2, and 3, comparing TLSA with OLS.]

Extension to spatiotemporal data
- The high temporal and low spatial resolution of EEG presents a special challenge: how can we capture spatiotemporal patterns?
- We can capture these patterns by replacing the spatial basis functions with spatiotemporal basis functions, consisting of spatial and temporal receptive fields.
- The inference algorithm works in exactly the same way.
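
The generative process and the spike and slab prior described above can be sketched in plain Python (standard library only). This is an illustrative sketch under stated assumptions, not the TLSA implementation: the Gaussian observation noise, the uniform distributions for source centers and widths, and all function names (`rbf_basis_image`, `sample_spike_and_slab`, `matmul`, `generate`) are hypothetical choices made for this example. The weights follow the standard finite approximation to the beta process, $\pi_k \sim \mathrm{Beta}(\alpha/K, 1)$, under which the number of active sources approaches Poisson($\alpha$) as $K$ grows.

```python
import math
import random


def rbf_basis_image(center, width, coords):
    """Basis image f_kv = exp(-(1/width) * sum_d (center_d - r_vd)^2) at each voxel r_v."""
    return [math.exp(-sum((m - r) ** 2 for m, r in zip(center, rv)) / width)
            for rv in coords]


def sample_spike_and_slab(C, K, alpha, sigma_u, rng):
    """Finite-K spike and slab prior; returns the C x K weights W and the spikes z."""
    pi = [rng.betavariate(alpha / K, 1.0) for _ in range(K)]  # spike probabilities
    z = [1 if rng.random() < p else 0 for p in pi]            # is source k active?
    # Slab u_ck ~ N(0, sigma_u^2); an inactive source has zero weight on every covariate.
    W = [[rng.gauss(0.0, sigma_u) * z[k] for k in range(K)] for _ in range(C)]
    return W, z


def matmul(A, B):
    """Plain-Python product of an (n x m) and an (m x p) matrix."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def generate(X, coords, K=20, alpha=3.0, sigma_u=1.0, noise_sd=0.1, seed=0):
    """Simulate N x V data Y = X W F + Gaussian noise (the noise model is an assumption)."""
    rng = random.Random(seed)
    dims = range(len(coords[0]))
    lo = [min(r[d] for r in coords) for d in dims]
    hi = [max(r[d] for r in coords) for d in dims]
    # Hypothetical priors: centers uniform over the image's bounding box,
    # widths uniform on [1, 10] (squared coordinate units).
    F = [rbf_basis_image([rng.uniform(lo[d], hi[d]) for d in dims],
                         rng.uniform(1.0, 10.0), coords)
         for _ in range(K)]
    W, z = sample_spike_and_slab(len(X[0]), K, alpha, sigma_u, rng)
    mean = matmul(matmul(X, W), F)  # (N x C)(C x K)(K x V) -> N x V
    Y = [[m + rng.gauss(0.0, noise_sd) for m in row] for row in mean]
    return Y, W, F, z


# Example: 10 x 10 voxel grid, 3 conditions, 6 trials with a one-hot design matrix.
coords = [(i, j) for i in range(10) for j in range(10)]
X = [[1 if c == t % 3 else 0 for c in range(3)] for t in range(6)]
Y, W, F, z = generate(X, coords)
print(len(Y), len(Y[0]), sum(z))  # N trials, V voxels, number of active sources
```

Because the spike $z_k$ is shared across covariates, switching a source off zeroes its entire column of $W$, which is what lets the prior prune whole basis images rather than individual weights.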

Illustration: Extracting the P300
[Figure: oddball contrast for one subject. Scalp map of activation at 400 ms across the EEG electrode montage, and activation ($\times 10^{-3}$) versus time (0 to 600 ms), with time courses labeled P3, Pz, C3, FC4, and CP2.]

Nonparametric surface priors
- So far we've been using radial basis functions to represent sources.
- Can we construct a more flexible surface representation?
- Bayesian nonparametric approach: place a prior over surfaces that allows a wide range of shapes but prefers smooth ones.
- Specifically, we use Gaussian processes (GPs) as a surface prior.

Gaussian processes
- Definition: a collection of random variables, any finite subset of which is jointly Gaussian-distributed.
- Draws from a GP are functions (e.g., over space or time).
- The parameters of the GP's covariance function (e.g., its length-scale) control the smoothness of the random functions.
[Figure from Rasmussen and Williams (2006).]

Gaussian process TLSA
- We use the GP prior to model spatial smoothness in the residuals, i.e., what remains after subtracting the parametric TLSA predictions from the image. This allows us to represent sources that are both anatomically localized and irregularly shaped.
- We've applied this to retinotopic orientation maps in V1 (data courtesy of Jeremy Freeman at NYU).

GP-TLSA: example source
[Figure: Slices 1, 2, and 3. Top: radial basis function source. Bottom: Gaussian process source.]

GP-TLSA: predicted retinotopy map
[Figure: each circle represents a data point; Gaussian process predictions are plotted at a finer resolution.]

Quantitative assessment
- Reconstruction: given a set of covariates, how well can we predict the neural data?
- Decoding: given neural data, how well can we predict the covariates?
- All analyses used separate training and test sets (i.e., cross-validation).
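
To make the role of the GP's covariance parameters concrete, the following plain-Python sketch (standard library only) draws zero-mean GP samples over a small 2-D grid by Cholesky-factoring the covariance matrix. The squared-exponential kernel, the diagonal jitter, and the helper names (`se_kernel`, `cholesky`, `sample_gp`, `roughness`) are assumptions for illustration; the slides do not say which covariance function GP-TLSA uses. In GP-TLSA the prior would be placed on the residual image rather than on the raw data.

```python
import math
import random


def se_kernel(a, b, length_scale, variance=1.0):
    """Squared-exponential covariance: variance * exp(-||a - b||^2 / (2 * length_scale^2))."""
    sq = sum((x - y) ** 2 for x, y in zip(a, b))
    return variance * math.exp(-sq / (2.0 * length_scale ** 2))


def cholesky(A):
    """Lower-triangular L with L L^T = A, for symmetric positive-definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def sample_gp(coords, length_scale, variance=1.0, jitter=1e-6, seed=0):
    """One draw f ~ N(0, K) at the given coordinates, computed as f = L @ eps."""
    rng = random.Random(seed)
    n = len(coords)
    # Small diagonal jitter keeps K numerically positive definite for long length-scales.
    K = [[se_kernel(coords[i], coords[j], length_scale, variance)
          + (jitter if i == j else 0.0) for j in range(n)] for i in range(n)]
    L = cholesky(K)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [sum(L[i][k] * eps[k] for k in range(i + 1)) for i in range(n)]


def roughness(f, side):
    """Mean squared difference between horizontally adjacent points on a side x side grid."""
    diffs = [(f[i * side + j + 1] - f[i * side + j]) ** 2
             for i in range(side) for j in range(side - 1)]
    return sum(diffs) / len(diffs)


side = 6
coords = [(i, j) for i in range(side) for j in range(side)]
smooth = sample_gp(coords, length_scale=4.0)  # long length-scale: smooth surface
rough = sample_gp(coords, length_scale=0.5)   # short length-scale: rough surface
print(roughness(smooth, side), roughness(rough, side))
```

Both draws use the same seed, and hence the same standard-normal vector, so any difference in roughness comes from the length-scale alone; the short length-scale sample should show a much larger mean squared difference between neighboring points.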

GP-TLSA: reconstruction results on orientation maps
[Figure: reconstruction error ($\times 10^5$) for MAP, GP, and GNB.]
- MAP: maximum a posteriori fitting of TLSA with RBF sources
- GP (GP-TLSA): TLSA with GP sources
- GNB: Gaussian naive Bayes

GP-TLSA: classification results on orientation maps
[Figure: cross-entropy error for MAP, GP, and GNB.]

Conclusions
- TLSA was designed to capture several ideas about the statistical structure of fMRI data. Along the way, it supports many tasks: classification, regression, reconstruction, and hypothesis testing.
- The beta process (spike and slab) prior allows us to infer automatically how many sources to use.
- Extension to EEG: spatiotemporal sources.
- Gaussian process priors for flexible surface models.

Thanks!
- Ken Norman (Princeton)
- David Blei (Princeton)
- Minqi Jiang (Princeton)
- Abu Saparov (Princeton)
- Per Sederberg (Ohio State)
- Jeremy Freeman (NYU)

Funding: NSF CRCNS grant IIS-1009542