Classification of Handwritten Digits Using an Artificial Neural Network
Michael Mason


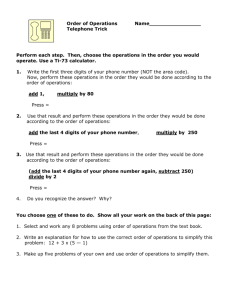

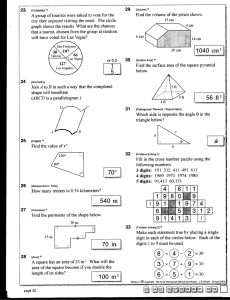

Colorado School of Mines
April 20, 2015
Michael Mason, A Tale of Ten Digits

The Problem | The Solution(s) | The Problem Remembered

A Solution - The ANN

Results

| Group | Method | Test Classification Error |
|---|---|---|
| Belongie et al. | K-NN | 0.63% |
| Meier et al. | Committee of 25 NN | 0.39% |
| Ranzato et al. | Large CNN | 0.39% |
| LeCun et al. | Virtual SVM | 0.80% |

Table 1: Selection of Results

Description

Logistic Classifier
■ Determines the probability of each digit for a given input.
■ The input x is mapped by the softmax function (with weight matrix W and bias vector b) to a probability distribution over the digits:

$$P(Y = i \mid x, W, b) = \mathrm{softmax}_i(Wx + b) = \frac{e^{W_i x + b_i}}{\sum_j e^{W_j x + b_j}} \tag{1}$$

■ The digit i with the highest probability is the output of the classifier.

Operating on raw input data, a logistic classifier can achieve ~93% classification accuracy.

Figure 1: Learned projection to the zero digit
Figure 2: Learned projection to the one digit

Hidden Layer
Given enough hidden units, a one-hidden-layer NN is a universal function approximator. It can be formally expressed as a function $f : \mathbb{R}^{\text{input size}} \to \mathbb{R}^{\text{output size}}$:

$$f(x) = G\left(b^{(2)} + W^{(2)}\, s\left(b^{(1)} + W^{(1)} x\right)\right) \tag{2}$$

where G is the output function (e.g., the softmax of Eq. (1)).
■ Added complexity allows for general function fitting
■ Uses a nonlinear "activation function" s to transform the inputs
■ Layers can be chained for increased complexity
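Equations (1) and (2) can be made concrete with a small forward-pass sketch. It is plain Python rather than Theano, so it runs anywhere; the helper names (`matvec`, `logistic_classifier`, `mlp_forward`, `predict`) are hypothetical, and choosing tanh for the activation s and softmax for the output function G are assumptions, not details taken from the slides.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Return the matrix-vector product W x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def softmax(z: Vector) -> Vector:
    """Eq. (1): exp(z_i) / sum_j exp(z_j), computed stably."""
    m = max(z)  # shifting by max(z) avoids overflow and leaves the result unchanged
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def logistic_classifier(x: Vector, W: Matrix, b: Vector) -> Vector:
    """P(Y = i | x, W, b) for every class i, i.e. softmax(W x + b)."""
    return softmax([v + bi for v, bi in zip(matvec(W, x), b)])


def mlp_forward(x: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """Eq. (2), assuming s = tanh and G = softmax: a hidden layer feeding a logistic classifier."""
    h = [math.tanh(v + c) for v, c in zip(matvec(W1, x), b1)]
    return logistic_classifier(h, W2, b2)


def predict(probs: Vector) -> int:
    """The classifier's output: the index (digit) with the highest probability."""
    return max(range(len(probs)), key=lambda i: probs[i])
```

For example, with W the 2 x 2 identity, b = 0 and x = (2, 1), `logistic_classifier` returns probabilities proportional to (e^2, e^1), so `predict` picks class 0. The hidden layer in `mlp_forward` is simply a learned nonlinear re-encoding of x that the same logistic classifier then reads.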
Back Propagation
■ Maximize the likelihood L(x, W, b; Y = i) = P(Y = i | x, W, b) of the correct label i (in practice, by minimizing the negative log-likelihood over the training set)
■ Use stochastic gradient descent to update W and b, as well as the hidden-layer weights and biases
■ Compute gradients at the output layer first and propagate them backwards through the network
■ The step size along the gradient is set by a learning rate

Known Issues
■ Prone to over-fitting
■ Can get stuck in local minima
■ Does not exploit the structure of the data

Implementation
Utilized Theano:
■ Theano allows for manipulation of symbolic variables
■ Many useful, optimized functions for machine learning, such as softmax, gradient, etc.
■ GPU utilization through CUDA
Tutorial at: http://deeplearning.net/tutorial/

My Results
Specifics
■ 150 hidden-layer nodes
■ Initial learning rate of 0.02, decaying
■ Batch size of 15
Achieved a classification accuracy of 98.1%

Limitations
Figure 3: Mystery Digit (it's an eight)
■ Difficult to ask computers to recognize what humans can't (without more information)
■ Lack of context clues

Figure 4: Note number of chainz

(Immediate) Future Work
To Do - Increased Robustness
■ Deepen the network
■ Implement noise & dropout
■ Improve on SGD

Works Cited
1. D. Ciresan, U. Meier, and J. Schmidhuber, "Multi-column deep neural networks for image classification," in 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3642-3649. IEEE, 2012.
2. R. Ghosh and M. Ghosh, "An Intelligent Offline Handwriting Recognition System Using Evolutionary Neural Learning Algorithm and Rule Based Over Segmented Data Points," Journal of Research and Practice in Information Technology, vol. 37, no. 1, pp. 73-87, Feb. 2005.
3. Y. LeCun, L. Bottou, G. Orr, and K. Muller, "Efficient BackProp," in G. Orr and K. Muller (Eds.), Neural Networks: Tricks of the Trade, Springer, 1998.
4. D. W. Opitz and J. W. Shavlik, "Generating accurate and diverse members of a neural-network ensemble," in Advances in Neural Information Processing Systems, MIT Press, 1996, pp. 535-541.

Thank you for your time. Questions?
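As a backup to the Back Propagation and My Results slides, here is a minimal sketch of mini-batch SGD with back-propagation for the one-hidden-layer network of Eq. (2). Maximizing the likelihood is done, equivalently, by minimizing the mean negative log-likelihood. This is plain Python, not the Theano code behind the reported results; the function names, the weight initialization, tanh as the activation, and the 1/(1 + decay * epoch) learning-rate schedule are all assumptions (the slides only say the rate decays). The defaults mirror the stated hyperparameters: 150 hidden units, initial learning rate 0.02, batch size 15.

```python
import math
import random


def softmax(z):
    m = max(z)  # shift by the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def init_params(n_in, n_hidden, n_out, rng):
    """Uniform hidden weights in +/- sqrt(6 / (n_in + n_hidden)); zero output layer (assumed scheme)."""
    bound = math.sqrt(6.0 / (n_in + n_hidden))
    W1 = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [[0.0] * n_hidden for _ in range(n_out)]
    b2 = [0.0] * n_out
    return W1, b1, W2, b2


def forward(x, W1, b1, W2, b2):
    """Eq. (2) with s = tanh and G = softmax; returns hidden activations and class probabilities."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + c) for row, c in zip(W1, b1)]
    z = [sum(w * hj for w, hj in zip(row, h)) + c for row, c in zip(W2, b2)]
    return h, softmax(z)


def nll(data, params):
    """Mean negative log-likelihood -log P(Y = y | x) over (x, y) pairs."""
    return sum(-math.log(forward(x, *params)[1][y]) for x, y in data) / len(data)


def sgd_epoch(data, params, lr, batch_size, rng):
    """One pass of mini-batch SGD; updates the parameter lists in place."""
    W1, b1, W2, b2 = params
    data = data[:]
    rng.shuffle(data)
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        gW1 = [[0.0] * len(row) for row in W1]
        gb1 = [0.0] * len(b1)
        gW2 = [[0.0] * len(row) for row in W2]
        gb2 = [0.0] * len(b2)
        for x, y in batch:
            h, p = forward(x, W1, b1, W2, b2)
            # Output layer first: d(NLL)/dz = p - onehot(y)
            d2 = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
            for k, dk in enumerate(d2):
                gb2[k] += dk
                for j, hj in enumerate(h):
                    gW2[k][j] += dk * hj
            # Propagate backwards through tanh, using tanh'(a) = 1 - tanh(a)^2
            d1 = [(1.0 - hj * hj) * sum(W2[k][j] * d2[k] for k in range(len(d2)))
                  for j, hj in enumerate(h)]
            for j, dj in enumerate(d1):
                gb1[j] += dj
                for i, xi in enumerate(x):
                    gW1[j][i] += dj * xi
        # Gradient step with the batch-averaged gradients
        n = len(batch)
        for W, b, gW, gb in ((W2, b2, gW2, gb2), (W1, b1, gW1, gb1)):
            for r in range(len(W)):
                b[r] -= lr * gb[r] / n
                for c in range(len(W[r])):
                    W[r][c] -= lr * gW[r][c] / n


def train(data, n_in, n_out, n_hidden=150, lr0=0.02, decay=0.01,
          batch_size=15, epochs=10, seed=0):
    """Defaults follow the slides (150 hidden units, lr 0.02, batch 15); the decay schedule is assumed."""
    rng = random.Random(seed)
    params = init_params(n_in, n_hidden, n_out, rng)
    for epoch in range(epochs):
        lr = lr0 / (1.0 + decay * epoch)  # assumed 1/t-style decay
        sgd_epoch(data, params, lr, batch_size, rng)
    return params
```

For the digit images, each x would be a flattened pixel vector and y a label from 0 to 9. A pure-Python loop like this is far too slow for a full training set, which is the gap that Theano's symbolic gradients and CUDA support fill in the actual implementation.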