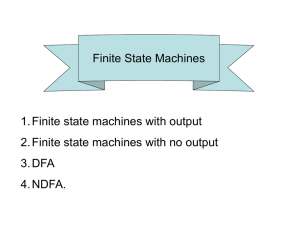


Lecture # 17

Session 2003

Finite-State Techniques for Speech Recognition

• motivation

• definitions

– finite-state acceptor (FSA)

– finite-state transducer (FST)

– deterministic FSA/FST

– weighted FSA/FST

• operations

– closure, union, concatenation

– intersection, composition

– epsilon removal, determinization, minimization

• on-the-fly implementation

• FSTs in speech recognition: recognition cascade

• research systems within SLS impacted by FST framework

• conclusion

6.345

Automatic Speech Recognition Finite-State Techniques [Hetherington] 1

Motivation

• many speech recognition components/constraints are finite-state

– language models (e.g., n-grams, on-the-fly CFGs)

– lexicons

– phonological rules

– N-best lists

– word graphs

– recognition paths

• should use same representation and algorithms for all

– consistency

– make powerful algorithms available at all levels

– flexibility to combine or factor in unforeseen ways

• AT&T [Pereira, Riley, Ljolje, Mohri, et al.]


Finite-State Acceptor (FSA)

[Figure: four-state FSA (states 0–3) with transitions labeled a, b, and ε; accepts (a | b)* ab]

• definition:

– finite number of states

– one initial state

– at least one final state

– transition labels:

∗ label from alphabet Σ must match input symbol

∗ ε consumes no input

• accepts a regular language
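The definition above can be sketched as a small simulator. This is a minimal illustration, not the lecture's implementation: the arc table is a hypothetical machine for (a | b)* ab (not a transcription of the slide's figure), and `EPS`, `ARCS`, `eps_closure`, and `accepts` are names chosen here.

```python
EPS = None  # assumed marker for an ε label (consumes no input)

# Hypothetical NFA for (a|b)* ab: state -> list of (label, next_state).
ARCS = {
    0: [("a", 0), ("b", 0), (EPS, 1)],
    1: [("a", 2)],
    2: [("b", 3)],
}
INITIAL = 0
FINALS = {3}


def eps_closure(states):
    """Return all states reachable from `states` using only ε arcs."""
    seen = set(states)
    stack = list(states)
    while stack:
        s = stack.pop()
        for label, dst in ARCS.get(s, []):
            if label is EPS and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen


def accepts(symbols):
    """Track the set of live states; accept if any is final at the end."""
    current = eps_closure({INITIAL})
    for sym in symbols:
        moved = {dst
                 for s in current
                 for label, dst in ARCS.get(s, [])
                 if label == sym}
        current = eps_closure(moved)
    return bool(current & FINALS)


print(accepts("abab"), accepts("ba"))
```

Tracking a set of live states is what lets the simulator handle ε arcs and non-determinism without backtracking.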


Finite-State Transducer (FST)

[Figure: four-state FST (states 0–3) with transitions b:a, a:b, ε:ε, a:ε, and ε:a; e.g., (aba)a → (bab)a]

• definition, like FSA except:

– transition labels:

∗ pairs of input:output labels

∗ ε on input consumes no input

∗ ε on output produces no output

• relates input sequences to output sequences (possibly ambiguous)

• FST with labels x:x is an FSA


Finite-State Transducer (FST)

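• final states can have outputs, but we use transitions instead

[Figure: 0 → 1 on a:b with final output b at state 1 is rewritten as 0 → 1 on a:b followed by 1 → 2 on ε:b]

• transitions can have multiple labels, but we split them up

[Figure: transitions carrying multi-symbol labels (a,b:a and c:b,c, states 0–2) are split into a chain of single-symbol transitions through states 0–3]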


Weights

[Figure: weighted acceptor with states 0, 1, 2; transitions a/0.6 and a/0.4 from 0 to 1, b/0.7 and b/0.3 from 1 to 2; final state 2 with weight 0.5]

• transitions and final states can have weights (costs or scores)

• weight semirings (⊕, ⊗, 0, 1), ⊕ ∼ parallel, ⊗ ∼ series:

– 0 ⊕ x = x, 1 ⊗ x = x, 0 ⊗ x = 0, 0 ⊗ 1 = 0

– (+, ×, 0, 1) ∼ probability (sum parallel, multiply series): the example reduces to a/1, b/1, final weight 0.5, so ab has weight 0.5

– (min, +, ∞, 0) ∼ −log probability (best of parallel, sum series): the example reduces to a/0.4, b/0.3, final weight 0.5, so ab has weight 1.2
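The two semirings can be checked on the slide's weights with a short sketch. The `Semiring` class and `string_weight` helper are illustrative names assumed here, and the helper only handles a linear machine in which parallel arcs join the same pair of states, as in the example.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Callable, List


@dataclass(frozen=True)
class Semiring:
    plus: Callable[[float, float], float]   # ⊕, combines parallel arcs
    times: Callable[[float, float], float]  # ⊗, combines arcs in series
    zero: float                             # identity for ⊕
    one: float                              # identity for ⊗


PROB = Semiring(lambda x, y: x + y, lambda x, y: x * y, 0.0, 1.0)
TROPICAL = Semiring(min, lambda x, y: x + y, float("inf"), 0.0)


def string_weight(sr: Semiring, parallel_arcs: List[List[float]],
                  final_weight: float) -> float:
    """Weight of one string through a linear machine.

    parallel_arcs[i] holds the weights of the parallel arcs that read
    the i-th symbol; they are ⊕-summed, then ⊗-multiplied in series.
    """
    total = sr.one
    for arcs in parallel_arcs:
        step = reduce(sr.plus, arcs, sr.zero)
        total = sr.times(total, step)
    return sr.times(total, final_weight)


# Weights from the slide: a/0.6, a/0.4, then b/0.7, b/0.3, final 0.5.
AB_ARCS = [[0.6, 0.4], [0.7, 0.3]]
print(string_weight(PROB, AB_ARCS, 0.5))      # approximately 0.5
print(string_weight(TROPICAL, AB_ARCS, 0.5))  # approximately 1.2
```

Swapping the semiring object is the only change needed to move between summing probabilities and finding the best cost, which is the point of keeping the algorithms semiring-generic.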


Deterministic FSA or FST

• input sequence uniquely determines state sequence

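• no ε transitions

• at most one transition per label for all states

[Figure: a non-deterministic acceptor (NFA, states 0–1, with ε and a transitions) contrasted with a deterministic acceptor (DFA, states 0–2, a transitions only)]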


Operations

• constructive operations:

– closure A* and A+

– union A ∪ B

– concatenation AB

– complementation Ā (FSA only)

– intersection A ∩ B (FSA only)

– composition A ◦ B (FST only, FSA ≡ ∩)

• identity operations (optimization):

– epsilon removal

– determinization

– minimization

Closure: A+, A*

[Figure: acceptor A (states 0–2, transitions a and b) and the acceptors for A+ and A*, each built from A by adding ε transitions]


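Union: A ∪ B

parallel combination, e.g.,

[Figure: A (states 0–2, transitions a, b, ε) ∪ B (states 0–1, transitions c, d) = acceptor with states 0–5 in which ε transitions from a new initial state lead into copies of A and B]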


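Concatenation: AB

serial combination, e.g.,

[Figure: A (states 0–2, transitions a, b) followed by B (states 0–1, transitions c, d) = acceptor with states 0–4 in which ε transitions connect A to a copy of B]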


FSA Intersection: A ∩ B

• output states associated with input state pairs (a, b)

• output state is final only if both a and b are final

• transition with label x only if both a and b have x transition

• weights combined with ⊗

[Figure: x*(x | y) ∩ x* y z* = x* y; the result has states (0,0), (1,0), and (1,1)]
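The pair-state construction can be sketched as follows. This is a minimal illustration under stated assumptions: both acceptors are ε-free, weights are costs combined with ⊗ = + (the (min, +) semiring), and the dictionary layout and the `intersect` name are chosen here, not taken from the lecture's tools. The two machines are one reading of the slide's example, x*(x | y) and x* y z*.

```python
from collections import deque


def intersect(a, b):
    """Intersect two ε-free weighted acceptors.

    Each acceptor is a dict with keys 'start', 'finals' (a set), and
    'arcs' mapping state -> list of (label, weight, next_state).
    Only pair states reachable from the start pair are built.
    """
    start = (a["start"], b["start"])
    arcs, finals = {}, set()
    queue, seen = deque([start]), {start}
    while queue:
        state = queue.popleft()
        p, q = state
        arcs[state] = []
        if p in a["finals"] and q in b["finals"]:
            finals.add(state)  # final only if both components are final
        for la, wa, da in a["arcs"].get(p, []):
            for lb, wb, db in b["arcs"].get(q, []):
                if la == lb:  # both machines must have the same label
                    dst = (da, db)
                    arcs[state].append((la, wa + wb, dst))  # ⊗ is + here
                    if dst not in seen:
                        seen.add(dst)
                        queue.append(dst)
    return {"start": start, "finals": finals, "arcs": arcs}


# x*(x|y): x self-loop on 0, then x or y to final state 1.
A = {"start": 0, "finals": {1},
     "arcs": {0: [("x", 0.0, 0), ("x", 0.0, 1), ("y", 0.0, 1)]}}
# x* y z*: x self-loop on 0, y to final state 1, z self-loop on 1.
B = {"start": 0, "finals": {1},
     "arcs": {0: [("x", 0.0, 0), ("y", 0.0, 1)], 1: [("z", 0.0, 1)]}}

C = intersect(A, B)
print(sorted(C["arcs"]), C["finals"])
```

State (1,0) is reachable but has no outgoing transitions and is not final, so it is a dead end that a later trimming pass could remove.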


FST Composition: A ◦ B

• output states associated with input state pairs (a, b)

• output state is final only if both a and b are final

• transition with label x:y only if a has x:α and b has α:y transition

• weights combined with ⊗

[Figure: lexicon FST (states 0–2; IT:/ih/, ε:/t/) ◦ phonological FST (states 0–1; /ih/:[ih], /t/:[t], /t/:[tcl], ε:[t]) = FST with states (0,0), (1,0), (2,0), (2,1) and transitions IT:[ih], ε:[tcl], ε:[t]]

• (words → phonemes) ◦ (phonemes → phones) = (words → phones)
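A naive, unweighted version of composition can be sketched as below. The assumptions are loud ones: the data layout, the `EPS` marker, and the `compose` name are invented for illustration; the two machines are one reading of the slide's IT example; and ε is handled without the path filter a production implementation would use, so redundant paths are possible when both machines contain ε.

```python
from collections import deque

EPS = "<eps>"  # assumed spelling for the ε label


def compose(a, b):
    """Compose FSTs a and b (a's output tape feeds b's input tape).

    Each FST is a dict with 'start', 'finals' (a set), and 'arcs'
    mapping state -> list of (input_label, output_label, next_state).
    """
    start = (a["start"], b["start"])
    arcs, finals = {}, set()
    queue, seen = deque([start]), {start}

    def add(src, ilabel, olabel, dst):
        arcs[src].append((ilabel, olabel, dst))
        if dst not in seen:
            seen.add(dst)
            queue.append(dst)

    while queue:
        state = queue.popleft()
        p, q = state
        arcs[state] = []
        if p in a["finals"] and q in b["finals"]:
            finals.add(state)
        for ia, oa, pa in a["arcs"].get(p, []):
            if oa == EPS:
                add(state, ia, EPS, (pa, q))  # A outputs ε: B holds
            else:
                for ib, ob, qb in b["arcs"].get(q, []):
                    if ib == oa:  # A's output matches B's input
                        add(state, ia, ob, (pa, qb))
        for ib, ob, qb in b["arcs"].get(q, []):
            if ib == EPS:
                add(state, EPS, ob, (p, qb))  # B reads ε: A holds
    return {"start": start, "finals": finals, "arcs": arcs}


# Words -> phonemes (lexicon entry for IT).
LEX = {"start": 0, "finals": {2},
       "arcs": {0: [("IT", "/ih/", 1)], 1: [(EPS, "/t/", 2)]}}
# Phonemes -> phones: /t/ is either [t], or [tcl] followed by [t].
PHON = {"start": 0, "finals": {0},
        "arcs": {0: [("/ih/", "[ih]", 0), ("/t/", "[t]", 0),
                     ("/t/", "[tcl]", 1)],
                 1: [(EPS, "[t]", 0)]}}

WP = compose(LEX, PHON)
for src in sorted(WP["arcs"]):
    print(src, WP["arcs"][src])
```

The result maps IT to either [ih] [t] or [ih] [tcl] [t], which is the words → phones relation named above.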


FST Composition: ε Interaction

• A output ε allows B to hold

• B input ε allows A to hold

[Figure: A (states 0–1, transition a:ε) ◦ B (states 0–1, transition ε:b) = FST with states (0,0), (1,0), (0,1), (1,1) and transitions a:ε, ε:b, and a:b]

• multiple paths typically filtered (resulting in dead end states)


FST Composition: Parsing

• language model from JSGF grammar compiled into on-the-fly recursive transition network (RTN) transducer G:

<top> = <forecast> | <conditions> | ... ;

<forecast> = [what is the] forecast for <city> {FORECAST};

<city> = boston [massachusetts] {BOS}

| chicago [illinois] {ORD};

• “what is the forecast for boston” ◦ G →

– BOS FORECAST (output tags only)

– <forecast> what is the forecast for <city> boston </city> </forecast> (bracketed parse)


FST Composition Summary

• very powerful operation

• can implement other operations:

– intersection

– application of rules/transformations

– instantiation

– dictionary lookup

– parsing


Epsilon Removal (Identity)

• required for determinization

• compute ε-closure for each state: set of states reachable via ε*

[Figure: acceptor with states 0–2 and transitions a, b, c, ε ⇒ equivalent ε-free acceptor with states 0–1]

• can dramatically increase number of transitions (copies)
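An unweighted sketch of the closure-based procedure follows. The machine is a hypothetical one made up for illustration (not the slide's figure), and `EPS`, `eps_closure`, and `remove_epsilons` are names assumed here.

```python
EPS = "<eps>"  # assumed spelling for the ε label


def eps_closure(arcs, state):
    """States reachable from `state` via zero or more ε transitions."""
    seen, stack = {state}, [state]
    while stack:
        s = stack.pop()
        for label, dst in arcs.get(s, []):
            if label == EPS and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen


def remove_epsilons(arcs, finals, states):
    """Return (arcs, finals) of an equivalent ε-free acceptor.

    Each state receives a copy of every non-ε arc leaving any state in
    its ε-closure, and becomes final if its closure contains a final.
    """
    new_arcs, new_finals = {}, set()
    for s in states:
        closure = eps_closure(arcs, s)
        out = []
        for r in sorted(closure):
            for label, dst in arcs.get(r, []):
                if label != EPS and (label, dst) not in out:
                    out.append((label, dst))
        new_arcs[s] = out
        if closure & finals:
            new_finals.add(s)
    return new_arcs, new_finals


# Hypothetical acceptor: 0 -a-> 1, 1 -ε-> 2, 1 -b-> 1, 2 -c-> 2.
NFA_ARCS = {0: [("a", 1)], 1: [(EPS, 2), ("b", 1)], 2: [("c", 2)]}
NEW_ARCS, NEW_FINALS = remove_epsilons(NFA_ARCS, {2}, [0, 1, 2])
print(NEW_ARCS, NEW_FINALS)
```

State 1 ends up with its own copy of the c transition that originally left state 2, which is the kind of copying that can inflate the transition count.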


FSA Determinization (Identity)

• subset construction

– output states associated with subsets of input states

– treat a subset as a superposition of its states

• worst case is exponential (2^N)

• locally optimal: each state has at most |Σ| transitions

[Figure: non-deterministic acceptor (transitions a, b) ⇒ equivalent deterministic acceptor with states 0–2]

• weights: subsets of (state, weight)

– weights might be delayed

– transition weight is ⊕ of subset weights

– worst case is infinite (not common)
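The unweighted subset construction can be sketched in a few lines. The input is assumed to be ε-free, the example NFA for (a | b)* ab is hypothetical, and the `determinize` name and data layout are chosen here for illustration; weights and their delay are not modeled.

```python
from collections import deque


def determinize(arcs, start, finals):
    """Subset construction for an ε-free, unweighted acceptor.

    arcs maps state -> list of (label, next_state). Each state of the
    result is a frozenset of input states (a superposition of them).
    """
    start_set = frozenset([start])
    dfa_arcs, dfa_finals = {}, set()
    queue, seen = deque([start_set]), {start_set}
    while queue:
        subset = queue.popleft()
        if subset & finals:
            dfa_finals.add(subset)
        # Group all destinations reachable from the subset by label.
        by_label = {}
        for s in subset:
            for label, dst in arcs.get(s, []):
                by_label.setdefault(label, set()).add(dst)
        dfa_arcs[subset] = {}
        for label, dsts in by_label.items():
            target = frozenset(dsts)
            dfa_arcs[subset][label] = target  # one arc per label
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return dfa_arcs, start_set, dfa_finals


# Hypothetical NFA for (a|b)* ab: two a transitions leave state 0.
NFA = {0: [("a", 0), ("b", 0), ("a", 1)], 1: [("b", 2)]}
DFA_ARCS, DFA_START, DFA_FINALS = determinize(NFA, 0, {2})
print(len(DFA_ARCS), DFA_FINALS)
```

Here three subsets are reachable, far below the 2^N worst case; only subsets actually reached from the start state are ever built.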


FST Determinization (Identity)

• subsets of (state, output*, weight)

• outputs and weights might be delayed

• transition output is longest common prefix of subset outputs

[Figure: FST with 0 → 1 on /t/:TWO, 1 → 3 on /uw/:ε, 0 → 2 on /t/:TEA, 2 → 3 on /iy/:ε ⇒ determinized FST with (0,ε) → {(1,TWO), (2,TEA)} on /t/:ε, then → (3,ε) on /uw/:TWO or /iy/:TEA]

• worst case is infinite (not uncommon due to ambiguity)


FST Ambiguity

• input sequence maps to more than one output (e.g., homophones)

• finite ambiguity (delayed to output states):

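[Figure: FST with paths a:x b:ε and a:y b:ε (states 0–3) ⇒ determinized FST (states 0–3) with a:ε, then b:ε, then ε:x and ε:y transitions into the final state]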


FST Ambiguity

• cycles (e.g., closure) can produce infinite ambiguity

• infinite ambiguity (cannot be determinized):

[Figure: FST with states 0–1 and transitions a:x, a:y, b:ε]

• a solution: our implementation forces outputs at #, a special ε

[Figure: FST with transitions a:x, a:y, b:ε, #:ε (states 0–2) ⇒ determinized FST with transitions a:ε, b:ε, #:x, #:y (states 0–2)]

Minimization (Identity)

• minimal ≡ minimal number of states

• minimal ≡ deterministic with minimal number of states

• merge equivalent states, will not increase size

• cyclic O(N log N), acyclic O(N)

[Figure: lexicon FST mapping phoneme sequences to BOSTON, BOSNIA, and AUSTIN (16 states, 0–15) and its minimized equivalent (11 states, 0–10)]


Example Lexicon

[Figure: lexicon FST for 17 words beginning with z (from zeal to zinc): state 0 has one ε:ε transition to a separate letter-by-letter path for each word; the first transition of each path outputs the word (e.g., z:zinc) and the remaining transitions output ε; 122 states (0–121)]


Example Lexicon: ε Removed

[Figure: the same lexicon after ε removal: the 17 word-labeled transitions (z:zinc, z:zillions, z:zigzag, and so on) leave state 0 directly; 105 states (0–104)]


Example Lexicon: Determinized

[Figure: the same lexicon after determinization: all words share the initial z:ε transition and their common prefixes; each word output is delayed to the transition where the word becomes unique (e.g., n:zinc, s:zest, ε:zero); 52 states (0–51)]

• lexical tree

• sharing at beginning of words: can prune many words at once


Example Lexicon: Minimized

[Figure: the same lexicon after minimization; 33 states (0–32)]

• sharing at the end of words


On-The-Fly Implementation

• lazy evaluation: generate only relevant states/transitions

• enables use of infinite-state machines (e.g., CFG)

• on-the-fly:

– composition, intersection

– union, concatenation, closure

– ε removal, determinization

• not on-the-fly:

– trimming dead states

– minimization

– reverse
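Lazy evaluation can be illustrated with an intersection whose states are computed only when a search asks for them. Everything here is an illustrative assumption: the small `start` / `is_final` / `arcs` interface, the class names, and the choice of a^n b^n as a stand-in for an infinite-state (context-free) machine.

```python
class Linear:
    """Finite acceptor for exactly one string."""

    def __init__(self, symbols):
        self.symbols = list(symbols)

    def start(self):
        return 0

    def is_final(self, s):
        return s == len(self.symbols)

    def arcs(self, s):
        if s < len(self.symbols):
            yield self.symbols[s], s + 1


class AnBn:
    """Infinite-state acceptor for a^n b^n; states are (phase, count)."""

    def start(self):
        return ("a", 0)

    def is_final(self, s):
        return s[1] == 0

    def arcs(self, s):
        phase, n = s
        if phase == "a":
            yield "a", ("a", n + 1)   # count one more pending b
        if n > 0:
            yield "b", ("b", n - 1)   # consume one pending b


class LazyIntersection:
    """Pair-state intersection that expands a state only on demand."""

    def __init__(self, a, b):
        self.a, self.b = a, b
        self.cache = {}

    def start(self):
        return (self.a.start(), self.b.start())

    def is_final(self, s):
        return self.a.is_final(s[0]) and self.b.is_final(s[1])

    def arcs(self, s):
        if s not in self.cache:
            p, q = s
            self.cache[s] = [(la, (da, db))
                             for la, da in self.a.arcs(p)
                             for lb, db in self.b.arcs(q)
                             if la == lb]
        return self.cache[s]


def accepts_any(machine):
    """Depth-first search for any reachable final state."""
    stack, seen = [machine.start()], {machine.start()}
    while stack:
        s = stack.pop()
        if machine.is_final(s):
            return True
        for _, dst in machine.arcs(s):
            if dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return False


print(accepts_any(LazyIntersection(Linear("aabb"), AnBn())))
print(accepts_any(LazyIntersection(Linear("aab"), AnBn())))
```

`AnBn` could never be expanded in full, but intersecting it with a finite input touches only the handful of pair states that the input can actually reach.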


FSTs in Speech Recognition

• cascade of FSTs:

(S ◦ A) ◦ (C ◦ P ◦ L ◦ G), where R = C ◦ P ◦ L ◦ G

– S: acoustic segmentation*

– A: application of acoustic models*

– C: context-dependent relabeling (e.g., diphones, triphones)

– P: phonological rules

– L: lexicon

– G: grammar/language model (e.g., n-gram, finite-state, RTN)


FSTs in Speech Recognition

• in practice:

– S ◦ A is acoustic segmentation with on-demand model scoring

– C ◦ P ◦ L ◦ G: precomputed and optimized or expanded on the fly

– composition S ◦ A with C ◦ P ◦ L ◦ G computed on demand during forward Viterbi search

– might use multiple passes, perhaps with different G

• advantages:

– forward search sees a single FST R = C ◦ P ◦ L ◦ G, doesn’t need special code for language models, lexical tree copying, etc.

– can be very fast

– easy to do cross-word context-dependent models


N-gram Language Model (G): Bigram

[Figure: bigram FST with one state per word history (in, Boston, Nome) and a back-off state (*); weighted word transitions in/1.2, in/3.3, in/8.7, Boston/2.8, Boston/4.3, Nome/6.3 and ε back-off transitions ε/8.2, ε/7.2, ε/3.2]

• each distinct word history has its own state

• direct transitions for each existing n-gram

• ε transitions to back-off state (*), ε removal undesirable


Phonological Rules ( P )

• segmental system needs to match explicit segments

• ordered rules of the form:

{V SV} b {V l r w} => bcl [b] ;

{m} b {} => [bcl] b ;

{} b {} => bcl b ;

{s} s {} => [s] ;

{} s {} => s ;

• rule selection deterministic, rule replacement may be ambiguous

• compile rules into transducer P = P_l ◦ P_r

– P_l applied left-to-right

– P_r applied right-to-left


EM Training of FST Weights

• FSA A_x given set of examples x

– straightforward application of EM to train P(x)

– our tools can also train an RTN (CFG)

• FST T_{x:y} given set of example pairs x:y

– straightforward application of EM to train T_{x,y} ⇒ P(x, y)

– T_{x|y} = T_{x,y} ◦ [det(T_y)]^{−1} ⇒ P(x | y) [Bayes’ Rule]

• FST T_{x|y} within cascade S_{v|x} ◦ T_{x|y} ◦ U_z given v:z

– x = v ◦ S

– y = U ◦ z

– train T_{x|y} given x:y

• We have used these techniques to train P , L , and ( P ◦ L ) .


Conclusion

• introduced FSTs and their basic operations

• use of FSTs throughout system adds consistency and flexibility

• consistency enables powerful algorithms everywhere (write algorithms once)

• flexibility enables new and unforeseen capabilities (but enables you to hang yourself too)

• SUMMIT (Jupiter) 25% faster when converted to FST framework, yet much more flexible


References

• E. Roche and Y. Schabes (eds.), Finite-State Language Processing, MIT Press, Cambridge, 1997.

• M. Mohri, “Finite-state transducers in language and speech processing,” in Computational Linguistics, vol. 23, 1997.

• M. Mohri, M. Riley, D. Hindle, A. Ljolje, F. Pereira, “Full expansion of context-dependent networks in large vocabulary speech recognition,” in Proc. ICASSP, Seattle, 1998.
