Introduction to Simulation - Lecture 13 Convergence of Multistep Methods Jacob White

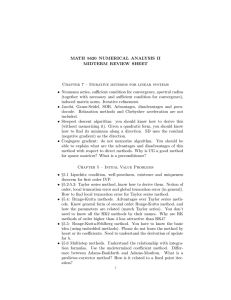

Thanks to Deepak Ramaswamy, Michal Rewienski, and Karen Veroy

Outline

Small timestep issues for multistep methods
• Local truncation error
• Selecting coefficients
• Nonconverging methods
• Stability + consistency implies convergence

Next time: investigate large timestep issues
• Absolute stability for two time-scale examples
• Oscillators

Multistep Methods - Basic Equations: General Notation

Nonlinear differential equation:

$$\frac{d}{dt} x(t) = f\left(x(t), u(t)\right)$$

k-step multistep approach:

$$\sum_{j=0}^{k} \alpha_j \hat{x}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j f\left(\hat{x}^{l-j}, u(t_{l-j})\right)$$

Here $\alpha_j$ and $\beta_j$ are the multistep coefficients, and $\hat{x}^{l-k}, \ldots, \hat{x}^{l-2}, \hat{x}^{l-1}, \hat{x}^{l}$ is the solution at the discrete time points $t_{l-k}, \ldots, t_{l-2}, t_{l-1}, t_l$ (the time discretization).

Multistep Methods - Basic Equations: Common Algorithms

Multistep equation:

$$\sum_{j=0}^{k} \alpha_j \hat{x}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j f\left(\hat{x}^{l-j}, u(t_{l-j})\right)$$

Forward-Euler approximation: $x(t_l) \approx x(t_{l-1}) + \Delta t\, f\left(x(t_{l-1}), u(t_{l-1})\right)$

FE discrete equation: $\hat{x}^{l} - \hat{x}^{l-1} = \Delta t\, f\left(\hat{x}^{l-1}, u(t_{l-1})\right)$
Multistep coefficients: $k = 1,\ \alpha_0 = 1,\ \alpha_1 = -1,\ \beta_0 = 0,\ \beta_1 = 1$

BE discrete equation: $\hat{x}^{l} - \hat{x}^{l-1} = \Delta t\, f\left(\hat{x}^{l}, u(t_{l})\right)$
Multistep coefficients: $k = 1,\ \alpha_0 = 1,\ \alpha_1 = -1,\ \beta_0 = 1,\ \beta_1 = 0$

Trap discrete equation: $\hat{x}^{l} - \hat{x}^{l-1} = \frac{\Delta t}{2}\left( f\left(\hat{x}^{l}, u(t_{l})\right) + f\left(\hat{x}^{l-1}, u(t_{l-1})\right) \right)$
Multistep coefficients: $k = 1,\ \alpha_0 = 1,\ \alpha_1 = -1,\ \beta_0 = \frac{1}{2},\ \beta_1 = \frac{1}{2}$

Multistep Methods - Basic Equations: Definitions and Observations

Multistep equation:

$$\sum_{j=0}^{k} \alpha_j \hat{x}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j f\left(\hat{x}^{l-j}, u(t_{l-j})\right)$$

1) If $\beta_0 \neq 0$ the multistep method is implicit.
2) A k-step multistep method uses k previous x's and f's.
3) A normalization is needed; $\alpha_0 = 1$ is common.
4) A k-step method has 2k + 1 free coefficients.

How does one pick good coefficients? Want the highest accuracy.

Multistep Methods - Simplified Problem for Analysis

Scalar ODE: $\frac{d}{dt} v(t) = \lambda v(t),\quad v(0) = v_0,\quad \lambda \in \mathbb{C}$

Why such a simple test problem?
• Nonlinear analysis has many unrevealing subtleties.
• Scalar is equivalent to vector for multistep methods.

Applying the multistep discretization to $\frac{d}{dt} x(t) = A x(t)$ gives

$$\sum_{j=0}^{k} \alpha_j \hat{x}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j A \hat{x}^{l-j}$$

Let $E y(t) = x(t)$, where the columns of $E$ are the eigenvectors of $A$ (this assumes $A$ is diagonalizable). Then

$$\sum_{j=0}^{k} \alpha_j \hat{y}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j E^{-1} A E\, \hat{y}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j \begin{bmatrix} \lambda_1 & & \\ & \ddots & \\ & & \lambda_n \end{bmatrix} \hat{y}^{l-j}$$

These are decoupled equations, one scalar problem per eigenvalue.

Multistep Methods - Simplified Problem for Analysis

Scalar ODE: $\frac{d}{dt} v(t) = \lambda v(t),\quad v(0) = v_0,\quad \lambda \in \mathbb{C}$

Scalar multistep formula:

$$\sum_{j=0}^{k} \alpha_j \hat{v}^{l-j} = \Delta t \sum_{j=0}^{k} \beta_j \lambda \hat{v}^{l-j}$$

Must consider ALL $\lambda \in \mathbb{C}$.

[Figure: the complex $\lambda$ plane with axes $\mathrm{Re}(\lambda)$ and $\mathrm{Im}(\lambda)$. Regions labeled: decaying solutions, oscillations, growing solutions.]

Multistep Methods - Convergence Analysis: Convergence Definition

Definition: A multistep method for solving initial value problems on [0,T] is said to be convergent if, given any initial condition,

$$\max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| \hat{v}^{l} - v(l \Delta t) \right| \to 0 \quad \text{as } \Delta t \to 0$$

[Figure: $\hat{v}^{l}$ computed with $\Delta t$ and with $\frac{\Delta t}{2}$, compared against $v_{exact}$.]

Multistep Methods - Convergence Analysis: Order-p Convergence

Definition: A multistep method for solving initial value problems on [0,T] is said to be order p convergent if, given any $\lambda$ and any initial condition,

$$\max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| \hat{v}^{l} - v(l \Delta t) \right| \leq C (\Delta t)^{p}$$

for all $\Delta t$ less than a given $\Delta t_0$.

Forward- and Backward-Euler are order 1 convergent.
Trapezoidal rule is order 2 convergent.

Multistep Methods - Convergence Analysis: Reaction Equation Example

[Figure: log-log plot of max error versus timestep for Backward-Euler, Trap rule, and Forward-Euler.]

For FE and BE, Error $\propto \Delta t$.
For Trap, Error $\propto (\Delta t)^2$.

Multistep Methods - Convergence Analysis: Two Conditions for Convergence

1) Local condition: "one step" errors are small (consistency). Typically verified using Taylor series.
2) Global condition: the single step errors do not grow too quickly (stability). All one-step (k = 1) methods are stable in this sense. Multistep (k > 1) methods require careful analysis.
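The order-p definition above can be checked numerically on the scalar test problem. The following is a minimal sketch, not code from the lecture: the helper names `max_error` and `observed_order` are hypothetical, and the values $\lambda = -1$, $v_0 = 1$, $T = 1$ are illustrative assumptions. It estimates p from how the maximum error shrinks when the timestep is halved.

```python
import math

def max_error(method, lam, v0, T, dt):
    """Integrate dv/dt = lam*v on [0, T]; return max over l of |v_hat^l - v(l*dt)|."""
    n = round(T / dt)
    v, worst = v0, 0.0
    for l in range(1, n + 1):
        if method == "FE":      # v^l = v^{l-1} + dt*lam*v^{l-1}
            v = (1 + dt * lam) * v
        elif method == "BE":    # v^l = v^{l-1} + dt*lam*v^l, solved for v^l
            v = v / (1 - dt * lam)
        elif method == "Trap":  # v^l = v^{l-1} + (dt/2)*lam*(v^l + v^{l-1})
            v = v * (1 + 0.5 * dt * lam) / (1 - 0.5 * dt * lam)
        else:
            raise ValueError(f"unknown method: {method}")
        worst = max(worst, abs(v - v0 * math.exp(lam * l * dt)))
    return worst

def observed_order(method, lam=-1.0, v0=1.0, T=1.0, dt=0.01):
    """Estimate p from err(dt) / err(dt/2), which is about 2**p for an order-p method."""
    coarse = max_error(method, lam, v0, T, dt)
    fine = max_error(method, lam, v0, T, dt / 2)
    return math.log2(coarse / fine)

if __name__ == "__main__":
    for name in ("FE", "BE", "Trap"):
        print(name, round(observed_order(name), 2))
```

Under these assumptions the estimate should come out close to 1 for FE and BE and close to 2 for Trap, matching the slopes in the reaction equation plot. Because the test problem is linear, the implicit updates can be solved in closed form; a nonlinear $f$ would need a nonlinear solve at each step.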
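Multistep Methods - Convergence Analysis: Global Error Equation

Multistep formula:

$$\sum_{j=0}^{k} \alpha_j \hat{v}^{l-j} - \Delta t \sum_{j=0}^{k} \beta_j \lambda \hat{v}^{l-j} = 0$$

The exact solution almost satisfies the multistep formula:

$$\sum_{j=0}^{k} \alpha_j v(t_{l-j}) - \Delta t \sum_{j=0}^{k} \beta_j \frac{d}{dt} v(t_{l-j}) = e^{l}$$

where $e^{l}$ is the local truncation error (LTE).

Global error: $E^{l} \equiv v(t_l) - \hat{v}^{l}$

A difference equation relates the LTE to the global error:

$$(\alpha_0 - \lambda \Delta t \beta_0) E^{l} + (\alpha_1 - \lambda \Delta t \beta_1) E^{l-1} + \cdots + (\alpha_k - \lambda \Delta t \beta_k) E^{l-k} = e^{l}$$

Convergence Analysis - Forward-Euler: Consistency for Forward-Euler

Forward-Euler definition:

$$\hat{v}^{l+1} - \hat{v}^{l} - \Delta t \lambda \hat{v}^{l} = 0$$

Substituting the exact $v(t)$, using $\frac{d}{dt} v = \lambda v$, and expanding:

$$v\left((l+1)\Delta t\right) - v(l \Delta t) - \Delta t \frac{d v(l \Delta t)}{dt} = \frac{(\Delta t)^2}{2} \frac{d^2 v(\tau)}{dt^2} = e^{l}, \qquad \tau \in \left[l \Delta t, (l+1) \Delta t\right]$$

where $e^{l}$ is the LTE and is bounded by

$$\left| e^{l} \right| \leq C (\Delta t)^2, \quad \text{where } C = 0.5 \max_{\tau \in [0,T]} \left| \frac{d^2 v(\tau)}{dt^2} \right|$$

Convergence Analysis - Forward-Euler: Global Error Equation

Forward-Euler definition:

$$\hat{v}^{l+1} = \hat{v}^{l} + \Delta t \lambda \hat{v}^{l}$$

Using the LTE definition:

$$v\left((l+1)\Delta t\right) = v(l \Delta t) + \Delta t \lambda v(l \Delta t) + e^{l}$$

Subtracting yields the global error equation:

$$E^{l+1} = (1 + \Delta t \lambda) E^{l} + e^{l}$$

Using magnitudes and the bound on $e^{l}$:

$$\left| E^{l+1} \right| \leq \left| 1 + \Delta t \lambda \right| \left| E^{l} \right| + \left| e^{l} \right| \leq \left(1 + \Delta t |\lambda|\right) \left| E^{l} \right| + C (\Delta t)^2$$

Convergence Analysis - Forward-Euler: A Helpful Bound on Difference Equations

A lemma bounding difference equation solutions:

If $\left| u^{l+1} \right| \leq (1 + \varepsilon) \left| u^{l} \right| + b$, with $u^{0} = 0$ and $\varepsilon > 0$,

then $\left| u^{l} \right| \leq \frac{e^{\varepsilon l}}{\varepsilon} b$.

To prove, first write $u^{l}$ as a power series and sum:

$$\left| u^{l} \right| \leq \sum_{j=0}^{l-1} (1 + \varepsilon)^{j} b = \frac{1 - (1 + \varepsilon)^{l}}{1 - (1 + \varepsilon)} b$$

Convergence Analysis - One-step Methods: A Helpful Bound on Difference Equations, Cont.

To finish, note $(1 + \varepsilon) \leq e^{\varepsilon} \Rightarrow (1 + \varepsilon)^{l} \leq e^{\varepsilon l}$, so

$$\left| u^{l} \right| \leq \frac{1 - (1 + \varepsilon)^{l}}{1 - (1 + \varepsilon)} b = \frac{(1 + \varepsilon)^{l} - 1}{\varepsilon} b \leq \frac{e^{\varepsilon l}}{\varepsilon} b$$

Convergence Analysis - One-step Methods: Back to Forward-Euler Convergence Analysis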
Applying the lemma with $\varepsilon = \Delta t |\lambda|$ and $b = C (\Delta t)^2$, and cancelling terms:

$$\left| E^{l+1} \right| \leq \left(1 + \Delta t |\lambda|\right) \left| E^{l} \right| + C (\Delta t)^2 \quad \Rightarrow \quad \left| E^{l} \right| \leq \frac{e^{l \Delta t |\lambda|}}{\Delta t |\lambda|} C (\Delta t)^2$$

Finally, noting that $l \Delta t \leq T$:

$$\max_{l \in [0, L]} \left| E^{l} \right| \leq e^{|\lambda| T} \frac{C}{|\lambda|} \Delta t$$

Convergence Analysis - Forward-Euler: Observations About the Forward-Euler Analysis

$$\max_{l \in [0, L]} \left| E^{l} \right| \leq e^{|\lambda| T} \frac{C}{|\lambda|} \Delta t$$

• Forward-Euler is order 1 convergent.
• The bound grows exponentially with the length of the time interval T.
• C is related to the exact solution's second derivative.

Convergence Analysis - Forward-Euler: Exact and Forward-Euler (FE) Plots for Unstable Reaction

[Figure: $R_{exact}$, $R_{FE}$, $Temp_{exact}$, and $T_{FE}$ versus time.]

Forward-Euler errors appear to grow with time.

Convergence Analysis - Forward-Euler: Forward-Euler Errors for Solving Reaction Equation

[Figure: errors $R_{exact} - R_{FE}$ and $T_{exact} - T_{FE}$ versus time.]

Note that the error grows exponentially with time, as the bound predicts.

Convergence Analysis - Forward-Euler: Exact and Forward-Euler (FE) Plots for Circuit

[Figure: $v1_{exact}$, $v1_{FE}$, $v2_{exact}$, and $v2_{FE}$ versus time.]

Forward-Euler errors don't always grow with time.

Convergence Analysis - Forward-Euler: Forward-Euler Errors for Solving Circuit Equation

[Figure: errors $v1_{exact} - v1_{FE}$ and $v2_{exact} - v2_{FE}$ versus time.]

Error does not always grow exponentially with time! The bound is conservative.

Multistep Methods - Making LTE Small: Exactness Constraints

Local truncation error:

$$\sum_{j=0}^{k} \alpha_j v(t_{l-j}) - \Delta t \sum_{j=0}^{k} \beta_j \frac{d}{dt} v(t_{l-j}) = e^{l}$$

The derivative term here is the true derivative of the test function; it can't be taken from $\frac{d}{dt} v(t) = \lambda v(t)$.

If $v(t) = t^{p} \Rightarrow \frac{d}{dt} v(t) = p\, t^{p-1}$, then

$$\sum_{j=0}^{k} \alpha_j \underbrace{\left((k-j) \Delta t\right)^{p}}_{v(t_{k-j})} - \Delta t \sum_{j=0}^{k} \beta_j \underbrace{p \left((k-j) \Delta t\right)^{p-1}}_{\frac{d}{dt} v(t_{k-j})} = e^{k}$$

Multistep Methods - Making LTE Small: Exactness Constraints, Cont.
$$\sum_{j=0}^{k} \alpha_j \left((k-j) \Delta t\right)^{p} - \Delta t \sum_{j=0}^{k} \beta_j\, p \left((k-j) \Delta t\right)^{p-1} = (\Delta t)^{p} \left( \sum_{j=0}^{k} \alpha_j (k-j)^{p} - \sum_{j=0}^{k} \beta_j\, p\, (k-j)^{p-1} \right) = e^{k}$$

If

$$\sum_{j=0}^{k} \alpha_j (k-j)^{p} - \sum_{j=0}^{k} \beta_j\, p\, (k-j)^{p-1} = 0$$

then $e^{k} = 0$ for $v(t) = t^{p}$.

As any smooth $v(t)$ has a locally accurate Taylor series in t: if

$$\sum_{j=0}^{k} \alpha_j (k-j)^{p} - \sum_{j=0}^{k} \beta_j\, p\, (k-j)^{p-1} = 0 \quad \text{for all } p \leq p_0$$

then

$$\sum_{j=0}^{k} \alpha_j v(t_{l-j}) - \Delta t \sum_{j=0}^{k} \beta_j \frac{d}{dt} v(t_{l-j}) = e^{l} = C (\Delta t)^{p_0 + 1}$$

Multistep Methods - Making LTE Small: Exactness Constraint k=2 Example

Exactness constraints:

$$\sum_{j=0}^{k} \alpha_j (k-j)^{p} - \sum_{j=0}^{k} \beta_j\, p\, (k-j)^{p-1} = 0$$

For k = 2, this yields a 5x6 system of equations for the coefficients (rows are p = 0, 1, 2, 3, 4):

$$\begin{bmatrix} 1 & 1 & 1 & 0 & 0 & 0 \\ 2 & 1 & 0 & -1 & -1 & -1 \\ 4 & 1 & 0 & -4 & -2 & 0 \\ 8 & 1 & 0 & -12 & -3 & 0 \\ 16 & 1 & 0 & -32 & -4 & 0 \end{bmatrix} \begin{bmatrix} \alpha_0 \\ \alpha_1 \\ \alpha_2 \\ \beta_0 \\ \beta_1 \\ \beta_2 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}$$

Note: $\sum_i \alpha_i = 0$ always (the p = 0 row).

Multistep Methods - Making LTE Small: Exactness Constraint k=2 Example, Continued

Checking common methods against the same k = 2 system (rows p = 0 through p = 4):

Forward-Euler: $\alpha_0 = 1,\ \alpha_1 = -1,\ \alpha_2 = 0,\ \beta_0 = 0,\ \beta_1 = 1,\ \beta_2 = 0$
FE satisfies p = 0 and p = 1 but not p = 2 $\Rightarrow$ LTE $= C (\Delta t)^2$

Backward-Euler: $\alpha_0 = 1,\ \alpha_1 = -1,\ \alpha_2 = 0,\ \beta_0 = 1,\ \beta_1 = 0,\ \beta_2 = 0$
BE satisfies p = 0 and p = 1 but not p = 2 $\Rightarrow$ LTE $= C (\Delta t)^2$

Trap rule: $\alpha_0 = 1,\ \alpha_1 = -1,\ \alpha_2 = 0,\ \beta_0 = 0.5,\ \beta_1 = 0.5,\ \beta_2 = 0$
Trap satisfies p = 0, 1, and 2 but not p = 3 $\Rightarrow$ LTE $= C (\Delta t)^3$

Multistep Methods - Making LTE Small: Exactness Constraint k=2 Example, Generating Methods

First introduce a normalization, for example $\alpha_0 = 1$:

$$\begin{bmatrix} 1 & 1 & 0 & 0 & 0 \\ 1 & 0 & -1 & -1 & -1 \\ 1 & 0 & -4 & -2 & 0 \\ 1 & 0 & -12 & -3 & 0 \\ 1 & 0 & -32 & -4 & 0 \end{bmatrix} \begin{bmatrix} \alpha_1 \\ \alpha_2 \\ \beta_0 \\ \beta_1 \\ \beta_2 \end{bmatrix} = \begin{bmatrix} -1 \\ -2 \\ -4 \\ -8 \\ -16 \end{bmatrix}$$

Solve for the 2-step method with
lowest LTE:
$\alpha_0 = 1,\ \alpha_1 = 0,\ \alpha_2 = -1,\ \beta_0 = 1/3,\ \beta_1 = 4/3,\ \beta_2 = 1/3$
Satisfies all five exactness constraints: LTE $= C (\Delta t)^5$

Solve for the 2-step explicit method with lowest LTE:
$\alpha_0 = 1,\ \alpha_1 = 4,\ \alpha_2 = -5,\ \beta_0 = 0,\ \beta_1 = 4,\ \beta_2 = 2$
Can only satisfy four exactness constraints: LTE $= C (\Delta t)^4$

Multistep Methods - Making LTE Small: LTE Plots for the FE, Trap, and "Best" Explicit (BESTE)

[Figure: log-log plot of LTE versus timestep for FE, Trap, and BESTE on $\frac{d}{dt} v(t) = v(t)$.]

The best explicit method has the highest one-step accuracy.

Multistep Methods - Making LTE Small: Global Error for the FE, Trap, and "Best" Explicit (BESTE)

[Figure: log-log plot of max error versus timestep for FE and Trap on $\frac{d}{dt} v(t) = v(t)$, $t \in [0,1]$.]

Where's BESTE?

Multistep Methods - Making LTE Small: Global Error for the FE, Trap, and "Best" Explicit (BESTE)

[Figure: log-log plot of max error versus timestep for BESTE, FE, and Trap on $\frac{d}{dt} v(t) = v(t)$. The BESTE curve is marked "worrisome".]

The best explicit method has the lowest one-step error, but its global error increases as the timestep decreases.

Multistep Methods - Stability of the Method: Difference Equation

Why did the "best" 2-step explicit method fail to converge?

Multistep method difference equation:

$$(\alpha_0 - \lambda \Delta t \beta_0) E^{l} + (\alpha_1 - \lambda \Delta t \beta_1) E^{l-1} + \cdots + (\alpha_k - \lambda \Delta t \beta_k) E^{l-k} = e^{l}$$

where $E^{l} = v(l \Delta t) - \hat{v}^{l}$ is the global error and $e^{l}$ is the LTE.

We made the LTE so small, so how come the global error is so large?

An Aside on Solving Difference Equations

Consider a general kth order difference equation

$$a_0 x^{l} + a_1 x^{l-1} + \cdots + a_k x^{l-k} = u^{l}$$

which must have k initial conditions

$$x^{0} = x_0,\ x^{-1} = x_1,\ \ldots,\ x^{-(k-1)} = x_{k-1}$$

as is clear when the equation is in update form:

$$x^{1} = -\frac{1}{a_0} \left( a_1 x^{0} + \cdots + a_k x^{-k+1} - u^{1} \right)$$

Most important difference equation result: $x$ can be related to $u$ by

$$x^{l} = \sum_{j=0}^{l} h^{l-j} u^{j}$$

An Aside on Difference Equations, Cont.
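If $a_0 z^{k} + a_1 z^{k-1} + \cdots + a_k = 0$ has distinct roots $\varsigma_1, \varsigma_2, \ldots, \varsigma_k$,

then $x^{l} = \sum_{j=0}^{l} h^{l-j} u^{j}$ where $h^{l} = \sum_{j=1}^{k} \gamma_j (\varsigma_j)^{l}$.

To understand how $h$ is derived, first consider a simple case. Suppose $x^{l} = \varsigma x^{l-1} + u^{l}$ and $x^{0} = 0$. Then

$$x^{1} = \varsigma x^{0} + u^{1} = u^{1}, \qquad x^{2} = \varsigma x^{1} + u^{2} = \varsigma u^{1} + u^{2}, \qquad \ldots, \qquad x^{l} = \sum_{j=1}^{l} \varsigma^{l-j} u^{j}$$

An Aside on Difference Equations, Cont.

Three important observations:

• If $|\varsigma_i| < 1$ for all i, then $\left| x^{l} \right| \leq C \max_j \left| u^{j} \right|$, where C does not depend on l.
• If $|\varsigma_i| > 1$ for any i, then there exists a bounded $u^{j}$ such that $\left| x^{l} \right| \to \infty$.
• If $|\varsigma_i| \leq 1$ for all i, and every $\varsigma_i$ with $|\varsigma_i| = 1$ is distinct, then $\left| x^{l} \right| \leq C\, l \max_j \left| u^{j} \right|$.

Multistep Methods - Stability of the Method: Difference Equation

Multistep method difference equation:

$$(\alpha_0 - \lambda \Delta t \beta_0) E^{l} + (\alpha_1 - \lambda \Delta t \beta_1) E^{l-1} + \cdots + (\alpha_k - \lambda \Delta t \beta_k) E^{l-k} = e^{l}$$

Definition: A multistep method is stable if and only if, as $\Delta t \to 0$,

$$\max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| E^{l} \right| \leq C \frac{T}{\Delta t} \max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| e^{l} \right| \quad \text{for any } e^{l}$$

Theorem: A multistep method is stable if and only if the roots of $\alpha_0 z^{k} + \alpha_1 z^{k-1} + \cdots + \alpha_k = 0$ are either less than one in magnitude, or equal to one in magnitude and distinct.

Multistep Methods - Stability of the Method: Stability Theorem "Proof"

Given the multistep method difference equation

$$(\alpha_0 - \lambda \Delta t \beta_0) E^{l} + (\alpha_1 - \lambda \Delta t \beta_1) E^{l-1} + \cdots + (\alpha_k - \lambda \Delta t \beta_k) E^{l-k} = e^{l}$$

if the roots of $\sum_{j=0}^{k} \alpha_j z^{k-j} = 0$ are either
• less than one in magnitude, or
• equal to one in magnitude but distinct,

then from the aside on difference equations

$$\left| E^{l} \right| \leq C\, l \max_l \left| e^{l} \right|$$

from which stability easily follows.

Multistep Methods - Stability of the Method: Stability Theorem "Proof"

[Figure: the complex z plane (Re, Im) with the unit circle through -1 and 1. It shows the roots of $\sum_{j=0}^{k} \alpha_j z^{k-j} = 0$ and the roots of $\sum_{j=0}^{k} (\alpha_j - \lambda \Delta t \beta_j) z^{k-j} = 0$ for a nonzero $\Delta t$.]

As $\Delta t \to 0$, the roots move inward to match the $\alpha$ polynomial.

Multistep Methods - Stability of the Method: The BESTE Method

Best explicit 2-step method: $\alpha_0 = 1,\ \alpha_1 = 4,\ \alpha_2 = -5,\ \beta_0 = 0,\ \beta_1 = 4,\ \beta_2 = 2$

Roots of $z^{2} + 4z - 5 = 0$: $z = 1$ and $z = -5$.

[Figure: the complex z plane (Re, Im) with the unit circle, showing one root at 1 on the circle and one root at -5 far outside it.]

Method is wildly unstable!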
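The BESTE failure can be reproduced in a few lines. This is a minimal sketch under stated assumptions rather than the code behind the lecture plots: the function names `alpha_roots` and `beste_max_error` are hypothetical, the test problem is $\frac{d}{dt} v = v$ on $[0, 1]$ as in the plots, and the second starting value is taken from the exact solution so that the only error source is the method itself.

```python
import cmath
import math

def alpha_roots(a1, a2):
    """Roots of z^2 + a1*z + a2 = 0, the alpha polynomial with alpha_0 = 1."""
    disc = cmath.sqrt(a1 * a1 - 4 * a2)
    return ((-a1 + disc) / 2, (-a1 - disc) / 2)

def beste_max_error(dt, lam=1.0, T=1.0):
    """Max global error of the BESTE 2-step method on dv/dt = lam*v, v(0) = 1.

    Update (alpha = 1, 4, -5 and beta = 0, 4, 2):
        v^l = -4 v^{l-1} + 5 v^{l-2} + dt*lam*(4 v^{l-1} + 2 v^{l-2})
    Assumption: v^1 is set to the exact value exp(lam*dt).
    Keep dt modest; for much smaller steps the values overflow double precision.
    """
    n = round(T / dt)
    prev2, prev1 = 1.0, math.exp(lam * dt)
    worst = 0.0
    for l in range(2, n + 1):
        new = -4 * prev1 + 5 * prev2 + dt * lam * (4 * prev1 + 2 * prev2)
        worst = max(worst, abs(new - math.exp(lam * l * dt)))
        prev2, prev1 = prev1, new
    return worst

if __name__ == "__main__":
    print("alpha polynomial roots:", alpha_roots(4, -5))
    for dt in (0.1, 0.05, 0.01):
        print(f"dt = {dt}: max error = {beste_max_error(dt):.3e}")
```

The root at $-5$ multiplies each one-step error by roughly 5 per step, so taking more (smaller) steps over the same interval makes the global error larger, which is the behavior shown in the global error plot.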
Multistep Methods - Stability of the Method: Dahlquist's First Stability Barrier

For a stable, explicit k-step multistep method, the highest exactness order $p_0$ that can be achieved (constraints $p = 0, \ldots, p_0$ all satisfied) is less than or equal to k (note there are 2k free coefficients). For implicit methods, $p_0$ is at most k+2 if k is even or k+1 if k is odd.

Multistep Methods - Convergence Analysis: Conditions for Convergence, Stability and Consistency

1) Local condition: one step errors are small (consistency).

Exactness constraints up to $p_0$ ($p_0$ must be > 0)

$$\Rightarrow \max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| e^{l} \right| \leq C_1 (\Delta t)^{p_0 + 1} \quad \text{for } \Delta t < \Delta t_0$$

2) Global condition: one step errors grow slowly (stability).

Roots of $\sum_{j=0}^{k} \alpha_j z^{k-j} = 0$ inside or simple on the unit circle

$$\Rightarrow \max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| E^{l} \right| \leq C_2 \frac{T}{\Delta t} \max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| e^{l} \right|$$

Convergence result (combining the two bounds):

$$\max_{l \in \left[0, \frac{T}{\Delta t}\right]} \left| E^{l} \right| \leq C\, T\, (\Delta t)^{p_0}$$

Summary

Small timestep issues for multistep methods
• Local truncation error and exactness.
• Difference equation stability.
• Stability + consistency implies convergence.

Next time
• Absolute stability for two time-scale examples.
• Oscillators.
• Maybe Runge-Kutta schemes.
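The two conditions in the summary can be tested mechanically for any one- or two-step method. The sketch below is an illustration under stated assumptions, not part of the lecture: the function names are hypothetical, exactness is checked in exact rational arithmetic with Python's `fractions` module, and the root check is limited to k = 1 or k = 2 so that the roots have a closed form.

```python
import cmath
from fractions import Fraction as F

def exactness_order(alpha, beta, p_max=10):
    """Largest p0 with sum_j alpha_j (k-j)^p - sum_j beta_j p (k-j)^(p-1) = 0
    for every p <= p0. Returns -1 if even p = 0 fails."""
    k = len(alpha) - 1
    p0 = -1
    for p in range(p_max + 1):
        lhs = sum(F(a) * (k - j) ** p for j, a in enumerate(alpha))
        # The beta term vanishes for p = 0; for p = 1 Python evaluates 0**0 as 1.
        rhs = sum(F(b) * p * (k - j) ** (p - 1) for j, b in enumerate(beta)) if p > 0 else 0
        if lhs != rhs:
            break
        p0 = p
    return p0

def satisfies_root_condition(alpha, tol=1e-12):
    """Roots of sum_j alpha_j z^(k-j) inside, or simple on, the unit circle (k = 1 or 2)."""
    a = [complex(float(x)) for x in alpha]
    if len(a) == 2:
        roots = [-a[1] / a[0]]
    elif len(a) == 3:
        disc = cmath.sqrt(a[1] ** 2 - 4 * a[0] * a[2])
        roots = [(-a[1] + disc) / (2 * a[0]), (-a[1] - disc) / (2 * a[0])]
    else:
        raise ValueError("this sketch only handles k = 1 or k = 2")
    for i, r in enumerate(roots):
        if abs(r) > 1 + tol:
            return False
        on_circle = abs(abs(r) - 1) <= tol
        repeated = any(abs(r - s) <= tol for j, s in enumerate(roots) if j != i)
        if on_circle and repeated:
            return False
    return True

def is_convergent(alpha, beta):
    """Consistency (p0 > 0) plus stability (root condition)."""
    return exactness_order(alpha, beta) > 0 and satisfies_root_condition(alpha)

METHODS = {
    "FE": ((1, -1), (0, 1)),
    "BE": ((1, -1), (1, 0)),
    "Trap": ((1, -1), (F(1, 2), F(1, 2))),
    "Lowest-LTE 2-step": ((1, 0, -1), (F(1, 3), F(4, 3), F(1, 3))),
    "BESTE": ((1, 4, -5), (0, 4, 2)),
}

if __name__ == "__main__":
    for name, (alpha, beta) in METHODS.items():
        print(name, exactness_order(alpha, beta), is_convergent(alpha, beta))
```

With the coefficients listed in the lecture, BESTE passes the exactness test up to p = 3 but fails the root condition, while the lowest-LTE implicit 2-step method passes both because its roots, 1 and -1, are simple on the unit circle.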