A fully Galerkin method for parabolic problems by David Lamar Lewis

A thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in

Mathematics

Montana State University

© Copyright by David Lamar Lewis (1989)

Abstract:

This thesis contains the formulation and development of a space-time Sinc-Galerkin method for the

numerical solution of a parabolic partial differential equation in one space dimension. The space-time

adjective means that a Galerkin technique is employed simultaneously over the spatial and temporal

domains. Salient features of the method include: exponential convergence rate, an easily assembled

discrete system, production of a global approximation to the solution, and the ability to handle singular

problems. Methods of solution for the discrete system and implementation of the method for a number

of test problems are included.

A FULLY GALERKIN METHOD

FOR PARABOLIC PROBLEMS

by

David Lamar Lewis

A thesis submitted in partial fulfillment

of the requirements for the degree

of

Doctor of Philosophy

in

Mathematics

MONTANA STATE UNIVERSITY

Bozeman, Montana

December 1989

APPROVAL

of a thesis submitted by

David Lamar Lewis

This thesis has been read by each member of the thesis committee and has

been found to be satisfactory regarding content, English usage, format, citations,

bibliographic style, and consistency, and is ready for submission to the College of

Graduate Studies.

Chairperson, Graduate Committee

Date

Approved for the Major Department

Approved for the College of Graduate Studies

Date

Graduate Dean

STATEMENT OF PERMISSION TO USE

In presenting this thesis in partial fulfillment of the requirements for a doctoral degree at Montana State University, I agree that the Library shall make it available to borrowers under rules of the Library. I further agree that copying of this thesis is allowable only for scholarly purposes, consistent with “fair use” as prescribed in the U.S. Copyright Law. Requests for extensive copying or reproduction of this thesis should be referred to University Microfilms International, 300 North Zeeb Road, Ann Arbor, Michigan 48106, to whom I have granted “the exclusive right to reproduce and distribute copies of the dissertation in and from microfilm and the right to reproduce and distribute by abstract in any format.”

Signature

Date

ACKNOWLEDGMENTS

Several people were of particular help in the various phases of this endeavor.

Thank you.

To John Lund, whose knowledge, guidance and tenacity permeates each

phase. This project may not have driven him to distraction, but it certainly

got him to the outlying suburbs.

To Ken Bowers, who assured me that it was neither the computer nor the

program.

To Rene’ Tritz, whose unsurpassed skills and flying fingers enabled existent

deadlines to be met.

To Sheri, whose support and counseling during several digressions put the

train back on track.

To Mom and Dad for always being there.

And to serendipity.

TABLE OF CONTENTS

LIST OF TABLES

LIST OF FIGURES

ABSTRACT

1. INTRODUCTION

2. THE DISCRETE SYSTEM FOR THE SPATIAL DOMAIN

3. THE DISCRETE SYSTEM FOR THE TEMPORAL DOMAIN

4. ASSEMBLY OF THE ONE-DIMENSIONAL SYSTEMS

5. NUMERICAL IMPLEMENTATION

REFERENCES CITED

LIST OF TABLES

1. Numerical results for Example 1

2. Numerical results for Example 2

3. Numerical results for Example 3

4. Numerical results for Example 4

5. Numerical results for Example 5

6. Numerical results for Example 6

7. Error for Example 6 at time levels t = 0.2079, 1 and 4.8105 when h = 0.7854

8. Numerical results for Example 7

LIST OF FIGURES

1. The domains $\mathcal{D}$ and $S_d$

2. The domains $\mathcal{D}_E$ and $S_d$

3. The domains $\mathcal{D}_W$ and $S_d$

CHAPTER 1

INTRODUCTION

A two-dimensional Galerkin scheme for the solution of the differential equation

$$Lu = g \tag{1.1}$$

on a domain $D \subset \mathbb{R}^2$ can be summarized as follows. Let $u_N$ be an approximation to $u$ defined by

$$u_N(x,t) = u_0(x,t) + \sum_{j=1}^{N} a_j \phi_j(x,t) \tag{1.2}$$

where the $\phi_j$ are known basis functions and $u_0$ is defined to satisfy the boundary conditions. Define the residual $R$ by

$$R(a_1, a_2, \ldots, a_N, x, t) = Lu_N - g .$$

A standard weighted inner product will be used, given by

$$(f, g) = \iint_D f(x,t)\, g(x,t)\, w(x,t)\, dx\, dt . \tag{1.3}$$

A Galerkin scheme determines the unknown coefficients by orthogonalizing the residual with respect to the basis functions: for $k = 1, \ldots, N$,

$$(R, \phi_k) = 0 . \tag{1.4}$$

The properties of the method are governed by the basis functions $\phi_j$ in (1.2), the inner product defined in (1.3) and the quadrature used to evaluate the integrals arising from (1.4).

The linear operator considered here is the constant coefficient parabolic operator with zero boundary conditions,

$$\begin{aligned} Pu(x,t) \equiv u_t(x,t) - u_{xx}(x,t) &= g(x,t), \quad (x,t) \in D \\ u(x,t) &= 0, \quad (x,t) \in \partial D \\ D &= \{(x,t) : 0 < x < 1,\ 0 < t < \infty\} . \end{aligned} \tag{1.5}$$

The subject of this thesis is the numerical solution of (1.5) using a Galerkin method simultaneously in space and time with emphasis placed on the temporal approximation. Nonhomogeneous boundary data may be handled by transforming the problem to one of the form of (1.5). This procedure is illustrated in Examples 6 and 7 of Chapter 5. For spatial product regions contained in $\mathbb{R}^n$, $n \ge 2$, the technique of the present thesis is applicable via Kronecker products of the matrices developed in this thesis. This has been developed and discussed in [2]. For more general spatial domains in $\mathbb{R}^n$, $n \ge 2$, the procedure developed in [14] is applicable. As this approach is somewhat unconventional, a brief description of the standard approach used for constructing an approximation to (1.5) is provided for contrast.

Generally, construction of an approximation to the solution $u$ of (1.5) begins with a discretization of the spatial domain using a finite difference, finite element, collocation or Galerkin method [4,6,7,10]. This approach yields a system of ordinary differential equations in the time variable. To complete the approximation, one uses a time-differencing scheme in which the approximation at a given time is dependent on the approximation constructed at the previous time level or, in some cases, on several previous time levels. Stability constraints on the discrete evolution operator result in low order methods that require “small” time steps to obtain accurate approximations [1]. When the approximate solution is desired at moderate (or large) times, a large number of applications of the discrete evolution operator must be used to reach that time.

A great deal of effort has been expended on developing methods which improve the approximation of the time derivative. The survey paper [10] classifies methods of order greater than two for the time integration. In [4], methods which are up to fourth order accurate in time are developed by employing various extrapolations at each time level. While each of these approaches possesses certain advantages, they all result in methods that converge algebraically in time. When used in conjunction with an exponential order spatial approximation (e.g. Galerkin, spectral) the result is a method that is exponentially convergent in the spatial domain and algebraically convergent in the temporal domain. A type of space-time finite element technique was developed in [15]. When piecewise polynomials are used in the temporal domain, the resulting method remains an algebraically convergent method. In contrast, the method presented here enjoys an exponential convergence rate in each coordinate direction, and appropriate parameter selections allow one to balance these rates.

The present method maintains its exponential convergence rate in the case

of problem (1.5) whose solution has a singularity on the boundary of D. This is

in marked contrast to polynomial based methods whose approximation qualities

deteriorate in the presence of singularities.

The basis elements employed in the Sinc-Galerkin method are translates of the sinc function, denoted $\operatorname{sinc}(x)$, which is defined by

$$\operatorname{sinc}(x) = \frac{\sin(\pi x)}{\pi x}, \qquad x \in \mathbb{R} . \tag{1.6}$$

The approximation procedures herein are based on the following formal expansion of a function $f$:

$$C(f,h,x) = \sum_{k=-\infty}^{\infty} f(kh)\, \operatorname{sinc}\left(\frac{x - kh}{h}\right), \qquad h > 0 . \tag{1.7}$$

The expansion (1.7) was developed and studied by E.T. Whittaker [16] who dubbed the function $C(f,h,x)$ the “cardinal function” of $f$ (whenever the series converges). He conjectured that given a function with singularities and fluctuations, the “cardinal function” could be substituted for it “in all practical and some theoretical investigations since they are identical at an infinite number of values.” He called $C(f,h,x)$ “a function of royal blood in the family of entire functions, whose distinguished properties separate it from its bourgeois brethren.” Currently, the function $C(f,h,x)$ is called the Whittaker cardinal function. The cardinal function used as a tool in numerical analysis is of relatively recent vintage. The survey paper [13] provides both historical information on the cardinal function and an exhaustive list of its properties through 1981.
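As an illustration of the expansion (1.7), the following minimal sketch evaluates a truncated cardinal series for a rapidly decaying test function. The function names `cardinal_series` and `gaussian`, the truncation index `K`, and the choice of a Gaussian are assumptions made only for this example; they do not come from the thesis. NumPy's `np.sinc` uses the same normalization as (1.6).

```python
import numpy as np

def cardinal_series(f, h, x, K):
    """Truncated Whittaker cardinal series: sum of f(kh) sinc((x - kh)/h) for k = -K..K."""
    k = np.arange(-K, K + 1)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    # np.sinc(y) = sin(pi*y) / (pi*y), the normalization used in (1.6).
    basis = np.sinc((x[:, None] - k[None, :] * h) / h)
    return basis @ f(k * h)

def gaussian(x):
    # Hypothetical test function, chosen because it decays quickly on the real line.
    return np.exp(-x**2)

h = 0.25
x_test = np.array([0.1, 0.3, 1.7])
approx = cardinal_series(gaussian, h, x_test, K=60)
max_error = np.max(np.abs(approx - gaussian(x_test)))
print(max_error)
```

At the points $x = kh$ the series reproduces $f(kh)$ up to rounding, which is the "identical at an infinite number of values" property Whittaker refers to.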

F. Stenger [11] showed that for functions defined on the real line, interpolation and integration procedures based on the Whittaker cardinal function have the exponential convergence rate $O(\exp(-\kappa\sqrt{N}))$ where $\kappa > 0$ is a constant independent of $N$ and $2N + 1$ basis elements are used in the procedure. By using conformal maps, he expanded these procedures to include functions defined on an arbitrary interval $(a, b)$ and showed that the exponential convergence rate was maintained. In [12] Stenger showed that the convergence rate $O(\exp(-\kappa\sqrt{N}))$ was optimal over the class of $N$-point approximations whether or not the function being approximated had singularities at the endpoints of the interval $(a, b)$. Included in [12] is an initial development of the Sinc-Galerkin method to approximate the solution of linear and nonlinear second order ordinary differential equations. The approximate solution of some elliptic and parabolic partial differential equations is also discussed. The reduction of the differential equation to a system of algebraic equations was accomplished via explicit approximations of the weighted inner product defining the Sinc-Galerkin scheme. In each case the matrix formulation of the system of algebraic equations can be expressed in the form

$$A\mathbf{u} = \mathbf{b} \tag{1.8}$$

where $\mathbf{u}$ is the vector of unknowns and the components of the matrix $A$ and the vector $\mathbf{b}$ are known. Unlike the sparse matrices arising from polynomial approximations, the matrix $A$ for the Sinc-Galerkin method is full.

Specifically, when applying the method to a second order ordinary differential equation Stenger showed that the discretization yields a matrix $A$ represented by a sum of matrices

$$A = I^{(2)} D_2 + I^{(1)} D_1 + D_0 \tag{1.9}$$

where $I^{(1)}$ is a full skew-symmetric matrix, $I^{(2)}$ is a full negative definite symmetric matrix and the matrices $D_0$, $D_1$ and $D_2$ are diagonal and dependent upon the domain of definition. In the case of a self-adjoint second order problem, the matrix $A$ is not in general a symmetric matrix. However, the method is applicable to any second order problem including those with regular singular points. While it is possible to make assertions about the spectrum of each term of $A$, a specific characterization of the spectral properties of $A$ is domain dependent.

Lund [9] showed that for self-adjoint second order differential equations, the skew-symmetric matrix $I^{(1)}$ could be eliminated from $A$ in (1.9) by selecting a weight function different from that used in [12]. The selected weight produced a symmetric discrete system (1.8). Numerical testing showed an unexpected increase in the accuracy of the approximation produced by the symmetrized method over that produced by the method of [12]. In exchange for the symmetrization, a stronger assumption is required on the behavior of the true solution. As a consequence, the methodology of [9] applies to a more restrictive class of problems than the methodology in [12]. Due to the nature of the solution of problem (1.5), the method of [9] is applicable and is the method of choice for the spatial approximation in this thesis.

Initially the methodology outlined in [12] was used in the time domain. When

this method was applied to a variety of test cases, the numerical results often failed

to exhibit the expected exponential rate of convergence. Whereas the accuracy

of the interpolation for the various derivative approximations can be guaranteed,

a closer examination of the discrete system for (1.5) indicated that matrix conditioning was the source of these discrepancies. When the development was carried

out using a general weight it was clear that the conditioning was dependent on the

weight function used in the inner product. It is shown that both the eigenvalues

of the discrete system as well as matrix conditioning are controlled by selecting

a different weight than that proposed in [12]. Additionally, when the new weight

was tested, the numerical results in each test case exhibited the anticipated rate of

convergence. As in the symmetrization method of [9], this new selection requires

a stronger assumption on the behavior of the true solution.

Chapter 2 contains an outline of the approach taken to produce a numerical approximation to the solution of (1.5) using the Sinc-Galerkin method. This technique is a product approximation and as such the spatial and temporal approximations are given separately. Various results from the work of Stenger [11,12,13] germane to the development are included for completeness. The chapter concludes with the symmetric Sinc-Galerkin method applied to the one-dimensional problem

$$\begin{aligned} u''(x) &= g(x), \qquad x \in (0,1) \\ u(0) &= u(1) = 0 . \end{aligned} \tag{1.10}$$

Chapter 3 begins with a detailed development of the Sinc-Galerkin method using an arbitrary weight function in the inner product for the one-dimensional problem

$$\begin{aligned} u'(t) &= g(t), \qquad t \in (0,\infty) \\ u(0) &= 0 . \end{aligned} \tag{1.11}$$

The focus here is toward identifying the impact of the weight function on the

conditioning of the resulting discrete system. The analysis shows that in the one

parameter family of weight functions identified, there is a range of values of the

parameter for which the conditioning is controlled. Correspondingly there is a

range of values of the parameter for which the exponential convergence rate of

the method is enhanced. In the intersection of these two ranges is a parameter,

defining the weight function, for which the conditioning of the system is controlled

and the exponential convergence rate is maintained.

The approximations in Chapters 2 and 3 are assembled in Chapter 4 to produce the discrete system which determines the numerical approximation of the solution to (1.5). Three methods for solving the discrete system are discussed. The first method is in the form of (1.8) where the coefficient matrix is the Kronecker sum of the matrices developed in Chapters 2 and 3. This form is amenable to either direct or iterative solution methods. The second method is based on the diagonalizability of the matrices from the spatial and temporal discretizations. Due to the weight selection from [9] the former is orthogonally diagonalizable. The diagonalizability of the latter is a conjecture of this thesis. This method is included both to numerically test the conjecture and to clearly reveal the eigenstructure of the system. The validity of the numerical results of this thesis does not depend on this diagonalizability conjecture. Indeed, the third method of solution presented, based on the Schur decomposition, produces identical numerical results without the assumption of diagonalizability.
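The first two solution strategies can be sketched on a generic matrix equation $AX + XB = F$. This form is an assumption made for illustration, since the actual assembled system appears only in Chapter 4; the matrices below are random stand-ins, with `A` symmetric (hence orthogonally diagonalizable) and `B` nonsymmetric. The Schur-based third method is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 4, 3
R = rng.standard_normal((n, n))
A = -(R @ R.T + np.eye(n))                                   # symmetric, negative definite stand-in
B = -(0.5 * rng.standard_normal((m, m)) + 5.0 * np.eye(m))   # nonsymmetric stand-in
F = rng.standard_normal((n, m))

# Method 1: Kronecker-sum form, (I (x) A + B^T (x) I) vec(X) = vec(F),
# where vec stacks the columns of a matrix (column-major order).
K = np.kron(np.eye(m), A) + np.kron(B.T, np.eye(n))
X_kron = np.linalg.solve(K, F.flatten(order="F")).reshape((n, m), order="F")

# Method 2: diagonalize both factors, A = P diag(lam) P^T and B = Q diag(mu) Q^{-1}.
lam, P = np.linalg.eigh(A)          # orthogonal eigenvectors for the symmetric factor
mu, Q = np.linalg.eig(B)            # possibly complex eigenpairs for the other factor
G = P.T @ F @ Q
Y = G / (lam[:, None] + mu[None, :])
X_diag = (P @ Y @ np.linalg.inv(Q)).real

print(np.max(np.abs(X_kron - X_diag)))
```

The diagonalization route only needs the sums $\lambda_i + \mu_j$ to be nonzero, which is why the eigenstructure of the two one-dimensional matrices is of interest.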

Finally, Chapter 5 includes the numerical results for seven examples. From the many examples tested, these were selected to illustrate implementation aspects of the method. Both homogeneous and nonhomogeneous problems are included. Specifically, the first four examples were selected to numerically illustrate the exponential convergence rate for singular and nonsingular problems. In the former case, problems exhibiting algebraic or logarithmic singularities are included. The last three examples were taken from the literature and could provide an initial basis for a comparative study of this Sinc-Galerkin method and alternative methods of solution of parabolic problems.

CHAPTER 2

THE DISCRETE SYSTEM FOR THE SPATIAL DOMAIN

The development of the Sinc-Galerkin method for either of problems (1.10) or

(1.11) depends on the accurate interpolation and quadrature formulas of [11,12,13]

for the approximate inner products defining the method. Both to see why the two

problems (1.10) and (1.11) arise from (1.5) as well as to review the results needed

from [11,12,13], an outline of the space-time Sinc-Galerkin method is given in this

chapter. Following this general discussion, the specific development for problem

(1.10) is presented.

The space-time Sinc-Galerkin scheme to approximate the solution of the parabolic problem

$$\begin{aligned} Pu(x,t) \equiv u_t(x,t) - u_{xx}(x,t) &= g(x,t), \quad (x,t) \in D \\ u(x,t) &= 0, \quad (x,t) \in \partial D \\ D &= \{(x,t) : 0 < x < 1,\ 0 < t < \infty\} \end{aligned} \tag{2.1}$$

may be summarized as follows: Select the basis functions $\{S_i(x), S_j^*(t)\}$ and define an approximate solution to (2.1) by way of the formula

$$u_{m_x,m_t}(x,t) = \sum_{i=-M_x}^{N_x} \sum_{j=-M_t}^{N_t} u_{ij}\, S_i(x)\, S_j^*(t) \tag{2.2}$$

where

$$m_x = M_x + N_x + 1 \quad \text{and} \quad m_t = M_t + N_t + 1 . \tag{2.3}$$

The coefficients $\{u_{ij}\}$ in (2.2) are determined from the discrete system

$$(Pu_{m_x,m_t} - g,\ S_k S_l^*) = 0, \qquad -M_x \le k \le N_x, \quad -M_t \le l \le N_t, \tag{2.4}$$

where $(\cdot, \cdot)$ is an appropriate inner product. The choice of the inner product in (2.4) coupled with the choice of the basis functions in (2.2) determines the properties of the approximating method.

For the Sinc-Galerkin method, the basis functions are compositions of the sinc function (1.6) with conformal maps and will be defined later. The inner product indicated in (2.4) is defined by

$$(u,v) = \int_0^\infty \int_0^1 u(x,t)\, v(x,t)\, w(x,t)\, dx\, dt \tag{2.5}$$

where $w$ is a weight function that will be selected as the development proceeds. To motivate the one-dimensional development that follows, assume that the weight $w(x,t) = w_1(x) w_2(t)$ so that the residual in (2.4) with (2.5) may be written

$$\begin{aligned} &\int_0^\infty \int_0^1 u_t(x,t)\, S_k(x) S_l^*(t)\, w_1(x) w_2(t)\, dx\, dt \\ &\quad - \int_0^\infty \int_0^1 u_{xx}(x,t)\, S_k(x) S_l^*(t)\, w_1(x) w_2(t)\, dx\, dt \\ &\quad - \int_0^\infty \int_0^1 g(x,t)\, S_k(x) S_l^*(t)\, w_1(x) w_2(t)\, dx\, dt . \end{aligned}$$

While construction of the discrete system defined in (2.4) is the result of direct

computation, it is tedious, obscures important parameter selections and fails to

provide direction in selecting the weight function. A product-method of approach

is achieved through developing the Sinc-Galerkin method for the one-dimensional

problems

$$\begin{aligned} u''(x) &= g(x), \qquad 0 < x < 1 \\ u(0) &= u(1) = 0 \end{aligned} \tag{2.6}$$

and

$$\begin{aligned} u'(t) &= g(t), \qquad t > 0 \\ u(0) &= 0 . \end{aligned} \tag{2.7}$$

The latter approach produces matrices that are identical to those derived from

a direct discretization of (2.4) and illuminates parameter and weight function

selection.

Before attacking either problem (2.6) or (2.7), the foundation and basic

assumptions necessary for the Sinc-Galerkin method are presented. Since the

domain of concern differs in problems (2.6) and (2.7), the preliminary results are

developed on the general interval $(a, b)$ with $a < b$.

Let $a$ and $b$ be real and let $\mathcal{D}$ be a simply connected domain in the complex plane such that $a, b \in \partial\mathcal{D}$. Let $d > 0$ and $\phi : \mathcal{D} \to S_d$ be a conformal single valued mapping onto the infinite strip

$$S_d = \{\zeta = \mu + i\nu : |\nu| < d\} . \tag{2.8}$$

Furthermore, select $\phi$ such that $\phi(a) = -\infty$ and $\phi(b) = \infty$. Let $\phi^{-1}$ denote the inverse of $\phi$ and set $\Gamma = (a, b) = \phi^{-1}((-\infty, \infty))$ and $x_k = \phi^{-1}(kh)$ where $h > 0$ and $k$ is an integer. A graphic representation of the situation is supplied in Figure 1.

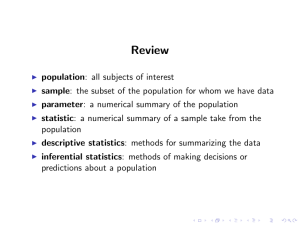

Figure 1. The domains $\mathcal{D}$ and $S_d$.

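The basis functions used in the Sinc-Galerkin method are translates of the sinc function (1.6) composed with the conformal mapping $\phi$:

$$S_k(x) = S(k,h) \circ \phi(x) = \operatorname{sinc}\left(\frac{\phi(x) - kh}{h}\right) = \frac{\sin\left[\frac{\pi}{h}(\phi(x) - kh)\right]}{\frac{\pi}{h}(\phi(x) - kh)} . \tag{2.9}$$

It is convenient to note a property of the basis functions and to introduce some notation at this time. Define

$$\delta_{jk}^{(n)} \equiv h^n \frac{d^n}{d\phi^n}\left[S(k,h) \circ \phi(x)\right]\Big|_{x = x_j} \tag{2.10}$$

so that, in particular,

$$\delta_{jk}^{(0)} = \begin{cases} 1 & \text{if } j = k \\ 0 & \text{if } j \ne k , \end{cases} \tag{2.11}$$

$$\delta_{jk}^{(1)} = \begin{cases} 0 & \text{if } j = k \\ \dfrac{(-1)^{j-k}}{j - k} & \text{if } j \ne k , \end{cases} \tag{2.12}$$

and

$$\delta_{jk}^{(2)} = \begin{cases} -\dfrac{\pi^2}{3} & \text{if } j = k \\ \dfrac{-2(-1)^{j-k}}{(j-k)^2} & \text{if } j \ne k . \end{cases} \tag{2.13}$$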
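The quantities in (2.11) through (2.13) are the values of the sinc function and its first two derivatives at the integers, so the corresponding matrices are Toeplitz and easy to assemble. The sketch below assumes the standard values $(-1)^{j-k}/(j-k)$ and $-2(-1)^{j-k}/(j-k)^2$ off the diagonal, with $0$ and $-\pi^2/3$ on the diagonal; the function name `sinc_matrices` is hypothetical.

```python
import numpy as np

def sinc_matrices(m):
    """Return the m x m matrices of delta^(0), delta^(1), delta^(2) values."""
    idx = np.arange(m)
    diff = idx[:, None] - idx[None, :]           # j - k
    off = diff != 0
    sign = np.where(diff % 2 == 0, 1.0, -1.0)    # (-1)^(j-k)
    safe = np.where(off, diff, 1)                # placeholder 1 avoids dividing by zero on the diagonal
    I0 = np.eye(m)
    I1 = np.where(off, sign / safe, 0.0)
    I2 = np.where(off, -2.0 * sign / safe**2, -np.pi**2 / 3.0)
    return I0, I1, I2

I0, I1, I2 = sinc_matrices(7)
print(I1[1, 0], I2[1, 0], I2[0, 0])
```

The first-derivative matrix comes out skew-symmetric and the second-derivative matrix symmetric with negative eigenvalues, in line with the description of $I^{(1)}$ and $I^{(2)}$ in Chapter 1.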

Of primary importance to the Sinc-Galerkin method is the quadrature rule used to evaluate the integrals resulting from the various inner products in (2.4); in particular, the form of the error incurred by the quadrature rule is needed. The following theorems are given without proof (see [11,12] for the proofs) and embody the tools required to construct an approximation to the solution $u$ of either (2.6) or (2.7) using a finite set of the basis elements. The theorems are stated for a contour $\Gamma$ in the complex plane though they are used in this thesis on subintervals of the real line. Theorem 2.1 identifies the class of functions for which sinc interpolation yields an exponentially accurate sinc interpolant. The result of Theorem 2.2 exhibits the exponential rate of convergence of the quadrature rule and Theorem 2.3 provides a technique for truncating the infinite sum resulting from the quadrature rule.

Let $B(\mathcal{D})$ denote the class of functions $f$ that are analytic in $\mathcal{D}$ such that

(2.14)

where

$L = \{iu : |u| < d\}$

and

(2.15)

Theorem 2.1: Let $z_k = \phi^{-1}(kh)$ and $f \in B(\mathcal{D})$. Then for every $z \in \Gamma$

(2.16)

/(to)

dw

so [<£M -<£(z)]sin(£- <j>(w))

2m

and

(2.17)

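Theorem 2.2: Let $f \in B(\mathcal{D})$. Then

$$\int_\Gamma f(z)\, dz - h \sum_{k=-\infty}^{\infty} \frac{f(z_k)}{\phi'(z_k)} = \frac{i}{2} \int_{\partial\mathcal{D}} \frac{K(\phi,h)(w)\, f(w)}{\sin(\pi\phi(w)/h)}\, dw \equiv I_f \tag{2.18}$$

where

$$K(\phi,h)(w) = \exp\left\{ i\pi\phi(w)\, \operatorname{sgn}\left[\operatorname{Im}(\phi(w))\right] / h \right\} . \tag{2.19}$$

Furthermore, a short calculation produces the identity

$$\left| K(\phi,h)(w) \right|_{w \in \partial\mathcal{D}} = \exp(-\pi d / h) \tag{2.20}$$

and the inequality

$$|I_f| \le \frac{e^{-\pi d/h}\, N(f,\mathcal{D})}{2 \sinh(\pi d/h)} . \tag{2.21}$$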

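Theorem 2.3: Suppose $f$ is analytic in $\mathcal{D}$ and that there exist positive constants $\alpha$, $\beta$ and $C$ such that

$$\left| \frac{f(z)}{\phi'(z)} \right| \le C \begin{cases} \exp(-\alpha |\phi(z)|), & z \in \Gamma_L \\ \exp(-\beta |\phi(z)|), & z \in \Gamma_R \end{cases} \tag{2.22}$$

where

$$\Gamma_L = \{ z : z \in \phi^{-1}((-\infty, 0)) \}$$

and

$$\Gamma_R = \{ z : z \in \phi^{-1}([0, \infty)) \} .$$

Then

$$\begin{aligned} h \left| \sum_{k=-\infty}^{\infty} \frac{f(z_k)}{\phi'(z_k)} - \sum_{k=-M}^{N} \frac{f(z_k)}{\phi'(z_k)} \right| &\le h \sum_{k=-\infty}^{-M-1} \left| \frac{f(z_k)}{\phi'(z_k)} \right| + h \sum_{k=N+1}^{\infty} \left| \frac{f(z_k)}{\phi'(z_k)} \right| \\ &\le C \left\{ (1/\alpha) \exp(-\alpha M h) + (1/\beta) \exp(-\beta N h) \right\} . \end{aligned} \tag{2.23}$$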

Combining the result of Theorems 2.2 and 2.3, the error in the finite sinc quadrature can be expressed as

$$\left| \int_\Gamma f(z)\, dz - h \sum_{k=-M}^{N} \frac{f(z_k)}{\phi'(z_k)} \right| \le C \left\{ (1/\alpha) \exp(-\alpha M h) + (1/\beta) \exp(-\beta N h) \right\} + \frac{e^{-\pi d/h}\, N(f,\mathcal{D})}{2 \sinh(\pi d/h)} . \tag{2.24}$$

The boundary integral and truncation errors in the finite sinc quadrature (2.24) are asymptotically balanced when $\pi d / h = \alpha M h$ and $\alpha M h = \beta N h$ or when

$$h = \left( \frac{\pi d}{\alpha M} \right)^{1/2} \tag{2.25}$$

and

$$N = \left[\!\left[ \frac{\alpha}{\beta} M + 1 \right]\!\right] \tag{2.26}$$

where $[\![\,\cdot\,]\!]$ denotes the greatest integer function. The inequality in (2.24) is maintained if $\frac{\alpha}{\beta} M$ is an integer and $N = \frac{\alpha}{\beta} M$. The addition of 1 in (2.26) is required only when $\frac{\alpha}{\beta} M$ is not an integer.
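The parameter selections (2.25) and (2.26) can be collected in a small helper. This is a sketch only: the name `sinc_parameters` and the floating-point tolerance used to detect an integer ratio are assumptions of the example, while the integer special case follows the remark after (2.26).

```python
import math

def sinc_parameters(M, alpha, beta, d):
    """Return the step size h of (2.25) and the upper summation limit N of (2.26)."""
    h = math.sqrt(math.pi * d / (alpha * M))
    ratio = alpha * M / beta
    nearest = round(ratio)
    if math.isclose(ratio, nearest, rel_tol=0.0, abs_tol=1e-12):
        N = int(nearest)               # integer ratio: the added 1 is not needed
    else:
        N = math.floor(ratio) + 1      # greatest integer in (alpha/beta) M + 1
    return h, N

print(sinc_parameters(16, 0.5, 0.5, math.pi / 2))
print(sinc_parameters(10, 0.5, 1.5, math.pi / 2))
```

When $\alpha = \beta$ the rule gives $N = M$, so the sum is symmetric about $k = 0$.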

It should be noted that the exponential rate of convergence in (2.21) and

(2.23) does not depend on the boundedness of derivatives of $f$. It is this fact that

preserves the exponential accuracy of the Sinc-Galerkin method when applied to

problems with singular solutions.

The inner product employed for either of problems (2.6) or (2.7) is defined by

$$(u,v) = \int_a^b u(x)\, v(x)\, (\phi'(x))^{\sigma}\, dx . \tag{2.27}$$

The value of $\sigma$ is problem dependent and will be identified during the ensuing development for each problem.

The remainder of this chapter is devoted to identifying the form of the dis­

crete system which produces the Sinc-Galerkin approximation of (2.6). A detailed

development of the approximation is provided in [9], thus only the results are in­

cluded here. Select the conformal map

(2.28)

H z) = in ( i 3 ^ )

which carries the “eye”-shaped region

(2.29)

Ve - {z = x-\-i y : |orsr[z/(l - z)]| < d <

tt/ 2}

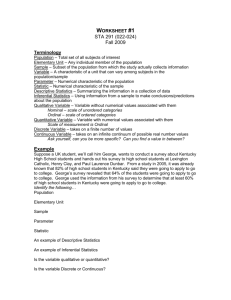

onto the infinite strip Sd in (2.8). These domains are depicted in Figure 2.

Figure 2. The domains Ve and Sd.

<t>

The inverse map, $\phi^{-1}$, is given by

$$\phi^{-1}(\zeta) = e^{\zeta} / (1 + e^{\zeta}) , \tag{2.30}$$

thus the sinc nodes are

$$x_k = \phi^{-1}(kh) = e^{kh} / (1 + e^{kh}) . \tag{2.31}$$
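To see the nodes (2.31) at work, the finite sinc quadrature $h \sum f(x_k)/\phi'(x_k)$ can be applied to an integrand with an endpoint singularity. The sketch below integrates $x^{-1/2}$ over $(0,1)$, whose exact value is 2. The test integrand, the decay exponents $\alpha = 1/2$ and $\beta = 1$ read off from $f/\phi' = x^{1/2}(1-x)$, and the function name are assumptions chosen for this example.

```python
import numpy as np

def sinc_quadrature_unit_interval(f, M, N, h):
    """Finite sinc quadrature on (0, 1) with the map phi(x) = ln(x / (1 - x))."""
    k = np.arange(-M, N + 1)
    e = np.exp(k * h)
    x = e / (1.0 + e)                    # sinc nodes x_k = e^{kh} / (1 + e^{kh})
    inv_phi_prime = e / (1.0 + e)**2     # 1 / phi'(x_k) = x_k (1 - x_k)
    return h * np.sum(f(x) * inv_phi_prime)

alpha, beta, d = 0.5, 1.0, np.pi / 2
M = 64
h = np.sqrt(np.pi * d / (alpha * M))     # step size balancing the error terms
N = 32                                   # (alpha / beta) M, an integer in this case
approx = sinc_quadrature_unit_interval(lambda x: 1.0 / np.sqrt(x), M, N, h)
quadrature_error = abs(approx - 2.0)
print(quadrature_error)
```

The derivative of the integrand is unbounded at $x = 0$, yet the rule remains accurate, which is the behavior the exponential error bounds describe.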

The basis elements, $S_k(x)$, are defined as in (2.9) and an approximate solution of (2.6) is defined by

$$u_m(x) = \sum_{j=-M}^{N} u_j S_j(x), \qquad m = M + N + 1 . \tag{2.32}$$

The discrete system produced by orthogonalizing the residual with respect to the basis functions $S_k(x)$,

$$(u'' - g, S_k) = 0, \qquad -M \le k \le N , \tag{2.33}$$

provides the defining relationship which determines the coefficients $\{u_j\}$ of (2.32). The use of $u$ in (2.33) as opposed to $u_m$ is a convenience which facilitates the derivation of the discrete system. The weighted inner product (2.27) with $\sigma = -1/2$ assumes the form

$$(u,v) = \int_0^1 u(x)\, v(x)\, (\phi'(x))^{-1/2}\, dx . \tag{2.34}$$

In [9], it was shown that this selection produces a symmetric discrete system. The initial step in constructing the discrete system is achieved by setting

$$0 = (u'' - g, S_k) = \int_0^1 [u''(x) - g(x)]\, S_k(x)\, (\phi'(x))^{-1/2}\, dx$$

and is followed by two applications of integration by parts. This leads to the equality

$$\int_0^1 g(x)\, S_k(x)\, (\phi'(x))^{-1/2}\, dx = B_T + \int_0^1 u(x) \left[ S_k(x)\, (\phi'(x))^{-1/2} \right]''\, dx \tag{2.35}$$

where the boundary term $B_T$ is given by

$$B_T = \left\{ u'(x)\, S_k(x)\, (\phi'(x))^{-1/2} - u(x) \left( \frac{dS_k}{d\phi}(x)\, (\phi'(x))^{1/2} - \frac{1}{2} S_k(x)\, (\phi'(x))^{-3/2} \phi''(x) \right) \right\} \Bigg|_0^1 .$$

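If the function $u$ satisfies the inequality

$$\left| u(x)\, (\phi'(x))^{1/2} \right| \le L \begin{cases} x^{\alpha}, & 0 < x < 1/2 \\ (1 - x)^{\beta}, & 1/2 \le x < 1 \end{cases} \tag{2.36}$$

then with the weight function selected, $B_T = 0$ if $\alpha > 0$ and $\beta > 0$.

Applying the quadrature rule of Theorem 2.2 to the integrals in (2.35) and truncating the infinite sum according to Theorem 2.3 produces

$$0 = h \sum_{j=-M}^{N} \left\{ \frac{1}{h^2} \delta_{kj}^{(2)} - \frac{1}{4} \delta_{kj}^{(0)} \right\} u(x_j)\, (\phi'(x_j))^{1/2} - h\, g(x_k)\, (\phi'(x_k))^{-3/2} . \tag{2.37}$$

Let $I^{(2)}$ denote the $m \times m$ ($m = M + N + 1$) matrix whose $kj$th element is given by (2.13) and define

$$A = D[\phi'] \left\{ \frac{1}{h^2} I^{(2)} - \frac{1}{4} I^{(0)} \right\} D[\phi'] . \tag{2.38}$$

The matrix $D[\phi']$ is an $m \times m$ diagonal matrix with elements $\phi'(x_j)$, $j = -M, \ldots, N$ and $I^{(0)}$ is the $m \times m$ identity matrix.

If an approximate solution of (2.6) is (2.32) and if one replaces $u(x_j)$ with $u_j$ in (2.37) then the discrete Sinc-Galerkin system for (2.6) is

$$A\mathbf{v} = D[(\phi')^{-1/2} g]\, \mathbf{1} \tag{2.39}$$

where $\mathbf{v} = D[(\phi')^{-1/2}]\, \mathbf{u}$, $\mathbf{u} = (u_{-M}, \ldots, u_0, \ldots, u_N)^T$ and $\mathbf{1} = (1, \ldots, 1)^T$.

The specific parameter selections made in (2.39) are defined by

$$h = \left( \pi d / (\alpha M) \right)^{1/2} \tag{2.40}$$

and

$$N = \left[\!\left[ \frac{\alpha}{\beta} M + 1 \right]\!\right] . \tag{2.41}$$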

It has been shown in [9] that, asymptotically, the error incurred by approximating the true solution of (2.6) by (2.32) has the order $O\left(\exp(-(\pi d \alpha M)^{1/2})\right)$. As shown in [12], the matrix $I^{(2)}$ in (2.38) is a negative definite symmetric matrix with condition number of order $O\left(((N + M + 2)/2)^2\right)$.

The weight $(\phi')^{-1/2}$ in (2.34) produces a symmetric system to determine the coefficients of the approximation to the solution of (2.6) unlike the selection $(\phi')^{-1}$ used in [12]. For a general $\phi$, the convergence rate is less rapid when the weight function selected is $(\phi')^{-1/2}$. For the map $\phi$ in (2.28) it was shown in [9] that the convergence rate is the same whether $(\phi')^{-1/2}$ or $(\phi')^{-1}$ is used. This plays a role for the problem (2.1) in the parameter selection of Chapter 4.
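A minimal numerical sketch of the symmetric system for $u'' = g$, $u(0) = u(1) = 0$ follows. It assumes the system has the form $D[\phi']\{h^{-2} I^{(2)} - \tfrac14 I^{(0)}\} D[\phi']\, \mathbf{v} = D[(\phi')^{-1/2} g]\, \mathbf{1}$ with $\mathbf{v} = D[(\phi')^{-1/2}]\, \mathbf{u}$, and it cancels the leading diagonal factor before solving so that only the well-conditioned inner matrix is factored. The test problem $u(x) = x(1-x)$, $g = -2$ (for which $\alpha = \beta = 1/2$) and the function name are assumptions of this example, not taken from the thesis.

```python
import numpy as np

def solve_bvp_sinc_galerkin(g, M, N, h):
    """Sketch of the symmetric Sinc-Galerkin solve for u'' = g on (0, 1)."""
    k = np.arange(-M, N + 1)
    s = k * h
    x = 1.0 / (1.0 + np.exp(-s))               # sinc nodes e^{kh} / (1 + e^{kh})
    phi_prime = 2.0 + 2.0 * np.cosh(s)         # phi'(x_k) = 1 / (x_k (1 - x_k))
    diff = k[:, None] - k[None, :]
    off = diff != 0
    sign = np.where(diff % 2 == 0, 1.0, -1.0)
    safe = np.where(off, diff, 1)              # placeholder 1 on the diagonal
    I2 = np.where(off, -2.0 * sign / safe**2, -np.pi**2 / 3.0)
    B = I2 / h**2 - 0.25 * np.eye(k.size)
    # With y = D[phi'] v, the assumed system reduces to B y = (phi')^(-3/2) g.
    y = np.linalg.solve(B, g(x) * phi_prime**-1.5)
    return x, y / np.sqrt(phi_prime)           # u = (phi')^(1/2) v = y / (phi')^(1/2)

M = N = 64
h = np.sqrt(np.pi * (np.pi / 2) / (0.5 * M))   # step size for alpha = 1/2, d = pi/2
x, u = solve_bvp_sinc_galerkin(lambda x: -2.0 * np.ones_like(x), M, N, h)
bvp_error = np.max(np.abs(u - x * (1.0 - x)))
print(bvp_error)
```

Because the inner matrix is symmetric, a symmetric solver or an orthogonal diagonalization could be substituted for the general solve used here.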

CHAPTER 3

THE DISCRETE SYSTEM FOR THE TEMPORAL DOMAIN

The Sinc-Galerkin method for problems (2.6) and (2.7) differs in two major respects, the conformal map and the choice of the weight used in the inner product. The difference in conformal maps is due to the obvious difference in domains, $(0,1)$ versus $(0,\infty)$. The selection of the weight for problem (2.7) is not as obvious. This chapter is devoted to the development of the discrete system for (2.7) and is carried out with an arbitrary weight in the form indicated in (2.27). More detail will be devoted to the selection of $\sigma$ both with respect to the elimination of the boundary terms in the integration by parts and in the conditioning of the discrete system.

Recall problem (2.7)

$$\begin{aligned} u'(t) &= g(t), \qquad t > 0 \\ u(0) &= 0 . \end{aligned} \tag{3.1}$$

Select the conformal map

$$\psi(z) = \ln z \tag{3.2}$$

which carries the wedge shaped region $\mathcal{D}_W = \{ z = t + is : |\arg(z)| < d \le \pi/2 \}$ onto the strip $S_d$ (2.8). The domains $\mathcal{D}_W$ and $S_d$ are depicted graphically in Figure 3. The sinc nodes in $\mathcal{D}_W$ are defined by

$$t_k = \psi^{-1}(kh) = e^{kh} . \tag{3.3}$$

Figure 3. The domains $\mathcal{D}_W$ and $S_d$.
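For the half line the map $\psi(t) = \ln t$ gives nodes $t_k = e^{kh}$ and $1/\psi'(t_k) = t_k$, so the finite sinc quadrature of Chapter 2 becomes $h \sum f(t_k)\, t_k$. The sketch below applies it to $\int_0^\infty t e^{-t}\, dt = 1$; the integrand, the truncation limits and the function name are assumptions chosen only to illustrate the rule.

```python
import numpy as np

def sinc_quadrature_half_line(f, M, N, h):
    """Finite sinc quadrature on (0, infinity) with the map psi(t) = ln(t)."""
    k = np.arange(-M, N + 1)
    t = np.exp(k * h)                # sinc nodes t_k = e^{kh}; 1 / psi'(t_k) = t_k
    return h * np.sum(f(t) * t)

approx = sinc_quadrature_half_line(lambda t: t * np.exp(-t), M=80, N=24, h=0.25)
half_line_error = abs(approx - 1.0)
print(half_line_error)
```

Fewer nodes are needed on the right because the integrand decays faster as $t \to \infty$ than as $t \to 0$; the same asymmetry is expressed by the relation between $M$ and $N$ in (2.26).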

The basis functions $S_k^*(t)$ are defined as in (2.9) with $\phi$ and $x$ replaced by $\psi$ and $t$ respectively. An approximate solution of (3.1) is given by

$$u_m(t) = \sum_{j=-M}^{N} u_j S_j^*(t), \qquad m = M + N + 1 . \tag{3.4}$$

The weighted inner product for (3.1) assumes the form

$$(u,v) = \int_0^\infty u(t)\, v(t)\, [\psi'(t)]^{\sigma}\, dt \tag{3.5}$$

where $\sigma$ is real and as yet unspecified. Orthogonalizing the residual using the inner product in (3.5) yields, after a single application of integration by parts,

$$\begin{aligned} 0 = (u' - g, S_k^*) &= \int_0^\infty [u'(t) - g(t)]\, S_k^*(t)\, (\psi'(t))^{\sigma}\, dt \\ &= B_T - \int_0^\infty \left\{ u(t) \left[ S_k^*(t)\, (\psi'(t))^{\sigma} \right]' + g(t)\, S_k^*(t)\, (\psi'(t))^{\sigma} \right\} dt \end{aligned} \tag{3.6}$$

where

$$B_T = u(t)\, S_k^*(t)\, (\psi'(t))^{\sigma} \Big|_0^\infty . \tag{3.7}$$

Again, $u$ is used in (3.6) instead of $u_m$ to derive the discrete system.

If it is assumed that $u$ vanishes at a sufficiently rapid rate at infinity and that $u$ is Lip $\gamma$ at $t = 0$ then, coupled with the initial condition in (3.1), $B_T = 0$ for all $\sigma < \gamma$. The assumed vanishing of $u$ at infinity is not a restriction for most parabolic problems and will be discussed in what follows. Taking the derivative of the bracketed term in (3.6) yields the equality

$$0 = -\int_0^\infty \left\{ u(t) \left[ \frac{dS_k^*}{d\psi}(t)\, [\psi'(t)]^{\sigma+1} + \sigma\, S_k^*(t)\, [\psi'(t)]^{\sigma-1} \psi''(t) \right] + g(t)\, S_k^*(t)\, (\psi'(t))^{\sigma} \right\} dt . \tag{3.8}$$

Applying the sinc quadrature (Theorem 2.2) to the integral in (3.8) yields

$$0 = -h\sum_{j=-\infty}^{\infty}\left[ \frac{1}{h}\,\delta_{kj}^{(1)} + \sigma\,\delta_{kj}^{(0)}\,\frac{\psi_j''}{(\psi_j')^{2}} \right] u_j\,(\psi_j')^{\sigma} - h\,g_k\,(\psi_k')^{\sigma-1} + I_u + I_g \tag{3.9}$$

where the following notation has been introduced: $f_j^{(i)}$ represents the $i$th derivative of $f$ evaluated at the sinc node $t_j$. The elements $\delta_{kj}^{(1)}$ and $\delta_{kj}^{(0)}$ are as defined in (2.12) and (2.11), respectively. The integrals $I_u$ and $I_g$ are defined by (2.18) with $f$ replaced by $u[S_k^*(\psi')^{\sigma}]'$ and $gS_k^*(\psi')^{\sigma}$, respectively.

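The boundary integrals $I_u$ and $I_g$ are bounded using (2.20) and the following inequalities:

$$\left| \frac{S_k^*(w)}{\sin(\pi\psi(w)/h)} \right| \le \frac{h}{\pi d} \equiv C_0(h,d), \qquad w \in \partial D_W \tag{3.10}$$

and

$$\left| \frac{\frac{d}{d\psi}\left(S_k^*(w)\right)}{\sin(\pi\psi(w)/h)} \right| \le \frac{\pi d + h\tanh(\pi d/h)}{\pi d^{2}\tanh(\pi d/h)} \equiv C_1(h,d), \qquad w \in \partial D_W . \tag{3.11}$$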

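In the interest of completeness, the bound on the quantity $|I_u|$ proceeds as follows:

$$
\begin{aligned}
|I_u| &= \left| \frac{i}{2}\int_{\partial D_W} \frac{K(\psi,h)(\xi)\,u(\xi)\,[S_k^*(\xi)(\psi'(\xi))^{\sigma}]'}{\sin(\pi\psi(\xi)/h)}\,d\xi \right| \\
&\le \frac{1}{2}\int_{\partial D_W}\left( \left| \frac{K(\psi,h)(\xi)\,u(\xi)\,\frac{dS_k^*}{d\psi}(\xi)\,[\psi'(\xi)]^{\sigma+1}}{\sin(\pi\psi(\xi)/h)} \right| + \left| \frac{K(\psi,h)(\xi)\,u(\xi)\,S_k^*(\xi)\,\sigma[\psi'(\xi)]^{\sigma-1}\psi''(\xi)}{\sin(\pi\psi(\xi)/h)} \right| \right)|d\xi| \\
&\le \frac{1}{2}\exp\left(\frac{-\pi d}{h}\right)\left\{ C_1(h,d)\int_{\partial D_W}\left| u(\xi)[\psi'(\xi)]^{\sigma+1}\,d\xi \right| + |\sigma|\,C_0(h,d)\int_{\partial D_W}\left| u(\xi)[\psi'(\xi)]^{\sigma-1}\psi''(\xi)\,d\xi \right| \right\},
\end{aligned}
$$

where it is assumed that the final integrals are bounded as in Theorem 2.1.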

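To truncate the infinite sum in (3.9) suppose that $u(\psi')^{\sigma}$ is analytic in the domain $D_W$ and satisfies

$$|u(t)(\psi'(t))^{\sigma}| \le K \begin{cases} t^{\gamma}, & t \in (0,1) \\ t^{-\delta}, & t \in [1,\infty) \end{cases} \tag{3.12}$$

for positive constants $K$, $\gamma$ and $\delta$ (assumption (2.22) with $f$ replaced by $u(\psi')^{\sigma+1}$). Employing the results of Theorem 2.3 on the infinite sum in (3.9) yields the inequality

$$
\begin{aligned}
\left| -h\sum_{j=-M}^{N}\beta_{kj}\,u_j\,(\psi_j')^{\sigma} - h\,g_k\,(\psi_k')^{\sigma-1} \right|
&\le K|\beta|\left\{ \frac{1}{\gamma}\exp(-\gamma Mh) + \frac{1}{\delta}\exp(-\delta Nh) \right\} \\
&\quad + \frac{1}{2}\exp\left(\frac{-\pi d}{h}\right)\left\{ C_1(h,d)\int_{\partial D_W}\left| u(\xi)[\psi'(\xi)]^{\sigma+1}\,d\xi \right| \right. \\
&\qquad + |\sigma|\,C_0(h,d)\int_{\partial D_W}\left| u(\xi)[\psi'(\xi)]^{\sigma-1}\psi''(\xi)\,d\xi \right| \\
&\qquad \left. + C_0(h,d)\int_{\partial D_W}\left| g(\xi)[\psi'(\xi)]^{\sigma}\,d\xi \right| \right\} .
\end{aligned}
\tag{3.13}
$$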

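In (3.13) the quantity

$$\beta_{kj} = \frac{1}{h}\,\delta_{kj}^{(1)} + \sigma\,\delta_{kj}^{(0)}\,\frac{\psi_j''}{[\psi_j']^{2}} = \frac{1}{h}\,\delta_{kj}^{(1)} - \sigma\,\delta_{kj}^{(0)} \tag{3.14}$$

since, from (3.2), $\psi_j''/[\psi_j']^2 = -1$ for all $j$. The quantity $|\beta|$ on the right-hand side of (3.13) is, from (2.12) and (3.14), bounded by

$$|\beta| \le \left(\frac{1}{h} + |\sigma|\right) .$$

Assuming the boundary integrals on the extreme right of (3.13) are bounded, the exponential error terms in (3.13) are asymptotically balanced when the parameters $h$, $M$, $N$, $\gamma$ and $\delta$ satisfy $\pi d/h = \gamma Mh = \delta Nh$. This yields the selections

$$h = (\pi d/(\gamma M))^{1/2} \tag{3.15}$$

and

$$N = \left[\!\left| \frac{\gamma}{\delta}M + 1 \right|\!\right] . \tag{3.16}$$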

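Before identifying the final form of the discrete system given by the left-hand side of (3.13), it should be noted that the calculation of $N$ in (3.16) only assumes that the solution $u$ decays algebraically at infinity, (3.12). For many parabolic problems the solution $u$ satisfies

$$u(t) = O(\exp(-\delta t)) \quad \text{as } t \to \infty \tag{3.17}$$

for some $\delta > 0$. In this case, the upper sum is bounded by

$$\sum_{j=N+1}^{\infty}|\beta_{kj}\,u_j\,(\psi_j')^{\sigma}| \le K\left(\frac{1}{h} + |\sigma|\right)\sum_{j=N+1}^{\infty}\exp(-\delta t_j) . \tag{3.18}$$

Upon noting that

$$h\sum_{j=N+1}^{\infty}\exp(-\delta t_j) \le \int_{Nh}^{\infty} e^{-\delta e^{t}}\,dt = \int_{e^{Nh}}^{\infty}\frac{e^{-\delta u}}{u}\,du \le \int_{e^{Nh}}^{\infty} e^{-\delta u}\,du = \frac{1}{\delta}\,e^{-\delta e^{Nh}}$$

the truncation of the sum on the right-hand side of (3.18) is bounded by

$$\left(\frac{1}{h} + |\sigma|\right)\frac{K}{\delta}\exp(-\delta e^{Nh}) . \tag{3.19}$$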
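The comparison of the upper tail sum with an integral can be checked numerically. The following Python sketch is only an illustration: it evaluates $h\sum_{j>N}\exp(-\delta e^{jh})$ with a finite number of terms (an assumption that is harmless because the terms decay doubly exponentially) and compares it with $\exp(-\delta e^{Nh})/\delta$ for $N \ge 0$. The function names are hypothetical.

```python
import numpy as np

def upper_tail(delta, h, N, terms=200):
    """h * sum_{j=N+1}^{N+terms} exp(-delta * exp(j*h)); later terms are negligible."""
    j = np.arange(N + 1, N + 1 + terms)
    return h * np.sum(np.exp(-delta * np.exp(j * h)))

def tail_bound(delta, h, N):
    """Upper bound exp(-delta * exp(N*h)) / delta, valid for N >= 0."""
    return np.exp(-delta * np.exp(N * h)) / delta

delta, h = 1.0, np.pi / 4
# One (N, tail, bound) triple per truncation index N.
checks = [(N, upper_tail(delta, h, N), tail_bound(delta, h, N)) for N in range(0, 5)]
```

Because the bound decays like $\exp(-\delta e^{Nh})$ rather than $\exp(-\delta Nh)$, a much smaller $N$ suffices when the solution decays exponentially.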

In this case, the truncation errors are asymptotically balanced if

$$N = \left[\!\left| \frac{1}{h}\ln\left(\frac{\gamma Mh}{\delta}\right) + 1 \right|\!\right] \tag{3.20}$$

as opposed to (3.16). As the examples in Chapter 5 will show, this choice of $N$ significantly reduces the dimension of the discrete system while maintaining the exponential convergence rate. If (3.4) is assumed to be an approximate solution of (3.1) then, since $u_m(t_j) = u_j$, the discrete Sinc-Galerkin system which determines the $\{u_j\}$ is given by

$$B\vec{v} = -D[(\psi')^{\sigma}g]\,\vec{1} \tag{3.21}$$

where

$$B = D[\psi']\left\{ \frac{1}{h}I^{(1)} - \sigma I^{(0)} \right\} . \tag{3.22}$$

$I^{(1)}$ is the $m \times m$ matrix whose $kj$th element is defined by (2.12) and $I^{(0)}$ is the $m \times m$ identity matrix. The vector $\vec{v}$ is defined by

$$\vec{v} = D[(\psi')^{\sigma}]\,\vec{u} \tag{3.23}$$

where $\vec{u} = (u_{-M}, u_{-M+1}, \ldots, u_N)^T$ and the matrices $D[f]$ are $m \times m$ diagonal matrices with diagonal elements $f(t_j)$, $j = -M, \ldots, N$.
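A minimal Python sketch of the assembly and solution of the system (3.21) to (3.23) follows. It is not the author's FORTRAN implementation. It assumes that the entries defined in (2.12), which are not shown here, are $\delta_{kj}^{(1)} = (-1)^{j-k}/(j-k)$ for $j \ne k$ and zero on the diagonal, and it uses the hypothetical test problem $u(t) = te^{-t}$, $g(t) = (1-t)e^{-t}$ with $\sigma = 1/2$, $\gamma = 1/2$, $\delta = 1$ and $d = \pi/2$.

```python
import numpy as np

def I1(m):
    """Assumed (2.12): entry (k, j) is 0 if j == k, else (-1)**(j-k) / (j-k)."""
    idx = np.arange(m)
    diff = idx[None, :] - idx[:, None]          # j - k
    safe = np.where(diff == 0, 1, diff)         # avoid division by zero on the diagonal
    return np.where(diff == 0, 0.0, (-1.0) ** np.abs(diff) / safe)

def solve_ivp_sinc(g, M, N, h, sigma=0.5):
    """Solve u' = g, u(0) = 0 through B v = -D[(psi')^sigma] g and v = D[(psi')^sigma] u."""
    t = np.exp(h * np.arange(-M, N + 1))        # sinc nodes (3.3)
    dpsi = 1.0 / t                              # psi'(t) for psi = ln
    m = M + N + 1
    B = np.diag(dpsi) @ (I1(m) / h - sigma * np.eye(m))   # (3.22)
    rhs = -(dpsi ** sigma) * g(t)               # right-hand side of (3.21)
    v = np.linalg.solve(B, rhs)
    return t, v / dpsi ** sigma                 # undo the scaling (3.23)

def run(M, gamma=0.5, delta=1.0):
    """Maximum nodal error for the test problem u(t) = t exp(-t)."""
    h = np.pi / np.sqrt(2.0 * gamma * M)        # (3.15) with d = pi/2
    N = int(np.floor(np.log(gamma * M * h / delta) / h + 1))  # (3.20)
    t, u = solve_ivp_sinc(lambda s: (1.0 - s) * np.exp(-s), M, N, h)
    return np.max(np.abs(u - t * np.exp(-t)))

errors = {M: run(M) for M in (8, 16, 32)}
```

The dictionary `errors` holds the maximum absolute error at the sinc nodes for each $M$; under the stated assumptions it should decrease rapidly as $M$ grows.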

A unique solution to (3.21) is guaranteed provided $B$ is nonsingular. This may be seen as follows: The matrix $I^{(1)}$ is a real skew-symmetric matrix and therefore has a spectrum that is pure imaginary. Hence for all non-zero $\sigma$ the matrix $(1/h)I^{(1)} - \sigma I^{(0)}$ is invertible. Since $\psi$ is conformal the matrix $D[\psi']$ is invertible so that $B$, as the product of invertible matrices, is invertible.

As important as the invertibility of $B$ in (3.22) is its distribution of eigenvalues. This plays a role both in the conditioning of $B$ as well as the method of solution employed in the two-dimensional problem (detailed in Chapter 4). Indeed this author believes that the eigenvalues of $B$ are distinct. There is ample numerical evidence indicating that $B$ is diagonalizable but an analytic proof remains elusive. Another concern which surfaces in Chapter 4 is how the eigenvalues of $A$ in (2.38) and $B$ intermingle. As will be seen, the solution requires a division by the sum of the eigenvalues of $B$ with the eigenvalues of $A$ (in all possible combinations). Recalling that $A$ is a negative definite symmetric matrix, it is required that no real eigenvalue of $B$ is the additive inverse of an eigenvalue of $A$.

The approach taken is to show that the real part of each eigenvalue of $B$ is negative provided an adroit selection of $\sigma$ is made. To this end, let $C$ be defined by

$$C = \frac{1}{h}I^{(1)} - \sigma I . \tag{3.24}$$

The matrix $I^{(1)}$ is a skew-symmetric Toeplitz matrix with eigenvalues in the interval $(-i\pi, i\pi)$, thus the eigenvalues of $C$ are translates of those of $(1/h)I^{(1)}$ where the translation is dependent on the sign of $\sigma$. Notice that the matrix $D(1/\sqrt{\psi'})\,B\,D(\sqrt{\psi'})$ has the same spectrum as $D(\sqrt{\psi'})\,C\,D(\sqrt{\psi'})$. It follows from the definition of $C$ that if $\lambda \in \sigma(C)$ then $\mathrm{Re}(\lambda) < 0$ or $\mathrm{Re}(\lambda) > 0$ as $\sigma > 0$ or $\sigma < 0$, respectively. Hence, the same is true of any spectral point of $D(1/\sqrt{\psi'})\,B\,D(\sqrt{\psi'})$ and since the latter is a similarity transformation of $B$ it follows that if $\beta \in \sigma(B)$ then $\mathrm{Re}(\beta) < 0$ if $\sigma > 0$ or $\mathrm{Re}(\beta) > 0$ if $\sigma < 0$. Hence, selecting $\sigma > 0$ guarantees that the real parts of the eigenvalues of $A$ and $B$ have the same sign, so that no eigenvalue of $A$ is the additive inverse of an eigenvalue of $B$. The following theorem has been shown.

Theorem 3.1: Let the matrices $A$ and $B$ be defined as in (2.38) and (3.22) respectively, and let $\beta \in \sigma(B)$ and $\alpha \in \sigma(A)$. If $\sigma > 0$ then

$$\mathrm{Re}(\alpha + \beta) < 0 .$$
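The spectral statements behind Theorem 3.1 can be examined numerically for a small case. The sketch below is illustrative only and again assumes the form $\delta_{kj}^{(1)} = (-1)^{j-k}/(j-k)$ for the entries of $I^{(1)}$; the sizes $M = 8$, $N = 2$ and the step $h = \pi/\sqrt{8}$ are arbitrary choices, not values prescribed by the text.

```python
import numpy as np

def I1(m):
    """Assumed (2.12): entry (k, j) is 0 if j == k, else (-1)**(j-k) / (j-k)."""
    idx = np.arange(m)
    diff = idx[None, :] - idx[:, None]
    safe = np.where(diff == 0, 1, diff)
    return np.where(diff == 0, 0.0, (-1.0) ** np.abs(diff) / safe)

def build_B(M, N, h, sigma):
    """B = D[psi'] ((1/h) I1 - sigma I) with psi'(t_j) = 1/t_j = exp(-j*h)."""
    dpsi = np.exp(-h * np.arange(-M, N + 1))
    m = M + N + 1
    return np.diag(dpsi) @ (I1(m) / h - sigma * np.eye(m))

M, N, h = 8, 2, np.pi / np.sqrt(8.0)
eig_I1 = np.linalg.eigvals(I1(M + N + 1))                   # expected: purely imaginary
eig_pos = np.linalg.eigvals(build_B(M, N, h, sigma=0.5))    # expected: Re < 0
eig_neg = np.linalg.eigvals(build_B(M, N, h, sigma=-0.5))   # expected: Re > 0
```

For $\sigma > 0$ every computed eigenvalue of $B$ lies in the open left half-plane, so adding it to a negative eigenvalue of $A$ cannot give zero.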

In light of the discussion following (3.7), if $u$ is Lip $\gamma$ at $t = 0$ then $B_T = 0$ for all $\sigma$ where $0 < \sigma < \gamma$. Consequently, one should select a reasonably small $\sigma$. The selection of $\sigma$ also impacts the convergence rate of the method. Rewriting (3.12) in terms of a bound on $u$ yields

$$|u(t)| \le K\,t^{\gamma+\sigma}\,e^{-\delta t} \tag{3.25}$$

where (3.17) is being assumed. The truncation error term leading to the selection (3.20) assumes the form $\exp(-(\gamma + \sigma)Mh)$. Hence, to speed the convergence of the method one should select $\sigma$ “close to” $\gamma$. As will be seen in the examples of Chapter 5, $\gamma = 1$ is a common case so that the selection $\sigma = 1 - \epsilon$, $\epsilon > 0$, is optimal with respect to the preceding sentence. However, besides optimizing the convergence rate, the selection of $\sigma$ plays a competing role in the conditioning of the matrix $B$ in (3.22).

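To begin this investigation note that the matrix $C$ in (3.24) is normal and the computation

$$C^TC = \left(\frac{1}{h}I^{(1)} - \sigma I\right)^{T}\left(\frac{1}{h}I^{(1)} - \sigma I\right) = \sigma^2 I - \frac{1}{h^2}\left(I^{(1)}\right)^2$$

shows that the eigenvalues of $C^TC$ are real and lie in the interval $(\sigma^2, \sigma^2 + \pi^2/h^2)$. The condition number of $C$ is then

$$\kappa(C) = \|C\|_2\,\|C^{-1}\|_2 = \sqrt{\rho(C^TC)\,\rho((C^{-1})^TC^{-1})} \le \frac{1}{\sigma}\sqrt{\sigma^2 + \frac{\pi^2}{h^2}} = \frac{1}{\sigma}\sqrt{\sigma^2 + 2\gamma M} \tag{3.26}$$

where the last equality follows from (3.15) with $d = \pi/2$.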

Using (3.26) and the identity $\psi'(t_j) = e^{-jh}$ there follows

$$
\begin{aligned}
\kappa(D((\psi')^{\sigma})B) &= \|D((\psi')^{\sigma+1})\,C\|_2\,\|C^{-1}D((\psi')^{-\sigma-1})\|_2 \\
&\le \|C\|_2\,\|C^{-1}\|_2\,\|D((\psi')^{\sigma+1})\|_2\,\|D((\psi')^{-\sigma-1})\|_2 \\
&\le \frac{1}{\sigma}\sqrt{\sigma^2 + 2\gamma M}\;\max\left\{ e^{2Mh(\sigma+1)},\, e^{2Nh(\sigma+1)} \right\} .
\end{aligned}
\tag{3.27}
$$

Hence for positive $\sigma$ the right-hand side of (3.27) is minimized for $\sigma$ “near” zero (as opposed to $\sigma = \gamma$ as in the discussion following (3.25)). In the interest of balancing the competing inequalities (3.25) and (3.27) governing the selection of $\sigma$ this author, with the common case $\gamma = 1$ in mind, selects their average value $\sigma = 1/2$.
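The dependence of the conditioning of $C$ on $\sigma$ can be tabulated directly. The Python sketch below compares the computed 2-norm condition number of $C = (1/h)I^{(1)} - \sigma I$ with the bound $\sigma^{-1}\sqrt{\sigma^2 + \pi^2/h^2}$ from (3.26). The entries of $I^{(1)}$ are assumed to be $(-1)^{j-k}/(j-k)$ off the diagonal, and the values $\gamma = 1/2$, $M = 16$, $N = 3$ are illustrative.

```python
import numpy as np

def I1(m):
    """Assumed (2.12): entry (k, j) is 0 if j == k, else (-1)**(j-k) / (j-k)."""
    idx = np.arange(m)
    diff = idx[None, :] - idx[:, None]
    safe = np.where(diff == 0, 1, diff)
    return np.where(diff == 0, 0.0, (-1.0) ** np.abs(diff) / safe)

def cond_C(m, h, sigma):
    """2-norm condition number of C = (1/h) I1 - sigma I, computed from singular values."""
    C = I1(m) / h - sigma * np.eye(m)
    return np.linalg.cond(C, 2)

def cond_bound(h, sigma):
    """Bound (3.26): sqrt(sigma^2 + pi^2/h^2) / sigma."""
    return np.sqrt(sigma ** 2 + (np.pi / h) ** 2) / abs(sigma)

gamma, M, N = 0.5, 16, 3
h = np.pi / np.sqrt(2.0 * gamma * M)            # (3.15) with d = pi/2
rows = [(s, cond_C(M + N + 1, h, s), cond_bound(h, s)) for s in (0.1, 0.5, 0.9)]
```

Each row holds $\sigma$, the computed condition number and the bound. Note that this factor alone grows as $\sigma$ decreases; the exponential factor in (3.27) is what favors small $\sigma$.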

CHAPTER 4

ASSEMBLY OF THE ONE-DIMENSIONAL SYSTEMS

Recall the parabolic partial differential equation:

$$
\begin{aligned}
Pu(x,t) \equiv u_t(x,t) - u_{xx}(x,t) &= g(x,t), & (x,t) &\in D \\
u(x,t) &= 0, & (x,t) &\in \partial D \\
D &= \{(x,t) : 0 < x < 1,\ 0 < t < \infty\},
\end{aligned}
\tag{4.1}
$$

and the associated Sinc-Galerkin approximation of $u$

$$u_{m_x,m_t}(x,t) = \sum_{i=-M_x}^{N_x}\sum_{j=-M_t}^{N_t} u_{ij}\,S_i(x)\,S_j^*(t) . \tag{4.2}$$

The subscripts x and t are used to identify parameters of the discrete system that

are similar in nature. For example, the dimension of the coefficient matrix and the

mesh size h will be subscripted since they depend on the independent variables x

and t.

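The weighted inner product (2.5) applied to (2.4) yields

$$0 = \int_0^\infty\!\!\int_0^1 [u_t(x,t) - u_{xx}(x,t) - g(x,t)]\,S_k(x)\,S_\ell^*(t)\,[\phi'(x)]^{-1/2}[\psi'(t)]^{1/2}\,dx\,dt . \tag{4.3}$$

As in the one-dimensional problems, $u$ is used in (2.4) instead of $u_{m_x,m_t}$ as a matter of convenience. Proceeding as in the one-dimensional problems by performing the necessary integration by parts and applying the quadrature rule (Theorem 2.2) to the result, the following discretization for (4.3) is obtained:

$$
\begin{aligned}
0 = {} & \sum_{j=-\infty}^{\infty}\left\{ \left[\frac{1}{h_t}\,\delta_{\ell j}^{(1)} - \frac{1}{2}\,\delta_{\ell j}^{(0)}\right] u_{kj}\,[\phi'(x_k)]^{-3/2}[\psi'(t_j)]^{1/2} \right\} \\
& + \sum_{i=-\infty}^{\infty}\left\{ \left[\frac{1}{h_x^{2}}\,\delta_{ki}^{(2)} - \frac{1}{4}\,\delta_{ki}^{(0)}\right] [\phi'(x_i)]^{1/2}\,u_{i\ell}\,[\psi'(t_\ell)]^{-1/2} \right\} \\
& + [\phi'(x_k)]^{-3/2}\,g_{k\ell}\,[\psi'(t_\ell)]^{-1/2} .
\end{aligned}
\tag{4.4}
$$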

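Assuming that $u$ satisfies a growth bound of the form

$$|u(x,t)| \le K\,t^{\gamma+1/2}\,e^{-\delta t}\,x^{\alpha+1/2}(1-x)^{\beta+1/2}, \tag{4.5}$$

the infinite sums in (4.4) can be truncated without impingement to the convergence rate of the method. To transform (4.4) into a finite dimensional matrix equation, suppose that $-M_x \le k, i \le N_x$ and $-M_t \le j, \ell \le N_t$. The discrete Sinc-Galerkin system can then be written as follows:

$$
\begin{aligned}
& D[(\phi')^{-3/2}]\,U\,D[(\psi')^{1/2}]\left\{\frac{1}{h_t}I^{(1)} - \frac{1}{2}I^{(0)}\right\}^{T} + \left\{\frac{1}{h_x^{2}}I^{(2)} - \frac{1}{4}I^{(0)}\right\}D[(\phi')^{1/2}]\,U\,D[(\psi')^{-1/2}] \\
& \qquad = -D[(\phi')^{-3/2}]\,G\,D[(\psi')^{-1/2}]
\end{aligned}
\tag{4.6}
$$

where

$$
U = \begin{bmatrix}
u_{-M_x,-M_t} & u_{-M_x,-M_t+1} & \cdots & u_{-M_x,N_t} \\
u_{-M_x+1,-M_t} & u_{-M_x+1,-M_t+1} & \cdots & u_{-M_x+1,N_t} \\
\vdots & \vdots & & \vdots \\
u_{N_x,-M_t} & u_{N_x,-M_t+1} & \cdots & u_{N_x,N_t}
\end{bmatrix} .
$$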

The order of the coefficients, $u_{ij}$, in the first column of $U$ results from fixing $t$ at the first time node, $t_{-M_t}$, and letting $k$ and $i$ assume each value from $-M_x$ to $N_x$ in (4.4). Subsequent columns of $U$ are generated by advancing to the next time node and repeating the process. The order of the elements of $G$ in (4.4) is precisely that of $U$. This particular ordering was precipitated by mimicking the approach used in a standard time-differencing scheme. It should be noted that the Sinc-Galerkin method is not bound to any specific ordering of the grid. Additionally, it should be noted that the concept of stability, inherent in a time-differencing scheme, does not apply to the Sinc-Galerkin method in the standard form of the eigenvalue growth of an iteration matrix. In the present setting the approximate solution $u_{m_x,m_t}$ is computed at all times $t_j$. The stability is “controlled” via the selection of $\sigma$ outlined at the end of Chapter 3.

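To obtain a more convenient form for the discrete system (4.6), multiply (4.6) on the left and right by the diagonal matrices $D[(\phi')]$ and $D[(\psi')]$ respectively and rewrite the matrix $D[(\phi')^{1/2}]$ in the second term on the left of (4.6) as the product $D[(\phi')]\,D[(\phi')^{-1/2}]$. The final form of the discrete system is then

$$
\begin{aligned}
& D[(\phi')^{-1/2}]\,U\,D[(\psi')^{1/2}]\left\{\frac{1}{h_t}I^{(1)} - \frac{1}{2}I^{(0)}\right\}^{T}D[\psi'] \\
& \qquad + D[\phi']\left\{\frac{1}{h_x^{2}}I^{(2)} - \frac{1}{4}I^{(0)}\right\}D[\phi']\,D[(\phi')^{-1/2}]\,U\,D[(\psi')^{1/2}] = -D[(\phi')^{-1/2}]\,G\,D[(\psi')^{1/2}] .
\end{aligned}
\tag{4.7}
$$

One could argue the purported “convenience” of (4.7) over (4.6); however, the dispersal of the derivatives of the conformal maps and associated weights exhibited in (4.7) provides a sense of “symmetry” to the Sinc-Galerkin method. The matrix equation (4.7) can be written as

$$VB^T + AV = F \tag{4.8}$$

where $B$ is defined in (3.22), $A$ is defined in (2.38),

$$V = D[(\phi')^{-1/2}]\,U\,D[(\psi')^{1/2}] \tag{4.9}$$

and

$$F = -D[(\phi')^{-1/2}]\,G\,D[(\psi')^{1/2}] . \tag{4.10}$$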

The solvability of the system in (4.8) can be characterized in terms of the intermixing of the eigenvalues of $A$ with those of $B$. This characterization is a well known result from linear algebra but is revealed in the first method of solution presented for solving the system in (4.8).

Algebraically, (4.8) is equivalent to the system

$$\mathcal{A}\vec{v} \equiv (I \otimes A + B \otimes I)\,\mathrm{co}(V) = \mathrm{co}(F) \tag{4.11}$$

where the tensor product of an $m \times n$ matrix $E$ with a $p \times q$ matrix $G$ is defined by

$$E \otimes G = [e_{ij}G]_{mp \times nq} .$$

The vector $\vec{v} = \mathrm{co}(V)$ is the concatenation of the $m_x \times m_t$ matrix $V$ obtained by successively “stacking” the columns of $V$, one upon another, to obtain an $m_xm_t \times 1$ vector. It is known [8] that the eigenvalues of $\mathcal{A}$ in (4.11) are given by the sums

$$\{\alpha_i + \beta_j\}, \qquad -M_x \le i \le N_x, \quad -M_t \le j \le N_t,$$

so that the system (4.8) has a unique solution if and only if no eigenvalue of $A$ is the negative of an eigenvalue of $B$. Theorem 3.1 implies then that (4.8) is uniquely solvable.
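The equivalence between the matrix equation $VB^T + AV = F$ and its Kronecker (tensor) product form can be sketched in Python. The matrices below are small hypothetical stand-ins, not the Sinc-Galerkin matrices of (2.38) and (3.22): $A$ is a symmetric negative definite tridiagonal matrix and $B$ is built to have eigenvalues $-1, -2, -3$.

```python
import numpy as np

mx, mt = 4, 3
# Hypothetical stand-ins for A (symmetric negative definite) and B (eigenvalues -1, -2, -3).
A = -(2.0 * np.eye(mx) - np.eye(mx, k=1) - np.eye(mx, k=-1))
S = np.array([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])
B = S @ np.diag([-1.0, -2.0, -3.0]) @ np.linalg.inv(S)
F = np.arange(mx * mt, dtype=float).reshape(mx, mt)

def co(X):
    """Stack the columns of X into one long vector (column-major order)."""
    return X.reshape(-1, order="F")

# Kronecker form of V B^T + A V = F: (I kron A + B kron I) co(V) = co(F).
K = np.kron(np.eye(mt), A) + np.kron(B, np.eye(mx))
V = np.linalg.solve(K, co(F)).reshape((mx, mt), order="F")

# Eigenvalues of K should be all pairwise sums alpha_i + beta_j.
sums = np.add.outer(np.linalg.eigvalsh(A), np.linalg.eigvals(B)).ravel()
eigs_K = np.linalg.eigvals(K)
```

Solving the $m_xm_t \times m_xm_t$ system directly is convenient for small cases, but its cost grows quickly, which motivates the more economical procedures described next.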

A direct method of solving the system (4.11) consists of the implementation of any of the decomposition methods available for linear systems. From a computational point of view, a more economical method of solution proceeds as follows: Since $A$ is orthogonally diagonalizable there exists an orthogonal matrix $Q$ so that

$$Q^TAQ = \Lambda_\alpha \tag{4.12}$$

where $\Lambda_\alpha$ is a diagonal matrix containing the eigenvalues $\alpha_i$ of $A$. Define the $m_xm_t \times m_xm_t$ matrix

$$S = I \otimes Q$$

(note $I$ is $m_t \times m_t$). Factoring $S$ and $S^T$ from the matrix $\mathcal{A}$ in (4.11) and premultiplying the result by $S^T$ produces

$$\{I \otimes \Lambda_\alpha + B \otimes I\}(I \otimes Q^T)\,\mathrm{co}(V) = (I \otimes Q^T)\,\mathrm{co}(F) . \tag{4.13}$$

The system in (4.13) may be solved either by a block Gauss elimination method or via a block Gauss-Jordan routine.

A second solution method for the system (4.8) is obtained by a diagonalization procedure. It is the method of solution originally used by Stenger [12] to solve system (4.8). Specifically, assuming that $B$ in (4.8) is diagonalizable then there is an invertible matrix $P$ so that

$$P^{-1}B^TP = \Lambda_\beta \tag{4.14}$$

where $\Lambda_\beta$ is a diagonal matrix containing the eigenvalues $\beta_j$ of $B^T$. If the change of variables

$$W = Q^TVP \tag{4.15}$$

and

$$H = Q^TFP \tag{4.16}$$

is made in (4.8) the result is

$$QWP^{-1}B^T + AQWP^{-1} = QHP^{-1} . \tag{4.17}$$

Multiplying (4.17) on the left by $Q^T$ and right by $P$ yields

$$W\Lambda_\beta + \Lambda_\alpha W = H . \tag{4.18}$$

The solution of (4.18) in component form is

$$w_{ij} = \frac{h_{ij}}{\alpha_i + \beta_j} \tag{4.19}$$

for $-M_x \le i \le N_x$ and $-M_t \le j \le N_t$. Once $W$ is determined, $V$ is recovered via the relationship defined in (4.15) and $U$ follows from (4.9).

Due to Theorem 3.1 the $w_{ij}$ in (4.19) are well defined and the solution $U$ of (4.7) is obtained via the outlined procedure, the only analytic constraint to the method being the assumed diagonalizability of $B$ in (4.14). In the interest of supporting the conjectured diagonalizability of $B$, the examples of the next chapter are all computed via the procedure outlined in (4.14) through (4.19).
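The diagonalization procedure (4.12) and (4.14) through (4.19) can be sketched compactly with NumPy. This is a hedged illustration, not the original implementation: the test matrices are hypothetical stand-ins chosen so that $B$ is diagonalizable with real eigenvalues, and the function name is illustrative.

```python
import numpy as np

def solve_by_diagonalization(A, B, F):
    """Solve V B^T + A V = F with A symmetric and B assumed diagonalizable."""
    alpha, Q = np.linalg.eigh(A)                 # Q^T A Q = diag(alpha), (4.12)
    beta, P = np.linalg.eig(B.T)                 # P^{-1} B^T P = diag(beta), (4.14)
    H = Q.T @ F @ P                              # (4.16)
    W = H / (alpha[:, None] + beta[None, :])     # componentwise division, (4.19)
    V = Q @ W @ np.linalg.inv(P)                 # invert (4.15): W = Q^T V P
    return V.real                                # imaginary parts vanish up to rounding for real data

# Hypothetical stand-ins: A negative definite tridiagonal, B with eigenvalues -1, -2, -3.
A = -(2.0 * np.eye(4) - np.eye(4, k=1) - np.eye(4, k=-1))
S = np.array([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])
B = S @ np.diag([-1.0, -2.0, -3.0]) @ np.linalg.inv(S)
F = np.arange(12, dtype=float).reshape(4, 3)

V = solve_by_diagonalization(A, B, F)
residual = np.max(np.abs(V @ B.T + A @ V - F))
```

The division in (4.19) is safe here because every $\alpha_i$ and every $\beta_j$ is negative, mirroring the role of Theorem 3.1. The cost is dominated by two small eigenvalue problems instead of one large linear system.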

To motivate the conjectured diagonalization of $B$ consider the following intuitive discussion: The matrix $hB$ is the product of a positive diagonal matrix ($D(\psi')$) and a normal matrix ($I^{(1)} - \frac{h}{2}I$). The matrix $D(\psi')\,I^{(1)}$ may be written as $D(\sqrt{\psi'})\,[D(\sqrt{\psi'})\,I^{(1)}\,D(\sqrt{\psi'})]\,D(1/\sqrt{\psi'})$ hence the eigenvalues of $D(\psi')\,I^{(1)}$ are the same as the eigenvalues of the normal matrix $D(\sqrt{\psi'})\,I^{(1)}\,D(\sqrt{\psi'})$. As an $O(h)$ perturbation of a diagonalizable matrix, it seems reasonable that for $h$ sufficiently small, $hB$ and hence $B$ is diagonalizable.

An alternative procedure, based on no diagonalizability assumptions, consists of replacing (4.14) by the Schur decomposition of $B$ [5]. That is, replace (4.14) by

$$B^T = ZTZ^T \tag{4.20}$$

where $Z$ is orthogonal and $T$ is upper triangular. The discrete system (4.8) is now equivalent to

$$VZT + AVZ = FZ . \tag{4.21}$$

In (4.21) use the diagonalization of $A$ in (4.12) to transform (4.21) to

$$WT + \Lambda_\alpha W = Q^TFZ \equiv M \tag{4.22}$$

where

$$W = Q^TVZ .$$

The components $w_{ij}$ are given recursively by

$$\sum_{p=1}^{n} w_{ip}\,t_{pj} + \alpha_i\,w_{ij} = m_{ij} \tag{4.23}$$

where, for convenience, it is assumed that all matrices are $n \times n$ and indexed from 1 to $n$. Also $t_{pj} = 0$ for all $p > j$. Notice that (4.23) yields the same solution as the diagonalization procedure beginning with (4.14) since (4.8) has a unique solution.
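The column-by-column recursion implied by (4.23) can be sketched as follows. To keep the example free of any particular Schur routine, the factors $Z$ and $T$ are constructed by hand (a hypothetical orthogonal $Z$ from a QR factorization and a real upper triangular $T$) and $B^T$ is then defined as $ZTZ^T$. For a real $B$ with complex eigenvalues the triangular factor would be complex or quasi-triangular, which this sketch does not cover.

```python
import numpy as np

def solve_triangular_form(alpha, T, Mmat):
    """Solve W T + diag(alpha) W = Mmat column by column, T upper triangular, as in (4.23)."""
    n_rows, n_cols = Mmat.shape
    W = np.zeros((n_rows, n_cols))
    for j in range(n_cols):
        partial = W[:, :j] @ T[:j, j]            # sum over p < j of w_ip * t_pj
        W[:, j] = (Mmat[:, j] - partial) / (T[j, j] + alpha)
    return W

def solve_by_schur_form(A, Z, T, F):
    """Solve V B^T + A V = F given B^T = Z T Z^T (Z orthogonal, T upper triangular)."""
    alpha, Q = np.linalg.eigh(A)                 # (4.12)
    Mmat = Q.T @ F @ Z                           # right-hand side of (4.22)
    W = solve_triangular_form(alpha, T, Mmat)
    return Q @ W @ Z.T                           # invert W = Q^T V Z

# Hypothetical data: Z from a QR factorization, T with negative diagonal.
Z, _ = np.linalg.qr(np.array([[2.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 4.0]]))
T = np.array([[-1.0, 0.5, 0.2], [0.0, -2.0, 0.3], [0.0, 0.0, -3.0]])
Bt = Z @ T @ Z.T                                 # plays the role of B^T in (4.20)
A = -(2.0 * np.eye(4) - np.eye(4, k=1) - np.eye(4, k=-1))
F = np.arange(12, dtype=float).reshape(4, 3)

V = solve_by_schur_form(A, Z, T, F)
residual = np.max(np.abs(V @ Bt + A @ V - F))
```

Each column of $W$ depends only on the columns to its left, so the divisors are $t_{jj} + \alpha_i$, the same sums of eigenvalues that appear in (4.19).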

CHAPTER 5

NUMERICAL IMPLEMENTATION

The Sinc-Galerkin method for approximating the solution of the parabolic problem (4.1) was tested on several examples with known solutions. The numerical results for seven distinct problems are presented here. Problem dependent parameters are identified and the results for each problem are listed in a tabular format which reveals the exponential rate of convergence of the method. Example 1, whose solution is analytic, is included to compare with Examples 2 and 3 which have singular solutions. The latter two examples converge at the same or a faster rate than that of Example 1. As emphasized in Chapters 2 and 3, it is the Lipschitz character of the solution that governs the rate of convergence of the Sinc-Galerkin method. Indeed, Examples 2, 3 and 4 demonstrate the method’s ability to approximate singular solutions whether the singularity is logarithmic or algebraic (in space and/or time) or both. Examples 5 and 6 are taken from the literature. The last two examples demonstrate a technique for transforming a parabolic problem with non-zero boundary conditions to a problem in the form of (4.1).

Recall that the approximate solution of (4.1) is defined by

$$u_{m_x,m_t}(x,t) = \sum_{i=-M_x}^{N_x}\sum_{j=-M_t}^{N_t} u_{ij}\,S_i(x)\,S_j^*(t) \tag{5.1}$$

where the basis elements $S_i(x)$ and $S_j^*(t)$ are defined in (2.9) with $\phi$ replaced by (2.28) and (3.2), respectively. The coefficients, $\{u_{ij}\}$, are determined by solving the discrete system

$$VB^T + AV = F \tag{5.2}$$

for $V$ then recovering the $u_{ij}$ from the $v_{ij}$ by way of the relationship (4.9). The matrices $V$ and $F$ are of dimension $m_x \times m_t$ while $B^T$ is $m_t \times m_t$ and $A$ is $m_x \times m_x$. The technique used to solve (5.2) is outlined in Chapter 4. Based on the discussion following (4.19) the solution technique is based on the diagonalization procedure in (4.14)-(4.19).

The stepsizes $h_x$ and $h_t$ and limits of summation $M_x$, $N_x$, $M_t$ and $N_t$ are selected so that the errors in each coordinate direction are asymptotically balanced. For solutions $u(\cdot,t)$ analytic in the right half-plane ($D_W$ with $d = \pi/2$) the contour integral error $I_u$ in (3.9) is $O(\exp(-\pi^2/(2h_t)))$. The contour integral error for solutions $u(x,\cdot)$ analytic in the disc ($D_E$ with $d = \pi/2$) is $O(\exp(-\pi^2/(2h_x)))$. Consequently the contour integral errors are asymptotically balanced provided

$$h_t = h_x . \tag{5.3}$$

The conditions (2.36) and (3.25) translate to the bound

$$|u(x,t)| \le K\,t^{\gamma+1/2}\,e^{-\delta t}\,x^{\alpha+1/2}(1-x)^{\beta+1/2} \tag{5.4}$$

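on the solution $u$ of (4.1). If the selections

$$h = \left(\frac{\pi d}{\alpha M_x}\right)^{1/2} = \frac{\pi}{(2\alpha M_x)^{1/2}}, \tag{5.5}$$

$$N_x = \left[\!\left| \frac{\alpha}{\beta}M_x + 1 \right|\!\right], \tag{5.6}$$

$$M_t = \left[\!\left| \frac{\alpha}{\gamma}M_x + 1 \right|\!\right] \tag{5.7}$$

and

$$N_t = \left[\!\left| \frac{1}{h}\ln\left(\frac{\gamma}{\delta}M_th\right) + 1 \right|\!\right] \tag{5.8}$$

are made, then the errors incurred by truncating the infinite sums are asymptotically balanced with those arising from the contour integrals. The balance is maintained whenever $\frac{\alpha}{\beta}M_x$ and $\frac{\alpha}{\gamma}M_x$ are integers. In practice, the addition of 1 in (5.6) and/or (5.7) is required only if $\frac{\alpha}{\beta}M_x$ and/or $\frac{\alpha}{\gamma}M_x$ is not an integer. The six terms contributing to the error all have the form

$$ACR = \exp\left(-\pi\sqrt{\alpha M_x/2}\right) . \tag{5.9}$$

This asymptotic convergence rate provides a measure of the accuracy of the computed solution (5.1) to the true solution of (4.1).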

The methodology used in each example was to first determine the parameters $\alpha$, $\beta$, $\gamma$ and $\delta$ from (5.4), then for a given $M_x$, determine $h$, $N_x$, $M_t$ and $N_t$ from the relationships (5.5), (5.6), (5.7) and (5.8), respectively. Then the approximation (5.1) is constructed using the technique of Chapter 4.

To demonstrate the exponential convergence rate of the method, approximations were constructed for a sequence of values of $M_x$ on each problem. Each approximation was compared to the true solution at the sinc nodes

$$(x_i, t_j) = \left(\frac{e^{ih}}{e^{ih} + 1},\; e^{jh}\right) \tag{5.10}$$

and the maximum absolute error was recorded. Each table identifies the mesh size used, $h = h_x = h_t$, the lower and upper summation limits $M_x$, $N_x$, $M_t$ and $N_t$ and the maximum error

$$E_u = \max_{\substack{-M_x \le i \le N_x \\ -M_t \le j \le N_t}} |u_{ij} - u(x_i, t_j)|$$

at the sinc nodes (5.10).

In order to gauge the sharpness of the asymptotic convergence rate in (5.9) a subset of the examples include in their display a column corresponding to

$$NAC = E_u / ACR . \tag{5.11}$$

This “numerical asymptotic constant” should, as $M_x$ increases, remain “relatively” constant if the asymptotic convergence rate in (5.9) is an accurate expression for the convergence rate of the method. The displays in Examples 1, 3 and 5 numerically verify this accuracy. These three examples correspond to the cases when $\alpha = 1/2$, $1$ and $3/2$, respectively. In particular, it is of interest to note that although the solution in Example 1 is analytic whereas the solution in Example 3 is singular, the latter converges more rapidly. This corresponds to the fact that the $\alpha$ in (5.9) is larger for Example 3 than it is for Example 1. Similar remarks regarding increased accuracy apply in comparing the results of Example 3 with Example 5. These three examples highlight the remarks from the introduction about the Sinc-Galerkin method’s “permanence” of convergence in the presence of singularities.

All computations were performed in double precision using the ANS FORTRAN-77 compiler on a Honeywell Level 66 computer. The notation $.xy(-d)$ denotes $.xy \times 10^{-d}$ in all examples.

Example 1. If one sets

$$g(x,t) = e^{-t}[x(1-t)(1-x) + 2t]$$

in (4.1), the analytic solution is

$$u(x,t) = te^{-t}x(1-x) . \tag{5.12}$$

From (5.4) one determines that $\alpha = \beta = \gamma = 1/2$ and $\delta = 1$. The numerical results for runs with $M_x = 4, 8, 16$ and $32$ are listed in Table 1. The values of $N_t$ listed in Table 1 were determined by (5.8). The dimension of matrices $V$ and $F$ in (5.2) is $9 \times 6$, $17 \times 11$, $33 \times 20$ and $65 \times 37$ for the given sequence of $M_x$. Had $N_t$ been determined from relation (3.16), the dimension would have been $9 \times 8$, $17 \times 14$, $33 \times 26$ and $65 \times 50$.

Table 1. Numerical results for Example 1.

h         M_x   N_x   M_t   N_t   E_u         NAC
1.5708     4     4     4     1    0.92(-3)    .21(-1)
1.1107     8     8     8     2    0.27(-3)    .23(-1)
0.7854    16    16    16     3    0.84(-4)    .45(-1)
0.5554    32    32    32     4    0.71(-5)    .51(-1)
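The last column of Table 1 can be recomputed from the reported errors. The Python sketch below assumes that the asymptotic convergence rate is $ACR = \exp(-\pi\sqrt{\alpha M_x/2})$ with $\alpha = 1/2$ and divides each reported $E_u$ by it, as in (5.11); the error values are copied from the table, nothing is recomputed from the PDE.

```python
import math

alpha = 0.5
# (M_x, E_u) pairs as reported in Table 1.
table1 = [(4, 0.92e-3), (8, 0.27e-3), (16, 0.84e-4), (32, 0.71e-5)]
reported = [0.021, 0.023, 0.045, 0.051]          # last column of Table 1

def acr(alpha, Mx):
    """Assumed form of the asymptotic convergence rate (5.9)."""
    return math.exp(-math.pi * math.sqrt(alpha * Mx / 2.0))

nac = [Eu / acr(alpha, Mx) for Mx, Eu in table1]
```

The computed ratios agree with the tabulated values to the two digits shown, which supports reading that column as the numerical asymptotic constant.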

Example 2. For this problem, set

$$g(x,t) = t^{1/2}e^{-t}\left[\left(\tfrac{3}{2} - t\right)x(1-x) + 2t\right] .$$

The singular solution of (4.1) is

$$u(x,t) = t^{3/2}e^{-t}x(1-x) .$$

Here, $u$ has an algebraic singularity at $t = 0$ yet the numerical results listed in Table 2 exhibit the anticipated exponential rate of convergence. For this problem, the values of the parameters $\alpha$, $\beta$, $\gamma$ and $\delta$ are $1/2$, $1/2$, $1$ and $1$ respectively.

Table 2. Numerical results for Example 2.

h         M_x   N_x   M_t   N_t   E_u
1.5708     4     4     2     2    0.17(-2)
1.1107     8     8     4     3    0.45(-3)
0.7854    16    16     8     4    0.40(-4)
0.5554    32    32    16     7    0.11(-5)

Example 3. If one sets

$$g(x,t) = t^{1/2}e^{-t}x^{-1/2}(1-x)^{-1/2}\left\{\left(\tfrac{3}{2} - t\right)x^2(1-x)^2 - \tfrac{3}{4}\,t\,(8x^2 - 8x + 1)\right\}$$

then the solution of (4.1) is

$$u(x,t) = t^{3/2}e^{-t}[x(1-x)]^{3/2} .$$

In this problem, $u$ has algebraic singularities at each of $t = 0$, $x = 0$ and $x = 1$. The parameters $\alpha$, $\beta$, $\gamma$ and $\delta$ are readily identified to be $\alpha = \beta = \gamma = 1$ and $\delta = 1$. Table 3 contains the numerical results for each of $M_x = 4, 8, 16$ and $32$. Examining the maximum error for each run shows that in spite of the singularities, the exponential convergence rate is maintained. Note that the convergence rate for this example is greater than it was for Example 1 as per the comments following (5.11).

Table 3. Numerical results for Example 3.

h         M_x   N_x   M_t   N_t   E_u         NAC
1.1107     4     4     4     3    0.36(-3)    .31(-1)
0.7854     8     8     8     5    0.66(-4)    .35(-1)
0.5554    16    16    16     9    0.81(-5)    .58(-1)
0.3927    32    32    32    17    0.22(-6)    .63(-1)

Example 4. If in (4.1)

$$g(x,t) = t^{3/2}e^{-t}\left[\left(\frac{3}{2t} - 1\right)x\ln x - \frac{1}{x}\right]$$

then the singular solution is given by

$$u(x,t) = t^{3/2}e^{-t}x\ln x .$$

Note the algebraic singularity at $t = 0$ and the logarithmic singularity at $x = 0$. The parameters used are $\alpha = \beta = 1/2$ and $\gamma = \delta = 1$. The results for this problem are listed in Table 4.

Table 4. Numerical results for Example 4.

h         M_x   N_x   M_t   N_t   E_u
1.5708     4     4     2     1    0.40(-2)
1.1107     8     8     4     2    0.11(-2)
0.7854    16    16     8     3    0.21(-3)
0.5554    32    32    16     4    0.26(-4)

The results presented in Tables 2 through 4 emphasize the fact that the exponential convergence rate of the Sinc-Galerkin method is maintained in spite of a singular solution. It is the parameters $\alpha$, $\beta$, $\gamma$ and $\delta$ that govern the rate of convergence, not the existence and boundedness of higher order derivatives.

Example 5. In this problem

$$g(x,t) = (1-t)e^{-t}x^2(1-x)^2 - 2te^{-t}(1 - 6x + 6x^2)$$

the analytic solution of (4.1) is then

$$u(x,t) = te^{-t}x^2(1-x)^2 .$$

A slight variation of this problem was considered by Hlaváček [6] and by Fairweather and Saylor [4]. The values of $\alpha$, $\beta$, $\gamma$ and $\delta$ are readily identified in the solution: they are $\alpha = \beta = 3/2$, $\gamma = 1/2$ and $\delta = 1$. The numerical results for $M_x = 2, 4, 8$ and $16$ are listed in Table 5.

Table 5. Numerical results for Example 5.

h         M_x   N_x   M_t   N_t   E_u         NAC
1.2826     2     2     6     2    0.22(-3)    .10(-1)
0.9069     4     4    12     2    0.57(-4)    .13(-1)
0.6413     8     8    24     4    0.50(-5)    .11(-1)
0.4535    16    16    48     6    0.21(-6)    .11(-1)

Example 6. Evans and Abdullah [3] considered the problem

$$
\begin{aligned}
w_t &= w_{xx} - w_x \\
w(0,t) &= w(1,t) = 0 \\
w(x,0) &= \exp(x/2)\,x(1-x) .
\end{aligned}
\tag{5.13}
$$

Two simple transformations are required to obtain a problem in the form of (4.1). The change of variables $v(x,t) = \exp(t/4 - x/2)\,w(x,t)$ yields the problem

$$
\begin{aligned}
v_t - v_{xx} &= 0 \\
v(0,t) &= v(1,t) = 0 \\
v(x,0) &= x(1-x)
\end{aligned}
\tag{5.14}
$$

which has the solution

$$v(x,t) = (8/\pi^3)\sum_{k=0}^{\infty}(2k+1)^{-3}\exp(-(2k+1)^2\pi^2t)\sin[(2k+1)\pi x] .$$

This problem was also considered by Joubert [7] (replace $v$ by $4u$). To get zero boundary conditions set

$$u(x,t) = v(x,t) - e^{-2t}x(1-x) .$$

This yields a problem in the form of (4.1) with

$$g(x,t) = -2e^{-2t}(x^2 - x + 1) .$$

From the Sinc-Galerkin approximation to this problem, one can approximate the solutions of (5.13) and (5.14) by back substitution. For example

$$v_{ij} \approx u_{ij} + \exp(-2t_j)\,x_i(1 - x_i)$$

where $(x_i, t_j)$ are the sinc nodes found in (2.31) and (3.3), respectively.
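The series solution and the back substitution step can be sketched in Python. The truncation of the series at 200 terms and the function names are assumptions made for illustration; the node formulas follow (5.10) with $h = 0.7854$, the mesh size used for the time levels quoted later for this example.

```python
import numpy as np

def v_series(x, t, terms=200):
    """Partial sum of the series solution of (5.14) at a single point (x, t)."""
    n = 2 * np.arange(terms) + 1
    return (8 / np.pi ** 3) * np.sum(np.exp(-(n * np.pi) ** 2 * t) * np.sin(n * np.pi * x) / n ** 3)

def u_exact(x, t):
    """Transformed unknown u = v - exp(-2t) x (1 - x), which vanishes at t = 0."""
    return v_series(x, t) - np.exp(-2 * t) * x * (1 - x)

def recover_v(u_ij, x_i, t_j):
    """Back substitution v_ij = u_ij + exp(-2 t_j) x_i (1 - x_i)."""
    return u_ij + np.exp(-2 * t_j) * x_i * (1 - x_i)

h = 0.7854
idx = np.arange(-2, 3)
x_nodes = np.exp(h * idx) / (1 + np.exp(h * idx))   # spatial sinc nodes
t_nodes = np.exp(h * idx)                           # temporal sinc nodes
```

At $t = 0$ the series reduces to the Fourier sine expansion of $x(1-x)$, so `u_exact` vanishes there, which is what allows the transformed problem to fit the form (4.1).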

The parameters used for the Sinc-Galerkin method on this problem are $\delta = 2$ and $\alpha = \beta = \gamma = 1/2$. The results are listed in Table 6.

Table 6. Numerical results for Example 6.

h         M_x   N_x   M_t   N_t   E_u
1.5708     4     4     4     1    0.14(-1)
1.1107     8     8     8     1    0.22(-2)
0.7854    16    16    16     2    0.29(-3)
0.5554    32    32    32     3    0.29(-4)

A table to illustrate the error distribution at different time levels has been included for the run with $h = 0.7854$. Table 7 shows the maximum absolute errors at $t = 0.2079$, $1.0$ and $4.8105$ for a sample of the $x$ grid points as listed. It should be remembered that the numerical solution (5.1) is actually global and these data are given only as an indication of the actual time levels generated by the Sinc-Galerkin method and the observed error at these time levels. Note the maximum absolute error of 0.29(-3) occurs at $x_0 = 0.5$ and $t_0 = 1$ which is the “center” sinc node in the grid. Also the error is symmetric about $x = 0.5$ at each time level. This may be seen in the last three columns of Table 7.

Table 7. Error for Example 6 at time levels t = 0.2079, 1 and 4.8105 when h = 0.7854.

                        E_u
  i    x_i          t_{-2} = 0.2079    t_0 = 1     t_2 = 4.8105
-16    0.35(-5)     0.39(-5)           0.10(-5)    0.10(-7)
-12    0.81(-4)     0.39(-5)           0.10(-5)    0.10(-7)
 -8    0.18(-2)     0.43(-5)           0.27(-5)    0.51(-6)
 -4    0.41(-1)     0.13(-4)           0.38(-4)    0.11(-4)
  0    0.5          0.94(-4)           0.29(-3)    0.91(-4)
  4    0.958576     0.13(-4)           0.38(-4)    0.11(-4)
  8    0.998136     0.43(-5)           0.27(-5)    0.51(-6)
 12    0.999919     0.39(-5)           0.10(-5)    0.10(-7)
 16    0.999996     0.39(-5)           0.10(-5)    0.10(-7)

Example 7. Consider the problem

$$
\begin{aligned}
w_t &= w_{xx} \\
w(0,t) &= w(1,t) = 0 \\
w(x,0) &= \sin\pi x .
\end{aligned}
\tag{5.15}
$$

To transform (5.15) to a problem in the form of (4.1), set

$$u(x,t) = w(x,t) - e^{-4t}\sin\pi x \tag{5.16}$$

yielding the problem

$$
\begin{aligned}
u_t - u_{xx} &= (4 - \pi^2)e^{-4t}\sin\pi x \\
u(0,t) &= u(1,t) = u(x,0) = 0
\end{aligned}
\tag{5.17}
$$

which has the solution

$$u(x,t) = \left(e^{-\pi^2t} - e^{-4t}\right)\sin\pi x .$$

The parameters $\alpha$, $\beta$ and $\gamma$ can be obtained by examining the Taylor series of $u$ expanded about $t = 0$, $x = 0$ and $x = 1$, respectively. One arrives at the values $\alpha = \beta = \gamma = 1$ and $\delta = 4$. The numerical results are listed in Table 8.

Table 8. Numerical results for Example 7.

h         M_x   N_x   M_t   N_t   E_u
1.5708     4     4     4     0    0.10(-1)
1.1107     8     8     8     1    0.33(-2)
0.7854    16    16    16     1    0.23(-3)
0.5554    32    32    32     2    0.13(-4)

REFERENCES CITED

[1] J.F. Botha and G.F. Pinder, Fundamental Concepts in the Numerical Solution of Differential Equations, Wiley, New York, 1983.

[2] K. Bowers, J. Lund and K. McArthur, “The sinc method in multiple space dimensions: Model problems,” to appear in Numerische Mathematik.

[3] D.J. Evans and A.R. Abdullah, “Boundary value techniques for finite element solutions of the diffusion-convection equation,” International Journal for Numerical Methods in Engineering, 23, 1145-1159 (1986).

[4] G. Fairweather and A.V. Saylor, “On the application of extrapolation, deferred correction and defect correction to discrete-time Galerkin methods for parabolic problems,” IMA Journal of Numerical Analysis, 3, 173-192 (1983).

[5] G. Golub and C. Van Loan, Matrix Computations, Johns Hopkins University Press, Baltimore, 1983.

[6] I. Hlaváček, “On a semi-variational method for parabolic equations,” Institute for Applied Mathematics, 17, 327-351 (1972).

[7] G.R. Joubert, “Explicit difference approximations of the one-dimensional diffusion equation, using a smoothing technique,” Numerische Mathematik, 17, 409-430 (1971).

[8] P. Lancaster and M. Tismenetsky, The Theory of Matrices, Academic Press, Orlando, Florida, 1985.

[9] J. Lund, “Symmetrization of the Sinc-Galerkin method for boundary value problems,” Mathematics of Computation, 47, 571-588 (1986).

[10] W.L. Seward, G. Fairweather and R.L. Johnson, “A survey of higher-order methods for the numerical integration of semidiscrete parabolic problems,” IMA Journal of Numerical Analysis, 4, 375-425 (1984).

[11] F. Stenger, “Approximations via Whittaker’s cardinal function,” Journal of Approximation Theory, 17, 222-240 (1976).

[12] F. Stenger, “A ‘Sinc-Galerkin’ method of solution of boundary value problems,” Mathematics of Computation, 33, 85-109 (1979).

[13] F. Stenger, “Numerical methods based on Whittaker cardinal, or sinc functions,” SIAM Review, 23, 165-224 (1981).

[14] F. Stenger, “Sinc methods of approximate solution of partial differential equations,” IMACS Proceedings on Advances in Computer Methods for Partial Differential Equations, 244-251 (1984).

[15] J.E. Yu and T.R. Hsu, “On the solution of diffusion-convection equations by the space-time finite element method,” International Journal for Numerical Methods in Engineering, 23, 737-750 (1986).

[16] E.T. Whittaker, “On the functions which are represented by the expansions of the interpolation theory,” Proceedings of the Royal Society of Edinburgh, 35, 181-194 (1915).
