MIT Department of Brain and Cognitive Sciences Instructor: Professor Sebastian Seung

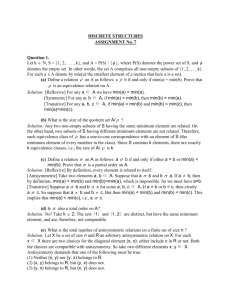

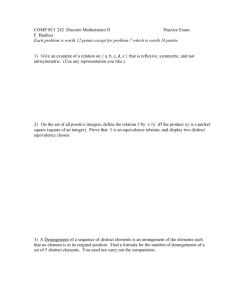

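9.641J, Spring 2005 - Introduction to Neural Networks

Antisymmetric networks

Antisymmetry

• Idealization of the interaction between an excitatory and an inhibitory neuron

Olfactory bulb

• Dendrodendritic connections between mitral (excitatory) and granule (inhibitory) cells
• 80% are reciprocal, side-by-side pairs

Linear antisymmetric network

$$\dot{x} = Ax, \qquad A = -A^T$$

• Superposition of oscillatory components
• $x^T A^n x$ is conserved for even $n$

Eigenvalues of a real antisymmetric matrix are either zero or pure imaginary.

Simple harmonic oscillator

• Antisymmetric after rescaling

$$\dot{q} = \frac{p}{m}, \qquad \dot{p} = -kq$$

$$\dot{x}_1 = -\omega x_2, \qquad \dot{x}_2 = \omega x_1$$

Antisymmetric network

$$\dot{x} = f(b + Ax), \qquad A = -A^T$$

• The bias $b$ can be removed by a shift, if $A$ is nonsingular
• The decay term is omitted because it's symmetric

Conservation law

$$H = 1^T F(Ax) = \sum_i F\big((Ax)_i\big)$$

• $H$ is a constant of motion
• $F$ is an antiderivative of $f$ ($F' = f$), applied elementwise, and $1$ is the vector of ones

$Ax$ is perpendicular to $x$

$$x^T A x = \sum_{ij} x_i A_{ij} x_j = 0$$

[Figure: the vectors $x$ and $Ax$]

Velocity is perpendicular to the gradient of $H$

$$H = 1^T F(Ax)$$

$$\frac{\partial H}{\partial x} = A^T f(Ax) = A^T \dot{x}$$

$$\dot{H} = \dot{x}^T A^T \dot{x} = 0$$

[Figure: the vectors $\partial H / \partial x$ and $dx/dt$]

Cowan (1972)

Action principle

• Stationary for trajectories that satisfy $x(0) = x(T)$

$$S = \int_0^T dt \left[ \frac{1}{2} \dot{x}^T A x - 1^T F(Ax) \right]$$

• The Euler-Lagrange equation of $S$ is $A\dot{x} = A f(Ax)$, which reduces to $\dot{x} = f(Ax)$ when $A$ is nonsingular

Effect of decay term

• Introduction of dissipation

$$\dot{x} + x = f(Ax), \qquad A = -A^T$$

$$L(x) = 1^T F(Ax) + 1^T \bar{F}(x)$$

• $\bar{F}$ is an antiderivative of $f^{-1}$ ($\bar{F}' = f^{-1}$), applied elementwise

Lyapunov function

$$\frac{\partial L}{\partial x} = A^T f(Ax) + f^{-1}(x) = -A\dot{x} - Ax + f^{-1}(x) = -A\dot{x} - f^{-1}(\dot{x} + x) + f^{-1}(x)$$

$$\dot{L} = \dot{x}^T \frac{\partial L}{\partial x} = -\dot{x}^T A \dot{x} - \dot{x}^T \left[ f^{-1}(\dot{x} + x) - f^{-1}(x) \right] = -\dot{x}^T \left[ f^{-1}(\dot{x} + x) - f^{-1}(x) \right] \le 0$$

• The first term vanishes by antisymmetry; the inequality holds when $f$ is monotonically increasing, so that $f^{-1}$ is increasing as well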
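The conservation law and the Lyapunov argument can be checked numerically. The following is a minimal sketch, not part of the lecture. It assumes $f = \tanh$, so that $F(u) = \log\cosh u$ and $\bar{F}(x) = x \tanh^{-1} x + \tfrac{1}{2}\log(1 - x^2)$. The 4×4 matrix `A`, the initial state `x0`, the step size, and the fourth-order Runge-Kutta integrator are all illustrative choices, and the helper names (`velocity`, `rk4_step`, `energy_trace`, `quad_form`) are hypothetical.

```python
import math

# Hypothetical antisymmetric weight matrix (A = -A^T), chosen for illustration.
A = [
    [0.0, 1.0, -0.5, 0.3],
    [-1.0, 0.0, 0.8, -0.6],
    [0.5, -0.8, 0.0, 1.2],
    [-0.3, 0.6, -1.2, 0.0],
]


def matvec(M, x):
    """Matrix-vector product M x."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def quad_form(x):
    """x^T A x, which vanishes for antisymmetric A."""
    return sum(xi * yi for xi, yi in zip(x, matvec(A, x)))


def F(u):
    """Antiderivative of the assumed activation f = tanh."""
    return math.log(math.cosh(u))


def F_bar(x):
    """Antiderivative of f^{-1} = atanh, defined for |x| < 1."""
    return x * math.atanh(x) + 0.5 * math.log(1.0 - x * x)


def H(x):
    """Conserved quantity H = 1^T F(Ax) of the network without decay."""
    return sum(F(u) for u in matvec(A, x))


def L(x):
    """Lyapunov function L = 1^T F(Ax) + 1^T F_bar(x) of the network with decay."""
    return H(x) + sum(F_bar(xi) for xi in x)


def velocity(x, decay):
    """dx/dt = f(Ax) without decay, or f(Ax) - x with decay."""
    fx = [math.tanh(u) for u in matvec(A, x)]
    return [fi - xi if decay else fi for fi, xi in zip(fx, x)]


def rk4_step(x, dt, decay):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, scale):
        return [xi + scale * ki for xi, ki in zip(x, k)]

    k1 = velocity(x, decay)
    k2 = velocity(shifted(k1, dt / 2), decay)
    k3 = velocity(shifted(k2, dt / 2), decay)
    k4 = velocity(shifted(k3, dt), decay)
    return [
        xi + dt / 6 * (a + 2 * b + 2 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    ]


def energy_trace(x0, decay, dt=0.01, steps=2000):
    """Record H (no decay) or L (decay) along a simulated trajectory."""
    energy = L if decay else H
    x = list(x0)
    values = [energy(x)]
    for _ in range(steps):
        x = rk4_step(x, dt, decay)
        values.append(energy(x))
    return values


x0 = [0.5, -0.2, 0.1, 0.3]  # illustrative initial state with |x_i| < 1

if __name__ == "__main__":
    h = energy_trace(x0, decay=False)
    l = energy_trace(x0, decay=True)
    print("x^T A x           :", quad_form(x0))
    print("spread of H       :", max(h) - min(h))
    print("L at start and end:", l[0], l[-1])
```

Without decay, the recorded values of `H` should agree up to integration error; with decay, `L` should fall along the trajectory. The initial state is kept inside $(-1, 1)$ because $\bar{F}$ is only defined on the range of $\tanh$.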