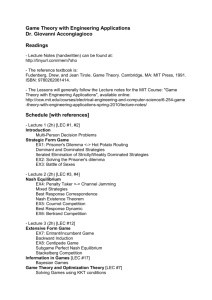


Source Coding for Compression

Types of data compression:

1. Lossless: removes redundancies (reversible)
2. Lossy: removes less important information (irreversible)

Lec 16b.6-1

2/2/01

Lossless “Entropy” Coding, e.g. “Huffman” Coding

Example: 4 possible messages; 2 bits uniquely specify each.

If the message probabilities and codewords are

- $p(A) = 0.5$, $A \triangleq$ “0”
- $p(B) = 0.25$, $B \triangleq$ “11”
- $p(C) = 0.125$, $C \triangleq$ “100”
- $p(D) = 0.125$, $D \triangleq$ “101”

then the codewords form a “comma-less” code.

Example of comma-less message:

11 0 0 101 0 0 0 11 100 ← codeword grouping (unique)

Then the average number of bits per message (“rate”) is

$$R = 0.5 \times 1\ \text{bit} + 0.25 \times 2 + 0.25 \times 3 = 1.75\ \text{bits/message} \geq H$$

Define “entropy” of a message ensemble:

$$H \triangleq -\sum_i p_i \log_2 p_i$$

Define coding efficiency (here $H = 1.75$):

$$\eta_c \triangleq H/R \leq 1$$
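The arithmetic in the four-message example can be checked with a short sketch. It computes the entropy and the rate for the probabilities and codewords given above, then decodes the sample bit stream without any separators. The function names (`entropy`, `rate`, `decode`) are illustrative choices, not part of the lecture.

```python
import math

# Probabilities and codewords taken from the four-message example.
PROBS = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
CODE = {"A": "0", "B": "11", "C": "100", "D": "101"}

def entropy(probs):
    """H = -sum p_i log2 p_i, in bits per message."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def rate(probs, code):
    """Average codeword length R, in bits per message."""
    return sum(probs[m] * len(code[m]) for m in probs)

def decode(bits, code):
    """Decode a comma-less (prefix-free) bit string by greedy matching."""
    inverse = {word: msg for msg, word in code.items()}
    out, word = [], ""
    for b in bits:
        word += b
        if word in inverse:      # no codeword is a prefix of another
            out.append(inverse[word])
            word = ""
    if word:
        raise ValueError("trailing bits do not form a codeword")
    return "".join(out)

H = entropy(PROBS)
R = rate(PROBS, CODE)
efficiency = H / R
# The example message 11 0 0 101 0 0 0 11 100 with the spaces removed.
message = decode("110010100011100", CODE)
```

Because every probability here is a power of 1/2, the codeword lengths equal $-\log_2 p_i$ exactly, so the rate meets the entropy and the efficiency is 1.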

Simple Huffman Example

Simple binary message with p{0} = p{1} = 0.5; if each bit is independent, then the entropy (H) is:

$$H = -2\,(0.5 \log_2 0.5) = 1\ \text{bit/symbol} = R$$

If there is no predictability to the message, then there is no opportunity for further lossless compression.

Lossless Coding Format Options

| MESSAGE | CODED |
|---|---|
| uniform blocks | non-uniform |
| non-uniform | uniform |
| non-uniform | non-uniform |

Run-length Coding

Example:

...1 000 1111111 00 1111 000000000 11...

with run lengths 3, 7, 2, 4, 9.

Run-length coding gives the sequence $n_i$ (…, 3, 7, 2, 4, 9, …). Optionally, Huffman code this sequence.

Note: the opportunity for compression here comes from the tendency for long runs of 0’s or 1’s.

Simple: $p\{n_i;\ i = 1, \ldots, \infty\}$ ⇒ code

Better: $p\{n_i \mid n_{i-1};\ i = 1, \ldots, \infty\}$ ⇒ code, if $n_i$ is correlated with $n_{i-1}$
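A minimal sketch of the run-length step, applied to the bit pattern from the example. It only converts bits to run lengths and back; any entropy coding of the resulting $n_i$ would be a separate stage. The helper names `run_lengths` and `expand` are assumptions made for illustration.

```python
from itertools import groupby

def run_lengths(bits):
    """Return (first_bit, [n_1, n_2, ...]) for a string of '0'/'1' characters."""
    if not bits:
        return None, []
    runs = [len(list(group)) for _, group in groupby(bits)]
    return bits[0], runs

def expand(first_bit, runs):
    """Invert run_lengths: alternate bit values, starting with first_bit."""
    out, bit = [], first_bit
    for n in runs:
        out.append(bit * n)
        bit = "1" if bit == "0" else "0"
    return "".join(out)

# The runs from the example: 000 1111111 00 1111 000000000
bits = "000" + "1111111" + "00" + "1111" + "000000000"
first, runs = run_lengths(bits)
restored = expand(first, runs)
```

Only the first bit value needs to be sent, since runs of a binary stream must alternate.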

Other Popular Codes

Arithmetic codes:

(e.g. see Feb. ’89, IEEE Trans. Comm., 37, 2, pp. 93-97)

One of the best entropy codes because it adapts well to the message, but it involves some computation in real time.

Lempel-Ziv-Welch (LZW) Codes: Deterministically compress digital streams adaptively, reversibly, and efficiently.
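To illustrate what "adaptively" and "reversibly" mean for LZW, here is a textbook-style sketch, not taken from the lecture. It assumes the input is a string whose characters all have code points below 256, and it lets the dictionary grow without bound; practical implementations cap or reset it.

```python
def lzw_compress(text):
    """Return a list of integer codes; the table is built while reading the input."""
    table = {chr(i): i for i in range(256)}   # assumed initial alphabet
    current, codes = "", []
    for ch in text:
        candidate = current + ch
        if candidate in table:
            current = candidate               # keep extending the match
        else:
            codes.append(table[current])
            table[candidate] = len(table)     # learn the new phrase
            current = ch
    if current:
        codes.append(table[current])
    return codes

def lzw_decompress(codes):
    """Rebuild the same table from the codes alone, so no table is transmitted."""
    if not codes:
        return ""
    table = {i: chr(i) for i in range(256)}
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):              # phrase defined by this very step
            entry = previous + previous[0]
        else:
            raise ValueError("invalid LZW code")
        out.append(entry)
        table[len(table)] = previous + entry[0]
        previous = entry
    return "".join(out)

text = "TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(text)
restored = lzw_decompress(codes)
```

The decoder is deterministic because each new table entry depends only on codes already received.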

Information-Lossy Source Codes

Common approaches to lossy coding:

1) Quantization of analog signals

2) Transform signal blocks; quantize the transform coefficients

3) Differential coding: code only derivatives or changes most of the time; periodically reset absolute value

4) In general, reduce redundancy and use predictability; communicate only unpredictable parts, assuming prior message was received correctly

5) Omit signal elements less visible or useful to recipient
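Approach 3 can be sketched as follows, under the assumption that samples are integers and that an absolute value is sent every `reset_every` samples (a hypothetical parameter). As written the scheme is reversible; it becomes lossy only once the differences are themselves coarsely quantized.

```python
def delta_encode(samples, reset_every=8):
    """Emit ('abs', value) at each reset and ('diff', change) otherwise."""
    tokens, previous = [], None
    for i, x in enumerate(samples):
        if i % reset_every == 0:
            tokens.append(("abs", x))         # periodic reset of the absolute value
        else:
            tokens.append(("diff", x - previous))
        previous = x
    return tokens

def delta_decode(tokens):
    """Rebuild the samples by accumulating changes from the last absolute value."""
    out = []
    for kind, value in tokens:
        out.append(value if kind == "abs" else out[-1] + value)
    return out

samples = [100, 101, 103, 103, 102, 104, 107, 109, 110, 110]
tokens = delta_encode(samples, reset_every=4)
restored = delta_decode(tokens)
```

The periodic reset limits how far a single channel error can propagate, since the decoder resynchronizes at the next absolute value.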

Transform Codes - DFT

Discrete Fourier Transform (DFT), e.g.:

$$X(n) = \sum_{k=0}^{N-1} x(k)\, e^{-jn2\pi(k/N)} \qquad [n = 0, 1, \ldots, N-1]$$

Inverse DFT “IDFT”:

$$x(k) = \frac{1}{N} \sum_{n=0}^{N-1} X(n)\, e^{jn2\pi(k/N)}$$

Note: X(n) is complex ↔ 2N #’s

[Figure: basis functions for n = 0, n = 1, and n = 2 over one block]

Sharp edges of window ⇒ “ringing” or “sidelobes” in the reconstructed decoded signal
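The DFT pair and the ringing remark can be illustrated with a direct (slow) evaluation of the two sums. This is a sketch for small N, not an FFT. The test block and the helper `keep_low_frequencies` are assumptions chosen to make the overshoot visible.

```python
import cmath

def dft(x):
    """X(n) = sum_k x(k) exp(-j n 2 pi k / N)."""
    N = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * n * k / N) for k in range(N))
            for n in range(N)]

def idft(X):
    """x(k) = (1/N) sum_n X(n) exp(+j n 2 pi k / N)."""
    N = len(X)
    return [sum(X[n] * cmath.exp(2j * cmath.pi * n * k / N) for n in range(N)) / N
            for k in range(N)]

def keep_low_frequencies(X, keep):
    """Zero every coefficient whose frequency index (folded about N/2) exceeds keep."""
    N = len(X)
    return [c if min(n, N - n) <= keep else 0j for n, c in enumerate(X)]

# A block with a sharp edge: eight ones followed by eight zeros.
block = [1.0] * 8 + [0.0] * 8
coeffs = dft(block)
roundtrip = [v.real for v in idft(coeffs)]
ringing = [v.real for v in idft(keep_low_frequencies(coeffs, 3))]
```

With all coefficients kept the block is recovered. With only the lowest few kept, the reconstruction overshoots above 1 and undershoots below 0 near the edge.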

Example of DCT Image Coding

Say 8 × 8 block: the transform gives 8 × 8 real #’s, including the D.C. term.

Can classify blocks, and assign bits correspondingly.

Can sequence coefficients, stopping when they are too small.

[Figure: coefficient sequence through the 8 × 8 array, marked “may stop here”; contours of typical DCT coefficient magnitudes for image types A to D]

Image Types:

A. Smooth image

B. Horizontal striations

C. Vertical striations

D. Diagonals (utilize correlations)
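One way to "sequence coefficients, stopping when they are too small" is a zigzag scan from the D.C. term outward, as used in JPEG-style coders. The slide does not name the scan order, so the zigzag here is an assumption, as are the function names and the sample coefficient values.

```python
def zigzag_order(n=8):
    """Index pairs (row, col) along anti-diagonals, starting at the D.C. term (0, 0)."""
    order = []
    for d in range(2 * n - 1):
        diagonal = [(r, d - r) for r in range(n) if 0 <= d - r < n]
        # Alternate direction on successive diagonals.
        order.extend(diagonal if d % 2 else reversed(diagonal))
    return order

def truncate_sequence(block, threshold):
    """Scan the block in zigzag order and stop after the last coefficient
    whose magnitude is at least threshold."""
    seq = [block[r][c] for r, c in zigzag_order(len(block))]
    last = max((i for i, v in enumerate(seq) if abs(v) >= threshold), default=-1)
    return seq[:last + 1]

# Hypothetical coefficient block: energy concentrated near the D.C. term.
block = [[0.0] * 8 for _ in range(8)]
block[0][0], block[0][1], block[1][0], block[2][0] = 100.0, -12.0, 5.0, 0.4
kept = truncate_sequence(block, threshold=1.0)
```

For a smooth image most of the 64 coefficients fall below the threshold, so only a short prefix of the sequence needs to be sent.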

Discrete Cosine and Sine Transforms (DCT and DST)

Discrete Cosine Transform (DCT)

[Figure: DCT basis functions over one block]

The DC basis function (n = 1) is an advantage, but the step functions at the ends produce artifacts at block boundaries of reconstructions unless n → ∞

Discrete Sine Transform (DST)

[Figure: DST basis functions over one block]

Lack of a DC term is a disadvantage, but zeros at end often overlap

Lapped Transforms

Lapped Orthogonal Transform = (LOT) (1:1, invertible; orthogonal between blocks)

Reconstructions ring less, but ring outside the quantized block

[Figure: (a) even basis functions and (b) odd basis functions of an optimal LOT for N = 16, L = 32, and ρ = 0.95, shown over the block and the extended block; the first function is the ~DC term]

Lapped Transforms

MLT = Modulated Lapped Transform

[Figure: first basis function for MLT versus t, centered on the central block; zeros, still lower sidelobes]

Ref: Henrique S. Malvar and D.H. Staelin, “The LOT: Transform Coding Without Blocking Effects,” IEEE Trans. on Acous., Speech, and Sign. Proc., 37(4), (1989).

Karhunen-Loève Transform (KLT)

Maximizes energy compaction within blocks for jointly gaussian processes

[Figure: average energy versus coefficient index n, starting at the D.C. term, for the DFT, DCT, DST]

Example:

[Figure: average pixel energy versus index j for the LOT, MLT, KLT transforms; lapped KLT (best)]

Note: For a first order Markov process with high correlation, the KLT is closely approximated by the DCT

Vector Quantization (“VQ”)

Example: consider pairs of samples as vectors, y = [a,b].

[Figure: sample values a, b forming vectors y1, y2; cells in the (a,b) plane for the density p(a,b), including a more general partition]

VQ assigns each cell a unique integer number. Can Huffman code cell numbers.

VQ is better because more probable cells are smaller and well packed.

VQ is n-dimensional (n = 4 to 16 is typical). There is a tradeoff between performance and computation cost.
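The cell-assignment step can be sketched for the two-sample case y = [a, b]. The codebook below is a made-up example; in practice it would be trained so that probable regions get smaller cells. The names `nearest_cell`, `vq_encode`, and `vq_decode` are illustrative.

```python
def nearest_cell(vector, codebook):
    """Index of the codebook entry closest to vector (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda i: sum((v - c) ** 2 for v, c in zip(vector, codebook[i])))

def vq_encode(samples, codebook):
    """Group samples into vectors of the codebook's dimension and emit cell numbers."""
    dim = len(codebook[0])
    return [nearest_cell(samples[i:i + dim], codebook)
            for i in range(0, len(samples) - dim + 1, dim)]

def vq_decode(indices, codebook):
    """Replace each cell number by its representative vector."""
    return [value for i in indices for value in codebook[i]]

# Hypothetical 2-D codebook: entries cluster along a = b, where correlated
# sample pairs are most probable.
codebook = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (0.0, 2.0)]
samples = [0.1, -0.1, 0.9, 1.2, 2.1, 1.8]
indices = vq_encode(samples, codebook)
reconstructed = vq_decode(indices, codebook)
```

The exhaustive search in `nearest_cell` grows with codebook size and dimension, which is one source of the computation cost noted above.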

Reconstruction Errors

When such “block transforms” are truncated (high frequency terms omitted) or quantized, their reconstructions tend to ring. The reconstruction error is the superposition of the truncated (omitted or imperfectly quantized) sinusoids.

[Figure: original f(t) divided by window functions (blocks) ⇒ reconstructed signal from truncated coefficients and quantization errors, both versus t]

Ringing and block-edge errors can be reduced by using orthogonal overlapping tapered transforms (e.g., LOT, ELT, MLT, etc.)

Smoothing with Pseudo-Random Noise (PRN)

Problem: Coarsely quantized images are visually unacceptable

Solution: Add spatially white PRN to image before quantization, and subtract identical PRN from quantized reconstruction; result shows no quantization contours (zero!). PRN must be uniformly distributed, zero mean, with range equal to quantization interval.

[Block diagram: s(x,y) plus PRN(x,y) → Q → channel → Q⁻¹ → minus PRN(x,y) → ŝ(x,y)]
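The add-then-subtract scheme can be tried on a one-dimensional ramp. In this sketch a seeded `random.Random` stands in for the identical PRN available at both ends, and a rounding quantizer stands in for Q followed by Q⁻¹. Both are assumptions for illustration.

```python
import random

def quantize(value, step):
    """Uniform quantizer: nearest multiple of step (models Q followed by Q^-1)."""
    return step * round(value / step)

def dithered_reconstruction(signal, step, seed=0):
    """Add uniform PRN in [-step/2, step/2] before quantizing, subtract it after."""
    rng = random.Random(seed)     # same seed at both ends gives identical PRN
    out = []
    for s in signal:
        prn = rng.uniform(-step / 2, step / 2)
        out.append(quantize(s + prn, step) - prn)
    return out

step = 0.25                                   # quantization interval A
ramp = [i / 200 for i in range(200)]          # slowly varying test signal
plain = [quantize(s, step) for s in ramp]
dithered = dithered_reconstruction(ramp, step)
errors = [d - s for d, s in zip(dithered, ramp)]
```

Without PRN the ramp collapses to a handful of flat levels, which is what appears as contours in an image. With PRN the error stays within half a quantization interval but no longer forms steps that follow the signal.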

Smoothing with Pseudo-Random Noise (PRN)

[Block diagram: s(x,y) plus PRN(x,y) → Q → channel → Q⁻¹ → minus PRN(x,y) → ŝ(x,y)]

[Figure: one-dimensional example with quantization interval A, each panel versus x: s(x) and Q⁻¹[Q(s(x))]; p{prn}, uniform from −A/2 to A/2; s + PRN(x); ŝ(x) = Q[s(x) + PRN(x)] − PRN(x); filtered ŝ(x) = ŝ(x) ∗ h(x)]

Example of Predictive Coding

[Block diagram: at the encoder, a prediction is subtracted from s(t) to form δ; the δ’s are coded and sent over the channel. The encoder also decodes its own δ’s and feeds a predictor (3∆). The decoder decodes the δ’s, adds its own prediction from an identical predictor (3∆), and outputs ŝ(t − 2∆). Signal labels include δ(t − ∆), δ(t − 2∆), and a 2∆ delay.]

∆ = computation time

The predictor can simply predict using derivatives, or can be very sophisticated, e.g. full image motion compensation.
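A minimal closed-loop sketch of the idea, assuming the simplest possible predictor (the previous reconstructed sample) and ignoring the computation delay ∆. The key feature it shares with the diagram is that the encoder predicts from decoded values, so encoder and decoder predictors stay in step. The names and the step size are illustrative.

```python
def predictive_encode(samples, step):
    """Code quantized prediction errors (the deltas) as integers."""
    codes, prediction = [], 0.0
    for s in samples:
        q = round((s - prediction) / step)    # quantized delta
        codes.append(q)
        prediction += q * step                # track what the decoder will reconstruct
    return codes

def predictive_decode(codes, step):
    """Run the same predictor and add each decoded delta."""
    out, prediction = [], 0.0
    for q in codes:
        prediction += q * step
        out.append(prediction)
    return out

step = 0.5
samples = [0.0, 0.7, 1.6, 2.2, 2.4, 2.1, 1.3, 0.2]
codes = predictive_encode(samples, step)
decoded = predictive_decode(codes, step)
```

Because the quantization error is fed back through the encoder's own reconstruction, it does not accumulate from sample to sample.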

Joint Source and Channel Coding

| Source coding | Channel coding |
|---|---|
| high priority data | high degree of protection |
| medium priority | medium protection |
| lowest priority | lowest degree of protection |

[Block diagram: the separately protected streams are combined, sent over the channel, and separated at the receiver]

For example: lowest priority data may be highest spatial (or time) frequency components.

Prefiltering and Postfiltering Problem

[Block diagram: truth s(t) (sound, image, etc.), f(t), noise n1(t), “prefilter” g(t), sampler i(t), channel with noise n2(t), “postfilter” h(t), and “observer response function” O(t). The output is subtracted from the truth as seen through O(t), and the difference is squared and integrated to give the MSE (minimize).]

Given s(t), f(t), i(t), O(t), n1(t), and n2(t) [channel plus receiver plus quantization noise], choose g(t), h(t) to minimize MSE.

Prefiltering and Postfiltering

Typical solution: g(t) is a “Mexican-hat function” (some “sharpening”).

[Figure: g(t) and h(t) versus t]

Interpretation: given S(f), N(f):

[Figure: spectra versus f out to $f_o$: S(f) with $N_2(f)$ + aliasing; S(f) • G(f); and the solution S(f) G(f) • H(f) with net $N_2(f)$ + aliasing]

By boosting the weaker signals relative to the stronger ones prior to adding aliasing and n2(t), better weak-signal (high-frequency) performance follows. Prefilters and postfilters first boost and then attenuate weak signal frequencies.

(Ref: H. Malvar, MIT EECS PhD thesis, 1987)

Analog Communications

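Double Sideband Synchronous Carrier “DSBSC”:

Received signal $= A_c\, s(t) \cos\omega_c t + n(t)$

[Figure: s(t) multiplied by $\cos\omega_c t$; spectrum S(f) of width 2W centered at $f_c$, above a noise floor of density $kT/2 \triangleq N_o$]

$$\overline{n^2(t)} = \frac{kT_R}{2} \cdot 4W$$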

DSBSC Receiver

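[Block diagram: $[A_c s(t) + n_c(t)] \cos\omega_c t - n_s(t) \sin\omega_c t$ → × $\cos\omega_c t$ → y(t) → LPF → $y_{LPF}(t)$; $SNR_{out}$ = ?]

[Figure: signal spectrum sig(f) of width 2W centered at $f_c$, above noise density kT/2]

Let $n(t) \triangleq n_c(t) \cos\omega_c t - n_s(t) \sin\omega_c t$, where $n_c(t)$ and $n_s(t)$ are slowly varying.

So:

$$\overline{n^2(t)} = \left[\overline{n_c^2(t)} + \overline{n_s^2(t)}\right]/2 = \overline{n_c^2} = \overline{n_s^2} = 2N_o \cdot 2W$$

$$y(t) = [A_c s(t) + n_c(t)] \cos^2\omega_c t - n_s(t)\,(\sin\omega_c t)(\cos\omega_c t)$$

where the last term, $n_s(t) \sin\omega_c t \cos\omega_c t = \frac{n_s(t)}{2} \sin 2\omega_c t$, is filtered out by the low-pass filter.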

DSBSC Carrier

$$\cos^2\omega_c t = \frac{1}{2}\,(1 + \cos 2\omega_c t)$$

Therefore $y_{LPF}(t) = \frac{1}{2}\,[A_c s(t) + n_c(t)]$ (low-pass filtered)

$$S_{out}/N_{out} = A_c^2\, \overline{s^2(t)} \,/\, \overline{n_c^2(t)} = [P_c / 2N_oW]\; \overline{s^2(t)}$$

where $\overline{n_c^2(t)} = 4N_oW$ and carrier power $P_c \triangleq A_c^2/2$ (with the message normalized so that its maximum is 1).

$P_c/2N_oW \triangleq$ “CNR”$_{DSBSC}$ = “Carrier-to-Noise Ratio”, which equals $S_{out}/N_{out}$ for $\overline{s^2} = 1$
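The receiver result $y_{LPF}(t) = \frac{1}{2}[A_c s(t) + n_c(t)]$ can be checked numerically. This sketch treats s, $n_c$, and $n_s$ as constant over the averaging window (the "slowly varying" assumption) and models the low-pass filter as an average over a whole number of carrier cycles. The function names and sample values are hypothetical.

```python
import math

def y_lpf(s, n_c, n_s, a_c, samples_per_cycle=16, cycles=8):
    """Mix the received signal with cos(w_c t) and average over whole carrier cycles."""
    n = samples_per_cycle * cycles
    acc = 0.0
    for k in range(n):
        phase = 2 * math.pi * k / samples_per_cycle
        received = (a_c * s + n_c) * math.cos(phase) - n_s * math.sin(phase)
        acc += received * math.cos(phase)     # synchronous detection
    return acc / n                            # averaging removes the 2 w_c terms

def snr_out(p_c, mean_s2, n_o, w):
    """S_out / N_out = [P_c / (2 N_o W)] * mean(s^2)."""
    return p_c * mean_s2 / (2 * n_o * w)

a_c, s, n_c, n_s = 2.0, 0.3, 0.05, -0.2
detected = y_lpf(s, n_c, n_s, a_c)
expected = 0.5 * (a_c * s + n_c)
```

The quadrature noise $n_s$ has no effect on the output, which is why only $\overline{n_c^2}$ appears in the output noise power.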

Single-sideband “SSB” Systems

(Synchronous carrier)

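$$S_{out}/N_{out} = \frac{P_c\, \overline{s^2(t)}}{2N_oW}$$

[Figure: spectrum versus f with sidebands of width W at $-f_c$ and $f_c$ above noise density N; $P_s/2$ watts]

Note: Both signal and noise are halved, so

$$S_{out}/N_{out}\big|_{SSBSC} = S_{out}/N_{out}\big|_{DSBSC}$$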