Types of Communication


Analog: continuous variables with noise ⇒ P{error = 0} = 0 (imperfect)

Digital: decisions, discrete choices, quantized, noise ⇒ P{error} → 0 (usually perfect)

[Block diagram: Message (S1, S2, … or SM) → Modulator ⇒ v(t) → Channel (adds noise and distortion) → Demodulator (hypothesizes H1 … HN, chooses one) → Received message (S1, S2, … or SM)]

M-ary messages, where M can be infinite.

The channel can be radio, optical, acoustic, a memory device (recorder), or other objects of interest as in radar, sonar, lidar, or other scientific observations.

Lec14.10-1

2/6/01

A1

Optimum Demodulator for Binary Messages

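Outcomes for binary messages with a priori probabilities P1 and P2:

| Message | A priori probability | Hypothesis H1 | Hypothesis H2 |
|---|---|---|---|
| S1 | P1 | OK | ERROR |
| S2 | P2 | ERROR | OK |

Demodulator design, e.g. 2-D case:

[Figure: measured v(t) versus t, sampled to give va and vb; the sample space with axes va and vb (and a further axis vc indicated) is divided into regions V1 and V2.]

v ∈ V1 ⇒ "H1"

v ∈ V2 ⇒ "H2"

How to define V1, V2?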

Optimum Demodulator for Binary Messages

Minimize the probability of error:

$$P_{error} \triangleq P_e = P_1 \int_{V_2} p\{v|S_1\}\,dv + P_2 \int_{V_1} p\{v|S_2\}\,dv$$

Replacing the integral over $V_2$ with one over $V_1$:

$$P_e = P_1 + \int_{V_1} \left[ P_2\,p\{v|S_2\} - P_1\,p\{v|S_1\} \right] dv$$

Note: $\int_{V_1} p\{v|S_1\}\,dv + \int_{V_2} p\{v|S_1\}\,dv = 1$

Optimum Demodulator for Binary Messages

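$$P_e = P_1 + \int_{V_1} \left[ P_2\,p\{v|S_2\} - P_1\,p\{v|S_1\} \right] dv$$

To minimize $P_{error}$, choose $V_1$ as the set of all $v$ for which $P_1\,p\{v|S_1\} > P_2\,p\{v|S_2\}$.

This is a very general solution [i.e., choose the maximum a posteriori probability ("MAP" estimate)].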
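The MAP rule above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the function names `map_decide` and `gaussian_pdf` are hypothetical, and the Gaussian likelihoods and numeric values are assumed purely for the example.

```python
import math

def gaussian_pdf(mean, variance):
    """Return a function v -> Gaussian density with the given mean and variance."""
    def pdf(v):
        return math.exp(-(v - mean) ** 2 / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)
    return pdf

def map_decide(v, p_v_given_s1, p_v_given_s2, P1, P2):
    """Return 1 (choose H1) if P1*p{v|S1} > P2*p{v|S2}, otherwise 2 (choose H2)."""
    return 1 if P1 * p_v_given_s1(v) > P2 * p_v_given_s2(v) else 2

# Assumed example: S1 = 1 volt, S2 = 0 volts, noise variance 0.25.
p1 = gaussian_pdf(1.0, 0.25)
p2 = gaussian_pdf(0.0, 0.25)
print(map_decide(0.8, p1, p2, 0.5, 0.5))    # v nearer S1, equal priors
print(map_decide(0.2, p1, p2, 0.5, 0.5))    # v nearer S2, equal priors
print(map_decide(0.4, p1, p2, 0.99, 0.01))  # a strong prior for S1 shifts the decision
```

The likelihoods are passed in as functions so the same comparison applies to any noise model, in keeping with the generality of the rule.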


Example: Binary Scalar Signal Case

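$S_1 \triangleq A$ volts, $S_2 \triangleq 0$ volts, with additive Gaussian noise of variance $\sigma_n^2 \triangleq N$.

$$\therefore\ p\{v|S_1\} = \frac{1}{\sqrt{2\pi N}}\, e^{-(v-A)^2/2N}, \qquad p\{v|S_2\} = \frac{1}{\sqrt{2\pi N}}\, e^{-v^2/2N}$$

[Figure: p{v|S2} and p{v|S1} versus v, Gaussian curves centered at v = 0 and v = A. If P1 = P2, the decision threshold is at v = A/2.]

[Figure: P2 p{v|S2} and P1 p{v|S1} versus v for P1 > P2. The threshold shifts from A/2 toward S2 = 0, so the a priori information biases the choice by enlarging the region assigned to H1; H2 is chosen only below the threshold.]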

Rule For Defining V1 : (Binary Scalar Case)

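Choose $V_1$ as the set of all $v$ for which $P_1\,p\{v|S_1\} > P_2\,p\{v|S_2\}$ (binary case).

"Likelihood ratio":

$$\Lambda \triangleq \frac{p\{v|S_1\}}{p\{v|S_2\}} > \frac{P_2}{P_1} \ \Rightarrow\ V_1$$

or (equivalently)

$$\ln \Lambda > \ln(P_2/P_1) \ \Rightarrow\ V_1$$

For additive Gaussian noise, where $p\{v|S_2\} = \frac{1}{\sqrt{2\pi N}}\, e^{-v^2/2N}$,

$$\ln \Lambda = -\frac{(v-A)^2}{2N} + \frac{v^2}{2N} = \frac{2vA - A^2}{2N} \overset{?}{>} \ln(P_2/P_1)$$

$$\therefore\ \text{choose } V_1 \text{ if } v > \frac{A^2 + 2N \ln(P_2/P_1)}{2A}, \quad \text{or} \quad v > \frac{A}{2} + \underbrace{\frac{N}{A} \ln(P_2/P_1)}_{\text{bias}}$$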
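As a quick numerical check of the scalar threshold rule, the following sketch evaluates $A/2 + (N/A)\ln(P_2/P_1)$ and confirms that the log likelihood ratio at that threshold equals $\ln(P_2/P_1)$. The function names and the values of A, N, P1, and P2 are assumptions chosen only for illustration.

```python
import math

def scalar_threshold(A, N, P1, P2):
    """Value of v above which H1 is chosen: A/2 + (N/A) ln(P2/P1)."""
    return A / 2.0 + (N / A) * math.log(P2 / P1)

def log_likelihood_ratio(v, A, N):
    """ln(Lambda) = (2vA - A^2) / 2N for S1 = A, S2 = 0 in Gaussian noise of variance N."""
    return (2.0 * v * A - A * A) / (2.0 * N)

A, N = 2.0, 0.5                                  # assumed signal level and noise variance
t_equal = scalar_threshold(A, N, 0.5, 0.5)       # equal priors: threshold at A/2
t_biased = scalar_threshold(A, N, 0.8, 0.2)      # P1 > P2: threshold moves below A/2
print(t_equal, t_biased)
print(log_likelihood_ratio(t_biased, A, N), math.log(0.2 / 0.8))
```

With equal priors the bias term vanishes; with P1 > P2 the logarithm is negative and the threshold drops, so more of the v axis is assigned to H1.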

Binary Vector Signal Case

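For better performance, use multiple independent samples:

[Figure: v(t) versus t, sampled at times 1, 2, …, m, shown with the candidate signals S1 and S2.]

$$\Lambda \triangleq \frac{p\{v|S_1\}}{p\{v|S_2\}} \overset{?}{>} \frac{P_2}{P_1}$$

Here $p\{v_1, v_2, \ldots, v_m|S_1\} = \prod_{i=1}^{m} p\{v_i|S_1\}$ (independent noise samples), where

$$p\{v_i|S_1\} = \frac{1}{\sqrt{2\pi N}}\, e^{-(v_i - S_{1i})^2/2N}$$

so that

$$p\{v|S_1\} = \frac{1}{\left(\sqrt{2\pi N}\right)^m}\, e^{-\sum_{i=1}^{m} (v_i - S_{1i})^2/2N}$$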

Binary Vector Signal Case

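$$p\{v|S_1\} = \frac{1}{\left(\sqrt{2\pi N}\right)^m}\, e^{-\sum_{i=1}^{m} (v_i - S_{1i})^2/2N}$$

Thus the test becomes:

$$\Lambda \triangleq \frac{p\{v|S_1\}}{p\{v|S_2\}} \overset{?}{>} \frac{P_2}{P_1}$$

$$\ln \Lambda = \frac{1}{2N} \left[ \sum_{i=1}^{m} (v_i - S_{2i})^2 - \sum_{i=1}^{m} (v_i - S_{1i})^2 \right] \overset{?}{>} \ln \frac{P_2}{P_1}$$

But $|v - S_2|^2 - |v - S_1|^2 = -2\,v \cdot S_2 + 2\,v \cdot S_1 + S_2 \cdot S_2 - S_1 \cdot S_1$.

Therefore $v \in V_1$ iff

$$v \cdot (S_1 - S_2) > \frac{S_1 \cdot S_1 - S_2 \cdot S_2}{2} + N \ln\left(\frac{P_2}{P_1}\right)$$

The first term on the right is a bias that is 0 if the energies are equal, E1 = E2; the second is a bias that is 0 if P2 = P1.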

Binary Vector Signal Case

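$v \in V_1$ iff

$$v \cdot (S_1 - S_2) > \frac{S_1 \cdot S_1 - S_2 \cdot S_2}{2} + N \ln\left(\frac{P_2}{P_1}\right)$$

[Block diagram: v is multiplied by S1 and summed over i = 1 … m in one branch, and multiplied by S2 and summed over i = 1 … m in the other; the two branch outputs enter with + and − signs, and a decision operator chooses H1 or H2.]

Multiple hypothesis generalization:

Choose $H_i$ if $f_i \triangleq v \cdot S_i - \dfrac{S_i \cdot S_i}{2} + N \ln P_i >$ all $f_{j \neq i}$.

This "matched filter" receiver minimizes $P_{error}$.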
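The multiple-hypothesis rule can be sketched directly from the definition of $f_i$. The code below is an illustrative sketch only: `matched_filter_decide` and `dot` are hypothetical helper names, the signal vectors and priors are made-up values, and indices are 0-based (index 0 corresponds to H1).

```python
import math

def dot(a, b):
    """Dot product of two equal-length sample vectors."""
    return sum(x * y for x, y in zip(a, b))

def matched_filter_decide(v, signals, priors, N):
    """Return the index i maximizing f_i = v.S_i - S_i.S_i/2 + N ln P_i."""
    scores = [dot(v, s) - dot(s, s) / 2.0 + N * math.log(p)
              for s, p in zip(signals, priors)]
    return max(range(len(scores)), key=scores.__getitem__)

# Assumed example: two antipodal 3-sample signals, equal priors, noise variance 0.5.
signals = [(1.0, 1.0, 1.0), (-1.0, -1.0, -1.0)]
priors = [0.5, 0.5]
print(matched_filter_decide((0.9, -0.2, 1.3), signals, priors, N=0.5))
print(matched_filter_decide((-0.5, -1.0, 0.1), signals, priors, N=0.5))
```

For two signals, comparing $f_1$ with $f_2$ reduces to the binary test $v \cdot (S_1 - S_2) > (S_1 \cdot S_1 - S_2 \cdot S_2)/2 + N \ln(P_2/P_1)$.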

Graphical Representation of Received Signals

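3-D case:

[Figure: signal vectors S1 and S2 drawn from the origin 0 in a three-dimensional sample space, with noise n around each and decision regions V1 and V2; inset: S1(t) versus t with its samples S11, S12, S13.]

$$\text{Average energy} = \sum_{i=1}^{2} |S_i|^2 P_i$$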

Design of Signals Si

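E.g. consider $S_1 = -S_2$ (signals at +1 and −1) vs. $S_1 = +2$, $S_2 = 0$. The average energy $\overline{|S|^2} = E$ is 1 in the first case and 2 in the second (for $P_1 = P_2$).

E.g. 2-D space ($S_1 = S_{11}, S_{12}$) for $S_1, S_2, S_3, S_4$:

[Figure: signals S1 … S4 around the origin 0 with noise n, decision boundaries, and distances labeled a and b.]

3-D space ($S_1 = S_{11}, S_{12}, S_{13}$): better b/a ratio.

[Figure: signals S1 … S4 in three dimensions with distances labeled a and b.]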
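The one-dimensional comparison above (±1 versus +2 and 0) can be checked numerically. In this sketch the helper names `average_energy` and `separation` are hypothetical; the signal values are the ones quoted on the slide, with equal priors assumed.

```python
def average_energy(signals, priors):
    """Sum over i of P_i * |S_i|^2."""
    return sum(p * sum(x * x for x in s) for s, p in zip(signals, priors))

def separation(s1, s2):
    """Euclidean distance |S1 - S2| between two signal vectors."""
    return sum((a - b) ** 2 for a, b in zip(s1, s2)) ** 0.5

priors = [0.5, 0.5]
antipodal = [(1.0,), (-1.0,)]   # S1 = -S2
on_off = [(2.0,), (0.0,)]       # S1 = +2, S2 = 0

print(separation(*antipodal), average_energy(antipodal, priors))
print(separation(*on_off), average_energy(on_off, priors))
```

Both pairs have the same separation, and hence the same error probability in Gaussian noise with equal priors, but the antipodal pair achieves it with half the average energy.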

Design of Signals Si

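2-D space: $S_1, \ldots, S_{16}$ (16-ary signals)

[Figure: 16 signal points in the (Si1, Si2) plane, or arranged by magnitude/phase, vs. an arrangement on equilateral triangles (one figure label reads "19").]

Equilateral-triangle arrangement: slightly lower average signal energy for the same p{error}.

n-dimensional sphere packing optimization is unsolved.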

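Calculation of $p\{error\} \triangleq P_e$:

Binary case: For additive Gaussian noise, the optimum is

$$\text{"}H_1\text{" if } v \cdot (S_1 - S_2) > \frac{|S_1|^2 - |S_2|^2}{2} + N \ln \frac{P_2}{P_1}$$

where $v = S + n$ and $N \triangleq \overline{n^2(t)} = N_o B = kT_sB$ [$N_o/2$ (W Hz$^{-1}$) × 2B, double sideband].

$$P_{e|S_1} = p\left\{ v \cdot (S_1 - S_2) < \frac{|S_1|^2 - |S_2|^2}{2} + N \ln \frac{P_2}{P_1} \right\}$$

Substituting $v = S_1 + n$:

$$P_{e|S_1} = p\left\{ n \cdot (S_1 - S_2) < -\frac{|S_1 - S_2|^2}{2} + N \ln \frac{P_2}{P_1} \right\} = p\{y < -b\}$$

Here the left side $n \cdot (S_1 - S_2)$ is $y \cdot 2B$, where $y$ is a Gaussian random variable of zero mean (GRVZM), and the right side is $-b \cdot 2B$.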

Duality of Continuous and Sampled Signals

$$P_{e|S_1} = p\left\{ \underbrace{n \cdot (S_1 - S_2)}_{y\,\cdot\,2B\ \text{[GRVZM]}} < \underbrace{-\frac{|S_1 - S_2|^2}{2} + N \ln \frac{P_2}{P_1}}_{-b\,\cdot\,2B} \right\} = p\{y < -b\}$$

Conversion to continuous signals assuming Nyquist sampling is helpful here: $S_1(t)\ [0 < t < T] \leftrightarrow S_1$ (2BT samples, sampling theorem).

$$y \triangleq \int_0^T n(t)\,[S_1(t) - S_2(t)]\,dt$$

$$b \triangleq \frac{1}{2} \int_0^T [S_1(t) - S_2(t)]^2\,dt - \frac{N_o}{2} \ln(P_2/P_1)$$

[Figure: noise power spectral density $N_o/2$ (W Hz$^{-1}$) versus f, extending from −B to B.]

$$\sigma_y^2 \triangleq E[y^2] = E\left\{ \left[ \frac{1}{2B} \sum_{j=1}^{2BT} n_j (S_{1j} - S_{2j}) \right]^2 \right\}$$

Calculation of Pe, continued

Expanding the square in $\sigma_y^2 \triangleq E[y^2]$:

$$\sigma_y^2 = \left( \frac{1}{2B} \right)^2 E\left\{ \sum_{i=1}^{2BT} \sum_{j=1}^{2BT} n_i n_j (S_{1i} - S_{2i})(S_{1j} - S_{2j}) \right\}$$

where $E[n_i n_j] = N \delta_{ij}$. Therefore

$$\sigma_y^2 = \left( \frac{1}{2B} \right)^2 N\, |S_1 - S_2|^2 = \frac{N_o}{2} \int_0^T [S_1(t) - S_2(t)]^2\,dt$$

using $N = N_o B$ and $|S_1 - S_2|^2 = 2B \int_0^T [S_1(t) - S_2(t)]^2\,dt$.

Calculation of Pe, continued

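With $\sigma_y^2 = \left( \frac{1}{2B} \right)^2 N\, |S_1 - S_2|^2 = \frac{N_o}{2} \int_0^T [S_1(t) - S_2(t)]^2\,dt$ (where $N = N_o B$ and $|S_1 - S_2|^2 = 2B \int_0^T [S_1(t) - S_2(t)]^2\,dt$), the probability density of $y$ is

$$p(y) = \frac{1}{\sqrt{2\pi\sigma_y^2}}\, e^{-y^2/2\sigma_y^2} \quad \text{(GRVZM)}$$

Therefore:

$$P_{e|S_1} = \int_{-\infty}^{-b} \frac{1}{\sqrt{2\pi\sigma_y^2}}\, e^{-y^2/2\sigma_y^2}\,dy$$

[Figure: p(y) versus y, with −b and 0 marked on the y axis.]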

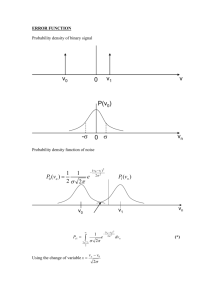

Definition of ERFC(A)

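"Error function":

$$\mathrm{ERF}(A) \triangleq \frac{1}{\sqrt{\pi}} \int_{-A}^{A} e^{-x^2}\,dx \qquad \left( \sigma = \frac{1}{\sqrt{2}} \right)$$

"Complementary error function":

$$\mathrm{ERFC}(A) \triangleq 1 - \mathrm{ERF}(A)$$

[Figure: the integrand versus x, with −A and A marked.]

Then $P_{e|S_1} = \frac{1}{2}\,\mathrm{ERFC}(A)$, where A must be found.

If we let $x^2 = y^2/2\sigma_y^2$, then

$$\mathrm{ERF}(A) = \frac{1}{\sqrt{\pi}} \int_{-A}^{A} e^{-x^2}\,dx = \frac{1}{\sqrt{2\pi\sigma_y^2}} \int_{-A\sigma_y\sqrt{2}}^{A\sigma_y\sqrt{2}} e^{-y^2/2\sigma_y^2}\,dy$$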

Definition of ERFC(A)

The new limits $\pm A\sigma_y\sqrt{2}$ and the factor $1/\sqrt{2\pi\sigma_y^2}$ in the y-integral form of ERF(A) arise as follows:

Since $x = y/\sigma_y\sqrt{2}$, the limit $x = A = y/\sigma_y\sqrt{2}$ becomes a limit where $y = A\sigma_y\sqrt{2}$.

Also, $dx = dy/\sigma_y\sqrt{2}$, so $1/\sqrt{\pi}$ becomes $1/\sqrt{2\pi\sigma_y^2}$.
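The change of variables can be sanity-checked numerically: the Gaussian tail $p\{y < -b\}$ should equal $\frac{1}{2}\mathrm{ERFC}(b/\sigma_y\sqrt{2})$. The sketch below compares Python's standard `math.erfc` (which matches the ERFC defined here) with a simple midpoint-rule integration. The function names, step count, and the values of b and σy are assumptions for illustration.

```python
import math

def tail_via_erfc(b, sigma_y):
    """p{y < -b} for zero-mean Gaussian y, written as 0.5 * ERFC(b / (sigma_y * sqrt(2)))."""
    return 0.5 * math.erfc(b / (sigma_y * math.sqrt(2.0)))

def tail_via_integration(b, sigma_y, steps=20000, span=12.0):
    """Midpoint-rule integral of the Gaussian density from -b - span*sigma_y up to -b."""
    lo = -b - span * sigma_y          # far enough out that the neglected tail is negligible
    h = (-b - lo) / steps
    total = 0.0
    for k in range(steps):
        y = lo + (k + 0.5) * h
        total += math.exp(-y * y / (2.0 * sigma_y ** 2))
    return total * h / math.sqrt(2.0 * math.pi * sigma_y ** 2)

b, sigma_y = 1.5, 0.7                 # assumed values
print(tail_via_erfc(b, sigma_y), tail_via_integration(b, sigma_y))
```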

Solution for Pe for Binary Signals

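$$P_{e|S_1} = \frac{1}{2}\,\mathrm{ERFC}(A) = \frac{1}{2}\,\mathrm{ERFC}\left( \frac{b}{\sigma_y\sqrt{2}} \right) \quad \text{and} \quad P_e = P_1 P_{e|S_1} + P_2 P_{e|S_2}$$

(where the limit $b = A\sigma_y\sqrt{2}$, so $A = b/\sigma_y\sqrt{2}$)

If $P_1 = P_2 = \frac{1}{2}$, and since $P_{e|S_1} = P_{e|S_2}$, then

$$P_e = \frac{1}{2}\,\mathrm{ERFC}\left( \frac{b}{\sigma_y\sqrt{2}} \right) = \frac{1}{2}\,\mathrm{ERFC}\left[ \frac{\frac{1}{2} \int_0^T [S_1(t) - S_2(t)]^2\,dt}{\sqrt{2\,(N_o/2) \int_0^T [S_1(t) - S_2(t)]^2\,dt}} \right]$$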

Solution for Pe for Binary Signals

$$P_e = \frac{1}{2}\,\mathrm{ERFC}\left[ \frac{\frac{1}{2} \int_0^T [S_1(t) - S_2(t)]^2\,dt}{\sqrt{2\,(N_o/2) \int_0^T [S_1(t) - S_2(t)]^2\,dt}} \right]$$

$$P_e = \frac{1}{2}\,\mathrm{ERFC}\left[ \frac{1}{2} \sqrt{\int_0^T [S_1(t) - S_2(t)]^2\,dt \Big/ N_o} \right]$$

If $\int_0^T S_1^2(t)\,dt + \int_0^T S_2^2(t)\,dt$ is fixed for $P_1 = P_2$, then to minimize $P_e$, let $S_2(t) = -S_1(t)$ (this maximizes $\int_0^T [S_1(t) - S_2(t)]^2\,dt$).
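To illustrate why $S_2(t) = -S_1(t)$ is the best choice for fixed total energy, the following sketch evaluates $P_e = \frac{1}{2}\mathrm{ERFC}\left[\frac{1}{2}\sqrt{\int_0^T [S_1 - S_2]^2 dt / N_o}\right]$ for sampled waveforms. The function name `pe_equal_priors`, the midpoint sampling, the carrier frequencies, and the value of No are all assumptions made for this example.

```python
import math

def pe_equal_priors(s1, s2, dt, No):
    """Pe = 0.5*erfc(0.5*sqrt(D/No)), with D approximating the integral of (s1 - s2)^2 dt."""
    D = sum((a - b) ** 2 for a, b in zip(s1, s2)) * dt
    return 0.5 * math.erfc(0.5 * math.sqrt(D / No))

T, n = 1.0, 1000
dt = T / n
t = [(k + 0.5) * dt for k in range(n)]                      # midpoint sample times
s1 = [math.cos(2.0 * math.pi * 5.0 * tk) for tk in t]       # energy 0.5 over [0, T]
antipodal = [-x for x in s1]                                # S2 = -S1, same energy
orthogonal = [math.cos(2.0 * math.pi * 6.0 * tk) for tk in t]  # same energy, orthogonal to s1

No = 0.1
pe_anti = pe_equal_priors(s1, antipodal, dt, No)
pe_orth = pe_equal_priors(s1, orthogonal, dt, No)
print(pe_anti, pe_orth)
```

Both signal pairs use the same total energy, but the antipodal pair doubles $\int_0^T [S_1 - S_2]^2 dt$ relative to the orthogonal pair and so gives the smaller error probability.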

Examples of Binary Communications Systems

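$$P_e = \frac{1}{2}\,\mathrm{ERFC}\left[ \frac{1}{2} \sqrt{\int_0^T [s_1(t) - s_2(t)]^2\,dt \Big/ N_o} \right]$$

Assume $P_1 = P_2 = \frac{1}{2}$ and define $\int_0^T s_1^2(t)\,dt \triangleq E$.

| Modulation type | $s_1(t)$ | $s_2(t)$ | $P_e$ |
|---|---|---|---|
| "OOK" (on-off keying) | $A \cos \omega_o t$ | $0$ | $\frac{1}{2}\mathrm{ERFC}\sqrt{E/4N_o} = \frac{1}{2}\mathrm{ERFC}\sqrt{E_{avg}/2N_o}$ |
| "FSK" (frequency-shift keying) | $A \cos \omega_1 t$ | $A \cos \omega_2 t$ | $\frac{1}{2}\mathrm{ERFC}\sqrt{E_{avg}/2N_o}$ |
| "BPSK" (binary phase-shift keying) | $A \cos \omega t$ | $-A \cos \omega t$ | $\frac{1}{2}\mathrm{ERFC}\sqrt{E_{avg}/N_o}$ |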
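The table entries are easy to evaluate numerically. The sketch below codes the three expressions as functions of $E_{avg}/N_o$ and prints them at a few dB values; the function names and the chosen dB points are assumptions, and `math.erfc` is used for ERFC.

```python
import math

def from_db(db):
    """Convert a ratio in dB to a linear ratio."""
    return 10.0 ** (db / 10.0)

def pe_ook(eavg_over_no):
    """Coherent OOK: 0.5 * ERFC(sqrt(Eavg / 2No))."""
    return 0.5 * math.erfc(math.sqrt(eavg_over_no / 2.0))

def pe_fsk(eavg_over_no):
    """Coherent FSK: 0.5 * ERFC(sqrt(Eavg / 2No))."""
    return 0.5 * math.erfc(math.sqrt(eavg_over_no / 2.0))

def pe_bpsk(eavg_over_no):
    """BPSK: 0.5 * ERFC(sqrt(Eavg / No))."""
    return 0.5 * math.erfc(math.sqrt(eavg_over_no))

for db in (4, 8, 12, 16):
    x = from_db(db)
    print(db, pe_ook(x), pe_fsk(x), pe_bpsk(x))
```

In terms of average energy, OOK and FSK coincide and BPSK reaches the same $P_e$ with half the $E_{avg}/N_o$, that is, a 3 dB advantage.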

Examples of Binary Communications Systems

Note: $P_e = f(E_{AVG}/N_o)$, where $E_{AVG}$ is in [J] and $N_o$ is in [W Hz$^{-1}$ = J].

Cost of communications ∝ cost of energy, Joules per bit (e.g. very low bit rates imply very low power transmitters, small antennas).

Probability of Baud Error

[Figure: Pe (logarithmic axis, from 1 down to 10⁻⁷) versus E/No (dB, from 0 to 20), with curves for OOK (coherent), FSK, BPSK, and non-coherent FSK; the curves are annotated with offsets of 3 dB and 6 dB.]

Non-coherent FSK: the carrier is unsynchronized, so both sine and cosine terms are admitted, increasing noise. Such "envelope detectors" have a different form of Pe(E/No).

Note how rapidly Pe declines for E/No ≳ 12 to 16 dB.