
5.73 Lecture #11


Eigenvalues, Eigenvectors, and Discrete Variable Representation (DVR)

You should have read CDTL, pages 94-144.

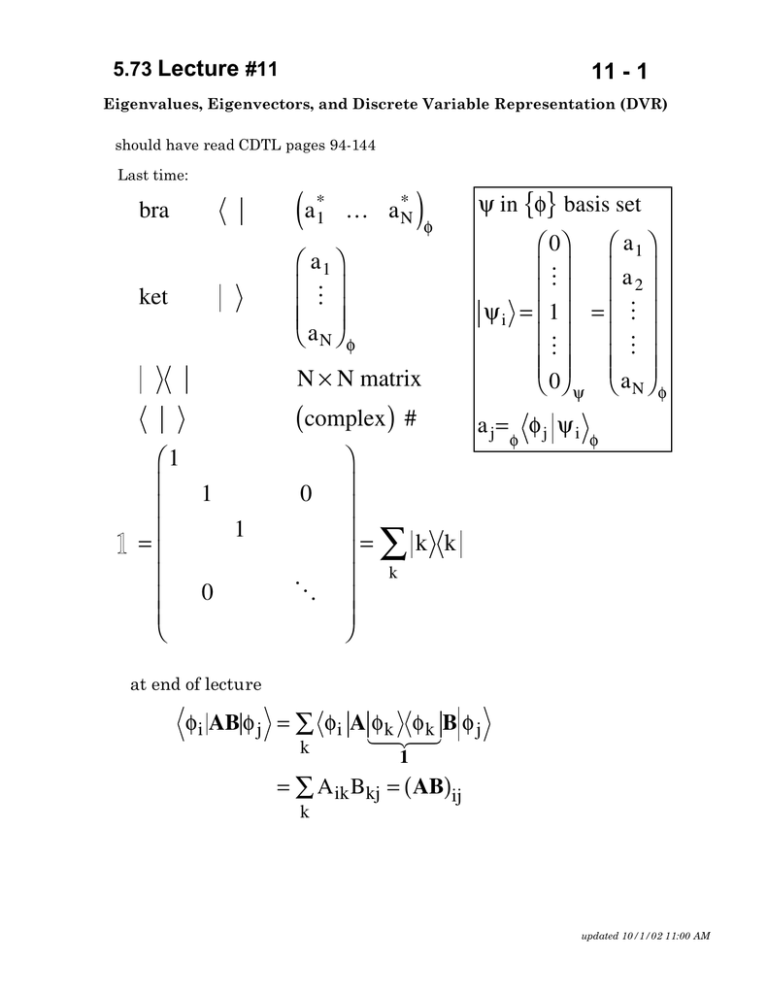

Last time:

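$|\psi\rangle$ in the $\{\phi\}$ basis set:

$$|\psi\rangle = \begin{pmatrix} a_1 \\ \vdots \\ a_N \end{pmatrix}_{\phi} \ \text{(ket)}, \qquad \langle\psi| = \begin{pmatrix} a_1^* & \cdots & a_N^* \end{pmatrix} \ \text{(bra)}, \qquad a_j = \langle\phi_j|\psi\rangle$$

$$|\phi_1\rangle = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}_{\phi}$$

An operator is an N × N matrix of (complex) numbers. The completeness relation is

$$\mathbb{1} = \sum_k |\phi_k\rangle\langle\phi_k|$$

At end of lecture (inserting $\mathbb{1}$ between A and B):

$$\langle\phi_i|\mathbf{AB}|\phi_j\rangle = \sum_k \langle\phi_i|\mathbf{A}|\phi_k\rangle\langle\phi_k|\mathbf{B}|\phi_j\rangle = \sum_k A_{ik}B_{kj} = (\mathbf{AB})_{ij}$$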
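The completeness relation and the matrix product rule above can be checked numerically. The following is a minimal sketch, assuming NumPy and randomly generated complex matrices; the names `A`, `B`, and `Q` are illustrative and do not come from the lecture.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 4

# Two arbitrary complex N x N matrices standing in for operators A and B.
A = rng.normal(size=(N, N)) + 1j * rng.normal(size=(N, N))
B = rng.normal(size=(N, N)) + 1j * rng.normal(size=(N, N))

# Explicit sum over the intermediate index k (the inserted completeness relation).
AB_sum = np.zeros((N, N), dtype=complex)
for i in range(N):
    for j in range(N):
        for k in range(N):
            AB_sum[i, j] += A[i, k] * B[k, j]

product_matches = np.allclose(AB_sum, A @ B)

# Completeness: for any orthonormal basis (columns of a unitary Q),
# the sum over k of |phi_k><phi_k| is the unit matrix.
Q, _ = np.linalg.qr(rng.normal(size=(N, N)) + 1j * rng.normal(size=(N, N)))
unit = sum(np.outer(Q[:, k], Q[:, k].conj()) for k in range(N))
completeness_holds = np.allclose(unit, np.eye(N))
```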

updated 10/1/02 11:00 AM


What is the connection between the Schrödinger and Heisenberg representations?

$$\psi_i(x) = \langle x|\psi_i\rangle$$

$$|x_0\rangle = \delta(x, x_0)$$

is the eigenfunction of $x$ with eigenvalue $x_0$.

Using this formulation for ψi(x), you can go freely (and rigorously) between the

Schrödinger and Heisenberg approaches.

$$\mathbb{1} = \sum_k |k\rangle\langle k| = \int |x\rangle\langle x|\,dx$$

Today:

eigenvalues of a matrix – what are they? how do we get them?

(secular equation). Why do we need them?

eigenvectors – how do we get them?

Arbitrary V(x) in Harmonic Oscillator Basis Set (DVR)


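The Schrödinger equation is an eigenvalue equation:

$$\hat{A}\psi = a\psi$$

In matrix language:

$$\mathbf{A}|\psi_i\rangle = a_i|\psi_i\rangle$$

$$\mathbf{A}^{\psi} = \begin{pmatrix} a_1 & & & 0 \\ & a_2 & & \\ & & \ddots & \\ 0 & & & a_N \end{pmatrix}_{\psi}, \qquad |\psi_1\rangle = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}_{\psi}$$

satisfies $\mathbf{A}|\psi_1\rangle = a_1|\psi_1\rangle$,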

but that is the eigen-basis representation – a special representation!

What about an arbitrary representation? Call it the φ representation.

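Eigenvalue equation, with $\mathbf{A}$ acting as a transformation on each $|\phi_i\rangle$:

$$\mathbf{A}\sum_{i=1}^{N} c_i|\phi_i\rangle = a\sum_{i=1}^{N} c_i|\phi_i\rangle$$

There are N unknown coefficients $\{c_i\}$, i = 1 to N. How do we determine $\{c_i\}$ and $a$? Derive the secular equation.

First, left multiply by $\langle\phi_j|$:

$$\sum_i A_{ji}c_i = a\sum_i c_i\delta_{ij}$$

$$0 = \sum_{i=1}^{N} c_i\left[A_{ji} - a\delta_{ij}\right] \qquad \text{(one equation, N unknowns)}$$

Next, left multiply the original equation by $\langle\phi_k|$:

$$0 = \sum_{i=1}^{N} c_i\left[A_{ki} - a\delta_{ik}\right] \qquad \text{(another equation)}$$

etc. for all $\langle\phi|$.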

N linear homogeneous equations in N unknowns – Condition that a nontrivial

(i.e. not all 0’s) solution exists is that determinant of coefficients = 0.
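The determinant condition can be tried out on a small matrix. This is a sketch assuming NumPy and an arbitrary 3 × 3 real symmetric matrix chosen only for illustration: `np.poly` returns the coefficients of the characteristic polynomial, whose roots are the values of $a$ for which the determinant of coefficients vanishes.

```python
import numpy as np

# Illustrative real symmetric matrix (not taken from the lecture).
A = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 4.0]])
N = A.shape[0]

# Coefficients of det(a*1 - A), which has the same roots as det(A - a*1) = 0.
secular_coeffs = np.poly(A)

# Roots of the N-th order secular equation.
roots = np.sort(np.roots(secular_coeffs).real)

# Eigenvalues from a standard Hermitian eigenvalue routine, for comparison.
eigs = np.linalg.eigvalsh(A)

# At each eigenvalue the determinant of (A - a*1) should vanish.
dets = np.array([np.linalg.det(A - a * np.eye(N)) for a in eigs])
```

Expanding the determinant by hand is practical only for very small N; for larger matrices a diagonalization routine is the usual choice.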


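$$0 = \begin{vmatrix} A_{11} - a & A_{12} & \cdots & A_{1N} \\ A_{21} & A_{22} - a & & \\ \vdots & & \ddots & \\ & & & A_{NN} - a \end{vmatrix}$$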

Nth order equation – as many as N different values of a satisfy this equation (if

fewer than N, some values of a are “degenerate”). Does everyone know how to

expand a determinant?

{ai} are the eigenvalues of A

(same as what we would have obtained by

solving differential operator eigenvalue

equation)

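If we know the eigenvalues, then we can find the N $\{|\psi_i\rangle\}$, where the eigenbasis is expanded in the (computationally convenient) $\phi$ basis,

$$|\psi_i\rangle = \sum_j c_j|\phi_j\rangle$$

such that

$$\langle\psi_i|\mathbf{A}^{\psi}|\psi_j\rangle = a_j\delta_{ij}$$

$$\mathbf{A}^{\psi} = \begin{pmatrix} a_1 & & 0 \\ & \ddots & \\ 0 & & a_N \end{pmatrix}, \qquad \mathbf{A}^{\psi}|\psi_1\rangle = a_1\begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}$$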

But we generally start with $\mathbf{A}^{\phi}$ in nondiagonal form. On the computer:

1. transform to diagonal form by $\mathbf{T}^{\dagger}\mathbf{A}^{\phi}\mathbf{T} = \mathbf{A}^{\psi}$

2. the diagonal elements are eigenvalues

3. the diagonalizing transformation is composed of eigenvectors, column by column of $\mathbf{T}^{\dagger}$.


Hermitian Matrices

A = A†

A†ij = A*ji

(can use this property to show that all expectation values of A are real)

These matrices can be “diagonalized” (i.e. the set of all eigenvalues can be

found) by a unitary transformation.

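$$\mathbf{T}^{-1} = \mathbf{T}^{\dagger} \qquad \text{(unitary matrix)}$$

Diagonalization ($\mathbf{A}^{\phi}$ is not diagonal, $\mathbf{A}^{\psi}$ is diagonal):

$$\mathbf{T}^{\dagger}\mathbf{A}^{\phi}\mathbf{T} = \begin{pmatrix} a_1 & & & 0 \\ & a_2 & & \\ & & \ddots & \\ 0 & & & a_N \end{pmatrix}_{\psi} \equiv \mathbf{A}^{\psi}$$

$$\underbrace{\mathbf{T}^{\dagger}\mathbf{A}^{\phi}\mathbf{T}}_{\text{diagonal } \mathbf{A}^{\psi}}\ \underbrace{\mathbf{T}^{\dagger}|\phi_j\rangle}_{\text{eigenvector expressed in } \phi \text{ basis set}}$$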


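$$\mathbf{T}^{\dagger}|\phi_i\rangle = \mathbf{T}^{\dagger}\begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}_{\phi} = \begin{pmatrix} T^{\dagger}_{1i} \\ T^{\dagger}_{2i} \\ \vdots \\ T^{\dagger}_{Ni} \end{pmatrix}_{\phi} = |\psi_i\rangle = \begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}_{\psi} \leftarrow i\text{-th position}$$

The eigenvector is the i-th column of $\mathbf{T}^{\dagger}$.

Suppose we apply $\mathbf{A}^{\psi}$ to $|\psi_i\rangle$:

$$\mathbf{A}^{\psi}|\psi_i\rangle = \mathbf{A}^{\psi}\begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}_{\psi} = \begin{pmatrix} 0 \\ \vdots \\ a_i \\ \vdots \\ 0 \end{pmatrix}_{\psi} = a_i\begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}_{\psi}$$

(the nonzero entry is in the i-th position).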

RECAPITULATE:

Start with arbitrary basis set φ

Construct Aφ : Not Diagonal, but basis set was computationally convenient.

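Find $\mathbf{T}$ (computer) that causes

$$\mathbf{T}^{\dagger}\mathbf{A}^{\phi}\mathbf{T} = \begin{pmatrix} a_1 & & 0 \\ & \ddots & \\ 0 & & a_N \end{pmatrix} = \mathbf{A}^{\psi}$$

Eigenstates (eigenkets) are columns of $\mathbf{T}^{\dagger}$ in φ basis set.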

Columns of T are the linear combination of eigenvectors that

correspond to each basis state. Useful for “bright state” calculations.
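The recapitulated procedure can be sketched with a library diagonalizer. This example assumes NumPy and a randomly generated Hermitian matrix; `A_phi` and the other names are illustrative. A caution about conventions: `numpy.linalg.eigh` returns a unitary matrix, called `T` here, for which `T† A T` is diagonal, and in that convention the eigenvectors expressed in the φ basis are the columns of the returned `T`. Whether that matrix corresponds to $\mathbf{T}$ or $\mathbf{T}^{\dagger}$ in the notes depends on how the transformation is defined, so it is worth checking `A @ v = a * v` directly, as done below.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 5

# Random Hermitian matrix standing in for A in a convenient (phi) basis.
M = rng.normal(size=(N, N)) + 1j * rng.normal(size=(N, N))
A_phi = (M + M.conj().T) / 2

# eigh returns real eigenvalues a and a unitary T with T^dagger A T diagonal.
a, T = np.linalg.eigh(A_phi)

is_unitary = np.allclose(T.conj().T @ T, np.eye(N))
A_psi = T.conj().T @ A_phi @ T
is_diagonal = np.allclose(A_psi, np.diag(a))

# Direct eigenvector check for the first column of the returned matrix.
residual = np.linalg.norm(A_phi @ T[:, 0] - a[0] * T[:, 0])

# "Bright state" weights: fractional character of basis state phi_0
# in each eigenstate, |<psi_i|phi_0>|^2. These sum to 1.
bright_weights = np.abs(T[0, :]) ** 2
```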


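We can now solve many difficult-appearing problems!

Start with a matrix representation of any operator that is expressible as a function of a matrix, e.g.

$$e^{-i\mathbf{H}(t - t_0)/\hbar} \ \text{(propagator)}, \qquad f(\mathbf{x}) \ \text{(potential curve)}$$

Prescription example:

$$f(\mathbf{x}) = \mathbf{T}\,f(\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T})\,\mathbf{T}^{\dagger}$$

Diagonalize $\mathbf{x}$, so that $f(\ )$ is applied to each diagonal element:

$$\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T} = \begin{pmatrix} x_1 & & & 0 \\ & x_2 & & \\ & & \ddots & \\ 0 & & & x_N \end{pmatrix}, \qquad f(\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T}) = \begin{pmatrix} f(x_1) & & & 0 \\ & f(x_2) & & \\ & & \ddots & \\ 0 & & & f(x_N) \end{pmatrix}$$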

Then perform inverse transformation T f(T† x T)T† – undiagonalizes matrix, to

give matrix representation of desired function of a matrix.

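Show that this actually is valid for a simple example, $f(x) = x^N$. Apply the prescription (the product contains N factors of $\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T}$, and $\mathbf{T}\mathbf{T}^{\dagger} = \mathbb{1}$ between adjacent factors):

$$f(\mathbf{x}) = \mathbf{T}\left[(\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T})(\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T})\cdots(\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T})\right]\mathbf{T}^{\dagger} = \mathbf{T}\left[\mathbf{T}^{\dagger}\mathbf{x}^{N}\mathbf{T}\right]\mathbf{T}^{\dagger} = \mathbf{x}^{N}$$

and get the expected result.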

general proof for arbitrary f(x) → expand in power series. Use previous result

for each integer power.
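The prescription for a function of a matrix can be written as a few lines of code. This is a minimal sketch assuming NumPy; `matrix_function` is a hypothetical helper name, and the test matrix is random. It checks the integer-power case against direct matrix multiplication and also builds a propagator-like matrix, taking ħ = 1 as an assumption.

```python
import numpy as np

def matrix_function(x, f):
    """Return f(x) for a Hermitian matrix x via diagonalization.

    x is diagonalized, f is applied to each eigenvalue, and the result
    is transformed back to the original basis.
    """
    xi, T = np.linalg.eigh(x)          # T^dagger x T = diag(xi)
    return T @ np.diag(f(xi)) @ T.conj().T

rng = np.random.default_rng(2)
M = rng.normal(size=(6, 6))
x = (M + M.T) / 2                      # real symmetric test matrix

# Integer power: the prescription must agree with repeated multiplication.
x_cubed = matrix_function(x, lambda v: v ** 3)
power_ok = np.allclose(x_cubed, x @ x @ x)

# Propagator-like function exp(-i H t) with hbar = 1 (assumed units).
t = 0.7
U = matrix_function(x, lambda E: np.exp(-1j * E * t))
propagator_unitary = np.allclose(U.conj().T @ U, np.eye(6))
```

The same helper applies unchanged to any function that can be evaluated on an array of eigenvalues.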


John Light: Discrete Variable Representation (DVR)

General V(x) evaluated in Harmonic Oscillator Basis Set.

we did not do H-O yet, but the general formula for all of the nonzero matrix

elements of x is:

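$$\langle n|\mathbf{x}|n+1\rangle = \left(\frac{\hbar}{2\omega\mu}\right)^{1/2}(n+1)^{1/2}, \qquad \omega = (k/\mu)^{1/2}$$

(infinite dimension matrix)

$$\mathbf{x} = \left(\frac{\hbar}{2\omega\mu}\right)^{1/2}\begin{pmatrix} 0 & \sqrt{1} & 0 & 0 & \cdots \\ \sqrt{1} & 0 & \sqrt{2} & 0 & \cdots \\ 0 & \sqrt{2} & 0 & \sqrt{3} & \\ 0 & 0 & \sqrt{3} & 0 & \ddots \\ \vdots & \vdots & & \ddots & \ddots \end{pmatrix}$$

$$\mathbf{x}^2 = \left(\frac{\hbar}{2\omega\mu}\right)\begin{pmatrix} 1 & 0 & \sqrt{2} & 0 & 0 & \cdots \\ 0 & 3 & 0 & \sqrt{6} & 0 & \cdots \\ \sqrt{2} & 0 & 5 & 0 & \sqrt{12} & \\ 0 & \sqrt{6} & 0 & 7 & 0 & \ddots \\ 0 & 0 & \sqrt{12} & 0 & 9 & \\ \vdots & \vdots & & \ddots & & \ddots \end{pmatrix}$$

[CARTOON]

etc., by matrix multiplication. The diagonal of $\mathbf{x}^2$ continues as 1, 3, 5, 7, 9, 11, 13, 15, ...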

to get matrix for f(x) diagonalize e.g., 1000 × 1000 (truncated) x matrix that was

expressed in harmonic oscillator basis set.
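Building the truncated $\mathbf{x}$ matrix is straightforward to program. The sketch below assumes NumPy and units with ħ = ω = μ = 1 by default; `x_matrix` is a hypothetical helper name. It also shows that squaring the truncated matrix reproduces the $\mathbf{x}^2$ pattern except in the last diagonal element, which is corrupted by truncation.

```python
import numpy as np

def x_matrix(N, hbar=1.0, omega=1.0, mu=1.0):
    """N x N truncation of x in the harmonic oscillator basis.

    Nonzero elements: <n|x|n+1> = <n+1|x|n> = sqrt(hbar/(2 omega mu)) * sqrt(n+1).
    """
    n = np.arange(N - 1)
    off = np.sqrt(hbar / (2 * omega * mu)) * np.sqrt(n + 1)
    return np.diag(off, 1) + np.diag(off, -1)

N = 6
x = x_matrix(N)
x2 = x @ x

# Diagonal of x^2 in units of hbar/(2 omega mu) = 1/2: expected 1, 3, 5, ...
diag_x2 = np.diag(x2) / 0.5
# First off-diagonal band two away from the diagonal: sqrt(2), sqrt(6), sqrt(12), ...
band_x2 = np.diag(x2, 2) / 0.5

# Eigenvalues {x_i} of the truncated x matrix (the DVR grid points).
xi = np.linalg.eigvalsh(x)
```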


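$$\mathbf{T}^{\dagger}\mathbf{x}\mathbf{T} = \begin{pmatrix} x_1 & 0 & 0 & 0 \\ 0 & x_2 & 0 & 0 \\ 0 & 0 & \ddots & 0 \\ 0 & 0 & 0 & x_{1000} \end{pmatrix}_{x} \qquad \text{(diagonalized-x basis)}$$

The $\{x_i\}$ are eigenvalues. They have no obvious physical significance.

$$\mathbf{V}(\mathbf{x})^{x} = \begin{pmatrix} V(x_1) & 0 & 0 & 0 \\ 0 & V(x_2) & 0 & 0 \\ 0 & 0 & \ddots & 0 \\ 0 & 0 & 0 & V(x_{1000}) \end{pmatrix}_{x}$$

Next transform back from the x-basis to the H-O basis set:

$$\mathbf{V}(\mathbf{x})^{\text{H-O}} = \mathbf{T}\,\mathbf{V}(\mathbf{x})^{x}\,\mathbf{T}^{\dagger} = \text{complicated full } 1000 \times 1000 \text{ matrix}$$

$\mathbf{T}$ was determined when $\mathbf{x}$ was diagonalized.

$$\mathbf{H} = \frac{\mathbf{p}^2}{2\mu} + \mathbf{V}(\mathbf{x})$$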

need matrix for p2, get it from p (the general formula for all non-zero

matrix elements of p)


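$$\langle n|\mathbf{p}|n+1\rangle = -i\left(\frac{\hbar\omega\mu}{2}\right)^{1/2}(n+1)^{1/2}$$

$$\mathbf{p} = -i\left(\frac{\hbar\omega\mu}{2}\right)^{1/2}\begin{pmatrix} 0 & \sqrt{1} & 0 & 0 \\ -\sqrt{1} & 0 & \sqrt{2} & 0 \\ 0 & -\sqrt{2} & 0 & \ddots \\ 0 & 0 & \ddots & \ddots \end{pmatrix}$$

$$\mathbf{p}^2 = -\left(\frac{\hbar\omega\mu}{2}\right)\begin{pmatrix} -1 & 0 & \sqrt{2} & 0 \\ 0 & -3 & 0 & \ddots \\ \sqrt{2} & 0 & -5 & 0 \\ 0 & \ddots & \ddots & \ddots \end{pmatrix} \qquad \text{(same structure as } \mathbf{x}^2\text{)}$$

If

$$\mathbf{H} = \frac{\mathbf{p}^2}{2\mu} + \frac{1}{2}k\mathbf{x}^2, \qquad \frac{1}{2}k = \frac{1}{2}\omega^2\mu$$

then the off-diagonal elements of $\mathbf{p}^2/2\mu$ and $\frac{1}{2}k\mathbf{x}^2$ cancel and

$$\mathbf{H} = \frac{\hbar\omega}{4}\begin{pmatrix} 2 & 0 & 0 & 0 & \\ 0 & 6 & 0 & 0 & \\ 0 & 0 & 10 & 0 & \\ 0 & 0 & 0 & 14 & \\ & & & & \ddots \end{pmatrix} = \hbar\omega\begin{pmatrix} 1/2 & 0 & 0 & \\ 0 & 3/2 & 0 & \\ 0 & 0 & 5/2 & \\ & & & \ddots \end{pmatrix}$$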
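The cancellation of off-diagonal elements in the harmonic case can be verified with truncated matrices. This sketch assumes NumPy and arbitrary illustrative parameter values; `x_matrix` and `p_matrix` are hypothetical helper names. Because the matrices are truncated, the last diagonal element of $\mathbf{H}$ is not reliable and is excluded from the comparison.

```python
import numpy as np

def x_matrix(N, hbar, omega, mu):
    """Truncated x in the HO basis: <n|x|n+1> = sqrt(hbar/(2 omega mu)) sqrt(n+1)."""
    n = np.arange(N - 1)
    off = np.sqrt(hbar / (2 * omega * mu)) * np.sqrt(n + 1)
    return np.diag(off, 1) + np.diag(off, -1)

def p_matrix(N, hbar, omega, mu):
    """Truncated p in the HO basis: <n|p|n+1> = -i sqrt(hbar omega mu / 2) sqrt(n+1)."""
    n = np.arange(N - 1)
    off = -1j * np.sqrt(hbar * omega * mu / 2) * np.sqrt(n + 1)
    # The lower band is the complex conjugate of the upper band, so p is Hermitian.
    return np.diag(off, 1) + np.diag(-off, -1)

# Illustrative parameter values (assumptions, not from the lecture).
N, hbar, omega, mu = 8, 1.0, 2.0, 0.5
k = omega ** 2 * mu

x = x_matrix(N, hbar, omega, mu)
p = p_matrix(N, hbar, omega, mu)
H = p @ p / (2 * mu) + 0.5 * k * (x @ x)

p_is_hermitian = np.allclose(p, p.conj().T)
off_diagonal_vanishes = np.allclose(H - np.diag(np.diag(H)), 0.0)
expected = hbar * omega * (np.arange(N - 1) + 0.5)
diagonal_ok = np.allclose(np.diag(H)[:-1], expected)
```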

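But for arbitrary V(x), express $\mathbf{H}$ in the HO basis set,

$$\mathbf{H}^{\text{HO}} = \frac{\mathbf{p}^2_{\text{HO}}}{2\mu} + \mathbf{V}(\mathbf{x})^{\text{HO}}, \qquad \mathbf{V}(\mathbf{x})^{\text{HO}} = \mathbf{T}\,\mathbf{V}(\mathbf{x})^{x}\,\mathbf{T}^{\dagger}$$

Eigenvalues are obtained by

$$\mathbf{S}^{\dagger}\mathbf{H}^{\text{HO}}\mathbf{S} = \begin{pmatrix} E_1 & 0 & 0 \\ 0 & \ddots & 0 \\ 0 & 0 & E_N \end{pmatrix}$$

Columns of $\mathbf{S}^{\dagger}$ are eigenvectors in HO basis set!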


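1. Express matrix of x in H-O basis (automatic; easy to program a computer to do this), get $\mathbf{x}^{\text{HO}}$.

2. Diagonalize $\mathbf{x}^{\text{HO}}$. Get $\mathbf{x}^{x}$ and $\mathbf{T}$.

3. Trivial to write $\mathbf{V}(\mathbf{x})^{x}$: in the x basis it is diagonal, with elements $V(x_i)$.

4. Transform $\mathbf{V}(\mathbf{x})^{x}$ back to $\mathbf{V}(\mathbf{x})^{\text{HO}}$.

5. Diagonalize $\mathbf{H}^{\text{HO}}$.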

Solve many V(x) problems in this basis set.

1000 × 1000 T matrix diagonalizes x ⇒ 1000 xi’s

Save the T and the {xi} for future use on all V(x) problems.

To verify convergence, repeat for a new x matrix of dimension 1100 × 1100. There

will be no resemblance between $\{x_i\}_{1000}$ and $\{x_i\}_{1100}$.

If the lowest eigenvalues of H (i.e. the ones you care about) do not change (by

measurement accuracy), converged!
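The five steps and the convergence check can be put together in a short program. This is a sketch under stated assumptions, not a definitive implementation: it uses NumPy, units with ħ = ω = μ = 1, a hypothetical function name `dvr_eigenvalues`, and a quartic anharmonic potential chosen only as an illustration. Basis sizes of 60 and 80 stand in for the 1000 and 1100 mentioned above to keep the example fast.

```python
import numpy as np

def dvr_eigenvalues(V, N, hbar=1.0, omega=1.0, mu=1.0):
    """Eigenvalues of H = p^2/(2 mu) + V(x) in an N-function HO basis via DVR."""
    n = np.arange(N - 1)

    # Step 1: x (and p) in the harmonic oscillator basis.
    x_off = np.sqrt(hbar / (2 * omega * mu)) * np.sqrt(n + 1)
    x_HO = np.diag(x_off, 1) + np.diag(x_off, -1)
    p_off = -1j * np.sqrt(hbar * omega * mu / 2) * np.sqrt(n + 1)
    p_HO = np.diag(p_off, 1) + np.diag(-p_off, -1)

    # Step 2: diagonalize x to get the {x_i} and the transformation T.
    xi, T = np.linalg.eigh(x_HO)

    # Steps 3 and 4: V is diagonal in the x basis; transform back to the HO basis.
    V_HO = T @ np.diag(V(xi)) @ T.conj().T

    # Step 5: build H in the HO basis and diagonalize.
    # p_HO @ p_HO has zero imaginary part, so only the real part is kept.
    H_HO = (p_HO @ p_HO).real / (2 * mu) + V_HO
    return np.linalg.eigvalsh(H_HO)

# Sanity check: a harmonic V(x) should give (n + 1/2) for the low levels.
harmonic_levels = dvr_eigenvalues(lambda x: 0.5 * x ** 2, 40)[:5]

# Illustrative anharmonic potential (an assumption, not from the lecture).
def quartic(x):
    return 0.5 * x ** 2 + 0.1 * x ** 4

# Convergence test: repeat with a larger basis and compare the lowest levels.
E_small = dvr_eigenvalues(quartic, 60)[:3]
E_large = dvr_eigenvalues(quartic, 80)[:3]
converged = np.allclose(E_small, E_large, atol=1e-6)
```

Since `T` and the `{x_i}` depend only on the basis size, they could be computed once and reused for every V(x), as suggested above; this sketch recomputes them on each call for simplicity.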

Next: Matrix solution of HO (no wave functions at all)

Start from Commutation Rule!

Then Perturbation Theory.
