10 - 1 Lecture

advertisement

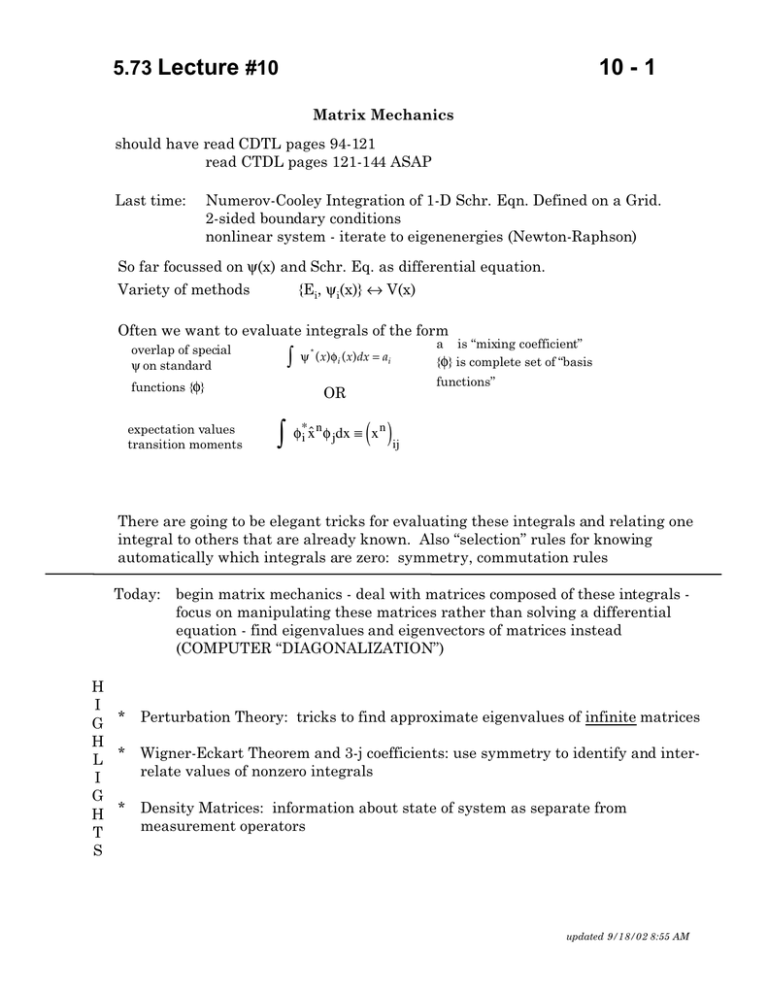

5.73 Lecture #10


Matrix Mechanics

You should already have read CTDL pages 94-121.

Read CTDL pages 121-144 ASAP.

Last time:

Numerov-Cooley Integration of 1-D Schr. Eqn. Defined on a Grid.

2-sided boundary conditions

nonlinear system - iterate to eigenenergies (Newton-Raphson)

So far focussed on ψ(x) and Schr. Eq. as differential equation.

{Ei, ψi(x)} ↔ V(x)

Variety of methods

Often we want to evaluate integrals of the form

$$\int \phi_i^*(x)\,\psi(x)\,dx = a_i$$

overlap of a special ψ with standard functions {φ}: a_i is the “mixing coefficient” and {φ} is a complete set of “basis functions”

OR

$$\int \phi_i^*\,\hat{x}^n\,\phi_j\,dx \equiv (x^n)_{ij}$$

expectation values, transition moments

There are going to be elegant tricks for evaluating these integrals and relating one

integral to others that are already known. Also “selection” rules for knowing

automatically which integrals are zero: symmetry, commutation rules

Today:

begin matrix mechanics - deal with matrices composed of these integrals; focus on manipulating these matrices rather than solving a differential equation - find eigenvalues and eigenvectors of matrices instead (COMPUTER “DIAGONALIZATION”)

HIGHLIGHTS

* Perturbation Theory: tricks to find approximate eigenvalues of infinite matrices

* Wigner-Eckart Theorem and 3-j coefficients: use symmetry to identify and interrelate values of nonzero integrals

* Density Matrices: information about state of system as separate from measurement operators

updated 9/18/02 8:55 AM


First Goal:


Dirac notation as convenient NOTATIONAL simplification

It is actually a new abstract picture

(vector spaces) — but we will stress the utility (ψ ↔ | ⟩ relationships)

rather than the philosophy!

Find equivalent matrix form of standard ψ(x) concepts and methods.

1. Orthonormality

$$\int \psi_i^*\,\psi_j\,dx = \delta_{ij}$$

2. Completeness

ψ(x) is an arbitrary function

A. (expand ψ) Always possible to expand ψ(x) uniquely in a COMPLETE BASIS SET {φ}

$$\psi(x) = \sum_i a_i\,\phi_i(x)$$

a_i is the mixing coefficient - how to get it? Left multiply by $\phi_j^*$ and integrate; orthonormality leaves only the i = j term:

$$\int \phi_j^*\,\psi\,dx = \sum_i a_i\,\delta_{ji} = a_j \qquad \text{i.e.} \qquad a_i = \int \phi_i^*\,\psi\,dx$$

B. (expand B̂ψ) Always possible to expand $\hat{B}\psi$ in {φ} since we can write ψ in terms of {φ}. So simplify the question we are asking to

$$\hat{B}\phi_i = \sum_j b_j\,\phi_j$$

What are the {b_j}? Multiply by $\int \phi_j^*$:

$$b_j = \int \phi_j^*\,\hat{B}\,\phi_i\,dx \equiv B_{ji}$$

$$\hat{B}\phi_i = \sum_j B_{ji}\,\phi_j$$

note counter-intuitive pattern of indices. We will return to this.

* The effect of any operator on ψ_i is to give a linear combination of ψ_j’s.
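
The index pattern in B̂φ_i = Σ_j B_ji φ_j can be checked numerically in a finite basis. The following is only a sketch: the 3 × 3 matrix `B` and the helpers `apply`, `unit_vector`, and `column` are illustrative assumptions, not part of the lecture. It applies B to the column vector that represents φ_i and confirms that the result is the i-th column of B, so the expansion coefficients are B_ji with j running down that column.

```python
# Illustrative 3x3 matrix of an operator in an orthonormal basis (assumed values).
B = [[1 + 0j, 2 - 1j, 0j],
     [2 + 1j, 3 + 0j, 1j],
     [0j, -1j, 5 + 0j]]

def apply(matrix, vector):
    """Matrix times column vector: (M v)_j = sum_i M[j][i] * v[i]."""
    return [sum(row[i] * vector[i] for i in range(len(vector))) for row in matrix]

def unit_vector(i, n):
    """Column vector representing basis function phi_i in its own basis."""
    return [1 + 0j if k == i else 0j for k in range(n)]

def column(matrix, i):
    """The i-th column of the matrix: entries matrix[j][i] for all j."""
    return [row[i] for row in matrix]

n = len(B)
images = [apply(B, unit_vector(i, n)) for i in range(n)]
# B acting on phi_i has coefficients B_ji, which is column i of B.
columns_match = all(images[i] == column(B, i) for i in range(n))
print(columns_match)
```

Reading the matrix by columns rather than rows is what makes the index order look counter-intuitive.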


3. Products of Operators

$$(\hat{A}\hat{B})\phi_i = \hat{A}(\hat{B}\phi_i) = \hat{A}\sum_j B_{ji}\,\phi_j$$

can move numbers (but not operators) around freely

$$= \sum_j B_{ji}\,\hat{A}\phi_j = \sum_j \sum_k B_{ji}\,A_{kj}\,\phi_k$$

note repeated index j

$$= \sum_{j,k} \left(A_{kj}B_{ji}\right)\phi_k = \sum_k (AB)_{ki}\,\phi_k$$

* Thus product of 2 operators follows the rules of matrix multiplication:

ÂB̂ acts like A B

Recall rules for matrix multiplication:

indices are A_{row,column}

must match # of columns on left to # of rows on right

order matters!

(N × N) ⊗ (N × N) → (N × N)   a matrix

(1 × N) “row vector” ⊗ (N × 1) “column vector” → (1 × 1)   a number

(N × 1) “column vector” ⊗ (1 × N) “row vector” → (N × N)   a matrix!

Need a notation that accomplishes all of this memorably and compactly.
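
The shape rules above can be tried out directly. This is a minimal sketch in plain Python; the helper names `matmul` and `shape` and the numbers in `row` and `col` are assumptions chosen for illustration. It shows that row × column gives a 1 × 1 result and column × row gives an N × N matrix.

```python
def matmul(left, right):
    """Multiply two matrices stored as lists of rows."""
    if len(left[0]) != len(right):
        raise ValueError("columns on the left must match rows on the right")
    return [[sum(left[r][k] * right[k][c] for k in range(len(right)))
             for c in range(len(right[0]))]
            for r in range(len(left))]

def shape(matrix):
    """(number of rows, number of columns)."""
    return (len(matrix), len(matrix[0]))

row = [[1, 2, 3]]          # 1 x N "row vector"
col = [[4], [5], [6]]      # N x 1 "column vector"

number = matmul(row, col)  # (1 x N)(N x 1) -> 1 x 1
square = matmul(col, row)  # (N x 1)(1 x N) -> N x N

print(shape(number), number)
print(shape(square), square)
```

Swapping the order of the same two factors changes the result from a number to a matrix, which is the sense in which order matters.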


Dirac’s bra and ket notation

Heisenberg’s matrix mechanics

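ket | ⟩ is a column matrix, i.e. a vector

$$\begin{pmatrix} a_1 \\ a_2 \\ \vdots \\ a_N \end{pmatrix}$$

contains all of the “mixing coefficients” for ψ expressed in some basis set.

[These are projections onto unit vectors in N-dimensional vector space.]

Must be clear what state is being expanded in what basis

$$\psi(x) = \sum_i \left[\int \phi_i^*\,\psi\,dx\right]\phi_i(x), \qquad a_i = \int \phi_i^*\,\psi\,dx$$

$$|\psi\rangle = \begin{pmatrix} \int \phi_1^*\,\psi\,dx \\ \int \phi_2^*\,\psi\,dx \\ \vdots \\ \int \phi_N^*\,\psi\,dx \end{pmatrix}_{\phi}$$

* ψ expressed in φ basis

* a column of complex #s

* nothing here is a function of x

The subscript φ is a bookkeeping device (RARE)

OR, a pure state in its own basis

$$|\phi_2\rangle = \begin{pmatrix} 0 \\ 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}_{\phi}$$

one 1, all others 0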

bra ⟨ | is a row matrix

$$(b_1,\ b_2,\ \ldots,\ b_N)$$

contains all mixing coefficients for ψ* in {φ*} basis set

$$\psi^*(x) = \sum_i \left[\int \phi_i\,\psi^*\,dx\right]\phi_i^*(x)$$

(This is * of ψ(x) above)

The * stuff is needed to make sure ⟨ψ|ψ⟩ = 1 even though ⟨φ_i|ψ⟩ is complex.


The symbol ⟨a|b⟩, a bra–ket, is defined in the sense of product of

(1 × N) ⊗ (N × 1) matrices → a 1 × 1 matrix: a number!

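Box Normalization in both ψ and ⟨ | ⟩ pictures

$$1 = \int \psi^*\,\psi\,dx$$

expand both in φ basis

$$\psi = \sum_i \left(\int \phi_i^*\,\psi\,dx\right)\phi_i \qquad \psi^* = \sum_j \left(\int \phi_j\,\psi^*\,dx\right)\phi_j^*$$

$$1 = \int \psi^*\,\psi\,dx = \sum_{i,j} \left(\int \phi_j\,\psi^*\,dx\right)\left(\int \phi_i^*\,\psi\,dx\right)\int \phi_j^*\,\phi_i\,dx$$

The last integral is δ_ij, which forces 2 sums to collapse into 1; the first factor is then the c.c. of the second.

$$1 = \sum_j \left|\int \phi_j^*\,\psi\,dx\right|^2$$

real, positive #’s

We have proved that sum of |mixing coefficients|² = 1. These are called “mixing fractions” or “fractional character”.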
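
A numerical check of this sum rule, as a sketch under stated assumptions: the basis is taken to be the particle-in-a-box functions √2 sin(nπx) on 0 ≤ x ≤ 1, and the trial state is a normalized x(1 − x) with an arbitrary phase factor. Neither choice comes from the lecture, and the names `phi`, `psi`, and `overlap` are hypothetical. The overlap integrals are done with a simple midpoint rule.

```python
import cmath
import math

def phi(n, x):
    """Assumed basis: particle-in-a-box functions on 0 <= x <= 1."""
    return math.sqrt(2.0) * math.sin(n * math.pi * x)

def psi(x):
    """Assumed trial state: normalized x(1 - x) times an arbitrary phase."""
    return cmath.exp(0.7j) * math.sqrt(30.0) * x * (1.0 - x)

def overlap(n, steps=2000):
    """a_n = integral of phi_n^* psi dx by the midpoint rule (phi_n is real)."""
    h = 1.0 / steps
    return h * sum(phi(n, (k + 0.5) * h) * psi((k + 0.5) * h) for k in range(steps))

coefficients = [overlap(n) for n in range(1, 10)]
fractions = [abs(a) ** 2 for a in coefficients]   # "mixing fractions"
total = sum(fractions)

print([round(f, 6) for f in fractions])
print(round(total, 6))
```

The individual coefficients are complex because of the phase factor, but each mixing fraction is real and non-negative, and the truncated sum over nine basis functions is already very close to 1 for this smooth trial state.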

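now in ⟨ | ⟩ picture

$$\langle\psi|\psi\rangle = \underbrace{\left(\int \phi_1\,\psi^*\,dx \quad \int \phi_2\,\psi^*\,dx \quad \cdots\right)}_{\text{row vector: “bra”}}\ \underbrace{\begin{pmatrix} \int \phi_1^*\,\psi\,dx \\ \int \phi_2^*\,\psi\,dx \\ \vdots \end{pmatrix}}_{\text{column vector: “ket”}} = \sum_j \left|\int \phi_j^*\,\psi\,dx\right|^2$$

same result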

[CTDL talks about dual vector spaces — best to walk before you run. Always

translate ⟨ ⟩ into ψ picture to be sure you understand the notation.]


Any symbol ⟨ ⟩ is a complex number.

Any symbol | ⟩ ⟨ | is a square matrix.

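again

$$\langle\psi|\psi\rangle = \left(\langle\psi|\phi_1\rangle \quad \langle\psi|\phi_2\rangle \quad \cdots\right)\begin{pmatrix} \langle\phi_1|\psi\rangle \\ \langle\phi_2|\psi\rangle \\ \vdots \end{pmatrix} = \sum_i \langle\psi|\phi_i\rangle\langle\phi_i|\psi\rangle = \langle\psi|\psi\rangle = 1$$

where $\sum_i |\phi_i\rangle\langle\phi_i| = \mathbf{1}$, the unit matrix

what is |φ_1⟩⟨φ_1|?

$$|\phi_1\rangle\langle\phi_1| = \begin{pmatrix} 1 \\ 0 \\ \vdots \end{pmatrix}\begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots \\ 0 & 0 & \\ \vdots & & \ddots \end{pmatrix}$$

what is Σ_i |φ_i⟩⟨φ_i|?

$$\sum_i |\phi_i\rangle\langle\phi_i| = \begin{pmatrix} 1 & 0 & \\ 0 & 1 & \\ & & \ddots \end{pmatrix} = \mathbf{1}$$

unit or identity matrix = 1

(these displays are a shorthand for specifying only the important part of an infinite matrix)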

“completeness” or “closure” involves insertion of 1 between any two symbols.
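
Closure holds for any orthonormal basis, not only the one in which the kets are unit columns. A small sketch, assuming a hypothetical 2-dimensional basis with complex components (the vectors in `basis` and the helpers `outer` and `add` are illustrative, not from the lecture): each |u⟩⟨u| is a square matrix, and the projectors add up to the unit matrix.

```python
import math

def outer(ket):
    """|u><u| : column vector times its conjugated row, an N x N matrix."""
    return [[a * b.conjugate() for b in ket] for a in ket]

def add(m1, m2):
    """Element-by-element sum of two matrices."""
    return [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(m1, m2)]

s = 1.0 / math.sqrt(2.0)
# Assumed orthonormal basis of a 2-dimensional space with complex components.
basis = [[s + 0j, s * 1j], [s + 0j, -s * 1j]]

projectors = [outer(u) for u in basis]
closure = add(projectors[0], projectors[1])

for row in closure:
    print([complex(round(z.real, 12), round(z.imag, 12)) for z in row])
```

Neither projector is diagonal on its own here; only their sum is the identity, which is why the sum can be inserted between any two symbols.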


Use 1 to evaluate matrix elements of product of 2 operators, AB (we know how

to do this in ψ picture).

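$$\langle\phi_i|A|\phi_j\rangle = \underbrace{(0 \ \cdots \ 1 \ \cdots \ 0)}_{\text{1 in } i\text{-th position}}\ \underbrace{(\mathbf{A})}_{\text{square matrix}}\ \underbrace{\begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}}_{\text{1 in } j\text{-th position}}$$

the column vector picks out j-th column of A

$$= (0 \ \cdots \ 1 \ \cdots \ 0)\begin{pmatrix} A_{1j} \\ A_{2j} \\ \vdots \end{pmatrix} = A_{ij}$$

$$\langle\phi_i|AB|\phi_j\rangle = \sum_k \langle\phi_i|A|\phi_k\rangle\langle\phi_k|B|\phi_j\rangle \qquad \left(\text{inserted } \mathbf{1} = \sum_k |\phi_k\rangle\langle\phi_k|\right)$$

$$= \sum_k A_{ik}B_{kj} = (AB)_{ij} \qquad \text{a number}$$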
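
The insertion of 1 between A and B can be verified element by element. In this sketch the 3 × 3 matrices `A` and `B` and the helper `element` are assumptions made for illustration. `element` evaluates ⟨φ_i| … |φ_j⟩ by acting on a unit column and projecting on a unit row, and the result for the product is compared with the sum over the intermediate index k.

```python
def matvec(matrix, vector):
    """Matrix times column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def unit(i, n):
    """Unit vector with a 1 in position i (used as both bra and ket)."""
    return [1 if k == i else 0 for k in range(n)]

def element(i, operators, j, n):
    """<phi_i| O1 O2 ... |phi_j>: apply operators right to left, then project on phi_i."""
    ket = unit(j, n)
    for matrix in reversed(operators):
        ket = matvec(matrix, ket)
    return sum(b * k for b, k in zip(unit(i, n), ket))

# Assumed 3 x 3 example matrices.
A = [[1, 2, 0], [2, 0, 1j], [0, -1j, 3]]
B = [[0, 1, 1], [1, 2, 0], [1, 0, -1]]
n = 3

i, j = 0, 2
direct = element(i, [A, B], j, n)
via_closure = sum(element(i, [A], k, n) * element(k, [B], j, n) for k in range(n))
print(direct, via_closure)
```

The two numbers agree for every choice of i and j, which is the statement (AB)_ij = Σ_k A_ik B_kj.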

In Heisenberg picture, how do we get exact equivalent of ψ(x)?

basis set δ(x,x0) for all x0 – this is a complete basis (eigenbasis for x̂, eigenvalue x0) - perfect localization at any x0

⟨x|ψ⟩ is the same thing as ψ(x)

i.e.,

$$\int \delta(x,x')^*\,\psi(x')\,dx' = \psi(x)$$

x is continuously variable ↔ δ(x)

overlap of state vector ψ with δ(x) – a complex number. ψ(x) is a complex

function of a real variable.


other ψ ↔ ⟨ ⟩ relationships

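1. All observable quantities are represented by a Hermitian operator (Why? Because expectation values are always real). Definition of Hermitian operator:

$$A_{ij} = A_{ji}^* \qquad \text{or} \qquad \mathbf{A} = \mathbf{A}^\dagger$$

Easy to prove that if all expectation values of A are real, then A = A† and vice-versa.

2. Change of basis set, {φ} to {u}

$$\mathbf{A}^\phi \leftrightarrow \mathbf{A}^u$$

$$A^\phi_{ij} \equiv \langle\phi_i|A|\phi_j\rangle = \langle\phi_i|\mathbf{1}A\mathbf{1}|\phi_j\rangle = \sum_{k,l} \langle\phi_i|u_k\rangle\langle u_k|A|u_l\rangle\langle u_l|\phi_j\rangle$$

with

$$\langle\phi_i|u_k\rangle = S_{ki}^* \equiv S^\dagger_{ik}, \qquad \langle u_k|A|u_l\rangle = A^u_{kl}, \qquad \langle u_l|\phi_j\rangle = S_{lj}$$

The 1st index of S labels u and the 2nd labels φ, so S_lj runs down the j-th column of S. S is frequently used to denote “overlap” integral.

$$A^\phi_{ij} = \sum_{k,l} S^\dagger_{ik}\,A^u_{kl}\,S_{lj} = \left(\mathbf{S}^\dagger \mathbf{A}^u \mathbf{S}\right)_{ij}$$

$$\mathbf{A}^\phi = \mathbf{S}^\dagger \mathbf{A}^u \mathbf{S}$$

a special kind of transformation (unitary) (different from usual T⁻¹AT “similarity” transformation)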


What kind of matrix is S?

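$$S_{lj} = \langle u_l|\phi_j\rangle$$

$$S_{lj}^* = \left[\langle u_l|\phi_j\rangle\right]^* = \langle\phi_j|u_l\rangle \equiv S^\dagger_{jl}$$

† means take complex conjugate and interchange indices.

Using the definitions of S and S†:

$$\sum_j S_{lj}\,S^\dagger_{jk} = \sum_j \langle u_l|\phi_j\rangle\langle\phi_j|u_k\rangle = \langle u_l|\mathbf{1}|u_k\rangle = \langle u_l|u_k\rangle = \delta_{lk} = \mathbf{1}_{lk}$$

$$\therefore\ \mathbf{S}\mathbf{S}^\dagger = \mathbf{1} \qquad \text{OR} \qquad \mathbf{S}^\dagger = \mathbf{S}^{-1} \quad \text{“Unitary”}$$

a very special and convenient property.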
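
The construction of S and the change-of-basis formula can be tested on a small case. This is a sketch only: the two bases `phi` and `u`, the Hermitian matrix `A_phi`, and all helper names are assumptions for illustration. S is built from its definition S_lj = ⟨u_l|φ_j⟩, the matrix of A in the u basis is computed directly from ⟨u_k|A|u_l⟩, and then SS† = 1 and A^φ = S†A^uS are checked.

```python
import math

def dagger(m):
    """Complex conjugate and interchange indices."""
    return [[m[r][c].conjugate() for r in range(len(m))] for c in range(len(m[0]))]

def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(len(b))) for c in range(len(b[0]))]
            for r in range(len(a))]

def braket(bra, ket):
    """<bra|ket> = sum_i bra_i^* ket_i for component lists in a common basis."""
    return sum(x.conjugate() * y for x, y in zip(bra, ket))

def close(m1, m2, tol=1e-12):
    return all(abs(x - y) < tol for r1, r2 in zip(m1, m2) for x, y in zip(r1, r2))

s = 1.0 / math.sqrt(2.0)
phi = [[1 + 0j, 0j], [0j, 1 + 0j]]            # assumed basis {phi}
u = [[s + 0j, s * 1j], [s + 0j, -s * 1j]]     # assumed basis {u}, written in {phi}

A_phi = [[1 + 0j, 2 - 1j], [2 + 1j, 3 + 0j]]  # assumed Hermitian matrix in {phi}

S = [[braket(u_l, phi_j) for phi_j in phi] for u_l in u]   # S_lj = <u_l|phi_j>
S_dag = dagger(S)

# A^u_kl = <u_k|A|u_l>, evaluated directly from components in {phi}
A_u = [[braket(u_k, [sum(A_phi[r][c] * u_l[c] for c in range(2)) for r in range(2)])
        for u_l in u] for u_k in u]

identity = [[1 + 0j, 0j], [0j, 1 + 0j]]
print(close(matmul(S, S_dag), identity))
print(close(matmul(S_dag, matmul(A_u, S)), A_phi))
```

Because S† is the inverse of S, undoing the transformation needs no matrix inversion, only a conjugate transpose.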


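Unitary transformations preserve both normalization and orthogonality.

$$\mathbf{A}^\phi = \mathbf{S}^\dagger \mathbf{A}^u \mathbf{S}$$

$$\mathbf{S}\mathbf{A}^\phi\mathbf{S}^\dagger = \mathbf{S}\mathbf{S}^\dagger \mathbf{A}^u \mathbf{S}\mathbf{S}^\dagger = \mathbf{A}^u$$

$$\mathbf{A}^u = \mathbf{S}\mathbf{A}^\phi\mathbf{S}^\dagger$$

Take matrix element of both sides of equation:

$$A^u_{ij} = \langle u_i|A|u_j\rangle = \left(\mathbf{S}\mathbf{A}^\phi\mathbf{S}^\dagger\right)_{ij} = \sum_{k,l} S_{ik}\,\langle\phi_k|A|\phi_l\rangle\,S^\dagger_{lj}$$

$$\therefore\ |u_j\rangle = \sum_l |\phi_l\rangle\,S^\dagger_{lj} \qquad j\text{-th column of } \mathbf{S}^\dagger$$

φ → u via S†, S: u_j is j-th column of S†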


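Thus,

$$|u_j\rangle = \begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}_{u} = \begin{pmatrix} S^\dagger_{1j} \\ S^\dagger_{2j} \\ \vdots \\ S^\dagger_{nj} \end{pmatrix}_{\phi} \qquad (\text{the 1 is in the } j\text{-th position})$$

Alternatively,

$$A^\phi_{pq} = \langle\phi_p|A|\phi_q\rangle = \left(\mathbf{S}^\dagger \mathbf{A}^u \mathbf{S}\right)_{pq} = \sum_{m,n} S^\dagger_{pm}\,\langle u_m|A|u_n\rangle\,S_{nq}$$

$$\therefore\ |\phi_q\rangle = \sum_n |u_n\rangle\,S_{nq} \qquad q\text{-th column of } \mathbf{S}$$

$$|\phi_q\rangle = \begin{pmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{pmatrix}_{\phi} = \begin{pmatrix} S_{1q} \\ S_{2q} \\ \vdots \\ S_{nq} \end{pmatrix}_{u} \qquad (\text{the 1 is in the } q\text{-th position})$$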


Commutation Rules

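*

$$[\hat{A},\hat{B}] = \hat{A}\hat{B} - \hat{B}\hat{A}$$

e.g. $[\hat{x},\hat{p}] = i\hbar$ means

$$(\hat{x}\hat{p} - \hat{p}\hat{x})\phi = x\,\frac{\hbar}{i}\frac{d\phi}{dx} - \frac{\hbar}{i}\left[\phi + x\frac{d\phi}{dx}\right] = i\hbar\,\phi$$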

* If Â and B̂ are Hermitian, is ÂB̂ Hermitian?

$$(AB)_{ij} = \sum_k A_{ik}B_{kj} = \sum_k A_{ki}^*\,B_{jk}^* = \sum_k B_{jk}^*\,A_{ki}^* = (BA)_{ji}^*$$

(the second equality uses Hermitian A and B)

but this is not what we need to say AB is Hermitian. That would be:

$$(AB)_{ij} = (AB)_{ji}^*$$

AB is Hermitian only if [A,B] = 0

However, ½[AB + BA] is Hermitian if A and B are Hermitian.

This is the foolproof way to construct a new Hermitian operator out of

simpler Hermitian operators.

Standard prescription for the Correspondence Principle.
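
The symmetrization rule is easy to confirm on an example. In this sketch the 2 × 2 Hermitian matrices `A` and `B` are assumed values chosen so that they do not commute, and the helper names are hypothetical. The plain product AB fails the Hermiticity test, while ½(AB + BA) passes it.

```python
def matmul(a, b):
    n = len(a)
    return [[sum(a[r][k] * b[k][c] for k in range(n)) for c in range(n)] for r in range(n)]

def dagger(m):
    """Complex conjugate and interchange indices."""
    n = len(m)
    return [[complex(m[r][c]).conjugate() for r in range(n)] for c in range(n)]

def is_hermitian(m):
    return m == dagger(m)

# Assumed 2 x 2 Hermitian matrices with small integer parts, so comparisons are exact.
A = [[1, 2 - 1j], [2 + 1j, 3]]
B = [[0, 1j], [-1j, 2]]

AB = matmul(A, B)
BA = matmul(B, A)
commutator = [[x - y for x, y in zip(r1, r2)] for r1, r2 in zip(AB, BA)]
symmetrized = [[(x + y) / 2 for x, y in zip(r1, r2)] for r1, r2 in zip(AB, BA)]

print(is_hermitian(A), is_hermitian(B))
print(is_hermitian(AB), commutator)
print(is_hermitian(symmetrized), symmetrized)
```

Here BA is the conjugate transpose of AB, in line with (AB)_ij = (BA)*_ji, so adding the two necessarily gives a Hermitian matrix.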
