Building an Automatic Speech Annotation System

advertisement

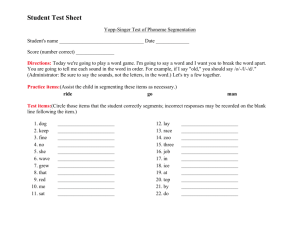

1

WICT PROCEEDINGS, DECEMBER 2008


Anthony Psaila, Department of Communications and Computer Engineering, University of Malta. Email: antantyy@yahoo.co.uk

Prof. Paul Micallef, Department of Communications and Computer Engineering, University of Malta. Email: pjmica@eng.um.edu.mt

Abstract

The availability of phonetically annotated and labelled speech corpora is highly desirable for speech research and speech technology development. Building such corpora through manual phoneme annotation is a laborious and time-consuming task, normally carried out by expert phoneticians. Automatic phoneme annotation for the Maltese language is therefore highly desirable for building a Maltese Language Central Corpus database.

For this purpose, two automated methods are proposed. The first performs phoneme annotation using the American English TIMIT database, while the second uses a set of manually-segmented Maltese utterances tailor-made for this project. Both methods require a previously-annotated set of utterances, labelled down to phoneme level, from which a set of models, one for each phoneme, is trained.

Several algorithms may be used to build phoneme models, including Neural Networks, Hidden Markov Models (HMMs), Wavelets and Dynamic Time Warping. The HMM approach was preferred because it is the most widely used and because the HTK software suite is readily available.

Initially, the system will automatically annotate simple Maltese words, but it will eventually be expanded to perform generic Maltese speech annotation. This paper presents the methods applied in more detail, together with the results obtained.

1 Introduction

Speech annotation is the task of partitioning a speech signal into the basic speech sounds of a language, known as phonemes. It is the process of locating the phoneme boundaries as precisely as possible, given an a priori sequence of phonemes. Several algorithms have been developed in recent years, but the most successful have been based on Hidden Markov Models (HMMs). These are probabilistic models in which the observations are a probabilistic function of an underlying state sequence that cannot be observed directly, i.e. the hidden states. An HMM is defined in terms of its set of state transition probabilities $\{a_{ij}\}$ and its state output probability distributions $\{b_j(o_t)\}$ [1].
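To make the notation concrete, the sketch below evaluates $P(O \mid \lambda)$ with the forward algorithm described in Rabiner's tutorial [1]. For simplicity it assumes a tiny HMM with discrete output symbols rather than the continuous Gaussian outputs used in this work; the model values are hypothetical, and a brute-force sum over all state paths is included only as a cross-check.

```python
from itertools import product


def forward_likelihood(pi, a, b, obs):
    """Return P(obs | model) using the forward algorithm.

    pi[i]   : initial probability of state i
    a[i][j] : transition probability a_ij from state i to state j
    b[j][k] : output probability b_j(k) of symbol k in state j
    obs     : list of observed symbol indices o_1..o_T
    """
    n = len(pi)
    # alpha_1(i) = pi_i * b_i(o_1)
    alpha = [pi[i] * b[i][obs[0]] for i in range(n)]
    for o_t in obs[1:]:
        # alpha_t(j) = [sum_i alpha_{t-1}(i) * a_ij] * b_j(o_t)
        alpha = [sum(alpha[i] * a[i][j] for i in range(n)) * b[j][o_t]
                 for j in range(n)]
    return sum(alpha)


def brute_force_likelihood(pi, a, b, obs):
    """Sum P(obs, Q | model) over every state sequence Q (exponential cost)."""
    n = len(pi)
    total = 0.0
    for path in product(range(n), repeat=len(obs)):
        p = pi[path[0]] * b[path[0]][obs[0]]
        for t in range(1, len(obs)):
            p *= a[path[t - 1]][path[t]] * b[path[t]][obs[t]]
        total += p
    return total


# Hypothetical 2-state, 2-symbol model.
pi = [0.6, 0.4]
a = [[0.7, 0.3],
     [0.4, 0.6]]
b = [[0.9, 0.1],
     [0.2, 0.8]]
obs = [0, 1, 0]
print(forward_likelihood(pi, a, b, obs))
```

The forward recursion costs on the order of $N^2 T$ operations, whereas the brute-force sum grows as $N^T$, which is why the recursion is used in practice.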

2 The Power of HMMs

For an effective annotation system, the set of HMMs must be optimized against the available training data. For this purpose, a re-estimation procedure adjusts the model parameters so that they better describe a given observation sequence; the observation sequences used to adjust the models are referred to as training data. Training is the most difficult and most critical step, since there is no known analytical method for finding the parameters that globally maximize the likelihood of the observations. A well-known solution is an iterative procedure such as the Baum-Welch algorithm, which locally maximizes this likelihood. In the proposed examples, the models were re-estimated several times during the training phase to obtain an optimized model. To uncover the hidden part, namely the start and end times of each phoneme, the Viterbi algorithm is usually applied to a known sequence of phonemes. This optimization procedure selects the best phoneme start and end times for a given observation sequence, a process known as decoding or maximum-likelihood sequence estimation [2].
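The following is a minimal sketch of Viterbi forced alignment. It assumes that each phoneme of the known sequence is reduced to a single state and that the per-frame log-likelihoods $\log b_j(o_t)$ have already been computed; HTK's phoneme models have several emitting states and also use transition probabilities, both omitted here for brevity. Because the phoneme order is fixed, the only decision at each frame is whether to stay in the current phoneme or advance to the next one, and the function returns the start frame of each phoneme. The example log-likelihood matrix is hypothetical.

```python
import math


def forced_align(loglik):
    """Viterbi alignment of a fixed phoneme sequence to a sequence of frames.

    loglik[t][k] is the log-likelihood of frame t under the k-th phoneme of
    the known transcription. Each phoneme must occupy at least one frame.
    Returns the start frame index of every phoneme.
    """
    T, K = len(loglik), len(loglik[0])
    if T < K:
        raise ValueError("need at least one frame per phoneme")
    neg_inf = -math.inf
    # delta[t][k]: best log score of frames 0..t ending in phoneme k
    delta = [[neg_inf] * K for _ in range(T)]
    # back[t][k]: True if phoneme k started at frame t (advanced from k-1)
    back = [[False] * K for _ in range(T)]
    delta[0][0] = loglik[0][0]
    for t in range(1, T):
        for k in range(K):
            stay = delta[t - 1][k]
            advance = delta[t - 1][k - 1] if k > 0 else neg_inf
            if advance > stay:
                delta[t][k], back[t][k] = advance + loglik[t][k], True
            else:
                delta[t][k] = stay + loglik[t][k]
    # Trace back from the last frame, which must belong to the last phoneme.
    starts = [0] * K
    k = K - 1
    for t in range(T - 1, 0, -1):
        if back[t][k]:
            starts[k] = t
            k -= 1
    return starts


# Hypothetical scores: frames 0-1 favour phoneme 0, 2-3 phoneme 1, 4-5 phoneme 2.
loglik = [[0, -5, -5], [0, -5, -5], [-5, 0, -5],
          [-5, 0, -5], [-5, -5, 0], [-5, -5, 0]]
starts = forced_align(loglik)
print([10 * s for s in starts])  # start times in ms for a 10 ms frame period: [0, 20, 40]
```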

In this work, speech annotation was performed using HTK, a toolkit for building HMMs [3]. HTK is designed for building HMM-based speech processing tools such as speech recognisers, and it can also be used to perform speech annotation. Two complementary stages are usually performed. First, the HTK training tools are used to estimate the HMM parameters from a set of training utterances. Second, unknown utterances are transcribed with the HTK recognition tools, using the models estimated in the first stage. The HTK toolkit was therefore used to implement Maltese speech annotation.

3 Using the DARPA TIMIT Database

Setting up a Maltese annotation system requires a considerable amount of previously-segmented data, which was not available for the purposes of this project. As an alternative, testing commenced using DARPA TIMIT [4], a well-known multi-speaker, annotated American English database. The aim of this work was to annotate Maltese speech automatically by making use of existing material from other languages. Initial experiments were therefore devoted to building phoneme HMMs from an existing annotated database, namely DARPA TIMIT. The DARPA TIMIT database has already been used to bootstrap recognition from another language to Maltese [5]; in this case, it was used for speech annotation.

For this purpose, the HTK toolkit and 23 utterances from TIMIT were used to train the phoneme models. The topology used was a 5-state, continuous-density Gaussian HMM. A sequence of parameterized feature vectors was extracted from the utterances: the system used MFCCs with energy, delta and acceleration coefficients, together with cepstral mean normalization. Each analysis frame used a 25 ms Hamming window with a 10 ms frame period. All the phoneme models were trained with the HTK toolkit.

For the purpose of system evaluation, the set of TIMIT phonemes had to be mapped to the equivalent Maltese phonemes. In practice, some Maltese phonemes used the same TIMIT phonemes, while the rest were mapped to the nearest equivalent. The system was evaluated on a set of Maltese utterances. A phoneme boundary was marked as correct if it fell within the tolerance, which for this experiment was 17.2 ms (see Section 5).

System performance was not satisfactory, since only 73% of the boundaries fell within the tolerance range. However, these were only preliminary results, on which further research was built.

This relatively unsatisfactory performance was due to one or more of the following factors:

1) the HMMs were trained on a small number of utterances,
2) the training utterances were recorded by various speakers,
3) not all the Maltese phonemes had an exact mapping to TIMIT phonemes,
4) the results obtained were limited to a mapped set of Maltese phonemes.

To resolve some of these issues and improve system performance, it was decided to provide freshly-recorded, single-speaker Maltese speech data for training the HMMs.

4 Using a Tailor-made Maltese Utterance Database

The second method was based on a speaker-dependent system using a 5-state, left-to-right, continuous-density HMM whose state output distributions are Gaussian mixtures. To build the Maltese speech database, 100 Maltese utterances were recorded by one speaker in a professional studio. Using the HTK toolkit and Cool Edit, all the utterances were manually labelled so that each phoneme had an associated start and end time. Seventy of these utterances were used for training the HMMs, while the other 30 were used for the evaluation phase.
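As a hypothetical illustration of the 70/30 partition, the sketch below assumes the feature files are named S001.mfc to S100.mfc (the naming follows waveform S071 shown in Fig 2, but which utterances formed each set is not stated here) and simply takes the first 70 for training. The resulting lists, one file name per line, are the layout HTK tools read through a script file.

```python
def split_utterances(n_total=100, n_train=70, prefix="S", ext=".mfc"):
    """Return (train, test) lists of hypothetical feature-file names."""
    names = [f"{prefix}{i:03d}{ext}" for i in range(1, n_total + 1)]
    return names[:n_train], names[n_train:]


train, test = split_utterances()
# One file name per line, as used for HTK script (-S) files.
train_scp = "\n".join(train) + "\n"
test_scp = "\n".join(test) + "\n"
print(len(train), len(test), test[0])
```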

4.1 Data Preparation Phase

The labels of all 70 training utterances were saved collectively in one Master Label File (MLF).
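The MLF format itself is simple: a `#!MLF!#` header, then for each utterance a quoted label-file name followed by one `start end label` line per phoneme, with times in HTK's 100 ns units, and finally a line containing a single full stop. The sketch below builds such a file from segment boundaries given in milliseconds; the utterance name and phoneme labels in the example are hypothetical.

```python
def to_htk_units(ms):
    """Convert milliseconds to HTK's 100 ns time units (1 ms = 10,000 units)."""
    return int(round(ms * 10_000))


def write_mlf(utterances):
    """Build Master Label File text.

    utterances: dict mapping an utterance name to a list of
                (start_ms, end_ms, phoneme) tuples.
    """
    lines = ["#!MLF!#"]
    for name, segments in utterances.items():
        lines.append(f'"*/{name}.lab"')
        for start_ms, end_ms, phone in segments:
            lines.append(f"{to_htk_units(start_ms)} {to_htk_units(end_ms)} {phone}")
        lines.append(".")  # terminates this utterance's labels
    return "\n".join(lines) + "\n"


# Hypothetical labels for one utterance.
mlf = write_mlf({"S001": [(0, 120, "sil"), (120, 210, "b"), (210, 300, "sil")]})
print(mlf)
```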

For training the HMMs, the 70 training speech waveforms were parameterized into MFCCs with energy, delta and acceleration coefficients, together with cepstral mean normalization. A 39-element acoustic vector was extracted from each frame, i.e. 13 static coefficients (cepstra plus energy) with their 13 delta and 13 acceleration coefficients. The source waveforms were sampled at 16 kHz and analysed with a 25 ms Hamming window and a frame period of 10 ms.
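This parameterization maps naturally onto an HTK configuration. The sketch below assembles one using the standard HTK parameter names `SOURCERATE`, `TARGETKIND`, `TARGETRATE`, `WINDOWSIZE`, `USEHAMMING` and `NUMCEPS`, whose times are expressed in 100 ns units. The qualifiers `_E`, `_D`, `_A` and `_Z` select energy, delta, acceleration and cepstral mean normalization. `NUMCEPS = 12` is an assumption consistent with a 39-element vector; other settings (filterbank size, pre-emphasis, liftering) are left at HTK defaults and are not taken from this paper.

```python
def ms_to_htk(ms):
    """HTK expresses durations in units of 100 ns, i.e. 10,000 per ms."""
    return ms * 10_000.0


def mfcc_config(sample_rate_hz=16000, window_ms=25.0, frame_period_ms=10.0,
                num_ceps=12):
    """Return HTK-style configuration text for MFCC_E_D_A_Z features."""
    settings = {
        "SOURCERATE": 1e7 / sample_rate_hz,        # sample period, 100 ns units
        "TARGETKIND": "MFCC_E_D_A_Z",              # MFCC + energy, deltas, accels, CMN
        "TARGETRATE": ms_to_htk(frame_period_ms),  # frame period
        "WINDOWSIZE": ms_to_htk(window_ms),        # analysis window length
        "USEHAMMING": "T",
        "NUMCEPS": num_ceps,                       # assumed, not stated in the paper
    }
    return "\n".join(f"{k} = {v}" for k, v in settings.items()) + "\n"


def vector_size(num_ceps=12):
    """Static cepstra plus energy, then a delta and acceleration for each."""
    static = num_ceps + 1
    return 3 * static


print(mfcc_config())
print(vector_size())
```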

4.2 Initialization Phase

To create the HMM definitions for the required phonemes, a prototype model was designed with the topology described above. Since several parameter distributions are inherently multi-modal, the model contains multiple mixture components to improve annotation performance. Initially, the prototype is configured with all mean values equal to 0 and all diagonal variance values equal to 1. The HTK HInit tool was then used to initialize the set of phoneme HMMs [3] from the available training data and their MFCC parameters. In all, there were 27 phoneme models, plus one model, sil, representing silence and one, breath, representing voice breath.
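HTK prototypes are written in its text HMM definition language. The sketch below generates one in the spirit of the description above: 5 states (the first and last non-emitting, as in HTK), 39-dimensional observations, three mixture components per emitting state (the tri-mixture design mentioned in Section 6), all means 0 and all variances 1. The equal mixture weights and the 0.6/0.4 transition probabilities are assumed starting values, not the authors' settings; HInit replaces them with estimates computed from the training data.

```python
def proto_hmm(name="proto", vec_size=39, num_states=5, num_mixes=3,
              kind="MFCC_E_D_A_Z"):
    """Return an HTK-style prototype HMM definition as text.

    States 1 and num_states are non-emitting entry/exit states, as in HTK.
    Every Gaussian starts with zero means and unit diagonal variances.
    """
    zeros = " ".join(["0.0"] * vec_size)
    ones = " ".join(["1.0"] * vec_size)
    weight = 1.0 / num_mixes  # assumed equal initial mixture weights
    lines = [f"~o <VecSize> {vec_size} <{kind}>",
             f'~h "{name}"',
             "<BeginHMM>",
             f"<NumStates> {num_states}"]
    for state in range(2, num_states):
        lines.append(f"<State> {state} <NumMixes> {num_mixes}")
        for m in range(1, num_mixes + 1):
            lines += [f"<Mixture> {m} {weight:.6f}",
                      f"<Mean> {vec_size}", zeros,
                      f"<Variance> {vec_size}", ones]
    # Left-to-right transitions: entry -> state 2, then stay or move one step.
    lines.append(f"<TransP> {num_states}")
    for i in range(num_states):
        row = [0.0] * num_states
        if i == 0:
            row[1] = 1.0
        elif i < num_states - 1:
            row[i], row[i + 1] = 0.6, 0.4  # assumed initial values
        lines.append(" ".join(f"{p:.1f}" for p in row))
    lines.append("<EndHMM>")
    return "\n".join(lines) + "\n"


print(proto_hmm()[:200])
```

Each of the 29 models (27 phonemes plus sil and breath) would start from a copy of this prototype before HInit estimates its parameters.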

4.3 Iteration Phase

During this phase, the initial HMM parameters are further re-estimated by the HTK HRest tool. This tool is based on the Baum-Welch algorithm and iterates over the available training material until the improvement between successive re-estimations falls below a given threshold.
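The re-estimation loop can be illustrated with a small Baum-Welch sketch. To keep it short, it assumes a discrete-output HMM and a single training sequence, whereas HRest works with continuous Gaussian-mixture densities and many training examples; the overall structure (forward-backward statistics, re-estimation, stop when the log-likelihood gain falls below a threshold) is the same, but HRest's exact convergence test is documented in the HTK Book [3]. The initial model and training sequence are hypothetical, and probabilities are left unscaled, which is only safe for short sequences.

```python
import math


def forward(pi, a, b, obs):
    """alpha[t][i] = P(o_1..o_t, q_t = i | model), unscaled."""
    n = len(pi)
    alpha = [[pi[i] * b[i][obs[0]] for i in range(n)]]
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append([sum(prev[i] * a[i][j] for i in range(n)) * b[j][o]
                      for j in range(n)])
    return alpha


def backward(a, b, obs):
    """beta[t][i] = P(o_{t+1}..o_T | q_t = i, model), unscaled."""
    n = len(a)
    beta = [[1.0] * n]
    for o in reversed(obs[1:]):
        nxt = beta[0]
        beta.insert(0, [sum(a[i][j] * b[j][o] * nxt[j] for j in range(n))
                        for i in range(n)])
    return beta


def baum_welch_step(pi, a, b, obs):
    """One re-estimation; also returns log P(obs) under the input model."""
    n, m, T = len(pi), len(b[0]), len(obs)
    alpha, beta = forward(pi, a, b, obs), backward(a, b, obs)
    p_obs = sum(alpha[-1])
    gamma = [[alpha[t][i] * beta[t][i] / p_obs for i in range(n)]
             for t in range(T)]
    xi = [[[alpha[t][i] * a[i][j] * b[j][obs[t + 1]] * beta[t + 1][j] / p_obs
            for j in range(n)] for i in range(n)] for t in range(T - 1)]
    new_pi = gamma[0][:]
    new_a = [[sum(xi[t][i][j] for t in range(T - 1))
              / sum(gamma[t][i] for t in range(T - 1))
              for j in range(n)] for i in range(n)]
    new_b = [[sum(gamma[t][j] for t in range(T) if obs[t] == k)
              / sum(gamma[t][j] for t in range(T))
              for k in range(m)] for j in range(n)]
    return new_pi, new_a, new_b, math.log(p_obs)


def train(pi, a, b, obs, threshold=1e-4, max_iter=100):
    """Iterate until the log-likelihood gain falls below `threshold`."""
    history = []
    for _ in range(max_iter):
        pi, a, b, loglik = baum_welch_step(pi, a, b, obs)
        converged = bool(history) and loglik - history[-1] < threshold
        history.append(loglik)
        if converged:
            break
    return pi, a, b, history


# Hypothetical 2-state, 2-symbol model and training sequence.
pi0 = [0.5, 0.5]
a0 = [[0.6, 0.4], [0.3, 0.7]]
b0 = [[0.7, 0.3], [0.4, 0.6]]
obs = [0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0]
pi1, a1, b1, history = train(pi0, a0, b0, obs)
print(len(history), history[-1])
```

Because Baum-Welch is an instance of expectation-maximization, the recorded log-likelihoods never decrease from one iteration to the next, which is what makes a simple gain threshold a usable stopping rule.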

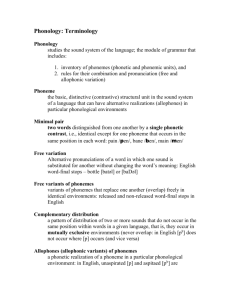

4.4 Re-estimation Phase

This phase was dominated by the HERest embedded training tool [3], which re-estimated the parameters of all the phoneme HMMs simultaneously, starting from the values previously estimated by HRest. For each training utterance, the corresponding phoneme models were concatenated, and the forward-backward algorithm was then used to accumulate the relevant statistics for each HMM in the sequence. Each training utterance was processed in turn and had an associated label file containing its transcription. This process is illustrated in Fig 1. To avoid computing highly improbable combinations of forward-backward probabilities, and to speed up the computation, HERest made use of pruning [3]. Pruning restricts the computation of the $\alpha_t(i)$ and $\beta_t(i)$ values to those within a threshold, while the rest are ignored. On completion, HERest outputs the updated HMM definitions in a master macro file. These new phoneme HMM definitions are the key parameters of the phoneme annotation process.
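The general idea of pruning can be sketched in the log domain: at each frame, only states whose log probability lies within a beam of the best state are kept, and the remaining states are neither stored nor expanded at the next frame. This is only the idea, with a hypothetical beam width and toy model; HERest's own thresholds, and the order in which it prunes $\alpha$ and $\beta$, are documented in the HTK Book [3].

```python
import math


def log_add(x, y):
    """log(exp(x) + exp(y)) without underflow."""
    hi, lo = max(x, y), min(x, y)
    return hi + math.log1p(math.exp(lo - hi))


def prune(log_probs, beam):
    """Keep only the states whose log probability is within `beam` of the best."""
    best = max(log_probs.values())
    return {s: lp for s, lp in log_probs.items() if lp >= best - beam}


def pruned_forward_step(active, log_trans, log_out, beam):
    """One log-domain forward step that expands surviving states only.

    active:    dict state -> log alpha_{t-1}(state), already pruned
    log_trans: dict (i, j) -> log a_ij for the allowed transitions
    log_out:   dict j -> log b_j(o_t)
    """
    new = {}
    for (i, j), lt in log_trans.items():
        if i not in active:
            continue  # pruned states contribute nothing and are never expanded
        score = active[i] + lt
        new[j] = log_add(new[j], score) if j in new else score
    return prune({j: s + log_out[j] for j, s in new.items()}, beam)


# Hypothetical two-state left-to-right fragment.
log_trans = {(0, 0): math.log(0.5), (0, 1): math.log(0.5), (1, 1): 0.0}
log_out = {0: math.log(0.9), 1: math.log(0.1)}
print(pruned_forward_step({0: 0.0}, log_trans, log_out, beam=1.0))
print(pruned_forward_step({0: 0.0}, log_trans, log_out, beam=5.0))
```

A narrow beam saves computation but risks discarding the state that would eventually lie on the best path, so the beam width trades speed against accuracy.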

Fig 1 File Processing in HERest (The HTK Book, 2002)

4.5 Evaluation Phase

The second set of 30 Maltese utterances was used for system evaluation. These utterances were parameterized into a series of feature vectors in the same way as the training utterances. Automatic annotation was performed by the HTK HVite tool in forced alignment mode, in which the system locates the phoneme boundaries of each speech utterance given its known sequence of phonemes.

HVite computes a new network for each input utterance, using the phoneme-level transcription and a one-to-one phoneme dictionary. In forced alignment mode, the decoding network has only one path through the phoneme sequence, since it is constrained by the known phonemic transcription. To build this alignment network for each test utterance, HVite uses a master macro file containing all the required HMM models and attaches the appropriate HMM definition to each phoneme instance. The decoder then applies the Viterbi algorithm to select the optimal path through the network, i.e. the best-matching alignment of the frames to the phoneme models. A lattice that includes the model alignment information is then constructed, and finally the lattice is converted into a transcription containing the phoneme labels with their associated boundaries, which is saved in an output file. The annotation system works on these principles.

5 Results

Since the annotation system used frames of 25 ms duration at a period of 10 ms, the location of the phoneme boundaries was frame dependent. This dependence was quantified using the formula

$$\text{tolerance} = \sqrt{\frac{\tau^2}{12}} + \text{frame period},$$

where $\tau = 25$ ms is the frame duration. With a 10 ms frame period this gives $25/\sqrt{12} + 10 \approx 7.2 + 10 = 17.2$ ms, and label markings within this 17.2 ms tolerance were considered correct.
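The tolerance can be checked numerically. The first term, $\tau/\sqrt{12}$, is the standard deviation of a uniform distribution of width $\tau$; the sketch below simply evaluates the formula for the values used here.

```python
import math


def boundary_tolerance_ms(frame_ms=25.0, frame_period_ms=10.0):
    """tolerance = sqrt(tau^2 / 12) + frame period, all in milliseconds."""
    return math.sqrt(frame_ms ** 2 / 12.0) + frame_period_ms


print(round(boundary_tolerance_ms(), 1))  # 17.2
```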

System performance was established by comparing the automatically annotated phoneme label markings with the manually marked ones. Detection accuracy was worked out using the following formula:

$$\text{Detection Accuracy} = \frac{\text{number of boundaries correctly located}}{\text{total number of boundaries tested}} \times 100\%$$

Boundary detection accuracy reached 88.11%.
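A sketch of this scoring step follows. It assumes that the manual and automatic label files list the same phoneme sequence in HTK format (`start end label`, times in 100 ns units), so that boundaries can be paired one-to-one, and it takes the boundaries to be every segment start plus the final end time; both are assumptions, and the label lines in the example are hypothetical. A boundary counts as correctly located when the two markings differ by at most the 17.2 ms tolerance.

```python
def parse_htk_labels(text):
    """Parse 'start end label' lines (100 ns units) into (start_ms, end_ms, label)."""
    segments = []
    for line in text.strip().splitlines():
        start, end, label = line.split()[:3]
        segments.append((int(start) / 10_000, int(end) / 10_000, label))
    return segments


def boundaries(segments):
    """Boundary times in ms: every segment start plus the final end time."""
    return [s for s, _, _ in segments] + [segments[-1][1]]


def detection_accuracy(manual_text, auto_text, tolerance_ms=17.2):
    """Percentage of paired boundaries that agree within the tolerance."""
    manual = parse_htk_labels(manual_text)
    auto = parse_htk_labels(auto_text)
    if [lab for _, _, lab in manual] != [lab for _, _, lab in auto]:
        raise ValueError("phoneme sequences differ; boundaries cannot be paired")
    pairs = list(zip(boundaries(manual), boundaries(auto)))
    correct = sum(1 for m, a in pairs if abs(m - a) <= tolerance_ms)
    return 100.0 * correct / len(pairs)


# Hypothetical manual and automatic labels for one utterance.
manual = """0 1200000 sil
1200000 2100000 b
2100000 3000000 sil"""
auto = """0 1300000 sil
1300000 2500000 b
2500000 3000000 sil"""
print(detection_accuracy(manual, auto))  # 75.0: the 210 ms vs 250 ms boundary is outside tolerance
```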

All the voiced phones, 652 in total, were further divided into 5 categories, whose percentage accuracies are listed in Table 1. The semivowels produced the worst performance; they are also the group of phonemes that is most difficult to determine manually.

| Quantity | Category | Percentage accuracy (≤ 17.2 ms) |
|---|---|---|
| 244 | vowels | 87.91 |
| 141 | stops | 97.16 |
| 26 | affricates | 100.00 |
| 132 | fricatives | 93.94 |
| 109 | semivowels | 72.94 |
| 652 | all phones | 89.11 |

Table 1 Percentage Accuracy for each category

Fig 2 illustrates a comparison between the manual and automatic transcriptions of waveform S071. The upper set of labels represents the manual transcription, while the lower set represents the automatic transcription.

6 System Drawbacks and Recommendations

For proper training of the model parameters, and to better evaluate the variety of phonemes, a larger number of recorded and manually-segmented utterances should preferably be used. High-quality speech recording is also important for better phoneme identification; ideally, each utterance should consist of clearly spoken words. Another drawback was that the speech utterances were not phonetically balanced, so some phonemes had relatively few occurrences. Since the training of the HMMs depends on the manual labelling of the training data, it is very desirable for these phoneme markings to be as accurate as possible.

For those phonemes that proved difficult to annotate, semivowels in particular, it is recommended that dedicated HMMs be trained specifically for this group of phonemes. These could use a different number of states or another topology.

Although the annotation system was speaker-dependent, it could be extended to a speaker-independent system. This would require a larger, multi-speaker, annotated Maltese database for training the HMMs.


Fig 2 A WaveSurfer screen shot showing, from top to bottom, the S071 waveform, the manual phonetic labels and the automatically aligned phonetic labels.

The HMM design was based on a three-mixture topology, which somewhat limits the shapes of the resulting output distributions. To improve system performance and obtain a more robust model, the number of mixtures should preferably be increased to nine or more.
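To see what the number of mixtures controls, the sketch below evaluates a diagonal-covariance Gaussian-mixture output density $b_j(o_t) = \sum_{m} c_{jm}\, \mathcal{N}(o_t; \mu_{jm}, \Sigma_{jm})$ in the log domain. With $M$ components the density can follow up to $M$ modes, at the cost of $M$ sets of means and variances to estimate from the same training data. The one-dimensional, three-component parameters in the example are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance `var`."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))


def log_gmm(x, weights, means, variances):
    """log sum_m c_m N(x; mu_m, Sigma_m), computed with log-sum-exp."""
    terms = [math.log(c) + log_gaussian_diag(x, mu, var)
             for c, mu, var in zip(weights, means, variances)]
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))


# Hypothetical 1-D, 3-mixture state density with three separated modes.
weights = [0.3, 0.4, 0.3]
means = [[-2.0], [0.0], [2.0]]
variances = [[0.25], [0.25], [0.25]]
for x in (-2.0, -1.0, 0.0):
    print(x, log_gmm([x], weights, means, variances))
```

A single Gaussian fitted to the same three-mode data would place its peak between the modes, which is the limitation that additional mixture components address.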

References

[1] L.R. Rabiner, "A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition", Proceedings of the IEEE, Vol. 77, No. 2, 1989.

[2] S. Young and G. Bloothooft, "Corpus-Based Methods in Language and Speech Processing", 1997.

[3] S. Young, G. Evermann, T. Hain, D. Kershaw, G. Moore, J. Odell, D. Ollason, D. Povey, V. Valtchev and P. Woodland, "The HTK Book", Cambridge University Engineering Dept, Cambridge, UK, 2002.

[4] J.S. Garofolo, L.F. Lamel, W.M. Fisher, J.G. Fiscus, D.S. Pallett, N.L. Dahlgren, "DARPA TIMIT Acoustic-Phonetic Continuous Speech Corpus CD-ROM", NIST, 1990.

[5] R. Ellul-Micallef, "Speech Annotation", Dissertation, Department of Communications and Computer Engineering, University of Malta, 2002.