STUDYING THE EFFECTS OF DIFFERENTIATED INSTRUCTION IN THE SCIENCE CLASSROOM by Pablo Rojo

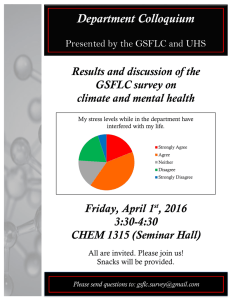
