Document 13454078

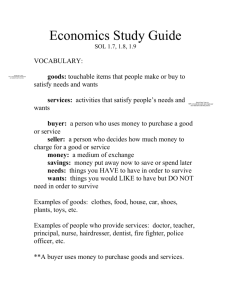
