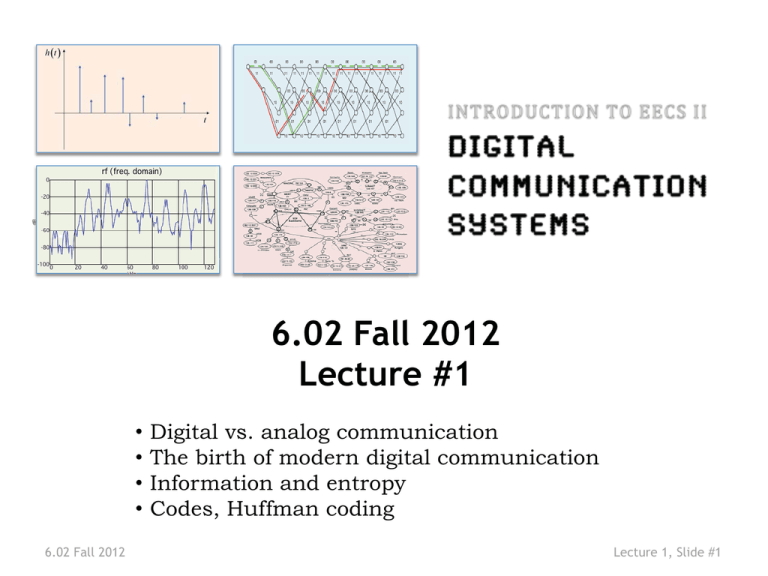

6.02 Fall 2012 Lecture #1

advertisement

6.02 Fall 2012

Lecture #1

•

•

•

•

6.02 Fall 2012

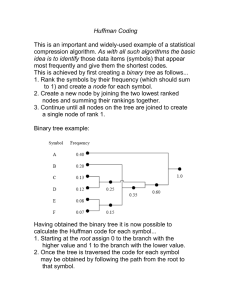

Digital vs. analog communication

The birth of modern digital communication

Information and entropy

Codes, Huffman coding

Lecture 1, Slide #1

)')4,93B.,/'/--8.)5/.

• /--8.)5.'/.5.8/83I5- :9 !/2-

J B'B@9/,4' !2/--)2/0(/. K@9)-0,)48 -/8,5/.

JK/2!2 18 .<-/8,5/.JK/2BBB

– .,/' , 42/.)3

– ) ,)4<4/4( :9 !/2-

• /--8.)5.'- 33' /-02)3).')32 4 I

5- 3 18 . /!3<-/,3!2/-3/- 3/82 ,0( 4

– & ./ /.4/3/- /4( 23 18 . /!3<-/,34(4D304 4/

4( /--8.)5/.(.. ,@ B'B@).2<)')43@P.QB

– & .).9/,9).'.,/'/--8.)5/.2/334( 0(<3),(.. ,

– ) ,)4<4/4( - 33' – ,,38)4 4/2)).'4( 34'' 2).''2/:4()./-0845/.,0/: 2@

34/2' @)'4@C

6.02 Fall

Lecture 1, Slide #3

2012

6.02 Syllabus

Point-to-point communication channels (transmitterreceiver):

• Encoding information BITS

• Models of communication channels SIGNALS

• Noise, bit errors, error correction

• Sharing a channel

Multi-hop networks:

• Packet switching, efficient routing PACKETS

• Reliable delivery on top of a best-efforts network

6.02 Fall 2012

Lecture 1, Slide #4

SamuelBB/23 • .9 .4 JQXSR/.:23@04 .4MQ@VTW).QXTPK4( -/34025,!/2-

/! , 42),4 , '20(<@).,8).'+ <3@:)2 22.' - .43@

, 42/-'. 43@-2+).' 9) 3@2 ,<3@C@./23 / ?

• /2+ 52 , 33,<4/ 34,)3(4( 4 (./,/'<

• & 2).)5,3428'', 3@4 , '20(<:318)+,</04 .:) ,<

0,/< – 2.3I4,.5, 7 -043QXUWJQV(/8234/3 .YX:/23!2/-8 .

)4/2)4/2 3) .48(..?K@QXUX@QXVU@#.,,<38 33).QXVVJX:/23G

-).84 K

– 2.3I/.5. .4,).QXVQJ " 59 ,< . 4( /.<;02 33K

– 2.3I)#QYPR

• , '20(<42.3!/2- /--8.)5/.J42.3I4,.55- !2/-QP<3

<3()04/-).84 3<4 , '20(K./-- 2 @,3/30822 -*/2

9 ,/0- .43).4( /2<L025 J .2<@ ,9).@ 9)3) @80).@CK

6.02 Fall 2012

Lecture 1, Slide #7

34I!/2:2QPP< 23

• )

– , 0(/. JE

-02/9 - .4). , '20(<F@04 .4M

QWT@TUV@ ,,QXWVK

– )2 , 334 , '20(<J2/.)QYPQK

– 2)/J 33 . .QYPVK

– 2)/J2-342/.'QYSSK

– , 9)3)/.2/35.'<4( JQYSVK

– C

• ,,3',;</!2 3 2( 23

– <18)34@/ @24, <@C

6.02 Fall 2012

Lecture 1, Slide #13

Claude E. Shannon, 1916-2001

QYSW34 234( 3)3@ 04@

.42/8 00,)5/./!//, .

,' 24/,/'))28)43@.9) 9 23B

2<).%8 .5,).)')4,)28)4 3)'.B

E/34)-0/24.434 234( 3)3/!4( .482<F

QYTP(@4( 04@

/.,<= 4( <.-)3/! . ,).

Photograph © source unknown. All rights reserved.This

content is excluded from our Creative Commons license.

0/08,5/.3B

For more information, see http://ocw.mit.edu/fairuse.

MIT faculty

/). ,,3).QYTPB

1956-1978

E-4( -5,4( /2</!2<04/'20(<FQYTUGQYTY

6.02 Fall 2012

Lecture 1, Slide #14

A probabilistic theory requires at least a one-slide

checklist on Probabilistic Models!

•

•

•

•

•

•

•

.)9 23 /! , - .42</84/- 33Q@3R@C@3@CB. ./.,</. /84/- ). ( ;0 2)- .4/228./!4( -/ ,B

9 .43@@@C2 383 43/!/84/- 3B 3< 9 .4(3/822 )!4( /84/- /!4( ;0 2)- .4,) 3).B

9 .43!/2-.E,' 2F/!3 43@)B B@J8.)/.@,3/:2)7 .ZK)3.

9 .4@J).4 23 5/.@,3/:2)7 .K)3. 9 .4@J/-0, - .4@

,3/:2)7 .K)3. 9 .4B/.4( .8,,3 42 ,3/ 9 .43B

2/),)5 32 #. /. 9 .43@38(4(4P\JK\Q@JK[Q@.

JZK[JKZJK)!.2 -848,,< ;,83)9 @)B B)